A Fault-Tolerant Design of Spaceborne Onboard Neural Network


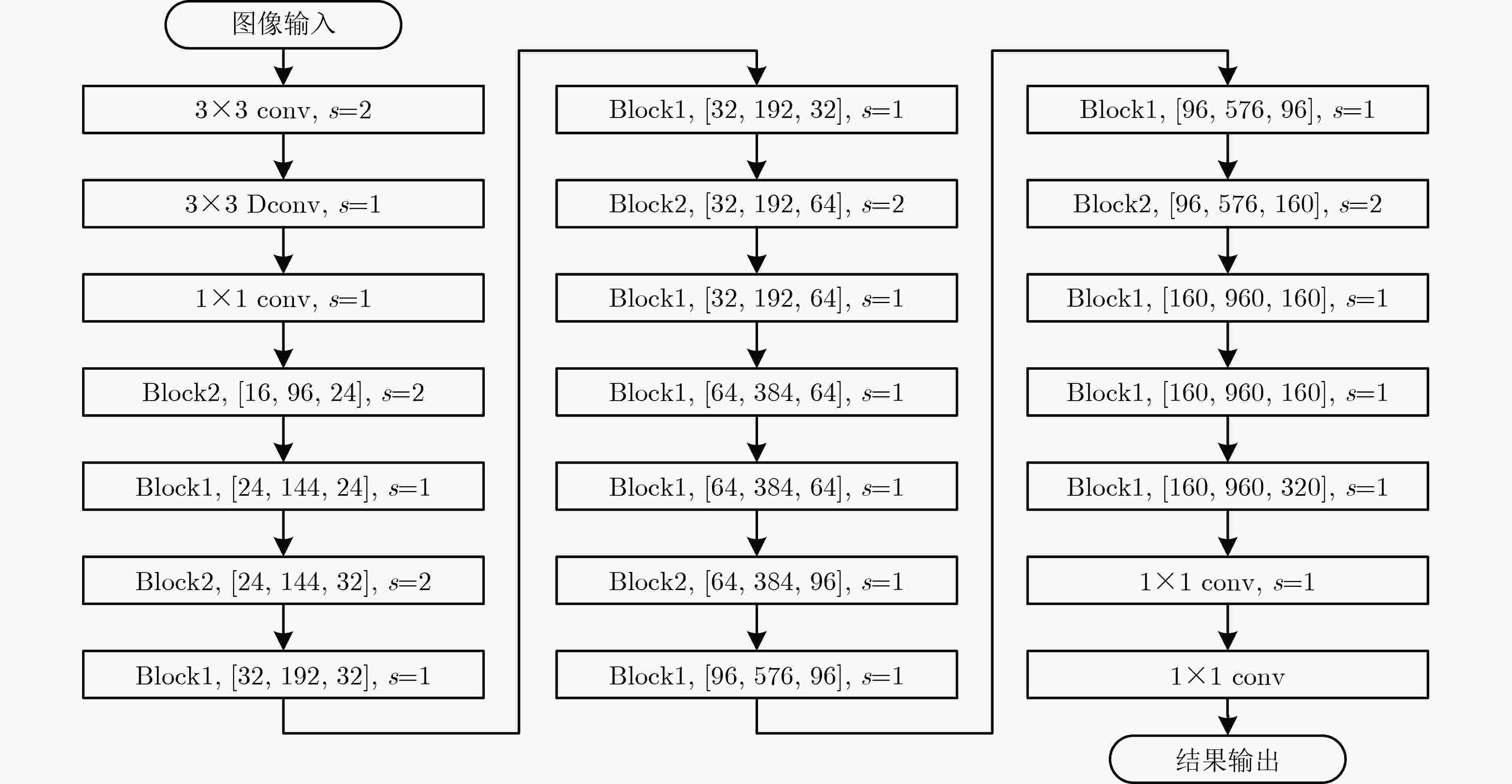

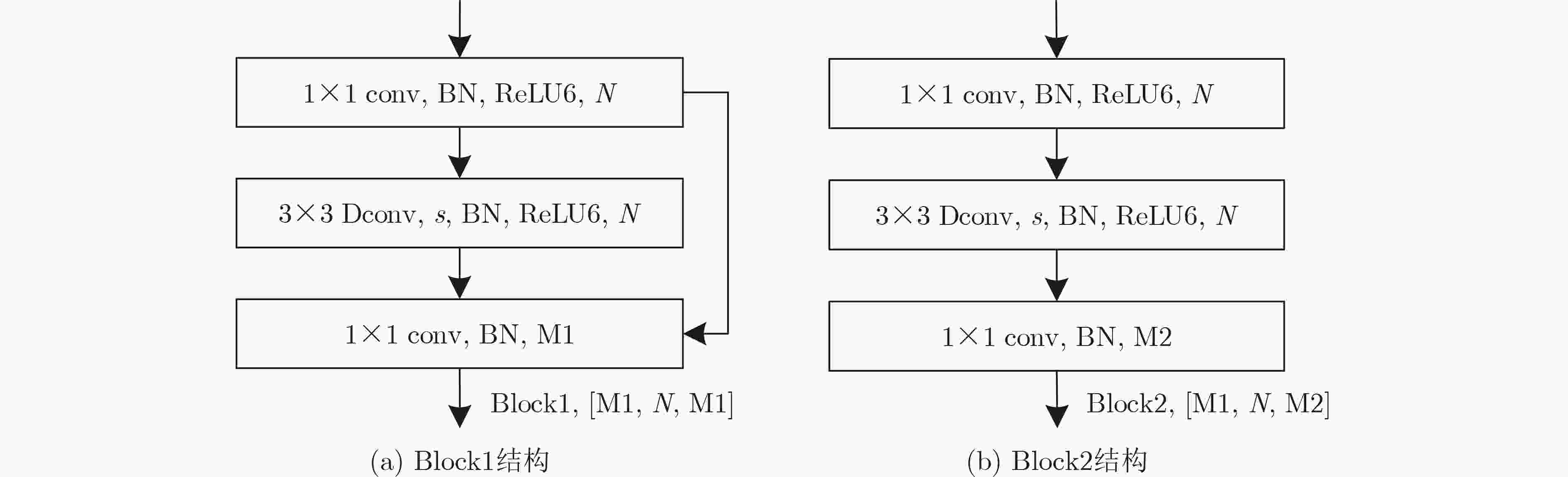

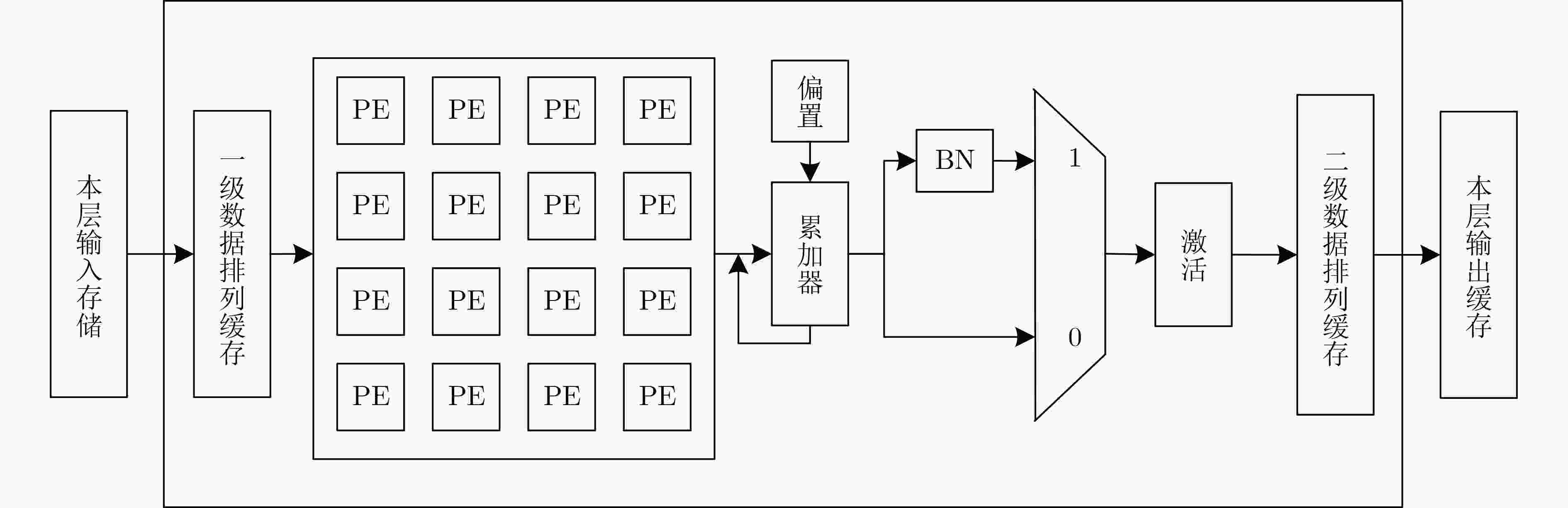

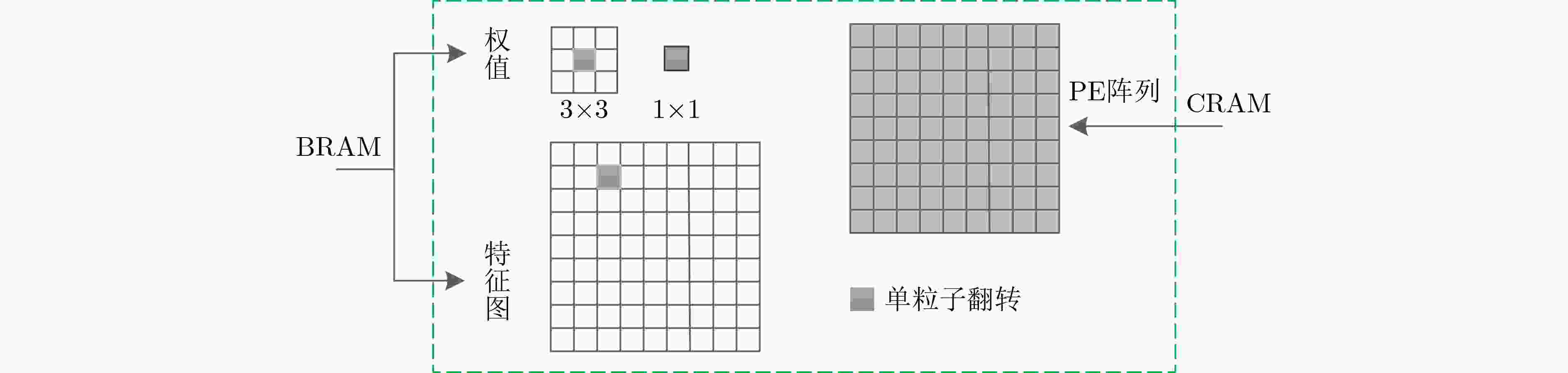

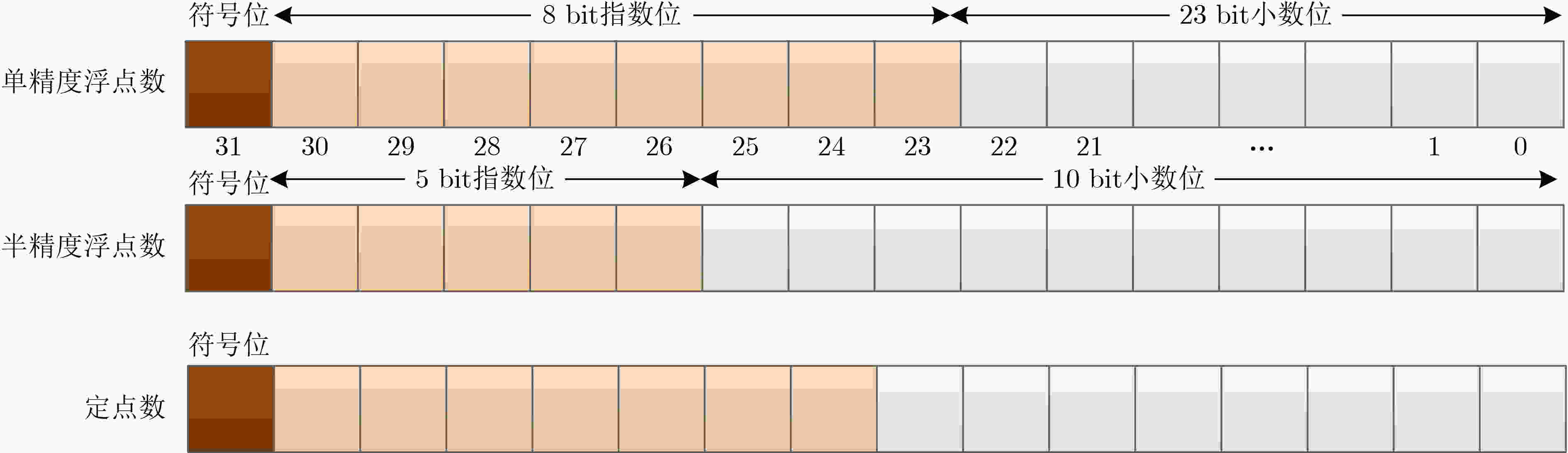

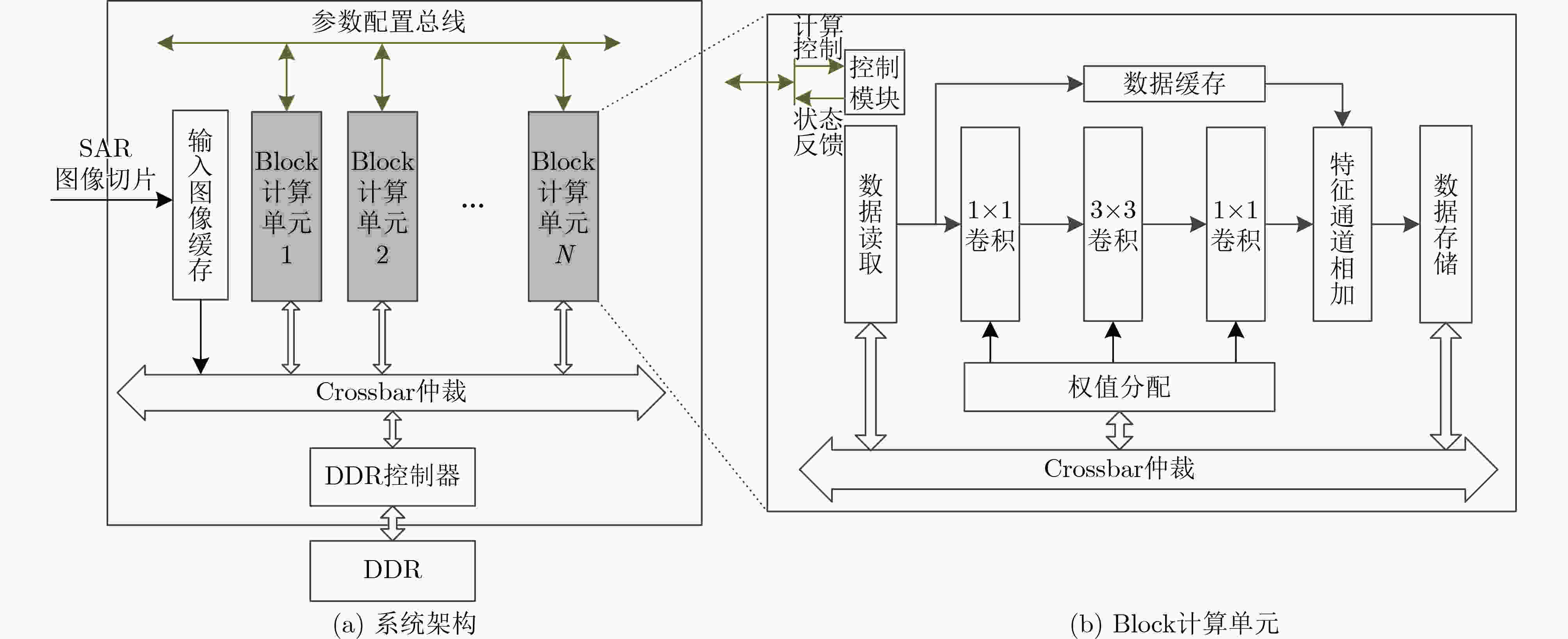

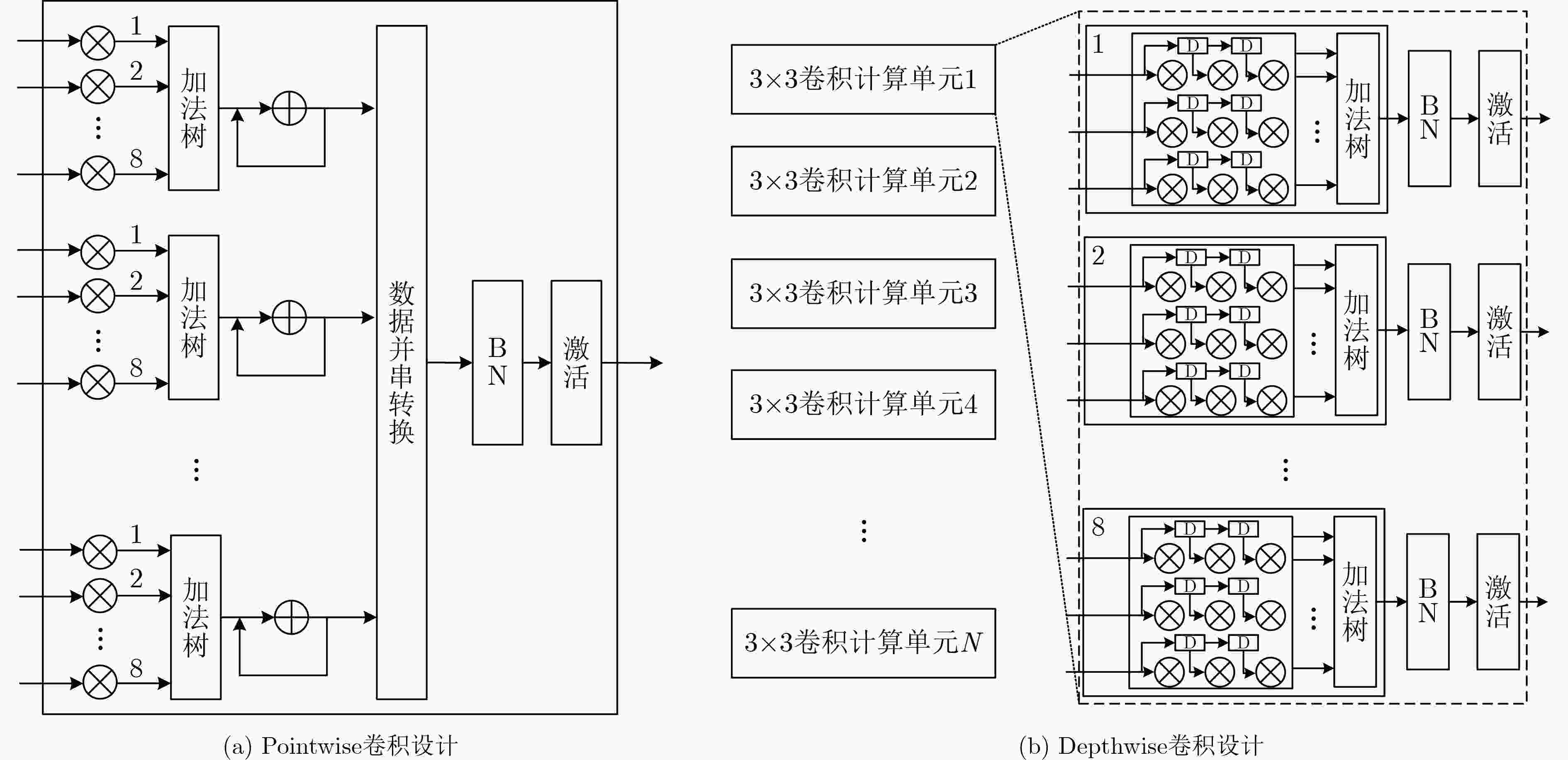

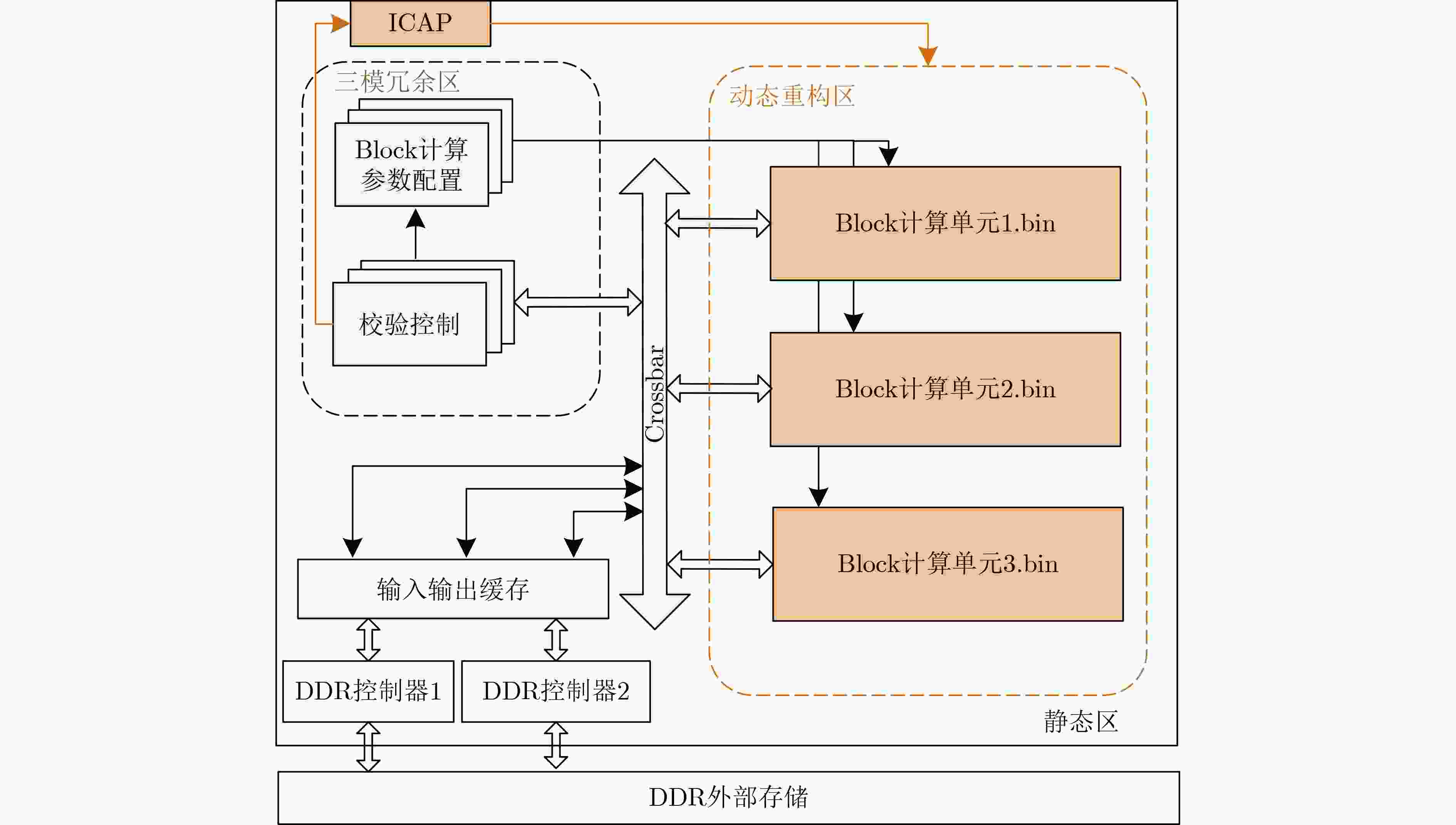

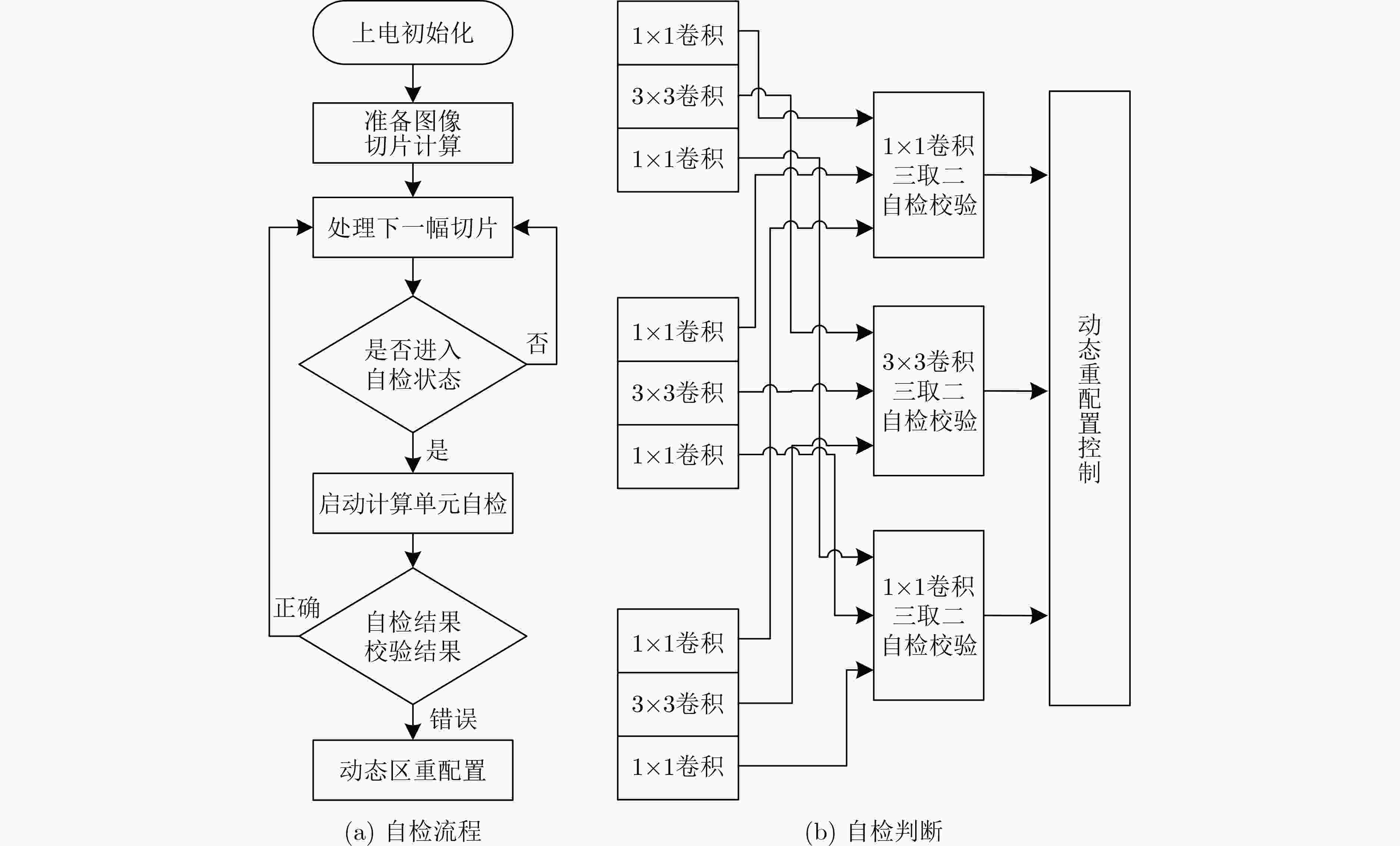

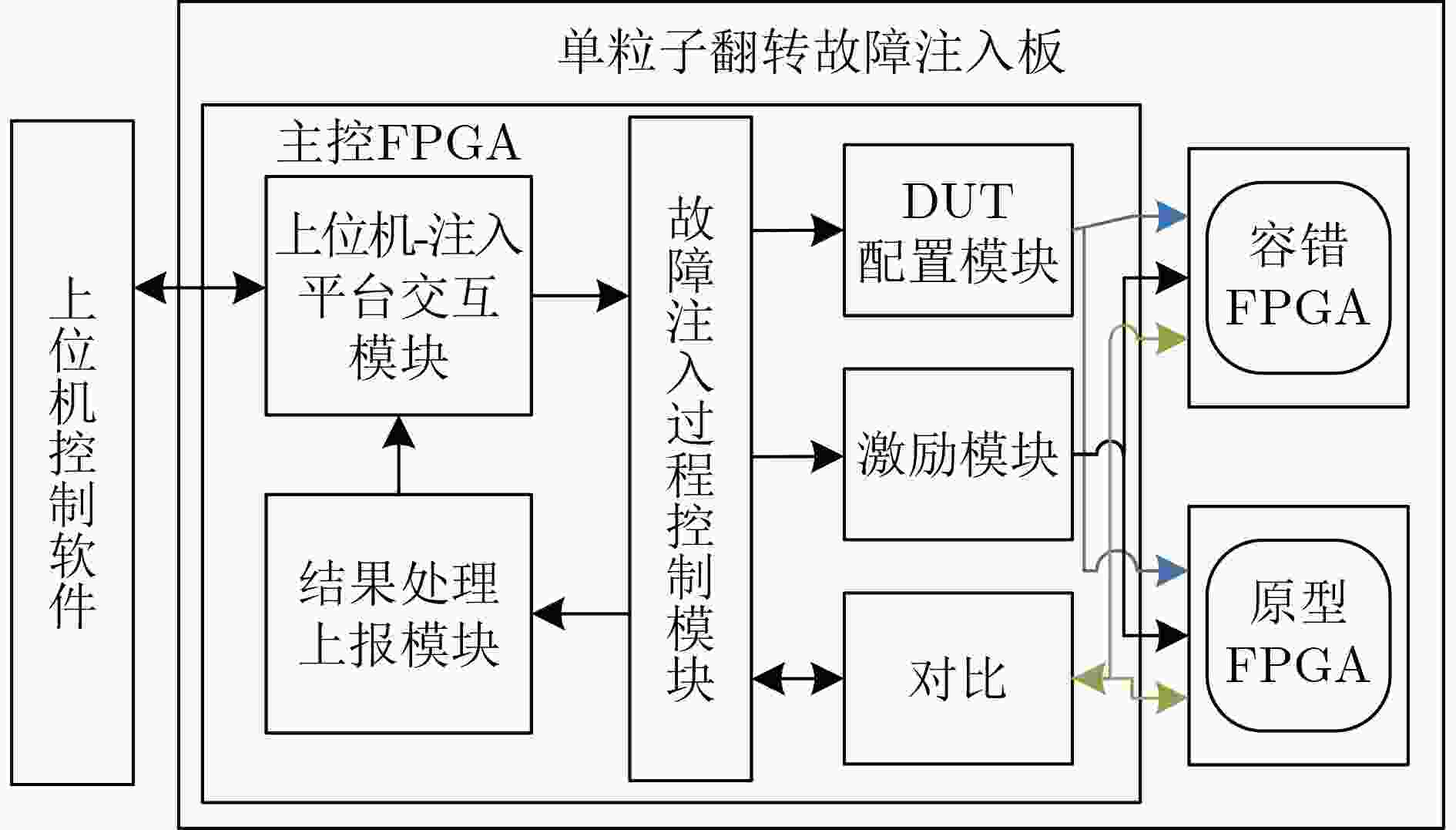

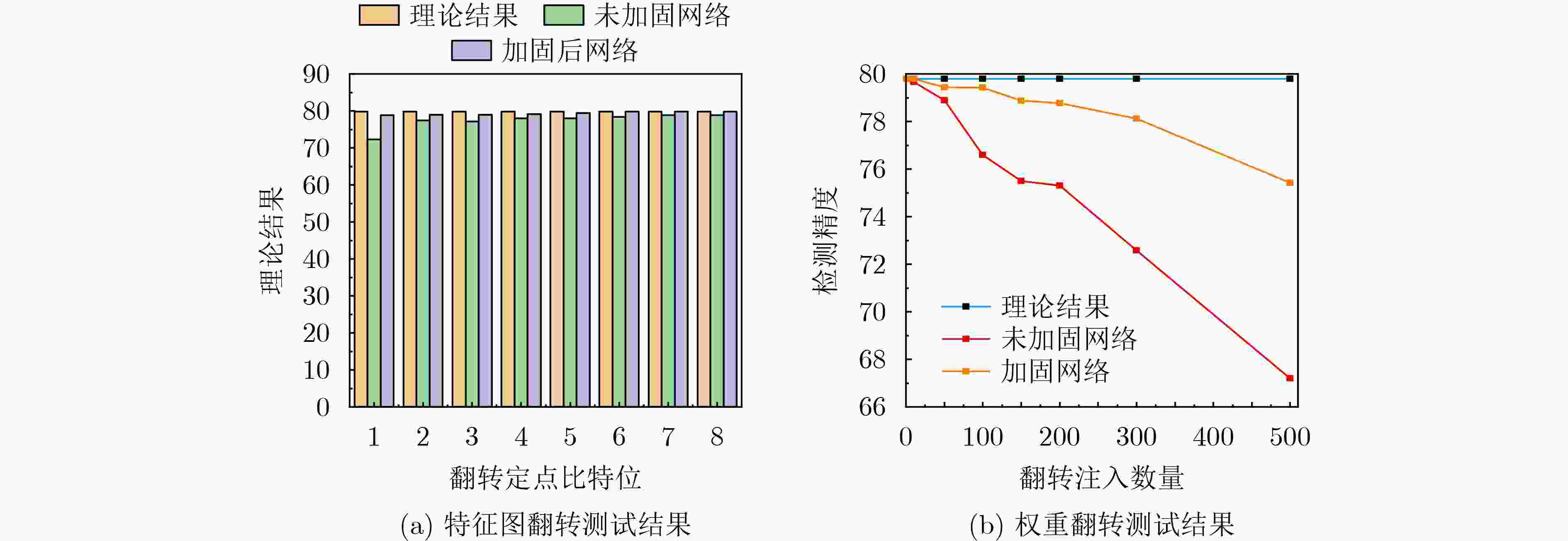

Abstract: To meet the requirements of highly reliable, real-time on-orbit ship target detection, this paper proposes a fault-tolerant hardening design for neural-network-based ship detection in Synthetic Aperture Radar (SAR) imagery. The lightweight network MobileNetV2 serves as the detection model, and its accelerated processing is implemented on a Field Programmable Gate Array (FPGA). The network's error model under space Single Event Upsets (SEU) is analyzed, and the idea of parallel acceleration is combined with highly reliable Triple Modular Redundancy (TMR) to design an optimized partial triple-redundancy fault-tolerant architecture based on dynamic reconfiguration. The architecture uses multiple coarse-grained compute units to process several images simultaneously and uses voting across units for SEU self-checking and recovery. In playback tests with real images, the frame rate of the FPGA implementation meets on-orbit real-time processing requirements. In fault-tolerance tests with simulated SEUs, compared with the prototype network, the design improves detection accuracy under SEUs by more than 8% while increasing resource consumption by less than 20%, which makes it better suited to on-orbit applications than traditional fault-tolerant designs.
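The abstract describes voting across coarse-grained compute units for SEU self-checking and recovery. The Python sketch below illustrates that idea under stated assumptions, not the paper's FPGA implementation: each hypothetical `ComputeUnit` holds its own copy of int8 weights and stands in for a network pass with a single dot product, an SEU is modeled as one inverted weight bit (`flip_bit`), and reloading a disagreeing unit from a golden copy stands in for dynamic partial reconfiguration.

```python
from collections import Counter
from typing import List, Optional, Sequence, Tuple

WIDTH = 8  # assumption: weights stored as int8 (two's complement)


def flip_bit(value: int, bit: int, width: int = WIDTH) -> int:
    """Model an SEU by inverting one bit of a two's-complement integer."""
    raw = (value & ((1 << width) - 1)) ^ (1 << bit)
    # Re-interpret the raw bit pattern as a signed value.
    return raw - (1 << width) if raw >= (1 << (width - 1)) else raw


class ComputeUnit:
    """Hypothetical coarse-grained unit with a private copy of the weights."""

    def __init__(self, golden: Sequence[int]) -> None:
        self.weights = list(golden)

    def run(self, x: Sequence[int]) -> int:
        # Stand-in for a full network pass: a single integer dot product.
        return sum(w * v for w, v in zip(self.weights, x))


def vote(outputs: Sequence[int]) -> Tuple[Optional[int], List[int]]:
    """Majority vote; returns (voted value, indices of units that disagree)."""
    value, count = Counter(outputs).most_common(1)[0]
    if 2 * count <= len(outputs):
        # No strict majority: the error cannot be masked, so flag every unit.
        return None, list(range(len(outputs)))
    return value, [i for i, out in enumerate(outputs) if out != value]


def self_check(units: List[ComputeUnit], golden: Sequence[int],
               x: Sequence[int]) -> Tuple[Optional[int], List[int]]:
    """Feed the same input to all units, vote, and repair flagged units.

    Reloading the golden weights stands in for partial reconfiguration.
    """
    value, faulty = vote([u.run(x) for u in units])
    for i in faulty:
        units[i].weights = list(golden)
    return value, faulty


if __name__ == "__main__":
    golden = [3, -2, 5, 1]
    units = [ComputeUnit(golden) for _ in range(3)]
    x = [1, 2, 3, 4]
    units[1].weights[2] = flip_bit(units[1].weights[2], 6)  # SEU in unit 1
    print(self_check(units, golden, x))  # (18, [1])
```

In this sketch a single upset in one unit is both masked by the vote and localized to that unit; if all three units disagree, `vote` returns `None` and every unit is reloaded.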


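Table 1 Resource comparison before and after hardening

| Resource | Before hardening | After hardening (TMR) | After hardening (this paper) |
|---|---|---|---|
| Slice LUTs | 270,897 | 721,584 | 302,240 |
| DSP | 1887 | 5661 | 1887 |
| BRAM (36 Kb) | 965 | 2142 | 1153 |

Table 2 Performance comparison of different FPGA implementations

| Metric | Ref. [16] | This paper |
|---|---|---|
| FPS | 16 | 42 |
| GOP/(s·W) | 3.67 | 11.88 |

Table 3 SAR ship target detection accuracy (%)

| Metric | Ref. [17], No. 1 | Ref. [17], No. 2 | Ref. [17], No. 3 | This paper |
|---|---|---|---|---|
| Detection accuracy | 74.27 | 73.56 | 77.63 | 79.80 |

Table 4 Error counts at each layer's output in the Block units

| Checkpoint | Block1 | Block2 | Block3 |
|---|---|---|---|
| PW (pointwise) convolution 1 | 5367 | 4615 | 7516 |
| DW (depthwise) convolution | 1565 | 2434 | 3193 |
| PW (pointwise) convolution 2 | 371 | 1120 | 849 |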
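The "less than 20%" resource-overhead claim can be checked directly against Table 1. This short Python sketch recomputes the relative increase of each resource over the unhardened baseline, using only the counts in the table; the helper name `overhead` is illustrative.

```python
# Resource counts from Table 1: (before hardening, full TMR, this design).
TABLE1 = {
    "Slice LUTs": (270_897, 721_584, 302_240),
    "DSP": (1_887, 5_661, 1_887),
    "BRAM (36 Kb)": (965, 2_142, 1_153),
}


def overhead(base: int, hardened: int) -> float:
    """Relative resource increase over the unhardened baseline."""
    return (hardened - base) / base


for name, (base, tmr, ours) in TABLE1.items():
    print(f"{name:<13} full TMR: +{overhead(base, tmr):.1%}   "
          f"this design: +{overhead(base, ours):.1%}")

# Largest increase of the proposed design across all resource types.
worst = max(overhead(base, ours) for base, _, ours in TABLE1.values())
print(f"worst-case overhead: {worst:.1%}")  # 19.5% (BRAM)
```

The proposed design adds about 11.6% LUTs, no DSPs, and about 19.5% BRAM, whereas full TMR adds roughly 166%, exactly 200% (three copies of 1887 DSPs), and 122%, respectively; every resource of the proposed design stays under the 20% bound stated in the abstract.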

[1] LI Yangyang, PENG Cheng, CHEN Yanqiao, et al. A deep learning method for change detection in synthetic aperture radar images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2019, 57(8): 5751–5763. doi: 10.1109/TGRS.2019.2901945

[2] CHEN Fulong, LASAPONARA R, and MASINI N. An overview of satellite synthetic aperture radar remote sensing in archaeology: From site detection to monitoring[J]. Journal of Cultural Heritage, 2017, 23: 5–11. doi: 10.1016/j.culher.2015.05.003

[3] RANEY R K. Hybrid dual-polarization synthetic aperture radar[J]. Remote Sensing, 2019, 11(13): 1521. doi: 10.3390/rs11131521

[4] SUN Hongbo, SHIMADA M, and XU Feng. Recent advances in synthetic aperture radar remote sensing—systems, data processing, and applications[J]. IEEE Geoscience and Remote Sensing Letters, 2017, 14(11): 2013–2016. doi: 10.1109/LGRS.2017.2747602

[5] KECHAGIAS-STAMATIS O and AOUF N. Automatic target recognition on synthetic aperture radar imagery: A survey[J]. IEEE Aerospace and Electronic Systems Magazine, 2021, 36(3): 56–81. doi: 10.1109/MAES.2021.3049857

[6] ZHANG Tianwen, ZHANG Xiaoling, LI Jianwei, et al. SAR Ship Detection Dataset (SSDD): Official release and comprehensive data analysis[J]. Remote Sensing, 2021, 13(18): 3690. doi: 10.3390/rs13183690

[7] YUE Zhenyu, GAO Fei, XIONG Qingxu, et al. A novel attention fully convolutional network method for synthetic aperture radar image segmentation[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2020, 13: 4585–4598. doi: 10.1109/JSTARS.2020.3016064

[8] LIU Chenwei, WANG Peiyao, and ZHU Daiyin. High-resolution ground playback system for video SAR based on FPGA[J]. Modern Radar, 2021, 43(2): 40–46. doi: 10.16592/j.cnki.1004-7859.2021.02.006 (in Chinese)

[9] HUANG Tai. Research on real-time video SAR imaging processing technology based on FPGA[D]. [Master dissertation], University of Electronic Science and Technology of China, 2022. (in Chinese)

[10] LI Danyang, FENG Haibing, NIE Xiaoliang, et al. Ship target detection of SAR image based on YOLO V5 in noise condition[J]. Shipboard Electronic Countermeasure, 2022, 45(6): 68–72, 99. doi: 10.16426/j.cnki.jcdzdk.2022.06.016 (in Chinese)

[11] YANG Geng, LEI Jie, XIE Weiying, et al. Algorithm/hardware codesign for real-time on-satellite CNN-based ship detection in SAR imagery[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 1–18. doi: 10.1109/TGRS.2022.3161499

[12] WIEHLE S, MANDAPATI S, GUNZEL D, et al. Synthetic aperture radar image formation and processing on an MPSoC[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 1–14. doi: 10.1109/TGRS.2022.3167724

[13] YU Jinxiang, YIN Tong, LI Shaoli, et al. Fast ship detection in optical remote sensing images based on sparse MobileNetV2 network[C]. The Thirteenth International Conference on Genetic and Evolutionary Computing, Qingdao, China, 2020: 262–269.

[14] GUO Huadong, FU Wenxue, and LIU Guang. Development of earth observation satellites[M]//GUO Huadong, FU Wenxue, and LIU Guang. Scientific Satellite and Moon-Based Earth Observation for Global Change. Singapore: Springer, 2019: 31–49.

[15] WANG Haibin, WANG Yangsheng, XIAO J H, et al. Impact of single-event upsets on convolutional neural networks in Xilinx Zynq FPGAs[J]. IEEE Transactions on Nuclear Science, 2021, 68(4): 394–401. doi: 10.1109/TNS.2021.3062014

[16] XIE Xiaofei, ZHAO Guodong, WEI Wei, et al. MobileNetV2 accelerator for power and speed balanced embedded applications[C]. IEEE 2nd International Conference on Data Science and Computer Application, Dalian, China: IEEE, 2022: 134–139.

[17] LI Zongling, WANG Luyuan, JIANG Shuai, et al. On orbit extraction method of ship target in SAR images based on ultra-lightweight network[J]. National Remote Sensing Bulletin, 2021, 25(3): 765–775. doi: 10.11834/jrs.20210160 (in Chinese)
