Asynchronous Federated Learning via Blockchain in Edge Computing Networks


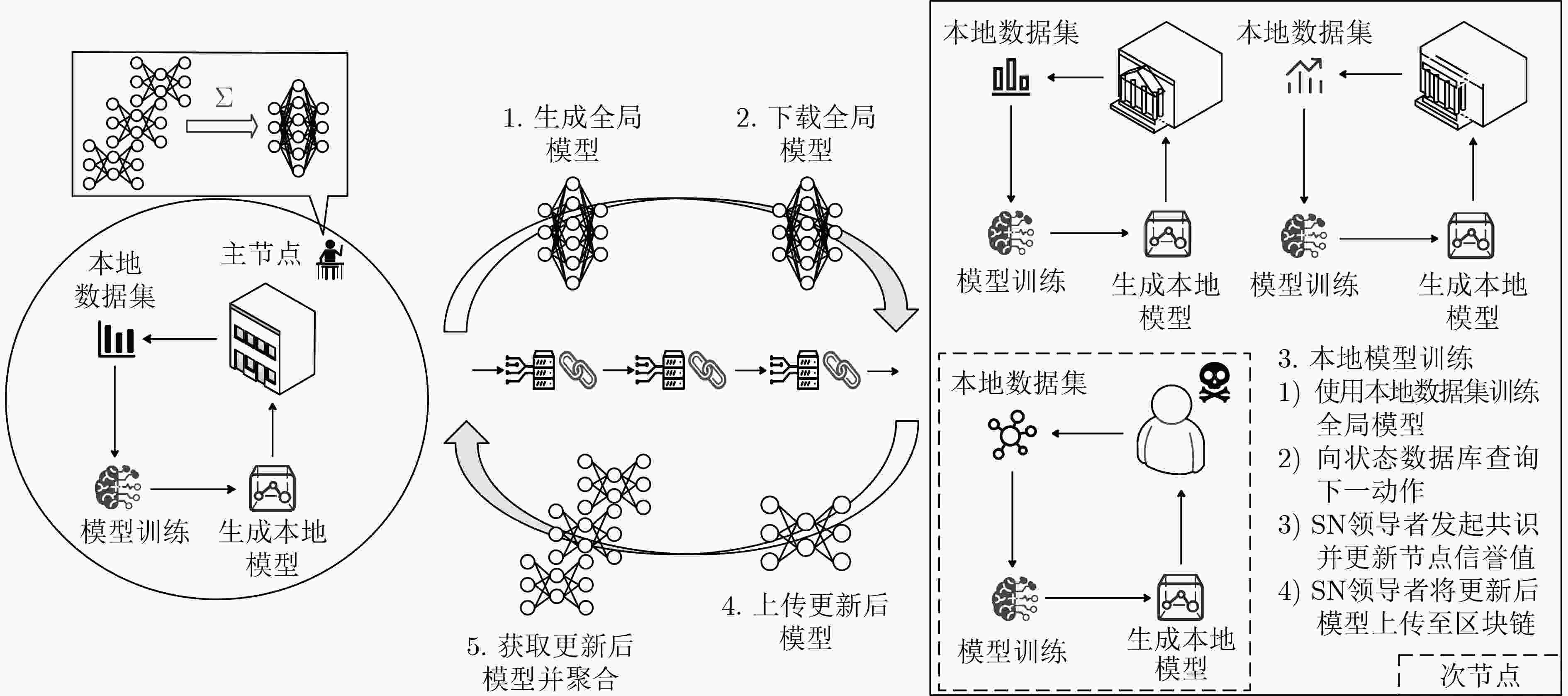

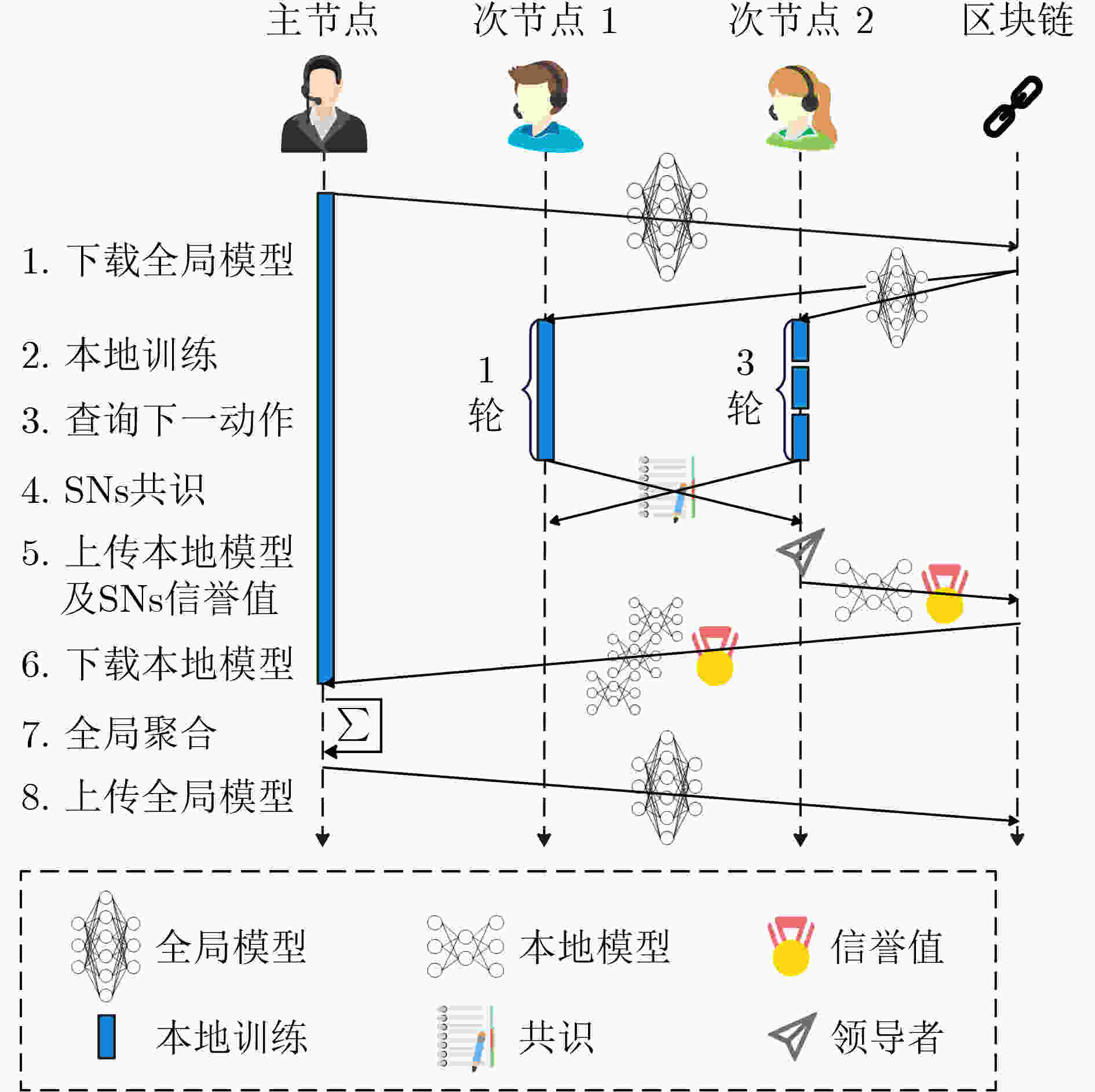

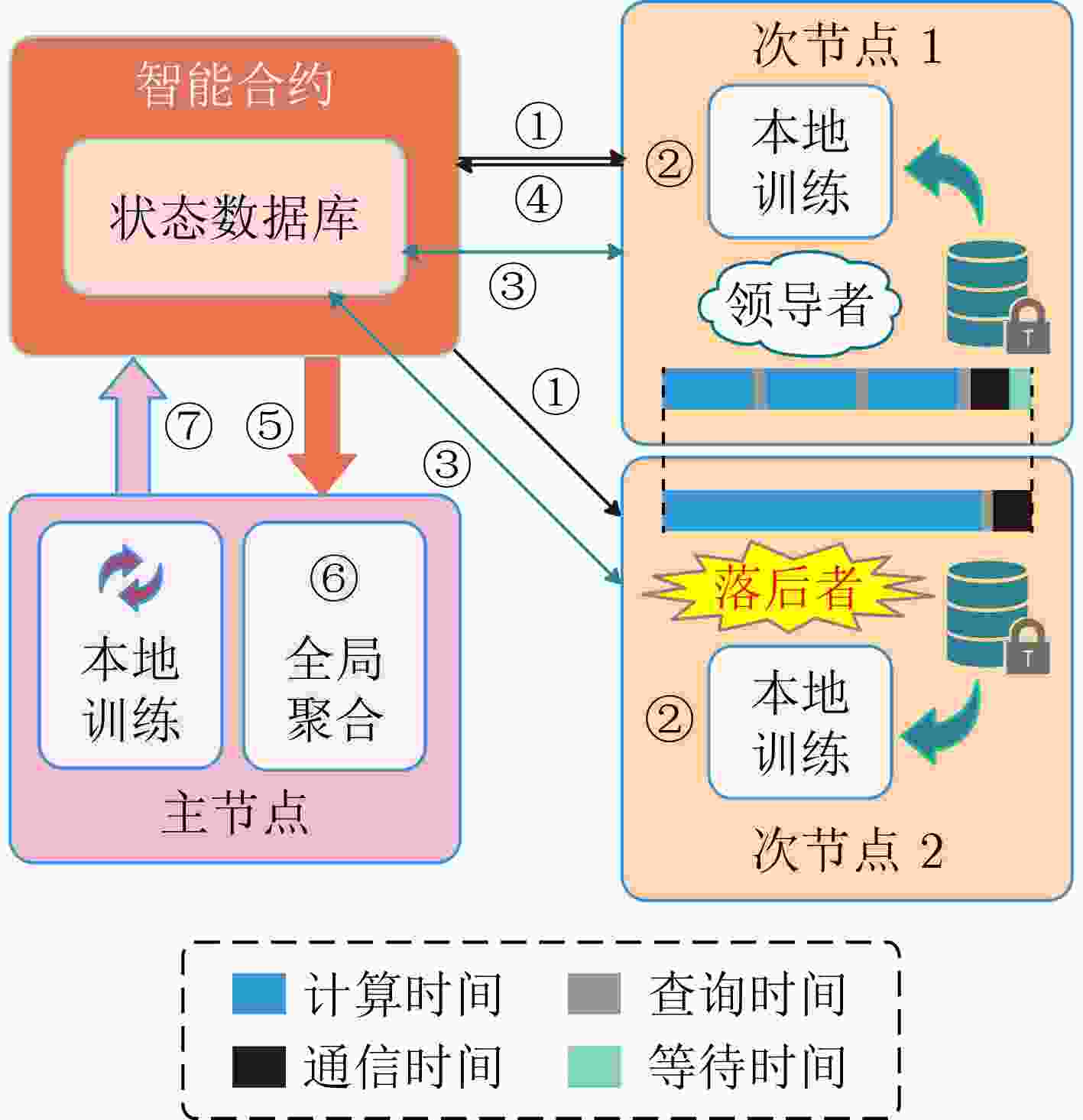

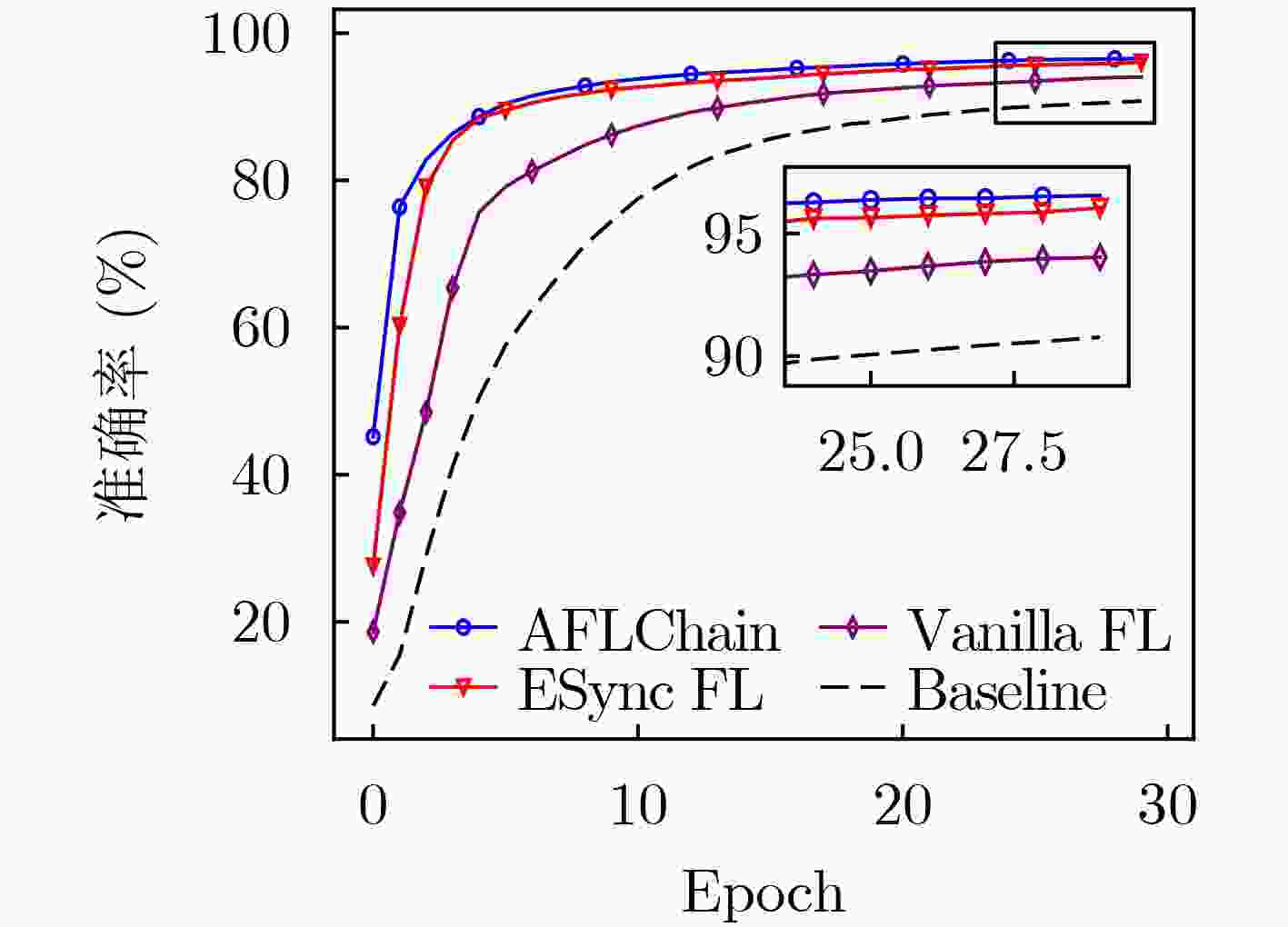

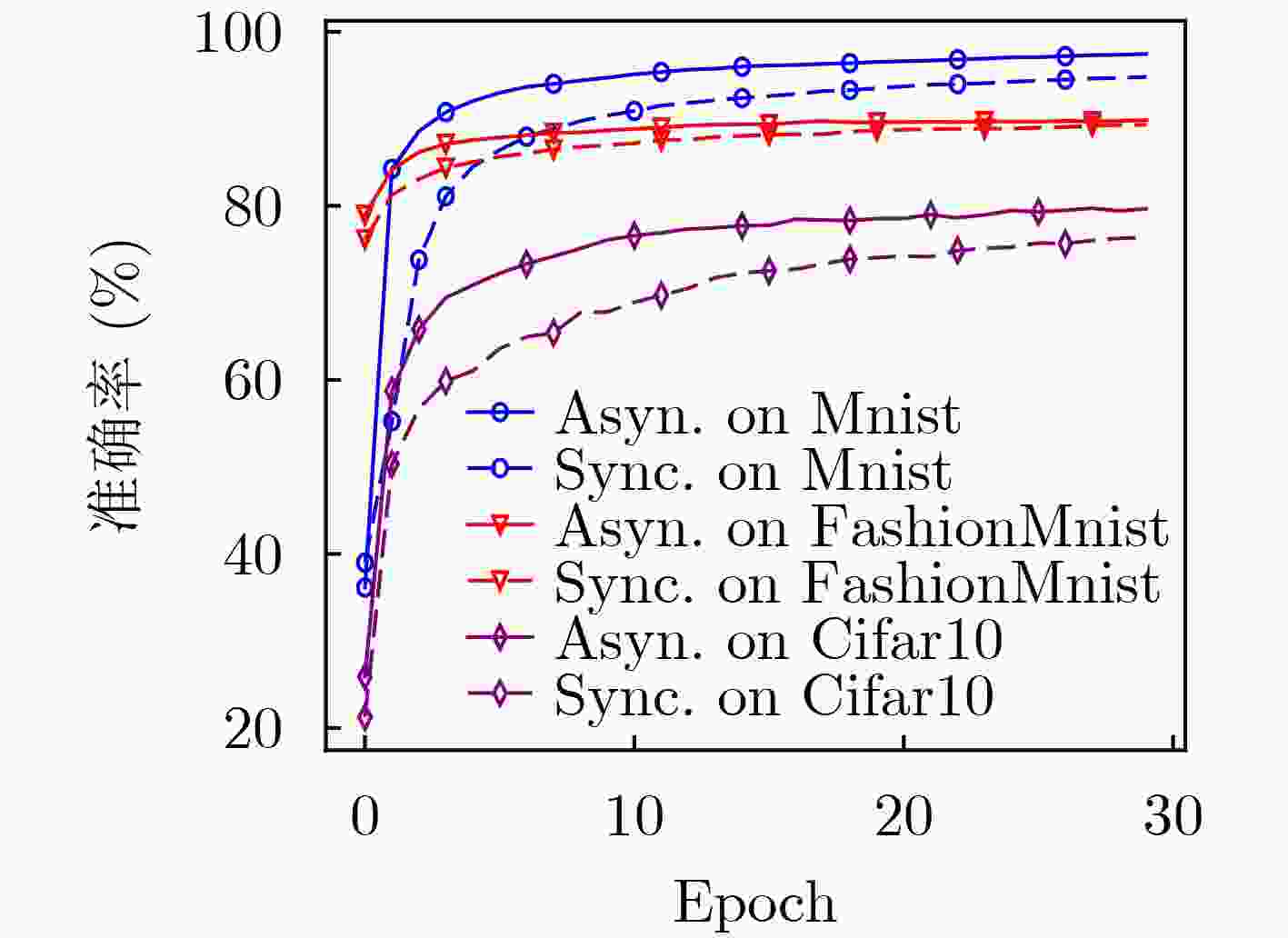

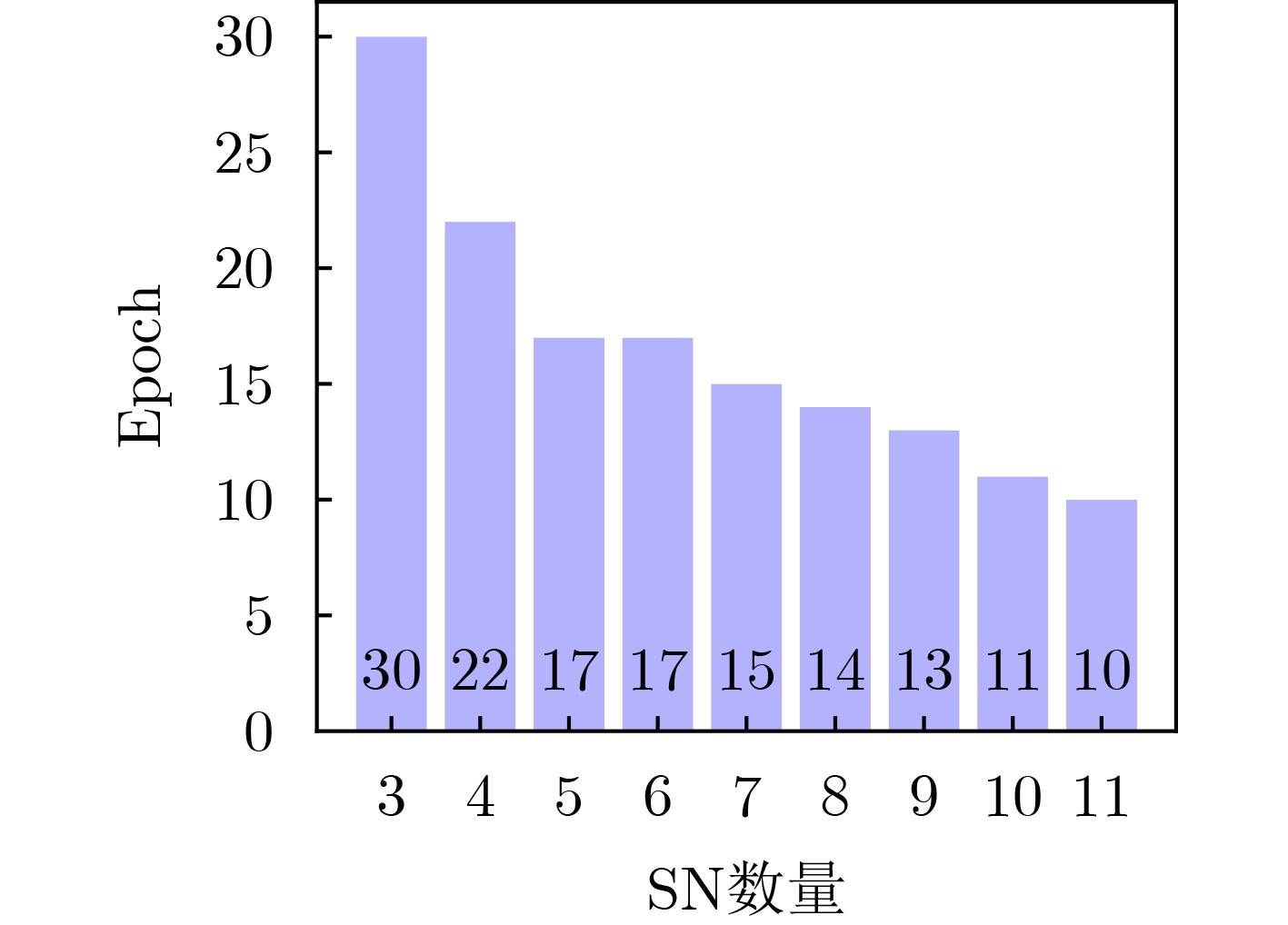

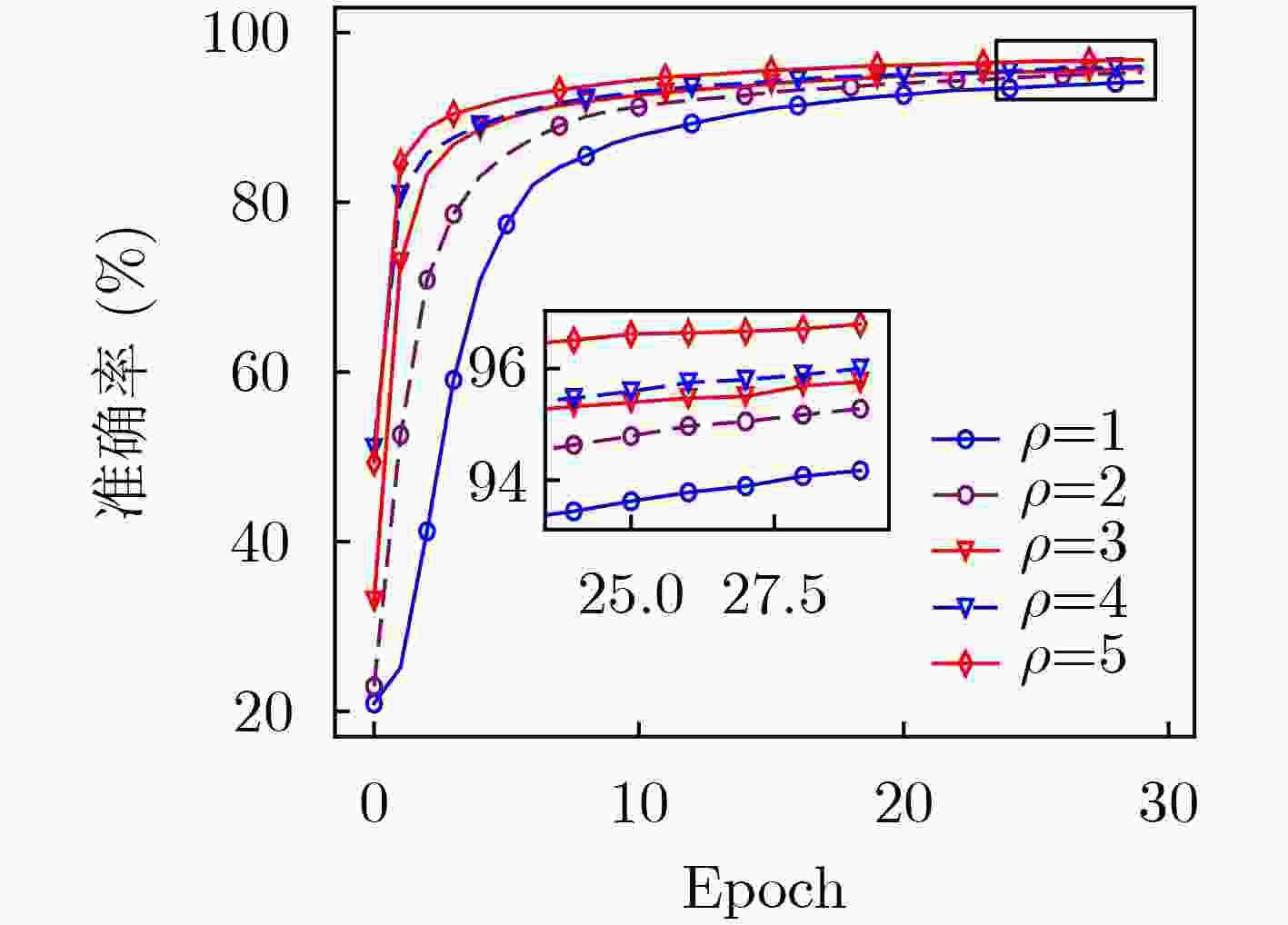

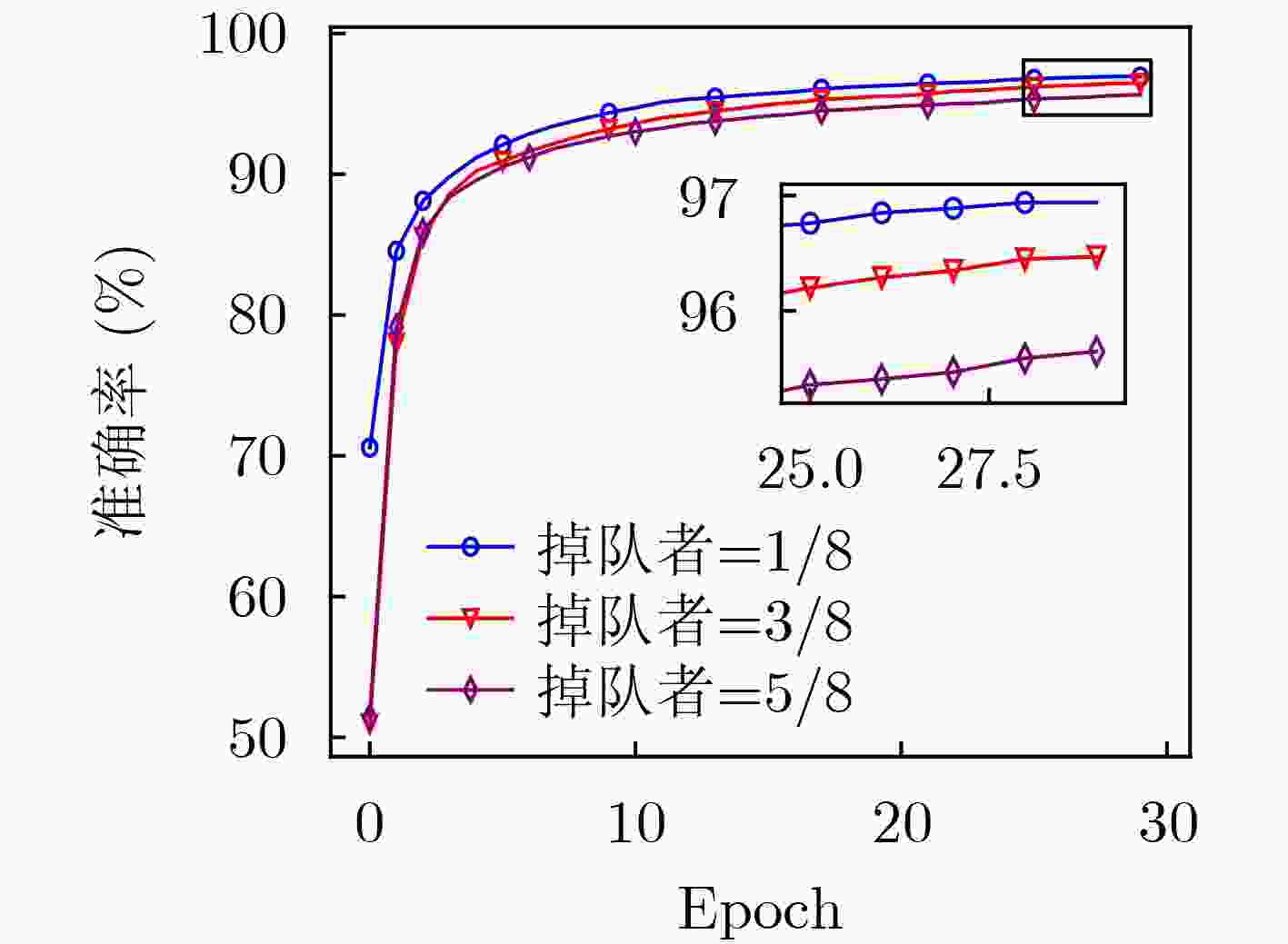

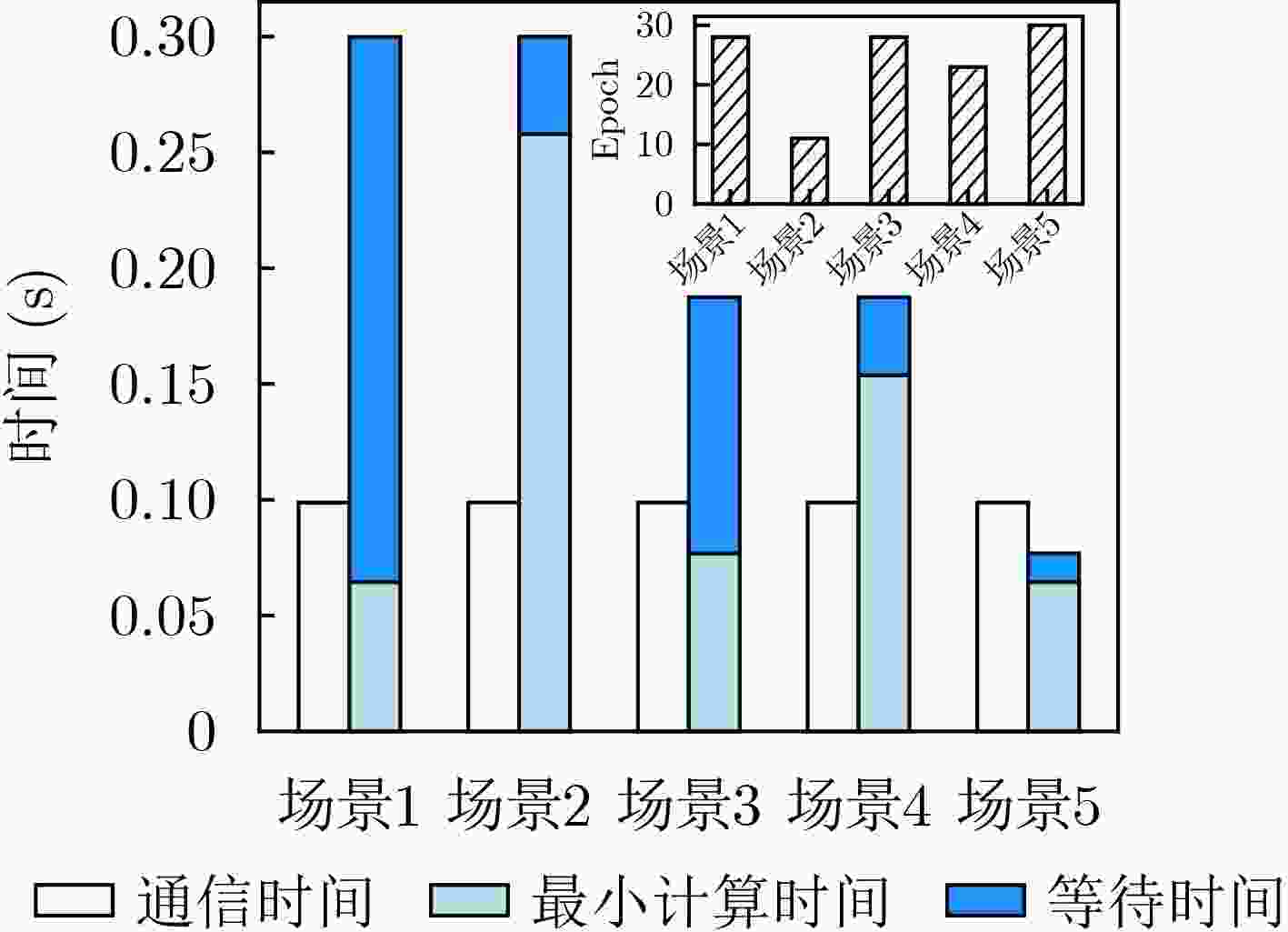

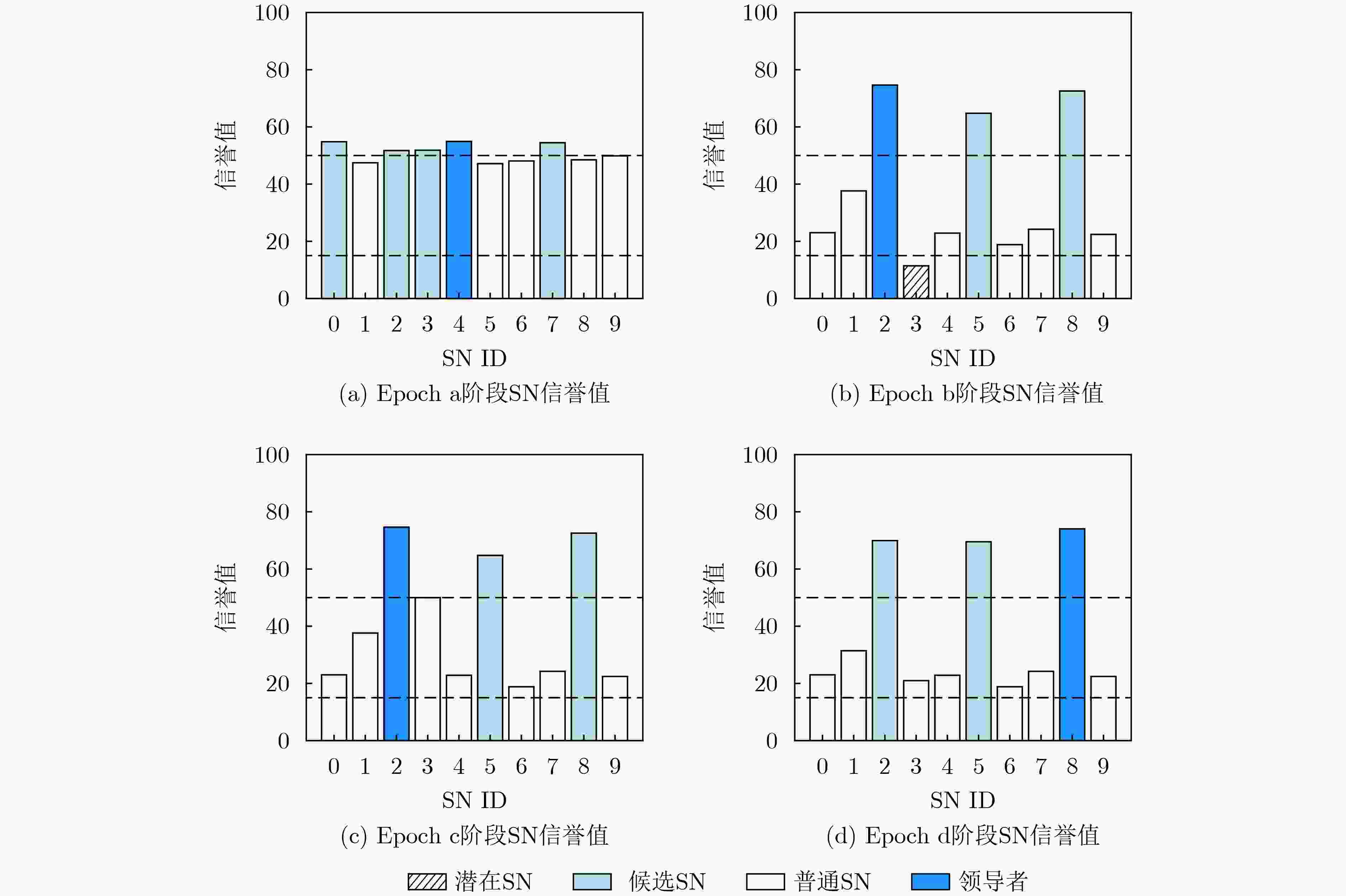

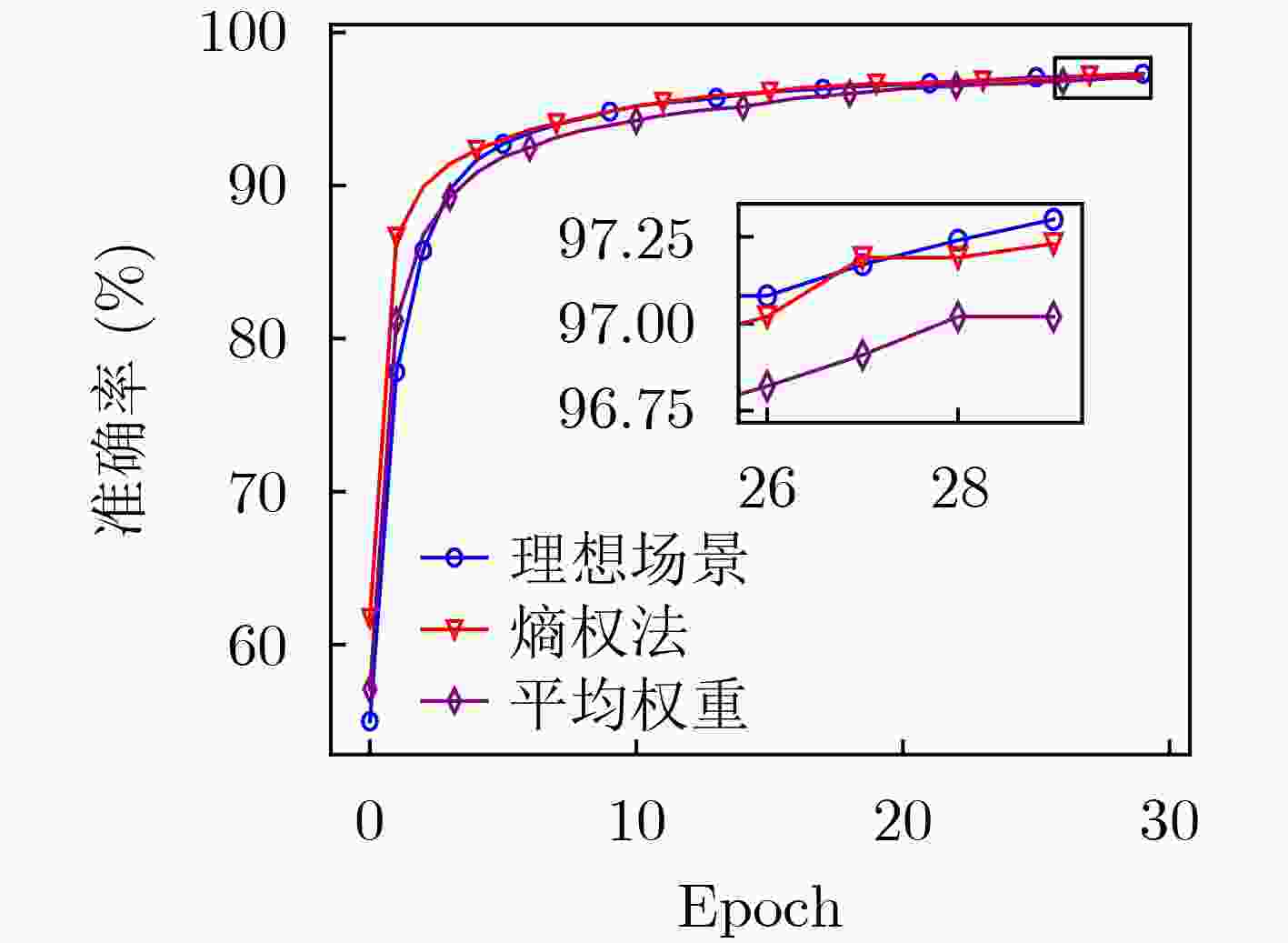

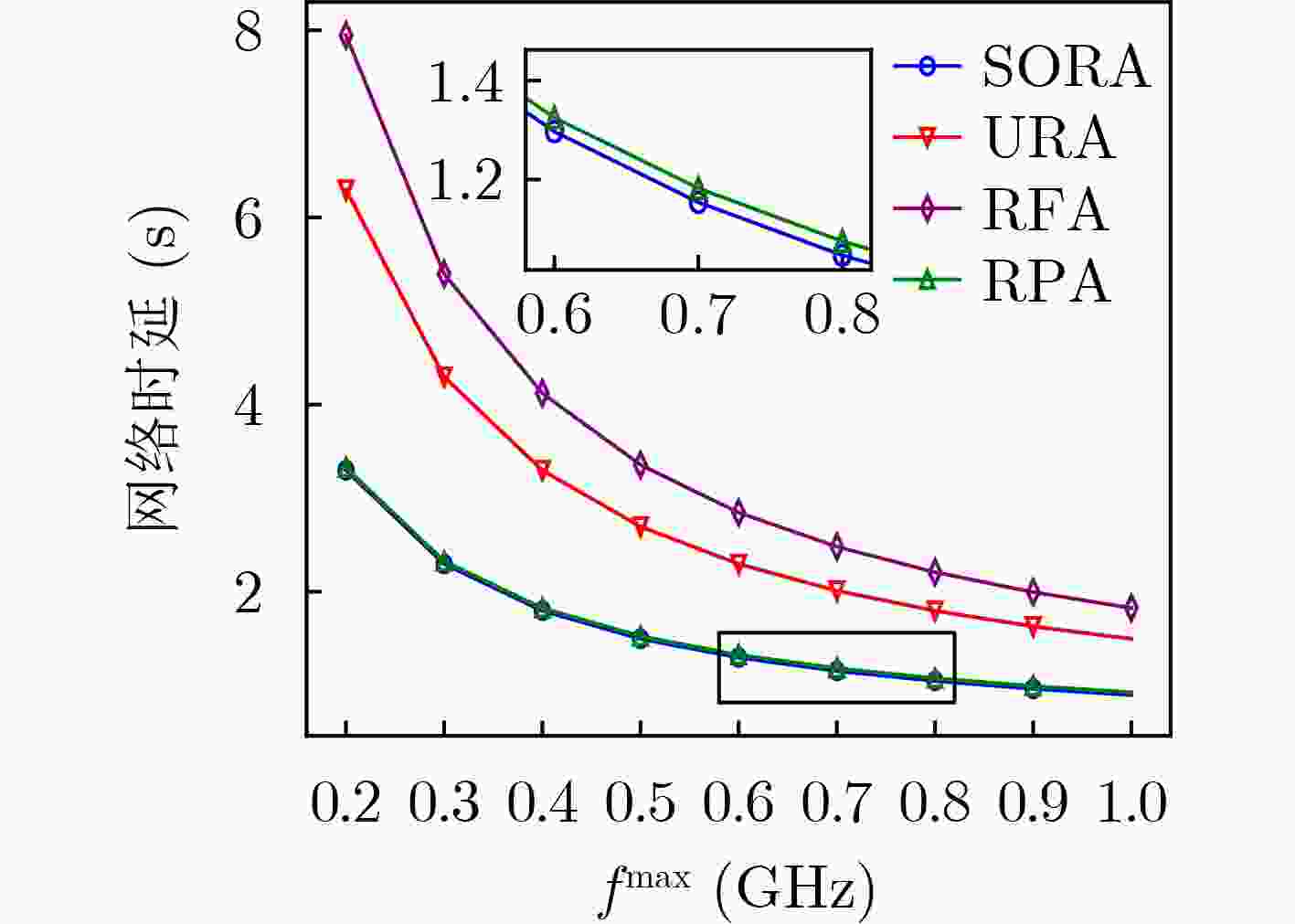

Abstract: The information explosion caused by surging data volumes has overwhelmed traditional centralized cloud computing, and the Edge Computing Network (ECN) has been proposed to alleviate the burden on cloud servers. In addition, enabling Federated Learning (FL) in the ECN allows data to be processed locally, which effectively addresses the data security problem of Edge Nodes (ENs) in collaborative learning. However, in the traditional FL architecture the central server is vulnerable to single-point attacks, resulting in system performance degradation or even task failure. This paper proposes an Asynchronous Federated Learning algorithm based on Blockchain technology (AFLChain) for the ECN scenario, which dynamically assigns training tasks to ENs according to their computing capabilities to improve learning efficiency. In addition, an entropy weight reputation mechanism based on the computing capability, model training progress, and historical reputation of ENs is introduced to assess and grade the enthusiasm of ENs, and low-quality ENs are eliminated to further improve the performance of AFLChain. Finally, the Subgradient based Optimal Resource Allocation (SORA) algorithm is proposed to minimize the overall network latency by jointly optimizing transmission power and computing resource allocation. Simulation results show the model training efficiency of AFLChain and the convergence of the SORA algorithm, demonstrating the effectiveness of the proposed algorithms.


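Algorithm 1 State database decision procedure

Input: state information of SN $k$; state table ${[(k, h_k, r_k, T_k^{\text{cmp}}, T_k^{\text{qry}}, T_k, a_k)]_{\forall k \in [1,K]}}$

Output: next action $a_k$

1. Update the state information of SN $k$ in the state table.
2. Find the SN with the lowest computing capability via Eq. (8) (denoted SN $\xi$ in the cases below).
3. Case 1: SN $k$ has just entered a round of local training, or SN $\xi$ has not yet finished training. If $r_k = 1$ or $h_k > h_\xi$, set $r_k \leftarrow r_k + 1$ and return $a_k = 1$.
4. Set $t_c = \text{CurrentTime}$.
5. Case 2: SN $\xi$ has finished training, or the remaining waiting time is not enough to complete one round of training. If $k = \xi$ or $a_\xi = 0$ or Eq. (10) does not hold, update the action information in the state table and return $a_k = 0$.
6. Case 3: the remaining waiting time is enough for SN $k$ to complete one round of local training. Set $r_k \leftarrow r_k + 1$ and return $a_k = 1$.

Algorithm 2 Subgradient based Optimal Resource Allocation (SORA) algorithm

Input: Lagrange multiplier update step sizes $(\epsilon_1, \epsilon_2)$, initial Lagrange multipliers $(\pi_1^0, \pi_2^0)$, maximum tolerance threshold $\epsilon_3$, $t = 0$, maximum number of iterations $t_{\max}$

Output: optimal resource allocation $(f_k^{\text{cmp}*}, f_k^{b*}, p_k^*)$

1. While $t < t_{\max}$ do:
   1. Obtain $f_k^{\text{cmp}*}$ and $f_k^{b*}$ from Eq. (36) and Eq. (42), respectively.
   2. Update the Lagrange multipliers $\pi_1$ and $\pi_2$ via Eq. (39) and Eq. (40), respectively.
   3. If $\left|\pi_1^{t+1} - \pi_1^t\right| < \epsilon_3$ and $\left|\pi_2^{t+1} - \pi_2^t\right| < \epsilon_3$, break; otherwise set $t = t + 1$.
2. End while.
3. Obtain $p_k^*$ from Eq. (44).

Table 1 Simulation parameter settings

| Parameter | Description | Value |
| --- | --- | --- |
| $K$ | Number of SNs | 30 |
| $H$ | Number of epochs | 30 |
| $B$ | Bandwidth | 1 MHz |
| $\delta_b$ | Block size | 8 MB |
| $n_0$ | Noise power | -174 dBm/Hz |
| $\lambda$ | Global learning rate | 1 |
| $\eta$ | Local learning rate | 0.001 |
| $b$ | Data sampling size | 32 |
| $D$ | Training sample size | 3 MB |
| $c_k$ | Cycles required by SN $k$ to complete training | 20 cycles/bit |
| $f_k^{\max}$ | Maximum computing capability of SN $k$ | 0.2–1 GHz |
| $p_k$ | Transmission power of SN $k$ | 40 W |
| $\text{th}_{\text{upper}}$ | Upper quantile point of the reputation value | 50 |
| $\text{th}_{\text{low}}$ | Lower quantile point of the reputation value | 18 |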
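The three-case control flow of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation. Eq. (8) and Eq. (10) are not reproduced on this page, so they appear only as caller-supplied placeholders: `find_slowest` and `can_finish_round` are hypothetical names, as are the `SNState` record and the `decide_next_action` function. The timing fields of the state table are omitted, and reading the current time $t_c$ is left to the Eq. (10) placeholder.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class SNState:
    """Subset of one state-table row; the field names mirror h_k, r_k, a_k."""
    h: int      # h_k as stored in the state table
    r: int      # r_k as stored in the state table
    a: int = 1  # a_k: 1 = run another local round, 0 = stop and wait


def decide_next_action(
    k: int,
    reported: SNState,
    table: Dict[int, SNState],
    find_slowest: Callable[[Dict[int, SNState]], int],            # placeholder for Eq. (8)
    can_finish_round: Callable[[int, Dict[int, SNState]], bool],  # placeholder for Eq. (10)
) -> int:
    """Return the next action a_k for SN k, following the three cases."""
    table[k] = reported        # update SN k's entry in the state table
    xi = find_slowest(table)   # index of the lowest-capability SN
    s_k, s_xi = table[k], table[xi]

    # Case 1: SN k just entered a round, or SN xi has not finished training.
    if s_k.r == 1 or s_k.h > s_xi.h:
        s_k.r += 1
        return 1

    # Case 2: SN xi is done, or the remaining wait is too short for a round.
    # (The placeholder predicate is expected to read the current time itself.)
    if k == xi or s_xi.a == 0 or not can_finish_round(k, table):
        s_k.a = 0              # record the stop action in the state table
        return 0

    # Case 3: enough waiting time remains for one more local round.
    s_k.r += 1
    return 1


# Usage with toy placeholders: SN 1 is treated as the slowest node.
table = {1: SNState(h=2, r=3), 2: SNState(h=2, r=4)}
slowest = lambda t: 1
act_case3 = decide_next_action(2, SNState(h=2, r=4), table, slowest, lambda k, t: True)
act_case2 = decide_next_action(2, SNState(h=2, r=5), table, slowest, lambda k, t: False)
act_case1 = decide_next_action(2, SNState(h=2, r=1), table, slowest, lambda k, t: False)
```

Passing the two equations in as callables keeps the sketch independent of their exact form, so the branch structure can be exercised without committing to formulas that are not shown here.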
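The loop structure of Algorithm 2 (closed-form primal step, subgradient update of the multipliers, stop when the multiplier change falls below a tolerance) can be illustrated on a toy problem. The sketch below does not use the paper's Eqs. (36), (39), (40), (42) or (44), which are not reproduced on this page. Instead it assumes a stand-in problem with a single multiplier: minimize $\sum_k c_k / f_k$ subject to $\sum_k f_k \le F$, whose Lagrangian minimizer is $f_k = \sqrt{c_k/\pi}$. The function name and all parameter values are assumptions chosen for illustration.

```python
import math
from typing import List, Tuple


def dual_subgradient_allocation(
    c: List[float],      # per-node workload coefficients (toy values)
    budget: float,       # total resource F shared by all nodes
    step: float = 0.1,   # multiplier step size (plays the role of epsilon_1)
    pi0: float = 0.5,    # initial Lagrange multiplier
    tol: float = 1e-9,   # stopping tolerance (plays the role of epsilon_3)
    t_max: int = 1000,   # iteration cap
) -> Tuple[List[float], float]:
    """Solve min sum(c_k / f_k) s.t. sum(f_k) <= budget by dual subgradient ascent."""
    pi = pi0
    for _ in range(t_max):
        # Primal step: closed-form minimizer of c_k / f_k + pi * f_k.
        f = [math.sqrt(ck / pi) for ck in c]
        # Dual step: move pi along the constraint violation, keep it positive.
        pi_next = max(pi + step * (sum(f) - budget), 1e-12)
        converged = abs(pi_next - pi) < tol
        pi = pi_next
        if converged:
            break
    # Recover the allocation from the final multiplier.
    f = [math.sqrt(ck / pi) for ck in c]
    return f, pi


# Toy usage: three nodes sharing a budget of 6 units.
alloc, multiplier = dual_subgradient_allocation([1.0, 4.0, 9.0], 6.0)
```

For this toy instance the constraint is tight at the optimum, so the multiplier should settle where $\sum_k \sqrt{c_k/\pi} = F$, which gives $\pi = 1$ and an allocation close to $(1, 2, 3)$.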

