Cross-modal Retrieval Enhanced Energy-efficient Multimodal Federated Learning in Wireless Networks

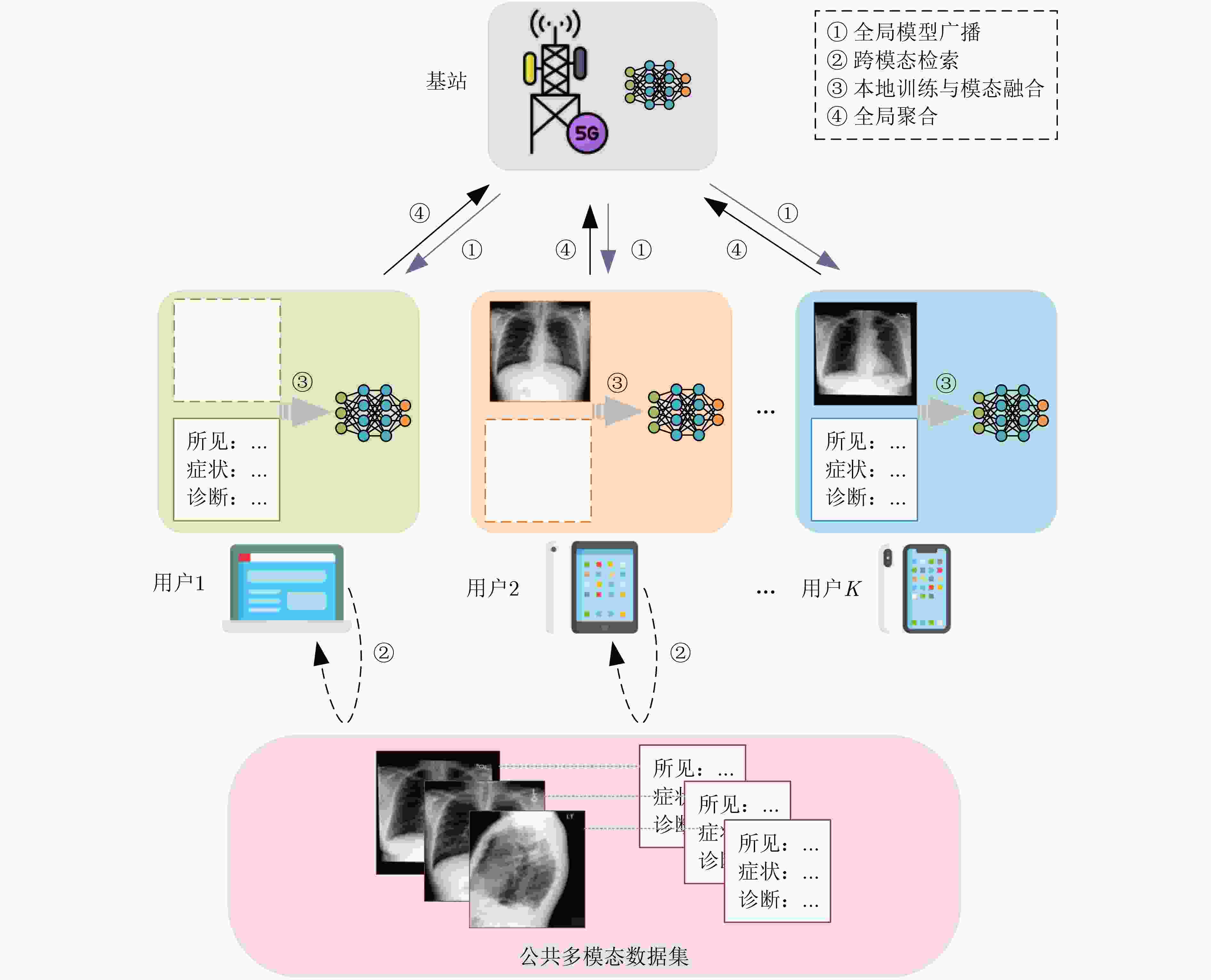

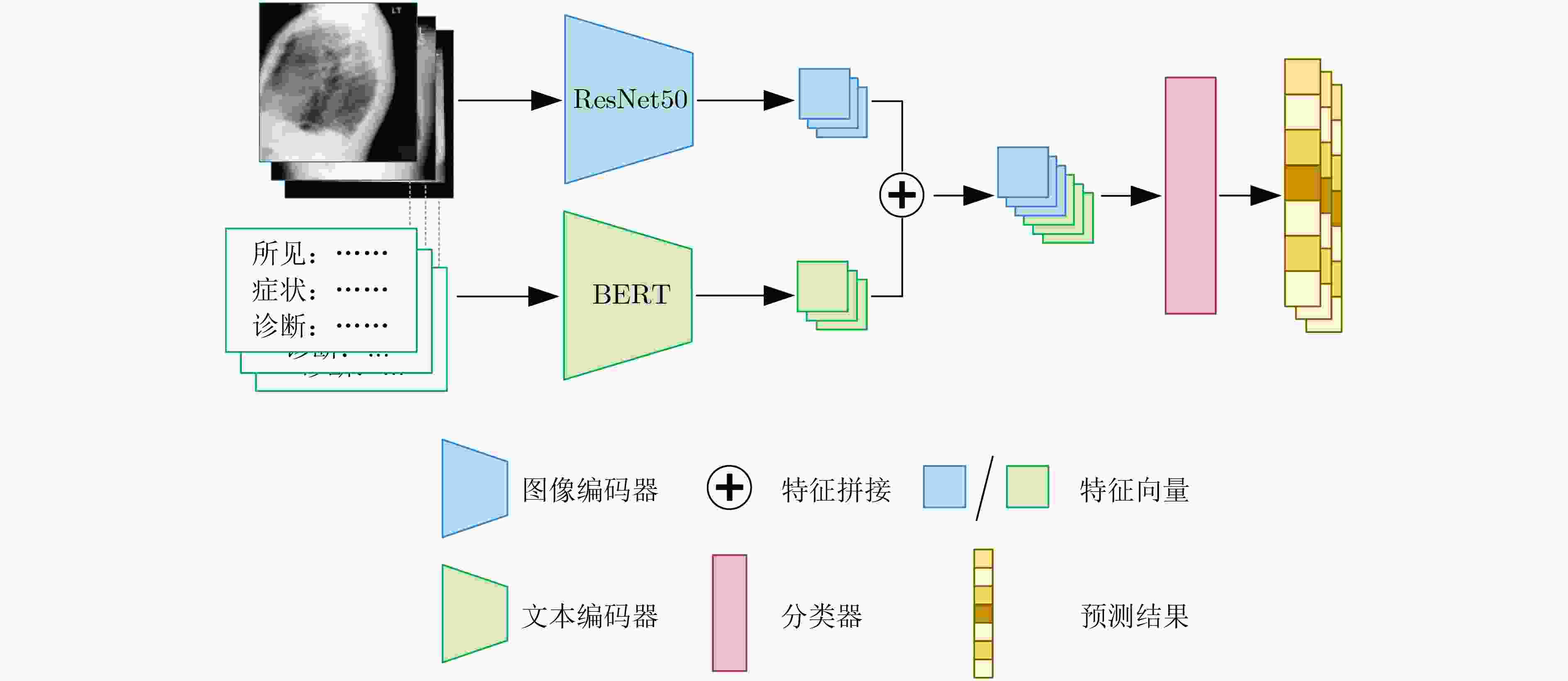

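Summary: By integrating information from multiple modalities, Multimodal Federated Learning (MFL) often outperforms unimodal Federated Learning (FL) in areas such as healthcare and intelligent sensing. In wireless edge scenarios, however, energy is limited and samples frequently lack a modality; completing every sample directly inflates computation and communication overhead and raises overall energy consumption. This paper therefore proposes a Cross-modal Retrieval Enhanced Energy-efficient Multimodal Federated Learning (CREEMFL) framework. Its core idea is selective imputation: only a portion of the modality-missing samples are completed by retrieval from a public multimodal library, and the remaining samples are zero-padded to limit computation. A system-level energy consumption model is then established, and the retrieval ratio is included as a variable in the joint optimization to balance per-round cost against overall energy consumption. The paper constructs a two-layer optimization: the outer layer determines the retrieval ratio by empirical search, and the inner layer, for a given retrieval ratio, jointly allocates uplink transmission time, transmit power, and Central Processing Unit (CPU) frequency, with closed-form or semi-closed-form updates. Experiments on the MIMIC-CXR chest X-ray dataset show that CREEMFL significantly shortens completion time and reduces total energy while maintaining or improving accuracy, verifying the effectiveness of the proposed framework.

Abstract: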

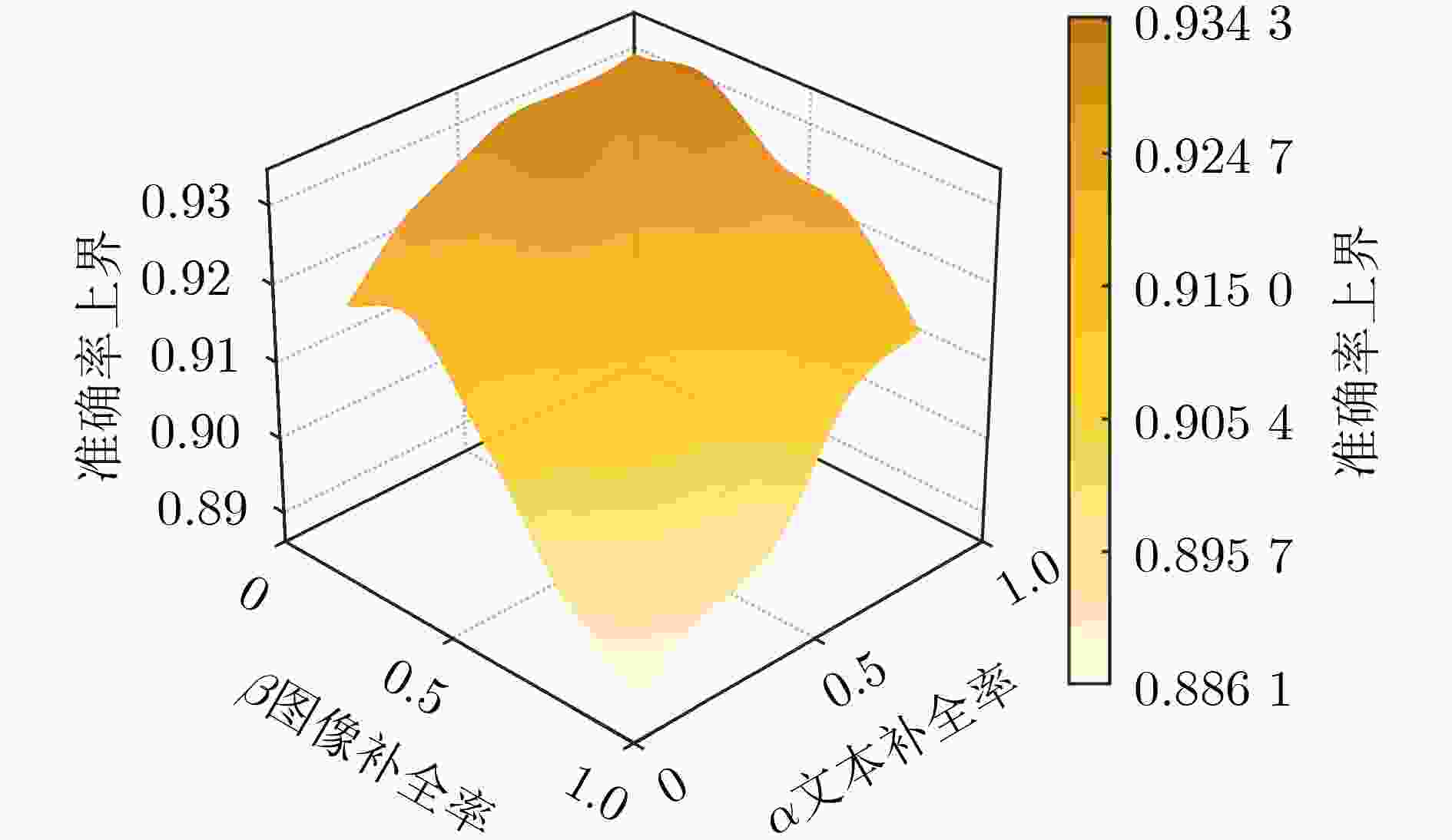

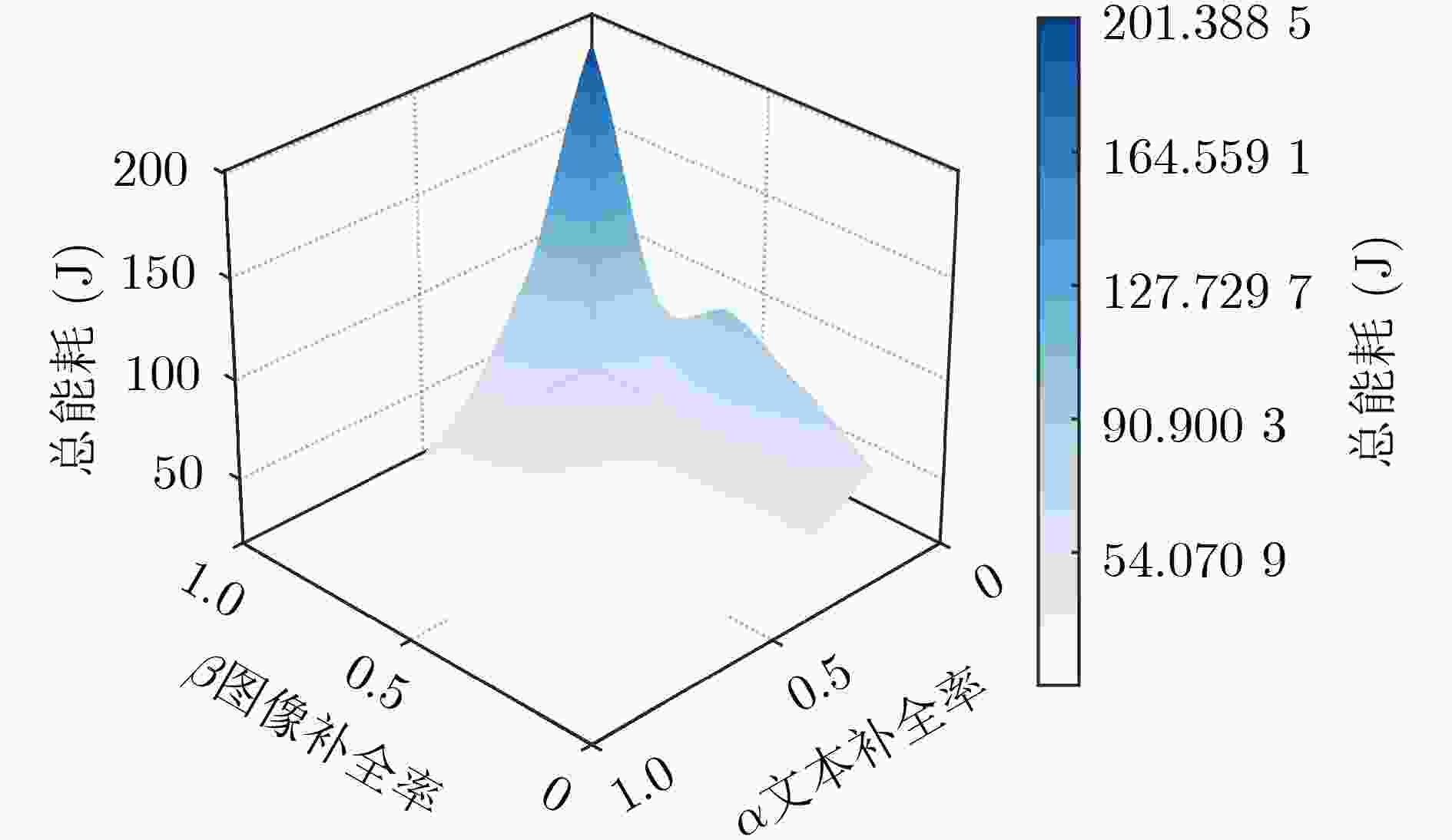

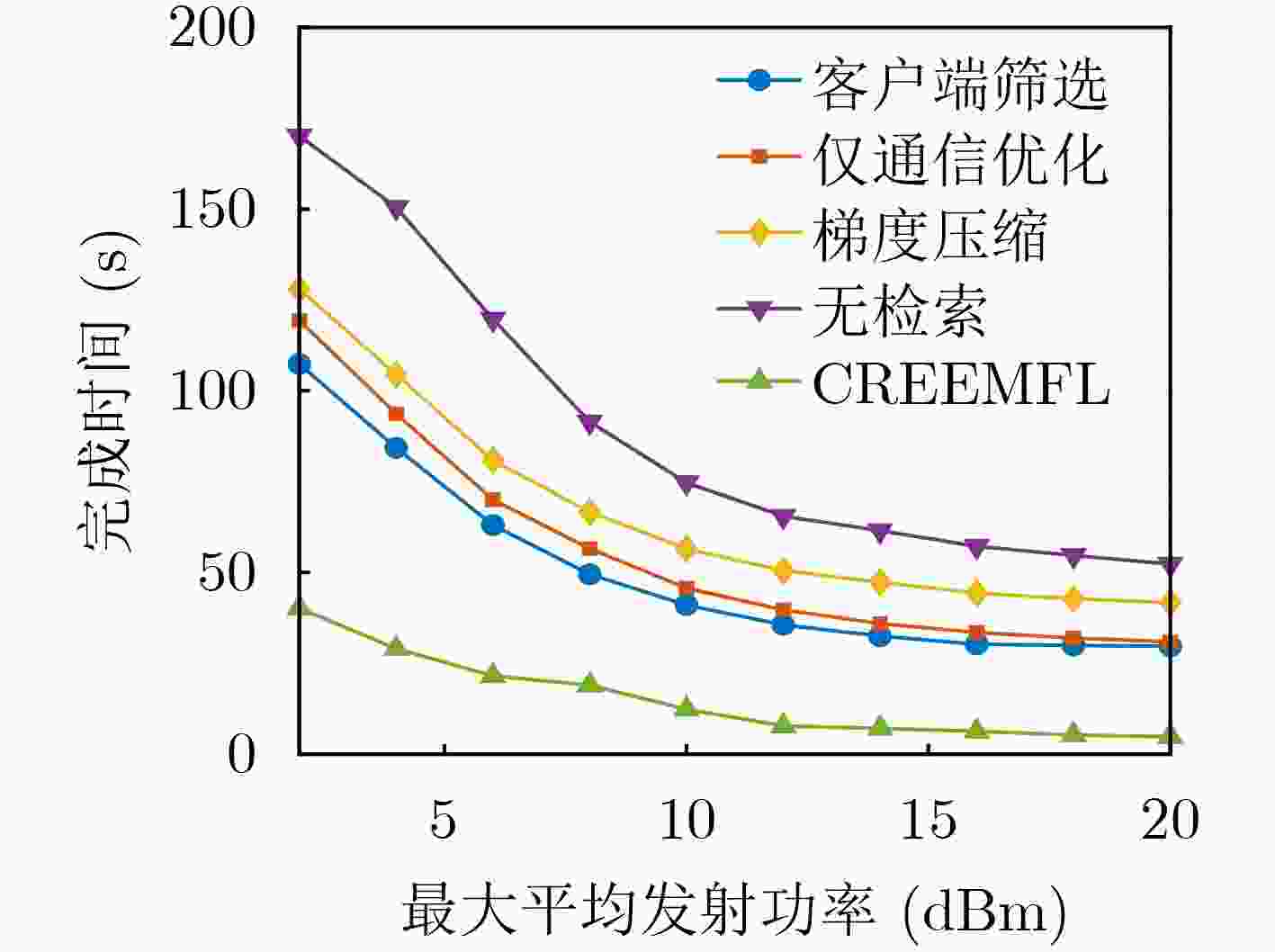

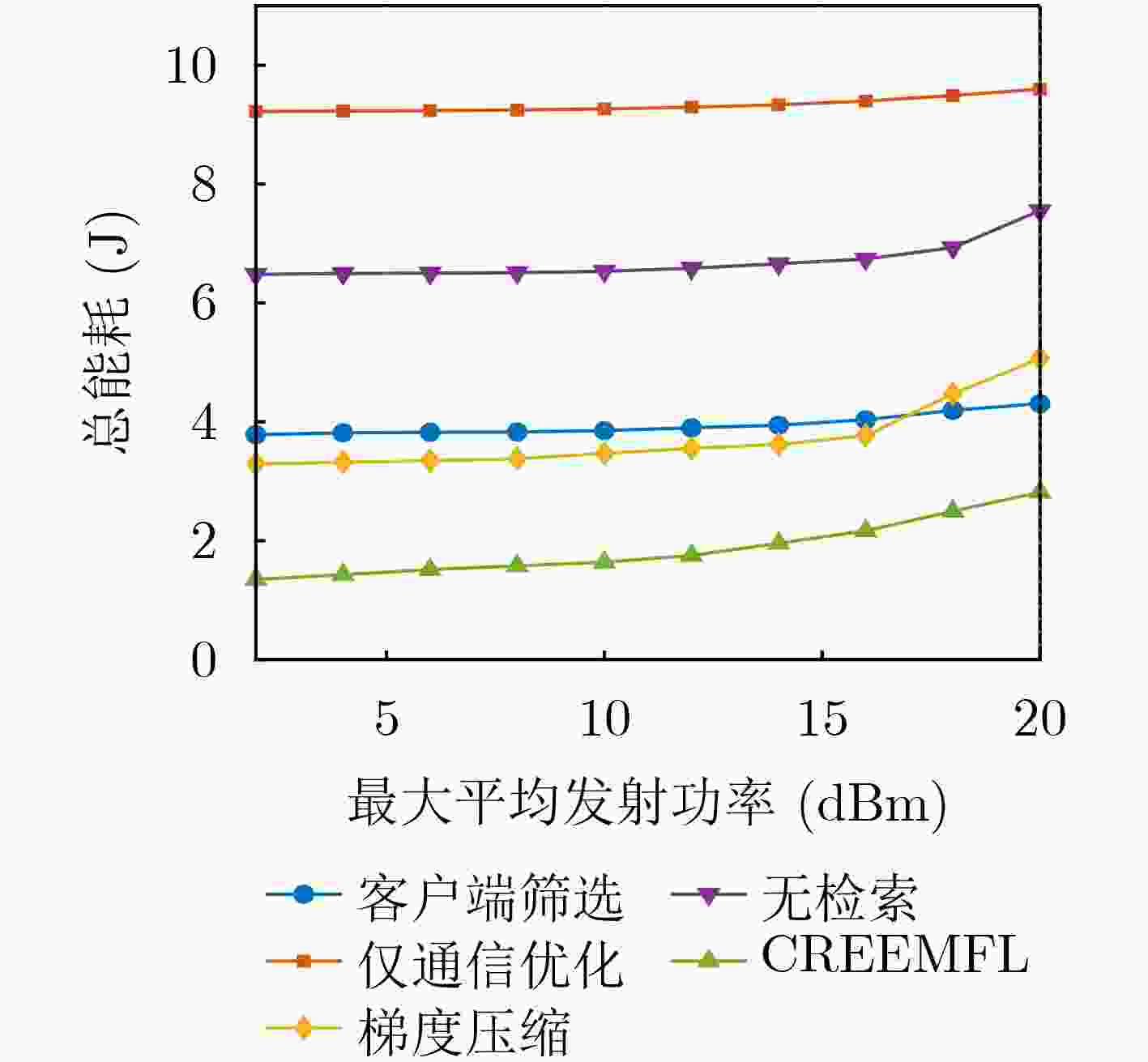

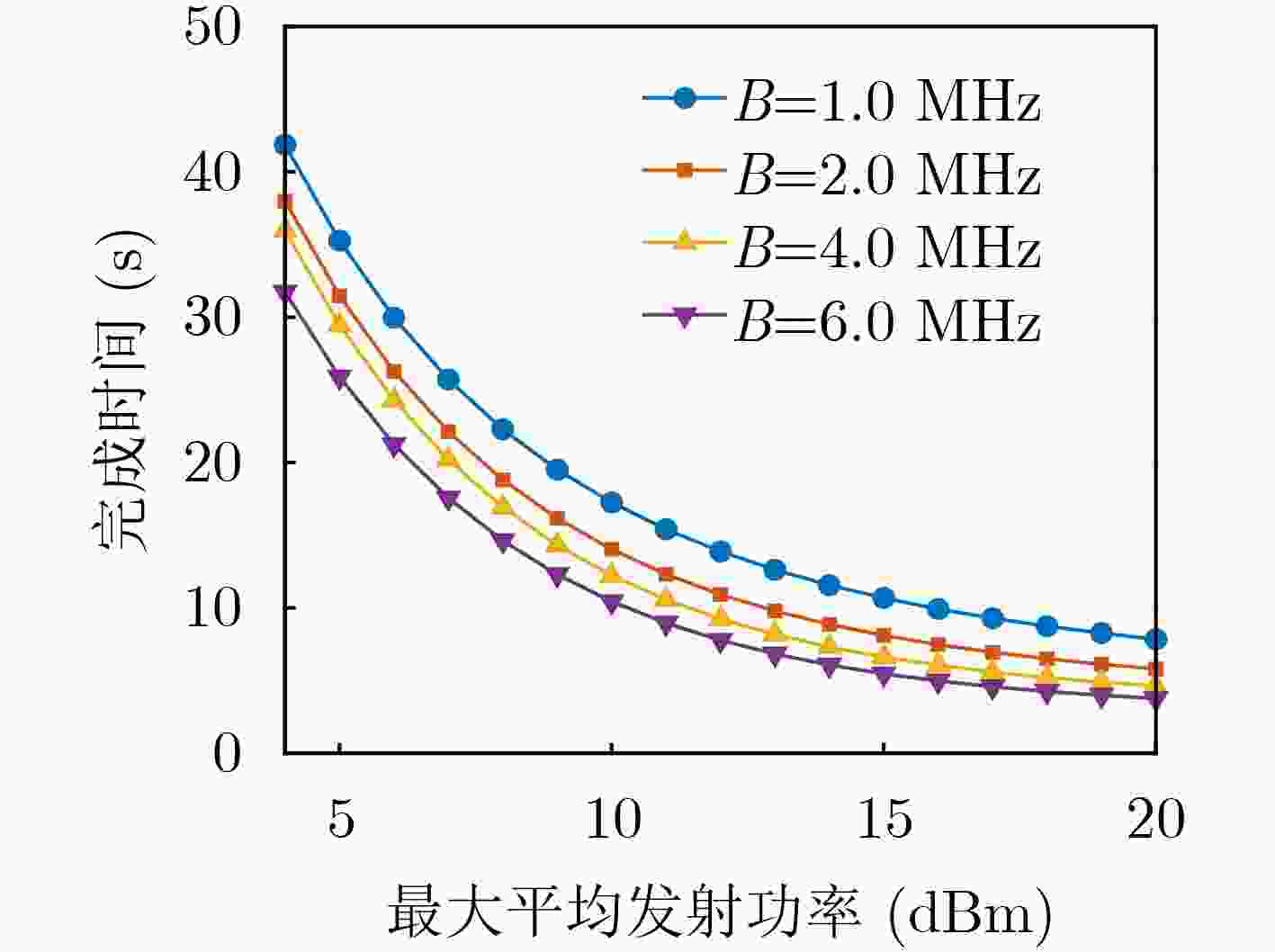

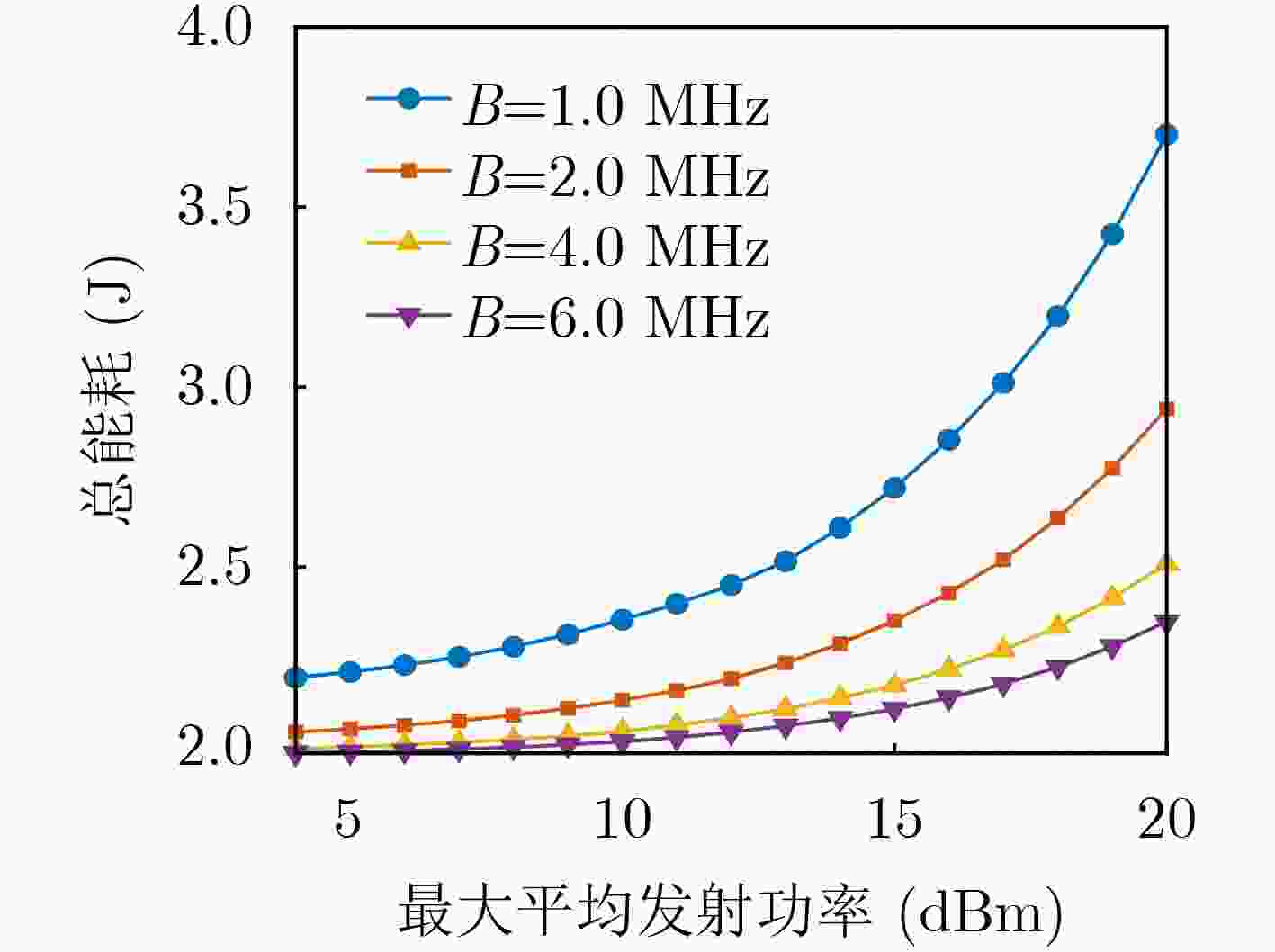

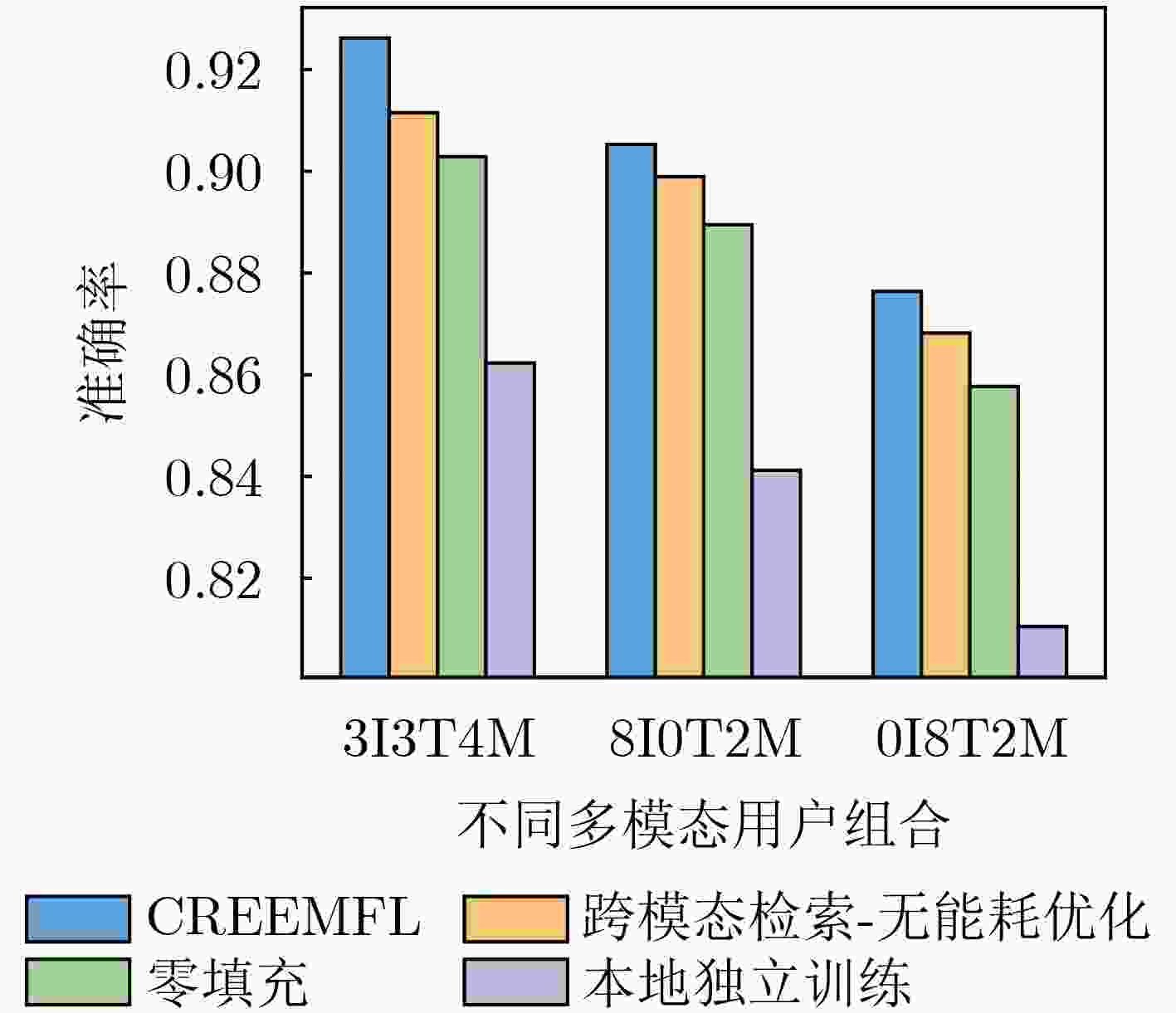

Objective: Multimodal Federated Learning (MFL) uses complementary information from multiple modalities, yet in wireless edge networks it is restricted by limited energy and frequent missing modalities because many clients store only images or only reports. This study presents Cross-modal Retrieval Enhanced Energy-efficient Multimodal Federated Learning (CREEMFL), which applies selective completion and joint communication–computation optimization to reduce training energy under latency and wireless constraints.

Methods: CREEMFL completes part of the incomplete samples by querying a public multimodal subset, and processes the remaining samples through zero padding. Each selected user downloads the global model, performs image-to-text or text-to-image retrieval, conducts local multimodal training, and uploads model updates for aggregation. An energy–delay model couples local computation and wireless communication and treats the required number of global rounds as a function of retrieval ratios. Based on this model, an energy minimization problem is formulated and solved using a two-layer algorithm with an outer search over retrieval ratios and an inner optimization of transmission time, Central Processing Unit (CPU) frequency, and transmit power.

Results and Discussions: Simulations on a single-cell wireless MFL system show that increasing the ratio of completing text from images improves test accuracy and reduces total energy. In contrast, a large ratio of completing images from text provides limited accuracy gain but increases energy consumption (Fig. 3, Fig. 4). Compared with four representative baselines, CREEMFL achieves shorter completion time and lower total energy across a wide range of maximum average transmit powers (Fig. 5, Fig. 6). For CREEMFL, increased system bandwidth further reduces completion time and energy consumption (Fig. 7, Fig. 8). Under different user modality compositions, CREEMFL also attains higher test accuracy than local training, zero padding, and cross-modal retrieval without energy optimization (Fig. 9).

Conclusions: CREEMFL integrates selective cross-modal retrieval and joint communication–computation optimization for energy-efficient MFL. By treating retrieval ratios as variables and modeling their effect on global convergence rounds, it captures the coupling between per-round costs and global training progress. Simulations verify that CREEMFL reduces training completion time and total energy while preserving classification accuracy in resource-constrained wireless edge networks.
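The selective completion step described in the Methods can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `complete_missing_text`, the use of cosine similarity over pre-computed feature vectors, the random choice of which samples to retrieve for, and the rounding down of the retrieved count are all assumptions made for illustration. Only the image-to-text direction is shown; the text-to-image direction would mirror it.

```python
import math
import random


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0


def complete_missing_text(samples, public_pairs, ratio, text_dim, seed=0):
    """Hypothetical selective completion for samples that lack the text modality.

    samples:      list of (image_vec, text_vec or None)
    public_pairs: list of (image_vec, text_vec) from a public multimodal library
    ratio:        fraction of the text-missing samples completed by retrieval
    text_dim:     length of the zero vector used for the remaining samples
    """
    rng = random.Random(seed)
    missing = [i for i, (_, txt) in enumerate(samples) if txt is None]
    k = int(ratio * len(missing))          # assumption: round down
    retrieved = set(rng.sample(missing, k))

    completed = []
    for i, (img, txt) in enumerate(samples):
        if txt is not None:
            completed.append((img, txt))   # complete sample, keep as-is
        elif i in retrieved:
            # Image-to-text retrieval: borrow the text of the most similar public image.
            _, best_txt = max(public_pairs, key=lambda pair: cosine(img, pair[0]))
            completed.append((img, list(best_txt)))
        else:
            completed.append((img, [0.0] * text_dim))  # zero padding
    return completed, retrieved
```

Setting `ratio` to 0 reduces this sketch to pure zero padding and setting it to 1 retrieves for every text-missing sample, which are the two extremes that the retrieval ratio trades off.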

Algorithm 1 Retrieval ratio selection and joint resource allocation

Input: candidate retrieval ratio grid $ ({\boldsymbol{\alpha}} ,{\boldsymbol{\beta}} )\in \mathcal{G} $; tolerance $ \epsilon $

Output: optimal retrieval ratios $ ({{\boldsymbol{\alpha}} }^{* },{{\boldsymbol{\beta}} }^{* }) $ and resource allocation $ ({\boldsymbol{t}}^{*},{\boldsymbol{f}}^{*},{\boldsymbol{p}}^{*}) $

(1) for each $ ({\boldsymbol{\alpha}} ,{\boldsymbol{\beta}} )\in \mathcal{G} $ do

(2) Initialize $ ({\boldsymbol{t}}^{(0)},{\boldsymbol{f}}^{(0)},{\boldsymbol{p}}^{(0)}) $

(3) Compute the initial objective $ {\mathcal{E}}^{(0)} $ from Eq. (11)

(4) repeat

(5) Step 1 (update $ \boldsymbol{t} $): update $ {\boldsymbol{t}}^{(n+1)} $ by Eq. (14)

(6) Step 2 (update $ \boldsymbol{f},\boldsymbol{p} $): update $ ({\boldsymbol{f}}^{(n+1)},{\boldsymbol{p}}^{(n+1)}) $ by Eqs. (16)–(19)

(7) Compute the new objective $ {\mathcal{E}}^{(n+1)} $ and set $ n\leftarrow n+1 $

(8) until $ |{\mathcal{E}}^{(n)}-{\mathcal{E}}^{(n-1)}|/{\mathcal{E}}^{(n-1)}\leq \epsilon $

(9) Record the converged $ \mathcal{E}({\boldsymbol{\alpha}} ,{\boldsymbol{\beta}} ) $ and the corresponding allocation $ (\boldsymbol{t},\boldsymbol{f},\boldsymbol{p}) $

(10) end for

(11) Select $ ({{\boldsymbol{\alpha}} }^{* },{{\boldsymbol{\beta}} }^{* })=\arg {\min }_{({\boldsymbol{\alpha}} ,{\boldsymbol{\beta}} )\in \mathcal{G}}\mathcal{E}({\boldsymbol{\alpha}} ,{\boldsymbol{\beta}} ) $ and output the corresponding $ ({\boldsymbol{t}}^{* },{\boldsymbol{f}}^{* },{\boldsymbol{p}}^{* }) $.
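The control flow of Algorithm 1 can be sketched in Python as an outer grid search wrapped around an alternating inner loop. Eq. (11), Eq. (14), and Eqs. (16)–(19) are not reproduced in this section, so the sketch takes them as caller-supplied functions (`energy`, `update_t`, `update_fp`). The `toy_*` functions, the `max_iter` safeguard, and all numeric constants are placeholders invented here purely so the example runs; they are not the paper's energy model or update rules.

```python
from itertools import product


def inner_allocate(alpha, beta, init, energy, update_t, update_fp,
                   eps=1e-4, max_iter=100):
    """Alternate the t-update and the (f, p)-update until the relative
    change of the objective is at most eps (steps (2)-(8))."""
    t, f, p = init
    prev = energy(alpha, beta, t, f, p)
    for _ in range(max_iter):              # max_iter is an added safeguard
        t = update_t(alpha, beta, t, f, p)
        f, p = update_fp(alpha, beta, t, f, p)
        cur = energy(alpha, beta, t, f, p)
        converged = abs(cur - prev) / max(abs(prev), 1e-12) <= eps
        prev = cur
        if converged:
            break
    return prev, (t, f, p)


def search_retrieval_ratios(grid, init, energy, update_t, update_fp, eps=1e-4):
    """Outer search: keep the grid point with the lowest converged energy
    (steps (1), (9)-(11)). Returns (energy, (alpha, beta), (t, f, p))."""
    best = None
    for alpha, beta in grid:
        e, alloc = inner_allocate(alpha, beta, init, energy, update_t, update_fp, eps)
        if best is None or e < best[0]:
            best = (e, (alpha, beta), alloc)
    return best


# --- Toy placeholders (NOT the paper's model), scalar variables only ---
def toy_energy(alpha, beta, t, f, p):
    rounds = 10.0 / (1.0 + alpha)                       # fictitious round count
    per_round = 1.0 + 0.5 * alpha + 2.0 * beta + f ** 2 + p * t
    return rounds * per_round


def toy_update_t(alpha, beta, t, f, p):
    return max(1.0, 0.5 * t)                            # shrink toward a floor


def toy_update_fp(alpha, beta, t, f, p):
    return max(0.5, 0.5 * f), max(0.1, 0.5 * p)


grid = list(product([0.0, 0.5, 1.0], repeat=2))
best_energy, best_ratios, best_alloc = search_retrieval_ratios(
    grid, (4.0, 4.0, 4.0), toy_energy, toy_update_t, toy_update_fp)
```

Passing the update rules as callables keeps the two layers separate: the outer loop only compares converged objective values, so the inner solver can be swapped without touching the search.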

