Bionic Behavior Modeling Method for Unmanned Aerial Vehicle Swarms Empowered by Deep Reinforcement Learning


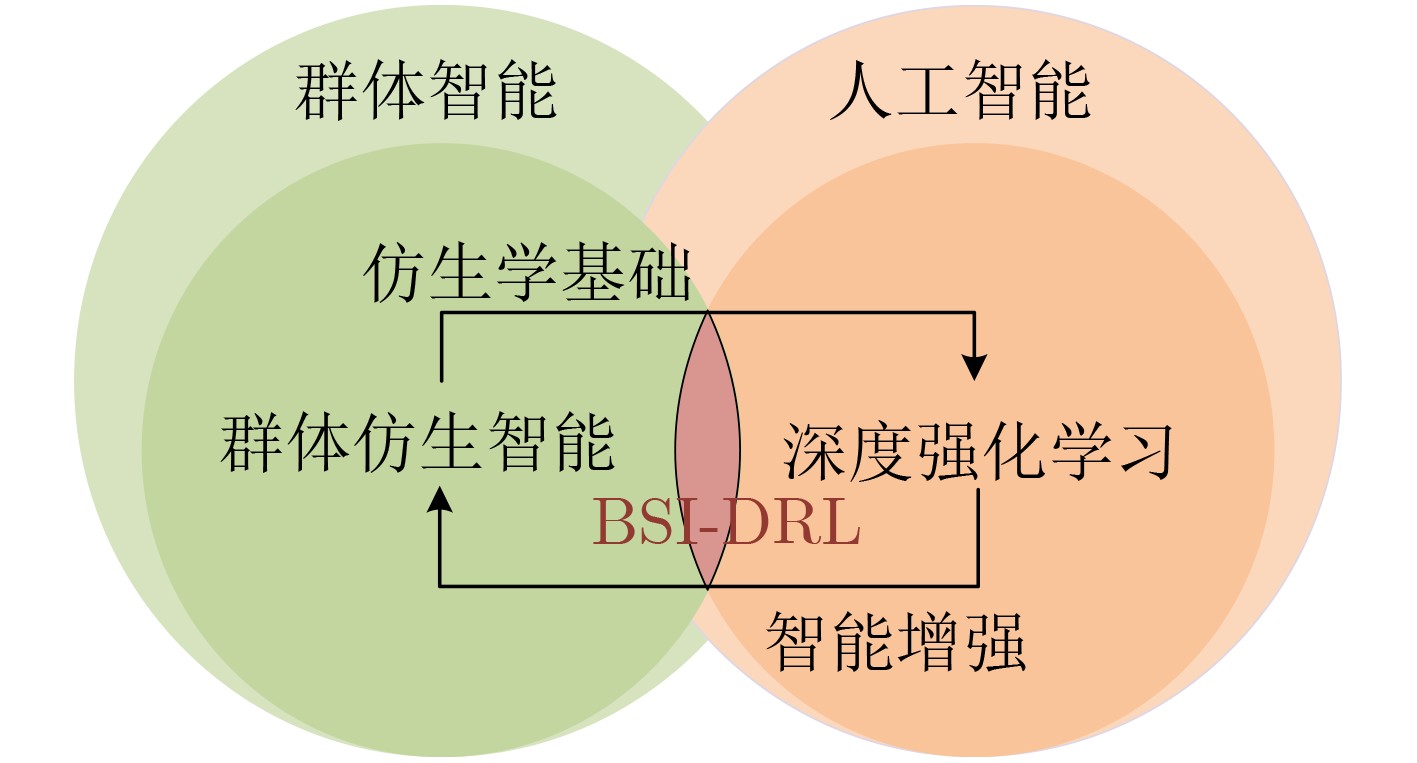

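Summary: To address the difficulty of translating the cooperative behaviors of biological groups into engineering models for Unmanned Aerial Vehicle (UAV) swarms, this paper follows the converging evolution of Bionic Swarm Intelligence and Deep Reinforcement Learning (BSI-DRL), focuses on bionic mapping theory and innovation in modeling methods, and reviews the progress and challenges of BSI-DRL-driven UAV swarm modeling. First, it clarifies the concept and core characteristics of bionic swarm intelligence, analyzes its three-stage paradigm transition and technical value, examines the cooperative mechanisms of four typical biological groups, and distills three key steps of bionic mapping. Second, centered on the core BSI-DRL paradigms, it analyzes the technical advantages and challenges of three directions: DRL optimization of parameterized bionic rules, generative bionic-rule multi-agent reinforcement learning, and dynamic role assignment with hierarchical DRL cooperative optimization. Finally, it outlines future directions, including cross-species biological mechanism integration, BSI-DRL closed-loop collaboration, and the fusion of bird-flock-inspired phase-transition control with DRL, providing theoretical and methodological support for engineering deployment.

Abstract: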

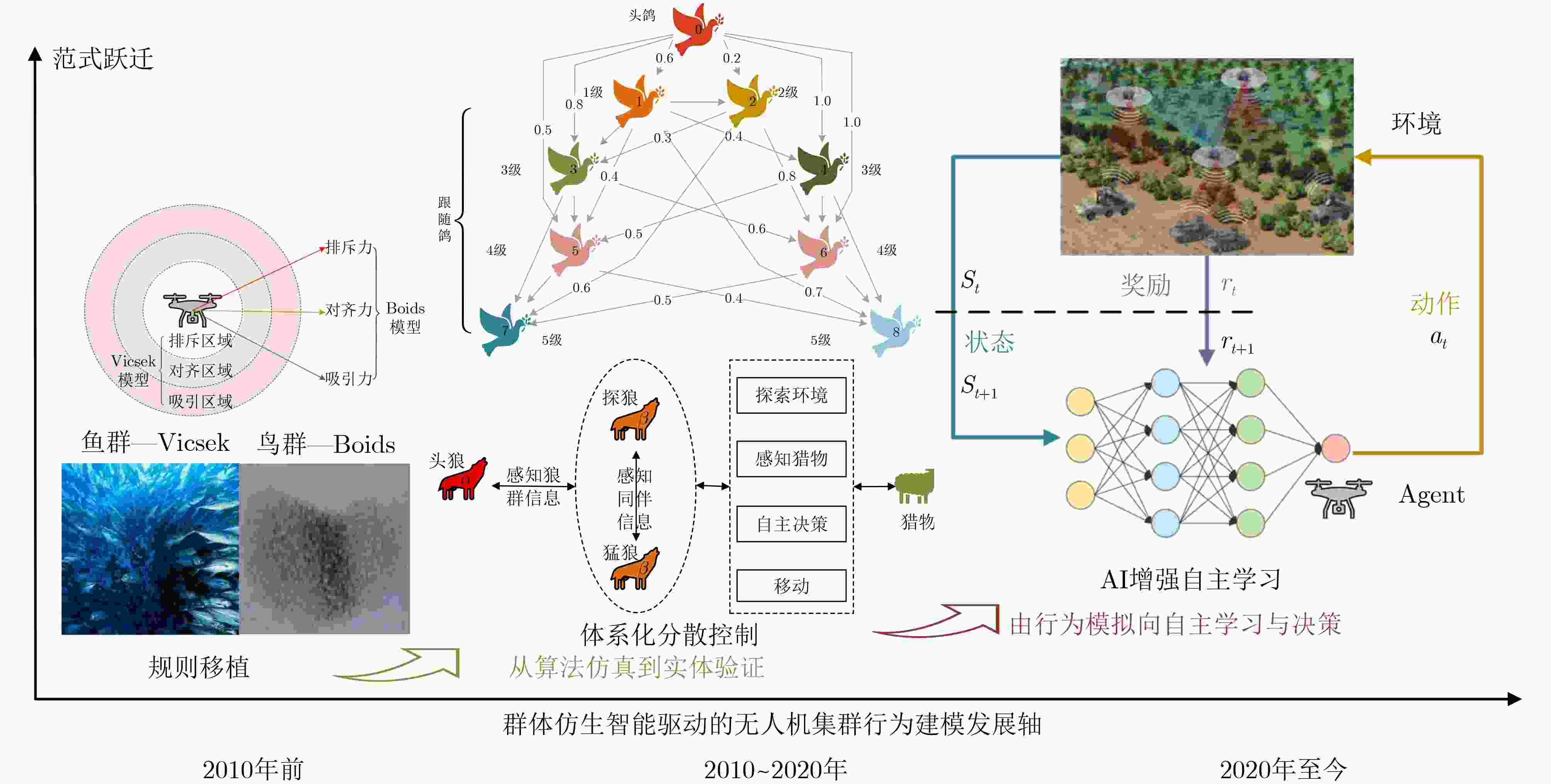

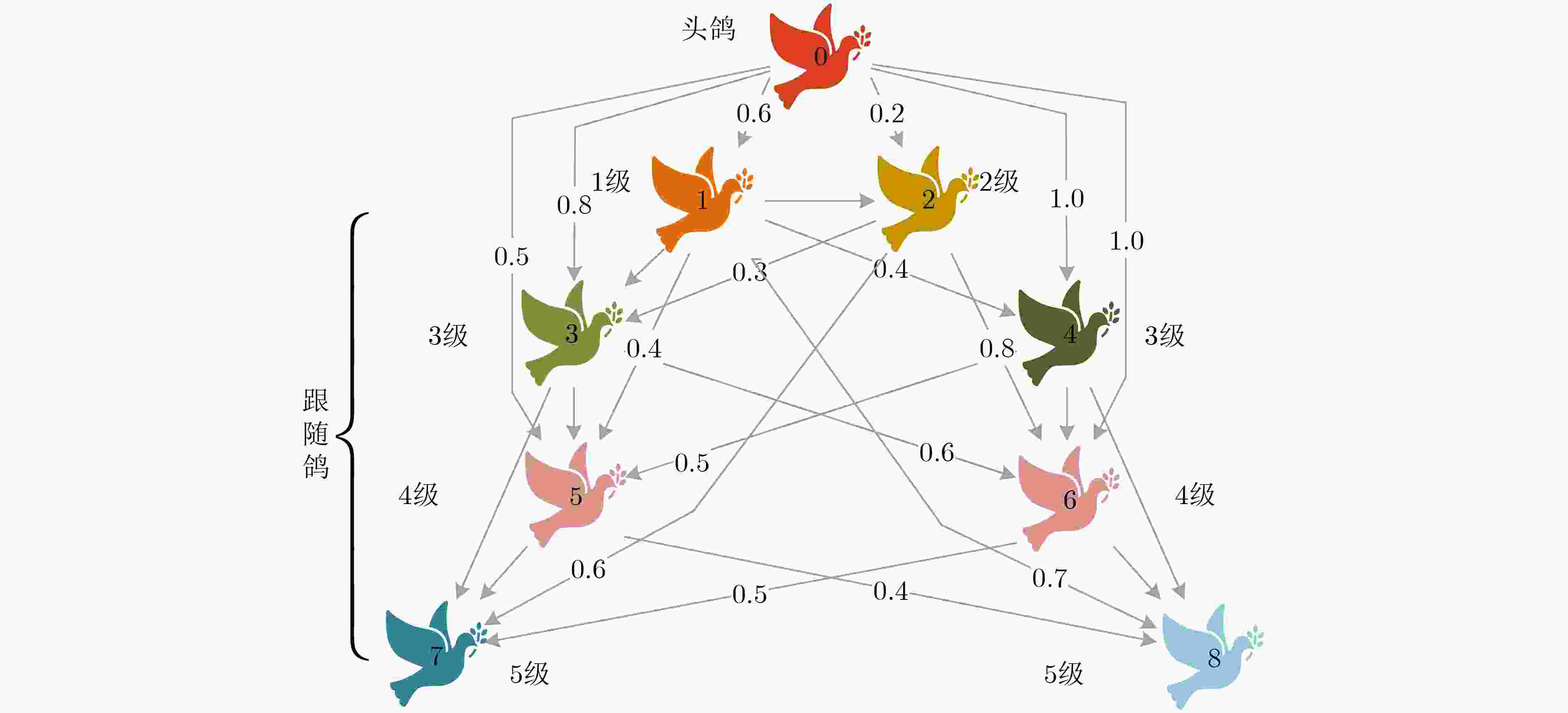

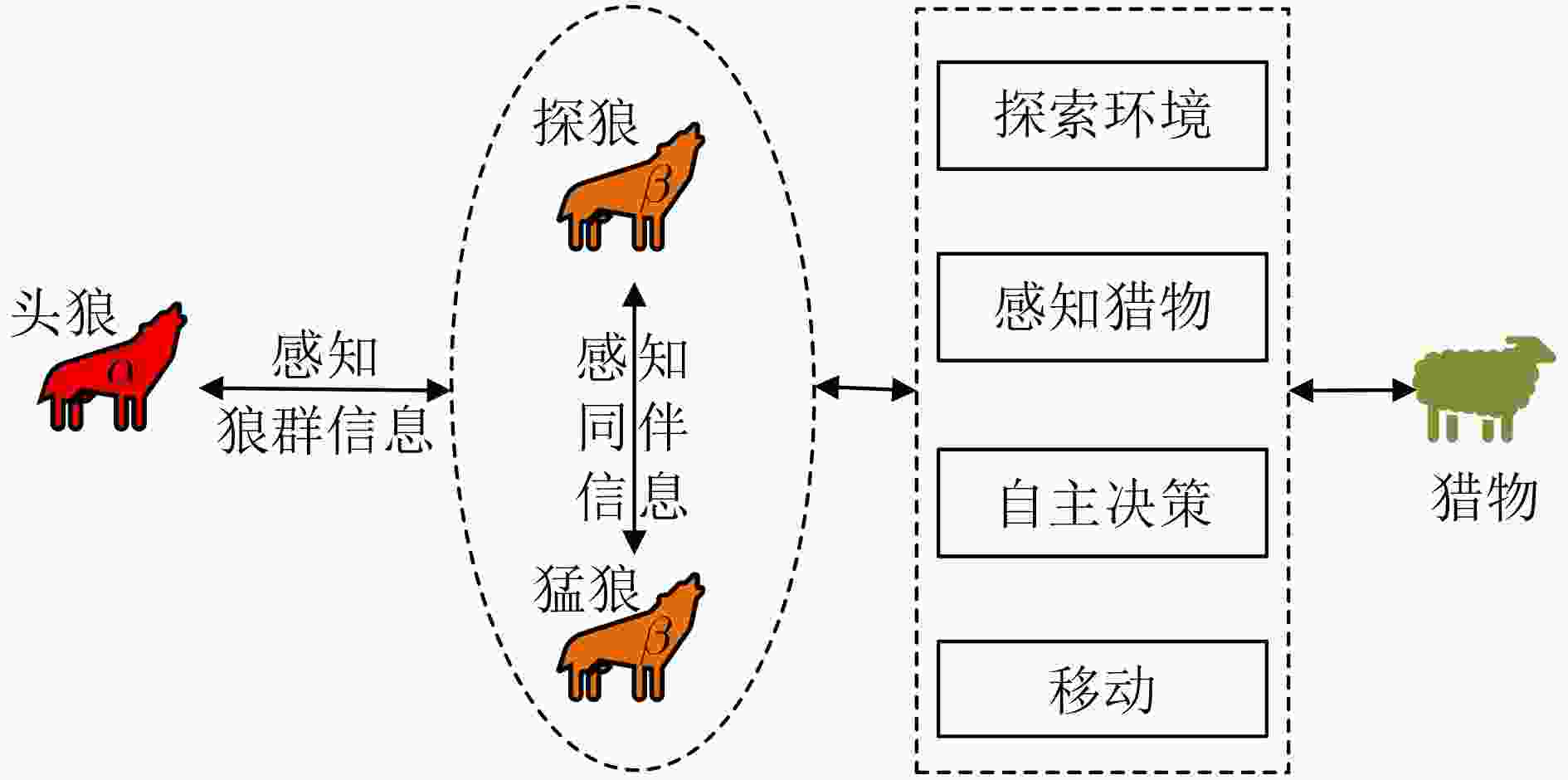

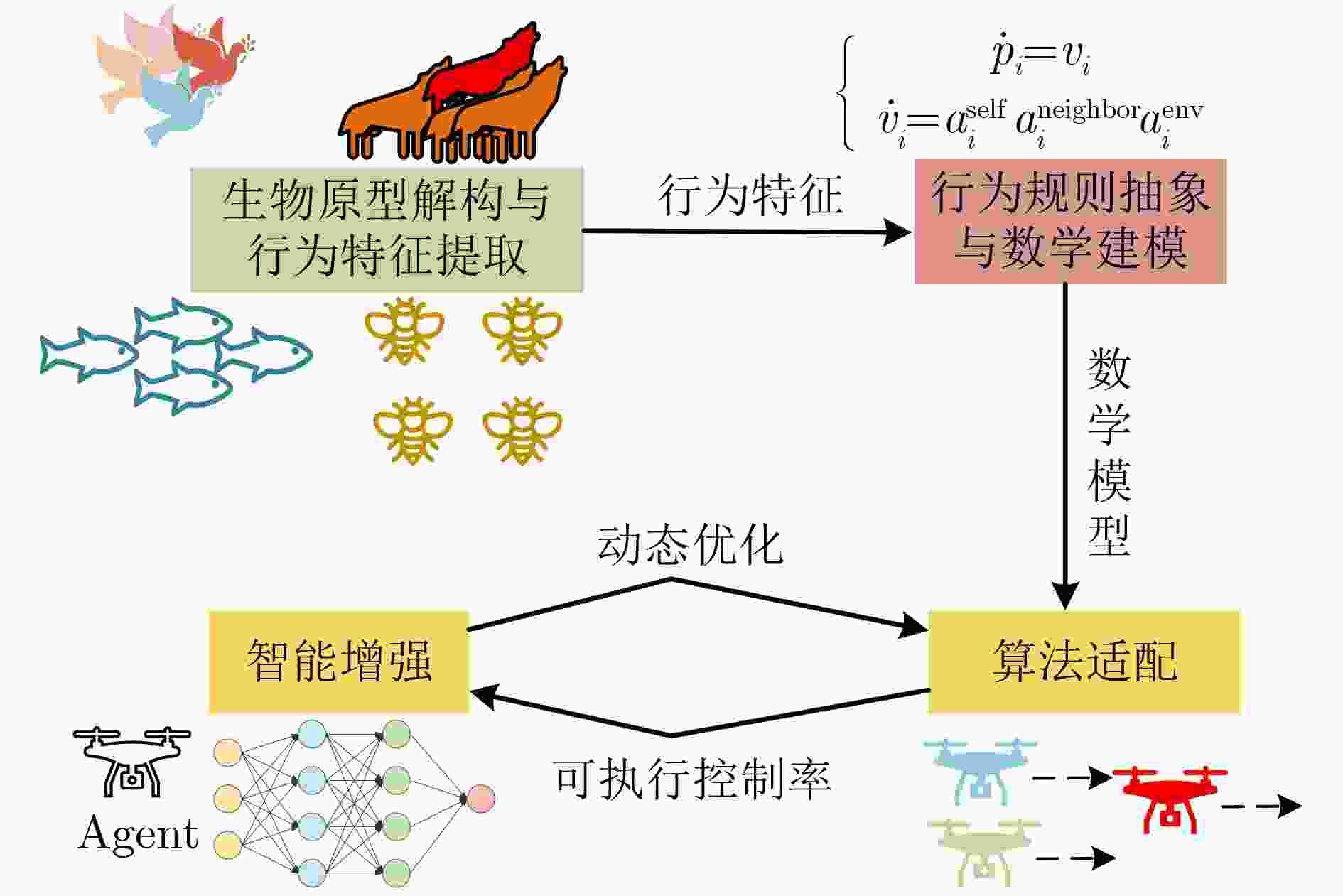

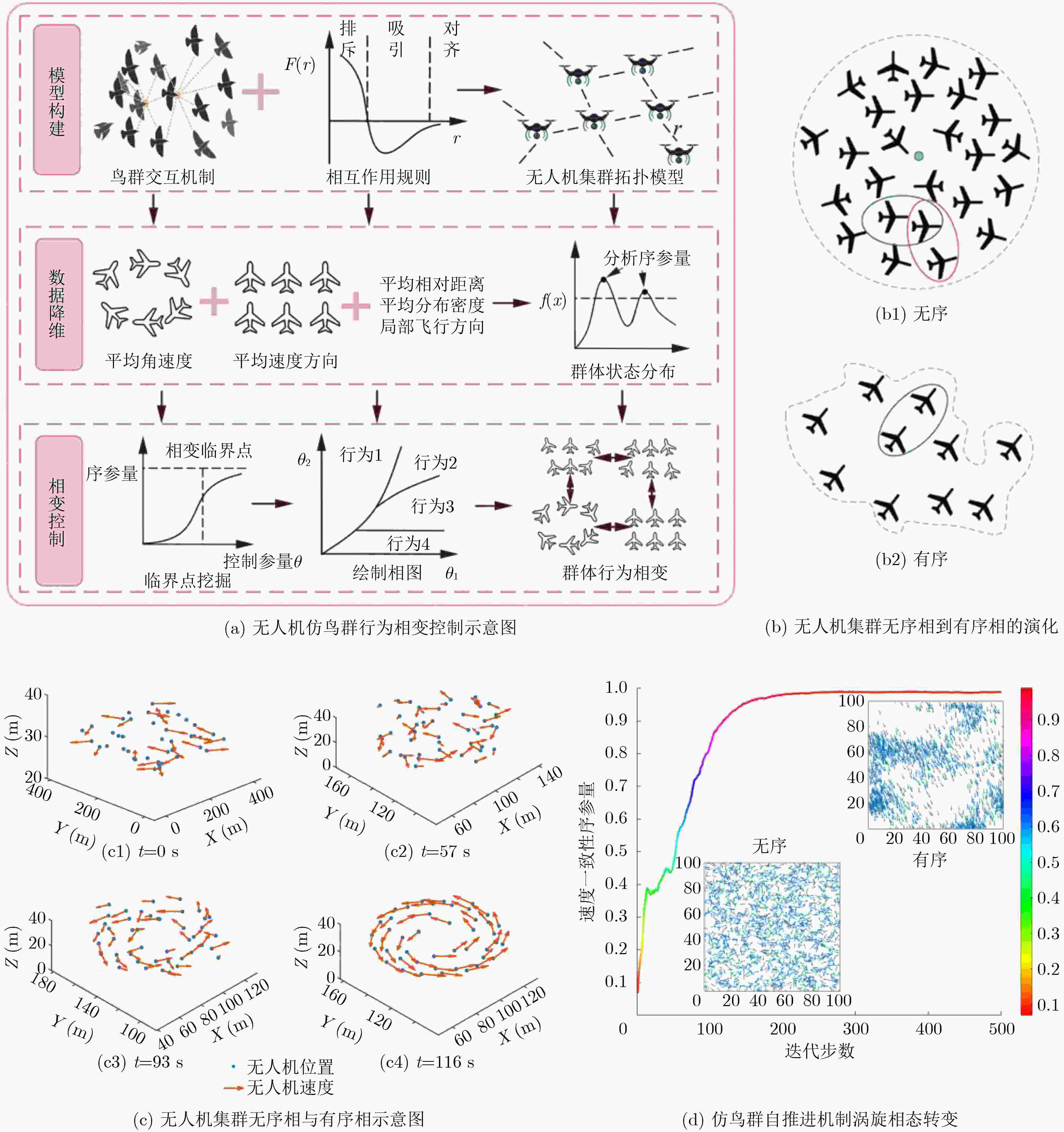

Significance: Unmanned Aerial Vehicle (UAV) swarm technology is a core driver of low-altitude economic development and of the evolution of intelligent unmanned systems, yielding cooperative effects greater than the sum of individual UAVs in disaster response, environmental monitoring, and logistics distribution. As mission scenarios shift toward dynamic heterogeneity, strong interference, and large-scale deployment, traditional centralized control architectures, although feasible in theory, are hard to implement in practice and remain a major constraint on engineering application. Bionic Swarm Intelligence (BSI), a distributed intelligent paradigm that simulates the self-organization, elastic reconfiguration, and cooperative behavior of biological swarms, offers a path to overcoming these limitations. The integration of Deep Reinforcement Learning (DRL) enables a transition from static behavior simulation to adaptive autonomous learning and decision-making. The combined BSI-DRL framework allows UAV swarms to optimize cooperative strategies through data-driven interaction, addressing the limited adaptability of manually designed bionic rules. Clarifying the progress and challenges of UAV swarm modeling based on BSI-DRL is essential for supporting engineering transformation and improving practical system performance.

Progress: The progress of BSI-DRL-driven UAV swarm behavior modeling is summarized from four aspects.

(1) Concept and core characteristics of BSI: BSI, a biology-oriented subset of Swarm Intelligence (SI), is defined by four characteristics: distributed control without dependence on a central command, self-organization through spontaneous disorder-to-order transition, robustness through functional maintenance under disturbances, and adaptability through dynamic strategy optimization in complex environments.
(2) Three-stage paradigm transition of BSI: (a) Before 2010 (rule transplantation stage): work centered on applying fixed bionic algorithms such as particle swarm optimization and biological models (e.g., Boids, Vicsek) to UAV path planning, with SI dependent on preset rules (Fig. 2). (b) From 2010 to 2020 (systematic decentralized control stage): studies shifted toward systematic design and decentralized control theory, enabling a transition from simulation to physical verification but showing limited adaptability under dynamic conditions (Fig. 2). (c) Since 2020 (AI-enhanced autonomous learning stage): integration of DRL enabled a transition to autonomous learning and decision-making, allowing UAV swarms to develop advanced cooperative strategies when facing unknown environments (Fig. 2).

(3) Typical biological swarm mechanisms and bionic mapping: Four representative biological mechanisms provide bionic prototypes. (a) Pigeon flock hierarchy, characterized by a three-tier coupled structure, supports formation control and cooperation under interference. (b) Wolf pack hunting, structured as four-stage dynamic collaboration, enables efficient task division. (c) Fish school self-repair through decentralized topology adjustment enhances swarm robustness. (d) Honeybee colony division of labor, based on decentralized decision-making and dynamic role assignment, improves task efficiency. Bionic mapping proceeds through three steps: decomposition of the biological prototype and extraction of behavioral features using dynamic mode decomposition, social interaction filtering, and group state classification (Fig. 5); abstraction of behavior rules and mathematical modeling using approaches such as differential equations and graph theory; and algorithmic adaptation and intelligent enhancement by converting mathematical models into executable rules and integrating DRL.

(4) Core BSI-DRL modeling directions: Three main technical paths are summarized with horizontal comparison (Table 1). (a) Bionic-rule parameterization with DRL optimization (shallow fusion): DRL is used to optimize key parameters of bionic models, such as attraction-repulsion weights in Boids, preserving biological robustness but exhibiting instability during large-swarm training. (b) Generative bionic-rule multi-agent reinforcement learning (middle fusion): bio-inspired reward functions guide the autonomous emergence of cooperative rules, improving adaptability but reducing interpretability due to "black-box" characteristics. (c) Dynamic role assignment with hierarchical DRL (deep fusion): a three-tier architecture comprising global planning, group role assignment, and individual execution reduces decision-making complexity in heterogeneous swarms and strengthens multi-task adaptability, although multi-level coordination remains challenging. A scenario-adaptation logic based on swarm scale, environmental dynamics, and task heterogeneity, together with a multi-method fusion strategy, is also proposed.

Conclusions: This study clarifies the theoretical framework and research progress of BSI-DRL-based UAV swarm behavior modeling. By simulating biological swarm mechanisms, BSI addresses the limitations of traditional centralized control in scalability, dynamic adaptability, and system credibility. DRL further enables a shift toward autonomous learning.
Horizontal comparison indicates complementary strengths across the three core directions: parameterization optimization maintains basic robustness, generative methods enhance dynamic adaptability, and hierarchical collaboration improves performance in heterogeneous multi-task settings. The proposed scenario-adaptation logic, which applies parameterization to small-to-medium and static scenarios, generative methods to medium-to-large and dynamic scenarios, and hierarchical collaboration to heterogeneous multi-task missions, together with the multi-method fusion strategy, offers feasible engineering pathways. Key engineering bottlenecks are also identified, including inconsistent environmental perception, unbalanced multi-objective decision-making, and limited system interpretability, providing a basis for targeted technical advancement.

Prospects: Future work focuses on five directions to enhance the capacity of BSI-DRL for complex UAV swarm tasks. (1) Cross-species biological mechanism integration: combining advantages of different biological prototypes to construct adaptive hybrid systems. (2) BSI-DRL closed-loop collaborative evolution: establishing a bidirectional interaction framework in which BSI provides initial strategies and safety boundaries, while DRL refines bionic rules online. (3) Bird-flock-inspired phase-transition control and DRL fusion: using phase-transition order parameters as DRL observation indicators to improve parameter interpretability. (4) Digital-twin and hardware-in-the-loop training and verification: building high-fidelity digital-twin environments to narrow simulation-to-reality gaps. (5) Real-scenario performance evaluation and field deployment: conducting field tests to assess algorithm effectiveness and guide theoretical refinement.
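
Prospect (3) proposes feeding phase-transition order parameters to DRL as observation indicators. One widely used order parameter for flocking is polarization, the length of the mean unit heading vector, which is near 0 for disordered motion and 1 for perfectly aligned motion. The following is a minimal sketch under that assumption. The function names `polarization` and `augment_observation`, the 2D velocity representation, and the choice to skip hovering agents are illustrative and are not taken from the reviewed works.

```python
import math
from typing import List, Sequence, Tuple

Vec = Tuple[float, float]


def polarization(velocities: Sequence[Vec]) -> float:
    """Length of the mean unit heading over all moving agents, in [0, 1]."""
    sum_x = sum_y = 0.0
    count = 0
    for vx, vy in velocities:
        speed = math.hypot(vx, vy)
        if speed == 0.0:
            # Assumption: an agent at rest has no heading and is ignored.
            continue
        sum_x += vx / speed
        sum_y += vy / speed
        count += 1
    if count == 0:
        return 0.0
    return math.hypot(sum_x, sum_y) / count


def augment_observation(local_obs: Sequence[float],
                        velocities: Sequence[Vec]) -> List[float]:
    """Append the swarm-level order parameter to one agent's local observation."""
    return list(local_obs) + [polarization(velocities)]


if __name__ == "__main__":
    aligned = [(1.0, 0.0), (2.0, 0.0), (0.5, 0.0)]
    opposed = [(1.0, 0.0), (-1.0, 0.0)]
    print(polarization(aligned), polarization(opposed))
```

Because the value is a single bounded scalar with a physical meaning, it can be logged next to the learned policy's reward, which is one way to read the interpretability benefit the prospect describes.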

Fig. 2 Paradigm transitions of BSI applications in UAV swarms[7]

Fig. 7 Three BSI-DRL modeling paradigms for UAV swarms[48]
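
The scenario-adaptation logic stated in the abstract maps swarm scale, environmental dynamics, and task heterogeneity onto the three paradigms of Fig. 7. It can be sketched as a small decision function. The abstract gives no numeric thresholds, so this sketch uses coarse categories instead. The names `Scale`, `Paradigm`, and `select_paradigm`, the precedence given to heterogeneous multi-task missions, and the fallback to multi-method fusion for uncovered combinations are all assumptions made for illustration.

```python
from enum import Enum


class Scale(Enum):
    SMALL = "small"
    MEDIUM = "medium"
    LARGE = "large"


class Paradigm(Enum):
    PARAMETERIZED = "bionic-rule parameterization with DRL optimization"
    GENERATIVE = "generative bionic-rule multi-agent reinforcement learning"
    HIERARCHICAL = "dynamic role assignment with hierarchical DRL"
    FUSION = "multi-method fusion"


def select_paradigm(scale: Scale, dynamic: bool,
                    heterogeneous_multitask: bool) -> Paradigm:
    """Pick a modeling paradigm from coarse scenario descriptors."""
    # Assumption: task heterogeneity is checked first, regardless of scale.
    if heterogeneous_multitask:
        return Paradigm.HIERARCHICAL
    # Medium-to-large swarms in dynamic environments.
    if dynamic and scale in (Scale.MEDIUM, Scale.LARGE):
        return Paradigm.GENERATIVE
    # Small-to-medium swarms in static environments.
    if not dynamic and scale in (Scale.SMALL, Scale.MEDIUM):
        return Paradigm.PARAMETERIZED
    # Assumption: combinations the abstract does not cover fall back to fusion.
    return Paradigm.FUSION


if __name__ == "__main__":
    print(select_paradigm(Scale.SMALL, dynamic=False,
                          heterogeneous_multitask=False).value)
```

A medium-scale swarm matches both the static and the dynamic rule depending on the environment flag, which reflects the overlap between "small-to-medium" and "medium-to-large" in the stated logic.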

Table 1 Quantitative comparison of BSI-DRL modeling methods

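| Paradigm | Method | Performance metrics | Sample efficiency | Scalability | Communication overhead | Other metrics |
| --- | --- | --- | --- | --- | --- | --- |
| Bionic-rule parameterization optimization | Q-learning optimization of Boids parameters[53] | Continuous obstacle avoidance and maximized spatial coverage; obstacles avoided while optimal inter-agent spacing is maintained | 100 training runs | 32 UAVs | No quantitative communication-load data reported | Supports dynamic formation expansion and contraction |
| Bionic-rule parameterization optimization | Composite artificial potential field with DQN[57] | Minimum safety distance ≥20 m, a 30% improvement over VAPF; cumulative trajectory deviation reduced by 25% | Converges after 200 training rounds | 12 UAVs | Communication radius 100 m; distributed communication | Smoother trajectories; fast return to route after obstacle avoidance |
| Generative bionic-rule MADRL | Improved centralized training with distributed execution[78] | Cooperative combat task completion rate above 91%; intra-swarm collision rate <2% | Converges after 60000 training rounds | 3 UAVs | Distributed communication | Reward stabilizes at 30±5 |
| Generative bionic-rule MADRL | Survival-goal-driven DRL[63] | Flocking emergence rate 100%; intra-swarm collision rate ≈0% | 500000 training steps | 60 UAVs | Local interaction with ≤5 neighbors | Uniform average inter-agent spacing within the swarm |
| Dynamic role assignment and hierarchical cooperation | Fission-fusion reinforcement learning for confrontation[77] | Dynamic obstacle avoidance success rate 100%; parent-swarm task completion rate 100% | 500000 training steps | 20 UAVs (sub-swarms of ≥3 UAVs after fission) | Communication load reduced by 50%–85% | Polarization index stable above 0.85 |
| Dynamic role assignment and hierarchical cooperation | Central task allocation with sub-agent execution[73] | Tracking persistence rate ≥95%; target acquisition time ≤72 s | Not reported | 8–10 UAVs | Distributed communication plus local communication among sub-agents | Shorter single-task flight time |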
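
Table 1 lists Q-learning optimization of Boids parameters[53] among the parameterization methods. To make concrete what such parameters are, the sketch below implements one step of a plain 2D Boids update in which the separation, alignment, and cohesion weights are exposed as a tunable object; a learning agent would adjust those weights between steps. This is a generic Boids formulation, not the method of [53]. The names `BoidsWeights` and `boids_step` are hypothetical, and the default radius, separation distance, and time step are arbitrary placeholders.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec = Tuple[float, float]


@dataclass
class BoidsWeights:
    """Rule weights that an RL agent could tune (illustrative defaults)."""
    separation: float = 1.5
    alignment: float = 1.0
    cohesion: float = 1.0


def boids_step(positions: Sequence[Vec], velocities: Sequence[Vec],
               weights: BoidsWeights, radius: float = 50.0,
               sep_dist: float = 5.0, dt: float = 0.1
               ) -> Tuple[List[Vec], List[Vec]]:
    """Advance every agent by one Euler step of weighted Boids rules."""
    new_positions: List[Vec] = []
    new_velocities: List[Vec] = []
    for i, (p, v) in enumerate(zip(positions, velocities)):
        neighbors = [j for j, q in enumerate(positions)
                     if j != i and math.dist(p, q) <= radius]
        ax = ay = 0.0
        if neighbors:
            n = len(neighbors)
            cx = sum(positions[j][0] for j in neighbors) / n
            cy = sum(positions[j][1] for j in neighbors) / n
            mvx = sum(velocities[j][0] for j in neighbors) / n
            mvy = sum(velocities[j][1] for j in neighbors) / n
            # Cohesion steers toward the neighbor centroid,
            # alignment steers toward the mean neighbor velocity.
            ax += weights.cohesion * (cx - p[0]) + weights.alignment * (mvx - v[0])
            ay += weights.cohesion * (cy - p[1]) + weights.alignment * (mvy - v[1])
            for j in neighbors:
                d = math.dist(p, positions[j])
                if 0.0 < d < sep_dist:
                    # Separation pushes away from close neighbors.
                    ax += weights.separation * (p[0] - positions[j][0]) / (d * d)
                    ay += weights.separation * (p[1] - positions[j][1]) / (d * d)
        vx, vy = v[0] + ax * dt, v[1] + ay * dt
        new_velocities.append((vx, vy))
        new_positions.append((p[0] + vx * dt, p[1] + vy * dt))
    return new_positions, new_velocities


if __name__ == "__main__":
    pos = [(0.0, 0.0), (1.0, 0.0)]
    vel = [(0.0, 0.0), (0.0, 0.0)]
    print(boids_step(pos, vel, BoidsWeights()))
```

Keeping the rule structure fixed and learning only three scalars is what makes this direction "shallow fusion": the action space stays small and the resulting behavior remains explainable in terms of the original rules.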

[1] FAN Ruitao, WANG Jintao, HAN Weixin, et al. UAV swarm control based on hybrid bionic swarm intelligence[J]. Guidance, Navigation and Control, 2023, 3(2): 2350008. doi: 10.1142/S2737480723500085.

[2] LONG Weifan, HOU Taixian, WEI Xiaoyi, et al. A survey on population-based deep reinforcement learning[J]. Mathematics, 2023, 11(10): 2234. doi: 10.3390/math11102234.

[3] BENI G and WANG Jing. Swarm intelligence in cellular robotic systems[M]. DARIO P, SANDINI G, and AEBISCHER P. Robots and Biological Systems: Towards a New Bionics?. Berlin: Springer, 1993: 703–712. doi: 10.1007/978-3-642-58069-7_38.

[4] HE Ming, CHEN Haotian, HAN Wei, et al. Development status and key technologies of cooperative control of bird-inspired UAV swarms[J]. Acta Aeronautica et Astronautica Sinica, 2024, 45(20): 029946. doi: 10.7527/S1000-6893.2024.29946.

[5] DUAN Haibin, SHAO Shan, SU Bingwei, et al. New development thoughts on the bio-inspired intelligence based control for unmanned combat aerial vehicle[J]. Science China Technological Sciences, 2010, 53(8): 2025–2031. doi: 10.1007/s11431-010-3160-z.

[6] QIU Huaxin, DUAN Haibin, and FAN Yanming. Multiple unmanned aerial vehicle autonomous formation based on the behavior mechanism in pigeon flocks[J]. Control Theory & Applications, 2015, 32(10): 1298–1304. doi: 10.7641/CTA.2015.50314.

[7] LIANG Hongtao, WANG Yaonan, HUA Hean, et al. Deep reinforcement learning to control an unmanned swarm system[J]. Chinese Journal of Engineering, 2024, 46(9): 1521–1534. doi: 10.13374/j.issn2095-9389.2023.07.30.001.

[8] NTI I K, ADEKOYA A F, WEYORI B A, et al. Applications of artificial intelligence in engineering and manufacturing: A systematic review[J]. Journal of Intelligent Manufacturing, 2022, 33(6): 1581–1601. doi: 10.1007/s10845-021-01771-6.

[9] LIU Lei, LIU Dawei, WANG Xiaoguang, et al. Development status and outlook of UAV clusters and anti-UAV clusters[J]. Acta Aeronautica et Astronautica Sinica, 2022, 43(S1): 726908. doi: 10.7527/S1000-6893.2022.26908.

[10] LIU Yunxiao, WANG Yiming, LI Han, et al. Runway-free recovery methods for fixed-wing UAVs: A comprehensive review[J]. Drones, 2024, 8(9): 463. doi: 10.3390/drones8090463.

[11] SHAHZAD M M, SAEED Z, AKHTAR A, et al. A review of swarm robotics in a nutshell[J]. Drones, 2023, 7(4): 269. doi: 10.3390/drones7040269.

[12] ZAITSEVA E, LEVASHENKO V, MUKHAMEDIEV R, et al. Review of reliability assessment methods of drone swarm (fleet) and a new importance evaluation based method of drone swarm structure analysis[J]. Mathematics, 2023, 11(11): 2551. doi: 10.3390/math11112551.

[13] SANKEY D W E and PORTUGAL S J. Influence of behavioural and morphological group composition on pigeon flocking dynamics[J]. Journal of Experimental Biology, 2023, 226(15): jeb245776. doi: 10.1242/jeb.245776.

[14] BALLERINI M, CABIBBO N, CANDELIER R, et al. Interaction ruling animal collective behavior depends on topological rather than metric distance: Evidence from a field study[J]. Proceedings of the National Academy of Sciences of the United States of America, 2008, 105(4): 1232–1237. doi: 10.1073/pnas.0711437105.

[15] LUO Qi’nan, DUAN Haibin, and FAN Yanming. Analysis on stability and aggregation behavior of pigeon collective model[J]. Scientia Sinica Technologica, 2019, 49(6): 652–660. doi: 10.1360/N092017-00320.

[16] HUO Mengzhen, DUAN Haibin, and DING Xilun. Manned aircraft and unmanned aerial vehicle heterogeneous formation flight control via heterogeneous pigeon flock consistency[J]. Unmanned Systems, 2021, 9(3): 227–236. doi: 10.1142/S2301385021410053.

[17] CAO Shiyue, LEE C Y, DUAN Haibin, et al. Quadrotor swarm flight experimentation inspired by pigeon flock topology[C]. 2019 IEEE 15th International Conference on Control and Automation (ICCA), Edinburgh, UK, 2019: 657–662. doi: 10.1109/ICCA.2019.8899745.

[18] HANG Xu and YIN Wang. Target assignment of heterogeneous multi-UAVs based on pigeon-inspired optimization[C]. 2020 International Conference on Guidance on Advances in Guidance, Tianjin, China, 2020: 3987–3998. doi: 10.1007/978-981-15-8155-7_333.

[19] PAN Chengsheng, SI Zenghui, DU Xiuli, et al. A four-step decision-making grey wolf optimization algorithm[J]. Soft Computing, 2021, 25(22): 14375–14391. doi: 10.1007/s00500-021-06194-2.

[20] MADDILETI T, SALENDRA G, and SIVAPPAGARI C M R. Design optimization of power and area of two-stage CMOS operational amplifier utilizing chaos grey wolf technique[J]. International Journal of Advanced Computer Science and Applications, 2020, 11(7): 465–479. doi: 10.14569/IJACSA.2020.0110760.

[21] KRAIEM H, AYMEN F, YAHYA L, et al. A comparison between particle swarm and grey wolf optimization algorithms for improving the battery autonomy in a photovoltaic system[J]. Applied Sciences, 2021, 11(16): 7732. doi: 10.3390/app11167732.

[22] BAI Xiaotong, ZHENG Yuefeng, LU Yang, et al. Chain hybrid feature selection algorithm based on improved Grey Wolf Optimization algorithm[J]. PLoS One, 2024, 19(10): e0311602. doi: 10.1371/journal.pone.0311602.

[23] PHADKE A and MEDRANO F A. Towards resilient UAV swarms–A breakdown of resiliency requirements in UAV swarms[J]. Drones, 2022, 6(11): 340. doi: 10.3390/drones6110340.

[24] HANG Haotian, HUANG Chenchen, BARNETT A, et al. Self-reorganization and information transfer in massive schools of fish[EB/OL]. https://arxiv.org/abs/2505.05822, 2025.

[25] WU Husheng, PENG Qiang, SHI Meimei, et al. A survey of UAV swarm task allocation based on the perspective of coalition formation[J]. International Journal of Swarm Intelligence Research, 2022, 13(1): 1–22. doi: 10.4018/IJSIR.311499.

[26] QIN Boyu, ZHANG Dong, TANG Shuo, et al. Distributed grouping cooperative dynamic task assignment method of UAV swarm[J]. Applied Sciences, 2022, 12(6): 2865. doi: 10.3390/app12062865.

[27] CHEN Pengyu. Research on collective behavior modeling based on deep reinforcement learning[D]. [Master dissertation], Dalian Ocean University, 2023. doi: 10.27821/d.cnki.gdlhy.2023.000385.

[28] YIN Jia, CHAN Yanghao, DA JORNADA F H, et al. Analyzing and predicting non-equilibrium many-body dynamics via dynamic mode decomposition[J]. Journal of Computational Physics, 2023, 477: 111909. doi: 10.1016/j.jcp.2023.111909.

[29] HANSEN E, BRUNTON S L, and SONG Zhuoyuan. Swarm modeling with dynamic mode decomposition[J]. IEEE Access, 2022, 10: 59508–59521. doi: 10.1109/ACCESS.2022.3179414.

[30] FUJII K, KAWASAKI T, INABA Y, et al. Prediction and classification in equation-free collective motion dynamics[J]. PLoS Computational Biology, 2018, 14(11): e1006545. doi: 10.1371/journal.pcbi.1006545.

[31] XIAO Yandong, LEI Xiaokang, ZHENG Zhicheng, et al. Perception of motion salience shapes the emergence of collective motions[J]. Nature Communications, 2024, 15(1): 4779. doi: 10.1038/s41467-024-49151-x.

[32] LIU Mingyong, LEI Xiaokang, YANG Panpan, et al. Progress of theoretical modelling and empirical studies on collective motion[J]. Chinese Science Bulletin, 2014, 59(25): 2464–2483. doi: 10.1360/N972013-00045.

[33] COUZIN I D, KRAUSE J, JAMES R, et al. Collective memory and spatial sorting in animal groups[J]. Journal of Theoretical Biology, 2002, 218(1): 1–11. doi: 10.1006/jtbi.2002.3065.

[34] QIU Huaxin, DUAN Haibin, FAN Yanming, et al. Pigeon flock interaction pattern switching model and its synchronization analysis[J]. CAAI Transactions on Intelligent Systems, 2020, 15(2): 334–343. doi: 10.11992/tis.201904052.

[35] VICSEK T, CZIRÓK A, BEN-JACOB E, et al. Novel type of phase transition in a system of self-driven particles[J]. Physical Review Letters, 1995, 75(6): 1226–1229. doi: 10.1103/PhysRevLett.75.1226.

[36] BUHL C, SUMPTER D J T, COUZIN I D, et al. From disorder to order in marching locusts[J]. Science, 2006, 312(5778): 1402–1406. doi: 10.1126/science.1125142.

[37] CAVAGNA A and GIARDINA I. Bird flocks as condensed matter[J]. Annual Review of Condensed Matter Physics, 2014, 5: 183–207. doi: 10.1146/annurev-conmatphys-031113-133834.

[38] QI Jingtao, BAI Liang, WEI Yingmei, et al. Emergence of adaptation of collective behavior based on visual perception[J]. IEEE Internet of Things Journal, 2023, 10(12): 10368–10384. doi: 10.1109/JIOT.2023.3238162.

[39] ATTANASI A, CAVAGNA A, DEL CASTELLO L, et al. Emergence of collective changes in travel direction of starling flocks from individual birds' fluctuations[J]. Journal of the Royal Society Interface, 2015, 12(108): 20150319. doi: 10.1098/rsif.2015.0319.

[40] QIU Haonan, HE Ming, HAN Wei, et al. A phase transition control method for UAV swarm based on birds’ behaviors[J]. Modern Defense Technology, 2025, 53(1): 11–22. doi: 10.3969/j.issn.1009-086x.2025.01.002.

[41] LIU Sicong, HE Ming, HAN Wei, et al. Distributed control algorithm for multi-agent cooperation: Leveraging spatial information perception[J]. International Journal of Robust and Nonlinear Control, 2025, 36(1): 312–328. doi: 10.1002/rnc.70138.

[42] CHEN Haotian, HE Ming, LIU Jintao, et al. A novel fractional-order flocking algorithm for large-scale UAV swarms[J]. Complex & Intelligent Systems, 2023, 9(6): 6831–6844. doi: 10.1007/s40747-023-01107-2.

[43] DUAN Haibin, YOU Lingchen, FAN Yanming, et al. Phase transition control of UAV swarm based on bird-inspired self-propelled mechanism[J]. Acta Automatica Sinica, 2025, 51(5): 960–971. doi: 10.16383/j.aas.c240598.

[44] WANG Ling and CHEN Guanrong. Synchronization of multi-agent systems with metric-topological interactions[J]. Chaos: An Interdisciplinary Journal of Nonlinear Science, 2016, 26(9): 094809. doi: 10.1063/1.4955086.

[45] EL-FERIK S. Biologically based control of a fleet of unmanned aerial vehicles facing multiple threats[J]. IEEE Access, 2020, 8: 107146–107160. doi: 10.1109/ACCESS.2020.3000774.

[46] AZZAM R, BOIKO I, and ZWEIRI Y. Swarm cooperative navigation using centralized training and decentralized execution[J]. Drones, 2023, 7(3): 193. doi: 10.3390/drones7030193.

[47] XIA Jiawei, LIU Zhikun, ZHU Xufang, et al. A coordinated rendezvous method for unmanned surface vehicle swarms based on multi-agent reinforcement learning[J]. Journal of Beijing University of Aeronautics and Astronautics, 2023, 49(12): 3365–3376. doi: 10.13700/j.bh.1001-5965.2022.0088.

[48] PAPADOPOULOU M, HILDENBRANDT H, and HEMELRIJK C K. Diffusion during collective turns in bird flocks under predation[J]. Frontiers in Ecology and Evolution, 2023, 11: 1198248. doi: 10.3389/fevo.2023.1198248.

[49] REYNOLDS C W. Flocks, herds and schools: A distributed behavioral model[C]. The 14th Annual Conference on Computer Graphics and Interactive Techniques, Anaheim, USA, 1987: 25–34. doi: 10.1145/37401.37406.

[50] KHATIB O. Real-time obstacle avoidance for manipulators and mobile robots[J]. The International Journal of Robotics Research, 1986, 5(1): 90–98. doi: 10.1177/027836498600500106.

[51] ZHU Xu, ZHANG Bohan, WANG Zhengning, et al. Obstacle avoidance control of UAV cluster formation based on deep reinforcement learning[J]. Flight Dynamics, 2025, 43(2): 22–28. doi: 10.13645/j.cnki.f.d.20250214.002.

[52] CHEN Zekun and HE Xingyu. Research and simulation of a UAV formation control method[J]. Modeling and Simulation, 2024, 13(3): 2662–2672. doi: 10.12677/mos.2024.133242.

[53] DONG Zhaoqi, WU Qizhen, and CHEN Lei. Reinforcement learning-based formation pinning and shape transformation for swarms[J]. Drones, 2023, 7(11): 673. doi: 10.3390/drones7110673.

[54] WANG Chengjie, DENG Juan, ZHAO Hui, et al. Effect of Q-learning on the evolution of cooperation behavior in collective motion: An improved Vicsek model[J]. Applied Mathematics and Computation, 2024, 482: 128956. doi: 10.1016/j.amc.2024.128956.

[55] JIN Weiqiang, TIAN Xingwu, SHI Bohang, et al. Enhanced UAV pursuit-evasion using Boids Modelling: A synergistic integration of bird swarm intelligence and DRL[J]. Computers, Materials and Continua, 2024, 80(3): 3523–3553. doi: 10.32604/cmc.2024.055125.

[56] ZHAO Feifei, ZENG Yi, HAN Bing, et al. Nature-inspired self-organizing collision avoidance for drone swarm based on reward-modulated spiking neural network[J]. Patterns, 2022, 3(11): 100611. doi: 10.1016/J.PATTER.2022.100611.

[57] XIE Juefei. Research on UAV obstacle avoidance control technology based on composite artificial potential field in urban logistics scenarios[D]. [Master dissertation], University of Electronic Science and Technology of China, 2025. doi: 10.27005/d.cnki.gdzku.2025.004243.

[58] ABPEIKAR S, KASMARIK K, and GARRATT M. Reinforcement learning for collective motion tuning in the presence of extrinsic goals[C]. 35th Australasian Joint Conference on Artificial Intelligence, Perth, Australia, 2022: 761–774. doi: 10.1007/978-3-031-22695-3_53.

[59] ZENG Qingli and NAIT-ABDESSELAM F. Multi-agent reinforcement learning-based extended Boid modeling for drone swarms[C]. ICC 2024-IEEE International Conference on Communications, Denver, USA, 2024: 1551–1556. doi: 10.1109/ICC51166.2024.10622479.

[60] LIU Zhijun, LI Jie, SHEN Jian, et al. Leader–follower UAVs formation control based on a deep Q-network collaborative framework[J]. Scientific Reports, 2024, 14(1): 4674. doi: 10.1038/s41598-024-54531-w.

[61] TANG Ruipeng, TANG Jianrui, TALIP M S A, et al. Enhanced multi agent coordination algorithm for drone swarm patrolling in durian orchards[J]. Scientific Reports, 2025, 15(1): 9139. doi: 10.1038/s41598-025-88145-7.

[62] GUO Yunxiao, XIE Xinjia, ZHAO Runhao, et al. Cooperation and competition: Flocking with evolutionary multi-agent reinforcement learning[C]. International Conference on Neural Information Processing. Cham: Springer International Publishing, 2022: 271–283. doi: 10.1007/978-3-031-30105-6_23.

[63] HAHN C, PHAN T, GABOR T, et al. Emergent escape-based flocking behavior using multi-agent reinforcement learning[C]. Artificial Life Conference Proceedings, 2019: 598–605. doi: 10.1162/isal_a_00226.

[64] LAUDENZI G. Multi-agent deep reinforcement learning for drone swarms in static and dynamic environments[D]. [Master dissertation], University of Bologna, 2024.

[65] ABPEIKAR S, KASMARIK K, GARRATT M, et al. Automatic collective motion tuning using actor-critic deep reinforcement learning[J]. Swarm and Evolutionary Computation, 2022, 72: 101085. doi: 10.1016/j.swevo.2022.101085.

[66] WANG Jun, ZHANG Yuchen, HE Leimin, et al. A bio-inspired adaptive formation architecture based on multi-agents with application to UAV swarm[C]. 2024 IEEE International Conference on Unmanned Systems (ICUS), Nanjing, China, 2024: 908–914. doi: 10.1109/ICUS61736.2024.10840152.

[67] WANG Dongzi, DING Bo, and FENG Dawei. Meta reinforcement learning with generative adversarial reward from expert knowledge[C]. 2020 IEEE 3rd International Conference on Information Systems and Computer Aided Education (ICISCAE), Dalian, China, 2020: 1–7. doi: 10.1109/ICISCAE51034.2020.9236869.

[68] QIAN Feng, SU Kai, LIANG Xin, et al. Task assignment for UAV swarm saturation attack: A deep reinforcement learning approach[J]. Electronics, 2023, 12(6): 1292. doi: 10.3390/electronics12061292.

[69] LI Chengshu, ZHANG Ruohan, WONG J, et al. BEHAVIOR-1K: A human-centered, embodied AI benchmark with 1,000 everyday activities and realistic simulation[EB/OL]. https://arxiv.org/abs/2403.09227, 2024.

[70] ANTONELO E A, COUTO G C K, and MÖLLER C. Exploring multimodal implicit behavior learning for vehicle navigation in simulated cities[EB/OL]. https://arxiv.org/abs/2509.15400, 2025.

[71] CHI Pei, WEI Jiahong, WU Kun, et al. A bio-inspired decision-making method of UAV swarm for attack-defense confrontation via multi-agent reinforcement learning[J]. Biomimetics, 2023, 8(2): 222. doi: 10.3390/biomimetics8020222.

[72] YUE Longfei, YANG Rennong, ZUO Jialiang, et al. Unmanned aerial vehicle swarm cooperative decision-making for SEAD mission: A hierarchical multiagent reinforcement learning approach[J]. IEEE Access, 2022, 10: 92177–92191. doi: 10.1109/ACCESS.2022.3202938.

[73] ARRANZ R, CARRAMIÑANA D, DE MIGUEL G, et al. Application of deep reinforcement learning to UAV swarming for ground surveillance[J]. Sensors, 2023, 23(21): 8766. doi: 10.3390/s23218766.

[74] CAI He, MA Fu, NI Ruifeng, et al. Bio-inspired swarm confrontation algorithm for complex hilly terrains[J]. Biomimetics, 2025, 10(5): 257. doi: 10.3390/biomimetics10050257.

[75] WEI Xiaolong, CUI Wenpeng, HUANG Xianglin, et al. Hierarchical RNNs with graph policy and attention for drone swarm[J]. Journal of Computational Design and Engineering, 2024, 11(2): 314–326. doi: 10.1093/jcde/qwae031.

[76] TAPPLER M, LOPEZ-MIGUEL I D, TSCHIATSCHEK S, et al. Rule-guided reinforcement learning policy evaluation and improvement[EB/OL]. https://arxiv.org/abs/2503.09270, 2025.

[77] ZHANG Xiaorong, WANG Yufeng, DING Wenrui, et al. Bio-inspired fission–fusion control and planning of unmanned aerial vehicles swarm systems via reinforcement learning[J]. Applied Sciences, 2024, 14(3): 1192. doi: 10.3390/app14031192.

[78] XU Dan and CHEN Gang. The research on intelligent cooperative combat of UAV cluster with multi-agent reinforcement learning[J]. Aerospace Systems, 2022, 5(1): 107–121. doi: 10.1007/s42401-021-00105-x.
