Residual Subspace Prototype Constraint for SAR Target Class-Incremental Recognition


Abstract:

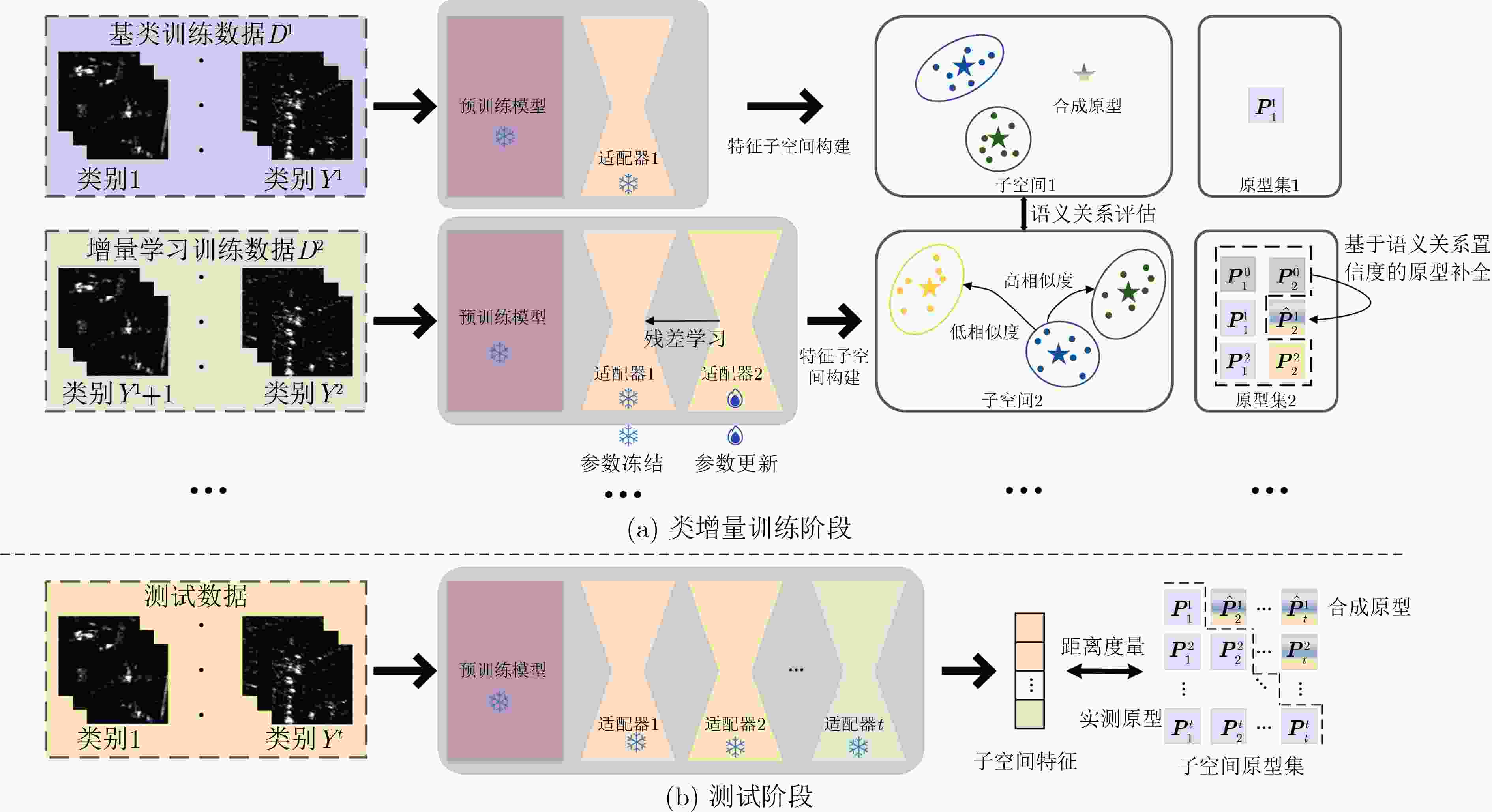

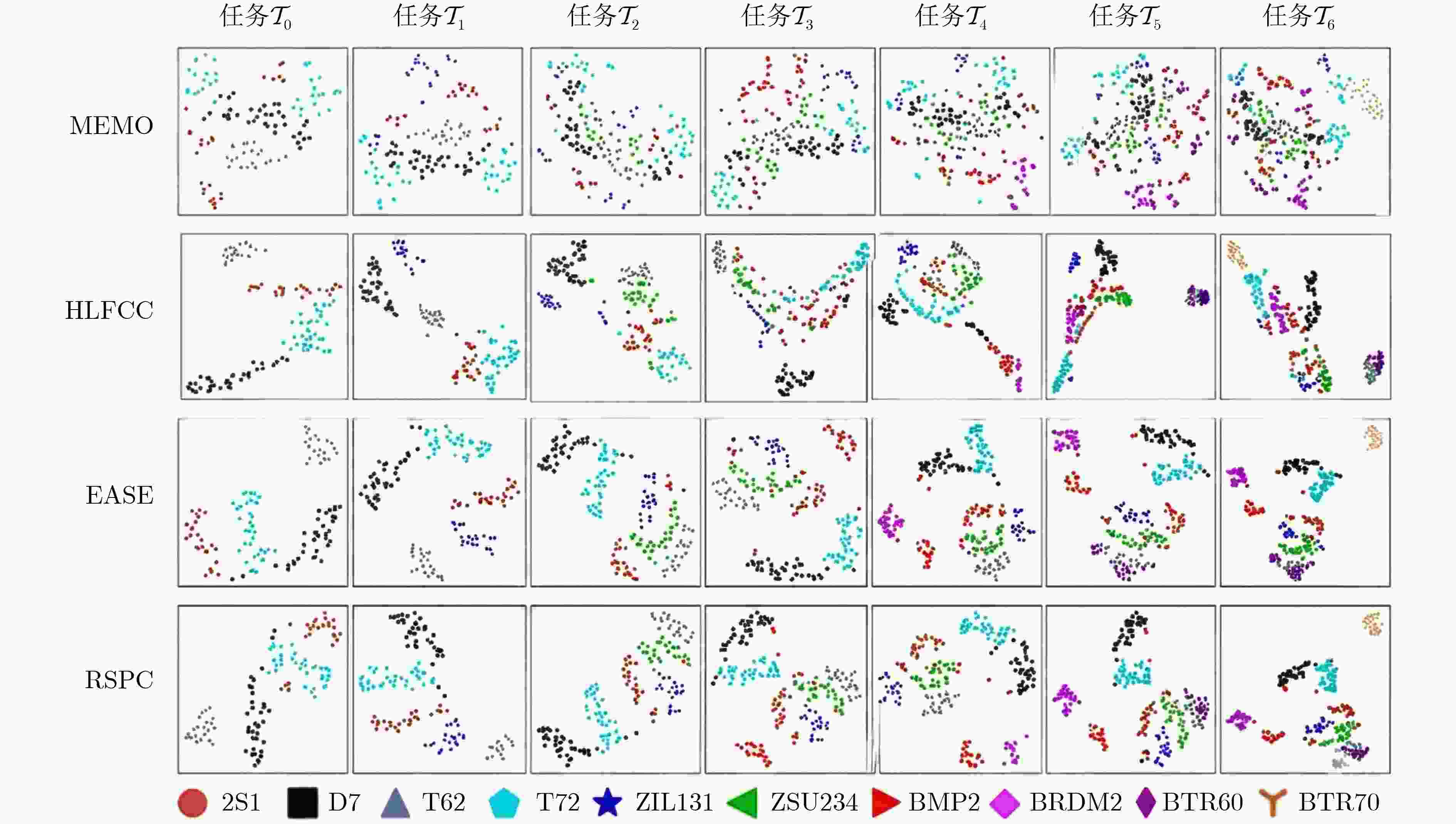

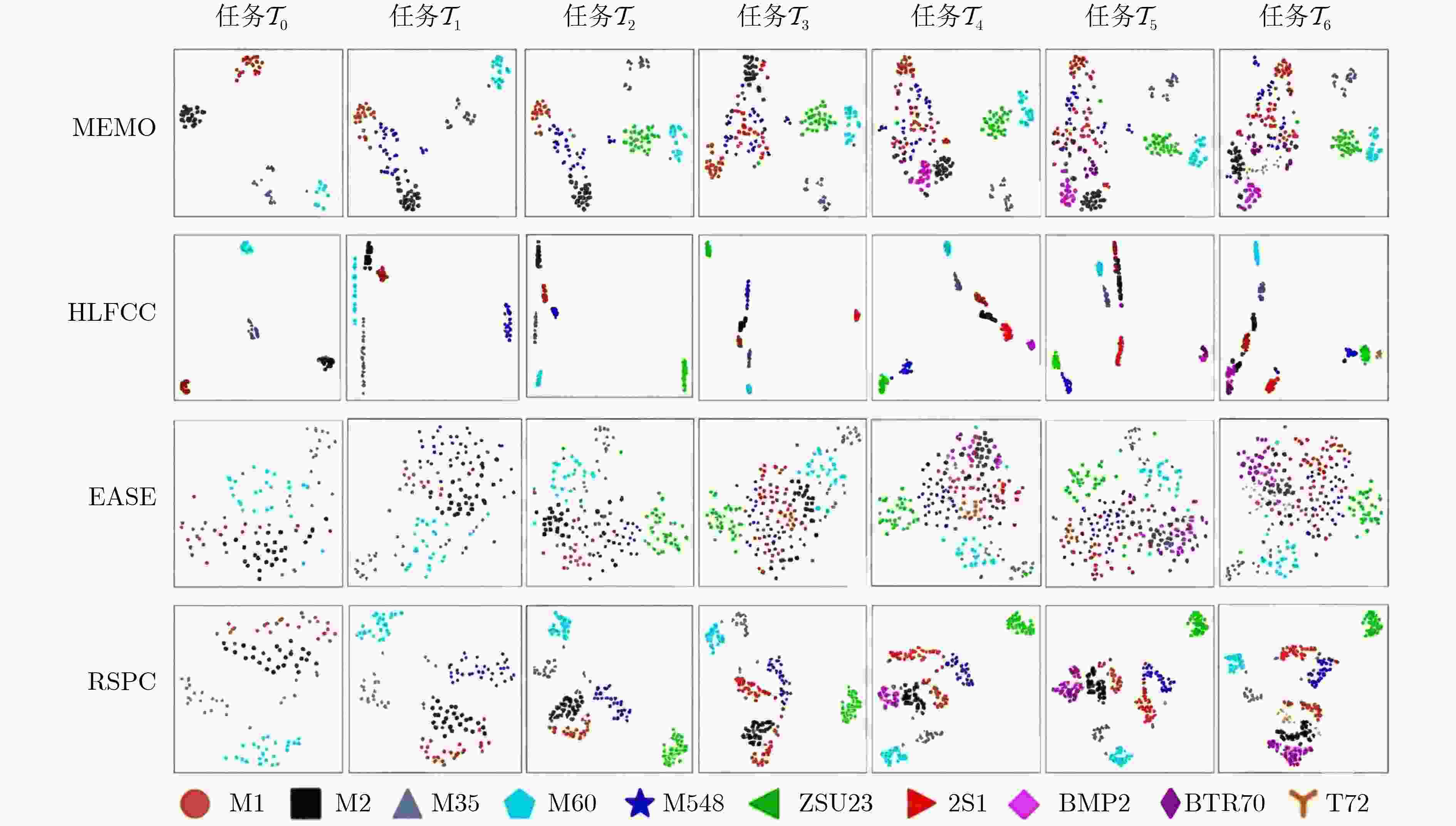

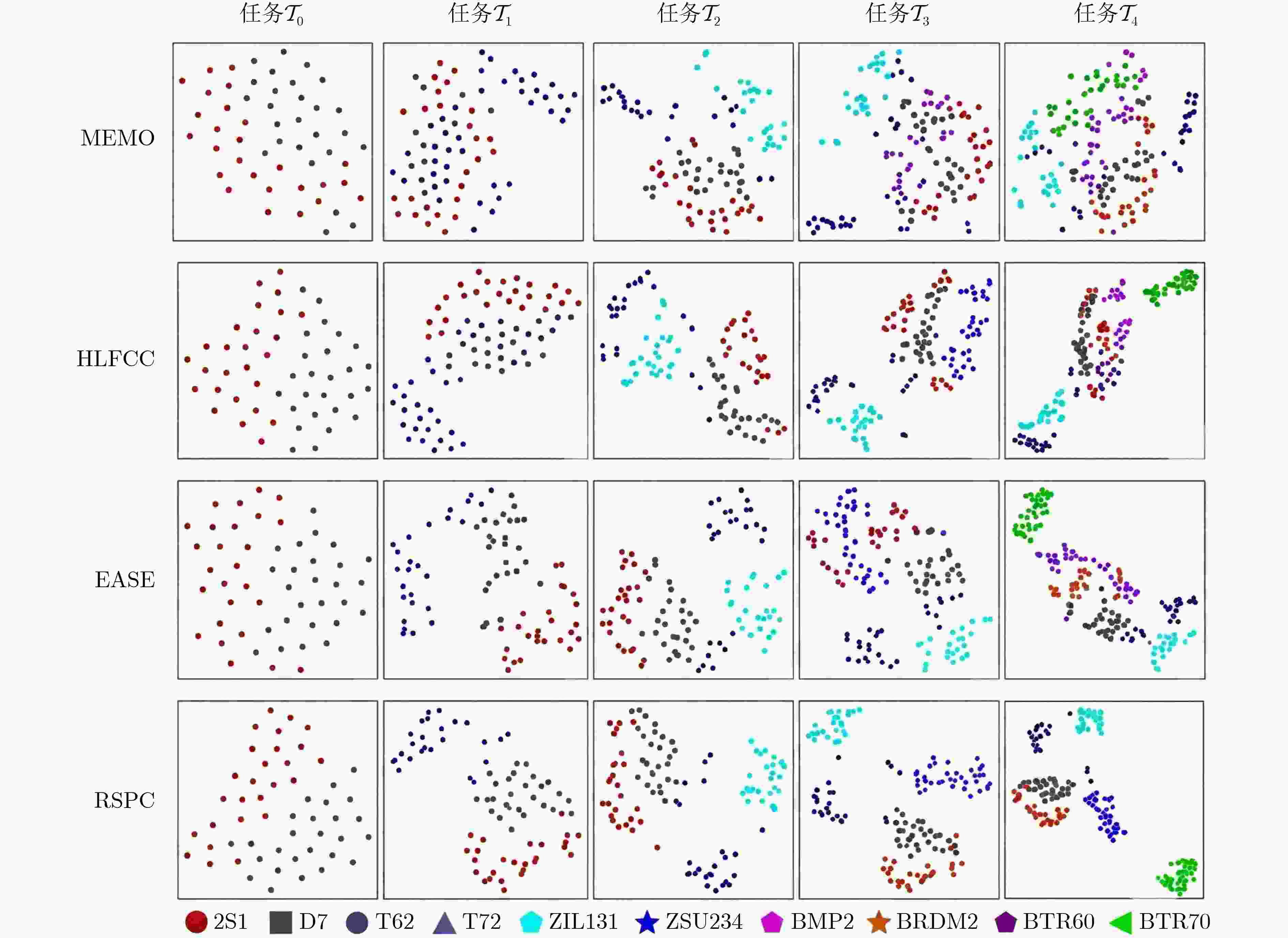

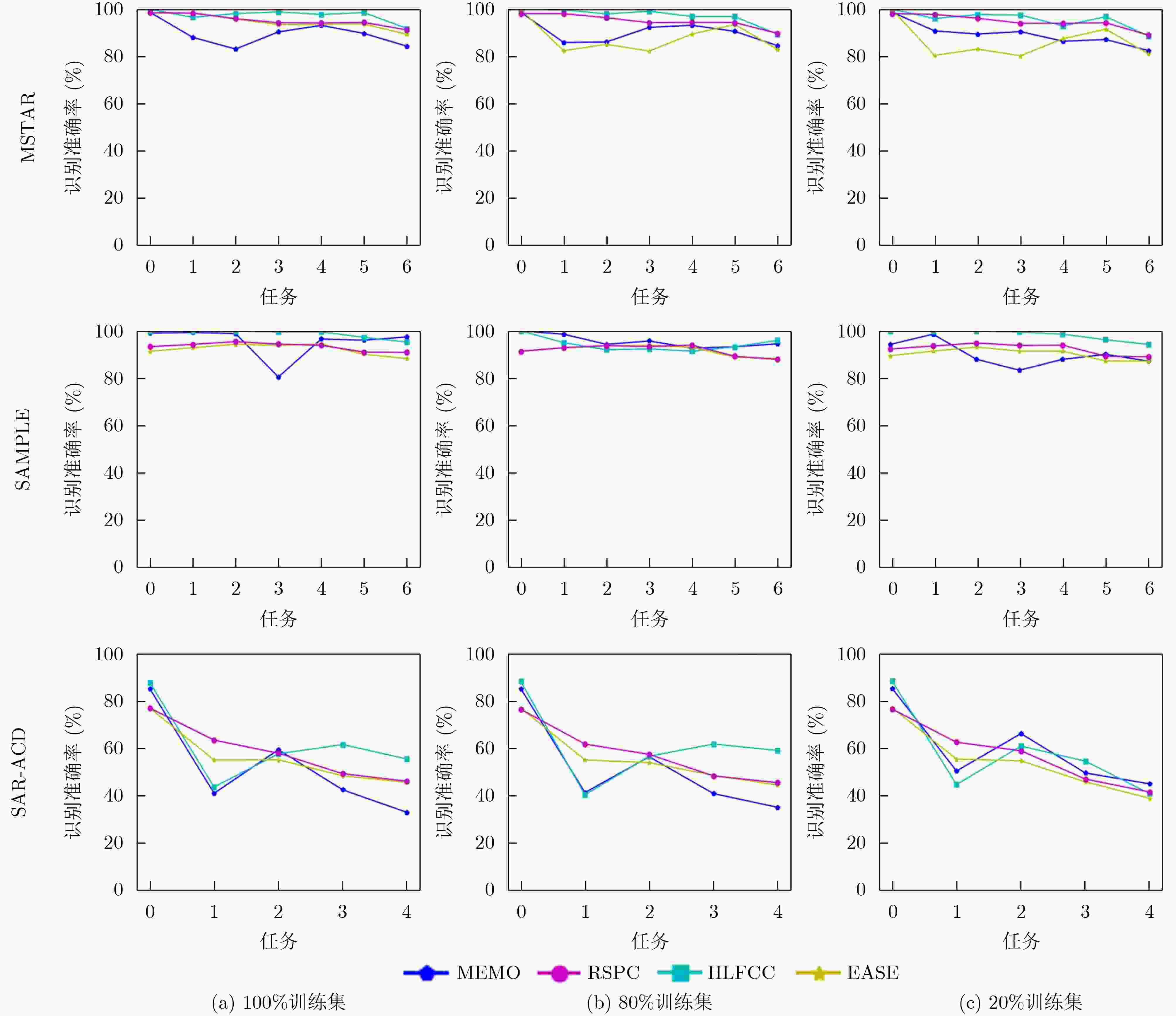

Synthetic Aperture Radar (SAR) target recognition systems deployed in open environments frequently encounter continuously emerging categories. This paper proposes a SAR target class-incremental recognition method named Residual Subspace Prototype Constraint (RSPC). RSPC constructs lightweight, task-specific adapters to expand the feature subspace, enabling effective learning of new classes while alleviating catastrophic forgetting. First, self-supervised learning is used to pretrain the backbone network so that it extracts generic feature representations from SAR data. During incremental learning, the backbone network is frozen, and residual adapters are trained to learn the differentiated features of new and old classes, so that the model focuses on changes in discriminative features. To address the invalidation of old-class prototypes caused by feature-space expansion, a structured constraint-based prototype completion mechanism is proposed to synthesize prototypes of old classes in the new subspace without replaying historical data. During inference, predictions are made based on the similarity between the input target and the integrated prototypes from all subspaces. Experiments on the MSTAR, SAMPLE, and SAR-ACD datasets validate the effectiveness of RSPC.

Objective

SAR target recognition in dynamic environments must learn new classes while preserving previously acquired knowledge. Rehearsal-based methods are often impractical in real-world applications because of data privacy and storage constraints. Moreover, because SAR targets of different classes exhibit highly similar scattering characteristics, features from conventional pretraining yield ambiguous decision boundaries, a challenge distinct from typical catastrophic forgetting. A rehearsal-free framework is therefore proposed to model the evolution of discriminative features and to reconstruct old-class prototypes in expanded subspaces, enabling robust, efficient, and scalable SAR target recognition without rehearsal.
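The pipeline summarized in the abstract (frozen backbone, one residual adapter per task, prediction by similarity to prototypes integrated over all subspaces) can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the bottleneck form $z + W_{\text{up}}\,\mathrm{ReLU}(W_{\text{down}} z)$ of each adapter, the concatenation of per-task features into one integrated feature, and cosine similarity as the matching score are all assumptions, and every function name is hypothetical.

```python
import math

# Hypothetical sketch: frozen-backbone feature -> per-task residual adapters
# -> concatenated (integrated) feature -> nearest prototype by cosine similarity.


def matvec(w, v):
    """Multiply a matrix w (list of rows) by a vector v."""
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def residual_adapter(z, w_down, w_up):
    """Assumed bottleneck adapter: z + W_up ReLU(W_down z).

    z is the frozen backbone feature; only w_down and w_up would be trained.
    """
    hidden = [max(0.0, h) for h in matvec(w_down, z)]
    delta = matvec(w_up, hidden)
    return [zi + di for zi, di in zip(z, delta)]


def integrated_feature(z, adapters):
    """Concatenate the outputs of all task-specific adapters (one subspace each)."""
    feature = []
    for w_down, w_up in adapters:
        feature.extend(residual_adapter(z, w_down, w_up))
    return feature


def cosine(a, b):
    """Cosine similarity with a small epsilon to avoid division by zero."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / (na * nb + 1e-12)


def predict(z, adapters, prototypes):
    """Return the class whose integrated prototype is most similar to the input."""
    feature = integrated_feature(z, adapters)
    return max(prototypes, key=lambda c: cosine(feature, prototypes[c]))


# Toy usage: 2-D backbone features, two tasks, a 1-unit bottleneck per adapter.
adapters = [
    ([[1.0, 0.0]], [[0.5], [0.0]]),  # task-1 adapter (w_down, w_up)
    ([[0.0, 1.0]], [[0.0], [0.5]]),  # task-2 adapter
]
prototypes = {"A": [1.5, 0.0, 1.0, 0.0], "B": [0.0, 1.0, 0.0, 1.5]}
label = predict([1.0, 0.0], adapters, prototypes)  # -> "A"
```

Because each adapter only adds a correction to the shared frozen feature, earlier adapters can stay fixed while a new one is trained, which is what lets the integrated feature grow task by task without touching the backbone.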
Methods

The proposed RSPC framework for SAR target class-incremental recognition is built on a pretrained Vision Transformer backbone. During the incremental phase, the backbone is frozen, and a lightweight residual adapter is trained for each new task to learn the residual feature difference between the current task and the historical average, thereby forming a task-specific discriminative subspace. To address prototype decay in expanded subspaces, a structured prototype completion mechanism is introduced. This mechanism synthesizes the prototype of a historical class in the current subspace by aggregating the prototypes observed for that class in all prior subspaces in which it was learned, each weighted by a confidence score. The score is derived from three geometric consistency metrics computed within each prior subspace between the historical class and all current new classes: norm ratio, angular similarity, and Euclidean distance. The residual adapter is optimized with a dual-constraint loss: a prototype contrastive loss that enforces intraclass compactness and interclass separation, and a subspace orthogonality loss that maximizes the angular distance between the residual features of a sample in consecutive subspaces, thereby preventing feature reuse and promoting task-specific learning.

Results and Discussions

Among all rehearsal-free methods, RSPC achieves the highest Average Incremental Accuracy (AIA) on all three datasets and the lowest Precision Drop (PD) in every setting except SAR-ACD with N=2, where its PD (31.59%) is marginally higher than that of SimpleCIL (31.51%) (Tables 4-6). On MSTAR, RSPC achieves an AIA of 95.23% (N=1) and 94.83% (N=2), outperforming EASE by 0.58 and 0.38 percentage points, respectively, while reducing PD by 1.90 and 1.21 percentage points. On SAMPLE, RSPC achieves an AIA of 93.30% (N=1) and 93.23% (N=2), exceeding EASE by 1.15 and 2.31 percentage points with lower PD (2.40% vs. 3.07% for N=1 and 2.59% vs. 6.00% for N=2).
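AIA and PD are not defined in this section. The reported numbers are consistent with AIA being the mean of the stage accuracies, $\mathrm{AIA} = \frac{1}{K+1}\sum_{i=0}^{K} A_{\mathcal{T}_i}$, and PD being the drop from the base-stage to the final-stage accuracy, $\mathrm{PD} = A_{\mathcal{T}_0} - A_{\mathcal{T}_K}$; for RSPC on MSTAR with N=1 (Table 4) these give 95.23 and 7.25. N likewise appears to be the number of new classes per incremental task: the 6 incremental classes of MSTAR and SAMPLE (Tables 1 and 2) give 6 incremental stages for N=1 and 3 for N=2, and the 4 of SAR-ACD give 4 and 2, matching the table columns. The sketch below encodes these inferred definitions; the function names are hypothetical.

```python
import math


def average_incremental_accuracy(stage_acc):
    """AIA: mean accuracy over all stages, base stage T_0 included."""
    return sum(stage_acc) / len(stage_acc)


def precision_drop(stage_acc):
    """PD: accuracy at the base stage minus accuracy after the final stage."""
    return stage_acc[0] - stage_acc[-1]


def num_incremental_stages(num_incremental_classes, n_per_task):
    """Number of incremental tasks when N new classes arrive per task."""
    return math.ceil(num_incremental_classes / n_per_task)


# RSPC on MSTAR with N=1, stage accuracies A_T0..A_T6 copied from Table 4.
rspc_mstar_n1 = [98.43, 98.34, 95.97, 94.23, 94.12, 94.35, 91.18]
aia = round(average_incremental_accuracy(rspc_mstar_n1), 2)  # 95.23
pd = round(precision_drop(rspc_mstar_n1), 2)  # 7.25
```

Because AIA averages every stage while PD looks only at the endpoints, a method can have a reasonable AIA yet a large PD if its accuracy collapses in the last few tasks, which is why the tables report both.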
On the more challenging SAR-ACD dataset, RSPC achieves an AIA of 58.69% (N=1) and 60.35% (N=2), outperforming EASE and SimpleCIL and approaching the rehearsal-based methods ILFL and HLFCC. The t-SNE visualizations (Figs. 2-4) show that RSPC produces more compact and better-separated class clusters than EASE and MEMO, and clearer interclass boundaries than DualPrompt and APER_SSF. The ablation study (Tables 7-9) shows that the prototype contrastive loss and the subspace orthogonality loss each improve on the variant trained without either loss. Their joint use yields the highest AIA on SAR-ACD (N=1 and N=2) and on SAMPLE (N=2) and competitive results in the remaining settings, indicating complementary effects on discriminability and feature disentanglement. Under low-data conditions (Fig. 5), RSPC maintains its advantage and achieves higher accuracy than EASE when only 20% of the new-class training samples are available, indicating strong data efficiency.

Conclusions

A rehearsal-free incremental learning framework, RSPC, is presented for SAR target recognition to mitigate catastrophic forgetting caused by high interclass scattering similarity. RSPC employs a residual subspace mechanism to capture discriminative feature increments and a structured prototype completion strategy to reconstruct stable prototypes without historical data. Experiments on three benchmarks show that RSPC outperforms existing rehearsal-free methods and approaches rehearsal-based methods, providing a scalable and privacy-preserving recognition solution. Its robust performance in low-data regimes further supports its suitability for deployment in resource-constrained and privacy-sensitive scenarios.
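The structured prototype completion strategy is specified in this section only by its ingredients: the prototypes an old class received in the prior subspaces where it was learned are aggregated, each weighted by a confidence derived from norm ratio, angular similarity, and Euclidean distance to the current new classes within that subspace. The exact scoring rule is not given here, so the sketch below uses a hypothetical score (mean norm-ratio agreement times mean rescaled cosine similarity times exp(-mean distance)), normalizes the scores into weights, and assumes all subspaces share one dimensionality so that prototypes can be averaged directly. It illustrates confidence-weighted aggregation under these assumptions, not the authors' formula; all names are hypothetical.

```python
import math


def norm(v):
    return math.sqrt(sum(x * x for x in v))


def cosine(a, b):
    return sum(x * y for x, y in zip(a, b)) / (norm(a) * norm(b) + 1e-12)


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def subspace_confidence(old_proto, new_protos):
    """Hypothetical confidence of one prior subspace.

    Averages three geometric cues between the old-class prototype and each
    current new-class prototype in that subspace, then multiplies them:
    norm-ratio agreement in (0, 1], cosine rescaled to [0, 1], exp(-distance).
    Assumes no prototype is the zero vector.
    """
    k = len(new_protos)
    ratio = sum(min(norm(old_proto), norm(p)) / max(norm(old_proto), norm(p))
                for p in new_protos) / k
    angle = sum((1.0 + cosine(old_proto, p)) / 2.0 for p in new_protos) / k
    dist = sum(euclidean(old_proto, p) for p in new_protos) / k
    return ratio * angle * math.exp(-dist)


def complete_prototype(observed, new_by_subspace):
    """Confidence-weighted aggregation of an old class's prior-subspace prototypes.

    observed[j] is the old class's prototype in prior subspace j;
    new_by_subspace[j] lists the current new classes' prototypes in subspace j.
    Returns (completed prototype, normalized weights).
    """
    scores = [subspace_confidence(p, news)
              for p, news in zip(observed, new_by_subspace)]
    total = sum(scores)
    if total == 0.0:  # degenerate case: fall back to a plain average
        weights = [1.0 / len(scores)] * len(scores)
    else:
        weights = [s / total for s in scores]
    dim = len(observed[0])
    proto = [sum(w * p[d] for w, p in zip(weights, observed)) for d in range(dim)]
    return proto, weights


# Toy usage: two prior subspaces; the hypothetical score strongly favours subspace 1.
observed = [[1.0, 0.0], [3.0, 0.0]]
new_by_subspace = [[[1.0, 0.1]], [[-3.0, 0.0]]]
proto, weights = complete_prototype(observed, new_by_subspace)
```

Normalizing the scores keeps the completed prototype inside the convex hull of the observed ones, so a poorly scored subspace can only shrink its own influence, never push the prototype outside the region the class actually occupied.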

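Table 1 MSTAR dataset configuration

| Type | Class | Training set | Test set |
|---|---|---|---|
| Base classes | 2S1 | 299 | 274 |
| | D7 | 299 | 274 |
| | T62 | 299 | 273 |
| | T72 | 233 | 196 |
| Incremental classes | ZIL131 | 299 | 274 |
| | ZSU234 | 299 | 274 |
| | BMP2 | 233 | 195 |
| | BRDM2 | 298 | 274 |
| | BTR60 | 256 | 195 |
| | BTR70 | 233 | 196 |

Table 2 SAMPLE dataset configuration

| Type | Class | Training set | Test set |
|---|---|---|---|
| Base classes | M1 | 103 | 26 |
| | M2 | 102 | 26 |
| | M35 | 103 | 26 |
| | M60 | 140 | 36 |
| Incremental classes | M548 | 102 | 26 |
| | ZSU23 | 139 | 35 |
| | 2S1 | 139 | 35 |
| | BMP2 | 85 | 22 |
| | BTR70 | 73 | 19 |
| | T72 | 86 | 22 |

Table 3 SAR-ACD dataset configuration

| Type | Class | Training set | Test set |
|---|---|---|---|
| Base classes | A220 | 371 | 93 |
| | A330 | 409 | 103 |
| Incremental classes | A320 | 408 | 102 |
| | ARJ21 | 411 | 103 |
| | Boeing 737 | 422 | 106 |
| | Boeing 787 | 403 | 101 |

Table 4 Class-incremental recognition results of different methods on the MSTAR dataset (%)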

| Method | $A_{\mathcal{T}_0}$ (N=1) | $A_{\mathcal{T}_1}$ (N=1) | $A_{\mathcal{T}_2}$ (N=1) | $A_{\mathcal{T}_3}$ (N=1) | $A_{\mathcal{T}_4}$ (N=1) | $A_{\mathcal{T}_5}$ (N=1) | $A_{\mathcal{T}_6}$ (N=1) | AIA (N=1) | PD (N=1) | $A_{\mathcal{T}_0}$ (N=2) | $A_{\mathcal{T}_1}$ (N=2) | $A_{\mathcal{T}_2}$ (N=2) | $A_{\mathcal{T}_3}$ (N=2) | AIA (N=2) | PD (N=2) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MEMO | 98.72 | 88.07 | 83.07 | 90.34 | 93.12 | 89.69 | 84.29 | 89.61 | 14.43 | 98.72 | 84.41 | 91.2 | 89.48 | 90.95 | 9.24 |
| EWC | 98.72 | 21.22 | 17.51 | 15.57 | 13.47 | 12.29 | 8.04 | 26.69 | 90.68 | 98.72 | 21.41 | 19.96 | 15.63 | 38.93 | 83.09 |
| ILFL | 99.98 | 97.91 | 95.65 | 96.14 | 94.49 | 97.58 | 93.32 | 96.44 | 6.66 | 99.98 | 98.08 | 98.33 | 96.54 | 98.23 | 3.44 |
| HLFCC | 99.98 | 96.51 | 98.08 | 98.81 | 97.74 | 98.52 | 91.79 | 97.35 | 8.19 | 99.98 | 98.98 | 99.26 | 97.44 | 98.92 | 2.54 |
| L2P | 99.98 | 78.78 | 64.98 | 57.78 | 50.00 | 45.61 | 41.94 | 62.72 | 58.04 | 99.98 | 92.65 | 88.20 | 74.93 | 88.94 | 25.05 |
| SimpleCIL | 98.43 | 98.14 | 95.65 | 93.75 | 93.95 | 94.13 | 89.77 | 94.83 | 8.66 | 98.43 | 95.65 | 93.95 | 89.77 | 94.45 | 8.66 |
| Aper_SSF | 99.90 | 94.58 | 87.86 | 88.58 | 89.43 | 87.13 | 80.82 | 89.76 | 19.08 | 99.90 | 87.86 | 89.43 | 80.82 | 89.50 | 19.08 |
| DualPrompt | 99.90 | 78.70 | 64.92 | 57.73 | 49.95 | 45.56 | 41.90 | 62.67 | 58.01 | 99.90 | 76.23 | 73.50 | 60.66 | 77.57 | 39.24 |
| EASE | 98.43 | 98.30 | 95.78 | 93.47 | 93.56 | 93.72 | 89.28 | 94.65 | 9.15 | 98.43 | 95.65 | 93.95 | 89.77 | 94.45 | 8.66 |
| RSPC | 98.43 | 98.34 | 95.97 | 94.23 | 94.12 | 94.35 | 91.18 | 95.23 | 7.25 | 98.43 | 95.78 | 94.11 | 90.98 | 94.83 | 7.45 |

Table 5 Class-incremental recognition results of different methods on the SAMPLE dataset (%)

| Method | $A_{\mathcal{T}_0}$ (N=1) | $A_{\mathcal{T}_1}$ (N=1) | $A_{\mathcal{T}_2}$ (N=1) | $A_{\mathcal{T}_3}$ (N=1) | $A_{\mathcal{T}_4}$ (N=1) | $A_{\mathcal{T}_5}$ (N=1) | $A_{\mathcal{T}_6}$ (N=1) | AIA (N=1) | PD (N=1) | $A_{\mathcal{T}_0}$ (N=2) | $A_{\mathcal{T}_1}$ (N=2) | $A_{\mathcal{T}_2}$ (N=2) | $A_{\mathcal{T}_3}$ (N=2) | AIA (N=2) | PD (N=2) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MEMO | 99.04 | 99.29 | 98.86 | 80.48 | 96.55 | 96.02 | 97.44 | 95.38 | 1.60 | 99.04 | 97.71 | 93.97 | 96.34 | 96.77 | 2.70 |
| EWC | 99.02 | 18.57 | 14.86 | 12.38 | 11.21 | 10.36 | 9.52 | 25.13 | 89.50 | 99.02 | 14.86 | 11.21 | 9.52 | 33.65 | 89.5 |
| ILFL | 99.98 | 99.29 | 99.98 | 99.52 | 98.28 | 96.81 | 97.80 | 98.81 | 2.18 | 99.98 | 98.86 | 99.57 | 97.8 | 99.05 | 2.18 |
| HLFCC | 99.98 | 99.98 | 99.98 | 99.52 | 99.57 | 97.24 | 95.22 | 98.78 | 4.76 | 99.98 | 98.86 | 99.14 | 97.8 | 98.95 | 2.18 |
| L2P | 81.32 | 74.29 | 59.43 | 49.52 | 44.83 | 41.43 | 38.10 | 55.56 | 43.22 | 81.32 | 67.43 | 46.12 | 46.52 | 60.35 | 34.8 |
| SimpleCIL | 92.31 | 93.57 | 94.86 | 94.29 | 93.97 | 90.44 | 89.01 | 92.64 | 3.30 | 92.31 | 94.24 | 93.97 | 89.01 | 92.38 | 3.30 |
| Aper_SSF | 99.04 | 95.02 | 95.39 | 93.81 | 90.09 | 85.26 | 81.32 | 91.50 | 17.72 | 99.04 | 96.00 | 90.09 | 81.32 | 91.61 | 17.72 |
| DualPrompt | 94.23 | 70.12 | 56.00 | 46.67 | 42.24 | 39.04 | 36.26 | 54.92 | 57.97 | 94.23 | 55.43 | 40.52 | 33.70 | 55.97 | 60.53 |
| EASE | 91.35 | 92.86 | 94.29 | 93.81 | 94.40 | 90.04 | 88.28 | 92.15 | 3.07 | 91.35 | 94.29 | 92.67 | 85.35 | 90.92 | 6.00 |
| RSPC | 93.28 | 94.26 | 95.43 | 94.39 | 93.88 | 91.01 | 90.88 | 93.30 | 2.40 | 93.28 | 94.56 | 94.38 | 90.69 | 93.23 | 2.59 |

Table 6 Class-incremental recognition results of different methods on the SAR-ACD dataset (%)

| Method | $A_{\mathcal{T}_0}$ (N=1) | $A_{\mathcal{T}_1}$ (N=1) | $A_{\mathcal{T}_2}$ (N=1) | $A_{\mathcal{T}_3}$ (N=1) | $A_{\mathcal{T}_4}$ (N=1) | AIA (N=1) | PD (N=1) | $A_{\mathcal{T}_0}$ (N=2) | $A_{\mathcal{T}_1}$ (N=2) | $A_{\mathcal{T}_2}$ (N=2) | AIA (N=2) | PD (N=2) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MEMO | 85.13 | 40.94 | 59.35 | 42.41 | 32.89 | 52.14 | 52.24 | 85.13 | 51.87 | 39.14 | 58.71 | 45.99 |
| EWC | 85.02 | 34.23 | 25.44 | 20.12 | 16.78 | 38.32 | 68.24 | 85.02 | 40.15 | 31.09 | 52.09 | 53.93 |
| ILFL | 87.69 | 43.62 | 56.61 | 62.13 | 57.24 | 61.46 | 30.45 | 87.69 | 55.86 | 58.55 | 67.37 | 29.14 |
| HLFCC | 87.69 | 43.62 | 57.61 | 61.54 | 55.43 | 61.18 | 32.26 | 87.69 | 55.36 | 59.05 | 67.37 | 28.64 |
| L2P | 78.97 | 50.67 | 32.92 | 27.81 | 23.19 | 42.71 | 55.78 | 78.97 | 50.62 | 35.20 | 54.93 | 43.77 |
| SimpleCIL | 76.41 | 62.75 | 58.10 | 48.13 | 44.90 | 58.06 | 31.51 | 76.41 | 58.10 | 44.90 | 59.80 | 31.51 |
| Aper_SSF | 84.62 | 53.36 | 39.90 | 30.37 | 26.15 | 46.88 | 58.47 | 84.62 | 39.90 | 26.15 | 50.22 | 58.47 |
| DualPrompt | 76.92 | 50.34 | 37.66 | 28.01 | 23.36 | 43.26 | 53.56 | 76.92 | 58.10 | 29.77 | 54.93 | 47.15 |
| EASE | 76.92 | 55.03 | 55.11 | 48.32 | 45.56 | 56.19 | 31.36 | 76.92 | 38.40 | 33.88 | 49.73 | 43.04 |
| RSPC | 76.92 | 63.41 | 57.86 | 49.22 | 46.04 | 58.69 | 30.88 | 76.92 | 58.80 | 45.33 | 60.35 | 31.59 |

Table 7 Performance comparison of RSPC with different loss combinations on the MSTAR dataset (%)

| $L_{\text{contrastive}}$ | $L_{\text{ortho}}$ | $A_{\mathcal{T}_0}$ (N=1) | $A_{\mathcal{T}_1}$ (N=1) | $A_{\mathcal{T}_2}$ (N=1) | $A_{\mathcal{T}_3}$ (N=1) | $A_{\mathcal{T}_4}$ (N=1) | $A_{\mathcal{T}_5}$ (N=1) | $A_{\mathcal{T}_6}$ (N=1) | AIA (N=1) | PD (N=1) | $A_{\mathcal{T}_0}$ (N=2) | $A_{\mathcal{T}_1}$ (N=2) | $A_{\mathcal{T}_2}$ (N=2) | $A_{\mathcal{T}_3}$ (N=2) | AIA (N=2) | PD (N=2) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| × | × | 98.43 | 98.30 | 95.56 | 93.49 | 93.56 | 93.80 | 89.11 | 94.61 | 9.32 | 98.43 | 95.65 | 93.56 | 89.80 | 94.36 | 8.63 |
| × | √ | 98.43 | 98.30 | 95.82 | 94.29 | 93.89 | 94.46 | 90.89 | 95.87 | 7.54 | 98.43 | 95.65 | 93.95 | 90.98 | 94.75 | 7.45 |
| √ | × | 98.43 | 98.41 | 96.01 | 93.82 | 94.12 | 94.17 | 91.07 | 95.15 | 7.36 | 98.43 | 95.80 | 94.18 | 91.01 | 94.86 | 7.42 |
| √ | √ | 98.43 | 98.34 | 95.97 | 94.23 | 94.12 | 94.35 | 91.18 | 95.23 | 7.25 | 98.43 | 95.78 | 94.11 | 90.98 | 94.83 | 7.45 |

Table 8 Performance comparison of RSPC with different loss combinations on the SAMPLE dataset (%)

| $L_{\text{contrastive}}$ | $L_{\text{ortho}}$ | $A_{\mathcal{T}_0}$ (N=1) | $A_{\mathcal{T}_1}$ (N=1) | $A_{\mathcal{T}_2}$ (N=1) | $A_{\mathcal{T}_3}$ (N=1) | $A_{\mathcal{T}_4}$ (N=1) | $A_{\mathcal{T}_5}$ (N=1) | $A_{\mathcal{T}_6}$ (N=1) | AIA (N=1) | PD (N=1) | $A_{\mathcal{T}_0}$ (N=2) | $A_{\mathcal{T}_1}$ (N=2) | $A_{\mathcal{T}_2}$ (N=2) | $A_{\mathcal{T}_3}$ (N=2) | AIA (N=2) | PD (N=2) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| × | × | 93.28 | 92.86 | 94.31 | 93.94 | 93.98 | 90.41 | 88.47 | 92.46 | 4.81 | 93.28 | 94.29 | 92.09 | 86.29 | 91.49 | 6.99 |
| × | √ | 93.28 | 93.23 | 94.98 | 94.32 | 93.59 | 90.71 | 90.98 | 93.01 | 2.30 | 93.28 | 94.67 | 93.96 | 89.73 | 92.91 | 3.55 |
| √ | × | 93.28 | 94.18 | 95.55 | 94.39 | 93.83 | 94.59 | 90.79 | 93.80 | 2.49 | 93.28 | 94.58 | 94.12 | 88.40 | 92.60 | 4.88 |
| √ | √ | 93.28 | 94.26 | 95.43 | 94.39 | 93.88 | 91.01 | 90.88 | 93.30 | 2.40 | 93.28 | 94.56 | 94.38 | 90.69 | 93.23 | 2.59 |

Table 9 Performance comparison of RSPC with different loss combinations on the SAR-ACD dataset (%)

| $L_{\text{contrastive}}$ | $L_{\text{ortho}}$ | $A_{\mathcal{T}_0}$ (N=1) | $A_{\mathcal{T}_1}$ (N=1) | $A_{\mathcal{T}_2}$ (N=1) | $A_{\mathcal{T}_3}$ (N=1) | $A_{\mathcal{T}_4}$ (N=1) | AIA (N=1) | PD (N=1) | $A_{\mathcal{T}_0}$ (N=2) | $A_{\mathcal{T}_1}$ (N=2) | $A_{\mathcal{T}_2}$ (N=2) | AIA (N=2) | PD (N=2) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| × | × | 76.92 | 56.98 | 55.98 | 48.45 | 45.60 | 56.79 | 31.32 | 76.92 | 40.89 | 35.98 | 51.26 | 40.94 |
| × | √ | 76.92 | 60.45 | 58.32 | 49.27 | 46.78 | 58.35 | 30.14 | 76.92 | 57.39 | 42.89 | 59.07 | 34.03 |
| √ | × | 76.92 | 61.39 | 56.90 | 48.77 | 46.02 | 58.00 | 30.90 | 76.92 | 54.62 | 41.09 | 57.54 | 35.83 |
| √ | √ | 76.92 | 63.41 | 57.86 | 49.22 | 46.04 | 58.69 | 30.88 | 76.92 | 58.80 | 45.33 | 60.35 | 31.59 |
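The ablation tables switch $L_{\text{contrastive}}$ and $L_{\text{ortho}}$ on and off, but their formulas are not given in this section. One common way to realize the behaviours described in the Methods is sketched below under explicit assumptions: the prototype contrastive loss is taken as cross-entropy over temperature-scaled cosine similarities between a feature and all class prototypes (pulling a sample toward its own prototype and away from the others), and the subspace orthogonality loss as the squared cosine similarity between one sample's residual features in two consecutive subspaces, which is zero when they are orthogonal. The temperature `tau`, the weight `lam`, and all function names are hypothetical.

```python
import math


def cosine(a, b):
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / (na * nb + 1e-12)


def prototype_contrastive_loss(feature, label, prototypes, tau=0.1):
    """Assumed form: -log softmax of cos(feature, prototype_c) / tau at the true class."""
    logits = {c: cosine(feature, p) / tau for c, p in prototypes.items()}
    m = max(logits.values())  # subtract the max for a numerically stable log-sum-exp
    log_norm = m + math.log(sum(math.exp(v - m) for v in logits.values()))
    return log_norm - logits[label]


def subspace_orthogonality_loss(residual_prev, residual_curr):
    """Assumed form: squared cosine between a sample's residuals in consecutive subspaces."""
    return cosine(residual_prev, residual_curr) ** 2


def dual_constraint_loss(feature, label, prototypes, residual_prev, residual_curr,
                         tau=0.1, lam=1.0):
    """Sum of the two terms; lam is a hypothetical balancing weight."""
    return (prototype_contrastive_loss(feature, label, prototypes, tau)
            + lam * subspace_orthogonality_loss(residual_prev, residual_curr))


# Toy usage: two classes with orthogonal prototypes.
prototypes = {"A": [1.0, 0.0], "B": [0.0, 1.0]}
aligned = prototype_contrastive_loss([1.0, 0.0], "A", prototypes)     # small
misaligned = prototype_contrastive_loss([0.0, 1.0], "A", prototypes)  # about 10
```

Squaring the cosine penalizes both parallel and anti-parallel residuals, so the minimum is reached only when the new subspace encodes directions the previous one does not, which matches the stated goal of preventing feature reuse.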

[1] LUO Ru, ZHAO Lingjun, HE Qishan, et al. Intelligent technology for aircraft detection and recognition through SAR imagery: Advancements and prospects[J]. Journal of Radars, 2024, 13(2): 307–330. doi: 10.12000/JR23056.
[2] WENG Xingxing, PANG Chao, XU Bowen, et al. Incremental deep learning for remote sensing image interpretation[J]. Journal of Electronics & Information Technology, 2024, 46(10): 3979–4001. doi: 10.11999/JEIT240172.
[3] ZHOU Dawei, CAI Ziwen, YE Hanjia, et al. Revisiting class-incremental learning with pre-trained models: Generalizability and adaptivity are all you need[J]. International Journal of Computer Vision, 2025, 133(3): 1012–1032. doi: 10.1007/s11263-024-02218-0.
[4] MCDONNELL M D, GONG Dong, PARVANEH A, et al. RanPAC: Random projections and pre-trained models for continual learning[C]. The 37th International Conference on Neural Information Processing Systems, New Orleans, United States, 2023: 526. doi: 10.48550/arXiv.2307.02251.
[5] ZHOU Dawei, SUN Hailong, YE Hanjia, et al. Expandable subspace ensemble for pre-trained model-based class-incremental learning[C]. The IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, United States, 2024: 23554–23564. doi: 10.1109/CVPR52733.2024.02223.
[6] SUN Hailong, ZHOU Dawei, ZHAO Hanbin, et al. MOS: Model surgery for pre-trained model-based class-incremental learning[C]. The 39th AAAI Conference on Artificial Intelligence, Philadelphia, United States, 2025: 20699–20707. doi: 10.1609/aaai.v39i19.34281.
[7] ZHAO Yan, ZHAO Lingjun, ZHANG Siqian, et al. Few-shot class-incremental SAR image target recognition using self-supervised decoupled dynamic classifier[J]. Journal of Electronics & Information Technology, 2024, 46(10): 3936–3948. doi: 10.11999/JEIT231470.
[8] LI Bin, CUI Zongyong, SUN Yuxuan, et al. Density coverage-based exemplar selection for incremental SAR automatic target recognition[J]. IEEE Transactions on Geoscience and Remote Sensing, 2023, 61: 5211713. doi: 10.1109/TGRS.2023.3293509.
[9] DANG Sihang, CAO Zongjie, CUI Zongyong, et al. Open set incremental learning for automatic target recognition[J]. IEEE Transactions on Geoscience and Remote Sensing, 2019, 57(7): 4445–4456. doi: 10.1109/TGRS.2019.2891266.
[10] DANG Sihang, CAO Zongjie, CUI Zongyong, et al. Class boundary exemplar selection based incremental learning for automatic target recognition[J]. IEEE Transactions on Geoscience and Remote Sensing, 2020, 58(8): 5782–5792. doi: 10.1109/TGRS.2020.2970076.
[11] ZHAO Yan, ZHAO Lingjun, DING Ding, et al. Few-shot class-incremental SAR target recognition via cosine prototype learning[J]. IEEE Transactions on Geoscience and Remote Sensing, 2023, 61: 5212718. doi: 10.1109/TGRS.2023.3298016.
[12] XU Yanjie, SUN Hao, ZHAO Yan, et al. Simulated data feature guided evolution and distillation for incremental SAR ATR[J]. IEEE Transactions on Geoscience and Remote Sensing, 2024, 62: 5215917. doi: 10.1109/TGRS.2024.3419794.
[13] ZHOU Yongsheng, ZHANG Shuo, SUN Xiaokun, et al. SAR target incremental recognition based on hybrid loss function and class-bias correction[J]. Applied Sciences, 2022, 12(3): 1279. doi: 10.3390/app12031279.
[14] OVEIS A H, GIUSTI E, GHIO S, et al. Incremental learning in synthetic aperture radar images using openmax algorithm[C]. The IEEE Radar Conference, San Antonio, United States, 2023: 1–6. doi: 10.1109/RadarConf2351548.2023.10149627.
[15] HU Chao, HAO Ming, WANG Wenying, et al. Incremental learning using feature labels for synthetic aperture radar automatic target recognition[J]. IET Radar, Sonar & Navigation, 2022, 16(11): 1872–1880. doi: 10.1049/rsn2.12303.
[16] WANG Li, YANG Xinyao, TAN Haoyue, et al. Few-shot class-incremental SAR target recognition based on hierarchical embedding and incremental evolutionary network[J]. IEEE Transactions on Geoscience and Remote Sensing, 2023, 61: 5204111. doi: 10.1109/TGRS.2023.3248040.
[17] SHI Qian, HE Da, LIU Zhengyu, et al. Globe230k: A benchmark dense-pixel annotation dataset for global land cover mapping[J]. Journal of Remote Sensing, 2023, 3: 0078. doi: 10.34133/remotesensing.0078.
[18] HU Fengming, XU Feng, WANG R, et al. Conceptual study and performance analysis of tandem multi-antenna spaceborne SAR interferometry[J]. Journal of Remote Sensing, 2024, 4: 0137. doi: 10.34133/remotesensing.0137.
[19] MEI Shaohui, LIAN Jiawei, WANG Xiaofei, et al. A comprehensive study on the robustness of deep learning-based image classification and object detection in remote sensing: Surveying and benchmarking[J]. Journal of Remote Sensing, 2024, 4: 0219. doi: 10.34133/remotesensing.0219.
