Knowledge-guided Few-shot Earth Surface Anomalies Detection


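Summary: Earth surface anomalies (ESAs) are sudden disaster events on the Earth's surface triggered by natural or human factors. They are highly destructive and have wide-ranging effects, so detecting them promptly and accurately is important for public safety and sustainable development. Remote sensing is a key means of ESA detection, but the performance of deep-learning-based anomaly detection models is limited by scarce labeled data, complex backgrounds in ESA imagery, and distribution differences across multi-source remote sensing images. This paper therefore proposes a knowledge-guided few-shot learning method that introduces linguistic knowledge to improve classification when anomalous remote sensing samples are scarce. The method uses a large language model to generate abstract textual descriptions for different remote sensing image categories, characterizing the features of normal and anomalous ground objects and their spatial-semantic relations from the language modality. A text encoder then maps the descriptions into a language feature space, and a cross-modal semantic knowledge generation module automatically learns and fuses the semantic representations of the language and visual modalities. A self-attention mechanism models contextual relations, and the extracted semantic context is fused with the visual prototype features to form a cross-modal joint representation. The method strengthens the discriminability of prototype features in few-shot tasks and improves the matching accuracy between target-domain anomaly samples and the multimodal prototypes. Experiments show that the method makes full use of linguistic knowledge to compensate for insufficient visual information, improves the ability of few-shot models to represent ESA remote sensing imagery, and shows advantages on both cross-domain and in-domain few-shot classification tasks.

Abstract: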

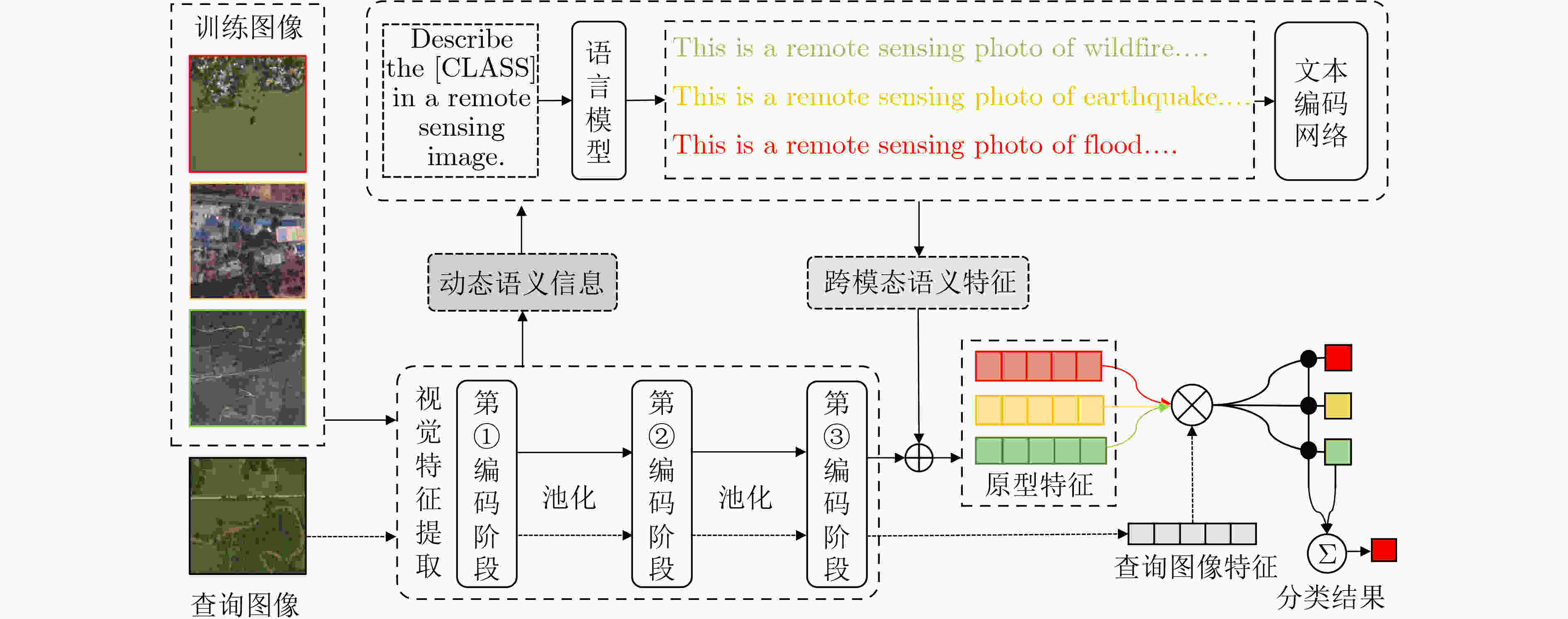

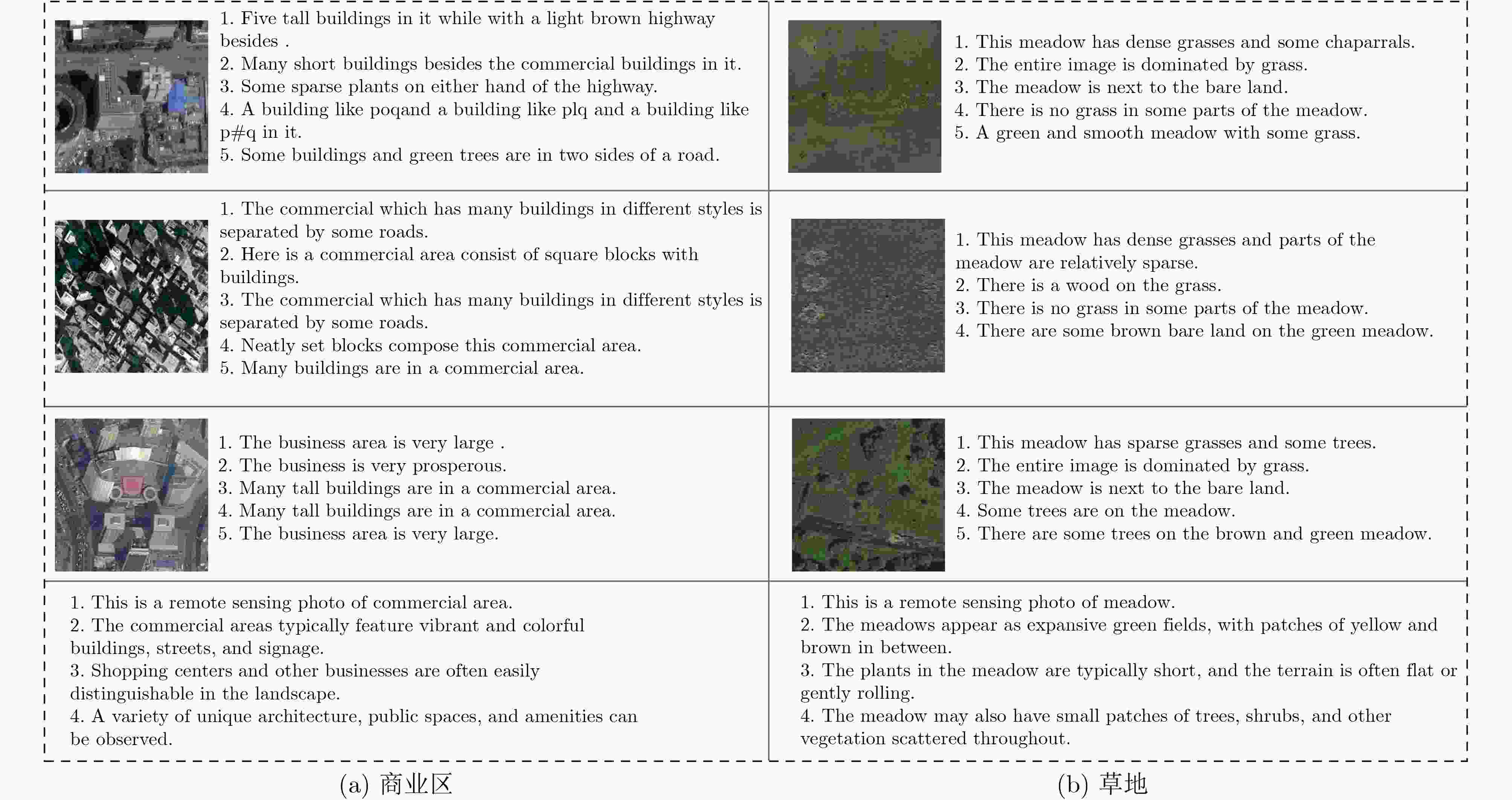

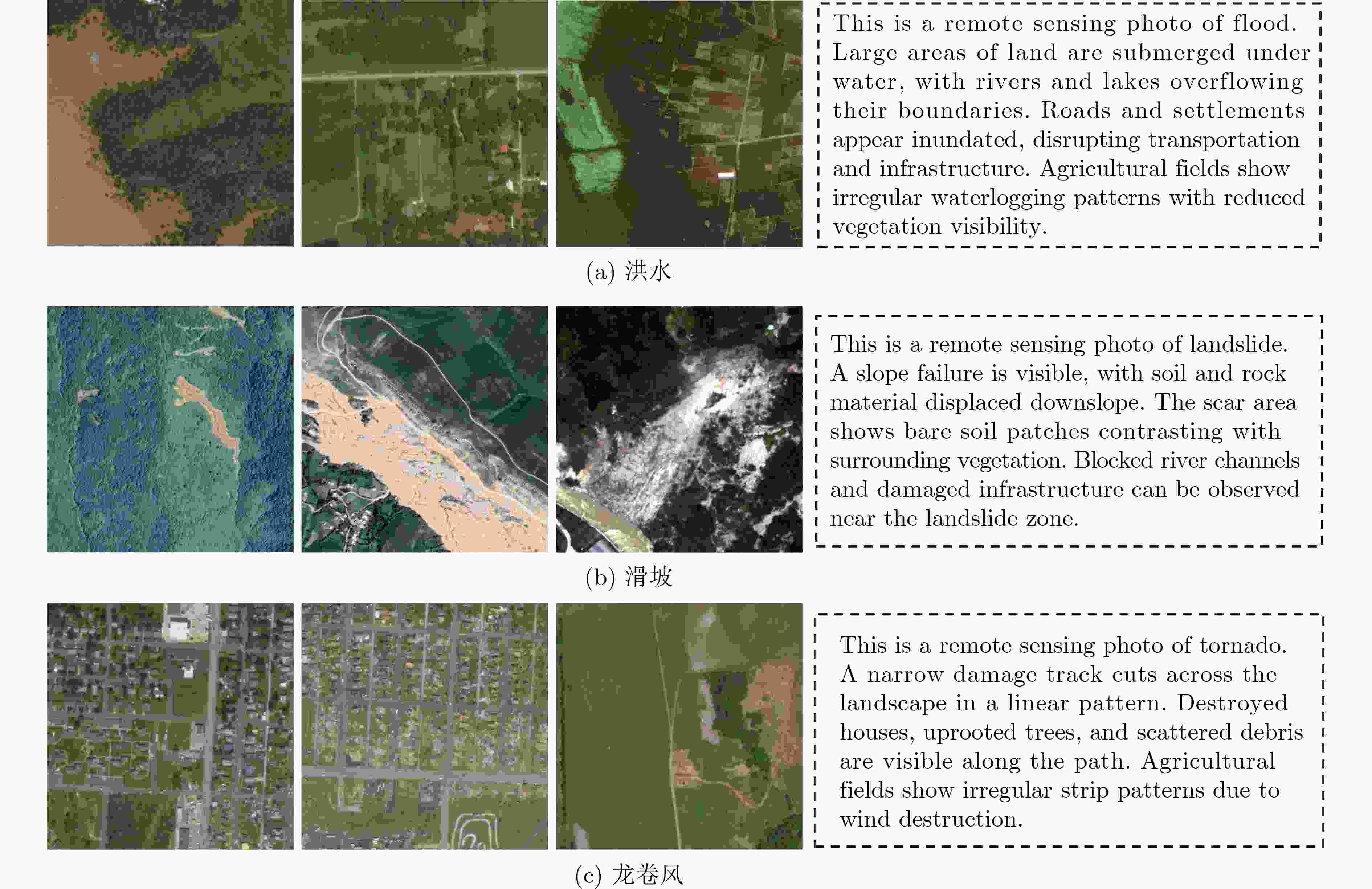

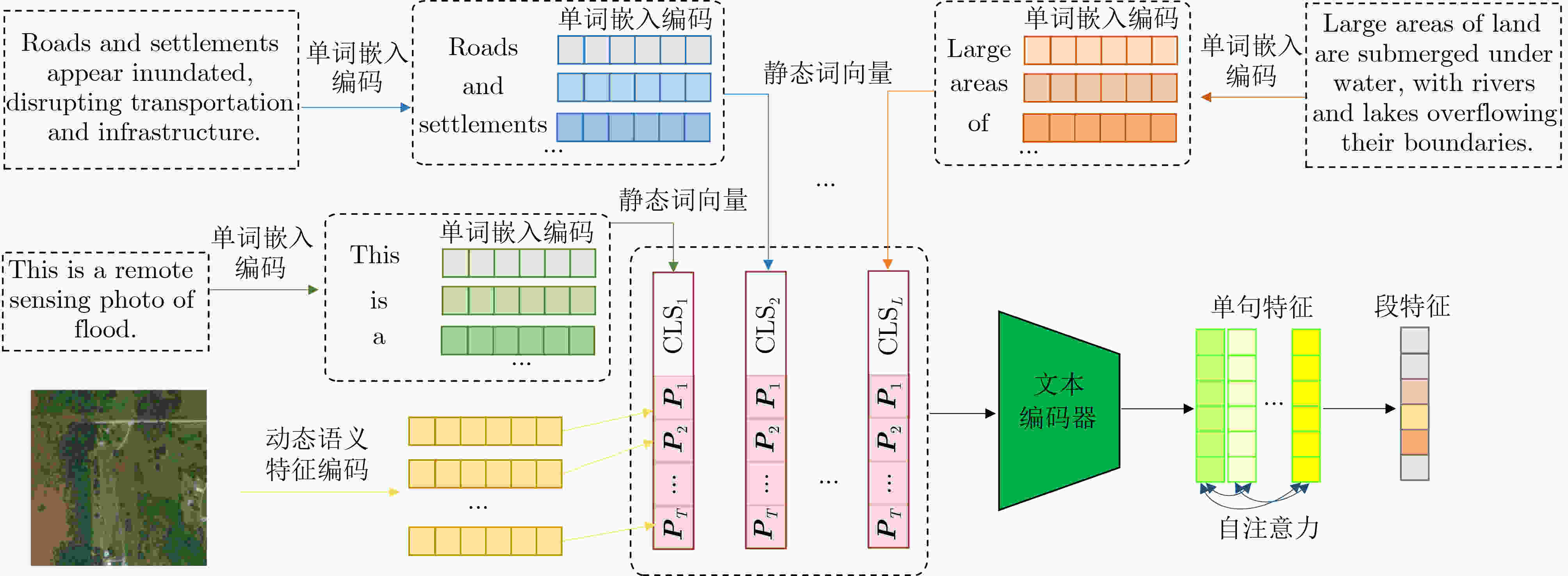

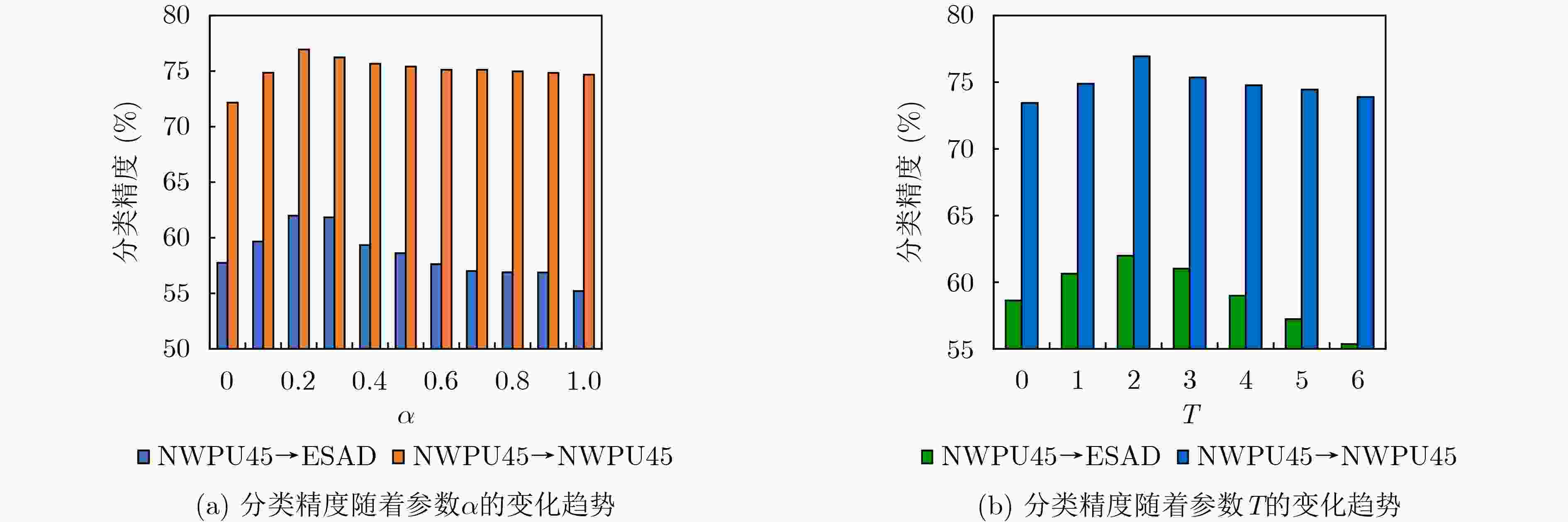

Objective: Earth Surface Anomalies (ESAs), defined as sudden natural or human-induced disruptions on the Earth's surface, pose severe risks and have widespread effects. Timely and accurate ESA detection is therefore essential for public security and sustainable development. Remote sensing offers an effective approach for this task. However, current deep learning models remain limited by the scarcity of labeled data, the complexity of anomalous backgrounds, and distribution shifts across multi-source remote sensing imagery. To address these issues, this paper proposes a knowledge-guided few-shot learning method. Large language models generate abstract textual descriptions of normal and anomalous geospatial features. These descriptions are encoded and fused with visual prototypes to construct a cross-modal joint representation. The integrated representation improves prototype discriminability in few-shot settings and demonstrates that linguistic knowledge strengthens ESA detection. The findings suggest a feasible direction for reliable disaster monitoring when annotated data are limited.

Methods: The knowledge-guided few-shot learning method is built on a metric-based paradigm in which each episode contains a support set and a query set, and classification is achieved by comparing query features with class prototypes through distance-based similarity, optimized with a cross-entropy loss (Fig. 1). To supplement the limited visual prototypes, class-level textual descriptions are generated with ChatGPT through carefully designed prompts, producing sentences that characterize the appearance, attributes, and contextual relations of normal and anomalous categories (Figs. 2 and 3). These descriptions encode domain-specific properties such as anomaly extent, morphology, and environmental effect, which are otherwise difficult to capture when only a few visual samples are available.
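As an illustration of the metric-based episode described in the Methods, the following minimal NumPy sketch builds visual prototypes from a support set and scores queries against them. It is not the authors' implementation: the mean-of-support prototype and the negative squared Euclidean distance follow the common prototypical-network convention and are assumptions here, as are the function names and the toy features.

```python
import numpy as np

def class_prototypes(support_feats, support_labels, n_way):
    """Mean support feature per class, shape (n_way, dim)."""
    return np.stack([support_feats[support_labels == k].mean(axis=0)
                     for k in range(n_way)])

def episode_loss(prototypes, query_feats, query_labels):
    """Cross-entropy over negative squared Euclidean distances (assumed metric)."""
    diff = query_feats[:, None, :] - prototypes[None, :, :]
    logits = -(diff ** 2).sum(axis=-1)                      # (n_query, n_way)
    logits = logits - logits.max(axis=1, keepdims=True)     # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    loss = -log_probs[np.arange(len(query_labels)), query_labels].mean()
    return loss, logits.argmax(axis=1)

# Toy 3-way 5-shot episode with well-separated synthetic features.
rng = np.random.default_rng(0)
n_way, k_shot, n_query, dim = 3, 5, 4, 16
centers = 10.0 * np.eye(n_way, dim)                         # one cluster center per class
support_labels = np.repeat(np.arange(n_way), k_shot)
query_labels = np.repeat(np.arange(n_way), n_query)
support_feats = centers[support_labels] + 0.1 * rng.normal(size=(n_way * k_shot, dim))
query_feats = centers[query_labels] + 0.1 * rng.normal(size=(n_way * n_query, dim))

protos = class_prototypes(support_feats, support_labels, n_way)
loss, preds = episode_loss(protos, query_feats, query_labels)
print("loss:", float(loss), "accuracy:", float((preds == query_labels).mean()))
```

In a real episode the features would come from the feature extraction network rather than from synthetic clusters, and the loss would be back-propagated through it.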
The sentences are encoded with a Contrastive Language-Image Pre-training (CLIP) text encoder. Task-adaptive soft prompts are introduced by generating tokens from support features and concatenating them with the static embeddings to form adaptive word embeddings. The encoded sentence vectors are processed with a lightweight self-attention module that models dependencies across multiple descriptions and yields a coherent paragraph-level semantic representation (Fig. 4). The resulting semantic prototypes are fused with the visual prototypes through weighted addition to produce cross-modal prototypes that integrate visual grounding and linguistic abstraction. During training, query samples are compared with the cross-modal prototypes, and optimization is guided by two objectives: a classification loss that enforces accurate query–prototype alignment, and a prototype regularization loss that keeps the semantic prototypes discriminative and well separated. The entire method is implemented in an episodic training framework (Algorithm 1).

Results and Discussions: The proposed method is evaluated under both cross-domain and in-domain few-shot settings. In the cross-domain case, models are trained on NWPU45 or AID and tested on ESAD to assess ESA recognition. As shown in the comparisons (Table 2), traditional meta-learning methods such as MAML and Meta-SGD reach accuracies below 50%, whereas metric-based baselines such as ProtoNet and RelationNet are more stable but remain limited. The proposed method reaches 61.99% on NWPU45→ESAD and 59.79% on AID→ESAD, outperforming ProtoNet by 4.72 and 2.67 percentage points, respectively. In the in-domain setting, where training and testing are conducted on the same dataset, the method reaches 76.94% on NWPU45 and 72.98% on AID, and consistently exceeds state-of-the-art baselines such as S2M2 and IDLN (Table 3). Ablation experiments further support the contribution of each component.
Using only visual prototypes yields accuracies of 57.74% on ESAD and 72.16% on NWPU45, and progressively incorporating simple class names, task-oriented templates, and ChatGPT-generated descriptions improves performance. The best accuracy is achieved by combining ChatGPT descriptions, learnable tokens, and the attention-based mechanism, reaching 61.99% and 76.94% (Table 4). Parameter sensitivity analysis shows that an appropriate weight for language features (α = 0.2) and the use of two learnable tokens yield optimal performance (Fig. 5).

Conclusions: This paper addresses ESA detection in remote sensing imagery through a knowledge-guided few-shot learning method. The approach uses large language models to generate abstract textual descriptions for anomaly categories and conventional remote sensing scenes, thereby constructing multimodal training and testing resources. These descriptions are encoded into semantic feature vectors with a pretrained text encoder. To extract task-specific knowledge, a dynamic token learning strategy is developed in which a small number of learnable parameters are guided by visual samples within few-shot tasks to generate adaptive semantic vectors. An attention-based semantic knowledge module models dependencies among language features and produces cross-modal semantic vectors for each class. By fusing these vectors with visual prototypes, the method forms joint multimodal representations used for query-prototype matching and network optimization. Experimental evaluations show that the method effectively leverages prior knowledge contained in pretrained models, compensates for limited visual data, and improves feature discriminability for anomaly recognition. Both cross-domain and in-domain results confirm consistent gains over competitive baselines, highlighting the potential of the approach for reliable application in real-world remote sensing anomaly detection scenarios.
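The construction of a cross-modal prototype summarized in the abstract (soft-prompt concatenation, attention over sentence features, weighted addition with α = 0.2) can be sketched in NumPy as follows. This is a hedged illustration under stated assumptions, not the authors' code: `toy_text_encoder` is a hypothetical stand-in for the CLIP text encoder, the attention uses a single head with identity projections and mean pooling because Eqs. (10) and (11) are not reproduced in this text, and the visual prototype is assumed to share the 512-dimensional language feature space.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def concat_soft_prompts(soft_prompts, static_embeds):
    """CAT(P, t): prepend T soft-prompt tokens to each description's word embeddings.
    soft_prompts: (L, T, d); static_embeds: (L, n_words, d) -> (L, T + n_words, d)."""
    return np.concatenate([soft_prompts, static_embeds], axis=1)

def toy_text_encoder(word_embeds):
    """Hypothetical stand-in for the text encoder g: mean-pool tokens, L2-normalize."""
    pooled = word_embeds.mean(axis=1)
    return pooled / np.linalg.norm(pooled, axis=-1, keepdims=True)

def attend_descriptions(sent_feats):
    """Single-head scaled dot-product self-attention over L sentence vectors,
    then mean pooling (assumed form; the paper's Eqs. (10)-(11) may differ)."""
    d = sent_feats.shape[-1]
    weights = softmax(sent_feats @ sent_feats.T / np.sqrt(d), axis=-1)  # (L, L)
    return (weights @ sent_feats).mean(axis=0)                          # (d,)

def cross_modal_prototype(visual_proto, sent_feats, alpha=0.2):
    """Weighted addition z_k = z_k^v + alpha * a_hat."""
    return visual_proto + alpha * attend_descriptions(sent_feats)

# Toy inputs: L descriptions, T = 2 learnable tokens, 512-d features.
rng = np.random.default_rng(0)
L, T, n_words, d = 4, 2, 6, 512
soft_prompts = rng.normal(size=(L, T, d))        # would come from the soft prompt generator
static_embeds = rng.normal(size=(L, n_words, d)) # would come from the tokenized descriptions
visual_proto = rng.normal(size=d)                # would come from the support features

adaptive = concat_soft_prompts(soft_prompts, static_embeds)
sent_feats = toy_text_encoder(adaptive)
semantic = attend_descriptions(sent_feats)
proto = cross_modal_prototype(visual_proto, sent_feats, alpha=0.2)
print(adaptive.shape, sent_feats.shape, proto.shape)
```

Setting `alpha=0.0` recovers the purely visual prototype, which corresponds to the visual-only baseline in the ablation study.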

Algorithm 1 Network training procedure of the knowledge-guided few-shot learning method

1: Input: training image dataset $\mathcal{D}_{\rm{base}}$, text descriptions $\boldsymbol{t}$, text encoder $g(\cdot)$, soft prompt generator $s(\cdot)$

2: Output: feature extraction network $f(\cdot;\mathcal{F})$, soft prompt generator $s(\cdot)$

3: repeat

4: Sample a few-shot task $\{\mathcal{S},\mathcal{Q}\}$;

5: Compute the visual features of the support samples: $\boldsymbol{z}_k^{\mathrm{v}} = f(\boldsymbol{x}_k;\mathcal{F})$, $k \in \{1,2,\cdots,K\}$;

6: Compute the soft prompts from the visual features: $\boldsymbol{P}^k = s(\boldsymbol{z}_k^{\mathrm{v}}) \in \mathbb{R}^{L \times T \times 512}$;

7: Build the language word embeddings of class $k$: $\hat{\boldsymbol{t}} = \text{CAT}(\boldsymbol{P}^k,\boldsymbol{t}) = [\boldsymbol{p}]_1[\boldsymbol{p}]_2\cdots[\boldsymbol{p}]_T[\boldsymbol{t}]$;

8: Compute the cross-modal semantic embedding features of class $k$: $\boldsymbol{a} = \{\boldsymbol{a}_l\}_{l=1}^{L} = \{g(\hat{\boldsymbol{t}}_l)\}_{l=1}^{L}$;

9: Compute the self-attention-reconstructed language feature vector $\hat{\boldsymbol{a}}$ using Eqs. (10) and (11);

10: Compute the cross-modal prototype feature $\boldsymbol{z}_k = \boldsymbol{z}_k^{\mathrm{v}} + \alpha\hat{\boldsymbol{a}}$;

11: Compute the query sample feature $\boldsymbol{z}_{\mathrm{q}} = f(\boldsymbol{x}_{\mathrm{q}};\mathcal{F})$;

12: Match $\boldsymbol{z}_k$ against $\boldsymbol{z}_{\mathrm{q}}$, compute the cross-entropy loss, back-propagate the gradients, and update the network.

13: until the network converges

14: return $f(\cdot;\mathcal{F})$, $s(\cdot)$

Table 1 Statistics of the ESAD dataset

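| Category | Flood | Landslide | Debris flow | Hurricane | Wildfire | Earthquake | Volcanic eruption | Tornado | Tsunami | Fire | Forest fire |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Count | 647 | 59 | 48 | 1296 | 1201 | 14 | 217 | 254 | 107 | 1548 | 996 |

Table 2 Cross-domain few-shot classification performance of the proposed method and other methods on the ESAD dataset (%)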

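| Method | Feature extraction network | NWPU45→ESAD | AID→ESAD |
| --- | --- | --- | --- |
| Transfer learning[15] | ResNet-12 | 58.94±0.65 | 56.12±0.27 |
| S2M2[31] | ResNet-12 | 56.24±0.38 | 51.37±0.53 |
| MAML[17] | Conv-4-64 | 46.34±0.42 | 43.28±0.45 |
| Meta-SGD[32] | ResNet-12 | 49.85±0.77 | 48.66±2.21 |
| MatchingNet[33] | ResNet-12 | 51.36±0.52 | 51.77±0.56 |
| ProtoNet[16] | ResNet-12 | 57.27±0.67 | 57.12±0.66 |
| RelationNet[34] | ResNet-12 | 56.26±0.43 | 54.84±0.66 |
| Proposed method | ResNet-12 | 61.99±0.22 | 59.79±0.42 |

Table 3 In-domain few-shot classification performance of the proposed method and other methods on the NWPU45 and AID datasets (%)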

| Method | Feature extraction network | NWPU45 | AID |
| --- | --- | --- | --- |
| Transfer baseline[17] | ResNet-12 | 69.02±0.46 | 67.12±0.47 |
| S2M2[31] | ResNet-12 | 63.24±0.47 | 66.22±0.45 |
| MAML[17] | Conv-4-64 | 58.99±0.45 | 60.11±0.50 |
| Meta-SGD[32] | ResNet-12 | 60.63±0.90 | 53.14±1.46 |
| MatchingNet[33] | ResNet-12 | 61.57±0.49 | 64.30±0.46 |
| ProtoNet[16] | ResNet-12 | 64.52±0.48 | 67.08±0.47 |
| RelationNet[34] | ResNet-12 | 65.52±0.85 | 68.56±0.49 |
| RS-MetaNet[28] | ResNet-50 | 52.78±0.09 | 53.37±0.56 |
| SCL-MLNet[35] | Conv-256 | 62.21±1.12 | 59.49±0.96 |
| DLA-MatchNet[29] | ResNet-12 | 68.80±0.70 | 57.21±0.82 |
| SPNet[36] | ResNet-12 | 67.84±0.87 | - |
| IDLN[37] | ResNet-12 | 75.25±0.75 | - |
| Proposed method | ResNet-12 | 76.94±0.54 | 72.98±0.51 |

Table 4 Effect of different text description forms and information fusion methods on few-shot classification performance (%)

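| Setting | Text description | Multimodal fusion method | ESAD | NWPU45 |
| --- | --- | --- | --- | --- |
| (a) | - | - | 57.74±0.77 | 72.16±0.53 |
| (b) | [Class_name] | - | 58.01±0.65 | 72.75±0.46 |
| (c) | “This is a photo of [Class_name]” | - | 58.89±0.43 | 73.21±0.53 |
| (d) | ChatGPT | Addition | 58.64±0.63 | 73.04±0.48 |
| (e) | ChatGPT | Proposed attention-based method | 59.75±0.64 | 73.45±0.51 |
| (f) | ChatGPT + automatic word embedding learning | Addition | 60.95±0.77 | 74.08±0.56 |
| (g) | ChatGPT + automatic word embedding learning | Proposed attention-based method | 61.99±0.22 | 76.94±0.41 |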

[1] WANG Qiao. Research framework of remote sensing monitoring and real-time diagnosis of earth surface anomalies[J]. Acta Geodaetica et Cartographica Sinica, 2022, 51(7): 1141–1152. doi: 10.11947/j.AGCS.2022.20220124.

[2] WEI Haishuo, JIA Kun, WANG Qiao, et al. Real-time remote sensing detection framework of the earth's surface anomalies based on a priori knowledge base[J]. International Journal of Applied Earth Observation and Geoinformation, 2023, 122: 103429. doi: 10.1016/j.jag.2023.103429.

[3] GAO Zhi, HU Aohan, CHEN Boan, et al. A hierarchical geometry-to-semantic fusion GNN framework for earth surface anomalies detection[J]. National Remote Sensing Bulletin, 2024, 28(7): 1760–1770. doi: 10.11834/jrs.20243301.

[4] LIU Siqi, GAO Zhi, CHEN Boan, et al. Earth surface anomaly detection using graph neural network-based representation and reasoning of remote sensing geographic object relationships[J]. Journal of Electronics & Information Technology, 2025, 47(6): 1690–1703. doi: 10.11999/JEIT240883.

[5] ZHAO Chuanwu, PAN Yaozhong, WU Hanyi, et al. A novel spectral index for vegetation destruction event detection based on multispectral remote sensing imagery[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2024, 17: 11290–11309. doi: 10.1109/JSTARS.2024.3412737.

[6] WU Hanyi, ZHAO Chuanwu, ZHU Yu, et al. A multiscale examination of heat health risk inequality and its drivers in mega-urban agglomeration: A case study in the Yangtze River Delta, China[J]. Journal of Cleaner Production, 2024, 458: 142528. doi: 10.1016/j.jclepro.2024.142528.

[7] WEI Haishuo, JIA Kun, WANG Qiao, et al. A remote sensing index for the detection of multi-type water quality anomalies in complex geographical environments[J]. International Journal of Digital Earth, 2024, 17(1): 2313695. doi: 10.1080/17538947.2024.2313695.

[8] ROY D P, JIN Y, LEWIS P E, et al. Prototyping a global algorithm for systematic fire-affected area mapping using MODIS time series data[J]. Remote Sensing of Environment, 2005, 97(2): 137–162. doi: 10.1016/j.rse.2005.04.007.

[9] WANG Libo, GAO Zhi, and WANG Qiao. A novel earth surface anomaly detection method based on collaborative reasoning of deep learning and remote sensing indexes[J]. Journal of Electronics & Information Technology, 2025, 47(6): 1669–1678. doi: 10.11999/JEIT240882.

[10] ZHANG Zilun, ZHAO Tiancheng, GUO Yulong, et al. RS5M and GeoRSCLIP: A large-scale vision-language dataset and a large vision-language model for remote sensing[J]. IEEE Transactions on Geoscience and Remote Sensing, 2024, 62: 5642123. doi: 10.1109/TGRS.2024.3449154.

[11] GE Junyao, ZHANG Xu, ZHENG Yang, et al. RSTeller: Scaling up visual language modeling in remote sensing with rich linguistic semantics from openly available data and large language models[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2025, 226: 146–163. doi: 10.1016/j.isprsjprs.2025.05.002.

[12] ZHENG Zhuo, ZHONG Yanfei, WANG Junjue, et al. Building damage assessment for rapid disaster response with a deep object-based semantic change detection framework: From natural disasters to man-made disasters[J]. Remote Sensing of Environment, 2021, 265: 112636. doi: 10.1016/j.rse.2021.112636.

[13] KYRKOU C and THEOCHARIDES T. EmergencyNet: Efficient aerial image classification for drone-based emergency monitoring using atrous convolutional feature fusion[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2020, 13: 1687–1699. doi: 10.1109/JSTARS.2020.2969809.

[14] CHEN Boan, GAO Zhi, LI Ziyao, et al. Hierarchical GNN framework for earth’s surface anomaly detection in single satellite imagery[J]. IEEE Transactions on Geoscience and Remote Sensing, 2024, 62: 5627314. doi: 10.1109/TGRS.2024.3408330.

[15] CHEN Weiyu, LIU Yencheng, KIRA Z, et al. A closer look at few-shot classification[C]. The 2019 International Conference on Learning Representations, New Orleans, USA, 2019: 1–16.

[16] SNELL J, SWERSKY K, and ZEMEL R. Prototypical networks for few-shot learning[C]. The 31st International Conference on Neural Information Processing Systems, Long Beach, USA, 2017: 4080–4090.

[17] FINN C, ABBEEL P, and LEVINE S. Model-agnostic meta-learning for fast adaptation of deep networks[C]. The 34th International Conference on Machine Learning - Volume 70, Sydney, Australia, 2017: 1126–1135.

[18] RADFORD A, KIM J, HALLACY C, et al. Learning transferable visual models from natural language supervision[C]. The 38th International Conference on Machine Learning, Virtual Event, 2021: 8748–8763.

[19] XU Jingyi and LE H. Generating representative samples for few-shot classification[C]. Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 8993–9003. doi: 10.1109/CVPR52688.2022.00880.

[20] ZHANG Baoquan, LI Xutao, YE Yunming, et al. Prototype completion with primitive knowledge for few-shot learning[C]. The 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 3753–3761. doi: 10.1109/CVPR46437.2021.00375.

[21] LIU Fan, CHEN Delong, GUAN Zhangqingyun, et al. RemoteCLIP: A vision language foundation model for remote sensing[J]. IEEE Transactions on Geoscience and Remote Sensing, 2024, 62: 5622216. doi: 10.1109/TGRS.2024.3390838.

[22] ZHANG Yongjun, LI Yansheng, DANG Bo, et al. Multi-modal remote sensing large foundation models: Current research status and future prospect[J]. Acta Geodaetica et Cartographica Sinica, 2024, 53(10): 1942–1954. doi: 10.11947/j.AGCS.2024.20240019.

[23] OpenAI. Introducing ChatGPT[EB/OL]. https://openai.com/blog/chatgpt, 2022.

[24] GUPTA R, GOODMAN B, PATEL N, et al. Creating xBD: A dataset for assessing building damage from satellite imagery[C]. The 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, USA, 2019: 10–17.

[25] RUDNER T G J, RUSSWURM M, FIL J, et al. Multi3Net: Segmenting flooded buildings via fusion of multiresolution, multisensor, and multitemporal satellite imagery[C]. The Thirty-Third AAAI Conference on Artificial Intelligence, Honolulu, USA, 2019, 33: 702–709. doi: 10.1609/aaai.v33i01.3301702.

[26] ZENG Chao, CAO Zhenyu, SU Fenghuan, et al. A dataset of high-precision aerial imagery and interpretation of landslide and debris flow disaster in Sichuan and surrounding areas between 2008 and 2020[J]. China Scientific Data, 2022, 7(2): 191–201. doi: 10.11922/noda.2021.0005.zh.

[27] CHENG Gong, HAN Junwei, and LU Xiaoqiang. Remote sensing image scene classification: Benchmark and state of the art[J]. Proceedings of the IEEE, 2017, 105(10): 1865–1883. doi: 10.1109/JPROC.2017.2675998.

[28] LI Haifeng, CUI Zhenqi, ZHU Zhiqiang, et al. RS-MetaNet: Deep metametric learning for few-shot remote sensing scene classification[J]. IEEE Transactions on Geoscience and Remote Sensing, 2021, 59(8): 6983–6994. doi: 10.1109/TGRS.2020.3027387.

[29] LI Lingjun, HAN Junwei, YAO Xiwen, et al. DLA-MatchNet for few-shot remote sensing image scene classification[J]. IEEE Transactions on Geoscience and Remote Sensing, 2021, 59(9): 7844–7853. doi: 10.1109/TGRS.2020.3033336.

[30] XIA Guisong, HU Jingwen, HU Fan, et al. AID: A benchmark data set for performance evaluation of aerial scene classification[J]. IEEE Transactions on Geoscience and Remote Sensing, 2017, 55(7): 3965–3981. doi: 10.1109/TGRS.2017.2685945.

[31] MANGLA P, SINGH M, SINHA A, et al. Charting the right manifold: Manifold mixup for few-shot learning[C]. The 2020 IEEE Winter Conference on Applications of Computer Vision, Snowmass, USA, 2020: 2207–2216. doi: 10.1109/WACV45572.2020.9093338.

[32] NICHOL A, ACHIAM J, and SCHULMAN J. On first-order meta-learning algorithms[J]. arXiv preprint arXiv: 1803.02999, 2018. doi: 10.48550/arXiv.1803.02999.

[33] VINYALS O, BLUNDELL C, LILLICRAP T, et al. Matching networks for one shot learning[C]. The 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 2016: 3637–3645.

[34] SUNG F, YANG Yongxin, ZHANG Li, et al. Learning to compare: Relation network for few-shot learning[C]. The 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 1199–1208. doi: 10.1109/CVPR.2018.00131.

[35] LI Xiaomin, SHI Daqian, DIAO Xiaolei, et al. SCL-MLNet: Boosting few-shot remote sensing scene classification via self-supervised contrastive learning[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5801112. doi: 10.1109/TGRS.2021.3109268.

[36] CHENG Gong, CAI Liming, LANG Chunbo, et al. SPNet: Siamese-prototype network for few-shot remote sensing image scene classification[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5608011. doi: 10.1109/TGRS.2021.3099033.

[37] ZENG Qingjie, GENG Jie, JIANG Wen, et al. IDLN: Iterative distribution learning network for few-shot remote sensing image scene classification[J]. IEEE Geoscience and Remote Sensing Letters, 2022, 19: 8020505. doi: 10.1109/LGRS.2021.3109728.
