A Morphology-guided Decoupled Framework for Oriented SAR Ship Detection


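Abstract: Synthetic Aperture Radar (SAR) is widely used in remote sensing detection because it can observe at any time of day and in all weather. However, annotation accuracy is limited, so mainstream SAR target detection methods mostly rely on horizontal bounding box annotations. As a result, they struggle to estimate target orientation and scale precisely. Weakly supervised learning has advanced angle prediction in optical imagery, but it ignores the imaging geometry peculiar to SAR and therefore generalizes poorly. To address these challenges, this paper proposes a new oriented ship detection framework that incorporates the SAR imaging mechanism. Its core idea is to decouple detection into two independent sub-modules: localization and orientation estimation. The localization module can directly reuse any existing detector trained on horizontal box annotations. The orientation estimation module is trained with full supervision on a purpose-built, morphologically synthesized binary dataset. The framework therefore gives a detector accurate oriented box prediction in a plug-and-play manner, without modifying or retraining the original detector. Experiments on multiple datasets show that the proposed method outperforms existing methods that rely only on horizontal box supervision. In some scenarios it even surpasses fully supervised methods, demonstrating its effectiveness and practical engineering value.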
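The decoupled, plug-and-play arrangement described above can be sketched as a thin wrapper around two interchangeable callables. This is a minimal sketch under stated assumptions, not the authors' implementation:

- `hbb_detector` and `orientation_estimator` are hypothetical stand-ins for the trained localization and orientation modules.
- Otsu thresholding is an assumed binarization rule; the paper only states that candidate patches are binarized.
- `fuse_hbb_with_orientation` is one plausible least-squares way to combine an HBB with a predicted angle and aspect ratio.

```python
import math
import numpy as np


def otsu_threshold(patch):
    """Otsu threshold of an 8-bit grayscale patch (assumed binarization rule)."""
    hist = np.bincount(patch.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                    # weight of the "background" class
    mu = np.cumsum(prob * np.arange(256))      # its cumulative mean
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        between = np.where(denom > 0, (mu[-1] * omega - mu) ** 2 / denom, 0.0)
    return int(np.argmax(between))             # pixels > threshold are foreground


def fuse_hbb_with_orientation(hbb, theta, aspect):
    """Least-squares OBB (cx, cy, w, h, theta) whose axis-aligned extent matches the HBB.

    Assumes theta in radians and w = aspect * h (w along the ship's long axis).
    """
    x1, y1, x2, y2 = hbb
    big_w, big_h = x2 - x1, y2 - y1
    c, s = abs(math.cos(theta)), abs(math.sin(theta))
    # A w x h box rotated by theta spans W = w*c + h*s and H = w*s + h*c;
    # substituting w = aspect * h gives W = a*h and H = b*h.
    a, b = aspect * c + s, aspect * s + c
    h = (big_w * a + big_h * b) / (a * a + b * b)
    return ((x1 + x2) / 2, (y1 + y2) / 2, aspect * h, h, theta)


def detect_oriented(image, hbb_detector, orientation_estimator):
    """Plug-and-play composition: any HBB detector plus an orientation estimator."""
    results = []
    for x1, y1, x2, y2, score in hbb_detector(image):
        patch = image[int(y1):int(y2), int(x1):int(x2)]
        binary = (patch > otsu_threshold(patch)).astype(np.uint8)
        theta, aspect = orientation_estimator(binary)
        results.append((*fuse_hbb_with_orientation((x1, y1, x2, y2), theta, aspect), score))
    return results


# Usage with toy stand-ins for the two hypothetical modules.
img = np.zeros((64, 64), dtype=np.uint8)
img[20:40, 10:50] = 200
dets = detect_oriented(img,
                       hbb_detector=lambda im: [(10, 20, 50, 40, 0.9)],
                       orientation_estimator=lambda mask: (math.radians(30), 4.0))
```

An explicit aspect-ratio estimate helps the fusion step. Solving W = w·c + h·s and H = w·s + h·c for w and h alone is singular at θ = 45°, where c = s. Fixing w = aspect·h instead keeps the fit well posed at every angle.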

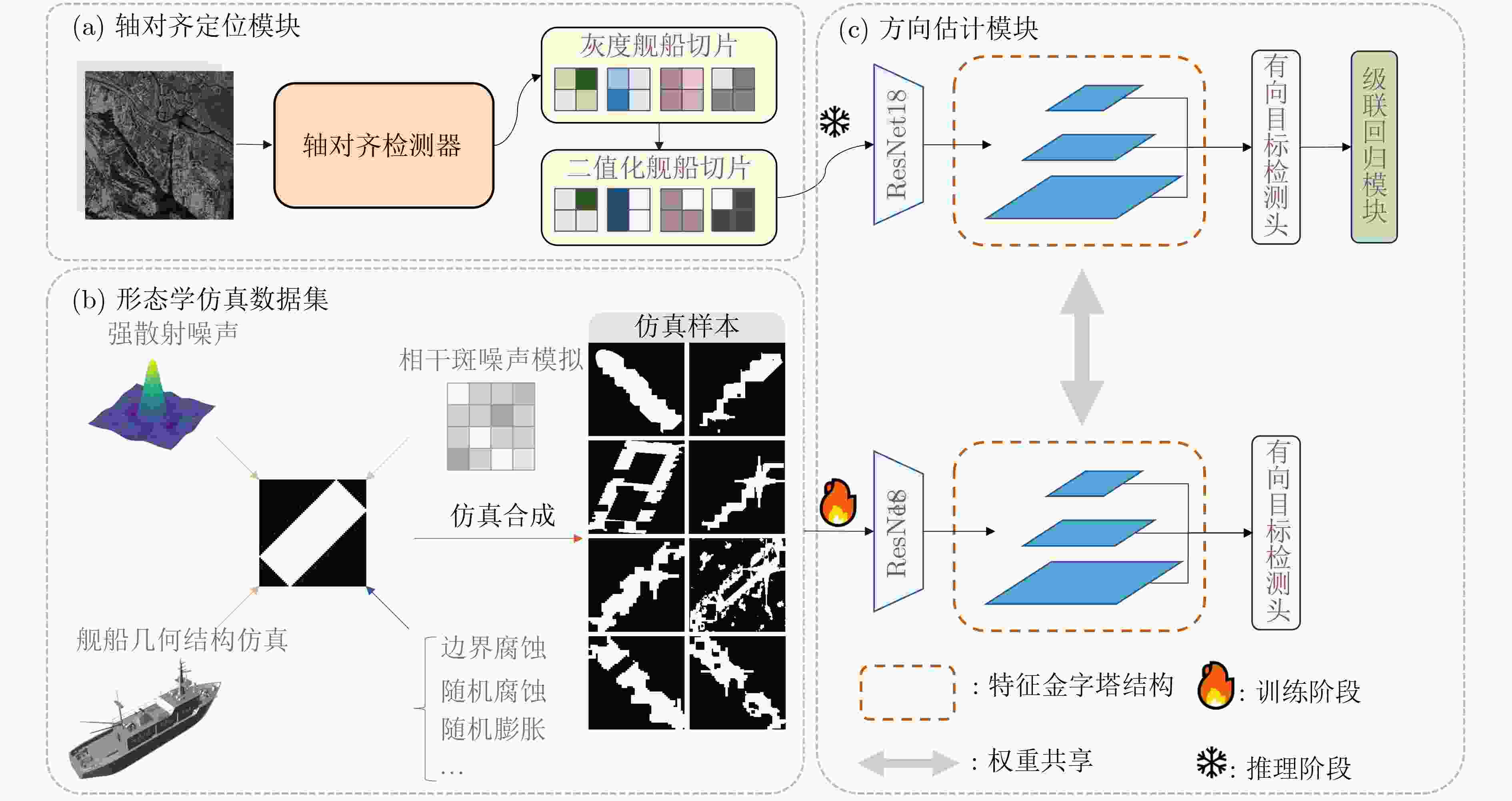

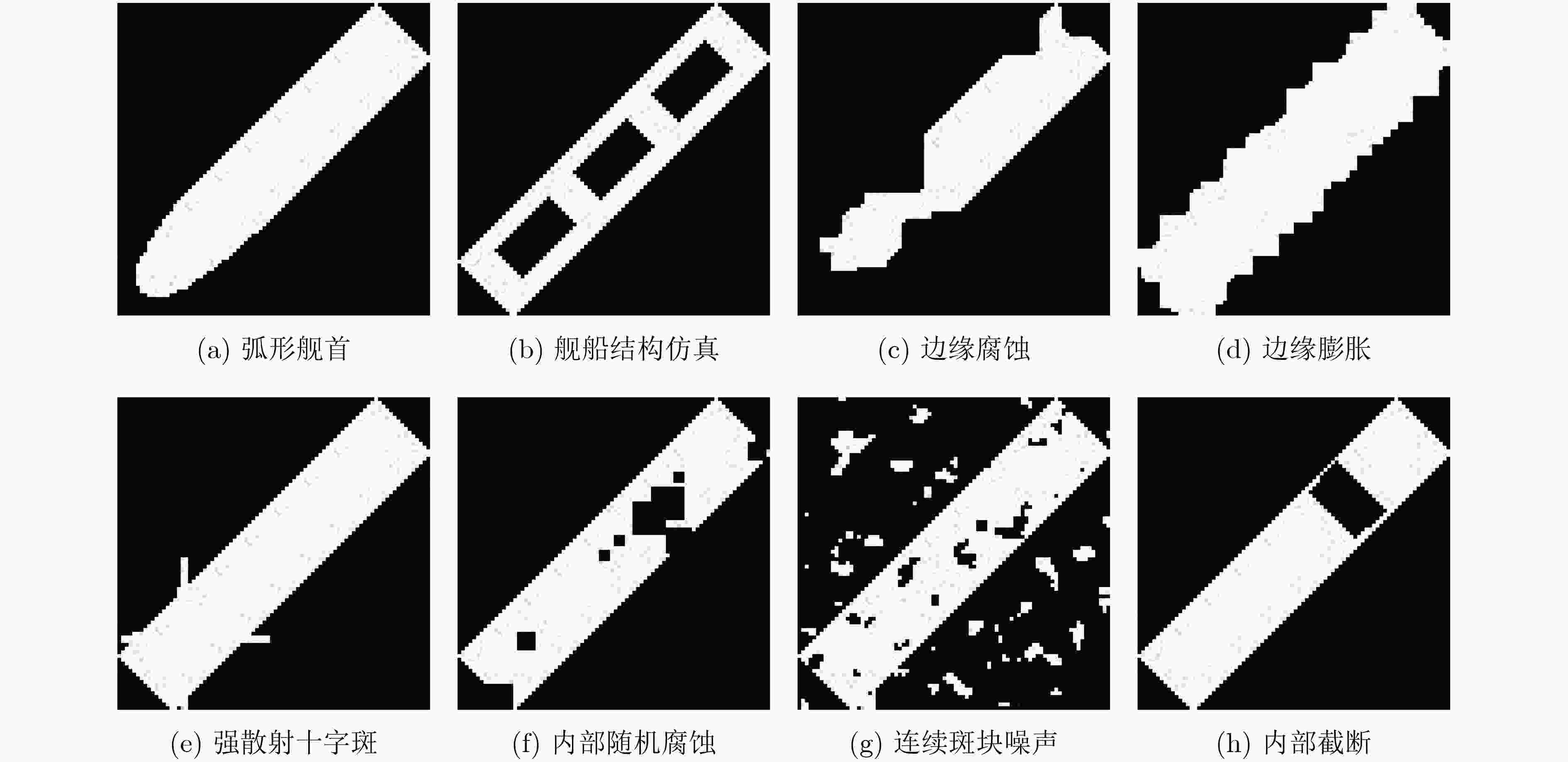

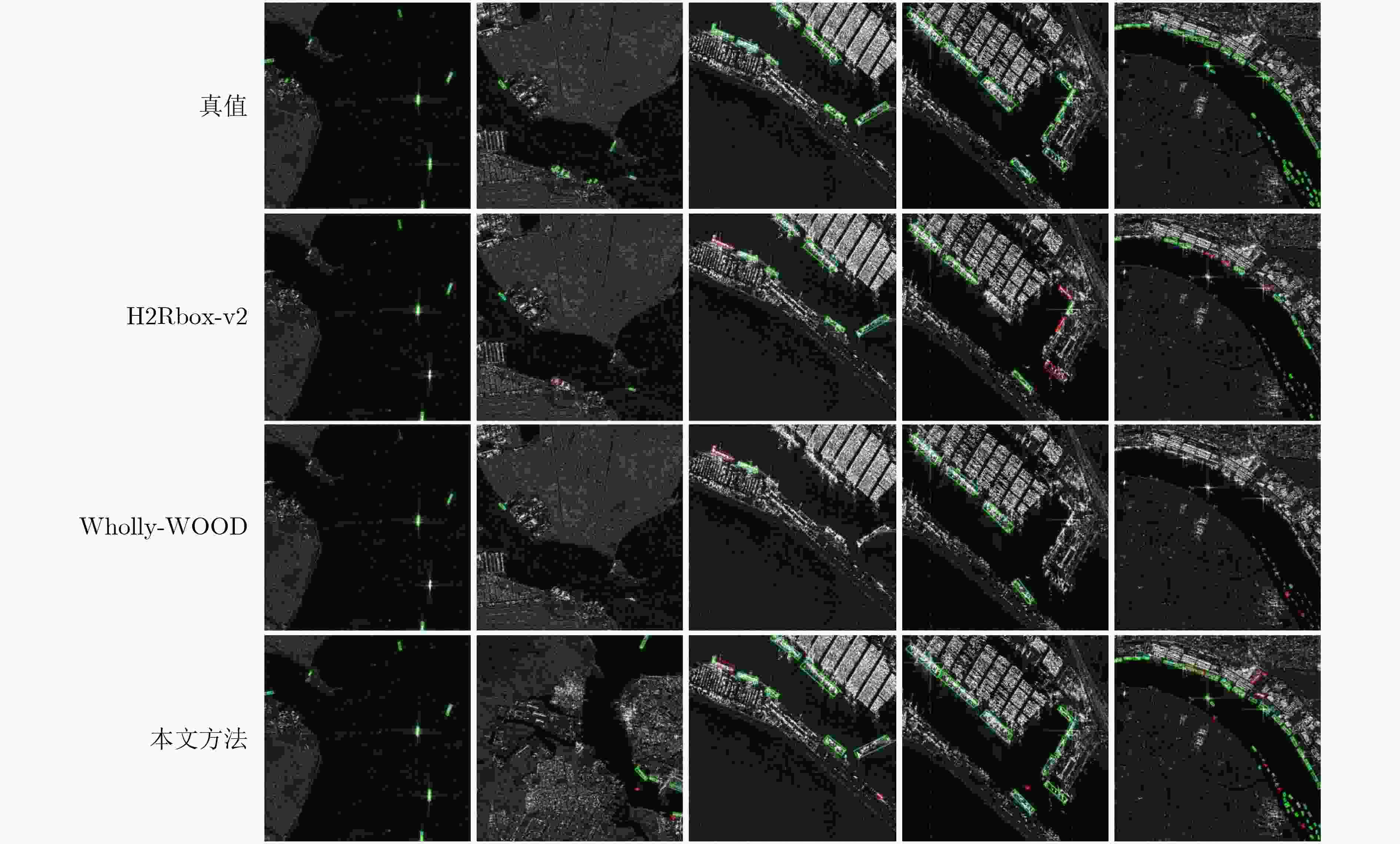

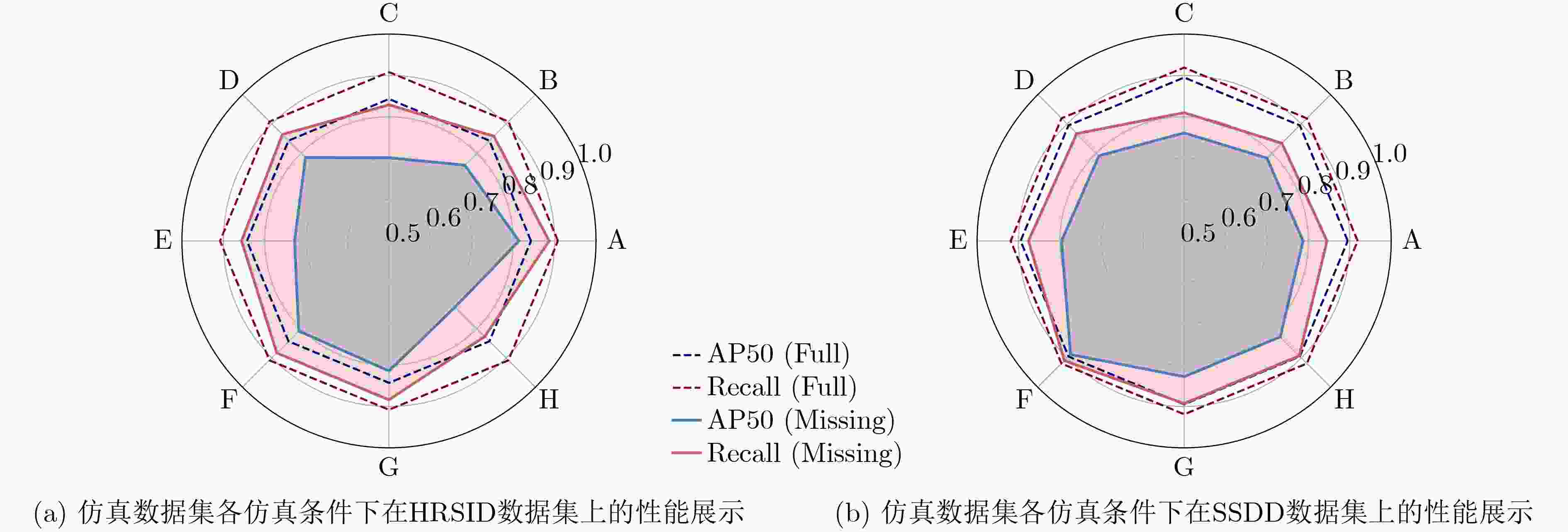

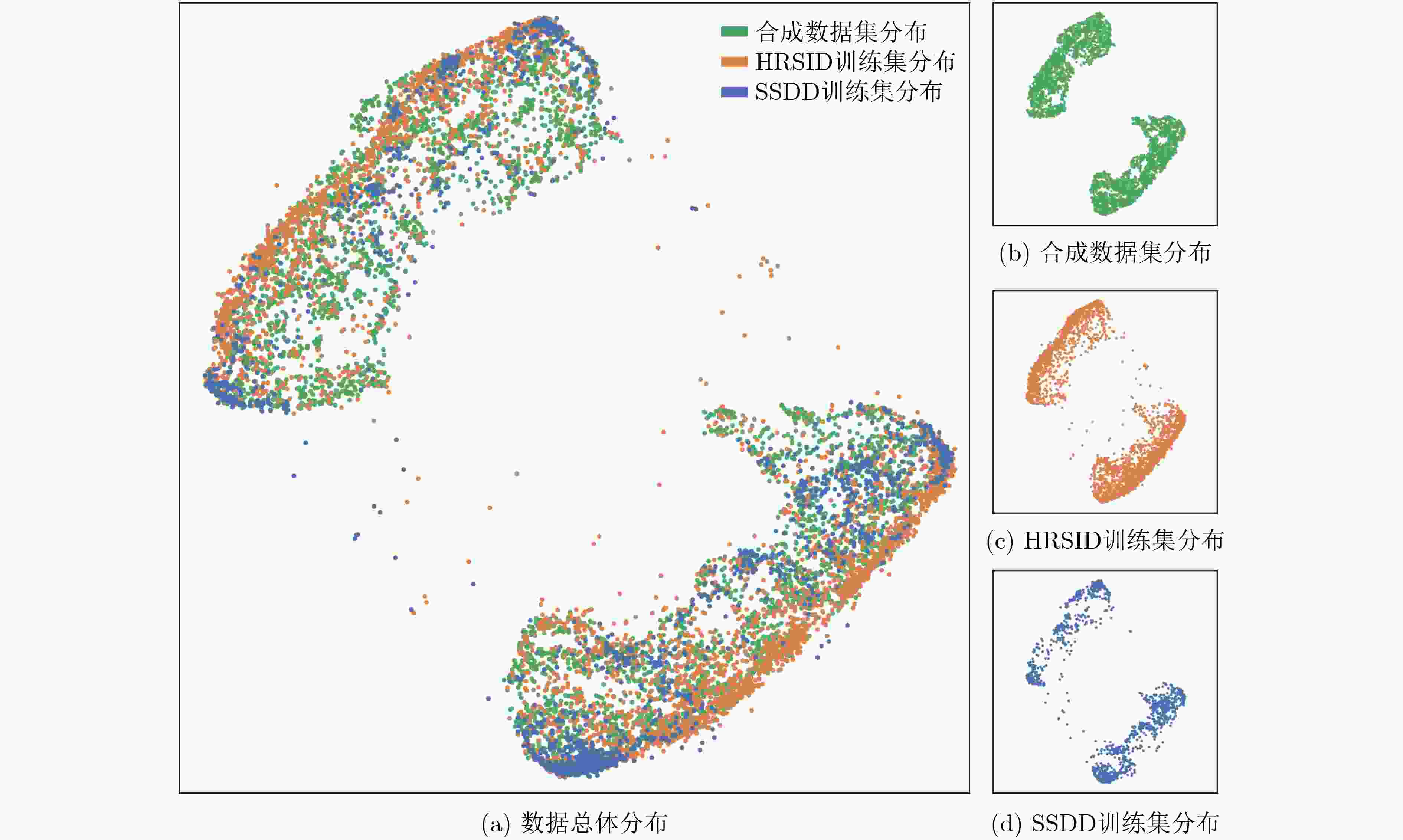

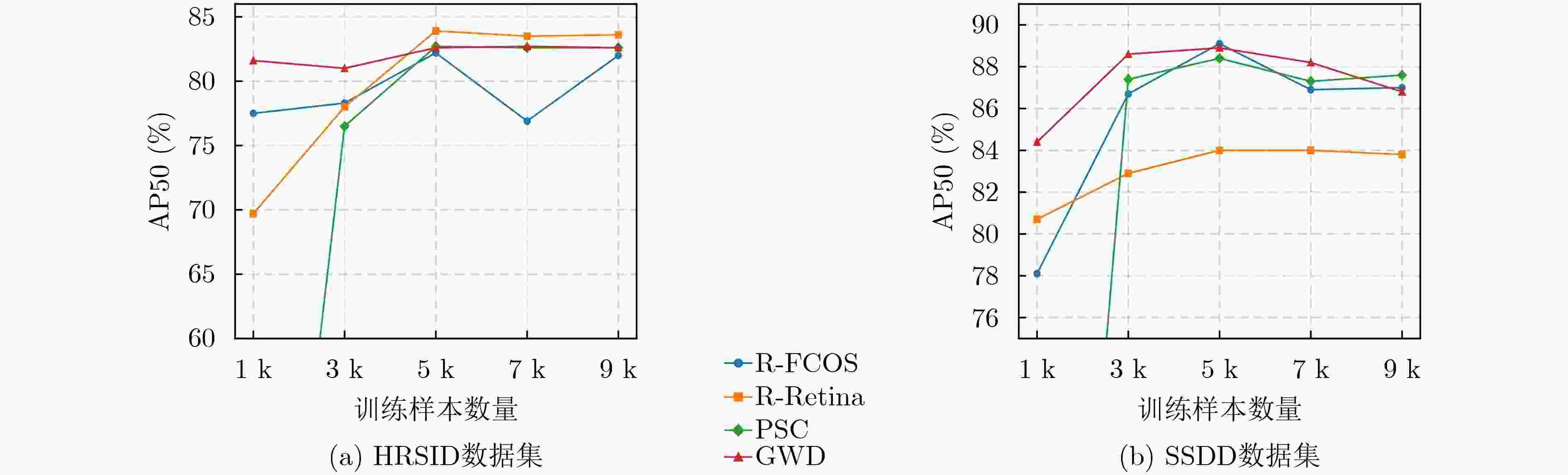

Objective: Synthetic Aperture Radar (SAR) is an indispensable remote sensing technology; however, accurate ship detection in SAR imagery remains challenging. Most deep learning-based detection approaches rely on Horizontal Bounding Box (HBB) annotations, which do not provide enough geometric information to estimate ship orientation and scale. Oriented Bounding Box (OBB) annotations do contain this information. However, reliable OBB labeling of SAR imagery is costly and often inaccurate because of the speckle noise and geometric distortions intrinsic to SAR imaging. Weakly supervised object detection offers a potential alternative, yet approaches designed for optical imagery generalize poorly to the SAR domain. To address these limitations, a simulation-driven decoupled framework is proposed. The goal is to let standard HBB-based detectors produce accurate OBB predictions without structural modification. This is achieved by training a dedicated orientation estimation module on a fully supervised synthetic dataset that captures the essential morphology of SAR ships.

Methods: The proposed framework decomposes oriented ship detection into two sequential sub-tasks: coarse localization and fine-grained orientation estimation (Fig. 1). First, an axis-aligned localization module based on a standard HBB detector, such as YOLOX[4], is trained with the available HBB annotations to identify candidate regions of interest. This stage exploits the high recall of mature detection networks and outputs image patches that may contain ships. Second, to learn orientation without real OBB annotations, a large-scale morphological simulation dataset of binary images is constructed. Dataset generation begins with simple binary rectangles of randomized aspect ratios and known ground-truth orientations.
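The first step of dataset generation can be sketched with NumPy: render a binary rectangle with a randomized aspect ratio and a known orientation. Several details are illustrative assumptions rather than details from the paper:

- the sampling ranges;
- the 64-pixel canvas;
- the (cos 2θ, sin 2θ) target encoding.

The double-angle encoding is a common way to make a π-periodic axis direction continuous for regression.

```python
import numpy as np


def render_rotated_rect(size, cx, cy, length, width, theta):
    """Binary image of a filled rectangle whose long axis makes angle theta with +x.

    Image convention: x = column index, y = row index (pointing down).
    """
    ys, xs = np.mgrid[0:size, 0:size].astype(float)
    dx, dy = xs - cx, ys - cy
    along = dx * np.cos(theta) + dy * np.sin(theta)      # coordinate along the long axis
    across = -dx * np.sin(theta) + dy * np.cos(theta)    # coordinate across it
    inside = (np.abs(along) <= length / 2) & (np.abs(across) <= width / 2)
    return inside.astype(np.uint8)


def sample_rectangle(rng, size=64, aspect_range=(2.0, 8.0), length_range=(0.4, 0.8)):
    """One clean synthetic sample with exact labels (ranges are illustrative guesses)."""
    aspect = rng.uniform(*aspect_range)
    theta = rng.uniform(0.0, np.pi)                      # a ship axis repeats every pi
    length = rng.uniform(*length_range) * size
    centre = (size - 1) / 2
    image = render_rotated_rect(size, centre, centre, length, length / aspect, theta)
    # Assumed regression target: (cos 2θ, sin 2θ) is continuous across θ = 0 ≡ π.
    target = np.array([np.cos(2 * theta), np.sin(2 * theta), aspect])
    return image, theta, aspect, target


def decode_angle(target):
    """Recover θ in [0, π) from the (cos 2θ, sin 2θ) part of a target or prediction."""
    return float(np.arctan2(target[1], target[0]) / 2 % np.pi)


rng = np.random.default_rng(0)
image, theta, aspect, target = sample_rectangle(rng)
```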
To approximate the appearance of binarized SAR ship targets, morphological operations are applied to introduce boundary ambiguity; these include edge-level and region-level erosion and dilation. Structured, cross-shaped strong-scattering noise is then injected to simulate SAR-specific artifacts. This process yields a synthetic dataset with precise orientation labels. Third, an orientation estimation module based on a lightweight ResNet-18 architecture is trained exclusively on the synthetic dataset. Using only shape and contour information, this module predicts the object orientation and refines the aspect ratio. During inference, the candidate patches produced by the localization module are binarized and passed to the orientation estimation module. The final OBBs are generated by fusing the spatial coordinates of the initial HBBs with the predicted orientation and refined dimensions.

Results and Discussions: The proposed method is evaluated on two public SAR ship detection benchmarks, HRSID[15] and SSDD[16]. Training uses only HBB annotations. Performance is assessed against ground-truth OBBs using Recall (R) and Average Precision at an Intersection over Union (IoU) threshold of 0.5 (AP50). The method outperforms existing weakly supervised approaches and remains competitive with fully supervised methods (Tables 1 and 2). On the HRSID dataset, it achieves an AP50 of 84.3% and a recall of 91.9%. These results exceed those of weakly supervised methods such as H2RBox-v2[7] (56.2% AP50) and the approach of Yue et al.[14] (81.5% AP50). They also surpass several fully supervised detectors, such as R-RetinaNet (72.7% AP50) and S2ANet (80.8% AP50). A similar advantage is observed on the SSDD dataset. There the method obtains an AP50 of 89.4%, which is 2.1 percentage points above the best reported weakly supervised result of 87.3%. Qualitative inspection of the detection outputs supports these quantitative results (Fig. 3).
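Scoring OBB predictions against ground-truth OBBs at AP50 requires the IoU between two rotated boxes. A prediction is typically counted as a true positive when this IoU reaches 0.5. A self-contained way to compute it is Sutherland-Hodgman polygon clipping, sketched below for boxes given as (cx, cy, w, h, θ). The box parameterization and helper names are assumptions, and benchmark toolkits ship their own optimized implementations.

```python
import math


def obb_to_polygon(cx, cy, w, h, theta):
    """Corners of an oriented box, listed counter-clockwise (theta in radians)."""
    c, s = math.cos(theta), math.sin(theta)
    corners = [(w / 2, h / 2), (-w / 2, h / 2), (-w / 2, -h / 2), (w / 2, -h / 2)]
    return [(cx + x * c - y * s, cy + x * s + y * c) for x, y in corners]


def polygon_area(poly):
    """Shoelace formula (positive for counter-clockwise vertex order)."""
    return 0.5 * sum(x1 * y2 - x2 * y1
                     for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]))


def clip_convex(subject, clipper):
    """Sutherland-Hodgman: clip polygon `subject` by the convex CCW polygon `clipper`."""
    def inside(p, a, b):
        # p lies on the left of (or on) the directed edge a -> b
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0

    def intersect(p, q, a, b):
        # point where segment p -> q crosses the infinite line through a and b
        den = (p[0] - q[0]) * (a[1] - b[1]) - (p[1] - q[1]) * (a[0] - b[0])
        if den == 0:                      # parallel; only reachable through round-off
            return q
        t = ((p[0] - a[0]) * (a[1] - b[1]) - (p[1] - a[1]) * (a[0] - b[0])) / den
        return (p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1]))

    output = list(subject)
    for a, b in zip(clipper, clipper[1:] + clipper[:1]):
        if not output:
            break
        points, output = output, []
        for i, cur in enumerate(points):
            prev = points[i - 1]
            if inside(cur, a, b):
                if not inside(prev, a, b):
                    output.append(intersect(prev, cur, a, b))
                output.append(cur)
            elif inside(prev, a, b):
                output.append(intersect(prev, cur, a, b))
    return output


def rotated_iou(box_a, box_b):
    """IoU of two oriented boxes given as (cx, cy, w, h, theta)."""
    pa, pb = obb_to_polygon(*box_a), obb_to_polygon(*box_b)
    overlap = clip_convex(pa, pb)
    inter = abs(polygon_area(overlap)) if len(overlap) >= 3 else 0.0
    union = abs(polygon_area(pa)) + abs(polygon_area(pb)) - inter
    return inter / union if union > 0 else 0.0
```

For example, two 2 × 2 squares offset by one unit overlap in a 1 × 2 strip, so their IoU is 2 / (4 + 4 − 2) = 1/3. That would not count as a match at the 0.5 threshold.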
Relative to other weakly supervised approaches, the proposed method misses fewer targets, particularly small and densely clustered ships. This robustness is attributed to two factors: the high recall of the first-stage localization network and the reliable orientation cues learned from the morphological dataset. Additional experiments examine key methodological aspects. A UMAP[24] visualization of high-dimensional features from synthetic and real data (Fig. 5) reveals substantial overlap and similar manifold structures across the two domains. This indicates strong morphological consistency. An ablation study of the morphological components (Fig. 4) further shows that each simulation element contributes incrementally to performance, supporting the design of the high-fidelity simulation process.

Conclusions: A morphology-guided decoupled framework for oriented ship detection in SAR imagery is presented. By separating localization from orientation estimation, it enables standard HBB-based detectors to perform accurate oriented detection without retraining. The central contribution is a fully supervised morphological simulation dataset. From it, a dedicated module learns robust orientation features from structural contours, which mitigates the annotation challenges of real SAR data. Experimental results show that the proposed approach substantially outperforms existing HBB-supervised methods and remains competitive with fully supervised alternatives. Its plug-and-play design highlights its practical applicability.
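The simulation elements ablated in Fig. 4 correspond to the degradation steps in the Methods: region-level and edge-level erosion and dilation, followed by structured cross-shaped scattering noise. They can be sketched with plain NumPy. The paper does not specify the operators, so the code below is a hypothetical interpretation:

- "region-level" means repeated 3×3 dilation or erosion of the whole silhouette;
- "edge-level" means randomly toggling pixels in the band between the dilated and eroded masks;
- the cross noise draws horizontal and vertical streaks through random target pixels.

Arm lengths and probabilities are chosen arbitrarily.

```python
import numpy as np


def _neighbourhood(mask):
    """Stack the nine 3x3-neighbourhood shifts of a binary mask (zero padding outside)."""
    padded = np.pad(mask, 1)
    h, w = mask.shape
    return np.stack([padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])


def dilate(mask):
    return _neighbourhood(mask).max(axis=0)


def erode(mask):
    return _neighbourhood(mask).min(axis=0)


def add_cross_noise(mask, rng, n_crosses=1, max_arm=12):
    """Draw cross-shaped bright streaks through random target pixels (an assumed
    stand-in for strong-scatterer sidelobes along the two image axes)."""
    out = mask.copy()
    h, w = out.shape
    ys, xs = np.nonzero(mask)
    for _ in range(n_crosses if len(ys) else 0):
        i = rng.integers(len(ys))
        y, x = int(ys[i]), int(xs[i])
        arm = int(rng.integers(2, max_arm + 1))
        out[y, max(0, x - arm):min(w, x + arm + 1)] = 1   # horizontal arm
        out[max(0, y - arm):min(h, y + arm + 1), x] = 1   # vertical arm
    return out


def degrade(mask, rng, flip_prob=0.3):
    """Hypothetical degradation: region-level then edge-level morphology, then cross noise."""
    out = mask.copy()
    # Region-level: grow or shrink the whole silhouette by 0-2 pixels.
    op = dilate if rng.random() < 0.5 else erode
    for _ in range(int(rng.integers(0, 3))):
        out = op(out)
    # Edge-level: randomly toggle pixels in the boundary band (dilation minus erosion).
    band = (dilate(out) - erode(out)).astype(bool)
    flips = band & (rng.random(out.shape) < flip_prob)
    out = np.where(flips, 1 - out, out).astype(np.uint8)
    return add_cross_noise(out, rng)
```

Each step is a separate function, so each simulation element can be switched off independently. This is the kind of toggle an ablation like the one in Fig. 4 needs.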

Table 1 Performance comparison between the proposed method and typical detection methods on the HRSID dataset (%)

Table 2 Performance comparison between the proposed method and typical detection methods on the SSDD dataset (%)

Table 3 Effect of different axis-aligned detectors on the final accuracy (%)

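| Dataset | Axis-aligned detector | AP50 (HBBs) | AP50 (OBBs) | R (HBBs) | R (OBBs) |
|---|---|---|---|---|---|
| SSDD | FCOS[22] | 81.9 | 78.1 | 91.2 | 88.8 |
| SSDD | CenterNet[23] | 90.2 | 88.5 | 96.7 | 94.7 |
| SSDD | Faster R-CNN[3] | 90.0 | 88.1 | 93.0 | 90.1 |
| SSDD | YOLOX[4] | 90.3 | 89.4 | 99.1 | 95.6 |
| HRSID | FCOS[22] | 78.4 | 71.2 | 87.6 | 80.3 |
| HRSID | CenterNet[23] | 86.6 | 77.5 | 92.3 | 85.1 |
| HRSID | Faster R-CNN[3] | 79.7 | 70.3 | 83.5 | 78.7 |
| HRSID | YOLOX[4] | 89.0 | 84.3 | 96.1 | 90.8 |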
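The gap between the HBB and OBB AP50 columns of Table 3 quantifies how much accuracy is lost when the orientation stage converts each axis-aligned detection into an oriented one. The short computation below uses only the values in the table; the helper names are illustrative.

```python
# Table 3 values (%): (HBB AP50, OBB AP50, HBB R, OBB R), copied from the table.
TABLE3 = {
    "SSDD": {
        "FCOS": (81.9, 78.1, 91.2, 88.8),
        "CenterNet": (90.2, 88.5, 96.7, 94.7),
        "Faster R-CNN": (90.0, 88.1, 93.0, 90.1),
        "YOLOX": (90.3, 89.4, 99.1, 95.6),
    },
    "HRSID": {
        "FCOS": (78.4, 71.2, 87.6, 80.3),
        "CenterNet": (86.6, 77.5, 92.3, 85.1),
        "Faster R-CNN": (79.7, 70.3, 83.5, 78.7),
        "YOLOX": (89.0, 84.3, 96.1, 90.8),
    },
}


def ap50_drop(table):
    """Percentage points of AP50 lost when HBB predictions become OBB predictions."""
    return {ds: {det: round(v[0] - v[1], 1) for det, v in rows.items()}
            for ds, rows in table.items()}


def best_detector(rows, column):
    """Name of the detector with the highest value in the given column index."""
    return max(rows, key=lambda det: rows[det][column])


drops = ap50_drop(TABLE3)
# drops["SSDD"]  -> {'FCOS': 3.8, 'CenterNet': 1.7, 'Faster R-CNN': 1.9, 'YOLOX': 0.9}
# drops["HRSID"] -> {'FCOS': 7.2, 'CenterNet': 9.1, 'Faster R-CNN': 9.4, 'YOLOX': 4.7}
```

On both datasets, YOLOX has the best HBB and OBB AP50 and the smallest drop (0.9 points on SSDD, 4.7 on HRSID). This is consistent with the final oriented accuracy depending strongly on the quality of the first-stage detector.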

[1] DI BISCEGLIE M and GALDI C. CFAR detection of extended objects in high-resolution SAR images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2005, 43(4): 833–843. doi: 10.1109/TGRS.2004.843190.
[2] LENG Xiangguang, JI Kefeng, YANG Kai, et al. A bilateral CFAR algorithm for ship detection in SAR images[J]. IEEE Geoscience and Remote Sensing Letters, 2015, 12(7): 1536–1540. doi: 10.1109/LGRS.2015.2412174.
[3] REN Shaoqing, HE Kaiming, GIRSHICK R, et al. Faster R-CNN: Towards real-time object detection with region proposal networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(6): 1137–1149. doi: 10.1109/TPAMI.2016.2577031.
[4] GE Zheng, LIU Songtao, WANG Feng, et al. YOLOX: Exceeding YOLO series in 2021[EB/OL]. https://arxiv.org/abs/2107.08430, 2021.
[5] CHEN Yuming, YUAN Xinbin, WANG Jiabao, et al. YOLO-MS: Rethinking multi-scale representation learning for real-time object detection[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2025, 47(6): 4240–4252. doi: 10.1109/TPAMI.2025.3538473.
[6] DOSOVITSKIY A, BEYER L, KOLESNIKOV A, et al. An image is worth 16x16 words: Transformers for image recognition at scale[C]. 9th International Conference on Learning Representations, Austria, 2021.
[7] YU Yi, YANG Xue, LI Qingyun, et al. H2RBox-v2: Incorporating symmetry for boosting horizontal box supervised oriented object detection[C]. The 37th International Conference on Neural Information Processing Systems, New Orleans, USA, 2023: 2581.
[8] YU Yi, YANG Xue, LI Yansheng, et al. Wholly-WOOD: Wholly leveraging diversified-quality labels for weakly-supervised oriented object detection[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2025, 47(6): 4438–4454. doi: 10.1109/TPAMI.2025.3542542.
[9] HU Fengming, XU Feng, WANG R, et al. Conceptual study and performance analysis of tandem multi-antenna spaceborne SAR interferometry[J]. Journal of Remote Sensing, 2024, 4: 0137. doi: 10.34133/remotesensing.0137.
[10] YOMMY A S, LIU Rongke, and WU Shuang. SAR image despeckling using refined Lee filter[C]. 2015 7th International Conference on Intelligent Human-Machine Systems and Cybernetics, Hangzhou, China, 2015: 260–265. doi: 10.1109/IHMSC.2015.236.
[11] KANG Yuzhuo, WANG Zhirui, ZUO Haoyu, et al. ST-Net: Scattering topology network for aircraft classification in high-resolution SAR images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2023, 61: 5202117. doi: 10.1109/TGRS.2023.3236987.
[12] ZHANG Yipeng, LU Dongdong, QIU Xiaolan, et al. Scattering-point-guided RPN for oriented ship detection in SAR images[J]. Remote Sensing, 2023, 15(5): 1411. doi: 10.3390/rs15051411.
[13] PAN Dece, GAO Xin, DAI Wei, et al. SRT-Net: Scattering region topology network for oriented ship detection in large-scale SAR images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2024, 62: 5202318. doi: 10.1109/TGRS.2024.3351366.
[14] YUE Tingxuan, ZHANG Yanmei, WANG Jin, et al. A weak supervision learning paradigm for oriented ship detection in SAR image[J]. IEEE Transactions on Geoscience and Remote Sensing, 2024, 62: 5207812. doi: 10.1109/TGRS.2024.3375069.
[15] WEI Shunjun, ZENG Xiangfeng, QU Qizhe, et al. HRSID: A high-resolution SAR images dataset for ship detection and instance segmentation[J]. IEEE Access, 2020, 8: 120234–120254. doi: 10.1109/ACCESS.2020.3005861.
[16] ZHANG Tianwen, ZHANG Xiaoling, LI Jianwei, et al. SAR ship detection dataset (SSDD): Official release and comprehensive data analysis[J]. Remote Sensing, 2021, 13(18): 3690. doi: 10.3390/rs13183690.
[17] ZHOU Yue, YANG Xue, ZHANG Gefan, et al. MMRotate: A rotated object detection benchmark using PyTorch[C]. The 30th ACM International Conference on Multimedia, Lisboa, Portugal, 2022: 7331–7334. doi: 10.1145/3503161.3548541.
[18] LIN T Y, GOYAL P, GIRSHICK R, et al. Focal loss for dense object detection[C]. 2017 IEEE International Conference on Computer Vision, Venice, Italy, 2017: 2999–3007. doi: 10.1109/ICCV.2017.324.
[19] YANG Xue, ZHANG Gefan, YANG Xiaojiang, et al. Detecting rotated objects as Gaussian distributions and its 3-D generalization[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023, 45(4): 4335–4354. doi: 10.1109/TPAMI.2022.3197152.
[20] LI Jianfeng, CHEN Mingxu, HOU Siyuan, et al. An improved S2A-net algorithm for ship object detection in optical remote sensing images[J]. Remote Sensing, 2023, 15(18): 4559. doi: 10.3390/rs15184559.
[21] DING Jian, XUE Nan, LONG Yang, et al. Learning RoI transformer for oriented object detection in aerial images[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, USA, 2019: 2844–2853. doi: 10.1109/CVPR.2019.00296.
[22] TIAN Zhi, SHEN Chunhua, CHEN Hao, et al. FCOS: A simple and strong anchor-free object detector[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(4): 1922–1933. doi: 10.1109/TPAMI.2020.3032166.
[23] DUAN Kaiwen, BAI Song, XIE Lingxi, et al. CenterNet++ for object detection[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024, 46(5): 3509–3521. doi: 10.1109/TPAMI.2023.3342120.
[24] HEALY J and MCINNES L. Uniform manifold approximation and projection[J]. Nature Reviews Methods Primers, 2024, 4(1): 82. doi: 10.1038/s43586-024-00363-x.
