Multiscale Fractional Information Potential Field and Dynamic Gradient-Guided Energy Modeling for SAR and Multispectral Image Fusion

-

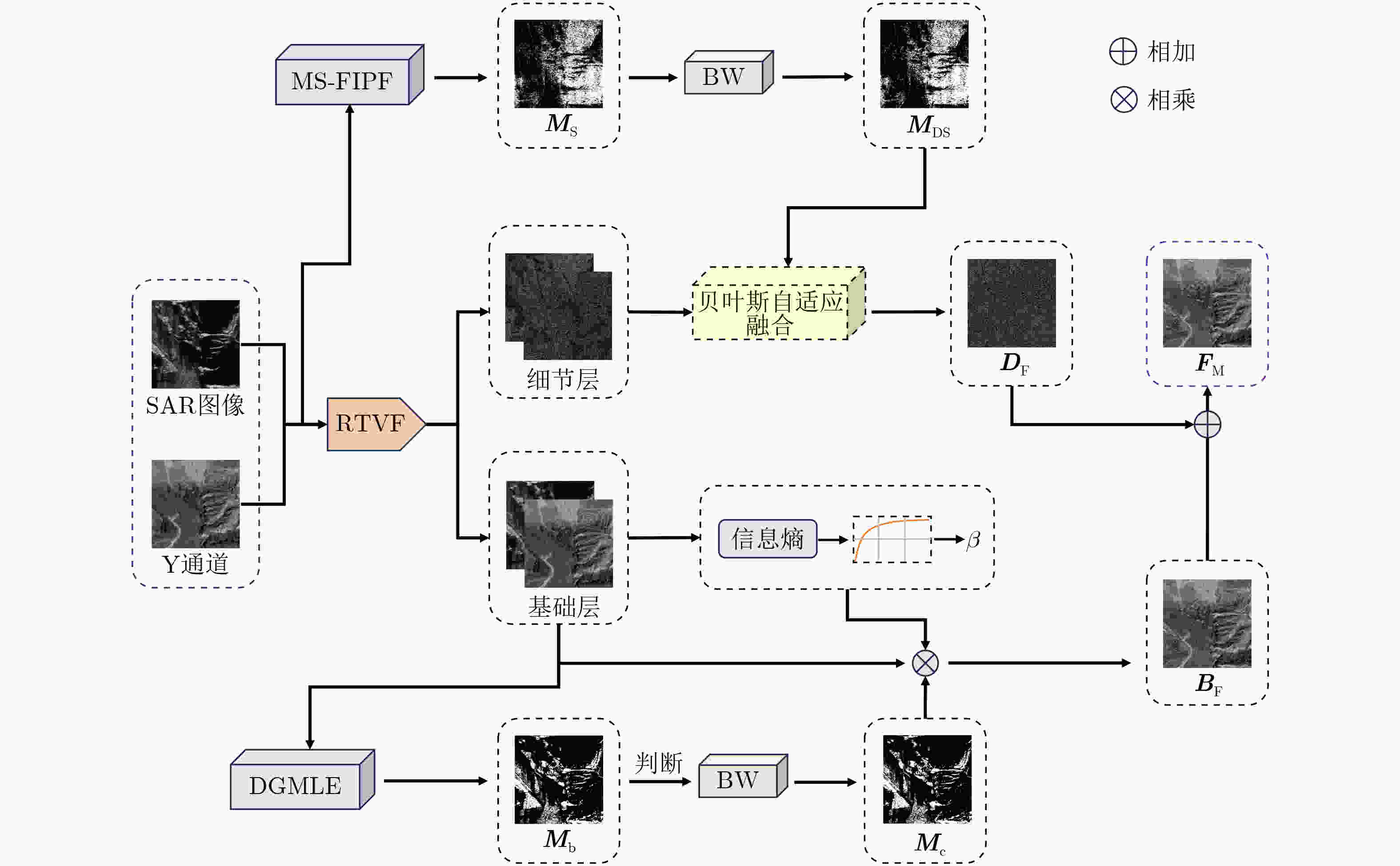

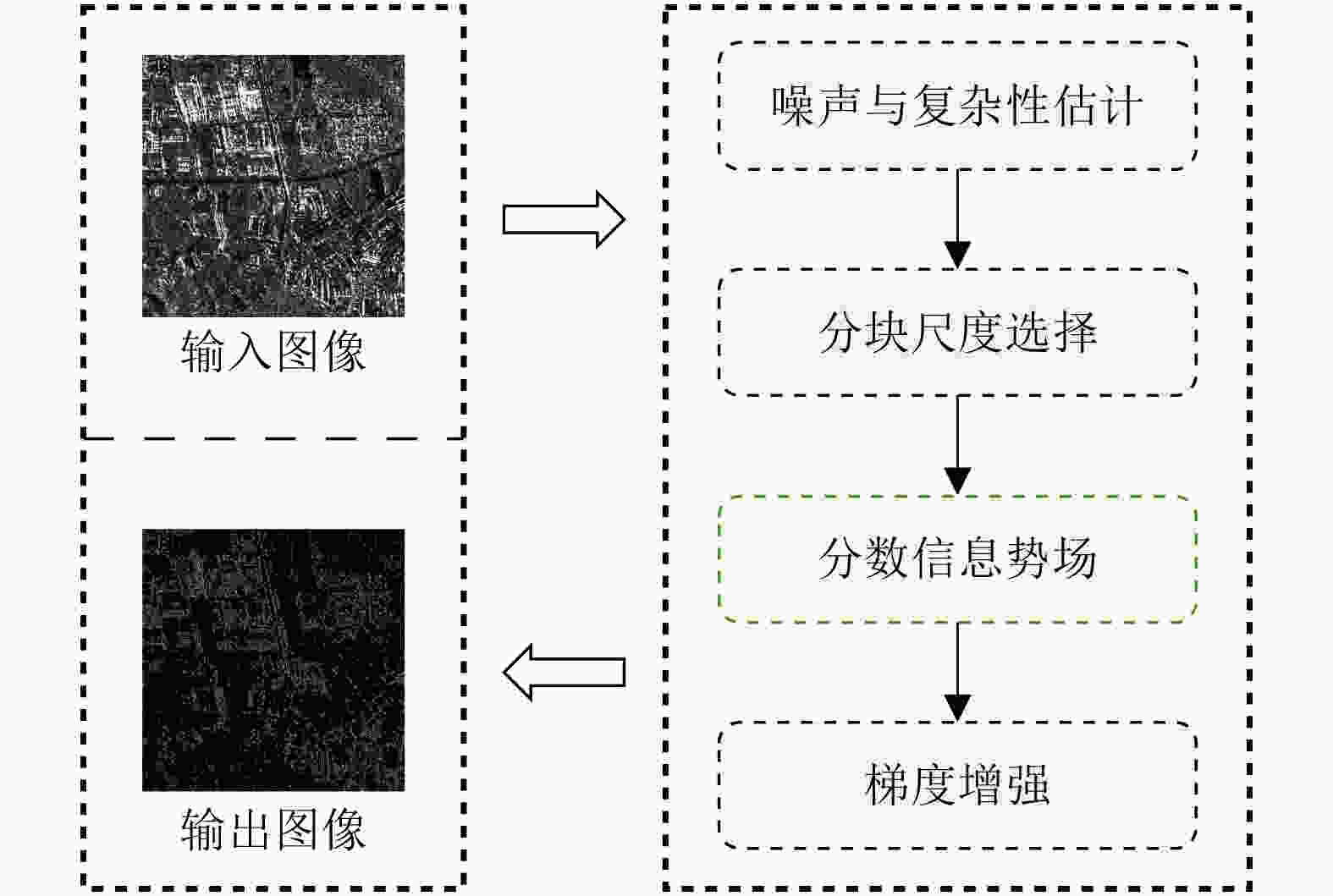

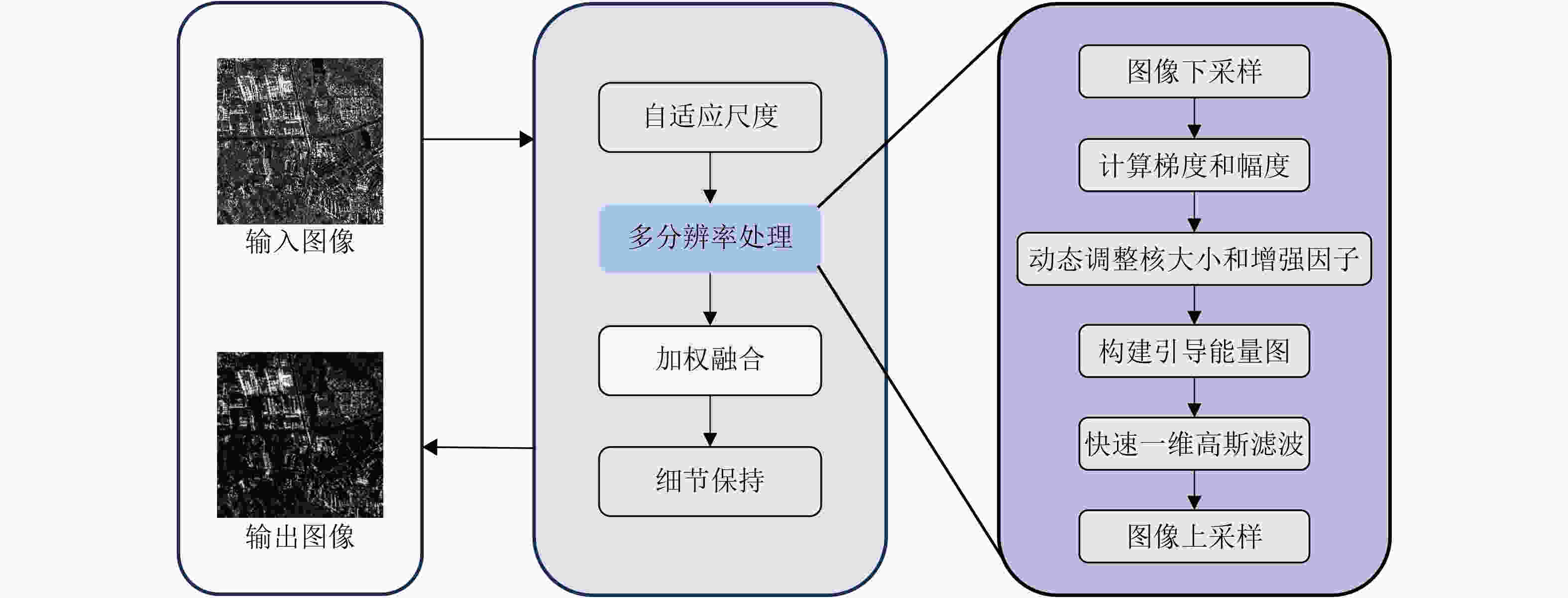

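Summary: Fusion of Synthetic Aperture Radar (SAR) and multispectral images is important in remote sensing applications. However, existing methods often suffer from structural and spectral distortion caused by speckle noise, strong scattering, and modality differences. To address these problems, this paper proposes a robust fusion method that combines a multiscale fractional information potential field with dynamic gradient-guided energy modeling. First, a multiscale fractional information potential field saliency detection method is designed; it combines a local entropy-driven adaptive scale mechanism, Fourier-domain fractional-order modeling, and a gradient enhancement strategy to extract salient structures in the detail layers stably. Second, to handle inter-modality noise differences and inconsistent structural responses, a local variance-aware dynamic regularization term is proposed, and a detail fusion model is built on the Bayesian minimum mean square error criterion to improve structural consistency and noise robustness. Furthermore, a gradient-guided multiresolution structural energy modeling method is proposed for base-layer feature extraction, which strengthens the preservation of geometric structure. Finally, a joint driving mechanism based on information entropy and root mean square error is designed to adaptively balance the contribution of SAR scattering information, ensuring spectral harmony and visual consistency in the fused image. Experimental results show that the overall performance of the proposed method exceeds that of seven mainstream methods on the WHU, YYX, and HQ datasets, with evaluation metrics improving by an average of 29.11% over the second-best method and with clear advantages in structure preservation, spectral fidelity, and noise suppression.

Abstract: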

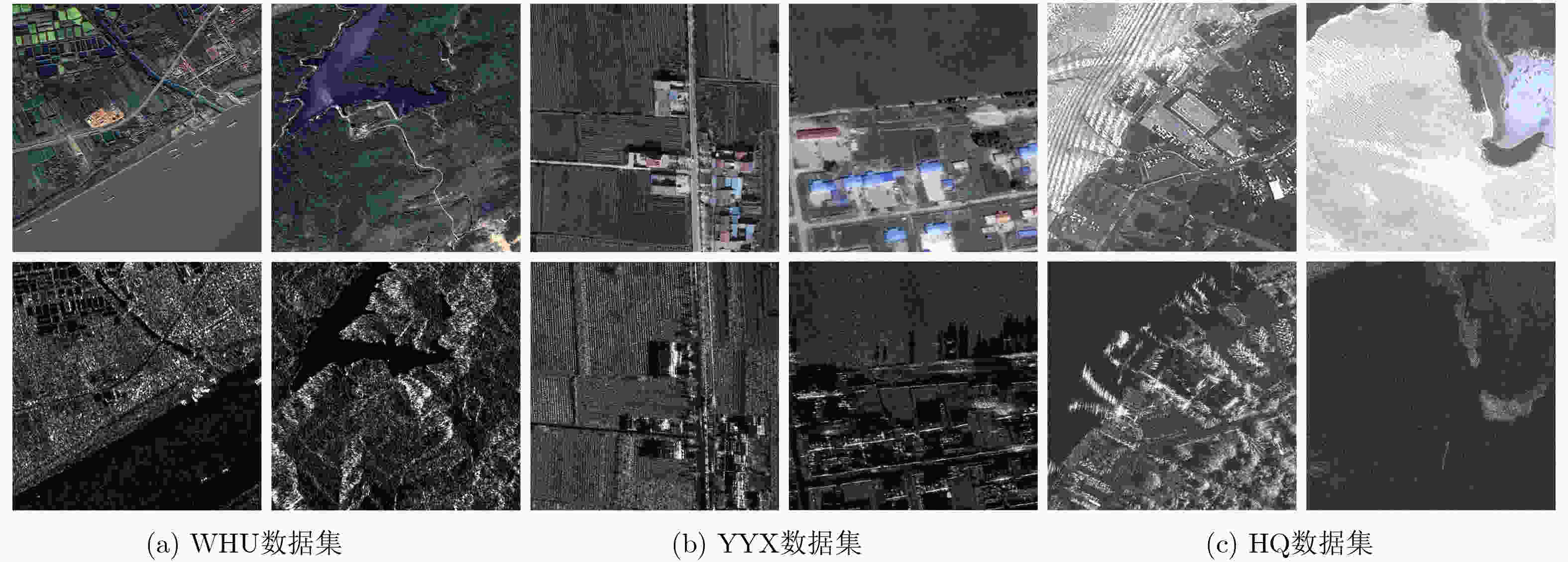

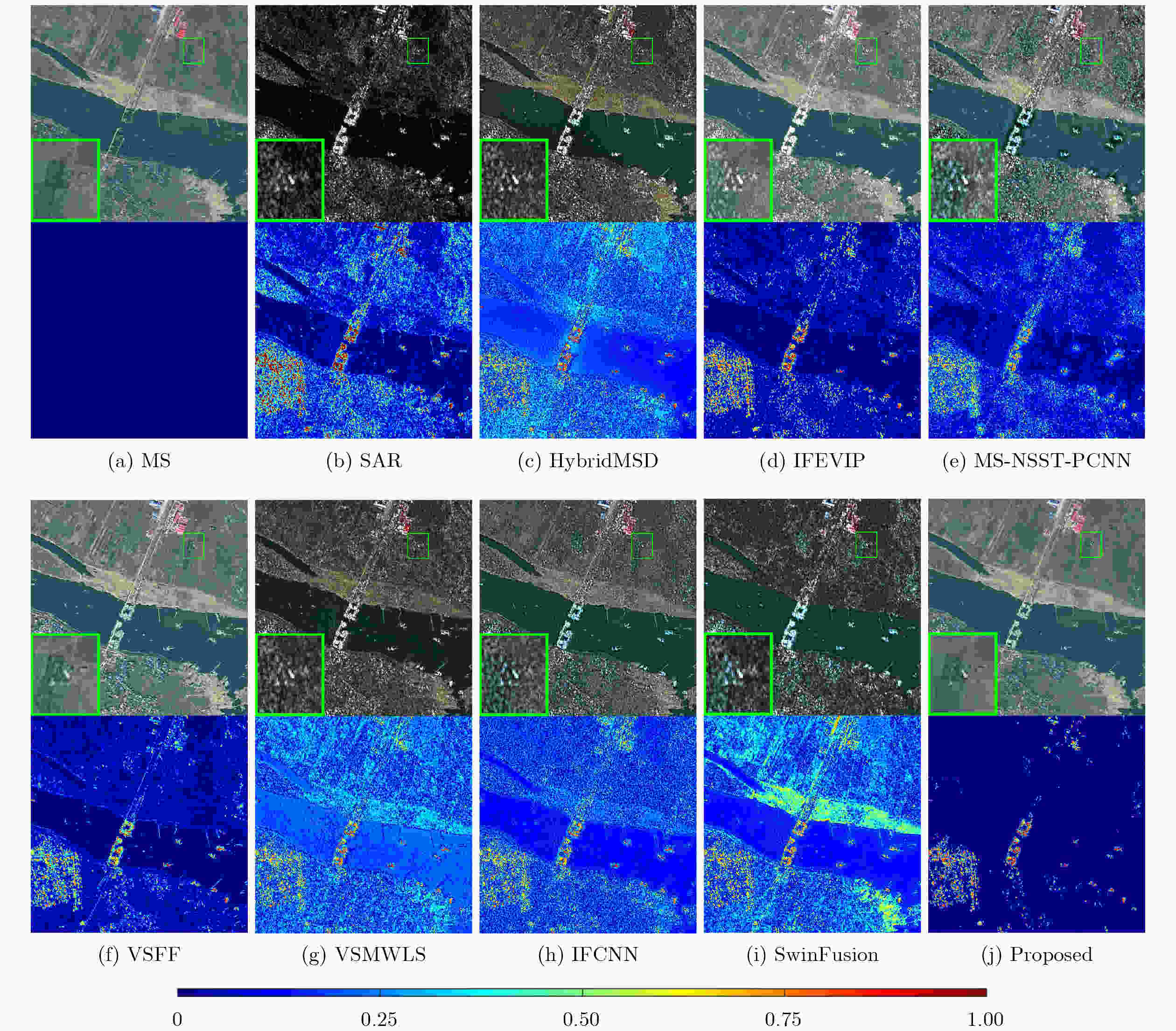

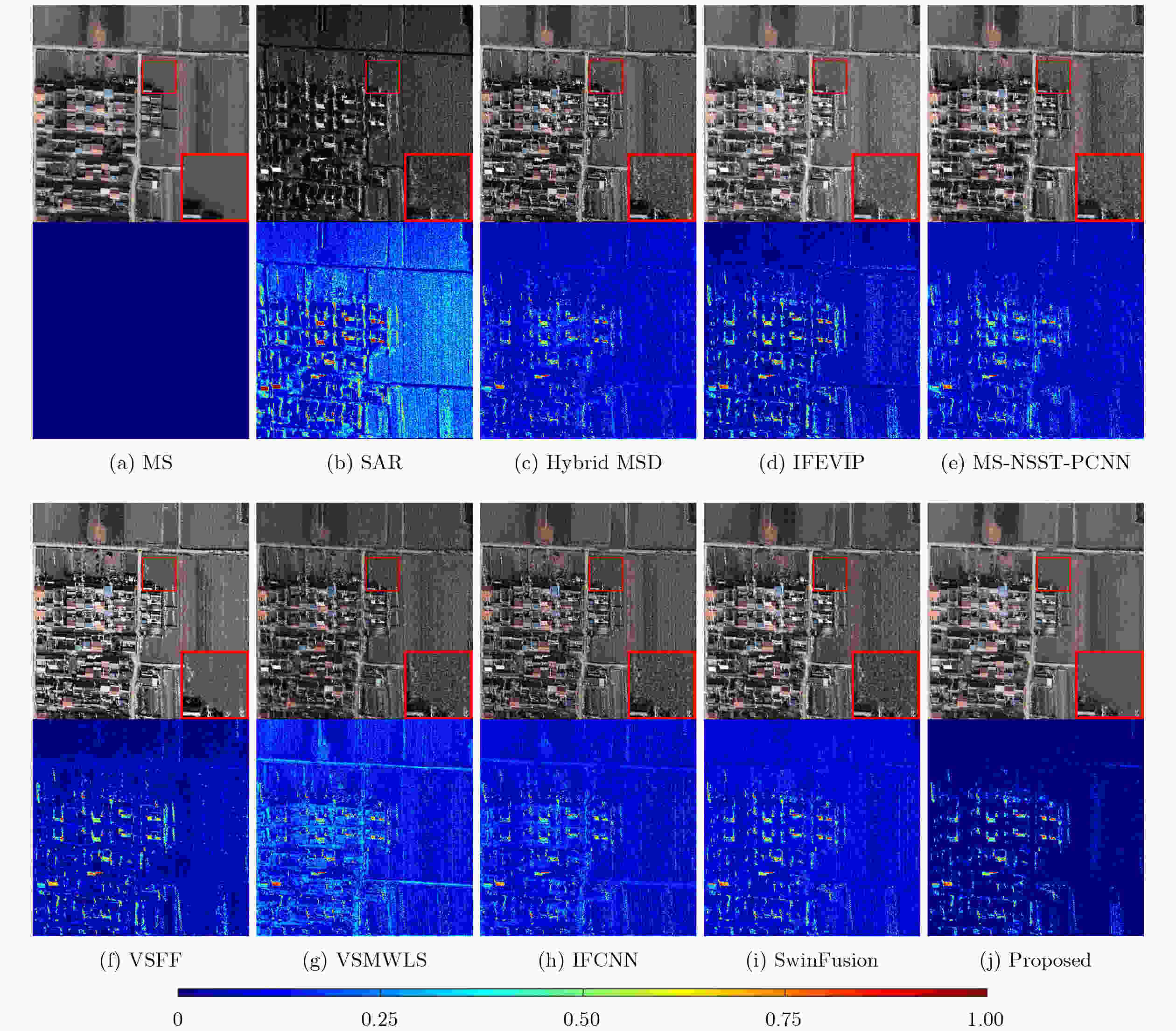

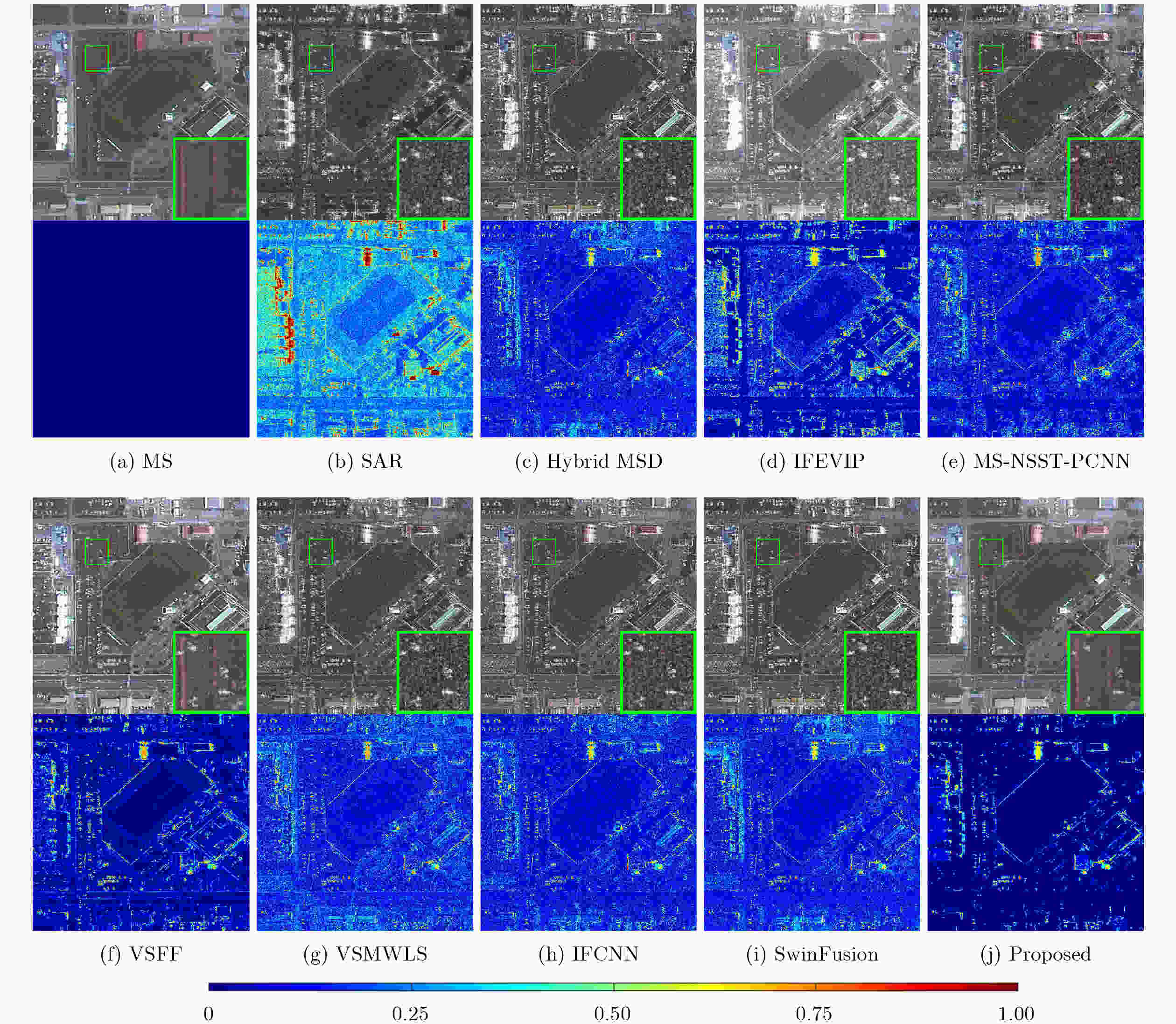

Objective: In remote sensing, fusion of Synthetic Aperture Radar (SAR) and MultiSpectral (MS) images is essential for comprehensive Earth observation. SAR sensors provide all-weather imaging capability and capture dielectric and geometric surface characteristics, although they are inherently affected by multiplicative speckle noise. In contrast, MS sensors provide rich spectral information that supports visual interpretation, although their performance is constrained by atmospheric conditions. The objective of SAR-MS image fusion is to integrate the structural details and scattering characteristics of SAR imagery with the spectral content of MS imagery, thereby improving performance in applications such as land-cover classification and target detection. However, existing fusion approaches, ranging from component substitution and multiscale transformation to Deep Learning (DL), face persistent limitations. Many methods fail to achieve an effective balance between noise suppression and texture preservation, which leads to spectral distortion or residual speckle, particularly in highly heterogeneous regions. DL-based methods, although effective in specific scenarios, exhibit strong dependence on training data and limited generalization across sensors. To address these issues, a robust unsupervised fusion framework is developed that explicitly models modality-specific noise characteristics and structural differences. Fractional calculus and dynamic energy modeling are combined to improve structural preservation and spectral fidelity without relying on large-scale training datasets.

Methods: The proposed framework adopts a multistage fusion strategy based on Relative Total Variation filtering for image decomposition and consists of four core components. First, a MultiScale Fractional Information Potential Field (MS-FIPF) method (Fig. 2) is proposed to extract robust detail layers. A fractional-order kernel is constructed in the Fourier domain to achieve nonlinear frequency weighting, and a local entropy-driven adaptive scale mechanism is applied to enhance edge information while suppressing noise. Second, to address the different noise distributions observed in SAR and MS detail layers, a Bayesian adaptive fusion model based on the minimum mean square error criterion is constructed. A dynamic regularization term is incorporated to adaptively balance structural preservation and noise suppression. Third, for base layers containing low-frequency geometric information, a Dynamic Gradient-Guided Multiresolution Local Energy (DGMLE) method (Fig. 3) is proposed. This method constructs a global entropy-driven multiresolution pyramid and applies a gradient-variance-controlled enhancement factor combined with adaptive Gaussian smoothing to emphasize significant geometric structures. Finally, a Scattering Intensity Adaptive Modulation (SIAM) strategy is applied through a nonlinear mapping regulated by joint entropy and root mean square error, enabling adaptive adjustment of SAR scattering contributions to maintain visual and spectral consistency.

Results and Discussions: The proposed framework is evaluated on the WHU, YYX, and HQ datasets, which represent different spatial resolutions and scene complexities, and is compared with seven state-of-the-art fusion methods. Qualitative comparisons (Figs. 5–7) show that several existing approaches, including hybrid multiscale decomposition and image fusion convolutional neural networks, exhibit limited noise modeling capability. This limitation results in spectral distortion and detail blurring caused by SAR speckle interference. Methods based on infrared feature extraction and visual information preservation also show image whitening and contrast degradation due to excessive scattering feature injection. In contrast, the proposed method effectively filters redundant SAR noise through multiscale fractional denoising and adaptive scattering modulation, while preserving MS spectral consistency and salient SAR geometric structures. Improved visual clarity and detail preservation are observed, exceeding the performance of competitive approaches such as visual saliency feature fusion, which still presents residual noise. Quantitative results (Tables 1–3) demonstrate consistent superiority across six evaluation metrics. On the WHU dataset, optimal ERGAS (3.7370) and PSNR (24.7983 dB) values are achieved. Performance improvements are more evident on the high-resolution YYX dataset and the structurally complex HQ dataset, where the proposed method ranks first for all indices. The mutual information on the YYX dataset reaches 3.3535, which is nearly twice that of the second-ranked method, confirming strong multimodal information preservation. On average, the proposed framework achieves a performance improvement of 29.11% compared with the second-best baseline. Mechanism validation and efficiency analysis (Tables 4 and 5) further support these results. Ablation experiments demonstrate that SIAM plays a critical role in maintaining the balance between spectral information and scattering characteristics, whereas DGMLE contributes substantially to structural fidelity. With an average runtime of 1.3033 s, the proposed method achieves an effective trade-off between computational efficiency and fusion quality and remains significantly faster than complex transform-domain approaches such as multiscale non-subsampled shearlet transform combined with parallel convolutional neural networks.

Conclusions: A robust and unsupervised framework for SAR and MS image fusion is proposed. By integrating MS-FIPF-based fractional-order saliency extraction with DGMLE-based gradient-guided energy modeling, the proposed method addresses the long-standing trade-off between noise suppression and detail preservation. Bayesian adaptive fusion and scattering intensity modulation further improve robustness to modality differences. Experimental results confirm that the proposed framework outperforms seven representative fusion algorithms, achieving an average improvement of 29.11% across comprehensive evaluation metrics. Significant gains are observed in noise suppression, structural fidelity, and spectral preservation, demonstrating strong potential for multisource remote sensing data processing.
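
The abstract states that MS-FIPF builds a fractional-order kernel in the Fourier domain to weight frequencies nonlinearly, but it does not give the kernel's form. As a rough illustration only, the sketch below assumes the simplest such weighting, `|omega|^alpha`, applied to a single-band image with NumPy. The function names, the default `alpha`, and the kernel itself are assumptions made for this example and are not taken from the paper; the entropy-driven scale selection and gradient enhancement are not modeled.

```python
import numpy as np


def fractional_frequency_weight(shape, alpha):
    """Radial weight |omega|^alpha on the FFT grid of a 2-D image.

    Assumed kernel form for illustration; the paper's kernel may differ.
    For alpha > 0 the weight is 0 at DC and grows with frequency.
    """
    fy = np.fft.fftfreq(shape[0])  # cycles per sample along rows
    fx = np.fft.fftfreq(shape[1])  # cycles per sample along columns
    radius = 2.0 * np.pi * np.hypot(fy[:, None], fx[None, :])
    return radius ** alpha


def fractional_response(image, alpha=0.5):
    """Apply the fractional-order weight to an image in the Fourier domain."""
    image = np.asarray(image, dtype=np.float64)
    weight = fractional_frequency_weight(image.shape, alpha)
    spectrum = np.fft.fft2(image)
    # The weight is real and even in frequency, so the inverse transform
    # is real up to rounding error; np.real drops that residue.
    return np.real(np.fft.ifft2(spectrum * weight))


if __name__ == "__main__":
    # Vertical step edge: the response concentrates near the edge.
    step = np.zeros((32, 32))
    step[:, 16:] = 1.0
    response = fractional_response(step, alpha=0.5)
    print(np.abs(response[0]).round(3))
```

With `alpha` between 0 and 1 the weighting sits between the identity (`alpha = 0`) and a first-order derivative magnitude (`alpha = 1`), which is one common reason fractional orders are used when edges must be emphasized without amplifying high-frequency noise as strongly as a full derivative would.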

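Table 1 Quantitative evaluation results on the WHU dataset

| Method | ERGAS | RMSE | CC | MI | PSNR (dB) | UIQI |
| --- | --- | --- | --- | --- | --- | --- |
| Hybrid MSD | 12.9026 | 0.2217 | 0.3188 | 1.1341 | 13.9949 | 0.2356 |
| IFEVIP | 9.7476 | 0.1603 | 0.4778 | 1.3652 | 15.5444 | 0.3974 |
| MS-NSST-PCNN | 10.0468 | 0.1719 | 0.3038 | 0.8799 | 16.2896 | 0.2506 |
| VSFF | 4.0737 | 0.0695 | 0.8501 | 1.5112 | 23.3578 | 0.8068 |
| VSMWLS | 11.9109 | 0.2029 | 0.3368 | 1.1460 | 14.4080 | 0.2602 |
| IFCNN | 9.8688 | 0.1696 | 0.4087 | 0.9888 | 16.4705 | 0.3376 |
| SwinFusion | 13.2376 | 0.2289 | 0.1187 | 1.9432 | 13.7405 | 0.0923 |
| Proposed | 3.7370 | 0.0618 | 0.7951 | 3.0514 | 24.7983 | 0.7772 |

Table 2 Quantitative evaluation results on the YYX dataset

| Method | ERGAS | RMSE | CC | MI | PSNR (dB) | UIQI |
| --- | --- | --- | --- | --- | --- | --- |
| Hybrid MSD | 10.0752 | 0.1327 | 0.6444 | 0.9647 | 17.0951 | 0.6355 |
| IFEVIP | 12.8203 | 0.1661 | 0.6070 | 1.3836 | 15.4906 | 0.5801 |
| MS-NSST-PCNN | 10.2292 | 0.1335 | 0.6311 | 1.0423 | 17.1989 | 0.6226 |
| VSFF | 8.1470 | 0.1068 | 0.8318 | 1.7089 | 19.1507 | 0.8081 |
| VSMWLS | 11.4078 | 0.1505 | 0.5452 | 0.9556 | 15.8586 | 0.5322 |
| IFCNN | 9.7939 | 0.1283 | 0.6254 | 1.0598 | 17.4961 | 0.6189 |
| SwinFusion | 11.1995 | 0.1461 | 0.5362 | 1.4485 | 16.4130 | 0.5296 |
| Proposed | 6.5735 | 0.0850 | 0.8399 | 3.3535 | 21.4096 | 0.8332 |

Table 3 Quantitative evaluation results on the HQ dataset

| Method | ERGAS | RMSE | CC | MI | PSNR (dB) | UIQI |
| --- | --- | --- | --- | --- | --- | --- |
| Hybrid MSD | 8.2002 | 0.1288 | 0.6274 | 1.0143 | 18.1301 | 0.6085 |
| IFEVIP | 7.4381 | 0.1238 | 0.6681 | 1.1227 | 18.8240 | 0.6499 |
| MS-NSST-PCNN | 10.5269 | 0.1561 | 0.6112 | 1.3835 | 16.6433 | 0.5815 |
| VSFF | 7.0673 | 0.1095 | 0.6975 | 1.4878 | 19.5663 | 0.6819 |
| VSMWLS | 6.4825 | 0.1129 | 0.7871 | 1.7237 | 19.8440 | 0.7720 |
| IFCNN | 9.1143 | 0.1602 | 0.5774 | 0.8943 | 16.2774 | 0.5484 |
| SwinFusion | 8.1024 | 0.1250 | 0.6449 | 1.7283 | 18.4429 | 0.6245 |
| Proposed | 3.7469 | 0.0587 | 0.8943 | 3.1966 | 26.2644 | 0.8895 |

Table 4 Ablation results on the WHU, YYX, and HQ datasets

| Variant | ERGAS | RMSE | CC | MI | PSNR (dB) | UIQI |
| --- | --- | --- | --- | --- | --- | --- |
| w/o MS-FIPF | 4.7500 | 0.0695 | 0.8367 | 2.6672 | 23.9856 | 0.8269 |
| w/o BAF | 4.8335 | 0.0707 | 0.8311 | 3.0243 | 23.7813 | 0.8212 |
| w/o DGMLE | 5.5730 | 0.0831 | 0.7766 | 2.8511 | 22.1310 | 0.7594 |
| w/o SIAM | 6.1029 | 0.0895 | 0.7575 | 3.2301 | 21.8445 | 0.7390 |
| Proposed | 4.6832 | 0.0685 | 0.8434 | 3.2066 | 24.1686 | 0.8335 |

Table 5 Average runtime (s)

| Method | IFCNN | IFEVIP | Proposed | VSFF | VSMWLS | SwinFusion | Hybrid MSD | MS-NSST-PCNN |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Time | 0.0219 | 0.0994 | 1.3033 | 2.0317 | 2.5572 | 3.4612 | 8.1407 | 32.6212 |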
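
For readers unfamiliar with the metric columns in the quantitative tables, the sketch below shows textbook definitions of four of them (RMSE, PSNR, CC, ERGAS) in NumPy. It is a minimal sketch under stated assumptions: images are taken to be floating-point arrays scaled to [0, 1] (hence `peak=1.0`), multiband inputs have shape `(H, W, bands)`, and the `ratio` argument of `ergas` is the resolution ratio between the two sources. The page does not state the paper's exact definitions, reference image, or normalization, so this code is not expected to reproduce the tabulated values. MI and UIQI are omitted for brevity.

```python
import numpy as np


def rmse(reference, fused):
    """Root mean square error between two arrays of equal shape."""
    diff = np.asarray(reference, float) - np.asarray(fused, float)
    return float(np.sqrt(np.mean(diff ** 2)))


def psnr(reference, fused, peak=1.0):
    """Peak signal-to-noise ratio in dB; `peak` assumes data in [0, 1]."""
    err = rmse(reference, fused)
    return float("inf") if err == 0 else float(20.0 * np.log10(peak / err))


def correlation_coefficient(reference, fused):
    """Pearson correlation coefficient over all pixels."""
    a = np.asarray(reference, float).ravel()
    b = np.asarray(fused, float).ravel()
    a = a - a.mean()
    b = b - b.mean()
    return float(np.sum(a * b) / np.sqrt(np.sum(a * a) * np.sum(b * b)))


def ergas(reference, fused, ratio=1.0):
    """ERGAS for (H, W, bands) arrays; `ratio` is the resolution ratio."""
    ref = np.asarray(reference, float)
    fus = np.asarray(fused, float)
    band_rmse = np.sqrt(np.mean((ref - fus) ** 2, axis=(0, 1)))
    band_mean = np.mean(ref, axis=(0, 1))
    return float(100.0 * ratio * np.sqrt(np.mean((band_rmse / band_mean) ** 2)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    reference = rng.random((64, 64, 3))
    fused = np.clip(reference + rng.normal(0.0, 0.05, reference.shape), 0, 1)
    print("RMSE ", rmse(reference, fused))
    print("PSNR ", psnr(reference, fused))
    print("CC   ", correlation_coefficient(reference, fused))
    print("ERGAS", ergas(reference, fused))
```

Lower values are better for ERGAS and RMSE, and higher values are better for CC, MI, PSNR, and UIQI, which is the direction in which the tables should be read.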

