A Landmark Matching Method Considering Gray-Gradient Dual-Channel Features and Deformation Parameter Optimization


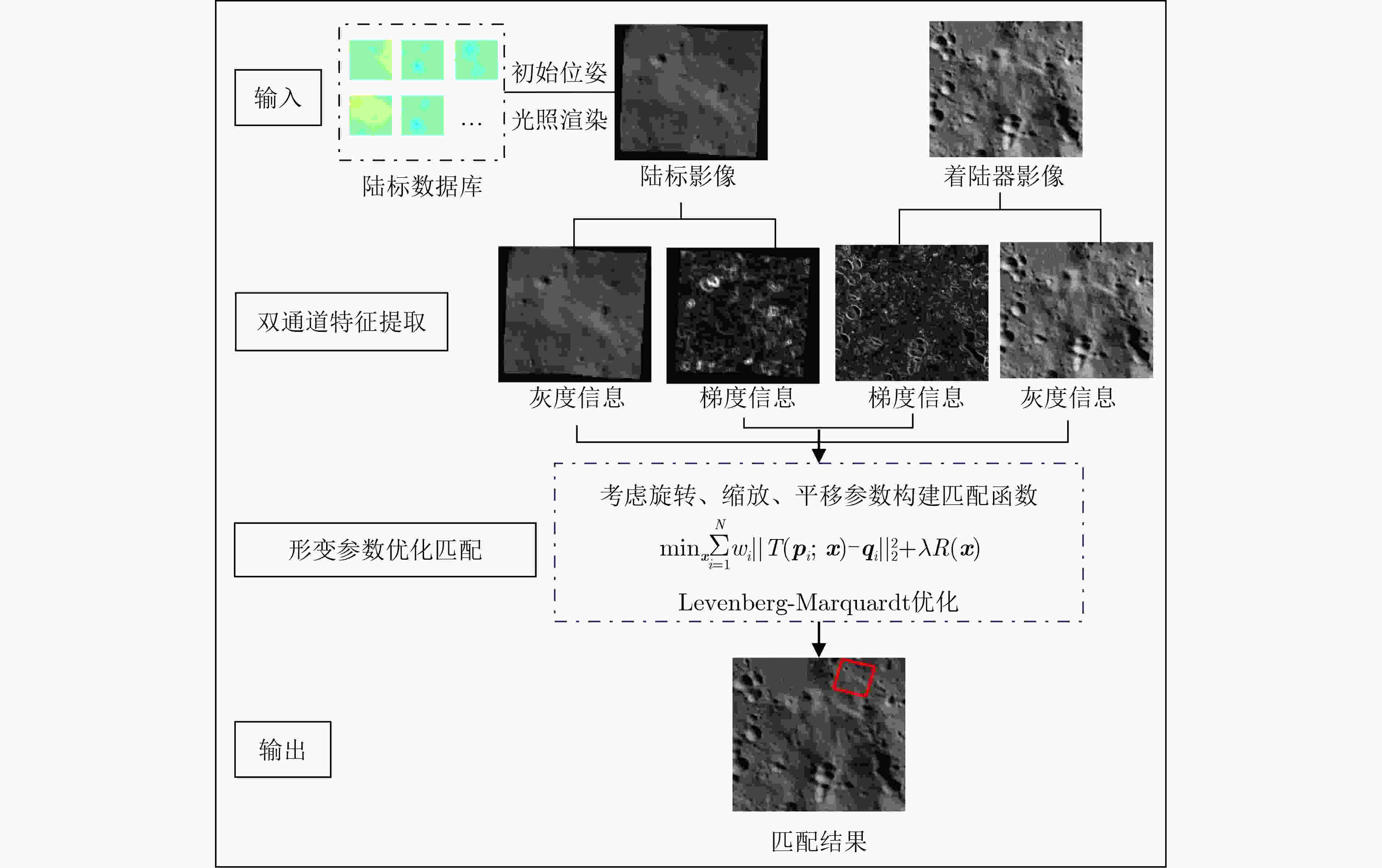

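Summary: To meet the pressing need of deep-space exploration missions for optical autonomous navigation and positioning, this paper proposes a landmark matching algorithm that fuses two image feature channels, gray level and gradient magnitude, with deformation parameter optimization. The method recasts matching as a nonlinear function-solving problem: a nonlinear function is constructed with the goal of minimizing the gray-level and gradient-feature differences between the landmark and the lander image, and the Levenberg-Marquardt algorithm iteratively solves for the optimal deformation parameters, yielding the precise matching position of the landmark in the landing image. Experimental results show that, even when several kinds of prior error are present, the method achieves robust matching at sub-second speed, with a mean matching error magnitude of 1.03 pixels. The results confirm the effectiveness of the algorithm for landmark matching tasks that demand both high accuracy and real-time performance, and the method can provide reliable technical support for lunar landing positioning without satellite navigation.

Abstract: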

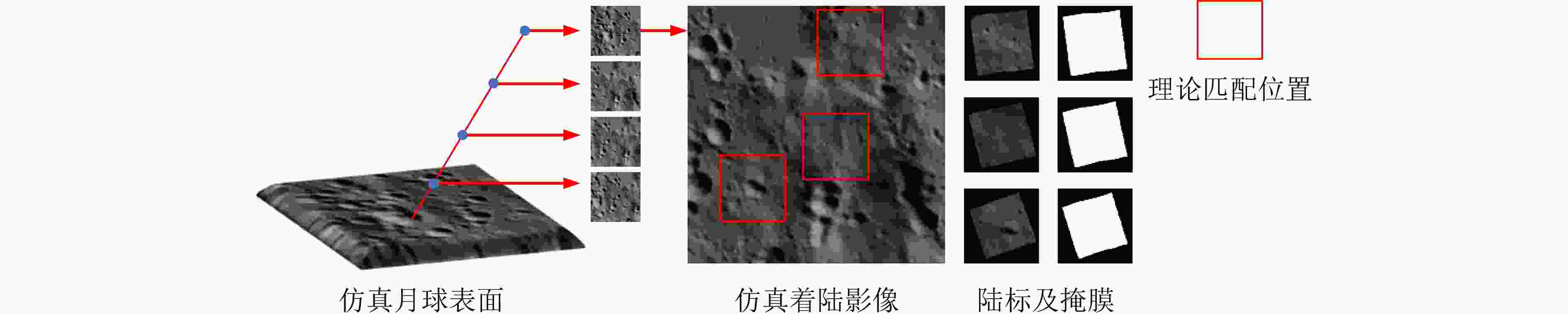

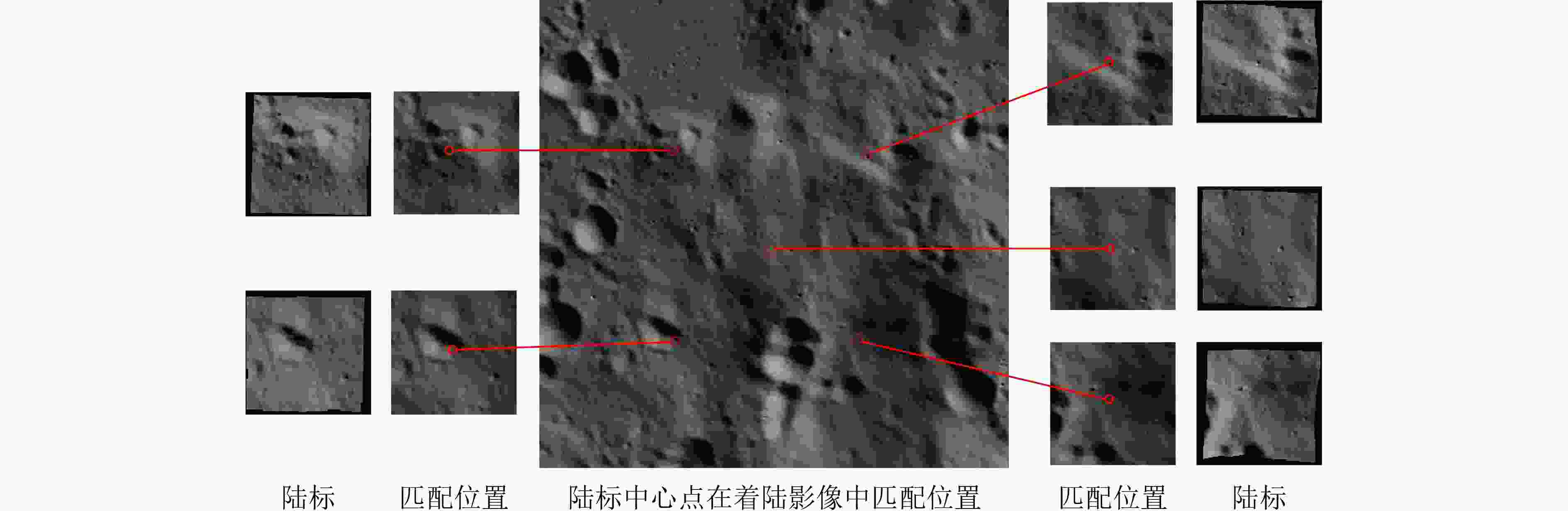

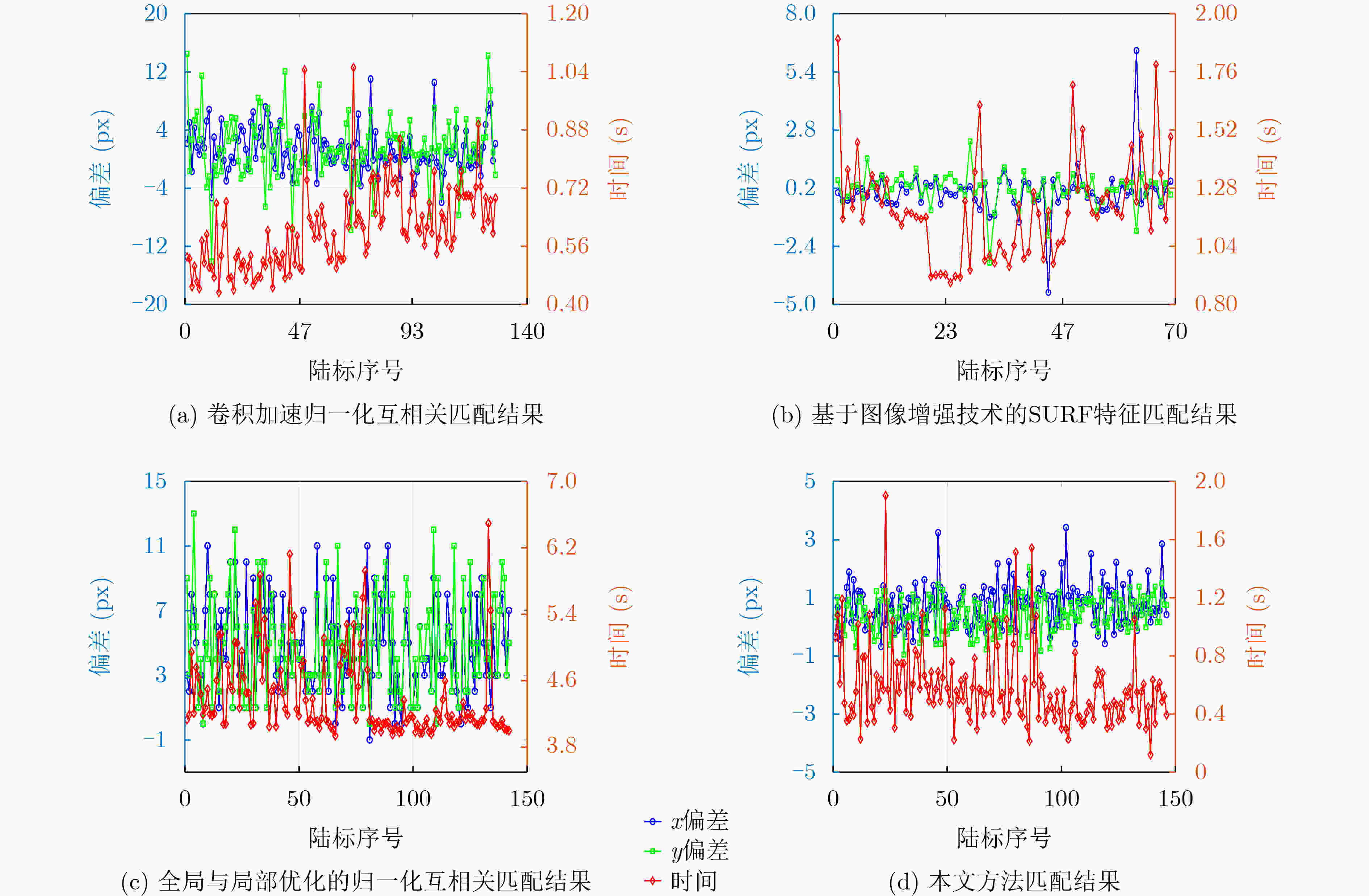

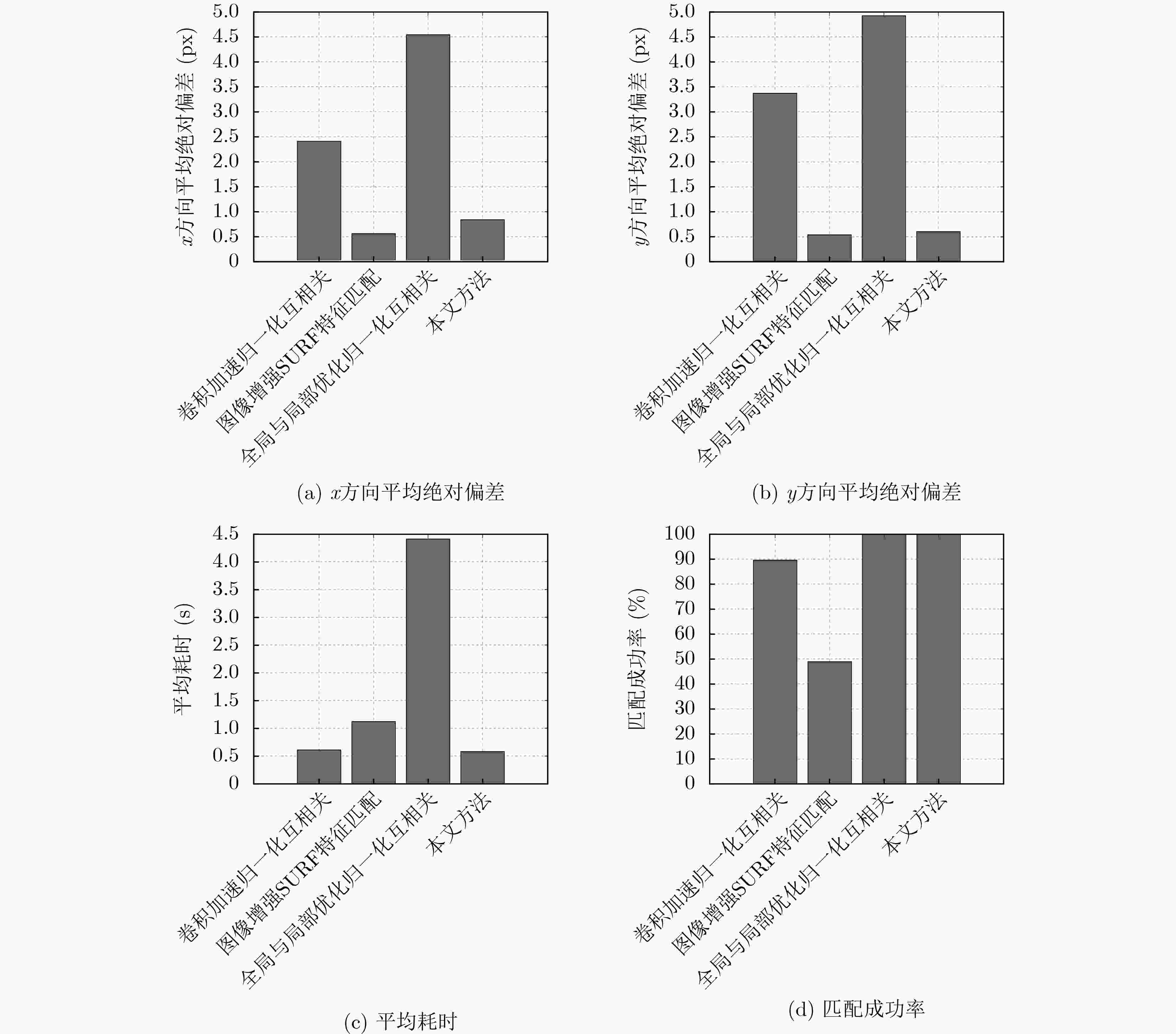

Objective: High-precision optical autonomous navigation is a key technology for deep-space exploration and planetary landing missions. During the descent phase of a lunar lander, communication delays and accumulated errors in the Inertial Navigation System (INS) lead to significant positioning deviations, which pose serious risks to safe landing. Optical images acquired by the lander are matched with pre-stored lunar landmark databases to establish correspondences between image coordinates and three-dimensional coordinates of lunar surface features, thereby enabling precise position estimation. This process is challenged by dynamic illumination variation on the lunar surface, noise in prior pose information, and limited onboard computational resources. Traditional template matching methods exhibit high computational cost and sensitivity to rotation and scale variation. Keypoint-based methods, such as Scale Invariant Feature Transform (SIFT) and Speeded Up Robust Features (SURF), suffer from uneven keypoint distribution and sensitivity to illumination variation, which results in reduced robustness. Deep learning-based approaches, including SuperPoint, SuperGlue, and LF-Net, improve feature detection accuracy but require substantial computational resources, which restricts real-time onboard deployment. To address these limitations, a landmark matching algorithm is proposed that integrates gray-gradient dual-channel features with deformation parameter optimization, enabling high-precision and real-time matching for lunar optical autonomous navigation.

Methods: Dual-channel image features are constructed by combining gray-level intensity and gradient magnitude representations. Gradient features are computed using Sobel operators in the horizontal and vertical directions, and the gradient magnitude is calculated as the Euclidean norm of the two components.
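The dual-channel construction just described can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: the function names `sobel_gradients` and `dual_channel` and the edge-replicating border handling are hypothetical choices made for the example.

```python
import numpy as np

def sobel_gradients(img):
    """Horizontal and vertical 3x3 Sobel responses (borders replicated; an assumption)."""
    p = np.pad(np.asarray(img, dtype=np.float64), 1, mode="edge")
    # Horizontal derivative: right column minus left column, rows weighted 1-2-1.
    gx = ((p[:-2, 2:] + 2.0 * p[1:-1, 2:] + p[2:, 2:])
          - (p[:-2, :-2] + 2.0 * p[1:-1, :-2] + p[2:, :-2]))
    # Vertical derivative: bottom row minus top row, columns weighted 1-2-1.
    gy = ((p[2:, :-2] + 2.0 * p[2:, 1:-1] + p[2:, 2:])
          - (p[:-2, :-2] + 2.0 * p[:-2, 1:-1] + p[:-2, 2:]))
    return gx, gy

def dual_channel(img):
    """Stack gray level and gradient magnitude into a (2, H, W) feature array."""
    img = np.asarray(img, dtype=np.float64)
    gx, gy = sobel_gradients(img)
    magnitude = np.hypot(gx, gy)  # Euclidean norm of the two Sobel components
    return np.stack([img, magnitude], axis=0)

# Usage: a horizontal intensity ramp has a constant interior gradient magnitude.
ramp = np.tile(np.arange(6, dtype=float), (5, 1))
features = dual_channel(ramp)
print(features.shape)
```

The gray channel keeps the raw intensities, so any normalization is left to a later step.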
To reduce the effect of local brightness variation and ensure inter-region comparability, zero-mean normalization is applied independently to each feature channel. An adaptive weighting strategy is employed, in which weights are assigned according to local gradient saliency, and a bias term is introduced to retain weak texture information, thereby improving robustness under noisy conditions. Landmark matching is formulated as a nonlinear least-squares optimization problem. A deformation parameter vector is defined, which includes incremental rotation, scale, and translation relative to the prior pose. The objective function minimizes the weighted sum of squared differences between dual-channel landmark features and image features, with Tikhonov regularization applied to constrain parameter magnitude and improve numerical stability. The Levenberg-Marquardt (LM) algorithm is adopted to iteratively estimate the optimal deformation parameters. Its adaptive damping strategy enables switching between gradient descent and Gauss-Newton updates, ensuring stable convergence under large prior pose errors. Iteration terminates when the error norm falls below a predefined threshold or when the maximum iteration number is reached, yielding the optimal landmark transformation parameters.

Results and Discussions: Experiments are conducted using simulated lunar landing images generated from 60 m-resolution SLDEM (Digital Elevation Model Coregistered with SELENE Data) data, with high-fidelity illumination rendering applied to ensure realistic lighting conditions (Fig. 2). To evaluate matching performance under different scenarios, 143 landmarks are synthesized with systematically controlled perturbations in rotation, scale, and translation.
Four representative methods are selected for comparison, including convolution-accelerated Normalized Cross-Correlation (NCC), SURF-based feature matching with image enhancement, globally and locally optimized NCC, and the proposed algorithm (Fig. 4). The results indicate clear performance differences among the methods. Convolution-accelerated NCC achieves sub-second runtime and demonstrates high computational efficiency, although its accuracy degrades under gray-level variation and geometric deformation, with mean absolute errors of 2.41 px along the x-axis and 3.37 px along the y-axis, and a success rate of 89.51% (Table 1). SURF-based matching achieves sub-pixel accuracy, with mean absolute errors of 0.56 px along the x-axis and 0.54 px along the y-axis, although its success rate is limited to 48.95% and its runtime exceeds one second, which restricts onboard applicability. The globally and locally optimized NCC method exhibits the lowest accuracy, with errors of 4.54 px along the x-axis and 4.92 px along the y-axis, and the longest runtime of 4.41 s, despite achieving a 100% success rate. In contrast, the proposed algorithm consistently achieves sub-pixel accuracy comparable to SURF, maintains a 100% success rate, and sustains a stable runtime of approximately 0.5 s across all test cases. Its robustness to landmark deformation and illumination variation demonstrates suitability for complex operational conditions. Overall, the results show that the proposed algorithm achieves a favorable balance among accuracy, robustness, and computational efficiency.

Conclusions: A landmark matching algorithm is presented that integrates gray-gradient dual-channel features with deformation parameter optimization. Gray-level intensity and gradient magnitude information from both landmark templates and lander images are jointly exploited to construct a dual-channel matching model that minimizes feature differences.
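One way to write the dual-channel matching model down is given here. The abstract does not state the equations, so the notation is an assumption made for illustration: $\tilde{T}_c$ and $\tilde{I}_c$ are the zero-mean landmark and image features in channel $c$, $w_c$ the saliency weights, $\Omega$ the landmark pixel grid, $\mathbf{x}_0$ the prior landmark position, $\lambda$ the Tikhonov weight, and $\mu$ the LM damping factor.

```latex
% Deformation parameters relative to the prior pose (assumed ordering)
\mathbf{p} = (\Delta\theta,\ \Delta s,\ \Delta t_x,\ \Delta t_y)^{\top}

% Similarity warp of a landmark pixel offset \mathbf{x} into the lander image
W(\mathbf{x};\mathbf{p}) = \mathbf{x}_0
  + \begin{pmatrix}\Delta t_x \\ \Delta t_y\end{pmatrix}
  + (1+\Delta s)
    \begin{pmatrix}\cos\Delta\theta & -\sin\Delta\theta \\ \sin\Delta\theta & \cos\Delta\theta\end{pmatrix}
    \mathbf{x}

% Weighted dual-channel least-squares objective with Tikhonov regularization
E(\mathbf{p}) = \sum_{c\in\{\mathrm{gray},\,\mathrm{grad}\}} \sum_{\mathbf{x}\in\Omega}
  w_c(\mathbf{x})\,\bigl[\tilde{I}_c\bigl(W(\mathbf{x};\mathbf{p})\bigr) - \tilde{T}_c(\mathbf{x})\bigr]^2
  + \lambda\,\lVert\mathbf{p}\rVert_2^2

% One LM step on the stacked residual \mathbf{r} with Jacobian J
\bigl(J^{\top}J + \mu\,\mathrm{diag}(J^{\top}J)\bigr)\,\boldsymbol{\delta} = -J^{\top}\mathbf{r},
\qquad \mathbf{p} \leftarrow \mathbf{p} + \boldsymbol{\delta}
```

A small $\mu$ makes the step behave like Gauss-Newton, and a large $\mu$ like a scaled gradient-descent step, which is the switching behavior attributed to the adaptive damping strategy.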
Deformation parameters, including rotation, scale, and translation, are iteratively optimized using the LM algorithm, enabling rapid estimation of the optimal landmark position in the lander image. Experimental results show stable convergence within sub-second runtime, with an average matching error of 1.03 pixels under disturbances in attitude, scale, and position. The proposed method outperforms single-channel gray-level cross-correlation and SURF-based matching approaches in accuracy, robustness, and efficiency. These results provide practical support for the design and implementation of future autonomous lunar optical navigation systems.
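The LM-based estimation of rotation, scale, and translation can be illustrated with a short NumPy sketch. It is a simplified stand-in under stated assumptions, not the paper's implementation: all function names are hypothetical, `np.gradient` replaces the Sobel operator for brevity, the saliency weight is an assumed form (`bias` plus normalized template gradient), and the Jacobian is taken by finite differences.

```python
import numpy as np

def channels(img):
    """Gray and gradient-magnitude channels (np.gradient stands in for Sobel)."""
    img = np.asarray(img, dtype=np.float64)
    gy, gx = np.gradient(img)
    return np.stack([img, np.hypot(gx, gy)])

def bilinear(img, xs, ys):
    """Bilinear interpolation of img at float coordinates (xs, ys)."""
    h, w = img.shape
    xs = np.clip(xs, 0.0, w - 1.001)
    ys = np.clip(ys, 0.0, h - 1.001)
    x0, y0 = np.floor(xs).astype(int), np.floor(ys).astype(int)
    fx, fy = xs - x0, ys - y0
    top = img[y0, x0] * (1 - fx) + img[y0, x0 + 1] * fx
    bot = img[y0 + 1, x0] * (1 - fx) + img[y0 + 1, x0 + 1] * fx
    return top * (1 - fy) + bot * fy

def sample_patch(chans, center, p, half):
    """Zero-mean dual-channel patch warped by p = (dtheta, dscale, dtx, dty)."""
    dth, ds, dtx, dty = p
    v, u = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    c, s = np.cos(dth), np.sin(dth)
    xs = center[0] + dtx + (1.0 + ds) * (c * u - s * v)
    ys = center[1] + dty + (1.0 + ds) * (s * u + c * v)
    patch = np.stack([bilinear(ch, xs, ys) for ch in chans])
    return patch - patch.mean(axis=(1, 2), keepdims=True)

def lm_match(image, template, center, half, lam_reg=1e-6, bias=0.1,
             mu=1e-2, max_iter=60, tol=1e-9):
    """Estimate deformation increments relative to the prior pose with LM."""
    chans = channels(image)
    g = template[1] - template[1].min()
    sqrt_w = np.sqrt(bias + g / (g.max() + 1e-12))  # assumed saliency weight

    def residual(p):
        diff = sample_patch(chans, center, p, half) - template
        # Data term plus Tikhonov term sqrt(lambda) * p.
        return np.concatenate([(sqrt_w * diff).ravel(), np.sqrt(lam_reg) * p])

    def jacobian(p, eps=1e-4):
        cols = []
        for k in range(p.size):
            d = np.zeros_like(p)
            d[k] = eps
            cols.append((residual(p + d) - residual(p - d)) / (2 * eps))
        return np.stack(cols, axis=1)

    p = np.zeros(4)
    r = residual(p)
    cost = r @ r
    for _ in range(max_iter):
        J = jacobian(p)
        H, grad = J.T @ J, J.T @ r
        step = np.linalg.solve(H + mu * np.diag(np.diag(H)), -grad)
        r_new = residual(p + step)
        if r_new @ r_new < cost:   # accept: reduce damping (toward Gauss-Newton)
            p, r, cost = p + step, r_new, r_new @ r_new
            mu = max(mu * 0.1, 1e-12)
        else:                      # reject: raise damping (toward gradient descent)
            mu *= 10.0
        if cost < tol or np.linalg.norm(step) < tol:
            break
    return p

# Usage on a synthetic smooth image: the template is sampled at a known
# deformation, then recovered starting from the zero increment (the prior pose).
yy, xx = np.mgrid[0:80, 0:80].astype(float)
img = np.sin(0.15 * xx) + np.cos(0.11 * yy) + 0.5 * np.sin(0.07 * (xx + yy))
p_true = np.array([0.03, 0.02, 1.5, -1.0])
tmpl = sample_patch(channels(img), (40.0, 38.0), p_true, 12)
print(lm_match(img, tmpl, (40.0, 38.0), 12))
```

In this toy setup the template comes from the same image as the search, so illumination differences between a real landmark database and a lander image are not exercised.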

Table 1 Comparison of performance metrics for the different matching methods

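| Method | Mean absolute error, x (px) | Mean absolute error, y (px) | Mean runtime (s) | Matching success rate (%) |
|---|---|---|---|---|
| Convolution-accelerated NCC | 2.41 | 3.37 | 0.61 | 89.51 |
| SURF feature matching with image enhancement | 0.56 | 0.54 | 1.12 | 48.95 |
| Globally and locally optimized NCC | 4.54 | 4.92 | 4.41 | 100.00 |
| Proposed method | 0.84 | 0.60 | 0.58 | 100.00 |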
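The reported 1.03-pixel error magnitude can be cross-checked against the per-axis values in Table 1. The sketch below assumes the magnitude is the Euclidean norm of the two mean absolute errors; the source does not say how it was computed, and an average of per-landmark norms would generally give a different number. The helper name `error_norm` is hypothetical.

```python
import math

def error_norm(err_x, err_y):
    """Euclidean norm of the per-axis mean absolute errors, in pixels."""
    return math.hypot(err_x, err_y)

# Per-axis mean absolute errors (px) copied from Table 1.
table_1 = {
    "Convolution-accelerated NCC": (2.41, 3.37),
    "SURF feature matching with image enhancement": (0.56, 0.54),
    "Globally and locally optimized NCC": (4.54, 4.92),
    "Proposed method": (0.84, 0.60),
}

for method, (err_x, err_y) in table_1.items():
    print(f"{method}: {error_norm(err_x, err_y):.2f} px")
```

For the proposed method this gives sqrt(0.84² + 0.60²) ≈ 1.03 px, which agrees with the figure quoted in the abstract.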

