Multi-Scale Region of Interest Feature Fusion for Palmprint Recognition

-

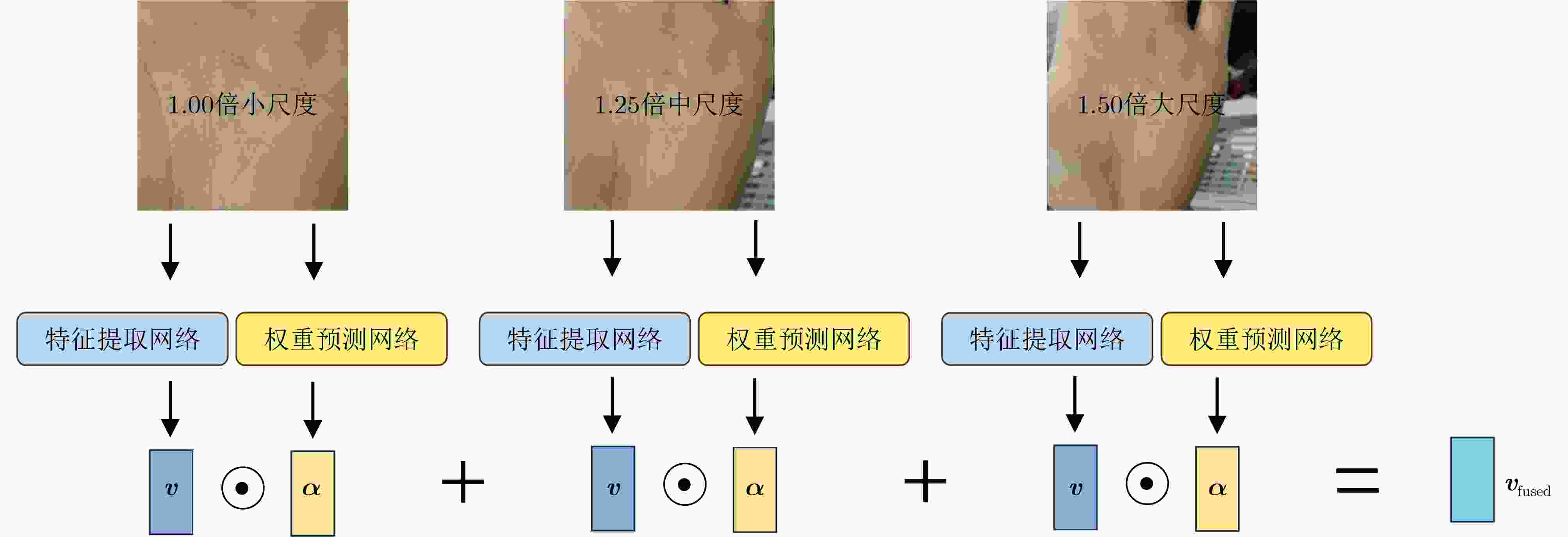

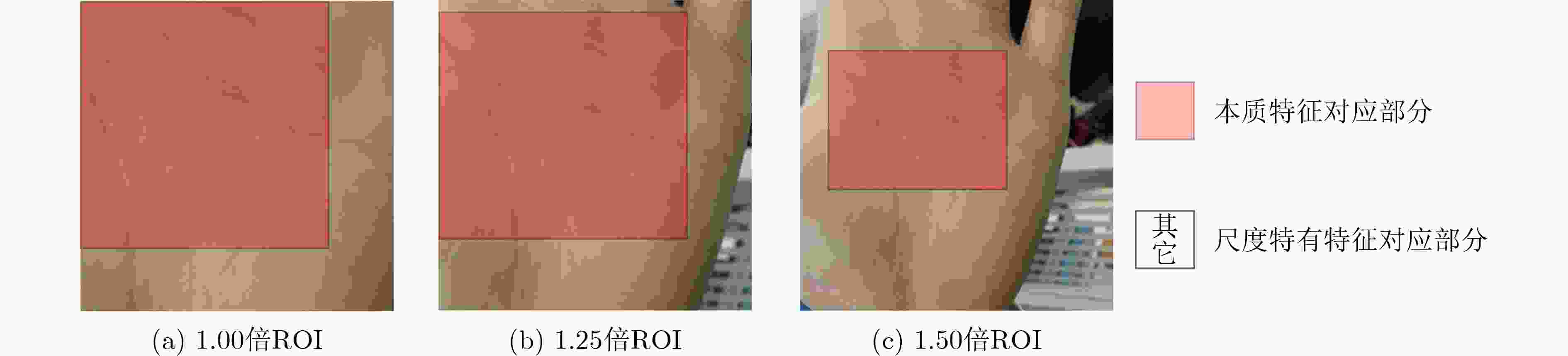

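Abstract: Locating the Region of Interest (ROI) is a key step in the palmprint recognition pipeline. In practical applications, however, illumination changes and the diversity of hand postures often shift the ROI localization, which degrades recognition performance. To mitigate this problem, a novel multi-scale ROI feature fusion mechanism is proposed, and a deep learning model with two cooperating branches is designed on this basis. The model consists of a feature extraction network and a weight prediction network: the former extracts features in parallel from ROIs at several different scales, and the latter adaptively assigns a weight to the features of each scale. The core idea of the fusion mechanism is that ROIs at different scales share essential features, such as the core palmprint texture, while each also contains its own scale-specific information. By fusing these features with weights, the model reinforces the shared essential features and suppresses the noise and redundant information introduced by inaccurate localization, producing more robust features. Comprehensive experiments on several public palmprint datasets, including IITD, MPD, and NTU-CP, show that the recognition accuracy of the model drops only slightly in the presence of significant localization errors, demonstrating far greater error tolerance than conventional single-scale ROI models. In particular, in the NTU-CP localization error test, the Equal Error Rate (EER) of the model rises only from 1.96% to 5.01%, whereas the EER of every other compared model exceeds 10%, which confirms the effectiveness and superiority of the proposed multi-scale ROI feature fusion mechanism.

Abstract: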

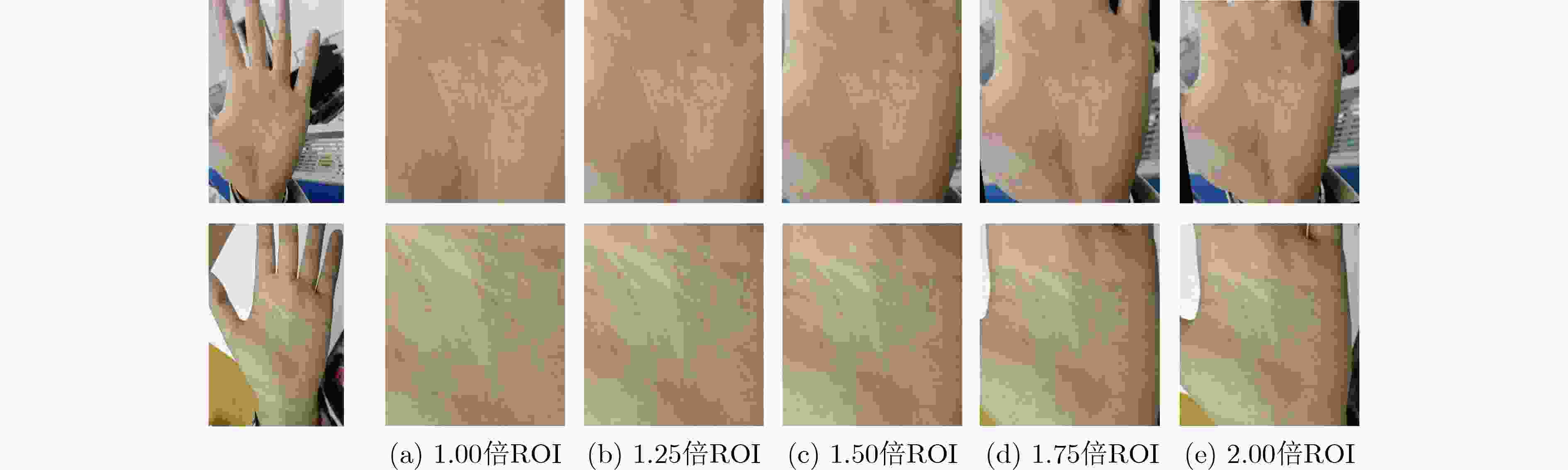

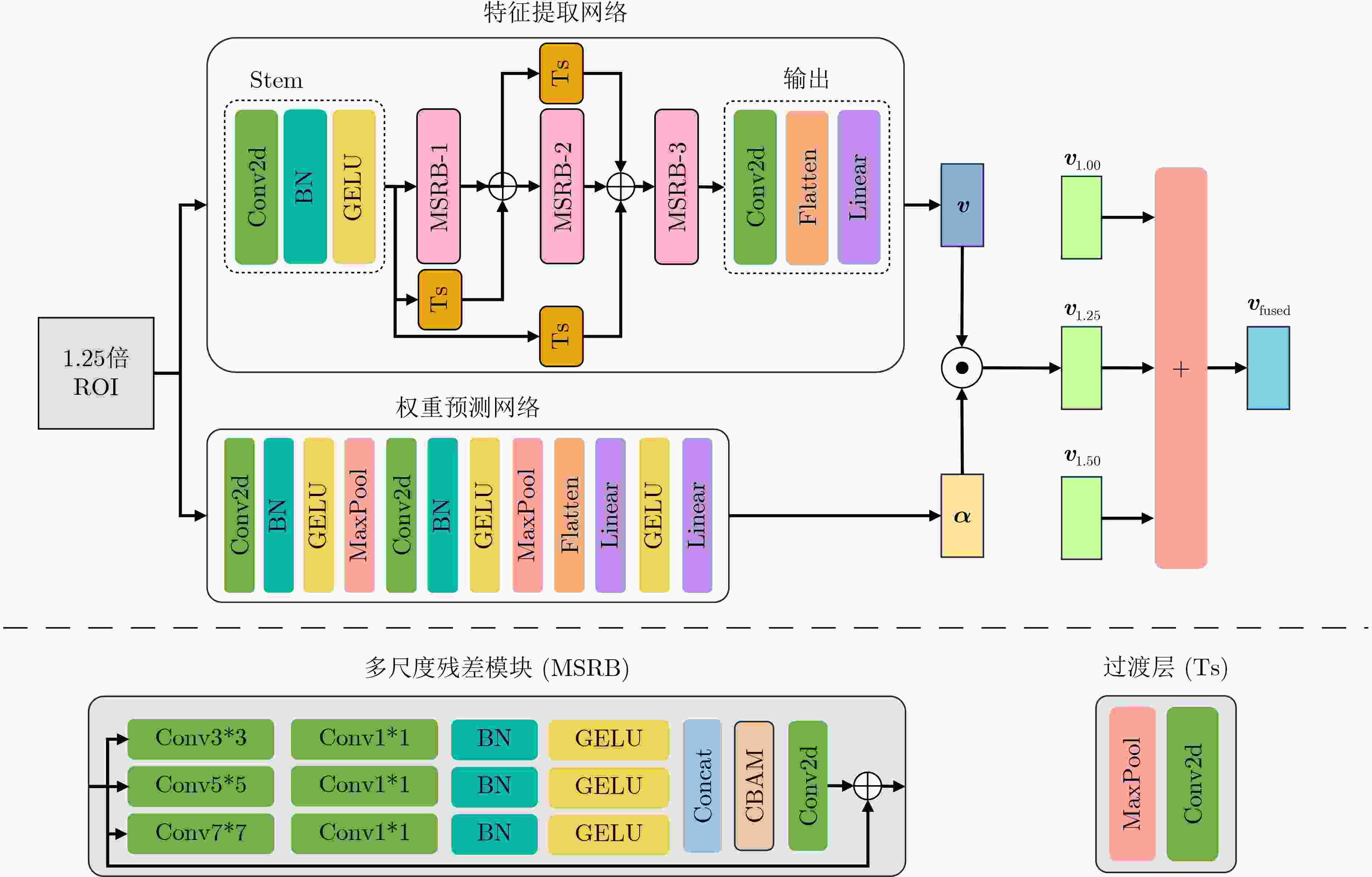

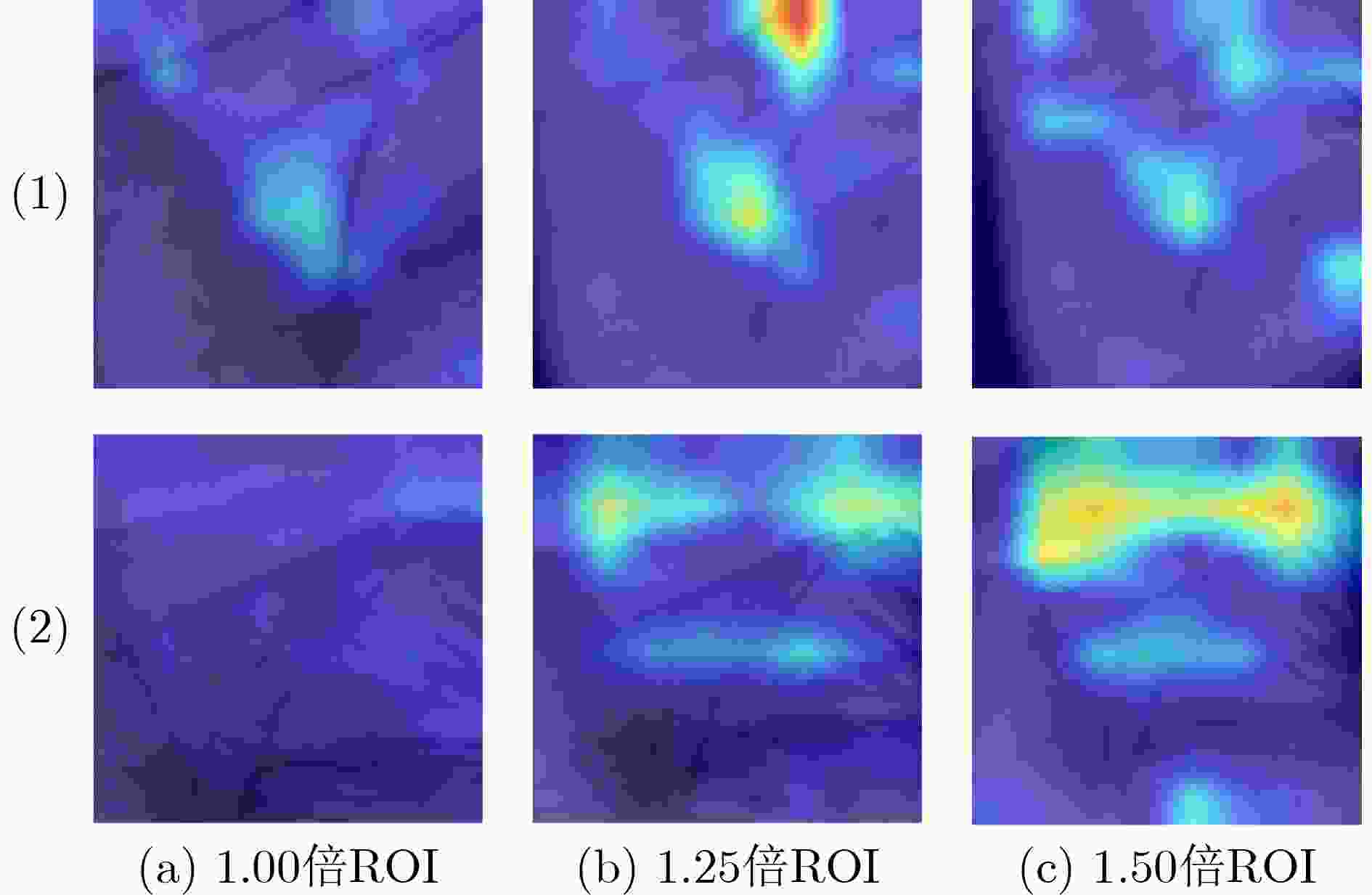

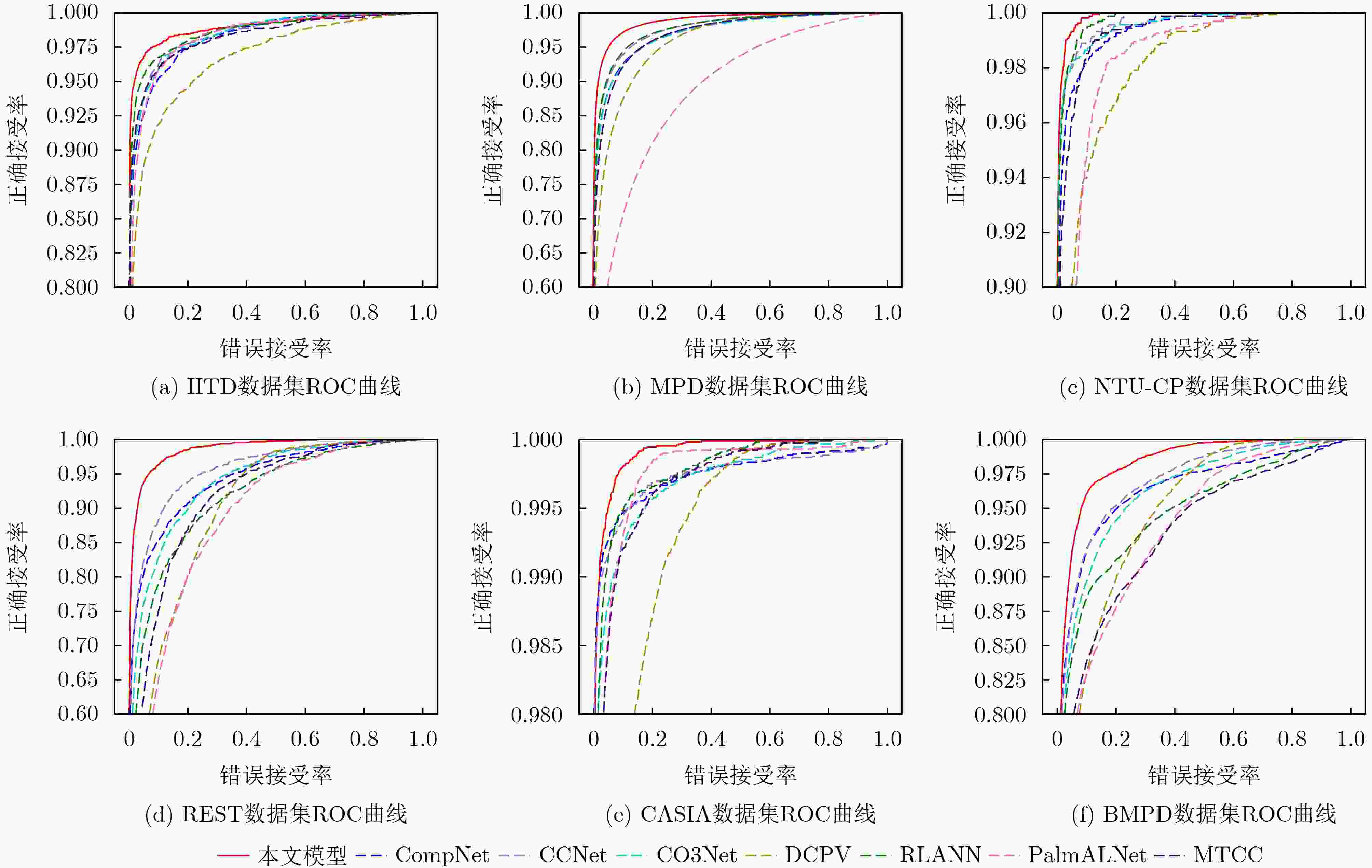

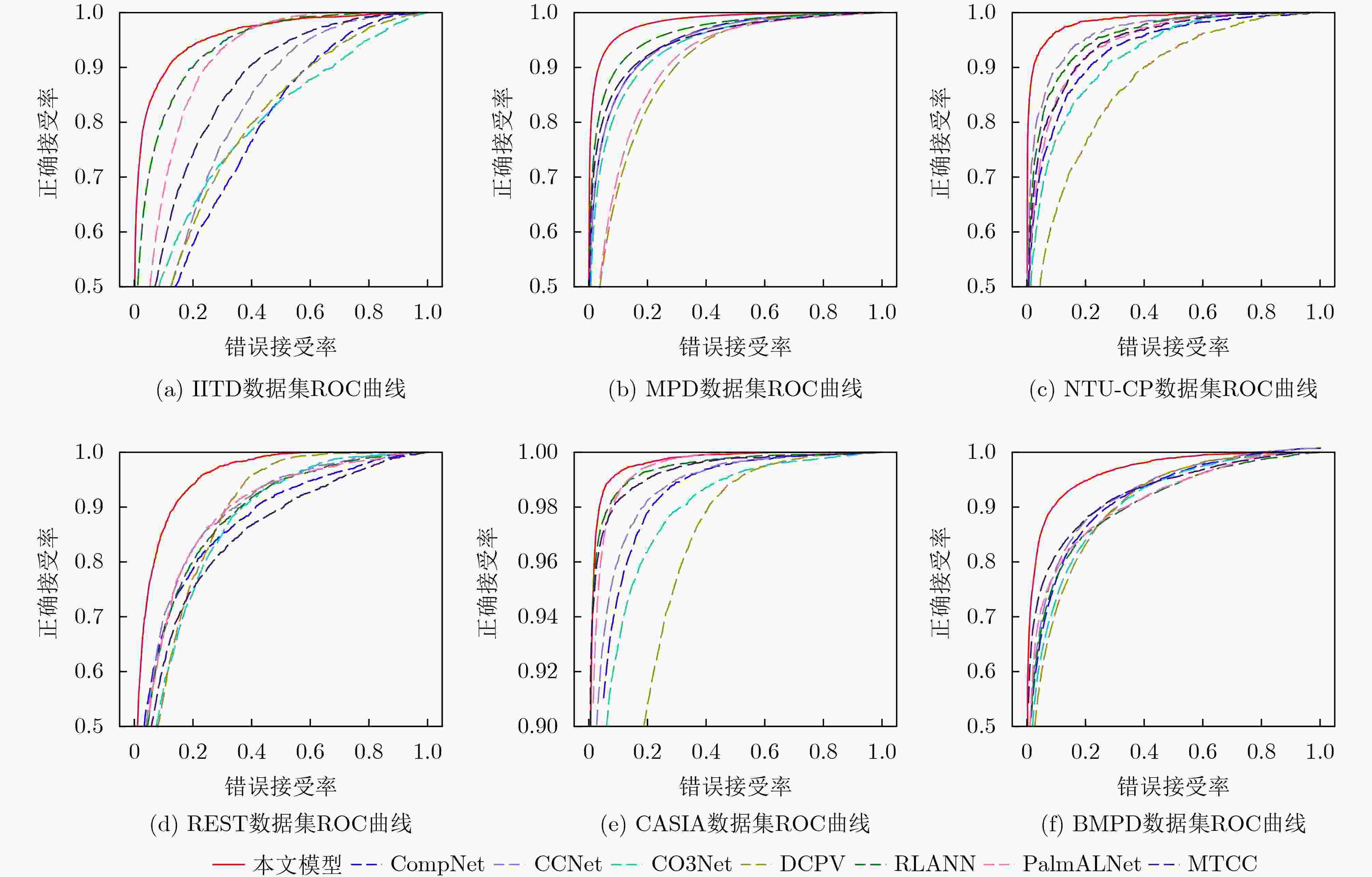

Objective: Accurate localization of the Region Of Interest (ROI) is a prerequisite for high-precision palmprint recognition. In contactless and uncontrolled application scenarios, complex background illumination and diverse hand postures frequently cause ROI localization offsets. Most existing deep learning-based recognition methods rely on a single fixed-size ROI as input. Although some approaches adopt multi-scale convolution kernels, fusion at the ROI level is not performed, which makes these methods highly sensitive to localization errors. As a result, even small deviations in ROI extraction often cause severe performance degradation, which restricts practical deployment. To overcome this limitation, a Multi-scale ROI Feature Fusion Mechanism is proposed, and a corresponding model, termed ROI3Net, is designed. The objective is to construct a recognition system that is inherently robust to localization errors by integrating complementary information from multiple ROI scales. This strategy reinforces shared intrinsic texture features while suppressing scale-specific noise introduced by positioning inaccuracies.

Methods: The proposed ROI3Net adopts a dual-branch architecture consisting of a Feature Extraction Network and a lightweight Weight Prediction Network (Fig. 4). The Feature Extraction Network employs a sequence of Multi-Scale Residual Blocks (MSRBs) to process ROIs at three progressive scales (1.00×, 1.25×, and 1.50×) in parallel. Within each MSRB, dense connections are applied to promote feature reuse and reduce information loss (Eq. 3). Convolutional Block Attention Modules (CBAMs) are incorporated to adaptively refine features in both the channel and spatial dimensions. The Weight Prediction Network is implemented as an end-to-end lightweight module.
It takes raw ROI images as input and processes them using a serialized convolutional structure (Conv2d-BN-GELU-MaxPool), followed by a Multi-Layer Perceptron (MLP) head, to predict a dynamic weight vector for each scale. This subnetwork is optimized for efficiency: it contains 2.38 million parameters, approximately 6.2% of the total model parameters, and requires 103.2 MFLOPs, approximately 2.1% of the total computational cost. The final feature representation is obtained through a weighted summation of multi-scale features (Eq. 1 and Eq. 2), which mathematically maximizes the information entropy of the fused feature vector.

Results and Discussions: Experiments are conducted on six public palmprint datasets: IITD, MPD, NTU-CP, REST, CASIA, and BMPD. Under ideal conditions with accurate ROI localization, ROI3Net demonstrates superior performance compared with state-of-the-art single-scale models. For instance, a Rank-1 accuracy of 99.90% is achieved on the NTU-CP dataset, and a Rank-1 accuracy of 90.17% is achieved on the challenging REST dataset (Table 1). Model robustness is further evaluated by introducing a random 10% localization offset. Under this condition, conventional models exhibit substantial performance degradation. For example, the Equal Error Rate (EER) of the CO3Net model on NTU-CP increases from 2.54% to 15.66%. In contrast, ROI3Net maintains stable performance, with the EER increasing only from 1.96% to 5.01% (Fig. 7, Table 2). The effect of affine transformations, including rotation (±30°) and scaling (0.85× to 1.15×), is also analyzed. Rotation causes feature distortion because standard convolution operations lack rotation invariance, whereas the proposed multi-scale mechanism effectively compensates for translation errors by expanding the receptive field (Table 3).
Generalization experiments further confirm that embedding this mechanism into existing models, including CCNet, CO3Net, and RLANN, significantly improves robustness (Table 6). In terms of efficiency, although the theoretical computational load increases by approximately 150%, the actual GPU inference time increases by only about 20% (6.48 ms) because the multi-scale branches are processed independently and in parallel (Table 7).

Conclusions: A Multi-scale ROI Feature Fusion Mechanism is presented to reduce the sensitivity of palmprint recognition systems to localization errors. By employing a lightweight Weight Prediction Network to adaptively fuse features extracted from different ROI scales, the proposed ROI3Net effectively combines fine-grained texture details with global semantic information. Experimental results confirm that this approach significantly improves robustness to translation errors by recovering truncated texture information, while the efficient design of the Weight Prediction Network limits computational overhead. The proposed mechanism also exhibits strong generalization ability when integrated into different backbone networks. This study provides a practical and resilient solution for palmprint recognition in unconstrained environments. Future work will explore non-linear fusion strategies, such as graph neural networks, to further exploit cross-scale feature interactions.
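The multi-scale cropping and weighted summation described in Methods can be illustrated with a minimal, dependency-free sketch. Eq. 1 and Eq. 2 are not reproduced in this abstract, so the details here are assumptions rather than the authors' formulation: the three ROIs are taken as square crops sharing one centre, the weights are normalised with a softmax, and the names `scaled_roi_boxes`, `softmax`, and `fuse_features` are hypothetical. The feature vectors and weight logits stand in for the outputs of the Feature Extraction Network and the Weight Prediction Network.

```python
import math
from typing import List, Sequence, Tuple

# Scale factors quoted in the abstract (1.00x, 1.25x, 1.50x).
SCALES = (1.00, 1.25, 1.50)

Box = Tuple[float, float, float, float]


def scaled_roi_boxes(cx: float, cy: float, side: float,
                     scales: Sequence[float] = SCALES) -> List[Box]:
    """Return (left, top, right, bottom) boxes for each scale.

    Assumption: every scale is a square crop around the same centre (cx, cy).
    """
    boxes = []
    for s in scales:
        half = side * s / 2.0
        boxes.append((cx - half, cy - half, cx + half, cy + half))
    return boxes


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax; an assumed way to normalise scale weights."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_features(features: Sequence[Sequence[float]],
                  logits: Sequence[float]) -> List[float]:
    """Weighted sum of per-scale feature vectors (one vector per ROI scale)."""
    if len(features) != len(logits):
        raise ValueError("need exactly one weight logit per scale")
    dim = len(features[0])
    if any(len(f) != dim for f in features):
        raise ValueError("all feature vectors must have the same length")
    weights = softmax(logits)
    return [sum(w * f[i] for w, f in zip(weights, features)) for i in range(dim)]


# Toy usage: three 2-D "features" with equal logits reduce to a plain average.
boxes = scaled_roi_boxes(cx=100, cy=100, side=80)
fused = fuse_features([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], [0.0, 0.0, 0.0])
print(boxes)
print(fused)
```

When one logit dominates, the fused vector approaches the feature of that scale, which is how a predicted weight vector can suppress a scale that was degraded by a localization offset.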

Key words:

- Palmprint recognition

- Multi-scale feature fusion

- Deep learning


Table 1 Experimental results under accurate localization (%)

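| Method | IITD EER | IITD Rank-1 | MPD EER | MPD Rank-1 | NTU-CP EER | NTU-CP Rank-1 | REST EER | REST Rank-1 | CASIA EER | CASIA Rank-1 | BMPD EER | BMPD Rank-1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Proposed model | 3.60 | 99.00 | 4.97 | 99.90 | 1.96 | 99.90 | 8.59 | 90.17 | 1.21 | 99.90 | 6.51 | 99.88 |
| CompNet | 6.32 | 98.61 | 8.36 | 99.90 | 3.50 | 99.65 | 12.70 | 87.66 | 1.26 | 99.90 | 8.89 | 99.62 |
| CCNet | 5.67 | 99.00 | 7.46 | 99.86 | 2.63 | 99.65 | 10.84 | 86.89 | 1.31 | 99.90 | 8.77 | 99.75 |
| CO3Net | 5.73 | 99.00 | 8.47 | 99.82 | 2.54 | 99.74 | 13.63 | 84.29 | 1.84 | 99.77 | 10.21 | 99.75 |
| DCPV | 8.65 | 95.69 | 11.32 | 99.71 | 7.37 | 98.89 | 19.97 | 80.88 | 4.87 | 99.36 | 13.80 | 99.25 |
| RLANN | 4.68 | 99.00 | 7.18 | 99.78 | 2.78 | 99.48 | 16.13 | 82.27 | 1.77 | 99.80 | 11.01 | 99.62 |
| PalmALNet | 6.23 | 95.15 | 19.42 | 96.73 | 7.68 | 97.53 | 19.88 | 81.66 | 2.51 | 99.53 | 14.54 | 98.75 |
| MTCC | 5.57 | 97.62 | 8.71 | 99.72 | 4.42 | 99.57 | 16.05 | 82.93 | 2.37 | 99.80 | 13.91 | 99.38 |

Table 2 Experimental results with localization errors (%)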

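| Method | IITD EER | IITD Rank-1 | MPD EER | MPD Rank-1 | NTU-CP EER | NTU-CP Rank-1 | REST EER | REST Rank-1 | CASIA EER | CASIA Rank-1 | BMPD EER | BMPD Rank-1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Proposed model | 10.15 | 90.53 | 6.33 | 99.58 | 5.01 | 96.76 | 11.76 | 87.21 | 2.70 | 99.43 | 10.60 | 99.32 |
| CompNet | 30.22 | 57.92 | 12.49 | 99.08 | 14.52 | 68.42 | 20.72 | 79.52 | 7.01 | 98.63 | 16.72 | 98.12 |
| CCNet | 27.60 | 61.76 | 12.86 | 97.96 | 10.11 | 80.93 | 18.76 | 81.37 | 6.00 | 98.73 | 15.98 | 97.12 |
| CO3Net | 28.36 | 61.76 | 13.56 | 97.96 | 15.66 | 58.55 | 22.26 | 75.27 | 8.10 | 97.09 | 17.73 | 96.37 |
| DCPV | 29.51 | 38.61 | 18.86 | 92.68 | 20.53 | 44.25 | 21.50 | 76.83 | 14.27 | 87.83 | 18.37 | 93.37 |
| RLANN | 14.16 | 75.84 | 9.98 | 98.82 | 11.36 | 75.40 | 19.87 | 80.62 | 3.21 | 99.13 | 16.81 | 97.00 |
| PalmALNet | 17.31 | 29.15 | 17.55 | 70.91 | 13.08 | 71.23 | 18.79 | 81.26 | 4.04 | 97.96 | 16.60 | 95.75 |
| MTCC | 22.68 | 30.08 | 11.83 | 99.20 | 13.34 | 90.98 | 22.74 | 78.72 | 3.52 | 99.06 | 14.98 | 97.25 |

Table 3 Accuracy of the proposed model under different conditions (%)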

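| Condition | IITD EER | IITD Rank-1 | MPD EER | MPD Rank-1 | NTU-CP EER | NTU-CP Rank-1 | REST EER | REST Rank-1 | CASIA EER | CASIA Rank-1 | BMPD EER | BMPD Rank-1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Accurate localization | 3.60 | 99.00 | 4.97 | 99.90 | 1.96 | 99.90 | 8.59 | 90.17 | 1.21 | 99.90 | 6.51 | 99.88 |
| Localization error | 10.15 | 90.53 | 6.33 | 99.58 | 5.01 | 96.76 | 11.76 | 87.21 | 2.70 | 99.43 | 10.60 | 99.32 |
| Affine transformation | 20.59 | 73.46 | 8.28 | 98.21 | 7.99 | 90.13 | 20.67 | 64.14 | 5.14 | 98.33 | 18.21 | 92.13 |

Table 4 Performance comparison of different methods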

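| Method | Computational cost (M) | Parameters (M) | GPU runtime (ms) |
|---|---|---|---|
| Proposed model | 4927.14 | 38.44 | 6.48 |
| CompNet | 1053.19 | 15.04 | 4.98 |
| CCNet | 1688.97 | 62.52 | 10.01 |
| CO3Net | 2302.40 | 79.63 | 10.70 |
| DCPV | 2134.62 | 68.74 | 9.54 |
| RLANN | 2450.40 | 43.35 | 7.42 |
| PalmALNet | 2030.75 | 28.62 | 6.92 |
| MTCC | 640.55 | 4.43 | 2.84 |

Table 5 EER results of the scale ablation (%)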

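| Scales used (1.00, 1.25, 1.50, 1.75) | IITD | MPD | REST | BMPD |
|---|---|---|---|---|
| √ | 5.60 | 7.67 | 13.32 | 11.39 |
| √ | 5.87 | 7.91 | 13.82 | 10.55 |
| √ | 6.05 | 8.01 | 14.37 | 11.88 |
| √ √ | 4.81 | 5.34 | 10.29 | 8.74 |
| √ √ | 5.10 | 5.73 | 10.14 | 7.49 |
| √ √ | 4.95 | 5.38 | 9.11 | 8.93 |
| √ √ √ | 3.60 | 4.97 | 8.59 | 6.51 |
| √ √ √ √ | 4.26 | 5.16 | 8.12 | 7.84 |

Table 6 Performance improvement from the multi-scale ROI feature fusion mechanism on different models (%)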

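| Method | IITD EER | IITD Rank-1 | MPD EER | MPD Rank-1 | NTU-CP EER | NTU-CP Rank-1 | REST EER | REST Rank-1 | CASIA EER | CASIA Rank-1 | BMPD EER | BMPD Rank-1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CCNet | ↓15.44 | ↑27.08 | ↓4.02 | ↑0.51 | ↓3.45 | ↑13.36 | ↓5.60 | ↑4.54 | ↓2.75 | ↑0.48 | ↓2.71 | ↑1.71 |
| CO3Net | ↓15.78 | ↑24.52 | ↓4.58 | ↑0.51 | ↓10.80 | ↑38.87 | ↓6.94 | ↑8.14 | ↓4.28 | ↑1.93 | ↓2.81 | ↑2.25 |
| RLANN | ↓4.34 | ↑15.48 | ↓3.00 | ↑0.41 | ↓5.61 | ↑20.02 | ↓2.34 | ↑0.66 | ↓0.40 | ↑0.30 | ↓2.30 | ↑1.45 |

Table 7 Overhead introduced by the multi-scale ROI feature fusion mechanism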

| Method | Computational cost (M) | Parameters (M) | GPU runtime (ms) |
|---|---|---|---|
| CCNet | ↑3443.42 | ↑6.46 | ↑2.22 |
| CO3Net | ↑4670.28 | ↑10.46 | ↑3.50 |
| RLANN | ↑5004.04 | ↑2.91 | ↑1.05 |
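Two of the headline figures can be rechecked with simple arithmetic on the reported numbers. The sketch below copies the NTU-CP EER columns from Tables 1 and 2, and takes the Weight Prediction Network's 2.38 M parameters and 103.2 MFLOPs from the abstract together with the proposed model's totals from Table 4. The helper names `eer_increase` and `share_percent` are hypothetical, and reading the Table 4 computational cost as MFLOPs is an assumption based on the abstract's wording.

```python
# NTU-CP EER (%) as (accurate localization, localization error),
# copied from Table 1 and Table 2.
NTU_CP_EER = {
    "Proposed model": (1.96, 5.01),
    "CompNet": (3.50, 14.52),
    "CCNet": (2.63, 10.11),
    "CO3Net": (2.54, 15.66),
    "DCPV": (7.37, 20.53),
    "RLANN": (2.78, 11.36),
    "PalmALNet": (7.68, 13.08),
    "MTCC": (4.42, 13.34),
}


def eer_increase(before: float, after: float) -> float:
    """Absolute EER increase in percentage points, rounded to 2 decimals."""
    return round(after - before, 2)


def share_percent(part: float, total: float) -> float:
    """Share of `part` in `total`, as a percentage rounded to 2 decimals."""
    return round(100.0 * part / total, 2)


increases = {name: eer_increase(*pair) for name, pair in NTU_CP_EER.items()}
for name, delta in sorted(increases.items(), key=lambda kv: kv[1]):
    print(f"{name:15s} +{delta:.2f} points")

# Weight Prediction Network share of the proposed model (abstract + Table 4).
param_share = share_percent(2.38, 38.44)     # parameters, in millions
flop_share = share_percent(103.2, 4927.14)   # computational cost, in MFLOPs
print(f"parameter share: {param_share}%  compute share: {flop_share}%")
```

The proposed model shows the smallest EER increase on NTU-CP (3.05 points, against 13.12 points for CO3Net), and the computed shares agree with the approximately 6.2% and 2.1% quoted in the abstract.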

