A Distributed Multi-Satellite Collaborative Framework for Remote Sensing Scene Classification


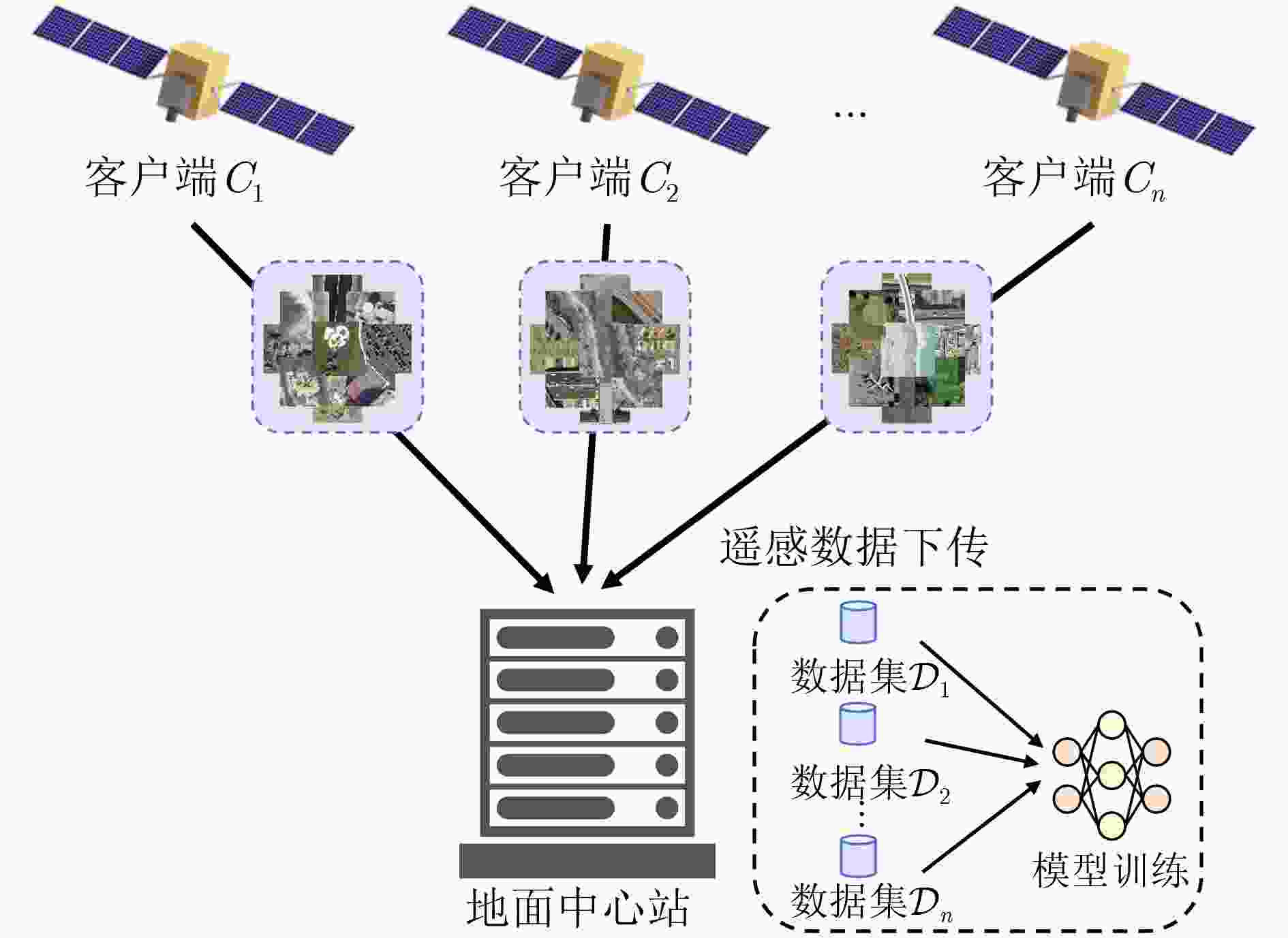

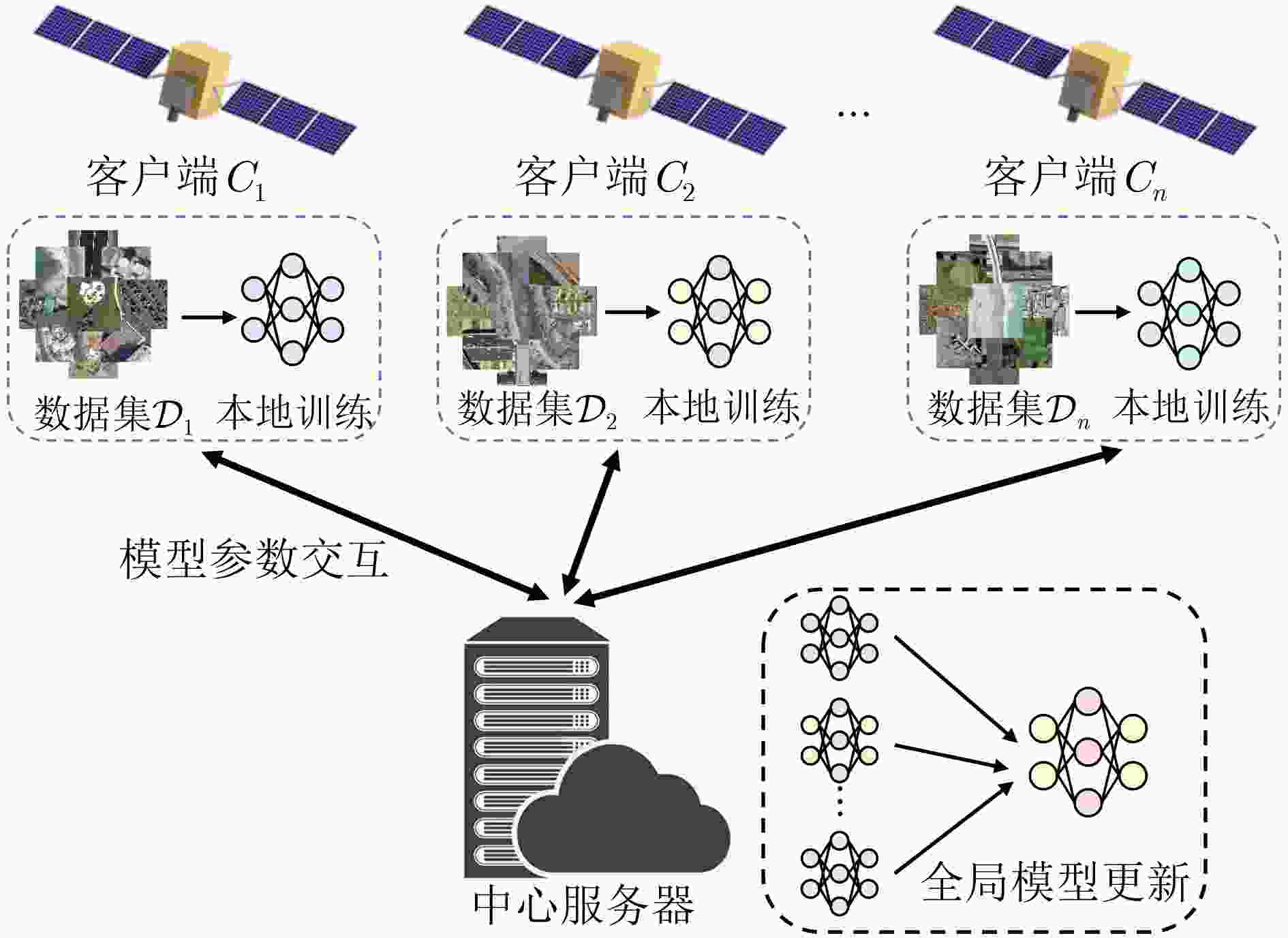

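Summary: With the rapid development of aerospace information technology, satellite remote sensing platforms face a growing demand for efficient processing and intelligent interpretation of massive data. Traditional centralized remote sensing scene classification methods must return data to a ground center for centralized processing and training. Constrained by communication bandwidth, transmission delay, and link stability, they struggle to meet the high-timeliness and low-communication-load requirements of the "aerospace information era". To address this problem, this paper proposes a distributed multi-satellite collaborative remote sensing scene classification method based on federated learning. Each satellite keeps its remote sensing data local and completes local model training independently. Only the updated model parameters are uploaded to a central node for global aggregation, and the optimized global model parameters are then sent back to the satellites for further iteration, enabling joint modeling and collaborative inference across satellites. In addition, parameter consensus is carried out over direct inter-satellite links, after which the central node selects a representative node to take part in global aggregation. This reduces the transmission load on satellite-to-ground links, lowers communication overhead, and improves system scalability. Experimental results on the NWPU-RESISC45 and UC-Merced datasets show that the method outperforms existing mainstream algorithms in classification accuracy, communication efficiency, and model robustness, verifying its effectiveness and application potential in multi-satellite collaborative remote sensing scene classification.

Abstract: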

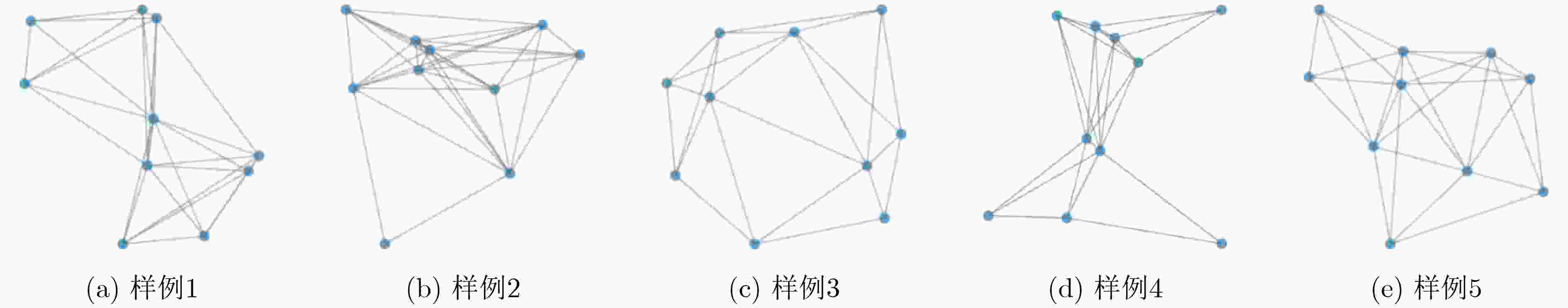

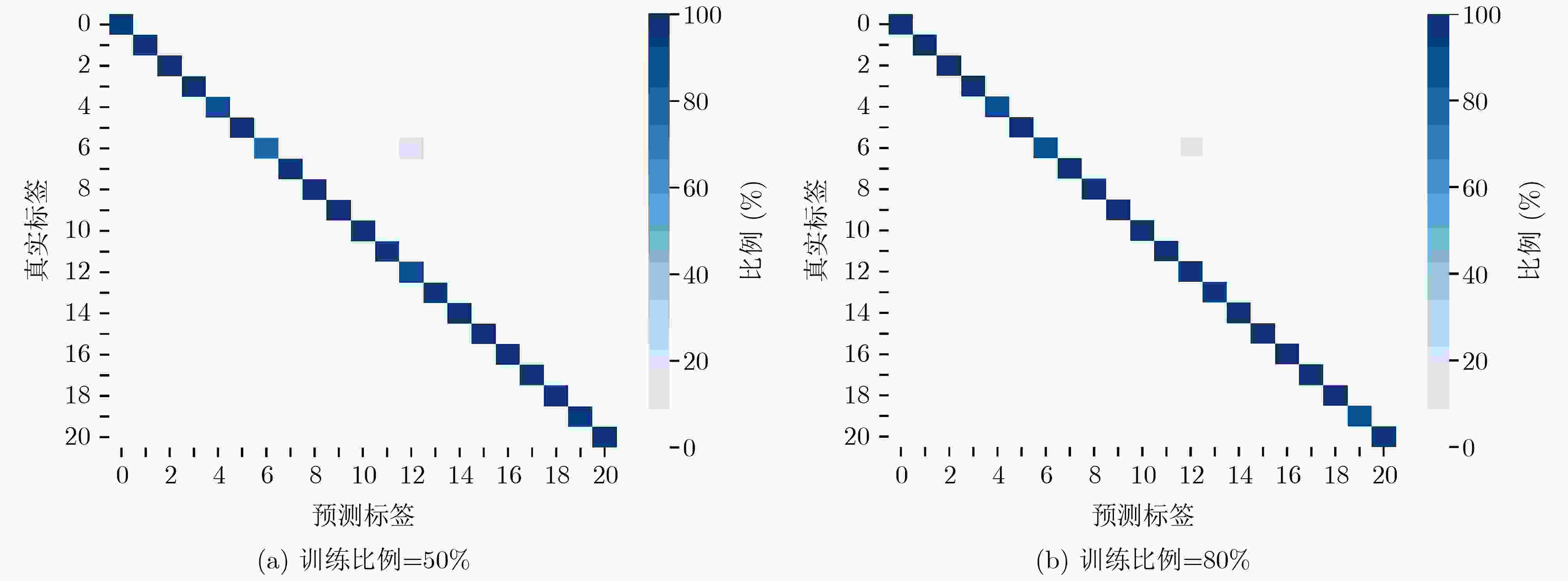

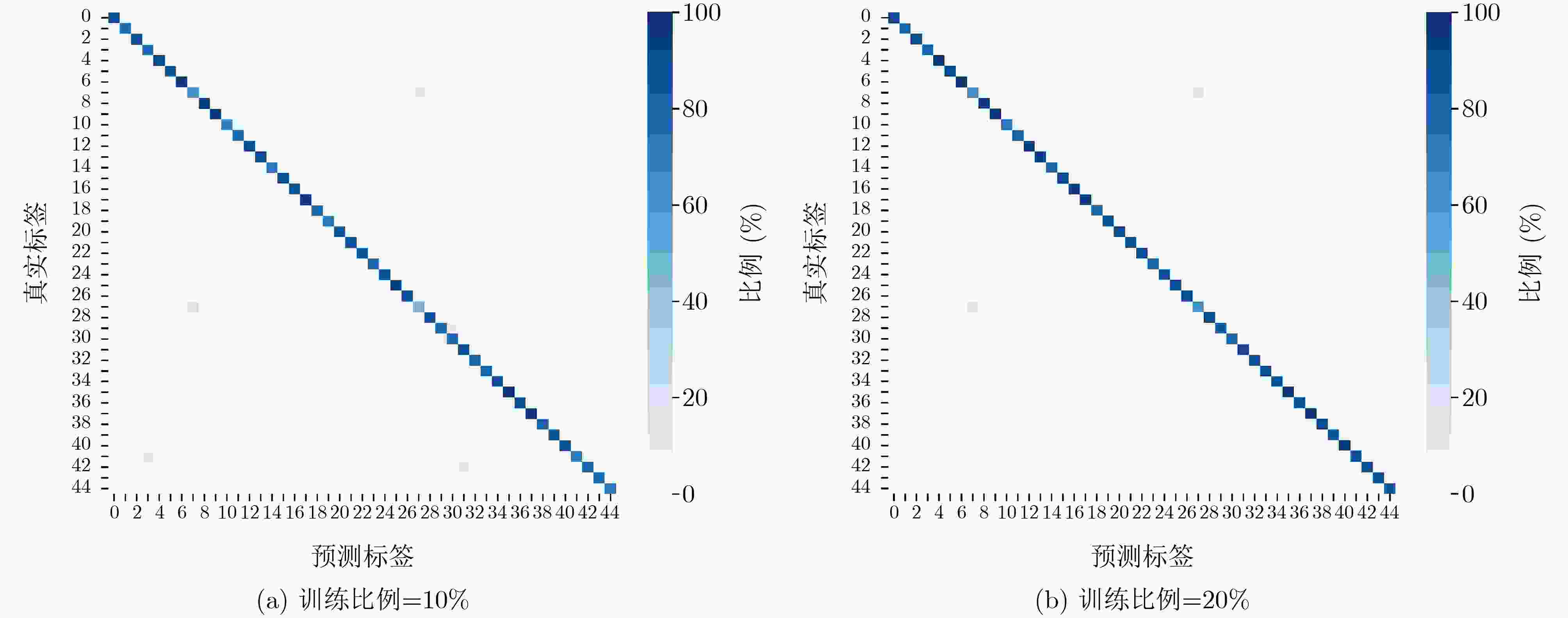

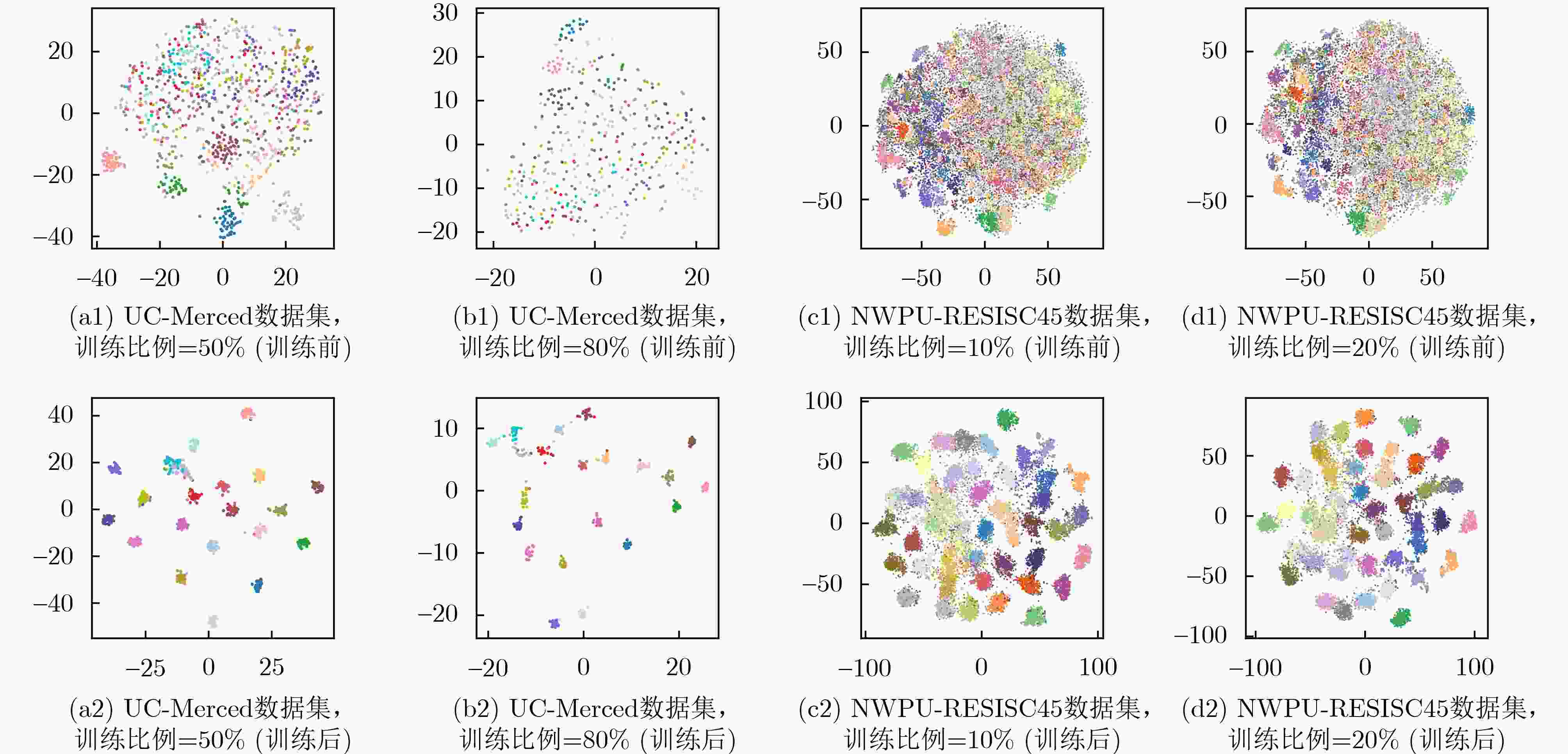

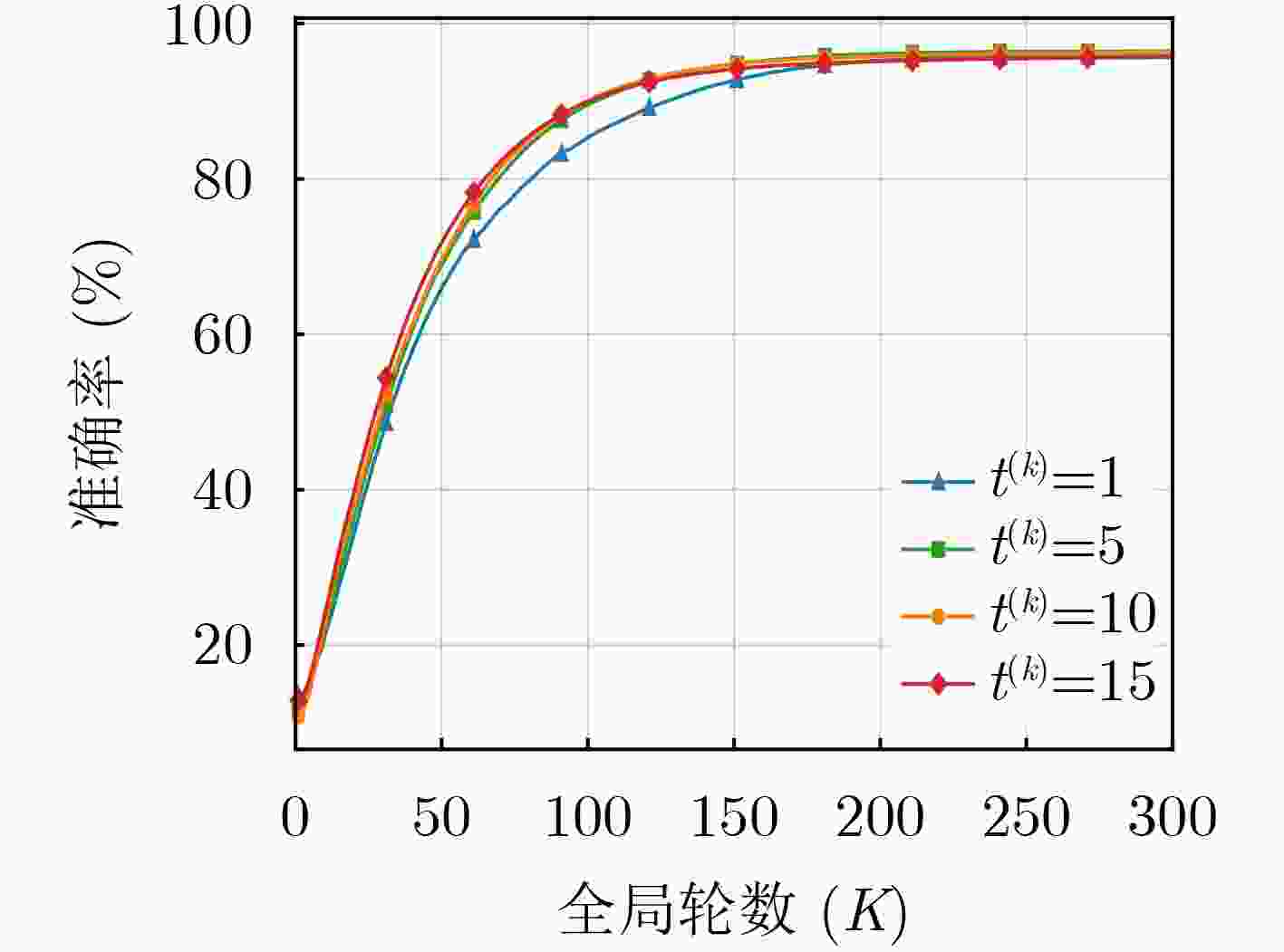

Objective: With the rapid development of space technologies, satellites generate large volumes of Remote Sensing (RS) data. Scene classification, a fundamental task in RS interpretation, is essential for earth observation applications. Although Deep Learning (DL) improves classification accuracy, most existing methods rely on centralized architectures. This design allows unified management but faces limited bandwidth, high latency, and privacy risks, which restrict scalability in multi-satellite settings. With increasing demand for distributed computation, Federated Learning (FL) has received growing attention in RS. Research on FL for RS scene classification, however, remains at an early stage. This study proposes a distributed collaborative framework for multi-satellite scene classification that applies efficient parameter aggregation to reduce communication overhead while preserving accuracy.

Methods: An FL-based framework is proposed for multi-satellite RS scene classification. Each satellite conducts local training while raw data remain stored locally to preserve privacy. Only updated model parameters are transmitted to a central server for global aggregation. The optimized global model is then broadcast to the satellites to enable joint modeling and inference. To reduce the high communication cost of space-to-ground links, an inter-satellite communication mechanism is added: satellites first reach parameter consensus over inter-satellite links, and the central server then selects a representative satellite for global aggregation. This design lowers communication overhead and strengthens scalability. The effect of parameter consensus on global convergence is theoretically analyzed, and an upper bound on the convergence error is derived to provide a rigorous convergence guarantee and support practical applicability.

Results and Discussions: Comparative experiments are conducted on the UC-Merced and NWPU-RESISC45 datasets (Table 2, Table 3) to evaluate the proposed framework. The method consistently shows higher accuracy than centralized training, FedAvg, and FedProx under different client numbers and training ratios. On UC-Merced, Overall Accuracy (OA) reaches 96.68% at a 50% training ratio with 2 clients and 97.49% at an 80% training ratio with 10 clients. On NWPU-RESISC45, OA reaches 83.64% at a 10% training ratio with 5 clients and 88.41% at a 20% training ratio with 10 clients, both exceeding the baseline methods. Confusion matrices (Fig. 4, Fig. 5) show clear diagonal dominance and only minor confusions, and t-SNE visualizations (Fig. 6) show compact intra-class clusters and well-separated inter-class distributions, indicating strong generalization even under lower training ratios. Communication energy analysis (Table 4) shows high efficiency. On UC-Merced with a 50% training ratio, the communication cost is 1.30 kJ, more than 60% lower than that of FedAvg and FedProx. On NWPU-RESISC45, substantial savings are also observed at both training ratios.

Conclusions: This study proposes an FL-based framework for multi-satellite RS scene classification and addresses limitations of centralized training, including restricted bandwidth, high latency, and privacy concerns. By allowing satellites to conduct local training and applying central aggregation with inter-satellite consensus, the framework achieves collaborative modeling with high communication efficiency. Evaluations on UC-Merced and NWPU-RESISC45 verify the effectiveness of the method. On UC-Merced with an 80% training ratio and 10 clients, OA reaches 97.49%, which is 1.85, 0.60, and 0.81 percentage points higher than centralized training, FedAvg, and FedProx, respectively. On NWPU-RESISC45 with a 20% training ratio, the communication energy cost is 5.88 kJ, a reduction of 57.45% and 58.18% compared with FedAvg and FedProx, respectively. These results indicate strong generalization and efficiency across different data scales and training ratios. The framework is suited to bandwidth-limited and dynamic space environments and offers a promising direction for distributed RS applications. Future work will examine cross-task transfer learning to improve adaptability and generalization under multi-task and heterogeneous data conditions.
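The training loop described under Methods (local training, inter-satellite consensus, selection of one representative, broadcast) can be illustrated with a toy example. This is only a minimal sketch under stated assumptions, not the authors' implementation: the scalar model, the least-squares `local_update`, the ring-shaped link graph, and the linear averaging rule with step size `eps` are all hypothetical stand-ins, since the paper's own update equations are not reproduced in this section.

```python
import random


def local_update(theta, data, lr=0.1, steps=5):
    """Hypothetical LocalUpdate: gradient steps on the loss mean((theta - x)^2) / 2."""
    for _ in range(steps):
        grad = sum(theta - x for x in data) / len(data)
        theta -= lr * grad
    return theta


def ring_topology(n):
    """Assumed inter-satellite graph: every client is linked to its ring neighbours."""
    return {i: sorted({(i - 1) % n, (i + 1) % n} - {i}) for i in range(n)}


def consensus(params, neighbours, rounds, eps=0.3):
    """Assumed linear consensus: h_n(t+1) = h_n(t) + eps * sum_j (h_j(t) - h_n(t))."""
    h = list(params)
    for _ in range(rounds):
        # Build the new list from the old one so all clients update synchronously.
        h = [h[n] + eps * sum(h[j] - h[n] for j in neighbours[n])
             for n in range(len(h))]
    return h


def train(datasets, num_rounds, consensus_rounds, seed=0):
    """Run the federated loop and return the final global parameter."""
    rng = random.Random(seed)
    n = len(datasets)
    neighbours = ring_topology(n)
    theta = 0.0  # global model initialised by the central server
    for _ in range(num_rounds):
        # Each client starts from the broadcast global model and trains locally.
        local = [local_update(theta, d) for d in datasets]
        # Clients exchange parameters over inter-satellite links only.
        agreed = consensus(local, neighbours, consensus_rounds)
        # The server pulls the parameters of one randomly chosen client.
        theta = agreed[rng.randrange(n)]
    return theta


if __name__ == "__main__":
    toy_data = [[1.0, 1.2], [2.0, 2.2], [3.0, 3.2]]
    print(train(toy_data, num_rounds=40, consensus_rounds=30))
```

Because only one client uploads per round, the satellite-to-ground traffic in this sketch does not grow with the number of clients. The price is that the selected client's parameters only approximate the network average when the number of consensus rounds is small.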

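Algorithm 1 Multi-satellite collaborative remote sensing image scene classification based on a parameter consensus mechanism

(1) Input: number of clients $ N $, number of communication rounds $ K $, number of parameter consensus rounds $ {t}^{\left(k\right)} $, model parameters $ \boldsymbol{\theta} $
(2) Output: global model parameters $ \boldsymbol{\theta}^{(K)} $
(3) # Initialization
(4) The central server $ S $ initializes the global model parameters $ \boldsymbol{\theta}^{(0)} $ and broadcasts them to all clients $ \mathcal{C} $
(5) For each global communication round $ k=1,2,\cdots ,K $:
(6)   For each client $ {C}_{n} $, $ n=1,2,\cdots ,N $:
(7)     Receive $ \boldsymbol{\theta}^{(k-1)} $ and use it as the initial local model: $ \boldsymbol{\theta}_n^{(k)}=\boldsymbol{\theta}^{(k-1)} $
(8)     # Local training
(9)     Perform local training and update the local model parameters: $ \boldsymbol{\theta}_n^{(k)}\leftarrow\text{LocalUpdate}(\boldsymbol{\theta}_n^{(k)},\mathcal{D}_n) $
(10)   # Parameter consensus
(11)   Build the D2D communication topology graph $ {G}^{(k)}=({C}^{(k)},{\mathcal{E}}^{(k)}) $
(12)   For each parameter consensus round $ t=1,2,\cdots ,{t}^{\left(k\right)} $:
(13)     Each client updates its model parameters $ \boldsymbol{h}_n(t+1) $ according to Eq. (4)
(14)   Obtain the post-consensus model parameters $ \hat{\boldsymbol{\theta}}_n^{(k)} $ according to Eq. (5)
(15)   # Global model update
(16)   The central server $ S $ randomly selects a client $ {C}_{m} $ and takes its model parameters as the new global model: $ \boldsymbol{\theta}^{(k)}=\hat{\boldsymbol{\theta}}_m^{\left(k\right)} $
(17)   # Parameter broadcast
(18)   The central server $ S $ broadcasts the updated global model parameters $ \boldsymbol{\theta}^{(k)} $ to all clients $ \mathcal{C} $ for the next round of local training
(19) Return the final global model parameters $ \boldsymbol{\theta}^{(K)} $

Table 1 Details of the UC-Merced and NWPU-RESISC45 remote sensing scene classification datasets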

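| Dataset | Number of classes | Images per class | Spatial resolution (m) | Training/test ratio (%) |
| --- | --- | --- | --- | --- |
| UC-Merced | 21 | 100 | 0.3 | 50/50, 80/20 |
| NWPU-RESISC45 | 45 | 700 | 0.2 to 30 | 10/90, 20/80 |

Table 2 OA results (%) of multi-satellite collaborative remote sensing scene classification on the UC-Merced dataset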

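| Training ratio (%) | Algorithm | $ N $=2 | $ N $=3 | $ N $=4 | $ N $=5 | $ N $=6 | $ N $=7 | $ N $=8 | $ N $=9 | $ N $=10 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 50 | Centralized training | 93.96 | | | | | | | | |
| 50 | FedAvg | 95.46 | 95.22 | 95.05 | 94.88 | 93.94 | 94.12 | 94.03 | 94.62 | 94.55 |
| 50 | FedProx | 95.63 | 95.41 | 95.32 | 95.21 | 94.98 | 94.83 | 94.79 | 94.73 | 94.62 |
| 50 | Proposed method | 96.68 | 96.54 | 96.42 | 96.39 | 96.25 | 96.42 | 96.31 | 96.47 | 95.34 |
| 80 | Centralized training | 95.64 | | | | | | | | |
| 80 | FedAvg | 97.14 | 96.92 | 96.78 | 96.65 | 96.52 | 96.35 | 96.13 | 96.97 | 96.89 |
| 80 | FedProx | 97.31 | 97.05 | 96.88 | 96.74 | 96.81 | 96.52 | 96.38 | 96.76 | 96.68 |
| 80 | Proposed method | 98.38 | 98.26 | 98.19 | 98.12 | 98.06 | 97.92 | 97.88 | 97.63 | 97.49 |

Centralized training does not involve clients, so a single OA value is reported for each training ratio; $ N $ denotes the number of clients.

Table 3 OA results (%) of multi-satellite collaborative remote sensing scene classification on the NWPU-RESISC45 dataset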

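| Training ratio (%) | Algorithm | $ N $=2 | $ N $=3 | $ N $=4 | $ N $=5 | $ N $=6 | $ N $=7 | $ N $=8 | $ N $=9 | $ N $=10 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 10 | Centralized training | 80.73 | | | | | | | | |
| 10 | FedAvg | 81.03 | 80.84 | 80.52 | 80.26 | 79.74 | 79.18 | 78.89 | 78.61 | 78.42 |
| 10 | FedProx | 80.96 | 80.62 | 80.29 | 80.02 | 79.55 | 79.12 | 78.86 | 78.70 | 78.64 |
| 10 | Proposed method | 84.23 | 84.11 | 83.92 | 83.64 | 83.76 | 83.59 | 83.73 | 83.84 | 83.93 |
| 20 | Centralized training | 88.12 | | | | | | | | |
| 20 | FedAvg | 86.92 | 86.55 | 86.18 | 85.72 | 85.47 | 85.03 | 84.61 | 84.29 | 84.03 |
| 20 | FedProx | 86.74 | 86.42 | 86.01 | 85.63 | 85.28 | 84.87 | 84.50 | 84.33 | 84.18 |
| 20 | Proposed method | 88.64 | 88.43 | 88.27 | 87.96 | 88.29 | 88.12 | 88.35 | 88.44 | 88.41 |

Centralized training does not involve clients, so a single OA value is reported for each training ratio; $ N $ denotes the number of clients.

Table 4 Communication energy cost ($ \mathrm{kJ} $) of multi-satellite collaborative remote sensing scene classification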

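| Dataset | Training ratio (%) | FedAvg | FedProx | Proposed method |
| --- | --- | --- | --- | --- |
| UC-Merced | 50 | 3.52 | 3.27 | 1.30 |
| UC-Merced | 80 | 2.76 | 2.26 | 1.22 |
| NWPU-RESISC45 | 10 | 15.57 | 15.07 | 6.73 |
| NWPU-RESISC45 | 20 | 13.82 | 14.06 | 5.88 |

Table 5 OA results (%) of multi-satellite collaborative remote sensing scene classification under different data distributions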

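| Dataset | Training ratio (%) | Independent and identically distributed (IID) | Non-independent and identically distributed (Non-IID) |
| --- | --- | --- | --- |
| UC-Merced | 50 | 95.34 | 95.13 |
| UC-Merced | 80 | 97.49 | 97.32 |
| NWPU-RESISC45 | 10 | 83.93 | 81.69 |
| NWPU-RESISC45 | 20 | 88.41 | 86.10 |

Table 6 OA results (%) of multi-satellite collaborative remote sensing scene classification under different numbers of consensus rounds $ {t}^{(k)} $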

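| Dataset | Training ratio (%) | $ {t}^{(k)} $=1 | $ {t}^{(k)} $=5 | $ {t}^{(k)} $=10 | $ {t}^{(k)} $=15 |
| --- | --- | --- | --- | --- | --- |
| UC-Merced | 50 | 92.65 | 94.13 | 94.27 | 94.60 |
| UC-Merced | 80 | 93.47 | 95.96 | 95.98 | 96.33 |
| NWPU-RESISC45 | 10 | 79.93 | 80.64 | 81.13 | 81.25 |
| NWPU-RESISC45 | 20 | 83.69 | 85.13 | 85.57 | 86.08 |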
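The communication-energy reductions quoted in the abstract can be rechecked directly from the Table 4 values. The short sketch below does that arithmetic; the energy figures are copied from Table 4, while the function and variable names are illustrative and the relative-reduction formula (baseline minus proposed, divided by baseline) is assumed to be the one behind the quoted percentages.

```python
# Communication energy in kJ, copied from Table 4.
# Key: (dataset, training ratio in %). Value: (FedAvg, FedProx, proposed method).
ENERGY_KJ = {
    ("UC-Merced", 50): (3.52, 3.27, 1.30),
    ("UC-Merced", 80): (2.76, 2.26, 1.22),
    ("NWPU-RESISC45", 10): (15.57, 15.07, 6.73),
    ("NWPU-RESISC45", 20): (13.82, 14.06, 5.88),
}


def reduction_percent(baseline, proposed):
    """Relative saving of the proposed method with respect to a baseline, in percent."""
    return 100.0 * (baseline - proposed) / baseline


def reductions(table):
    """Map each setting to its (vs FedAvg, vs FedProx) savings, rounded to 2 decimals."""
    return {
        key: (round(reduction_percent(fedavg, ours), 2),
              round(reduction_percent(fedprox, ours), 2))
        for key, (fedavg, fedprox, ours) in table.items()
    }


if __name__ == "__main__":
    for (dataset, ratio), (vs_fedavg, vs_fedprox) in reductions(ENERGY_KJ).items():
        print(f"{dataset} @ {ratio}%: {vs_fedavg}% vs FedAvg, {vs_fedprox}% vs FedProx")
```

For NWPU-RESISC45 at a 20% training ratio this gives about 57.45% and 58.18%, matching the figures in the abstract. For UC-Merced at a 50% training ratio both savings come out slightly above 60%.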

