-

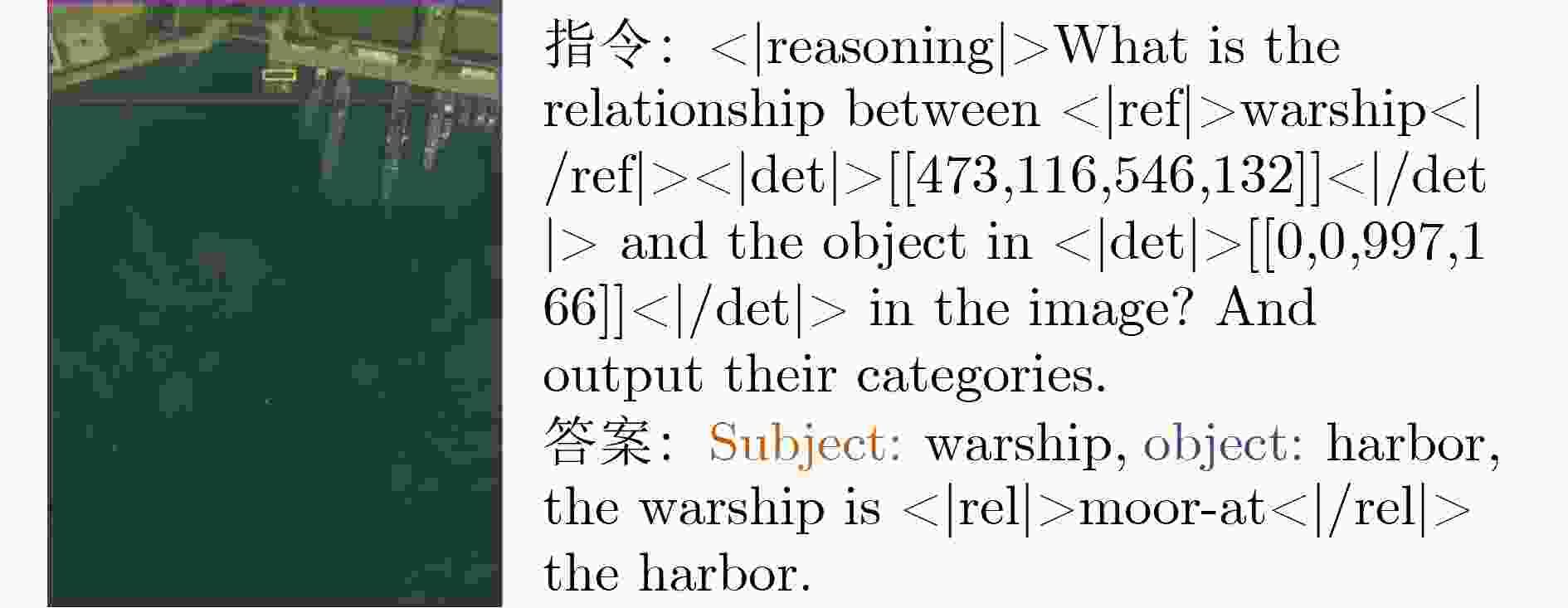

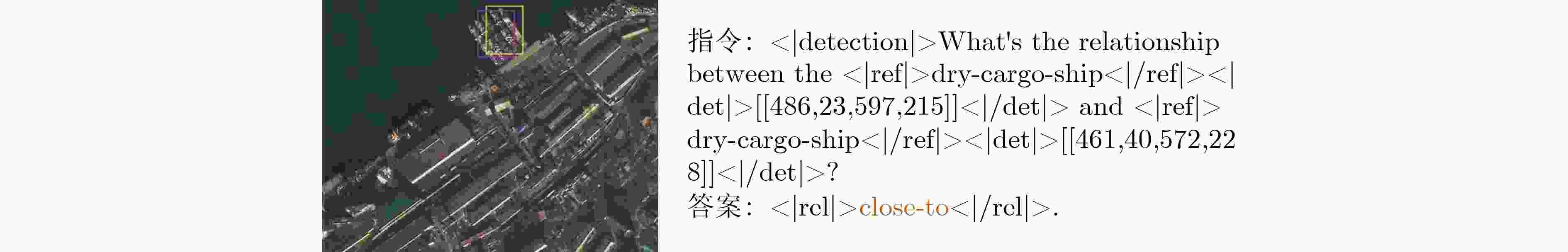

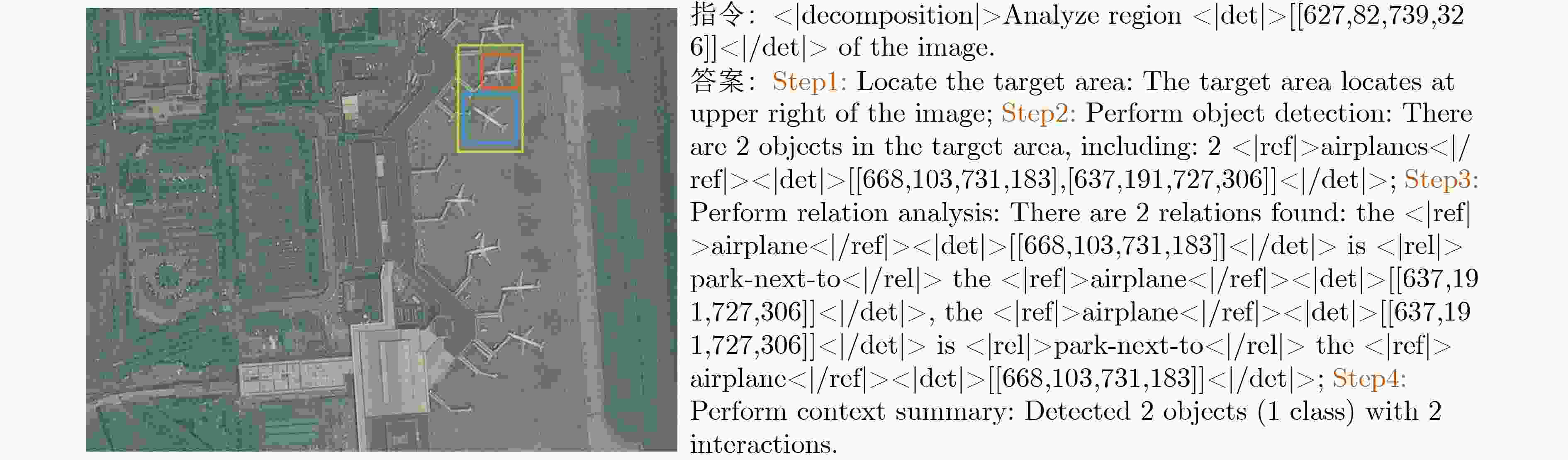

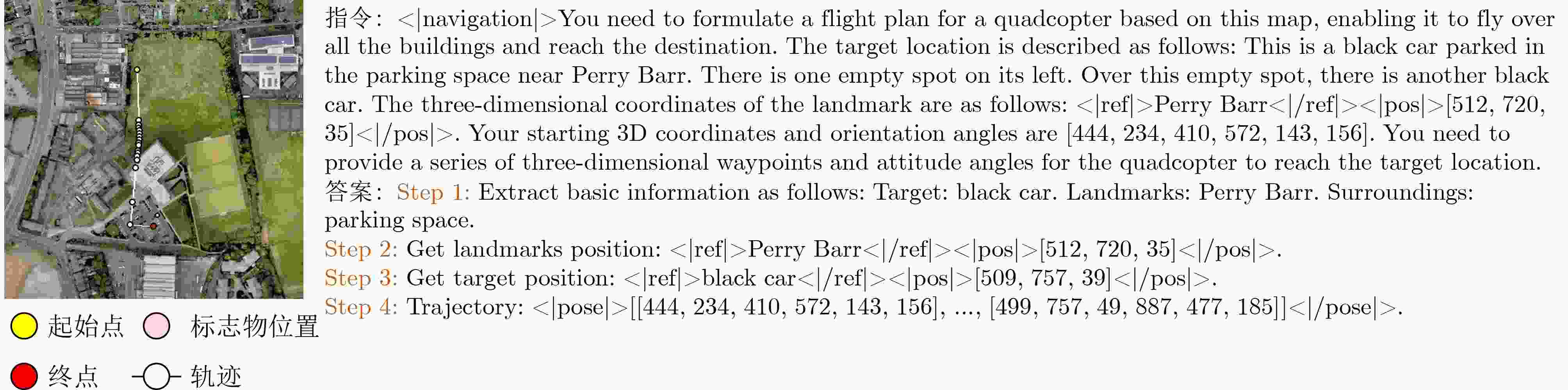

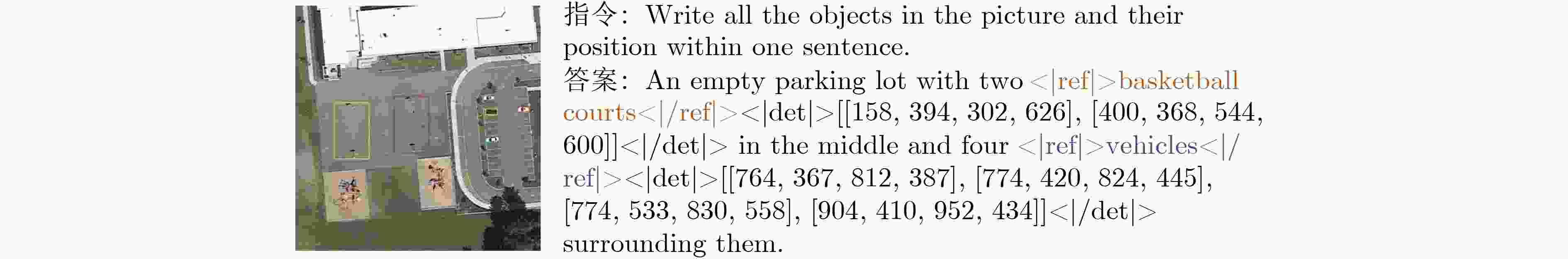

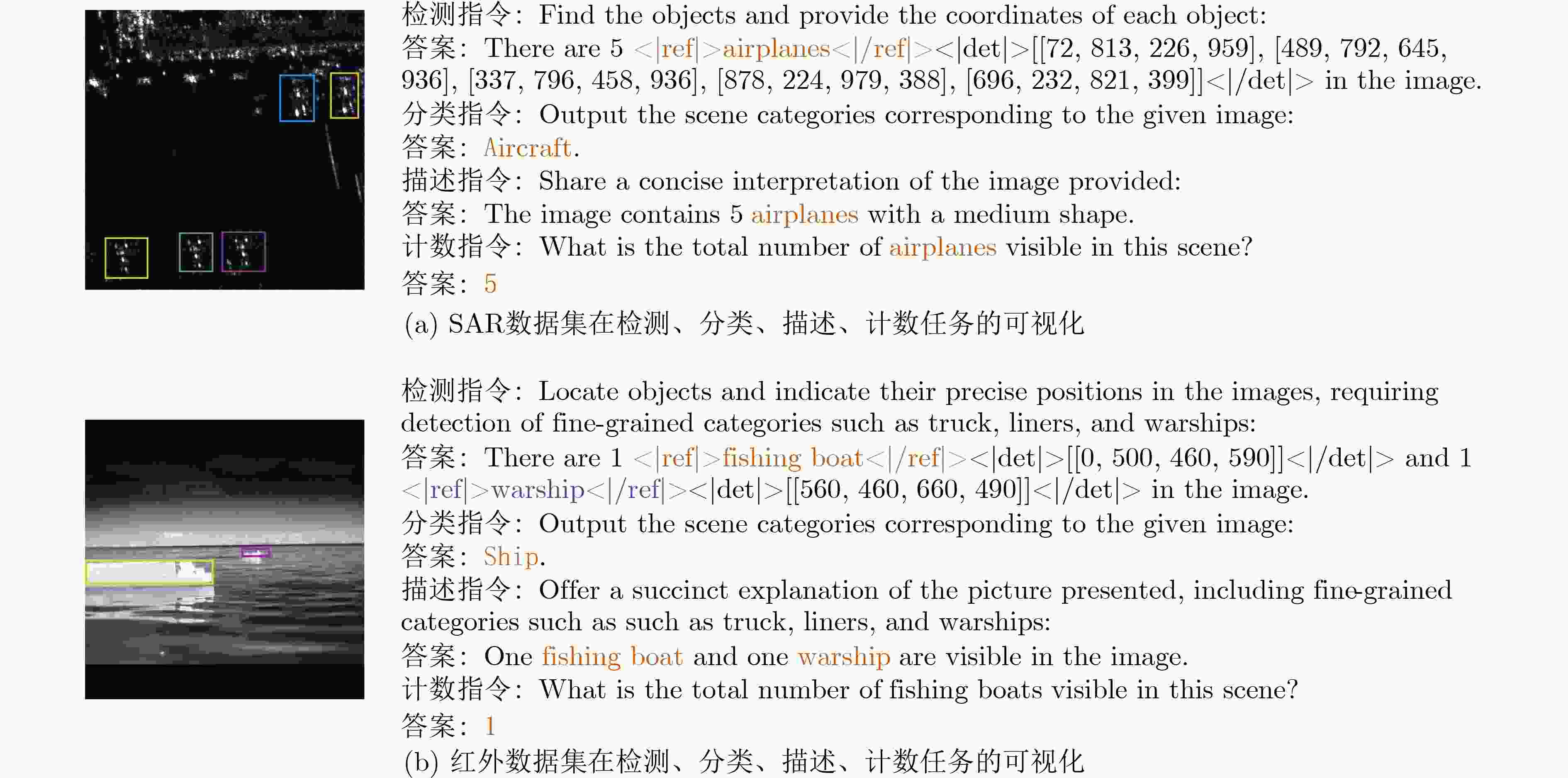

摘要: 随着遥感应用不断从静态图像分析迈向智能化认知决策任务,构建覆盖多任务、多模态的信息融合数据体系已成为推动遥感基础模型发展的关键前提。本文围绕遥感智能体中的感知、认知需求,提出并构建了一个面向多任务图文指令的遥感多模态数据集,系统组织图像、文本指令、空间坐标与行为轨迹等多模态信息,统一支撑多阶段任务链路的训练与评估。该数据集涵盖9类核心任务,包括关系推理、指令分解、任务调度、定位描述与多模态感知等,共计21个子数据集,覆盖光学、SAR与红外三类遥感模态,总体数据规模超过2百万样本。在数据构建过程中,本文针对遥感图像的特性设计了标准化的指令格式,提出统一的输入输出范式,确保不同任务间的互通性与可迁移性。同时,设计自动化数据生成与转换流程,以提升多模态样本生成效率与一致性。此外,本文还介绍了在遥感基础模型上的基线性能评估结果,验证了该数据集在多任务泛化学习中的实用价值。该数据集可广泛服务于遥感领域多模态基础模型的构建与评估,尤其适用于统一感知-认知-决策闭环流程的智能体模型开发,具有良好的研究推广价值与工程应用前景。

-

表 1 数据集整体统计

任务类型 数据模态 数据集名称 数量规模 任务调度 光学 Citynav[2] 32637 指令分解 光学 ReCon1M-DEC 27821 关系推理 光学 ReCon1M-REL 125000 关系检测 光学 ReCon1M-DET 120097 定位描述 光学 DIOR-GC 22921 光学 DOTA-GC 48866 多模态感知

(目标检测)光学 DIOR[5] 23463 SAR SARDet-100K[6] 116598 红外 IR-DET 56353 多模态感知

(图像描述)光学 DIOR-CAP 92875 光学 DOTA-CAP 307150 SAR SAR-CAP 582990 红外 IR-CAP 281765 多模态感知

(图像分类)光学 AID[13] 10000 光学 NWPU-RESISC45[14] 31500 SAR SAR-CLA 116597 红外 IR-CLA 56353 多模态感知

(目标计数)光学 DIOR-COUNT 35204 光学 DOTA-COUNT 78432 SAR IR-COUNT 107565 红外 SAR-COUNT 117803 总数 — — 2391990 表 2 关系推理数据集中各类别关系的样本分布统计表

类别名 训练集 测试集 类别名 训练集 测试集 类别名 训练集 测试集 类别名 训练集 测试集 above 69 5 dig 1 0 link-to 166 21 pull 5 1 adjacent-to 2799 289 dock-alone-at 4 1 load 9 0 sail-by 20 4 adjoint-with 3 1 dock-at 102 13 manage 35 4 sail-on 922 123 around 3 0 drive-at-the-different-lane 148 20 moor-at 3414 383 separate 31 1 belong-to 913 112 drive-at-the-same-lane 144 17 move-away-from 8 0 serve 1737 174 block 2 1 drive-on 4931 538 park-alone-at 14 3 slow-down 241 34 border 72 9 emit 1 0 park-next-to 30544 3389 supplement 334 50 close-to 24992 2657 enter 6 0 parked-at 15432 1735 supply 179 26 command 3 0 equipped-with 66 8 pass-under 3 0 support 79 7 connect 186 15 exit-from 5 1 pile-up-at 660 83 support-the-construction-of 82 9 contain 106 14 hoist 93 10 placed-on 29 2 taxi-on 226 22 converge 14 1 inside 7558 854 power 139 9 tow 10 3 cooperate-with 430 47 is-parallel-to 3516 402 prepared-for 81 14 transport 48 6 cross 1224 130 is-symmetric-with 4 1 provide-access-to 10584 1247 ventilate 44 2 cultivate 10 0 lie-under 13 1 provide-shuttle-service-to 6 1 表 3 关系检测数据集中各类别关系的样本分布统计表

类别名 训练集 测试集 类别名 训练集 测试集 类别名 训练集 测试集 类别名 训练集 测试集 above 73 7 dock-alone-at 5 0 manage 29 5 sail-by 22 3 adjacent-to 2575 297 dock-at 80 7 moor-at 3224 344 sail-on 636 78 adjoint-with 4 0 drive-at-the-different-lane 112 15 move-away-from 5 0 separate 39 4 around 4 2 drive-at-the-same-lane 154 18 park-alone-at 10 0 serve 1655 186 belong-to 768 66 drive-on 4819 545 park-next-to 29857 3249 slow-down 245 34 block 1 0 enter 8 0 parked-at 15308 1729 supplement 321 37 border 52 7 equipped-with 59 9 pass-under 3 0 supply 207 16 close-to 23942 2713 exit-from 5 0 pile-up-at 590 76 support 61 6 command 2 0 hoist 115 7 placed-on 25 0 support-the-construction-of 11 1 connect 169 16 inside 7556 860 power 99 9 taxi-on 155 13 contain 111 7 is-parallel-to 3088 345 prepared-for 64 11 tow 7 0 converge 9 2 is-symmetric-with 2 0 provide-access-to 9989 1136 transport 28 2 cooperate-with 384 39 lie-under 14 4 provide-shuttle-service-to 5 0 ventilate 30 2 cross 1151 118 link-to 168 14 pull 2 1 表 4 指令分解数据集中各类别关系的样本分布统计表

类别名 训练集 测试集 类别名 训练集 测试集 类别名 训练集 测试集 类别名 训练集 测试集 above 195 48 dig 32 7 link-to 78 24 pull 1 0 adjacent-to 7960 2014 dock-alone-at 22 5 load 70 21 sail-by 95 11 adjoint-with 20 4 dock-at 172 34 manage 108 20 sail-on 4750 890 around 10 5 drive-at-the-different-lane 210 48 moor-at 10740 2751 separate 14 4 belong-to 3957 1108 drive-at-the-same-lane 42 18 move-away-from 35 3 serve 2623 681 block 11 0 drive-on 9148 2119 park-alone-at 41 8 slow-down 417 103 border 319 97 emit 12 4 park-next-to 71926 17232 supplement 475 124 close-to 40840 9980 enter 30 4 parked-at 35623 8641 supply 403 115 command 34 9 equipped-with 89 21 pass-under 28 8 support 94 38 connect 618 170 exit-from 6 0 pile-up-at 2070 514 support-the-construction-of 61 9 contain 441 25 hoist 266 66 placed-on 139 20 taxi-on 1175 320 converge 17 5 inside 12566 3607 power 212 47 tow 48 10 cooperate-with 998 230 is-parallel-to 9428 2578 prepared-for 139 31 transport 195 42 cross 1534 364 is-symmetric-with 4 4 provide-access-to 12362 3009 ventilate 83 25 cultivate 97 28 lie-under 42 9 provide-shuttle-service-to 44 34 表 5 定位描述数据集信息统计表

训练集 句子数量 单词数量 平均句子长度 DIOR-GC训练集 11381 151804 13.34 DIOR-GC测试集 11540 164248 14.23 DOTA-GC训练集 37617 932049 24.78 DOTA-GC测试集 11249 239331 21.28 总数 71787 1487432 18.41 表 6 多模态感知中图像描述数据集统计

数据类型 训练集 句子数量 单词数量 平均句子长度 SAR SAR-CAP训练集 524925 4950300 9.43 SAR-CAP测试集 58065 550216 9.48 总数 582990 5500516 9.46 红外 IR-CAP训练集 132410 1330173 10.05 IR-CAP测试集 14735 148973 10.11 总数 147145 1479146 10.08 可见光 DIOR-CAP训练集 11725 157232 13.41 DIOR-CAP测试集 57700 738907 12.81 DOTA-CAP训练集 83635 1486176 17.77 DOTA-CAP测试集 56245 1090289 19.38 总数 209305 3472604 15.84 表 7 多模态感知中图像分类数据集统计

数据类型 类别名称 训练集 测试集 训练/测试比例 SAR Aircraft 3037 16835 0.1804 Aircraft and tank 3 4 0.7500 Bridge 1697 16168 0.1050 Bridge and harbor 16 131 0.1221 Bridge and ship 13 230 0.0565 Bridge and tank 33 329 0.1003 Bridge, harbor and tank 3 25 0.1200 Car 103 941 0.1095 Harbor 138 1255 0.1100 Harbor and tank 9 82 0.1098 Ship 6470 67211 0.0963 Ship and tank 13 287 0.0453 Tank 77 1487 0.0518 总数 11612 104985 0.1505 红外 Ship 1593 14354 0.1110 Street 4036 36370 0.1110 总数 5629 50724 0.1110 表 8 关系推理任务在ReCon1M-REL数据集上的结果

表 9 指令分解任务在ReCon1M-DEC数据集上的结果

表 10 任务调度任务在CityNav数据集上的结果

表 11 定位描述任务在DIOR-GC和DOTA-GC数据集上的结果

表 12 多模态感知任务在SAR-CAP和IR-CAP数据集上的结果

模型类型 模型方法 SAR-CAP IR-CAP BLEU-1↑ BLEU-2↑ BLEU-3↑ BLEU-4↑ METEOR↑ ROUGE-L↑ BLEU-1↑ BLEU-2↑ BLEU-3↑ BLEU-4↑ METEOR↑ ROUGE-L↑ 通用视觉

语言模型

(零样本评估)MiniGPT-v2[17] 7.00 3.64 1.59 0.60 7.67 9.25 5.65 3.35 1.92 0.98 7.62 8.67 DeepSeek-VL2[18] 12.52 5.88 1.88 0.60 10.65 14.10 13.95 7.57 3.47 1.43 12.48 15.12 遥感视觉

语言模型

(微调后评估)RingMo-Agent[21] 55.93 44.49 33.57 23.94 25.06 51.12 56.84 40.45 29.17 21.50 26.15 43.13 表 13 多模态感知任务在DIOR-CAP和DOTA-CAP数据集上的结果

表 14 多模态感知任务在IR-DET数据集上的结果

-

[1] YAN Qiwei, DENG Chubo, LIU Chenglong, et al. ReCon1M: A large-scale benchmark dataset for relation comprehension in remote sensing imagery[J]. IEEE Transactions on Geoscience and Remote Sensing, 2025, 63: 4507022. doi: 10.1109/TGRS.2025.3589986. [2] LEE J, MIYANISHI T, KURITA S, et al. CityNav: A large-scale dataset for real-world aerial navigation[C]. Proceedings of the IEEE/CVF International Conference on Computer Vision, Honolulu, The United States of America, 2025: 5912–5922. (查阅网上资料, 未找到本条文献信息, 请确认). [3] LIU Chenyang, ZHAO Rui, CHEN Hao, et al. Remote sensing image change captioning with dual-branch transformers: A new method and a large scale dataset[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5633520. doi: 10.1109/TGRS.2022.3218921. [4] XIA Guisong, BAI Xiang, DING Jian, et al. DOTA: A large-scale dataset for object detection in aerial images[C]. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 3974–3983. doi: 10.1109/cvpr.2018.00418. [5] LI Ke, WAN Gang, CHENG Gong, et al. Object detection in optical remote sensing images: A survey and a new benchmark[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2020, 159: 296–307. doi: 10.1016/j.isprsjprs.2019.11.023. [6] LI Yuxuan, LI Xiang, LI Weijie, et al. SARDet-100K: Towards open-source benchmark and toolkit for large-scale SAR object detection[C]. Proceedings of the 38th International Conference on Neural Information Processing Systems, Vancouver, Canada, 2024: 4079. [7] SUO Jiashun, WANG Tianyi, ZHANG Xingzhou, et al. HIT-UAV: A high-altitude infrared thermal dataset for Unmanned Aerial Vehicle-based object detection[J]. Scientific Data, 2023, 10(1): 227. doi: 10.1038/s41597-023-02066-6. [8] 李春柳, 王水根. 红外海上船舶数据集[EB/OL]. https://openai.raytrontek.com/apply/Sea_shipping.html, 2021. (查阅网上资料,未找到对应的英文翻译信息,请确认补充). [9] 刘晴, 徐召飞, 金荣璐, 等. 红外安防数据库[EB/OL]. https://openai.raytrontek.com/apply/Infrared_security.html, 2021. (查阅网上资料,未找到对应的英文翻译信息,请确认补充). [10] 刘晴, 徐召飞, 王水根. 红外航拍人车检测数据集[EB/OL]. http://openai.raytrontek.com/apply/Aerial_mancar.html, 2021. (查阅网上资料,未找到对应的英文翻译信息,请确认补充). [11] 李钢强, 王建生, 王水根. 双光车载场景数据库[EB/OL]. http://openai.raytrontek.com/apply/Double_light_vehicle.html, 2021. (查阅网上资料,未找到对应的英文翻译信息,请确认补充). [12] 李永富, 赵显, 刘兆军, 等. 远海(10–12km)船舶的目标检测数据集[EB/OL]. http://www.core.sdu.edu.cn/info/1133/2174.htm, 2020.(查阅网上资料,未找到对应的英文翻译信息,请确认补充). [13] XIA Guisong, HU Jingwen, HU Fan, et al. AID: A benchmark data set for performance evaluation of aerial scene classification[J]. IEEE Transactions on Geoscience and Remote Sensing, 2017, 55(7): 3965–3981. doi: 10.1109/TGRS.2017.2685945. [14] CHENG Gong, HAN Junwei, and LU Xiaoqiang. Remote sensing image scene classification: Benchmark and state of the art[J]. Proceedings of the IEEE, 2017, 105(10): 1865–1883. doi: 10.1109/JPROC.2017.2675998. [15] GRATTAFIORI A, DUBEY A, JAUHRI A, et al. The llama 3 herd of models[J]. arXiv: 2407.21783, 2024. doi: 10.48550/arXiv.2407.21783.(查阅网上资料,不确定文献类型及格式是否正确,请确认). [16] LOBRY S, MARCOS D, MURRAY J, et al. RSVQA: Visual question answering for remote sensing data[J]. IEEE Transactions on Geoscience and Remote Sensing, 2020, 58(12): 8555–8566. doi: 10.1109/TGRS.2020.2988782. [17] CHEN Jun, ZHU Deyao, SHEN Xiaoqian, et al. MiniGPT-v2: Large language model as a unified interface for vision-language multi-task learning[J]. arXiv: 2310.09478, 2023. doi: 10.48550/arXiv.2310.09478.(查阅网上资料,不确定文献类型及格式是否正确,请确认). [18] WU Zhiyu, CHEN Xiaokang, PAN Zizheng, et al. Deepseek-vl2: Mixture-of-experts vision-language models for advanced multimodal understanding[J]. arXiv: 2412.10302, 2024. doi: 10.48550/arXiv.2412.10302.(查阅网上资料,不确定文献类型及格式是否正确,请确认). [19] PENG Zhiliang, WANG Wenhui, DONG Li, et al. Kosmos-2: Grounding multimodal large language models to the world[J]. arXiv: 2306.14824, 2023. doi: 10.48550/arXiv.2306.14824.(查阅网上资料,不确定文献类型及格式是否正确,请确认). [20] CHEN Keqin, ZHANG Zhao, ZENG Weili, et al. Shikra: Unleashing multimodal LLM's referential dialogue magic[J]. arXiv: 2306.15195, 2023. doi: 10.48550/arXiv.2306.15195.(查阅网上资料,不确定文献类型及格式是否正确,请确认). [21] HU Huiyang, WANG Peijin, FENG Yingchao, et al. RingMo-Agent: A unified remote sensing foundation model for multi-platform and multi-modal reasoning[J]. arXiv: 2507.20776, 2025. doi: 10.48550/arXiv.2507.20776.(查阅网上资料,不确定文献类型及格式是否正确,请确认). [22] ANDERSON P, WU Q, TENEY D, et al. Vision-and-language navigation: Interpreting visually-grounded navigation instructions in real environments[C]. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 3674–3683. doi: 10.1109/CVPR.2018.00387. [23] LIU Shuobo, ZHANG Hongsheng, QI Yuankai, et al. AeriaLVLN: Vision-and-language navigation for UAVs[C]. Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2023: 15338–15348. doi: 10.1109/ICCV51070.2023.01411. [24] WANG Peijin, HU Huiyang, TONG Boyuan, et al. RingMoGPT: A unified remote sensing foundation model for vision, language, and grounded tasks[J]. IEEE Transactions on Geoscience and Remote Sensing, 2025, 63: 5611320. doi: 10.1109/TGRS.2024.3510833. [25] CHENG Qimin, HUANG Haiyan, XU Yuan, et al. NWPU-captions dataset and MLCA-net for remote sensing image captioning[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5629419. doi: 10.1109/TGRS.2022.3201474. [26] LU Xiaoqiang, WANG Binqiang, ZHENG Xiangtao, et al. Exploring models and data for remote sensing image caption generation[J]. IEEE Transactions on Geoscience and Remote Sensing, 2018, 56(4): 2183–2195. doi: 10.1109/TGRS.2017.2776321. [27] QU Bo, LI Xuelong, TAO Dacheng, et al. Deep semantic understanding of high resolution remote sensing image[C]. 2016 International Conference on Computer, Information and Telecommunication Systems (CITS), Kunming, China, 2016: 1–5. doi: 10.1109/CITS.2016.7546397. [28] LI Kun, VOSSELMAN G, and YANG M Y. HRVQA: A visual question answering benchmark for high-resolution aerial images[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2024, 214: 65–81. doi: 10.1016/j.isprsjprs.2024.06.002. [29] SUN Xian, WANG Peijin, LU Wanxuan, et al. RingMo: A remote sensing foundation model with masked image modeling[J]. IEEE Transactions on Geoscience and Remote Sensing, 2023, 61: 5612822. doi: 10.1109/TGRS.2022.3194732. [30] HU Huiyang, WANG Peijin, BI Hanbo, et al. RS-vHeat: Heat conduction guided efficient remote sensing foundation model[C]. Proceedings of the IEEE/CVF International Conference on Computer Vision, Honolulu, The United States of America, 2025: 9876–9887. (查阅网上资料, 未找到本条文献信息, 请确认). [31] CHANG Hao, WANG Peijin, DIAO Wenhui, et al. Remote sensing change detection with bitemporal and differential feature interactive perception[J]. IEEE Transactions on Image Processing, 2024, 33: 4543–4555. doi: 10.1109/TIP.2024.3424335. [32] LIU Haotian, LI Chunyuan, LI Yuheng, et al. Improved baselines with visual instruction tuning[C]. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2024: 26286–26296. doi: 10.1109/CVPR52733.2024.02484. [33] HU Yuan, YUAN Jianlong, WEN Congcong, et al. RSGPT: A remote sensing vision language model and benchmark[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2025, 224: 272–286. doi: 10.1016/j.isprsjprs.2025.03.028. [34] LUO Junwei, PANG Zhen, ZHANG Yongjun, et al. SkySenseGPT: A fine-grained instruction tuning dataset and model for remote sensing vision-language understanding[J]. arXiv: 2406.10100, 2024. doi: 10.48550/arXiv.2406.10100. [35] LI Xiang, DING Jian, and MOHAMED E. VRSBench: A versatile vision-language benchmark dataset for remote sensing image understanding[C]. Proceedings of the 38th International Conference on Neural Information Processing Systems, Vancouver, Canada, 2024: 106. [36] SHI Qian, HE Da, LIU Zhengyu, et al. Globe230k: A benchmark dense-pixel annotation dataset for global land cover mapping[J]. Journal of Remote Sensing, 2023, 3: 0078. doi: 10.34133/remotesensing.0078. [37] HU Fengming, XU Feng, WANG R, et al. Conceptual study and performance analysis of tandem multi-antenna spaceborne SAR interferometry[J]. Journal of Remote Sensing, 2024, 4: 0137. doi: 10.34133/remotesensing.0137. [38] MEI Shaohui, LIAN Jiawei, WANG Xiaofei, et al. A comprehensive study on the robustness of deep learning-based image classification and object detection in remote sensing: Surveying and benchmarking[J]. Journal of Remote Sensing, 2024, 4: 0219. doi: 10.34133/remotesensing.0219. -

- 首页

- 期刊相关

- 在线期刊

-

数据集

- 《电子与信息学报》数据社区

- DroneRFa:用于侦测低空无人机的大规模无人机射频信号数据集

- 基于全球AIS的多源航迹关联数据集(MTAD)

- 双清一号(珞珈三号01星)多模式成像样例数据集

- 3DSARBuSim 1.0:人造建筑高分辨星载SAR三维成像仿真数据集

- 基于全球开源DEM的高程误差预测数据集

- 超大规模MIMO阵列可视区域空间分布数据集

- DroneRFb-DIR: 用于非合作无人机个体识别的射频信号数据集

- 基于5G空口的通感一体化实测数据集

- DTDS:用于侧信道能量分析的Dilithium数据集

- 海上船只目标多源数据集可见光图像部分

- 面向山地森林区域的植被高度预测数据集

- 面向遥感智能体的多模态图文指令大规模数据集

- BIRD1445:面向生态监测的大规模多模态鸟类数据集

- 投稿指南

- 期刊订阅

- 联系我们

- English

下载:

下载:

下载:

下载: