T3FRNet: A Cloth-Changing Person Re-identification Method via Texture-aware Transformer Tuning Fine-grained Reconstruction


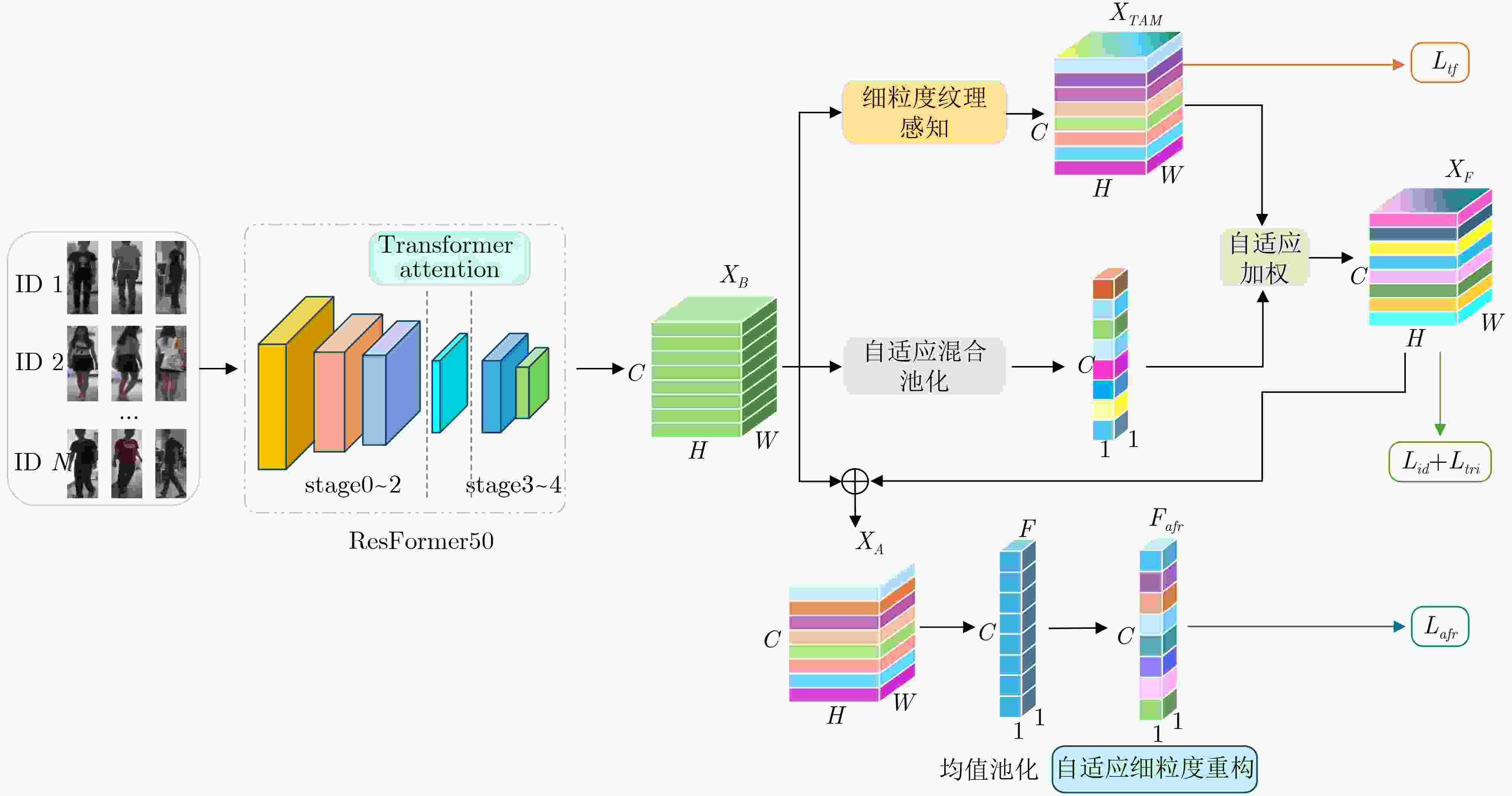

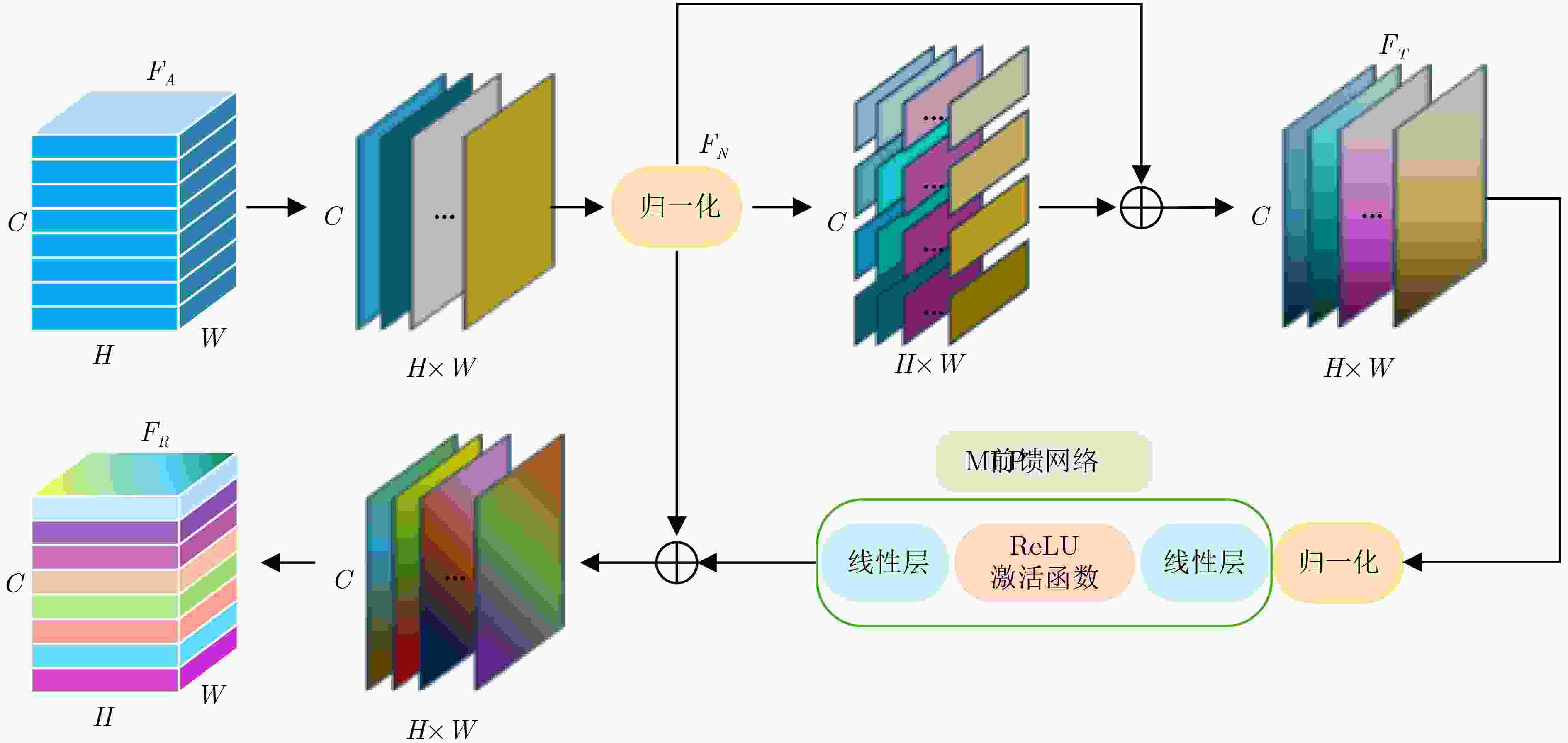

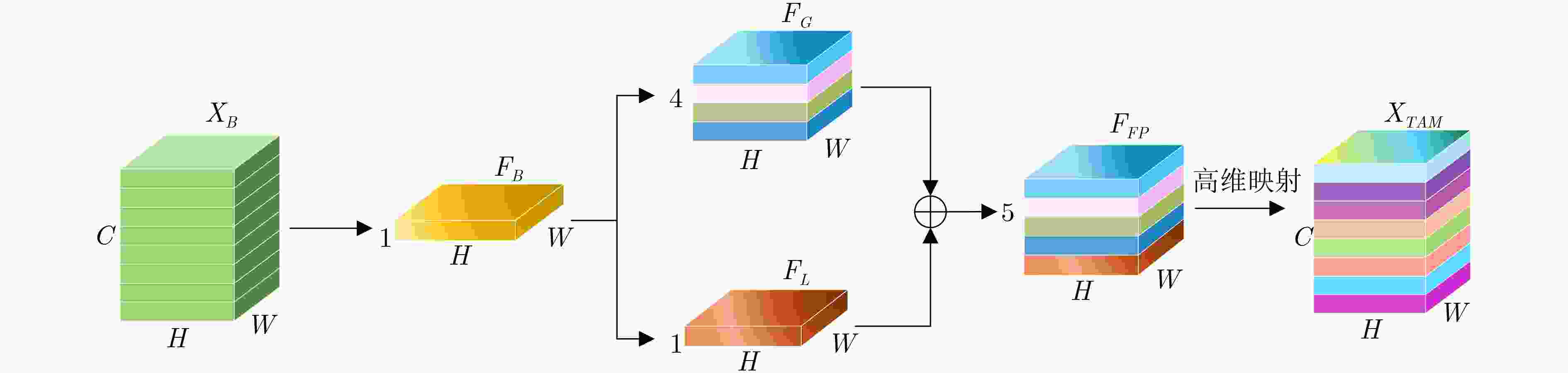

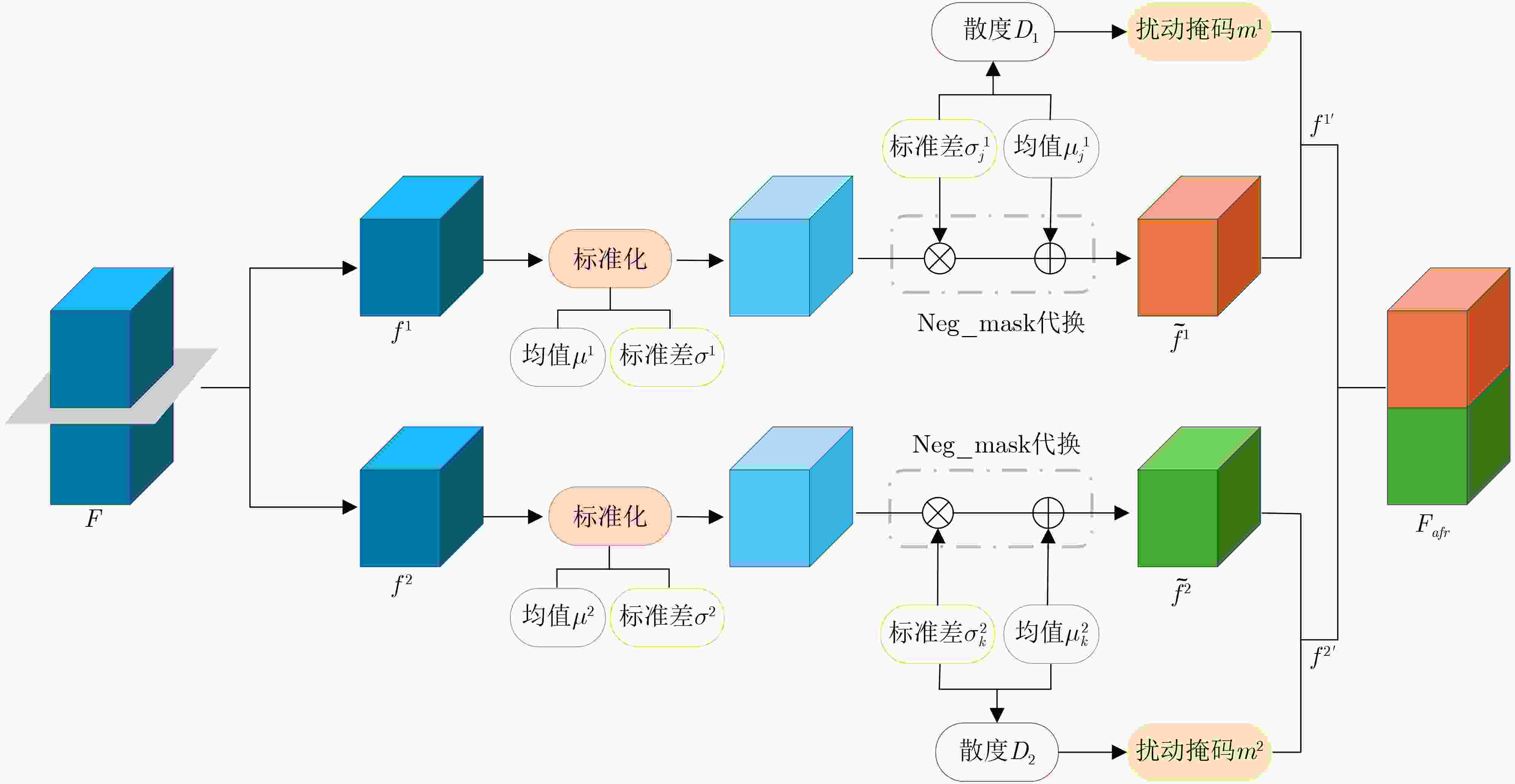

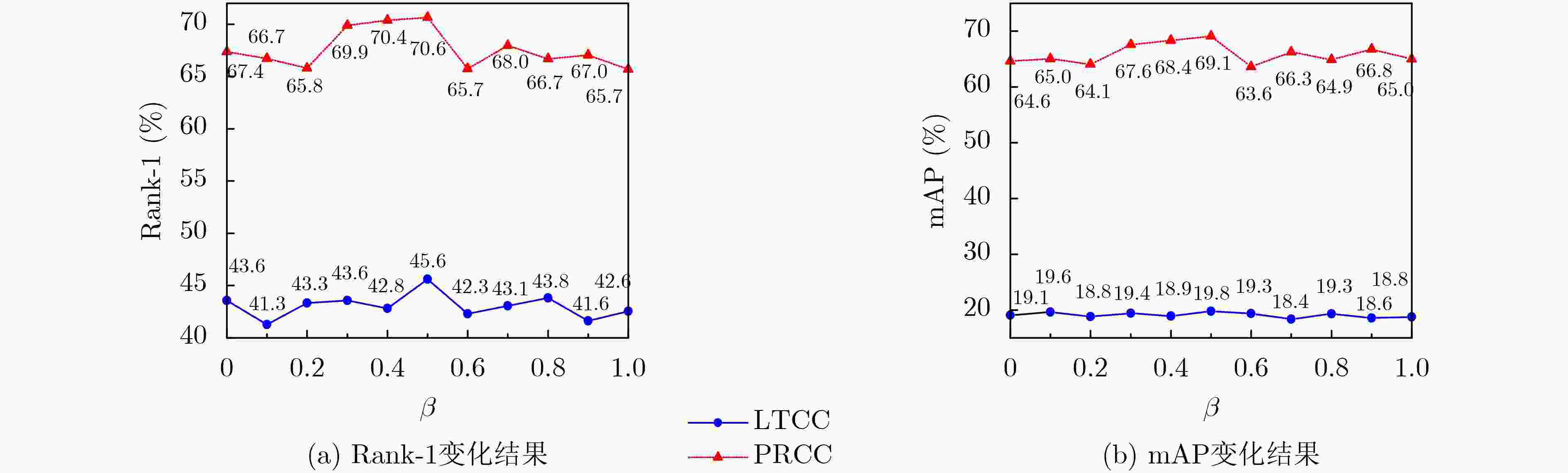

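Abstract: To address the difficulty of extracting effective features and the shortage of training samples in Cloth-Changing Person Re-identification (CC Re-ID), this paper proposes a CC Re-ID method that integrates triple-perception fine-grained reconstruction. Texture features processed by a fine-grained texture-aware module are concatenated with deep features to improve recognition under clothing changes. A ResFormer50 network that incorporates a Transformer attention mechanism strengthens the model's perception during image feature extraction. An Adaptive Hybrid Pooling (AHP) module performs channel-level self-adaptive aggregation and mines features deeply at a fine-grained level, so that global representation consistency and generalization to clothing changes are both achieved. A new Adaptive Fine-grained Reconstruction (AFR) strategy applies adversarial perturbation and selective reconstruction at the fine-grained level; without relying on explicit supervision, it markedly improves the model's robustness and generalization to clothing changes and local detail perturbations, and thereby raises recognition accuracy in real-world scenarios. Extensive experiments demonstrate the effectiveness of the proposed method: in the cloth-changing setting it achieves Rank-1/mAP of 45.6%/19.8% on LTCC and 70.6%/69.1% on PRCC, outperforming comparable state-of-the-art methods.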
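Rank-1 and mAP are computed from a query-to-gallery distance matrix. The Python sketch below shows one common way to do this under a cloth-changing protocol; it is an illustration, not the authors' evaluation code. It assumes that gallery images of the query identity taken by the same camera are ignored, and that in the cloth-changing setting gallery images of the query identity wearing the same clothes are ignored as well. The function name `evaluate_cc` and its arguments are hypothetical.

```python
from typing import List, Sequence, Tuple


def evaluate_cc(
    dist: Sequence[Sequence[float]],
    q_pids: Sequence[int], q_cams: Sequence[int], q_clothes: Sequence[int],
    g_pids: Sequence[int], g_cams: Sequence[int], g_clothes: Sequence[int],
    cloth_changing: bool = True,
) -> Tuple[float, float]:
    """Return (Rank-1, mAP) as fractions in [0, 1] for a query x gallery distance matrix."""
    rank1_hits = 0
    ap_sum = 0.0
    valid_queries = 0
    for qi, row in enumerate(dist):
        # Rank gallery items from nearest to farthest.
        order = sorted(range(len(row)), key=lambda gi: row[gi])
        matches: List[bool] = []
        for gi in order:
            same_id = g_pids[gi] == q_pids[qi]
            # Assumed rule: same identity under the same camera is ignored.
            if same_id and g_cams[gi] == q_cams[qi]:
                continue
            # Assumed cloth-changing rule: same identity in the same clothes is ignored.
            if cloth_changing and same_id and g_clothes[gi] == q_clothes[qi]:
                continue
            matches.append(same_id)
        if not any(matches):
            continue  # no valid positive left for this query
        valid_queries += 1
        rank1_hits += int(matches[0])
        # Average precision: mean of precision@k taken at every correct hit.
        hits, precision_sum = 0, 0.0
        for k, is_match in enumerate(matches, start=1):
            if is_match:
                hits += 1
                precision_sum += hits / k
        ap_sum += precision_sum / hits
    if valid_queries == 0:
        return 0.0, 0.0
    return rank1_hits / valid_queries, ap_sum / valid_queries


if __name__ == "__main__":
    # Toy data: 2 queries, 4 gallery images.
    dist = [[0.1, 0.5, 0.3, 0.2], [0.4, 0.9, 0.2, 0.1]]
    q = ([1, 2], [0, 0], [0, 0])                    # pids, cams, clothes
    g = ([1, 1, 2, 3], [1, 1, 1, 1], [0, 1, 1, 0])  # pids, cams, clothes
    for cc in (False, True):
        r1, m = evaluate_cc(dist, *q, *g, cloth_changing=cc)
        print(f"cloth_changing={cc}: Rank-1={r1:.3f}, mAP={m:.3f}")
```

On this toy data, excluding the same-clothes match lowers Rank-1 from 0.500 to 0.000 and mAP from 0.625 to 0.417, which illustrates why cloth-changing scores are much lower than standard-setting scores on the same dataset.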


Keywords:

- Person re-identification

- Cloth-changing

- Texture-aware

- Transformer

- Fine-grained reconstruction

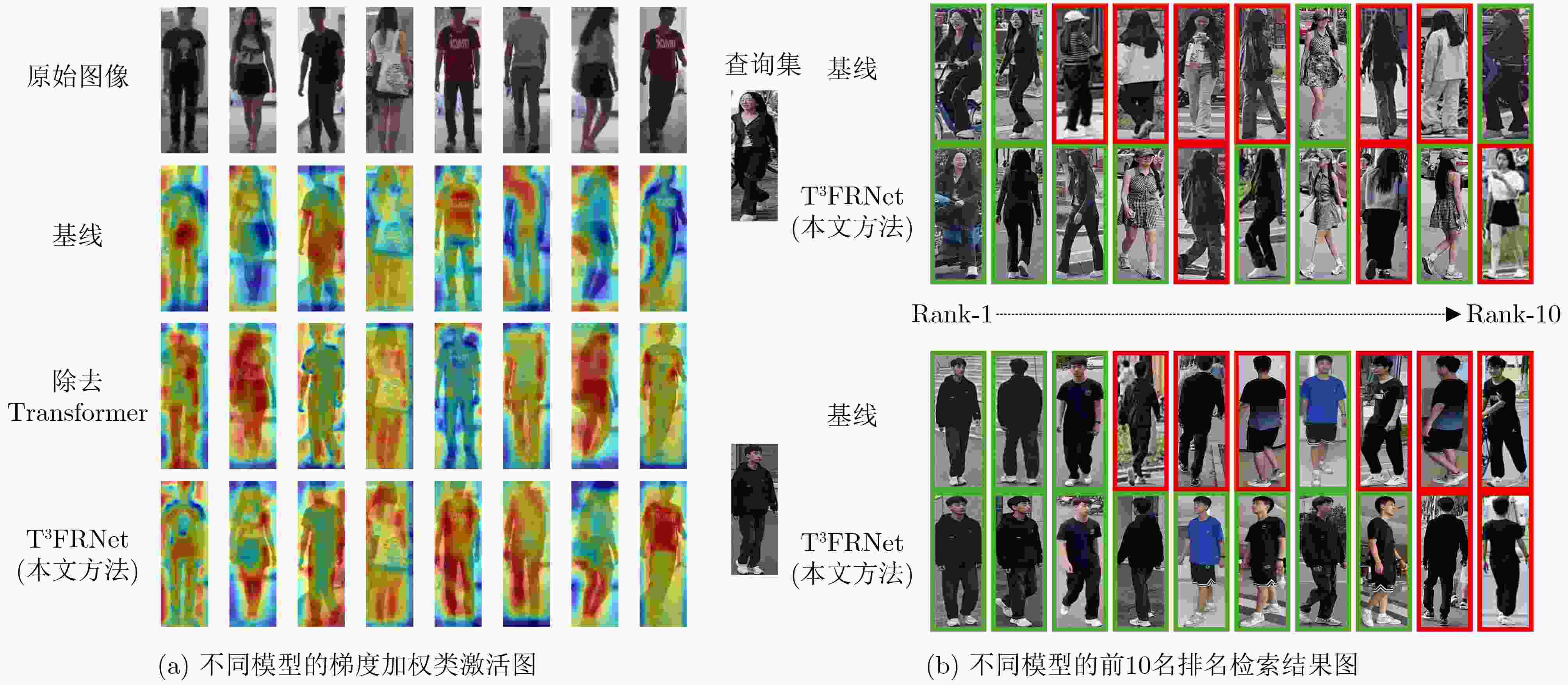

Abstract:

Objective: Compared with conventional person re-identification, Cloth-Changing Person Re-Identification (CC Re-ID) cannot rely on appearance features remaining stable over time; it instead demands models with stronger robustness and generalization to meet real-world application requirements. Existing deep feature representation methods exploit salient regions or attribute information to obtain discriminative features and mitigate the effect of clothing variations, but their performance often degrades when the environment changes. To address the difficulty of effective feature extraction and the shortage of training samples in CC Re-ID, a Texture-Aware Transformer Tuning Fine-Grained Reconstruction Network (T3FRNet) is proposed. The method exploits fine-grained information in person images, makes feature learning more robust, and reduces the adverse effect of clothing changes on recognition, thereby alleviating performance bottlenecks under scene variations.

Methods: To compensate for the limited local receptive field of convolutions, a Transformer-based attention mechanism is integrated into a ResNet50 backbone, forming a hybrid architecture referred to as ResFormer50. This design models spatial relationships on top of local features and improves the perceptual capacity of feature extraction while keeping a balance between efficiency and performance. A fine-grained Texture-Aware (TA) module concatenates processed texture features with deep semantic features, improving recognition under clothing variations. An Adaptive Hybrid Pooling (AHP) module performs channel-wise self-adaptive aggregation, mining feature representations more deeply and balancing global representation consistency against robustness to clothing changes. An Adaptive Fine-Grained Reconstruction (AFR) strategy introduces adversarial perturbations and selective reconstruction at the fine-grained level.
Without explicit supervision, this strategy enhances robustness and generalization against clothing changes and local detail perturbations. In addition, a Joint Perception Loss (JP-Loss) is constructed by combining a fine-grained identity robustness loss, a texture feature loss, an identity classification loss, and a triplet loss; this composite loss jointly supervises the learning of robust fine-grained identity features under cloth-changing conditions.

Results and Discussions: Extensive evaluations are conducted on LTCC, PRCC, Celeb-reID, the large-scale DeepChange dataset, and the clothing-consistent Market-1501 dataset (Table 1). In the cloth-changing setting, the proposed method achieves Rank-1/mAP of 45.6%/19.8% on LTCC and 70.6%/69.1% on PRCC (Table 2), 64.6%/18.4% on Celeb-reID (Table 3), and 58.0%/20.8% on DeepChange (Table 4), outperforming existing state-of-the-art approaches. The TA module captures latent local texture details and, combined with the AFR strategy, enables fine-grained adversarial perturbation and selective reconstruction. This improves fine-grained feature representation and allows the method to reach 96.2% Rank-1 and 89.3% mAP on the clothing-consistent Market-1501 dataset (Table 5). The JP-Loss further supports the TA module and the AFR strategy by enabling fine-grained adaptive regulation and clustering of texture-sensitive identity features (Table 6). Inserting the Transformer-based attention mechanism after stage 2 of ResNet50 yields better local structural perception and global context modeling with only a slight increase in computational overhead (Table 7). Setting the parameter $\beta$ to 0.5 (Fig. 5) effectively balances global texture consistency and local fine-grained discriminability. Visualization results on PRCC (Fig. 6) and top-10 retrieval comparisons (Fig. 7) provide intuitive evidence of improved stability and accuracy in cloth-changing scenarios.
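The composition of JP-Loss can be illustrated with a small Python sketch. Only the identity classification term (softmax cross-entropy) and the triplet term (here the common batch-hard variant) are written out; the fine-grained identity robustness loss and the texture feature loss are not defined in this section, so they enter as precomputed scalars. The equal weights, the margin of 0.3, and all function names are assumptions for illustration, not the paper's settings.

```python
import math
from typing import Sequence, Tuple


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Softmax cross-entropy for one sample, computed stably via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def batch_hard_triplet(features, labels, margin: float = 0.3) -> float:
    """Batch-hard triplet loss: hardest positive vs. hardest negative per anchor."""
    losses = []
    for i, anchor in enumerate(features):
        pos = [euclidean(anchor, f) for j, f in enumerate(features)
               if j != i and labels[j] == labels[i]]
        neg = [euclidean(anchor, f) for j, f in enumerate(features)
               if labels[j] != labels[i]]
        if pos and neg:
            losses.append(max(0.0, max(pos) - min(neg) + margin))
    return sum(losses) / len(losses) if losses else 0.0


def jp_loss(logits_batch, features, labels, l_fir: float, l_tex: float,
            weights: Tuple[float, float, float, float] = (1.0, 1.0, 1.0, 1.0),
            margin: float = 0.3) -> float:
    """Hypothetical JP-Loss: weighted sum of four terms.

    l_fir (fine-grained identity robustness) and l_tex (texture feature) are
    passed in precomputed because their definitions are not given here.
    """
    l_id = sum(cross_entropy(z, y) for z, y in zip(logits_batch, labels)) / len(labels)
    l_tri = batch_hard_triplet(features, labels, margin)
    w_fir, w_tex, w_id, w_tri = weights
    return w_fir * l_fir + w_tex * l_tex + w_id * l_id + w_tri * l_tri


if __name__ == "__main__":
    feats = [[0.0, 0.0], [2.0, 0.0], [1.0, 0.0], [5.0, 0.0]]
    labels = [0, 0, 1, 1]
    logits = [[0.0, 0.0]] * 4
    print(jp_loss(logits, feats, labels, l_fir=0.5, l_tex=0.25))
```

Keeping each term as a separate function makes it easy to switch terms off, which is how an ablation such as Table 6 isolates the contribution of JP-Loss.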

Conclusions: A CC Re-ID method based on T3FRNet is proposed, consisting of the ResFormer50 backbone, the TA module, the AHP module, the AFR strategy, and JP-Loss. Experimental results on four cloth-changing benchmarks and one clothing-consistent dataset confirm the effectiveness of the approach. In the long-term cloth-changing setting, the full model improves Rank-1/mAP over the baseline by 16.8/8.3 percentage points on LTCC and by 30.4/32.9 percentage points on PRCC (Table 6). The ResFormer50 backbone supports spatial relationship modeling over local fine-grained features, while the TA module and the AFR strategy enhance feature expressiveness. The AHP module balances sensitivity to local textures with stability of global features, and JP-Loss strengthens adaptive regulation of fine-grained representations. Future work will focus on simplifying the architecture to reduce computational complexity and latency while maintaining high recognition accuracy.
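To make the roles of the AHP and TA modules more concrete, the Python sketch below shows one plausible form of each; neither is taken from the paper. It assumes that AHP mixes global average pooling and global max pooling per channel with a learnable logit passed through a sigmoid, and it uses an 8-neighbour local binary pattern (LBP) histogram as a stand-in texture descriptor for the TA branch. The pooled deep vector and the texture vector are then concatenated, as the TA description states. All names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def adaptive_hybrid_pool(fmap: List[Matrix], theta: List[float]) -> List[float]:
    """Assumed AHP form: per channel, sigmoid(theta_c) * avg + (1 - sigmoid(theta_c)) * max."""
    pooled = []
    for channel, t in zip(fmap, theta):
        values = [v for row in channel for v in row]
        avg = sum(values) / len(values)   # global, consistency-oriented response
        peak = max(values)                # strongest local response
        a = sigmoid(t)                    # channel-specific mixing weight in (0, 1)
        pooled.append(a * avg + (1.0 - a) * peak)
    return pooled


# Neighbour offsets (dy, dx), clockwise from the top-left pixel.
LBP_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_histogram(gray: Matrix) -> List[float]:
    """Stand-in texture descriptor: normalised 256-bin histogram of 8-neighbour LBP codes."""
    hist = [0.0] * 256
    for y in range(1, len(gray) - 1):
        for x in range(1, len(gray[0]) - 1):
            code = 0
            for bit, (dy, dx) in enumerate(LBP_OFFSETS):
                if gray[y + dy][x + dx] >= gray[y][x]:
                    code |= 1 << bit
            hist[code] += 1.0
    total = sum(hist)
    return [h / total for h in hist] if total else hist


def fuse_features(fmap: List[Matrix], theta: List[float], gray: Matrix) -> List[float]:
    """Concatenate the pooled deep vector (C values) with the texture vector (256 values)."""
    return adaptive_hybrid_pool(fmap, theta) + lbp_histogram(gray)
```

In this form a positive logit leans a channel toward average pooling (global consistency), a negative one toward max pooling (local saliency), and a logit of 0 gives an equal mix; a real implementation would learn the logits jointly with the backbone.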

Key words:

- Person re-identification

- Cloth-changing

- Texture-aware

- Transformer

- Fine-grained reconstruction


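Table 1 Basic statistics of five commonly used datasets

| Dataset | Cameras | Setting | Training set (IDs/images) | Test query (IDs/images) | Test gallery (IDs/images) |
|---|---|---|---|---|---|
| LTCC | 12 | SC/CC | 77/9576 | 75/493 | 75/7050 |
| PRCC | 3 | SC/CC | 150/17896 | 71/3543 | 71/3384 |
| Celeb-reID | - | SC/CC | 632/20208 | 420/2972 | 420/11006 |
| DeepChange | 17 | CC | 450/75083 | 521/17527 | 521/62956 |
| Market-1501 | 6 | SC | 751/12936 | 750/3368 | 750/19732 |

Note: SC and CC denote the clothing-consistent and cloth-changing settings, respectively.

Table 2 Comparison of the proposed method with recent methods on the LTCC and PRCC datasets (%)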

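| Method | Modality | Clothing labels | LTCC standard Rank-1 | LTCC standard mAP | LTCC cloth-changing Rank-1 | LTCC cloth-changing mAP | PRCC standard Rank-1 | PRCC standard mAP | PRCC cloth-changing Rank-1 | PRCC cloth-changing mAP |
|---|---|---|---|---|---|---|---|---|---|---|
| CAL[7] | RGB | Yes | 74.2 | 40.8 | 40.1 | 18.0 | 100.0 | 99.8 | 55.2 | 55.8 |
| AIM[8] | RGB | Yes | 76.3 | 41.1 | 40.6 | 19.1 | 100.0 | 99.9 | 57.9 | 58.3 |
| FDGAN[23] | RGB | Yes | 73.4 | 36.9 | 32.9 | 15.4 | 100.0 | 99.7 | 58.3 | 58.6 |
| GI-ReID[4] | RGB+ga | No | 63.2 | 29.4 | 23.7 | 10.4 | - | - | 33.3 | - |
| BMDB[6] | RGB+bs | No | 74.3 | 39.5 | 41.8 | 17.9 | 99.7 | 97.9 | 56.6 | 53.9 |
| FIRe2[12] | RGB | No | 75.9 | 39.9 | 44.6 | 19.1 | 100.0 | 99.5 | 65.0 | 63.1 |
| TAPFN[13] | RGB | No | 71.9 | 34.7 | 40.1 | 17.4 | 99.8 | 98.1 | 69.1 | 68.7 |
| CSSC[11] | RGB | No | 78.1 | 40.2 | 43.6 | 18.6 | 100.0 | 99.1 | 65.5 | 63.0 |
| MBUNet[9] | RGB | No | 67.6 | 34.8 | 40.3 | 15.0 | 99.8 | 99.6 | 68.7 | 65.2 |
| ACID[10] | RGB | No | 65.1 | 30.6 | 29.1 | 14.5 | 99.1 | 99.0 | 55.4 | 66.1 |
| AFL[24] | RGB | No | 74.4 | 39.1 | 42.1 | 18.4 | 100.0 | 99.7 | 57.4 | 56.5 |
| T3FRNet (ours) | RGB | No | 79.8 | 41.3 | 45.6 | 19.8 | 100.0 | 99.9 | 70.6 | 69.1 |

Table 3 Comparison of the proposed method with recent methods on the Celeb-reID dataset (%)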

Table 4 Comparison of the proposed method with recent methods on the DeepChange dataset (%)

Table 5 Comparison of the proposed method with recent methods on the Market-1501 dataset (%)

Table 6 Ablation study on the LTCC and PRCC datasets (%)

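| AFR | TA | JP-Loss | ResFormer50 | AHP | LTCC Rank-1 | LTCC mAP | PRCC Rank-1 | PRCC mAP |
|---|---|---|---|---|---|---|---|---|
| - | - | - | - | - | 28.8 | 11.5 | 40.2 | 36.2 |
| √ | - | - | - | - | 40.1 | 17.9 | 58.3 | 56.0 |
| √ | √ | - | - | - | 40.6 | 18.8 | 65.2 | 62.2 |
| √ | √ | √ | - | - | 41.5 | 19.3 | 67.6 | 62.5 |
| √ | √ | √ | √ | - | 41.8 | 19.2 | 69.5 | 67.5 |
| √ | √ | √ | √ | √ | 45.6 | 19.8 | 70.6 | 69.1 |

Table 7 Results for different insertion positions of the Transformer attention mechanism (%)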

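| Insertion position | LTCC Rank-1 | LTCC mAP | PRCC Rank-1 | PRCC mAP |
|---|---|---|---|---|
| stage1 | 45.3 | 19.4 | 69.8 | 69.0 |
| stage2 | 45.6 | 19.8 | 70.6 | 69.1 |
| stage3 | 43.1 | 19.2 | 68.9 | 67.3 |
| stage4 | 41.6 | 19.2 | 62.0 | 61.1 |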
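Table 7 selects stage 2 on accuracy. The cost of the attention layer also differs sharply between insertion points, because the number of spatial tokens shrinks at every stage. The Python sketch below counts tokens and the multiply-adds of the $QK^\top$ product for one single-head attention layer. It assumes a 256×128 input and the standard ResNet50 strides (4, 8, 16, 32) and output widths (256, 512, 1024, 2048); the input size and stride settings actually used for T3FRNet are not stated here, and the names are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical configuration: standard ResNet50 strides (no last-stride change)
# and channel widths at the output of each stage.
STAGES: Dict[str, Tuple[int, int]] = {
    "stage1": (4, 256),
    "stage2": (8, 512),
    "stage3": (16, 1024),
    "stage4": (32, 2048),
}


def attention_cost(height: int, width: int, stride: int, channels: int) -> Tuple[int, int]:
    """Return (tokens, multiply-adds of Q @ K^T) for one single-head self-attention layer.

    Q and K are tokens x channels, so Q @ K^T needs tokens * tokens * channels MACs.
    """
    tokens = (height // stride) * (width // stride)
    return tokens, tokens * tokens * channels


if __name__ == "__main__":
    for name, (stride, channels) in STAGES.items():
        n, macs = attention_cost(256, 128, stride, channels)
        print(f"{name}: {n} tokens, {macs:,} MACs for Q @ K^T")
```

Under these assumptions, attention after stage 1 operates on 2048 tokens and needs eight times the $QK^\top$ multiply-adds of attention after stage 2 (512 tokens); stages 3 and 4 are cheaper still but, according to Table 7, less accurate.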

[1] CHENG Deqiang, JI Guangkai, ZHANG Haoxiang, et al. Cross-modality person re-identification based on multi-granularity fusion and cross-scale perception[J]. Journal on Communications, 2025, 46(1): 108–123. doi: 10.11959/j.issn.1000-436x.2025019.

[2] ZHUANG Jianjun and ZHUANG Yuchen. A cross-modal person re-identification method based on hybrid channel augmentation with structured dual attention[J]. Journal of Electronics & Information Technology, 2024, 46(2): 518–526. doi: 10.11999/JEIT230614.

[3] ZHANG Peng, ZHANG Xiaolin, BAO Yongtang, et al. Cloth-changing person re-identification: A summary[J]. Journal of Image and Graphics, 2023, 28(5): 1242–1264. doi: 10.11834/jig.220702.

[4] JIN Xin, HE Tianyu, ZHENG Kecheng, et al. Cloth-changing person re-identification from a single image with gait prediction and regularization[C]. Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 14258–14267. doi: 10.1109/CVPR52688.2022.01388.

[5] GE Yiyuan, YU Mingxin, CHEN Zhihao, et al. Attention-enhanced controllable disentanglement for cloth-changing person re-identification[J]. The Visual Computer, 2025, 41(8): 5609–5624. doi: 10.1007/s00371-024-03741-4.

[6] LIU Xuan, HAN Hua, XU Kaiyu, et al. Cloth-changing person re-identification based on the backtracking mechanism[J]. IEEE Access, 2025, 13: 27527–27536. doi: 10.1109/ACCESS.2025.3538976.

[7] GU Xinqian, CHANG Hong, MA Bingpeng, et al. Clothes-changing person re-identification with RGB modality only[C]. Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 1060–1069. doi: 10.1109/CVPR52688.2022.00113.

[8] YANG Zhengwei, LIN Meng, ZHONG Xian, et al. Good is bad: Causality inspired cloth-debiasing for cloth-changing person re-identification[C]. Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, Canada, 2023: 1472–1481. doi: 10.1109/CVPR52729.2023.00148.

[9] ZHANG Guoqing, LIU Jie, CHEN Yuhao, et al. Multi-biometric unified network for cloth-changing person re-identification[J]. IEEE Transactions on Image Processing, 2023, 32: 4555–4566. doi: 10.1109/TIP.2023.3279673.

[10] YANG Zhengwei, ZHONG Xian, ZHONG Zhun, et al. Win-win by competition: Auxiliary-free cloth-changing person re-identification[J]. IEEE Transactions on Image Processing, 2023, 32: 2985–2999. doi: 10.1109/TIP.2023.3277389.

[11] WANG Qizao, QIAN Xuelin, LI Bin, et al. Content and salient semantics collaboration for cloth-changing person re-identification[C]. Proceedings of the 2025 IEEE International Conference on Acoustics, Speech and Signal Processing, Hyderabad, India, 2025: 1–5. doi: 10.1109/ICASSP49660.2025.10890451.

[12] WANG Qizao, QIAN Xuelin, LI Bin, et al. Exploring fine-grained representation and recomposition for cloth-changing person re-identification[J]. IEEE Transactions on Information Forensics and Security, 2024, 19: 6280–6292. doi: 10.1109/TIFS.2024.3414667.

[13] ZHANG Guoqing, ZHOU Jieqiong, ZHENG Yuhui, et al. Adaptive transformer with pyramid fusion for cloth-changing person re-identification[J]. Pattern Recognition, 2025, 163: 111443. doi: 10.1016/j.patcog.2025.111443.

[14] HE Kaiming, ZHANG Xiangyu, REN Shaqing, et al. Deep residual learning for image recognition[C]. Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770–778. doi: 10.1109/CVPR.2016.90.

[15] LUO Hao, GU Youzhi, LIAO Xingyu, et al. Bag of tricks and a strong baseline for deep person re-identification[C]. Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, USA, 2019: 1487–1495. doi: 10.1109/CVPRW.2019.00190.

[16] CHEN Weihua, CHEN Xiaotang, ZHANG Jianguo, et al. Beyond triplet loss: A deep quadruplet network for person re-identification[C]. Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 1320–1329. doi: 10.1109/CVPR.2017.145.

[17] QIAN Xuelin, WANG Wenxuan, ZHANG Li, et al. Long-term cloth-changing person re-identification[C]. Proceedings of the 15th Asian Conference on Computer Vision, Kyoto, Japan, 2020: 71–88. doi: 10.1007/978-3-030-69535-4_5.

[18] YANG Qize, WU Ancong, and ZHENG Weishi. Person re-identification by contour sketch under moderate clothing change[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021, 43(6): 2029–2046. doi: 10.1109/TPAMI.2019.2960509.

[19] HUANG Yan, XU Jingsong, WU Qiang, et al. Beyond scalar neuron: Adopting vector-neuron capsules for long-term person re-identification[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2020, 30(10): 3459–3471. doi: 10.1109/TCSVT.2019.2948093.

[20] XU Peng and ZHU Xiatian. DeepChange: A long-term person re-identification benchmark with clothes change[C]. Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision, Paris, France, 2023: 11162–11171. doi: 10.1109/ICCV51070.2023.01028.

[21] ZHENG Liang, SHEN Liyue, TIAN Lu, et al. Scalable person re-identification: A benchmark[C]. Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 2015: 1116–1124. doi: 10.1109/ICCV.2015.133.

[22] KRIZHEVSKY A, SUTSKEVER I, and HINTON G E. ImageNet classification with deep convolutional neural networks[J]. Communications of the ACM, 2017, 60(6): 84–90. doi: 10.1145/3065386.

[23] CHAN P P K, HU Xiaoman, SONG Haorui, et al. Learning disentangled features for person re-identification under clothes changing[J]. ACM Transactions on Multimedia Computing, Communications and Applications, 2023, 19(6): 1–21. doi: 10.1145/3584359.

[24] LIU Yuxuan, GE Hongwei, WANG Zhen, et al. Clothes-changing person re-identification via universal framework with association and forgetting learning[J]. IEEE Transactions on Multimedia, 2024, 26: 4294–4307. doi: 10.1109/TMM.2023.3321498.

[25] GUO Peini, LIU Hong, WU Jianbing, et al. Semantic-aware consistency network for cloth-changing person re-identification[C]. Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, Canada, 2023: 8730–8739. doi: 10.1145/3581783.3612416.
