Bimodal Emotion Recognition Method Based on Dual-stream Attention and Adversarial Mutual Reconstruction


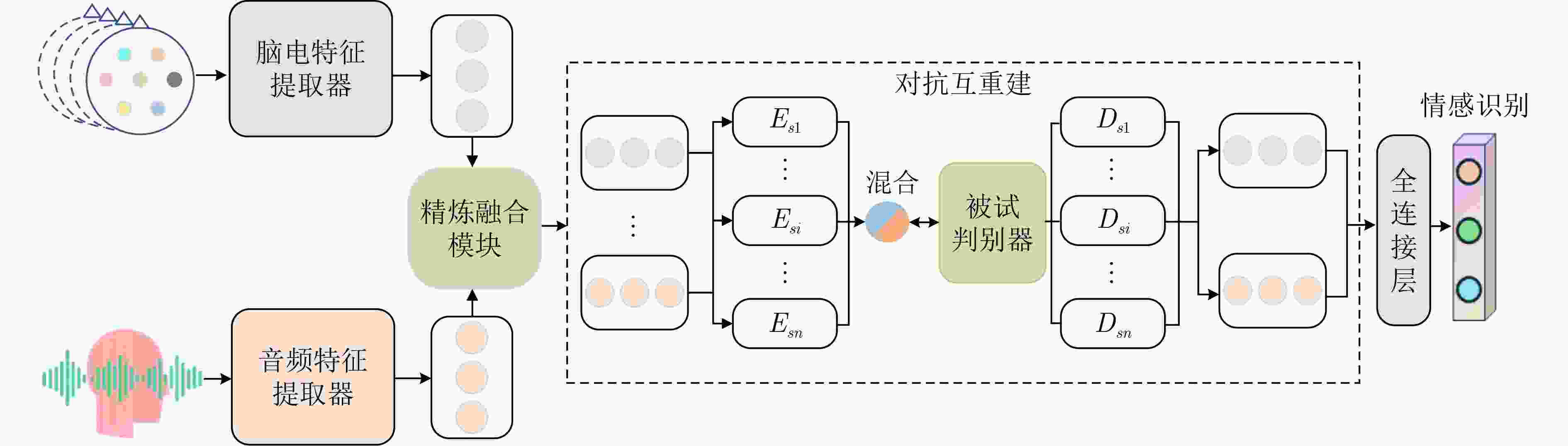

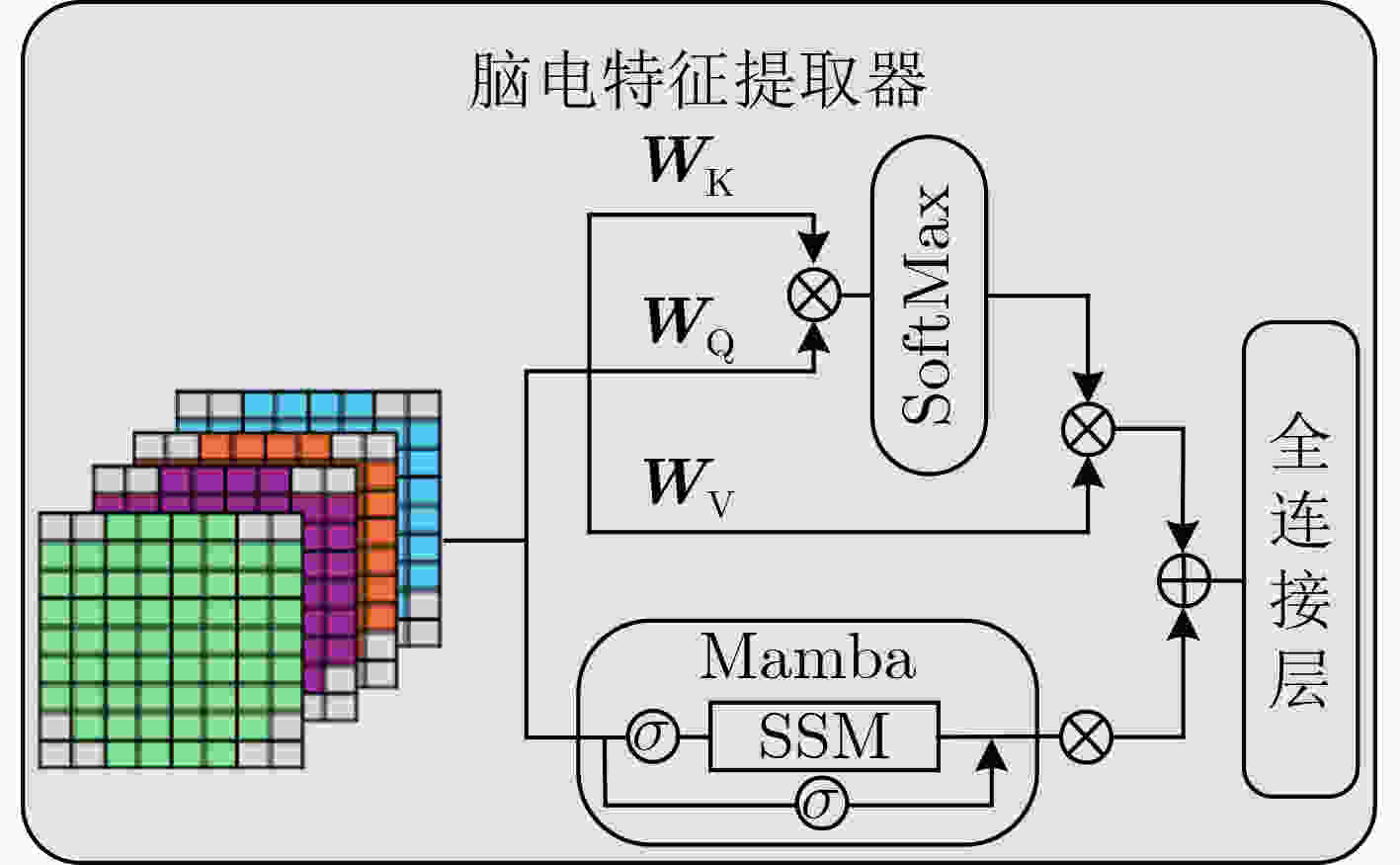

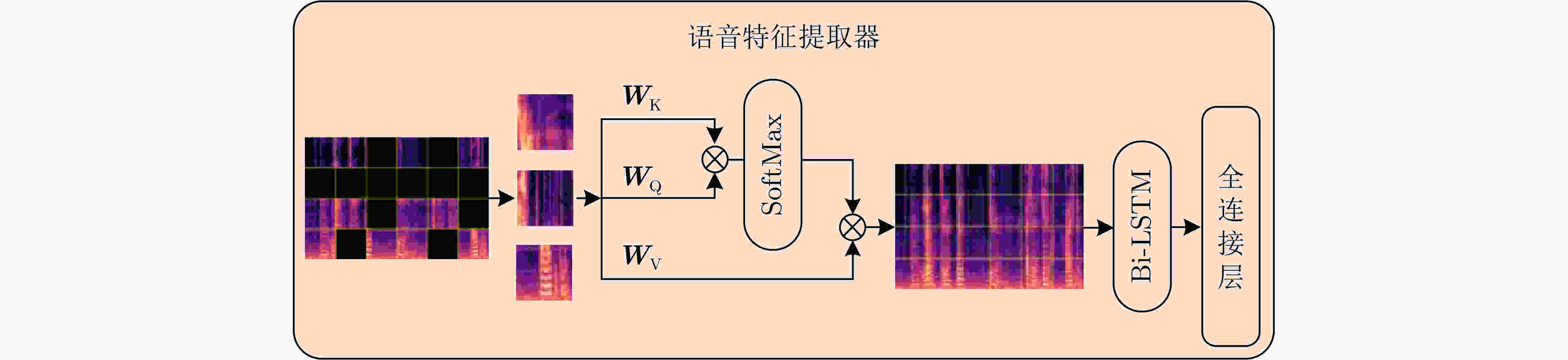

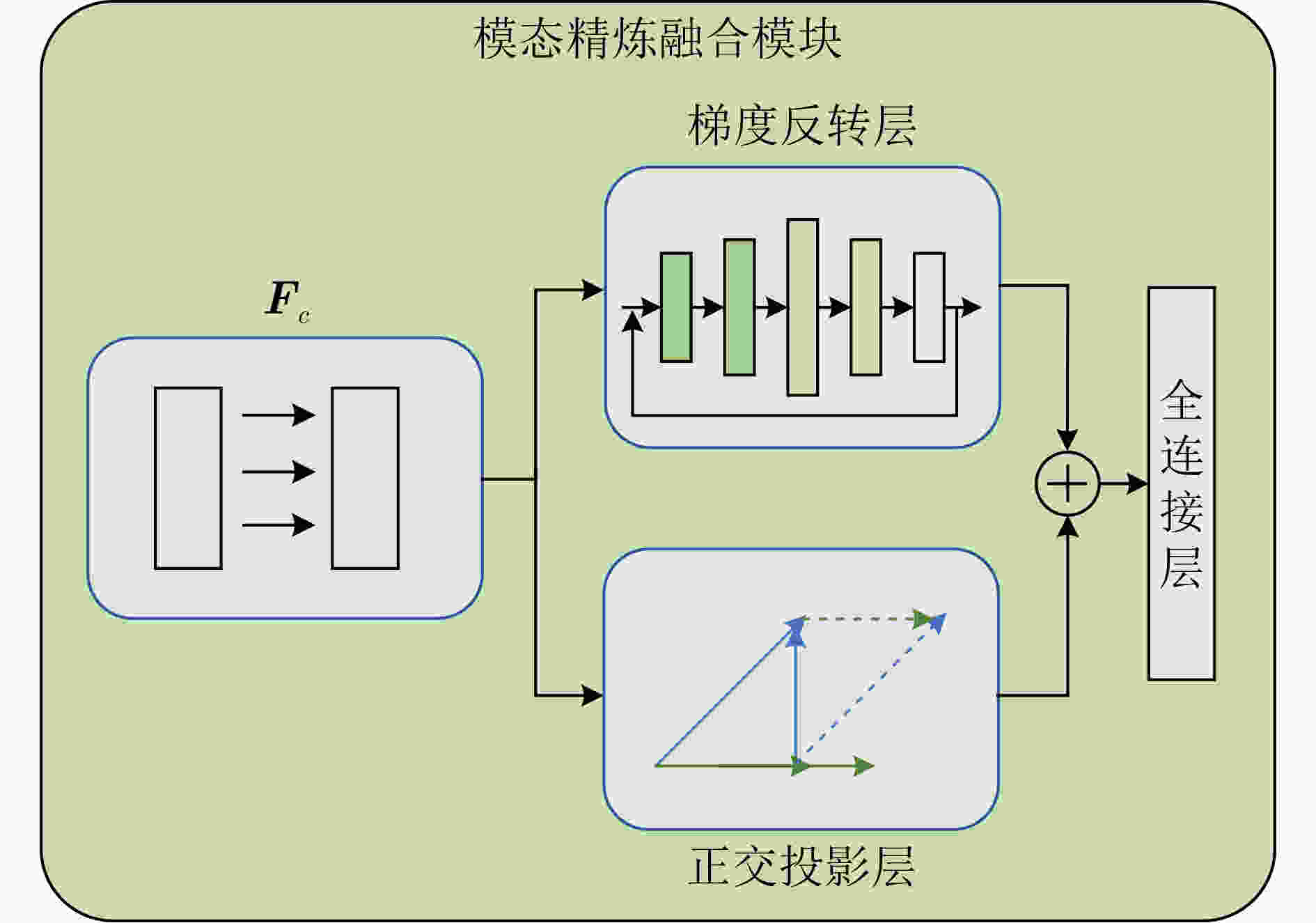

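Summary: With the continued development of affective computing, emotion recognition based on multimodal signals has attracted wide attention. EEG emotion signals differ substantially in distribution across subjects, which lowers classification accuracy. To address the heavy noise and pronounced individual differences of single-modality emotion recognition, this paper combines electroencephalography (EEG) and speech signals and proposes a bimodal emotion recognition method based on dual-stream attention and adversarial mutual reconstruction. For the EEG modality, a dual feature extractor that integrates time-frame-channel joint attention with a Mamba network is designed to model key temporal segments and spectral features in depth. For the speech modality, a frame-level random masking mechanism and a bidirectional long short-term memory network are introduced to strengthen the model's ability to capture changes in speech emotion and its resistance to interference. A modality refinement fusion module introduces a gradient reversal layer and an orthogonal projection mechanism to improve modality alignment and discriminability. In addition, an adversarial mutual reconstruction mechanism reconstructs same-class emotion features in a shared latent space to achieve consistent cross-subject modeling. Experiments on several benchmark datasets, including MAHNOB-HCI, EAV, and SEED, verify the effectiveness of the method. The results show that the model has clear advantages in cross-subject emotion recognition and modality fusion, providing an effective solution for multimodal affective computing.

Abstract: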

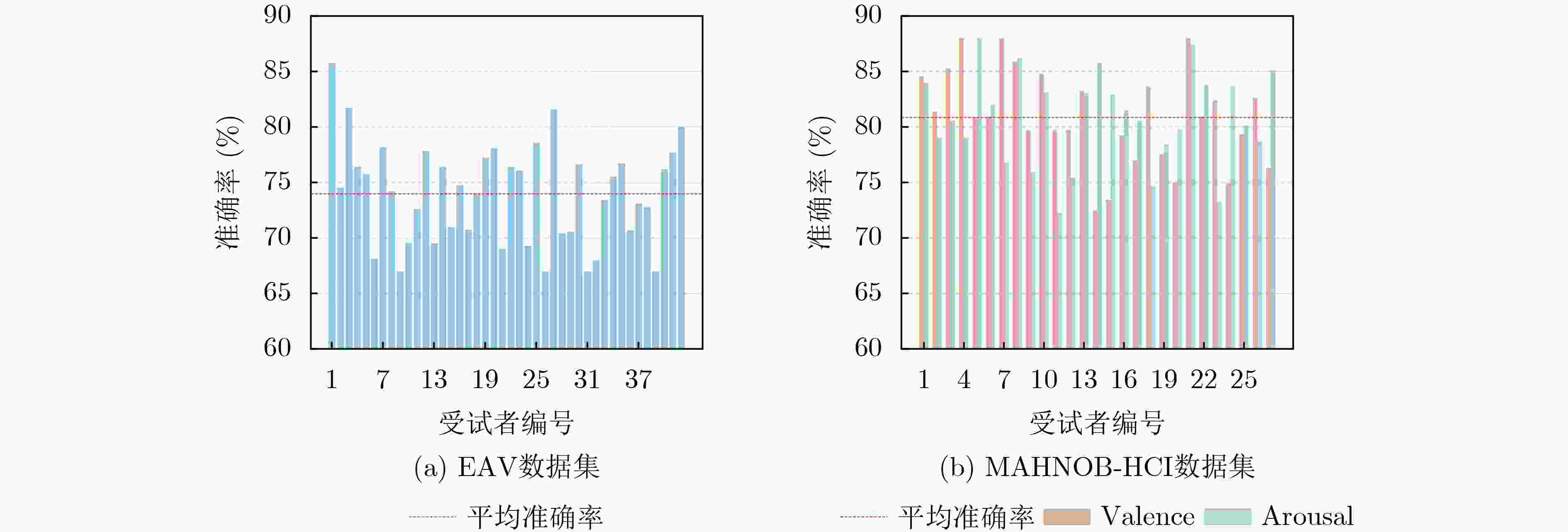

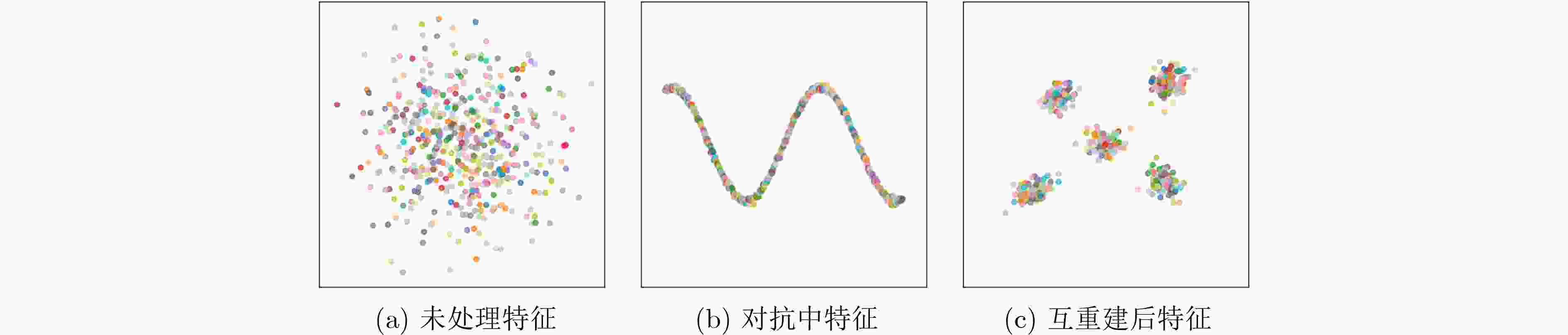

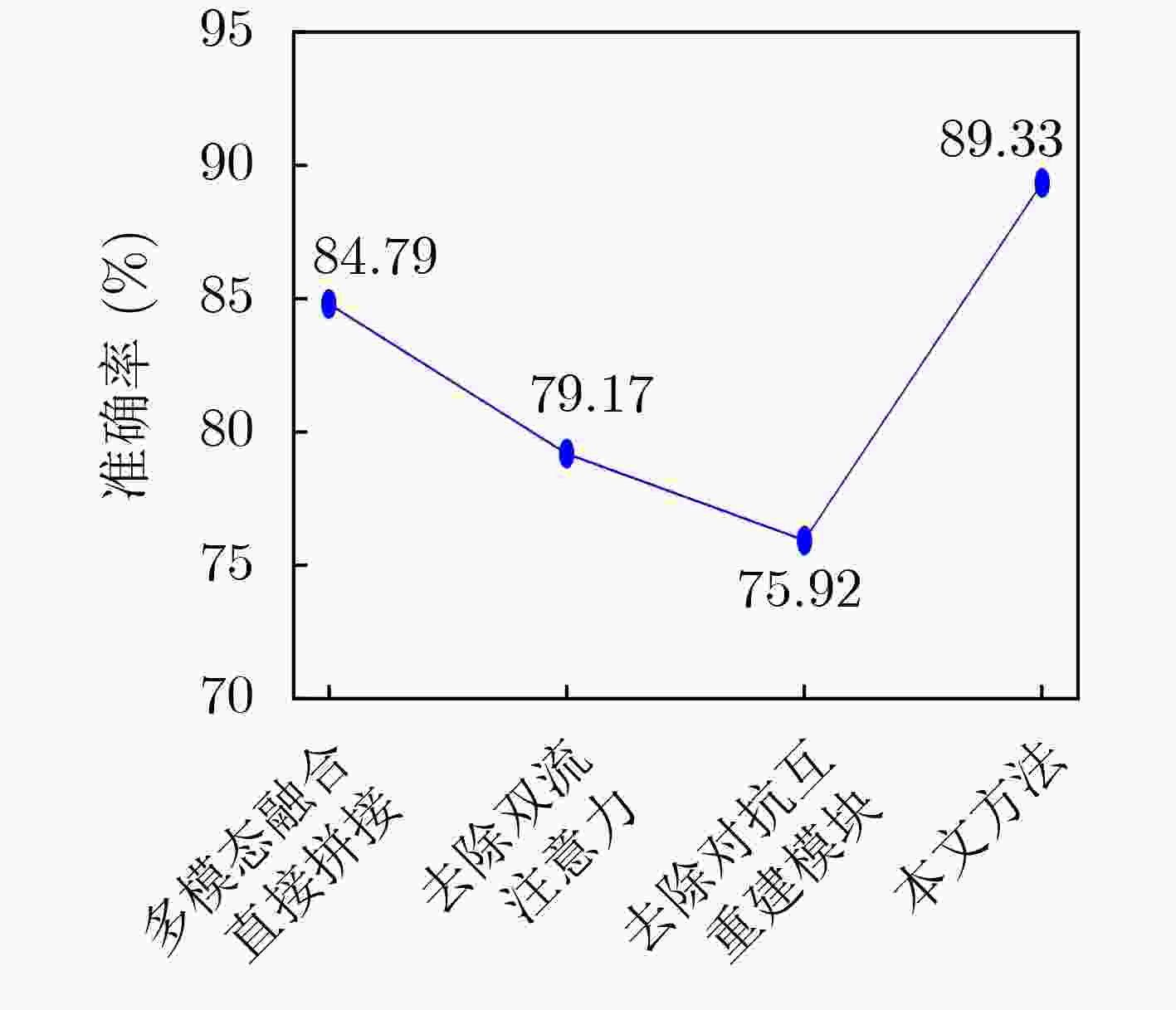

Objective: This paper proposes a bimodal emotion recognition method that integrates electroencephalography (EEG) and speech signals to address the noise sensitivity and inter-subject variability that limit single-modality emotion recognition systems. Although emotion recognition research has made substantial progress, cross-subject recognition accuracy remains limited, and performance is strongly affected by noise. For EEG signals, physiological differences among subjects lead to large variations in emotion classification performance. Speech signals are likewise sensitive to environmental noise and data loss. This study aims to develop a dual-modality recognition framework that combines EEG and speech signals to improve robustness, stability, and generalization.

Methods: The proposed method uses two independent feature extractors for EEG and speech signals. For EEG, a dual feature extractor integrating time-frame-channel joint attention and state-space modeling is designed to capture salient temporal and spectral features. For speech, a Bidirectional Long Short-Term Memory (Bi-LSTM) network with a frame-level random masking strategy is adopted to improve robustness to missing or noisy speech segments. A modality refinement fusion module is constructed using gradient reversal and orthogonal projection to enhance feature alignment and discriminability. In addition, an adversarial mutual reconstruction mechanism is applied to enforce consistent emotion feature reconstruction across subjects within a shared latent space.

Results and Discussions: The proposed method is evaluated on multiple benchmark datasets, including MAHNOB-HCI, EAV, and SEED. Under cross-subject validation on the MAHNOB-HCI dataset, the model achieves accuracies of 81.09% for valence and 80.11% for arousal, outperforming several existing approaches. In five-fold cross-validation, accuracies increase to 98.14% for valence and 98.37% for arousal, demonstrating strong generalization and stability. On the EAV dataset, the proposed model attains an accuracy of 73.29%, which exceeds the 60.85% achieved by conventional Convolutional Neural Network (CNN)-based methods. In single-modality experiments on the SEED dataset, an accuracy of 89.33% is obtained, confirming the effectiveness of the dual-stream attention mechanism and adversarial mutual reconstruction for improving cross-subject generalization.

Conclusions: The proposed dual-stream attention and adversarial mutual reconstruction framework effectively addresses challenges in cross-subject emotion recognition and multimodal fusion for affective computing. The method demonstrates strong robustness to individual differences and noise, supporting its applicability in real-world human–computer interaction systems.
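The frame-level random masking strategy described for the speech branch can be illustrated with a minimal, framework-free sketch. The abstract does not specify the masking ratio, the fill value, or the feature layout, so `mask_frames`, `mask_ratio`, and `mask_value` below are hypothetical names and settings chosen for illustration only, not the authors' implementation.

```python
import random


def mask_frames(frames, mask_ratio=0.15, mask_value=0.0, rng=None):
    """Return a masked copy of `frames` and the indices of the masked frames.

    `frames` is a list of per-frame feature vectors (lists of floats).
    A random subset of whole frames is overwritten with `mask_value`,
    simulating missing or corrupted speech segments during training.
    The ratio and fill value are assumptions, not values from the paper.
    """
    rng = rng or random.Random()
    n_frames = len(frames)
    n_masked = int(round(mask_ratio * n_frames))
    # Sample distinct frame indices to mask.
    masked_idx = set(rng.sample(range(n_frames), n_masked))
    masked = [
        [mask_value] * len(frame) if i in masked_idx else list(frame)
        for i, frame in enumerate(frames)
    ]
    return masked, sorted(masked_idx)


if __name__ == "__main__":
    # Ten frames of three-dimensional toy features.
    features = [[float(t + d) for d in range(1, 4)] for t in range(10)]
    masked, idx = mask_frames(features, mask_ratio=0.2, rng=random.Random(0))
    print("masked frame indices:", idx)
```

In a training loop, a fresh mask would typically be drawn for every batch so that the downstream Bi-LSTM sees different missing segments each time. Masking would normally be disabled at evaluation time.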

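Table 1. Experimental results on the MAHNOB-HCI dataset

| Validation method | Model | Valence accuracy (%) | Arousal accuracy (%) |
| --- | --- | --- | --- |
| Cross-subject validation | SVM[29] | 59.63 | 61.82 |
| Cross-subject validation | KNN[30] | 60.27 | 63.71 |
| Cross-subject validation | Huang et al.[31] | 75.63 | 74.17 |
| Cross-subject validation | Li et al.[32] | 70.04 | 72.14 |
| Cross-subject validation | Zhang et al.[33] | 73.55 | 74.34 |
| Cross-subject validation | Sun et al.[12] | 79.99 | 78.59 |
| Cross-subject validation | Proposed method | 81.09 | 80.11 |
| Subject-dependent cross-validation | SVM[29] | 73.19 | 73.94 |
| Subject-dependent cross-validation | KNN[30] | 76.64 | 77.24 |
| Subject-dependent cross-validation | Salama et al.[34] | 96.13 | 96.79 |
| Subject-dependent cross-validation | Zhang et al.[33] | 96.82 | 97.79 |
| Subject-dependent cross-validation | Chen et al.[35] | 89.96 | 90.37 |
| Subject-dependent cross-validation | Wang et al.[36] | 96.69 | 96.26 |
| Subject-dependent cross-validation | Sun et al.[12] | 97.02 | 97.36 |
| Subject-dependent cross-validation | Proposed method | 98.14 | 98.37 |

Table 2. Experimental results on the EAV dataset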

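Table 3. Experimental results on the SEED dataset

| Validation method | Model | Accuracy (%) | Standard deviation |
| --- | --- | --- | --- |
| Cross-subject validation | SVM[29] | 56.73 | 16.29 |
| Cross-subject validation | KNN[30] | 73.93 | 9.95 |
| Cross-subject validation | DGCNN[38] | 79.95 | 9.02 |
| Cross-subject validation | GMSS[39] | 86.52 | 6.22 |
| Cross-subject validation | DG-DANN[40] | 84.31 | 8.32 |
| Cross-subject validation | PPDA[41] | 86.72 | 7.17 |
| Cross-subject validation | Proposed method | 89.33 | 5.79 |
| Subject-dependent cross-validation | SVM[29] | 71.62 | 14.45 |
| Subject-dependent cross-validation | KNN[30] | 79.24 | 12.94 |
| Subject-dependent cross-validation | DGCNN[38] | 92.27 | 10.11 |
| Subject-dependent cross-validation | GMSS[39] | 89.18 | 9.74 |
| Subject-dependent cross-validation | DG-DANN[40] | 91.74 | 9.22 |
| Subject-dependent cross-validation | Proposed method | 94.81 | 9.31 |

Table 4. Ablation results for the reversal strength coefficient $\lambda$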

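| Reversal strength coefficient | Accuracy (%) | Standard deviation | Remarks |
| --- | --- | --- | --- |
| $\lambda = 0.05$ | 82.12 | 7.03 | Mild reversal; weak debiasing effect |
| $\lambda = 0.1$ | 89.33 | 5.79 | Best performance |
| $\lambda = 0.2$ | 85.95 | 6.11 | Stronger reversal |
| $\lambda = 0.5$ | 79.57 | 9.56 | Excessive reversal; generalization degrades |

Table 5. Ablation results for the loss functions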

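| Ablation setting | Retained loss terms | Accuracy (%) |
| --- | --- | --- |
| Without modality alignment loss | $L_{\mathrm{adv}}$, $L_{\mathrm{rec}}$ | 86.16 |
| Without mutual reconstruction loss | $L_{\mathrm{adv}}$, $L_{\mathrm{ali}}$ | 87.02 |
| Without subject adversarial loss | $L_{\mathrm{ali}}$, $L_{\mathrm{rec}}$ | 86.29 |
| Full model | $L_{\mathrm{adv}}$, $L_{\mathrm{ali}}$, $L_{\mathrm{rec}}$ | 89.33 |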
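The role of the reversal strength coefficient $\lambda$ studied in Table 4 can be made concrete with a small sketch of a gradient reversal layer. It assumes the standard formulation (identity in the forward pass, gradient multiplied by $-\lambda$ in the backward pass). The class name `GradientReversal` and the hand-written backward pass are illustrative only; a real model would rely on an autodiff framework, and the paper's actual layer placement is not given in this section.

```python
class GradientReversal:
    """Identity in the forward pass; scales gradients by -lam in the backward pass.

    `lam` plays the role of the reversal strength coefficient. The default of
    0.1 mirrors the best-performing setting reported in Table 4.
    """

    def __init__(self, lam=0.1):
        self.lam = lam

    def forward(self, features):
        # Features reach the adversarial classifier unchanged.
        return list(features)

    def backward(self, grad_output):
        # The feature extractor receives the negated, scaled gradient, so it is
        # pushed to make the adversarial task harder (e.g. to hide subject
        # identity), while the adversarial classifier itself trains normally.
        return [-self.lam * g for g in grad_output]


if __name__ == "__main__":
    grl = GradientReversal(lam=0.1)
    features = [0.5, -1.0, 2.0]
    grad_from_adversary = [1.0, -2.0, 4.0]
    print("forward :", grl.forward(features))
    print("backward:", grl.backward(grad_from_adversary))
```

Under this formulation a small $\lambda$ passes only a weak adversarial signal back to the feature extractor, while a large $\lambda$ lets the adversarial objective dominate the task gradient. This is consistent with the remarks in Table 4 for $\lambda = 0.05$ and $\lambda = 0.5$.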

[1] LI Wei, HUAN Wei, HOU Bowen, et al. Can emotion be transferred?—A review on transfer learning for EEG-based emotion recognition[J]. IEEE Transactions on Cognitive and Developmental Systems, 2022, 14(3): 833–846. doi: 10.1109/TCDS.2021.3098842.

[2] LI Wei, FANG Cheng, ZHU Zhihao, et al. Fractal spiking neural network scheme for EEG-based emotion recognition[J]. IEEE Journal of Translational Engineering in Health and Medicine, 2024, 12: 106–118. doi: 10.1109/JTEHM.2023.3320132.

[3] HAMADA M, ZAIDAN B B, and ZAIDAN A A. A systematic review for human EEG brain signals based emotion classification, feature extraction, brain condition, group comparison[J]. Journal of Medical Systems, 2018, 42(9): 162. doi: 10.1007/s10916-018-1020-8.

[4] YAO Hongxun, DENG Weihong, LIU Honghai, et al. An overview of research development of affective computing and understanding[J]. Journal of Image and Graphics, 2022, 27(6): 2008–2035. doi: 10.11834/jig.220085.

[5] MA Jiaxin, TANG Hao, ZHENG Weilong, et al. Emotion recognition using multimodal residual LSTM network[C]. The 27th ACM International Conference on Multimedia, Nice, France, 2019: 176–183. doi: 10.1145/3343031.3350871.

[6] LI Mu and LU Baoliang. Emotion classification based on gamma-band EEG[C]. 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Minneapolis, USA, 2009: 1223–1226. doi: 10.1109/IEMBS.2009.5334139.

[7] FRANTZIDIS C A, BRATSAS C, PAPADELIS C L, et al. Toward emotion aware computing: An integrated approach using multichannel neurophysiological recordings and affective visual stimuli[J]. IEEE Transactions on Information Technology in Biomedicine, 2010, 14(3): 589–597. doi: 10.1109/TITB.2010.2041553.

[8] LAWHERN V J, SOLON A J, WAYTOWICH N R, et al. EEGNet: A compact convolutional neural network for EEG-based brain–computer interfaces[J]. Journal of Neural Engineering, 2018, 15(5): 056013. doi: 10.1088/1741-2552/aace8c.

[9] YANG Yilong, WU Qingfeng, FU Yazhen, et al. Continuous convolutional neural network with 3D input for EEG-based emotion recognition[C]. The 25th International Conference on Neural Information Processing, Siem Reap, Cambodia, 2018: 433–443. doi: 10.1007/978-3-030-04239-4_39.

[10] DU Xiaobing, MA Cuixia, ZHANG Guanhua, et al. An efficient LSTM network for emotion recognition from multichannel EEG signals[J]. IEEE Transactions on Affective Computing, 2022, 13(3): 1528–1540. doi: 10.1109/TAFFC.2020.3013711.

[11] SHEN Jian, LI Kunlin, LIANG Huajian, et al. HEMAsNet: A hemisphere asymmetry network inspired by the brain for depression recognition from electroencephalogram signals[J]. IEEE Journal of Biomedical and Health Informatics, 2024, 28(9): 5247–5259. doi: 10.1109/JBHI.2024.3404664.

[12] SUN Qiang and CHEN Yuan. Bimodal emotion recognition with adaptive integration of multi-level spatial-temporal features and specific-shared feature fusion[J]. Journal of Electronics & Information Technology, 2024, 46(2): 574–587. doi: 10.11999/JEIT231110.

[13] LI Chao, BIAN Ning, ZHAO Ziping, et al. Multi-view domain-adaptive representation learning for EEG-based emotion recognition[J]. Information Fusion, 2024, 104: 102156. doi: 10.1016/j.inffus.2023.102156.

[14] CHUANG Zejing and WU C H. Multi-modal emotion recognition from speech and text[J]. International Journal of Computational Linguistics & Chinese Language Processing, 2004, 9(2): 45–62.

[15] ZHENG Wenbo, YAN Lan, and WANG Feiyue. Two birds with one stone: Knowledge-embedded temporal convolutional transformer for depression detection and emotion recognition[J]. IEEE Transactions on Affective Computing, 2023, 14(4): 2595–2613. doi: 10.1109/TAFFC.2023.3282704.

[16] NING Zhaolong, HU Hao, YI Ling, et al. A depression detection auxiliary decision system based on multi-modal feature-level fusion of EEG and speech[J]. IEEE Transactions on Consumer Electronics, 2024, 70(1): 3392–3402. doi: 10.1109/TCE.2024.3370310.

[17] YANG Yang, ZHAN Dechuan, JIANG Yuan, et al. Reliable multi-modal learning: A survey[J]. Journal of Software, 2021, 32(4): 1067–1081. doi: 10.13328/j.cnki.jos.006167.

[18] JENKE R, PEER A, and BUSS M. Feature extraction and selection for emotion recognition from EEG[J]. IEEE Transactions on Affective Computing, 2014, 5(3): 327–339. doi: 10.1109/TAFFC.2014.2339834.

[19] HOU Fazheng, GAO Qiang, SONG Yu, et al. Deep feature pyramid network for EEG emotion recognition[J]. Measurement, 2022, 201: 111724. doi: 10.1016/j.measurement.2022.111724.

[20] ZHANG Jianhua, YIN Zhong, CHEN Peng, et al. Emotion recognition using multi-modal data and machine learning techniques: A tutorial and review[J]. Information Fusion, 2020, 59: 103–126. doi: 10.1016/j.inffus.2020.01.011.

[21] GU A and DAO T. Mamba: Linear-time sequence modeling with selective state spaces[J]. arXiv preprint arXiv:2312.00752, 2023.

[22] XUE Peiyun, DAI Shutao, BAI Jing, et al. Emotion recognition with speech and facial images[J]. Journal of Electronics & Information Technology, 2024, 46(12): 4542–4552. doi: 10.11999/JEIT240087.

[23] HUANG Poyao, XU Hu, LI Juncheng, et al. Masked autoencoders that listen[C]. The 36th International Conference on Neural Information Processing Systems, New Orleans, USA, 2022: 2081.

[24] LI Youjun, HUANG Jiajin, WANG Haiyuan, et al. Study of emotion recognition based on fusion multi-modal bio-signal with SAE and LSTM recurrent neural network[J]. Journal on Communications, 2017, 38(12): 109–120. doi: 10.11959/j.issn.1000-436x.2017294.

[25] WANG Yiming, ZHANG Bin, and TANG Yujiao. DMMR: Cross-subject domain generalization for EEG-based emotion recognition via denoising mixed mutual reconstruction[C]. The 38th AAAI Conference on Artificial Intelligence, Vancouver, Canada, 2024: 628–636. doi: 10.1609/aaai.v38i1.27819.

[26] SOLEYMANI M, LICHTENAUER J, PUN T, et al. A multimodal database for affect recognition and implicit tagging[J]. IEEE Transactions on Affective Computing, 2012, 3(1): 42–55. doi: 10.1109/T-AFFC.2011.25.

[27] LEE M H, SHOMANOV A, BEGIM B, et al. EAV: EEG-audio-video dataset for emotion recognition in conversational contexts[J]. Scientific Data, 2024, 11(1): 1026. doi: 10.1038/s41597-024-03838-4.

[28] ZHENG Weilong and LU Baoliang. Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks[J]. IEEE Transactions on Autonomous Mental Development, 2015, 7(3): 162–175. doi: 10.1109/TAMD.2015.2431497.

[29] SUYKENS J A K and VANDEWALLE J. Least squares support vector machine classifiers[J]. Neural Processing Letters, 1999, 9(3): 293–300. doi: 10.1023/A:1018628609742.

[30] COVER T and HART P. Nearest neighbor pattern classification[J]. IEEE Transactions on Information Theory, 1967, 13(1): 21–27. doi: 10.1109/TIT.1967.1053964.

[31] HUANG Yongrui, YANG Jianhao, LIU Siyu, et al. Combining facial expressions and electroencephalography to enhance emotion recognition[J]. Future Internet, 2019, 11(5): 105. doi: 10.3390/fi11050105.

[32] LI Ruixin, LIANG Yan, LIU Xiaojian, et al. MindLink-eumpy: An open-source python toolbox for multimodal emotion recognition[J]. Frontiers in Human Neuroscience, 2021, 15: 621493. doi: 10.3389/fnhum.2021.621493.

[33] ZHANG Yuhao, HOSSAIN Z, and RAHMAN S. DeepVANet: A deep end-to-end network for multi-modal emotion recognition[C]. The 18th International Conference on Human-Computer Interaction, Bari, Italy, 2021: 227–237. doi: 10.1007/978-3-030-85613-7_16.

[34] SALAMA E S, EL-KHORIBI R A, SHOMAN M E, et al. A 3D-convolutional neural network framework with ensemble learning techniques for multi-modal emotion recognition[J]. Egyptian Informatics Journal, 2021, 22(2): 167–176. doi: 10.1016/j.eij.2020.07.005.

[35] CHEN Jingxia, LIU Yang, XUE Wen, et al. Multimodal EEG emotion recognition based on the attention recurrent graph convolutional network[J]. Information, 2022, 13(11): 550. doi: 10.3390/info13110550.

[36] WANG Shuai, QU Jingzi, ZHANG Yong, et al. Multimodal emotion recognition from EEG signals and facial expressions[J]. IEEE Access, 2023, 11: 33061–33068. doi: 10.1109/ACCESS.2023.3263670.

[37] YIN Kang, SHIN H B, LI Dan, et al. EEG-based multimodal representation learning for emotion recognition[C]. 2025 13th International Conference on Brain-Computer Interface, Gangwon, Republic of Korea, 2025: 1–4. doi: 10.1109/BCI65088.2025.10931743.

[38] SONG Tengfei, ZHENG Wenming, SONG Peng, et al. EEG emotion recognition using dynamical graph convolutional neural networks[J]. IEEE Transactions on Affective Computing, 2020, 11(3): 532–541. doi: 10.1109/TAFFC.2018.2817622.

[39] LI Yang, CHEN Ji, LI Fu, et al. GMSS: Graph-based multi-task self-supervised learning for EEG emotion recognition[J]. IEEE Transactions on Affective Computing, 2023, 14(3): 2512–2525. doi: 10.1109/TAFFC.2022.3170428.

[40] MA Boqun, LI He, ZHENG Weilong, et al. Reducing the subject variability of EEG signals with adversarial domain generalization[C]. The 26th International Conference on Neural Information Processing, Sydney, Australia, 2019: 30–42. doi: 10.1007/978-3-030-36708-4_3.

[41] ZHAO Liming, YAN Xu, and LU Baoliang. Plug-and-play domain adaptation for cross-subject EEG-based emotion recognition[C/OL]. The 35th AAAI Conference on Artificial Intelligence, Beijing, China, 2021: 863–870. doi: 10.1609/aaai.v35i1.16169.
