Enhanced Super-Resolution-based Dual-Path Short-Term Dense Concatenate Metric Change Detection Network for Heterogeneous Remote Sensing Images


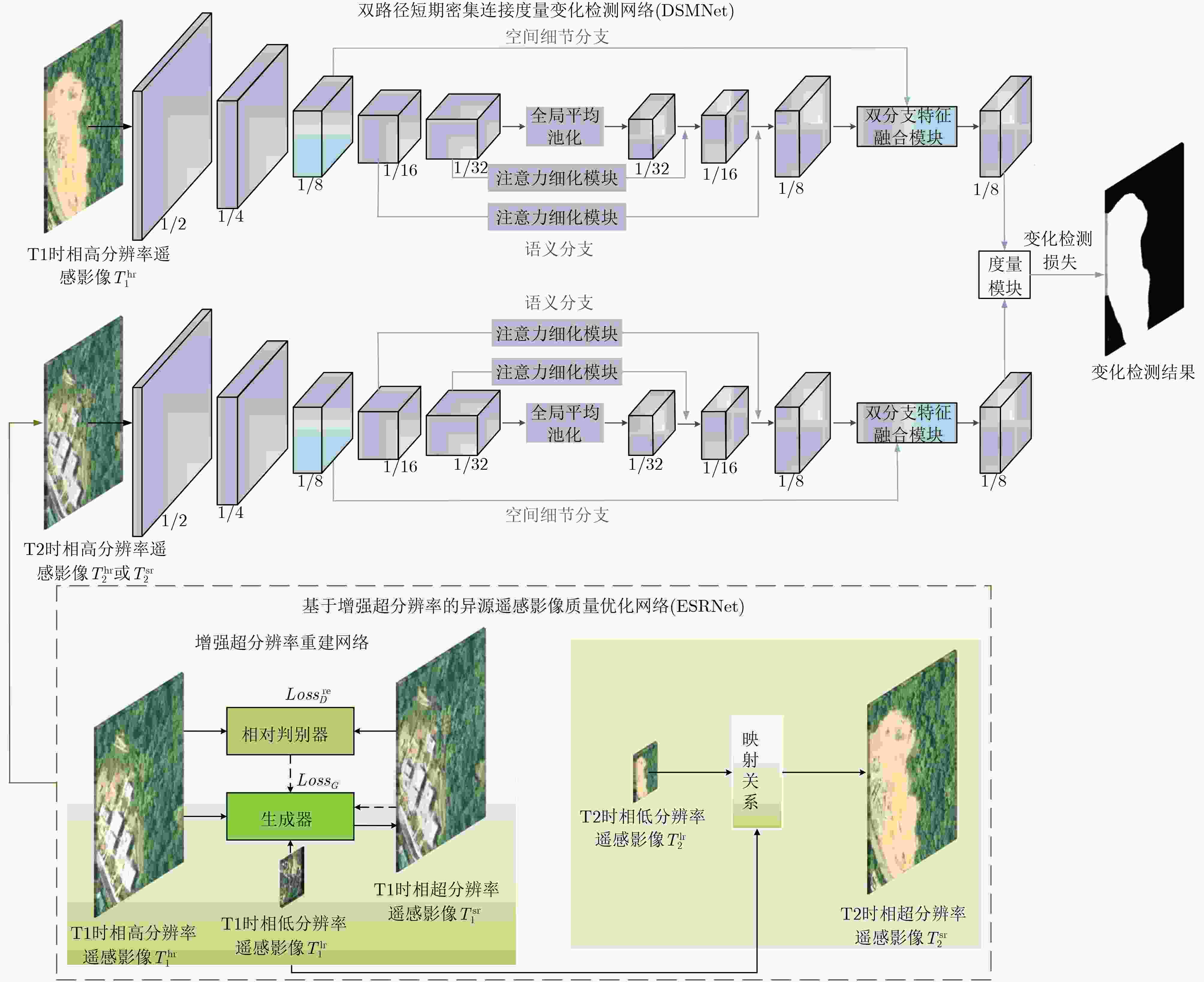

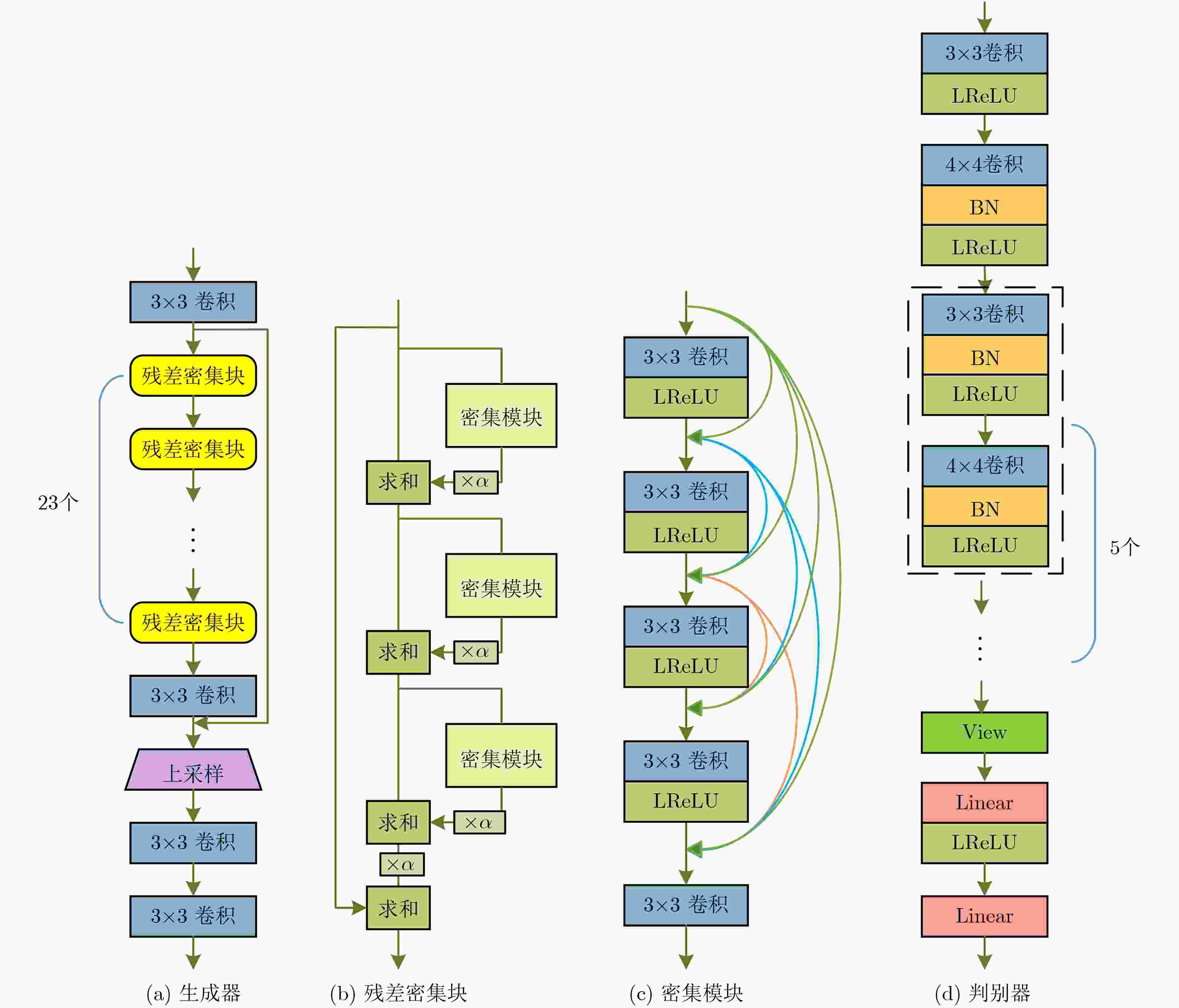

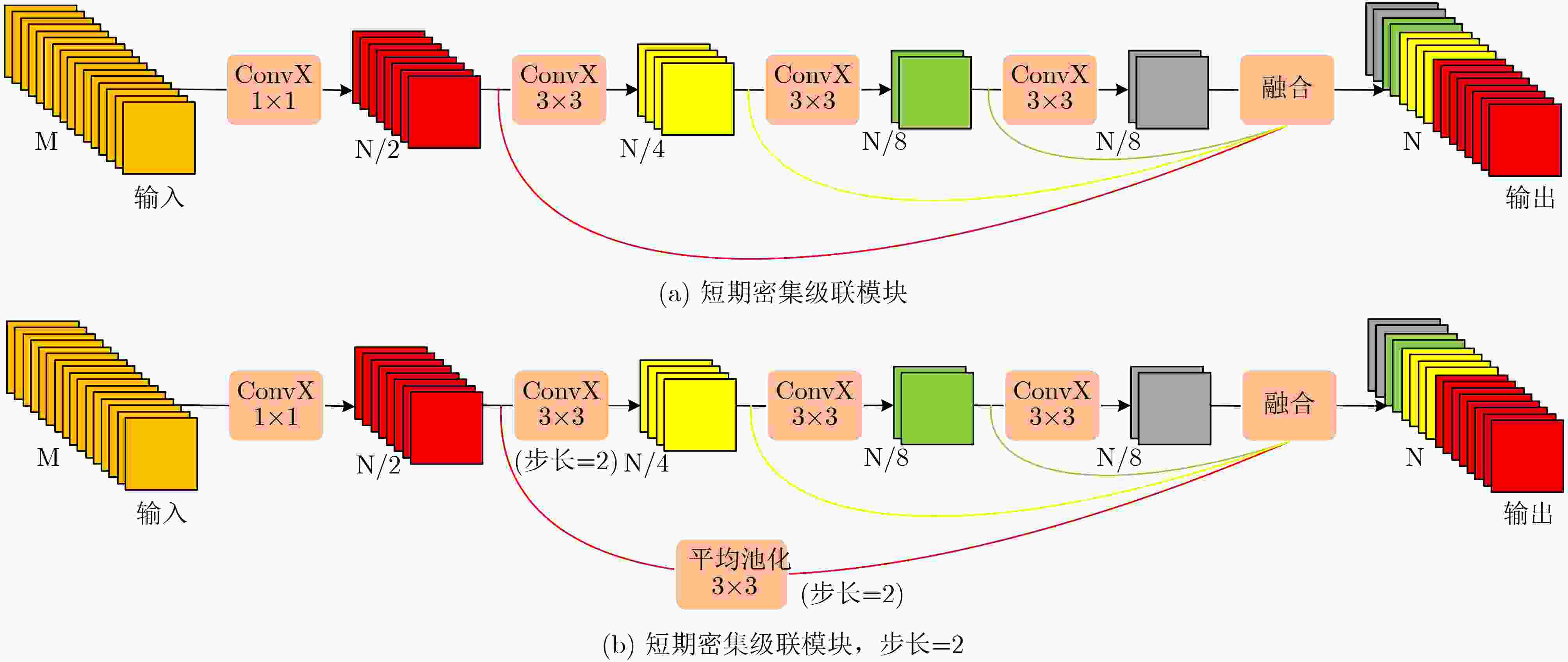

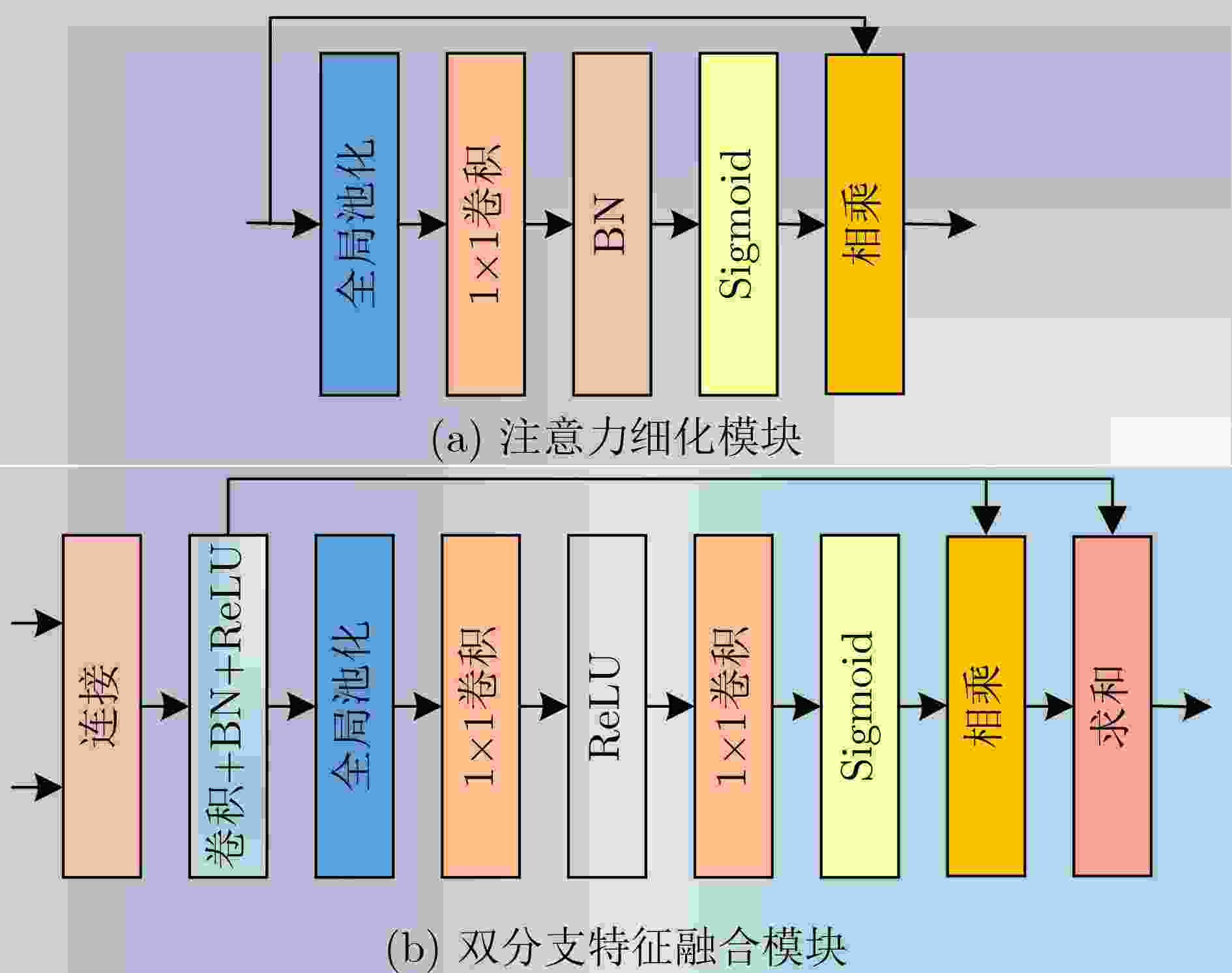

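Summary: Change detection in optical heterogeneous high-resolution remote sensing images faces spatial resolution differences, spectral differences, and complex, diverse change types, all of which make accurate and efficient detection harder. To address these problems, this paper proposes an enhanced super-resolution-based dual-path short-term dense concatenate metric change detection network for heterogeneous remote sensing images (ESR-DSMNet), exploring a new route to high-accuracy, high-efficiency change detection in such images. First, an enhanced super-resolution-based quality optimization network for heterogeneous remote sensing images (ESRNet) is proposed; it enhances edge and detail information while resolving the spatial resolution difference at the image level. Second, a dual-path short-term dense concatenate metric change detection network (DSMNet) is proposed; it resolves the spectral difference at the feature level and achieves high-accuracy, high-efficiency change detection. Comparative analysis on four homologous and heterogeneous remote sensing image datasets shows that the proposed method outperforms 12 other mainstream change detection methods, with F1 values of 79.69%, 71.01%, 95.87%, and 90.55%, respectively. The proposed method offers higher accuracy and efficiency and the best generalization performance; when detecting both large-area and very small ground objects, its results are more internally consistent and have finer edges.

Abstract: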

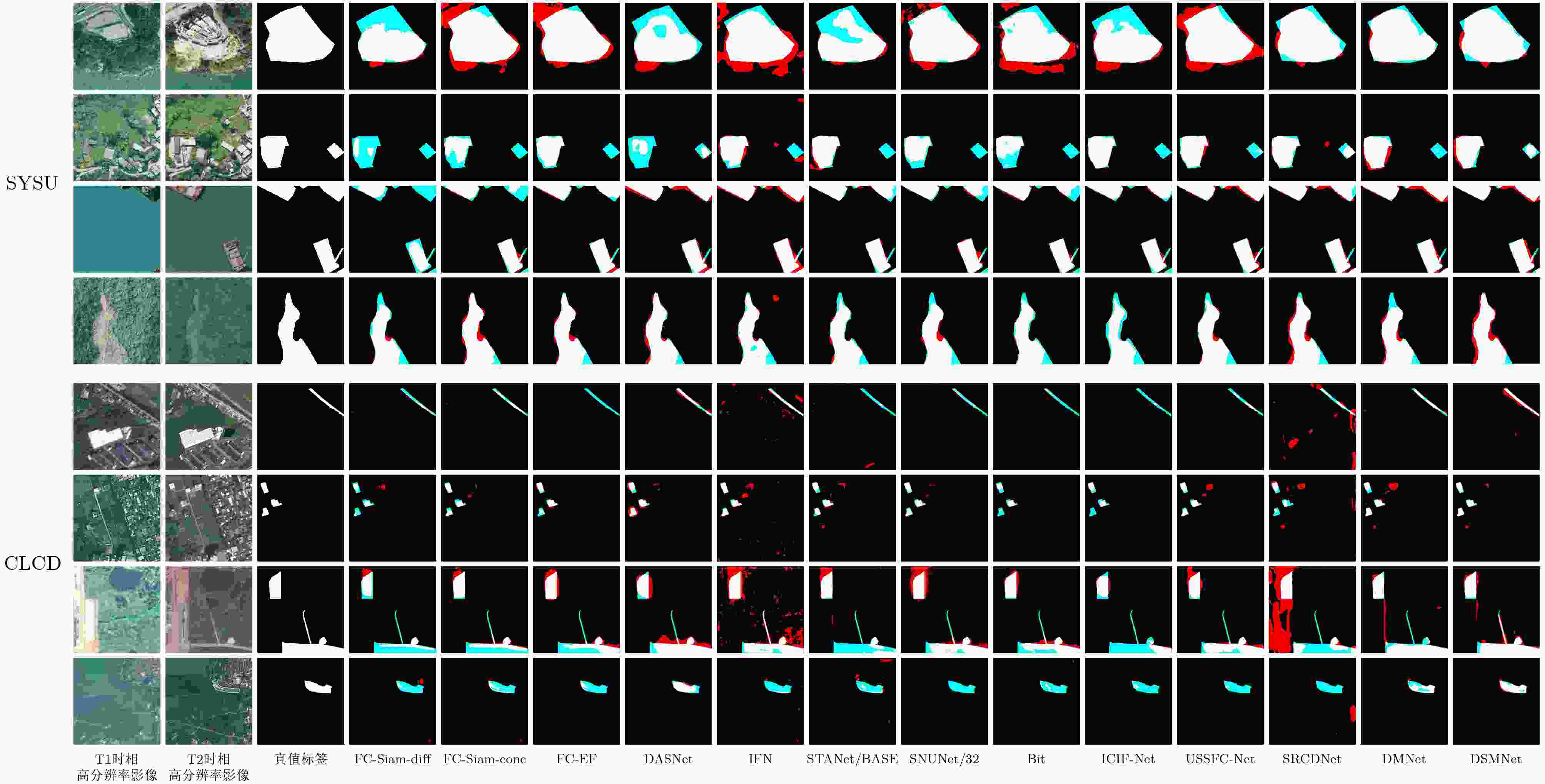

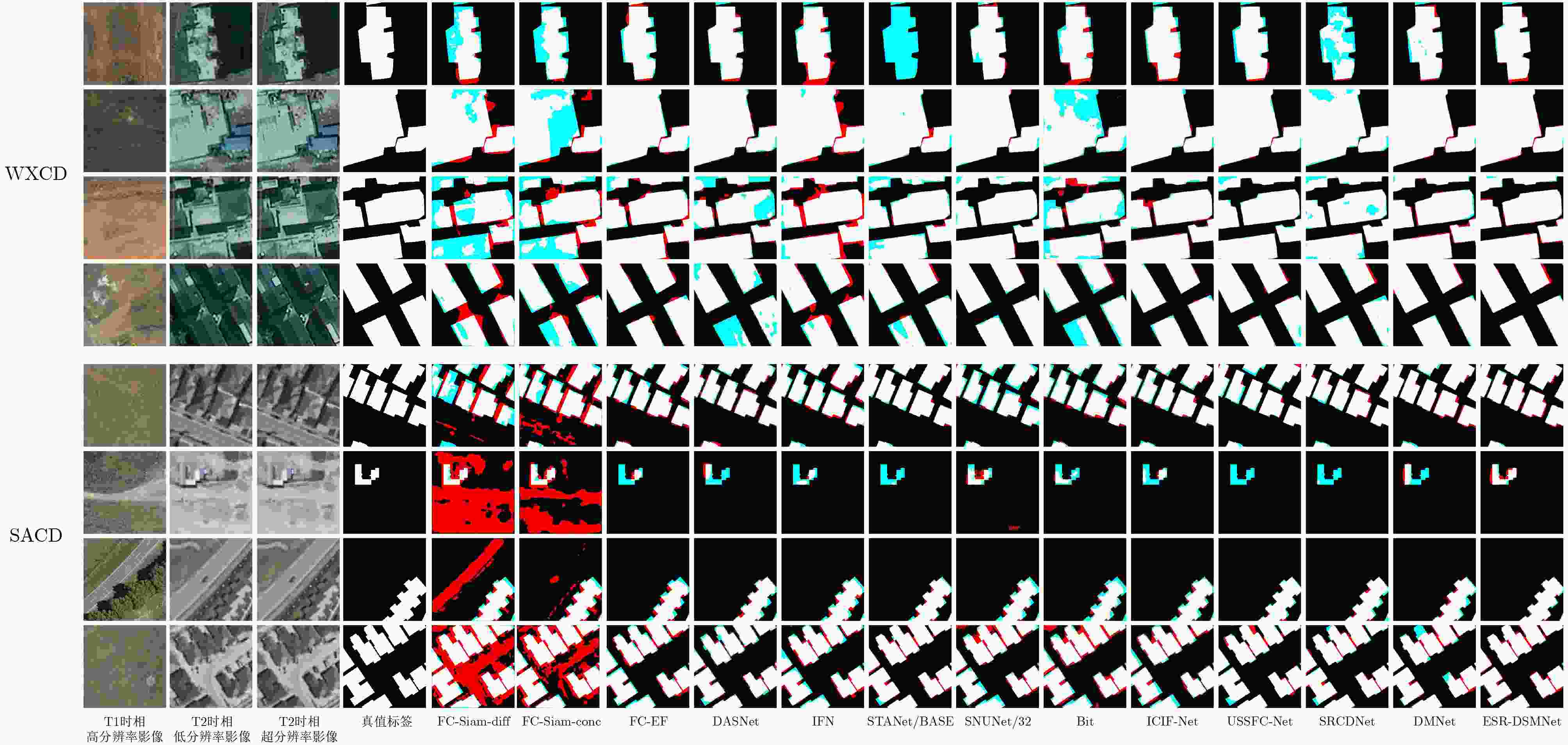

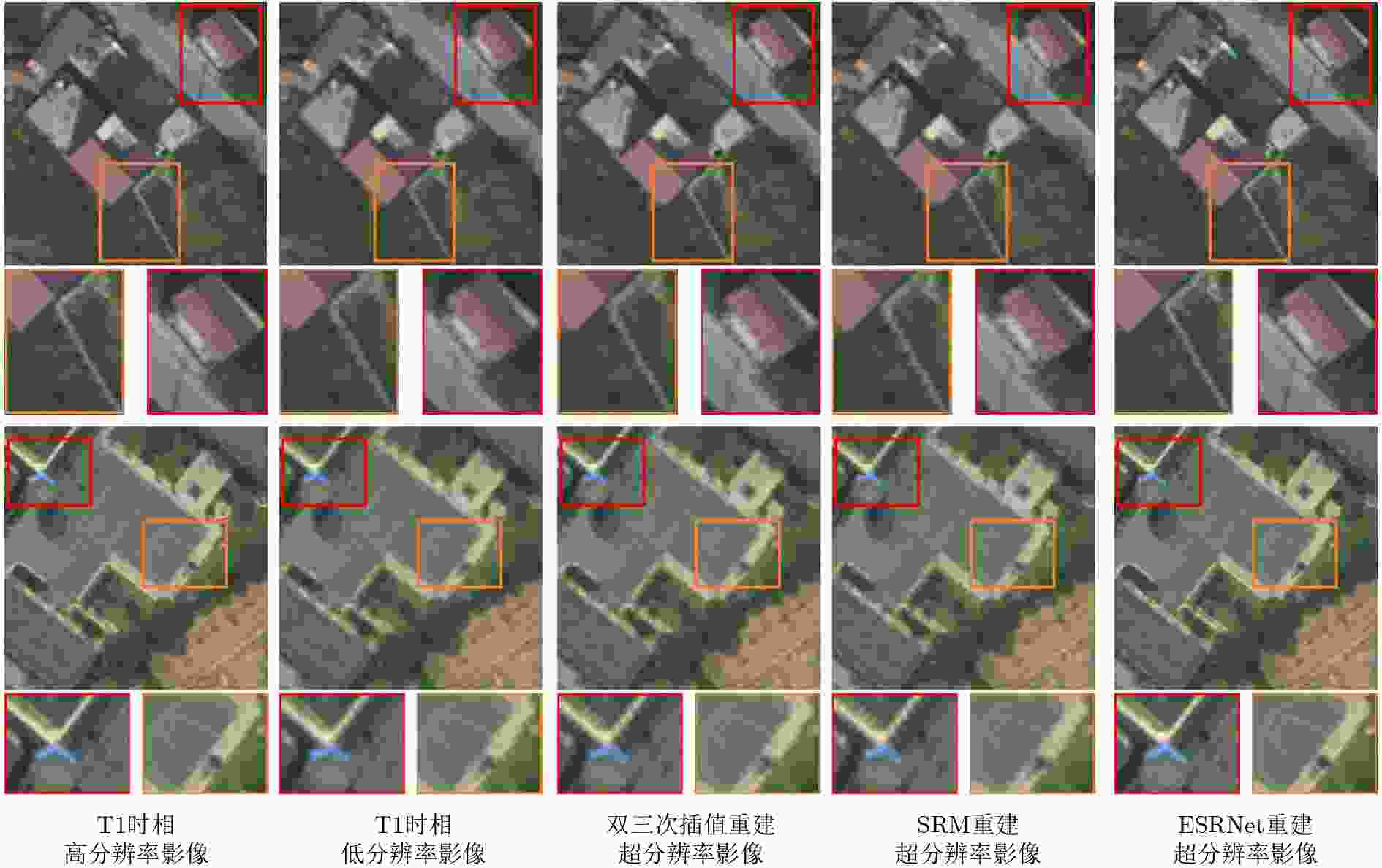

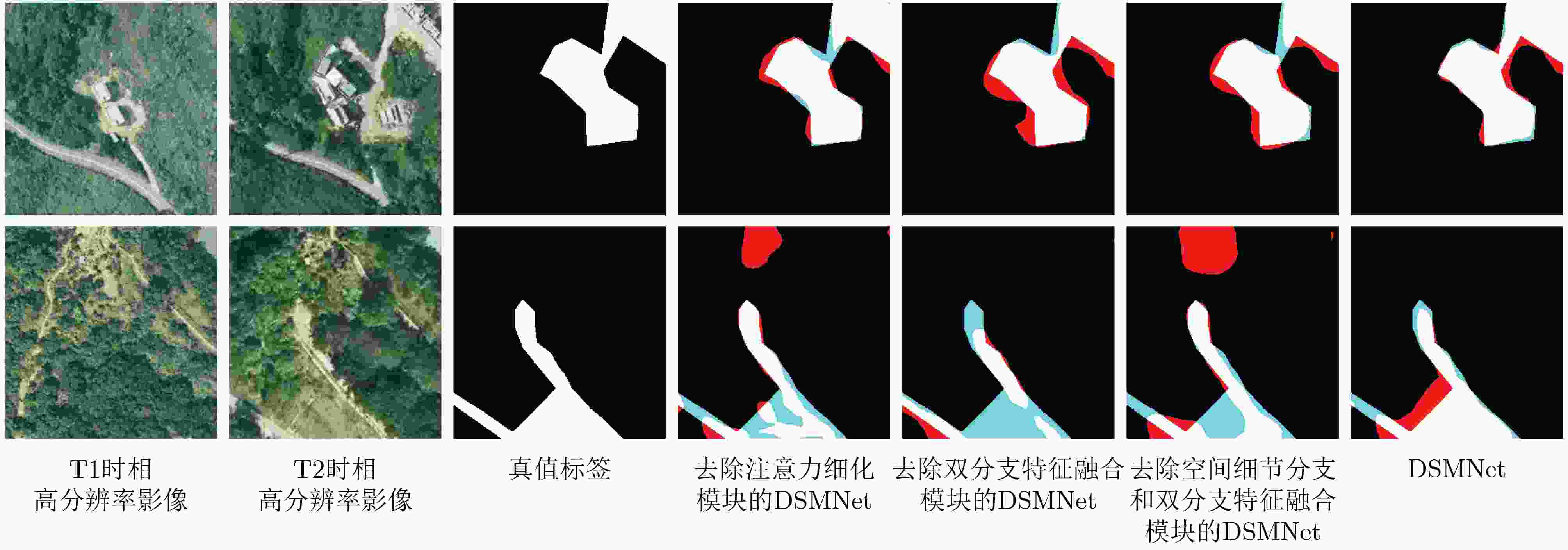

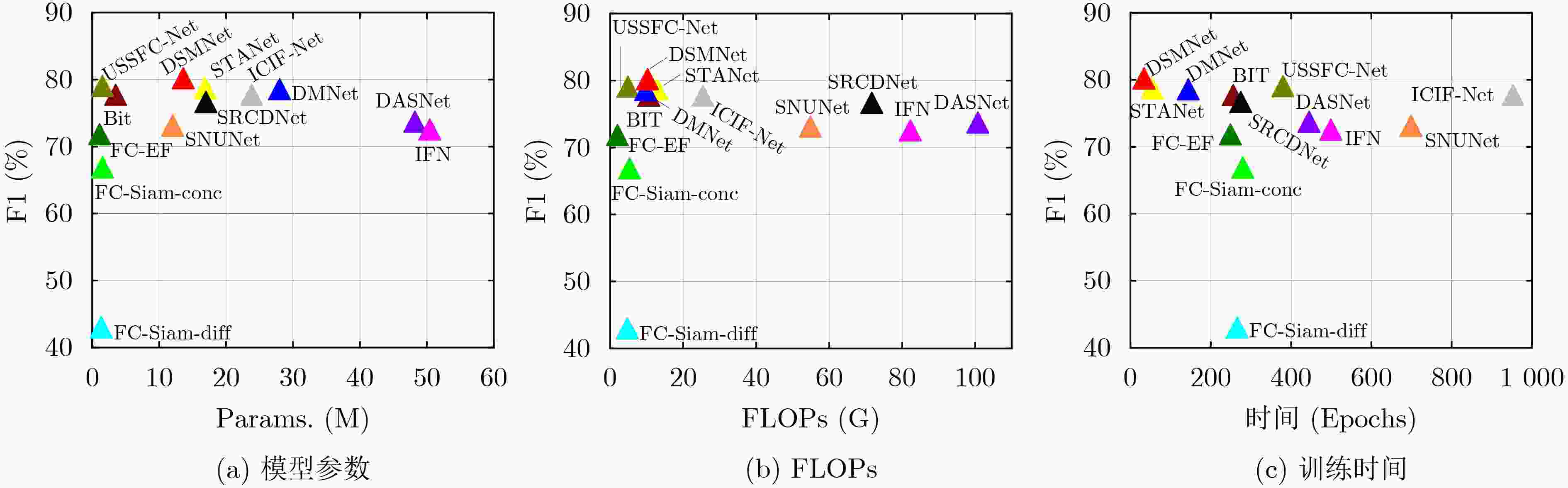

Objective: In sudden-onset natural disasters such as landslides and floods, homologous pre-event and post-event remote sensing images are often not available in time, which limits accurate assessment of disaster-induced changes and subsequent relief planning. Optical heterogeneous remote sensing images differ in sensor type, imaging angle, imaging altitude, and acquisition time. These differences complicate cross time–space–spectrum change detection, chiefly through spatial resolution inconsistency, spectral discrepancies, and the complexity and diversity of change types for identical ground objects. To address these issues, an Enhanced Super-Resolution-Based Dual-Path Short-Term Dense Concatenate Metric Change Detection Network (ESR-DSMNet) is proposed to achieve accurate and efficient change detection in optical heterogeneous remote sensing images.

Methods: ESR-DSMNet consists of an Enhanced Super-Resolution-Based Heterogeneous Remote Sensing Image Quality Optimization Network (ESRNet) and a Dual-Path Short-Term Dense Concatenate Metric Change Detection Network (DSMNet). ESRNet first learns the mapping between remote sensing images of different spatial resolutions using an enhanced super-resolution network. Based on this mapping, low-resolution images are reconstructed to enhance high-frequency edge information and fine texture details, which unifies the spatial resolution of heterogeneous remote sensing images at the image level. DSMNet comprises a semantic branch, a spatial-detail branch, a dual-branch feature fusion module, and a metric module based on a batch-balanced contrastive loss function. This architecture addresses spectral discrepancies at the feature level and enables accurate and efficient change detection in heterogeneous remote sensing images. Three loss functions are used to optimize the proposed network, which is evaluated against twelve deep learning-based change detection benchmark methods on four datasets covering both homologous and heterogeneous remote sensing images.

Results and Discussions: On the SYSU dataset (Table 2), DSMNet outperforms the other twelve change detection methods, achieving the highest recall and F1 values of 82.98% and 79.69%, respectively. It shows strong internal consistency for large-area objects and the best visual performance (Fig. 5). On the CLCD dataset (Table 2), DSMNet achieves the highest recall and F1 values among the thirteen compared methods, 73.98% and 71.01%, respectively, and performs better in detecting small-object changes (Fig. 5). On the heterogeneous remote sensing image dataset WXCD (Table 3), ESR-DSMNet achieves the highest F1 value, 95.87%, with more consistent internal regions and finer building edges (Fig. 6). On the heterogeneous remote sensing image dataset SACD (Table 3), ESR-DSMNet attains the highest recall and F1 values of 92.63% and 90.55%, respectively, and produces refined edges in both dense and sparse building change detection scenarios (Fig. 6). Compared with the low-resolution images, the reconstructed images have sharper edges without distortion, which improves change detection accuracy (Fig. 6). Comparisons of reconstructed image quality across super-resolution methods (Table 4 and Fig. 7), ablation experiments on the DSMNet core modules (Table 5 and Fig. 8), and model efficiency evaluations (Table 6 and Fig. 9) further verify the effectiveness and generalization performance of the proposed method.

Conclusions: ESR-DSMNet is proposed to address spatial resolution inconsistency, spectral discrepancies, and the complexity and diversity of change types in heterogeneous remote sensing image change detection. ESRNet unifies spatial resolution at the image level, whereas DSMNet mitigates spectral differences at the feature level and improves detection accuracy and efficiency. The proposed network is optimized using three loss functions and validated on two homologous and two heterogeneous remote sensing image datasets. Experimental results show that ESR-DSMNet achieves better generalization performance and higher accuracy and efficiency than twelve advanced deep learning-based remote sensing image change detection methods. Additional experiments on reconstructed image quality, DSMNet module ablation, and model efficiency further confirm the effectiveness of the proposed approach.
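The Methods text names a metric module trained with a batch-balanced contrastive loss but does not give its formula here. As a minimal sketch, assuming a formulation often used in metric-based change detection (unchanged pixels are pulled together, changed pixels are pushed apart up to a margin, and each group is normalized by its own pixel count), the loss on a flattened distance map might look as follows. The function name, the margin of 2.0, and the squared penalty terms are assumptions for illustration, not details taken from the paper.

```python
def batch_balanced_contrastive_loss(distances, labels, margin=2.0):
    """Hypothetical sketch of a batch-balanced contrastive loss.

    distances: per-pixel feature distances between the two images (flattened).
    labels:    per-pixel ground truth, 1 = changed, 0 = unchanged.
    margin:    assumed margin beyond which changed pixels add no loss.
    """
    if len(distances) != len(labels):
        raise ValueError("distances and labels must have the same length")

    n_changed = sum(1 for y in labels if y == 1)
    n_unchanged = len(labels) - n_changed

    # Unchanged pixels are penalized for being far apart.
    unchanged_term = sum(d ** 2 for d, y in zip(distances, labels) if y == 0)
    # Changed pixels are penalized only while closer than the margin.
    changed_term = sum(max(margin - d, 0.0) ** 2
                       for d, y in zip(distances, labels) if y == 1)

    # Normalizing each term by its own pixel count keeps the rare
    # "changed" class from being swamped by the many unchanged pixels.
    loss = 0.0
    if n_unchanged:
        loss += 0.5 * unchanged_term / n_unchanged
    if n_changed:
        loss += 0.5 * changed_term / n_changed
    return loss


# Toy example: four unchanged pixels (small distances) and one changed pixel.
toy_distances = [0.1, 0.2, 0.0, 0.1, 1.5]
toy_labels = [0, 0, 0, 0, 1]
print(round(batch_balanced_contrastive_loss(toy_distances, toy_labels), 4))
```

In this toy case the single changed pixel contributes as much weight as the four unchanged pixels combined, which is the class-balancing effect the per-group normalization is meant to provide.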

Key words: Heterogeneous remote sensing images; Change detection; Super-resolution; Deep learning

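Table 1 Details of the experimental datasets

| Dataset | Data source | Image pairs (train/val/test) | Size | Spatial resolution (m/pixel) | Region | Time span |
| --- | --- | --- | --- | --- | --- | --- |
| SYSU | Aerial images | 12000/4000/4000 | 256×256 | 0.5 | Hong Kong | 2007-2014 |
| CLCD | Gaofen-2 images | 360/120/120 | 512×512 | 0.5-2.0 | Guangdong | 2017-2019 |
| WXCD | UAV images; SuperView-1 (Gaojing-1) images | 3400/1000/1000 | 256×256 | 0.2; 0.5 | Hunan | 2012-2018 |
| SACD | Aerial images; satellite images | 3000/1000/1000 | 256×256 | 0.2; 0.6 | Christchurch, New Zealand | 2012-2017 |

Table 2 Quantitative comparison of 13 change detection methods on homologous remote sensing images (%)

| Method | SYSU Precision | SYSU Recall | SYSU F1 | CLCD Precision | CLCD Recall | CLCD F1 |
| --- | --- | --- | --- | --- | --- | --- |
| FC-Siam-diff | 83.18 | 32.22 | 42.51 | 59.05 | 36.73 | 43.15 |
| FC-Siam-conc | 77.97 | 61.85 | 66.37 | 51.15 | 51.62 | 49.34 |
| FC-EF | 77.13 | 71.27 | 71.34 | 60.96 | 50.89 | 55.10 |
| DASNet | 74.41 | 72.06 | 73.22 | 72.07 | 66.39 | 69.11 |
| IFN | 71.16 | 77.41 | 72.05 | 38.87 | 58.81 | 44.64 |
| STANet/BASE | 77.25 | 79.15 | 78.19 | 56.82 | 58.93 | 57.86 |
| SNUNet/32 | 75.49 | 73.15 | 72.61 | 49.36 | 54.76 | 51.19 |
| BIT | 77.08 | 77.28 | 77.18 | 65.03 | 55.16 | 59.69 |
| ICIF-Net | 80.85 | 73.89 | 77.22 | 80.40 | 46.89 | 59.24 |
| USSFC-Net | 78.01 | 79.04 | 78.52 | 61.33 | 67.28 | 64.16 |
| SRCDNet | 72.23 | 80.48 | 76.13 | 41.89 | 56.57 | 48.13 |
| DMNet | 77.96 | 78.15 | 78.05 | 67.72 | 66.39 | 67.05 |
| DSMNet | 76.66 | 82.98 | 79.69 | 68.27 | 73.98 | 71.01 |

Table 3 Quantitative comparison of 13 change detection methods on heterogeneous remote sensing images (%)

| Method | WXCD Precision | WXCD Recall | WXCD F1 | SACD Precision | SACD Recall | SACD F1 |
| --- | --- | --- | --- | --- | --- | --- |
| FC-Siam-diff | 66.82 | 60.75 | 63.18 | 38.55 | 81.62 | 51.80 |
| FC-Siam-conc | 70.82 | 60.78 | 64.56 | 39.49 | 84.87 | 53.37 |
| FC-EF | 95.42 | 94.74 | 95.07 | 88.80 | 85.99 | 87.30 |
| DASNet | 95.46 | 69.70 | 80.58 | 87.68 | 92.02 | 89.80 |
| IFN | 82.89 | 85.56 | 83.99 | 82.48 | 80.35 | 80.91 |
| STANet/BASE | 97.76 | 73.88 | 84.16 | 81.75 | 91.48 | 86.34 |
| SNUNet/32 | 96.64 | 94.21 | 95.39 | 78.75 | 84.23 | 81.22 |
| BIT | 92.00 | 79.05 | 85.04 | 76.54 | 84.71 | 80.42 |
| ICIF-Net | 96.89 | 92.81 | 94.80 | 89.72 | 90.08 | 89.90 |
| USSFC-Net | 93.56 | 96.04 | 94.79 | 88.03 | 90.91 | 89.44 |
| SRCDNet | 96.43 | 85.67 | 90.73 | 88.13 | 86.37 | 87.24 |
| DMNet | 95.30 | 94.97 | 95.13 | 86.05 | 91.96 | 88.91 |
| DSMNet | 96.67 | 95.08 | 95.87 | 88.56 | 92.63 | 90.55 |

Table 4 Comparison of reconstructed image quality for different super-resolution methods on heterogeneous remote sensing images (WXCD)

| Reconstruction method | PSNR | Perceptual index |
| --- | --- | --- |
| Bicubic interpolation | 34.74 | 7.55 |
| SRM | 33.72 | 7.62 |
| ESRNet | 34.02 | 6.30 |

Table 5 Ablation experiments on the DSMNet core modules (%)

| Spatial-detail branch | Attention refinement module | Dual-branch feature fusion module | Precision | Recall | F1 |
| --- | --- | --- | --- | --- | --- |
| √ | × | √ | 76.60 | 81.94 | 79.18 |
| √ | √ | × | 73.94 | 80.48 | 77.07 |
| × | √ | × | 73.75 | 82.92 | 78.06 |
| √ | √ | √ | 76.66 | 82.98 | 79.69 |

Table 6 Performance comparison of 13 change detection networks

| Method | Params. (M) | FLOPs (G) | Training time on SYSU (s/epoch) | F1 (%) SYSU | F1 (%) CLCD | F1 (%) WXCD | F1 (%) SACD |
| --- | --- | --- | --- | --- | --- | --- | --- |
| FC-Siam-diff | 1.35 | 4.73 | 266 | 42.51 | 43.15 | 63.18 | 51.80 |
| FC-Siam-conc | 1.55 | 5.33 | 280 | 66.37 | 49.34 | 64.56 | 53.37 |
| FC-EF | 1.10 | 2.02 | 249 | 71.34 | 55.10 | 95.07 | 87.30 |
| DASNet | 48.22 | 100.72 | 445 | 73.22 | 69.11 | 80.58 | 89.80 |
| IFN | 50.44 | 82.26 | 499 | 72.05 | 44.64 | 83.99 | 80.91 |
| STANet/BASE | 16.89 | 12.86 | 54 | 78.19 | 57.86 | 84.16 | 86.34 |
| SNUNet/32 | 12.03 | 54.83 | 699 | 72.61 | 51.19 | 95.39 | 81.22 |
| BIT | 3.50 | 10.63 | 256 | 77.18 | 59.69 | 85.04 | 80.42 |
| ICIF-Net | 23.84 | 25.41 | 953 | 77.22 | 59.24 | 94.80 | 89.90 |
| USSFC-Net | 1.52 | 4.86 | 380 | 78.52 | 64.16 | 90.73 | 89.44 |
| SRCDNet | 16.98 | 71.68 | 275 | 76.13 | 48.13 | 94.79 | 87.24 |
| DMNet | 27.99 | 9.72 | 144 | 78.05 | 67.05 | 95.13 | 88.91 |
| DSMNet | 13.60 | 10.23 | 34 | 79.69 | 71.01 | 95.87 | 90.55 |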
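F1 is the harmonic mean of precision and recall. As a small worked check, the sketch below recomputes F1 for the DSMNet rows of Tables 2 and 3 from their reported precision and recall. The helper name `f1_score` is hypothetical. Only the DSMNet rows are checked; how scores were aggregated over images is not stated in this section, so other rows need not reproduce exactly under this formula.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall (%) reported for DSMNet in Tables 2 and 3.
dsmnet = {
    "SYSU": (76.66, 82.98),
    "CLCD": (68.27, 73.98),
    "WXCD": (96.67, 95.08),
    "SACD": (88.56, 92.63),
}

for dataset, (p, r) in dsmnet.items():
    print(f"{dataset}: F1 = {f1_score(p, r):.2f}%")
```

Rounded to two decimals, the four results are 79.69, 71.01, 95.87, and 90.55, which agree with the F1 values reported for DSMNet.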

