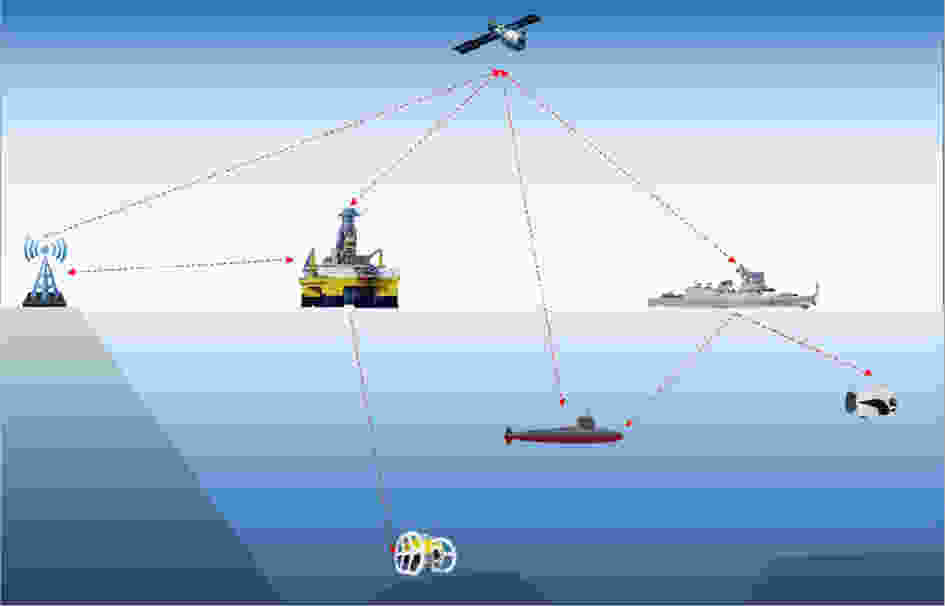

Cross-Layer Collaborative Resource Allocation in Maritime Wireless Communications: QoS-Aware Power Control and Knowledge-Enhanced Service Scheduling


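Abstract: Maritime wireless communication networks face multiple challenges, including dynamic topology drift, large-scale channel fading, and cross-layer resource competition. As a result, traditional single-layer resource allocation methods struggle to balance high-quality communication against diverse service demands under limited network resources, which degrades Quality of Service (QoS) and leaves service guarantees unbalanced. To address this, this paper proposes a cross-layer collaborative joint resource allocation framework that improves system throughput and QoS assurance in a balanced way through closed-loop cross-layer optimization of physical-layer power control and network-layer service scheduling. First, a cross-layer wireless network transmission model is built from the coupling between physical-layer channel capacity and transport-layer Transmission Control Protocol (TCP) throughput. Second, a dual water-level adjustment mechanism based on Signal-to-Noise Ratio (SNR) and QoS is introduced into the classical water-filling framework, yielding a QoS-aware dual-threshold water-filling algorithm that trades a controlled throughput loss for better QoS of high-demand services. Furthermore, a policy optimization filter with dual-channel feature decoupling and conflict resolution is designed within a Siamese (twin) deep reinforcement learning architecture to make online decisions on dynamic node-service matching. Simulations show that, in the comparison experiments, the proposed framework raises the average QoS score by 9.51% and the number of completed critical services by 1.3%, while keeping the drop in system throughput within 10%.

Abstract: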

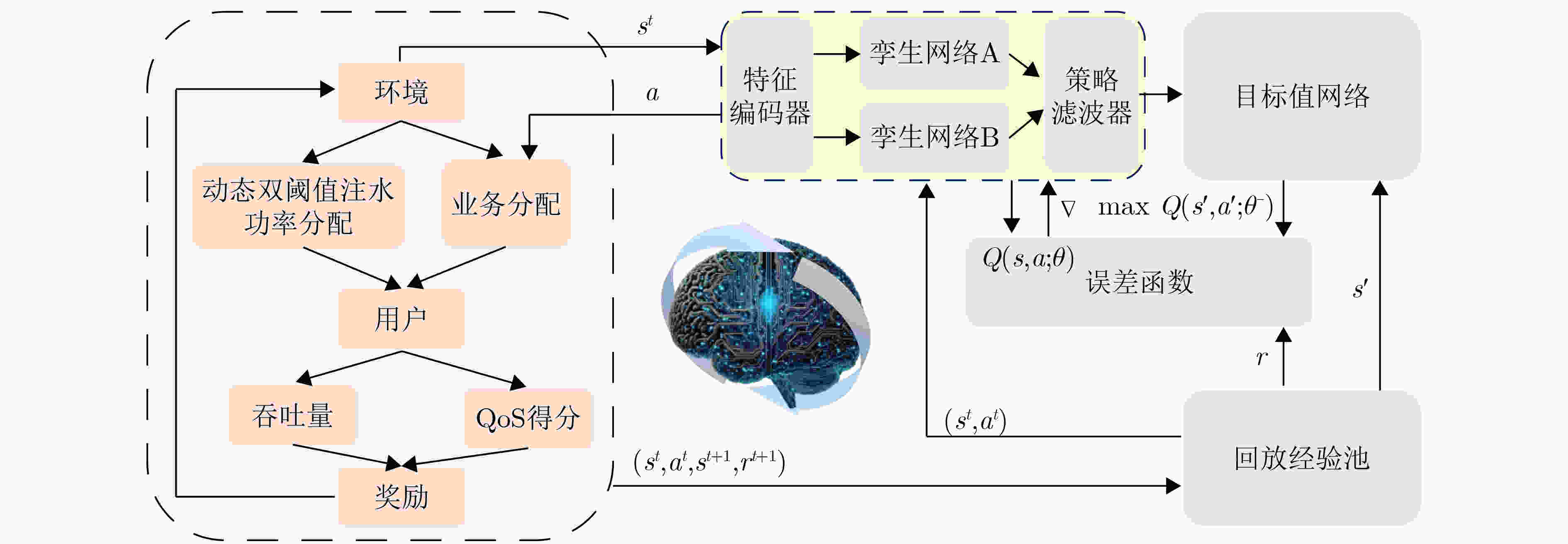

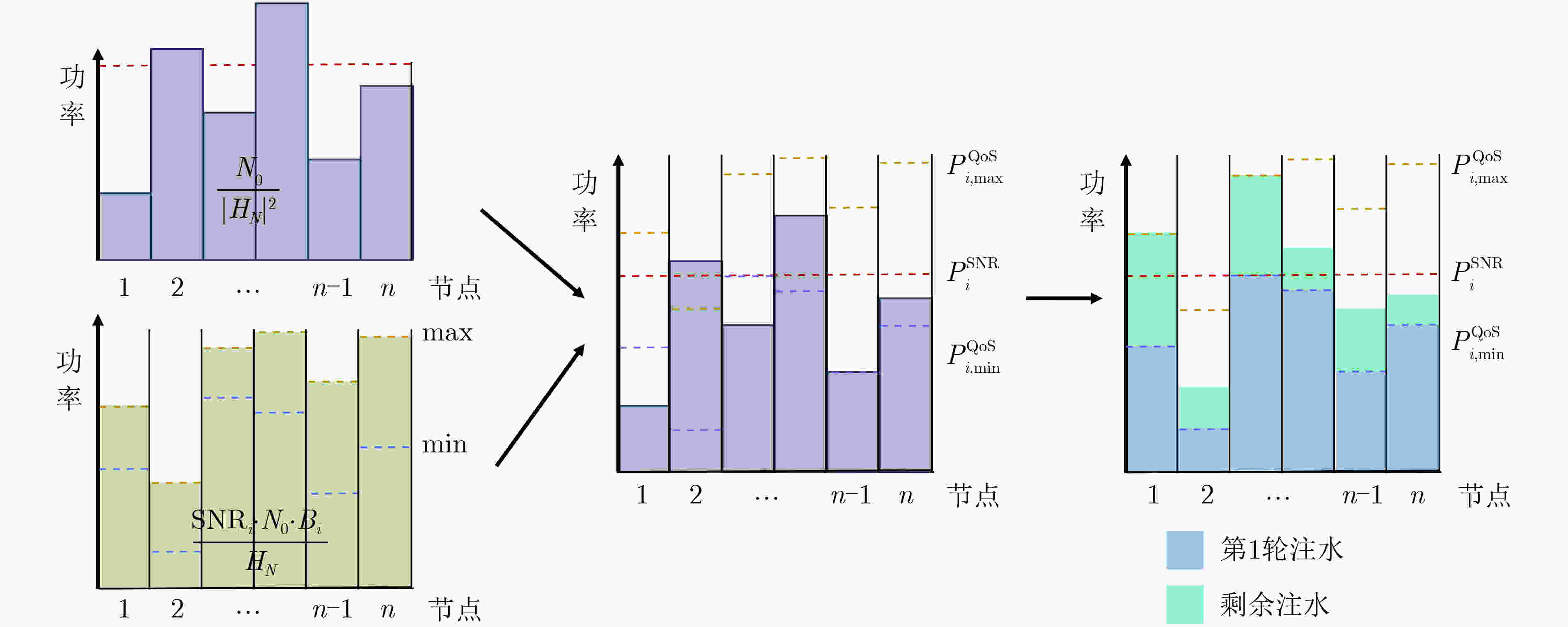

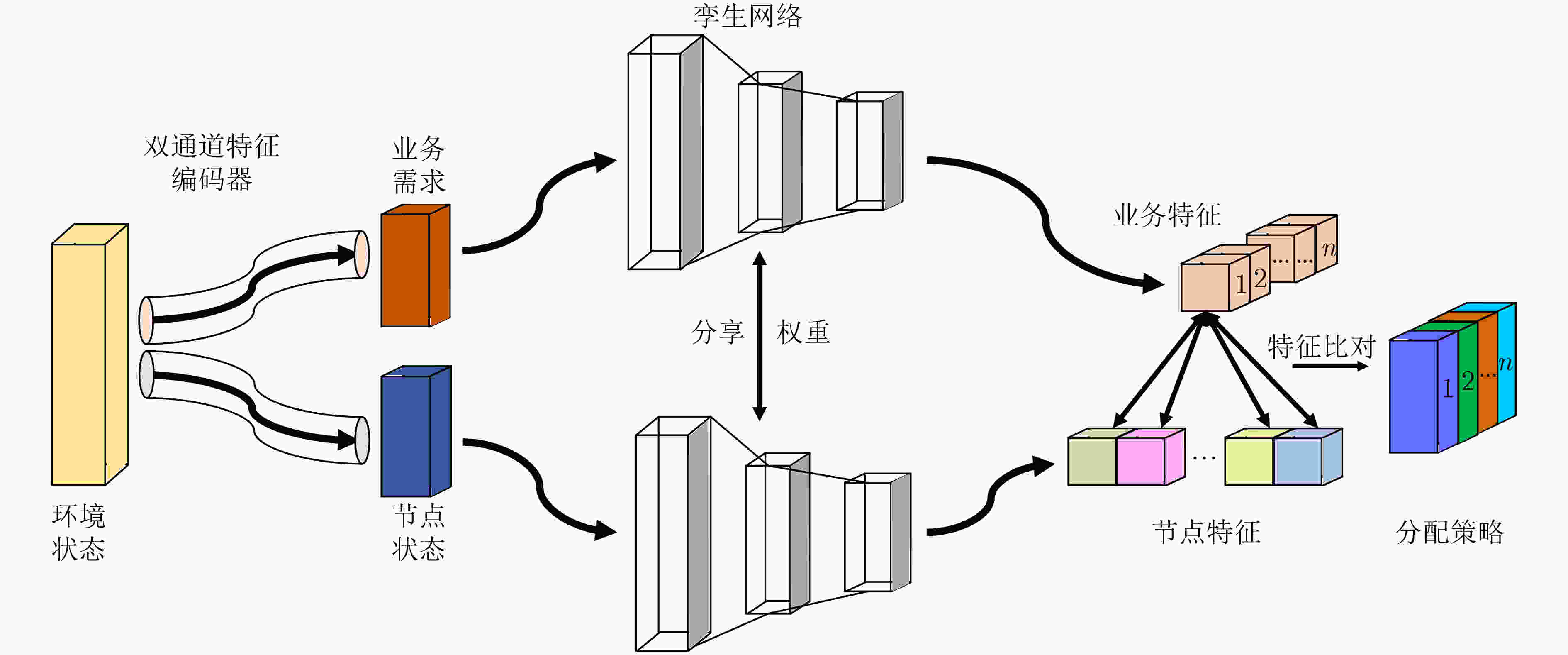

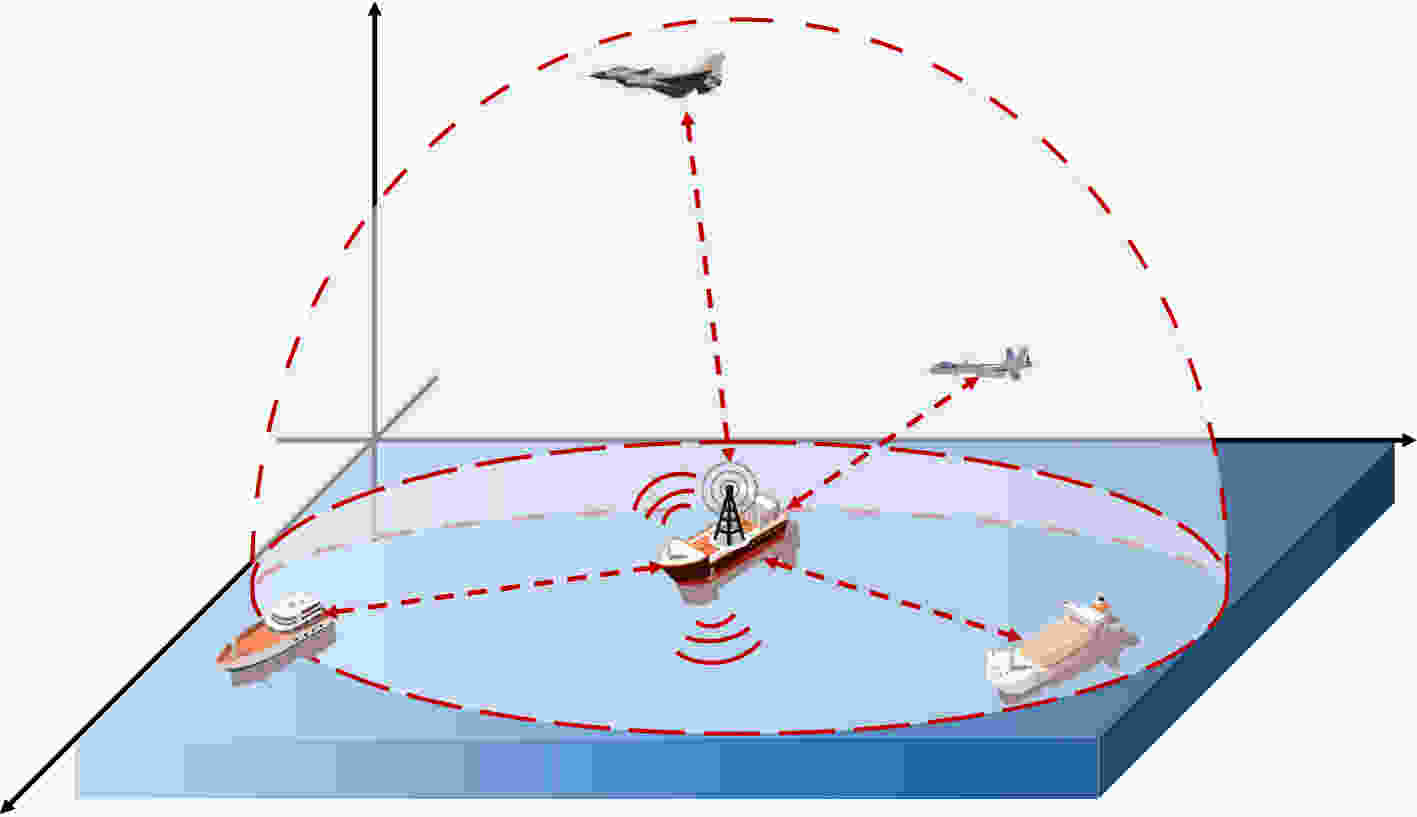

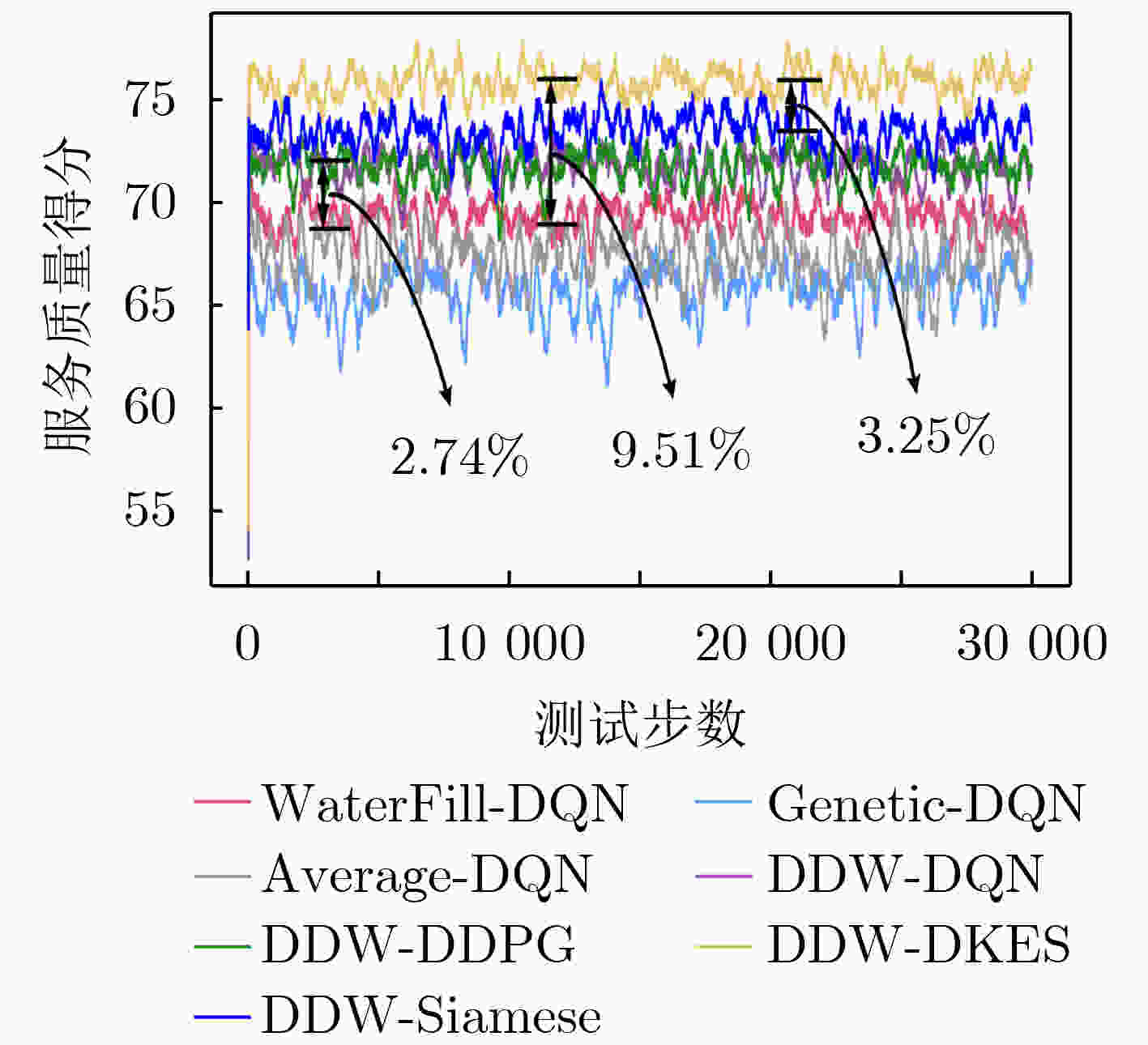

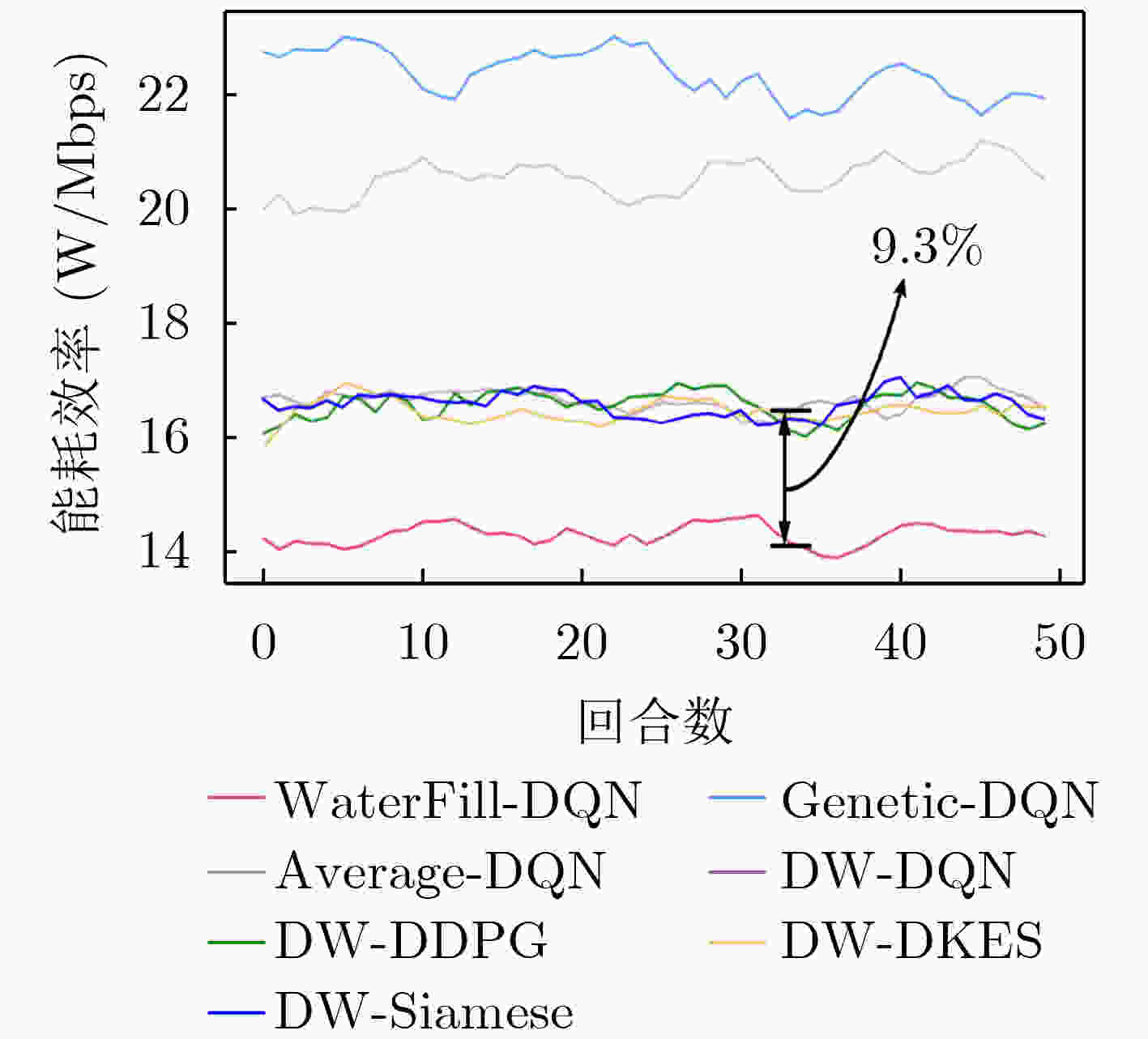

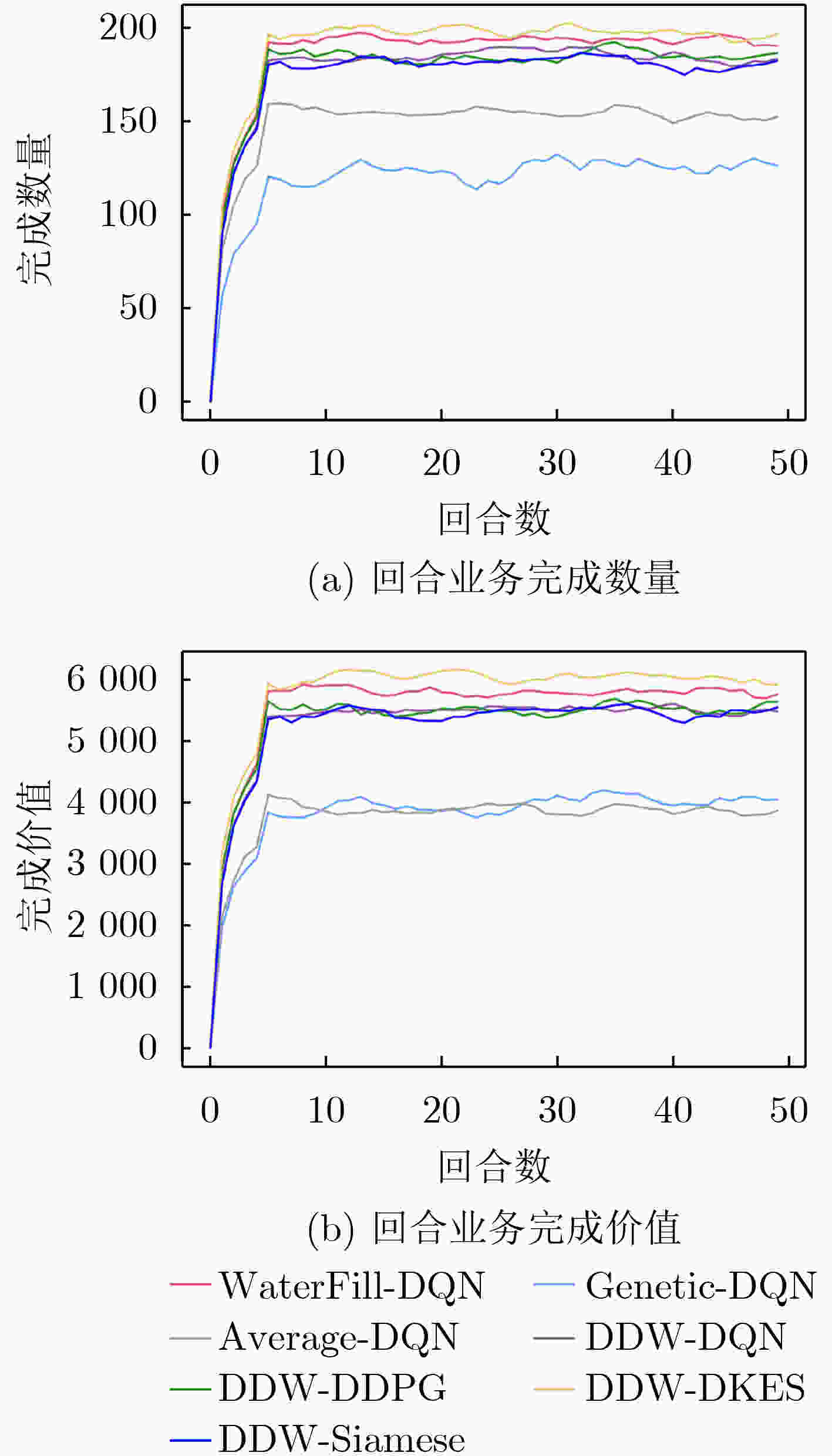

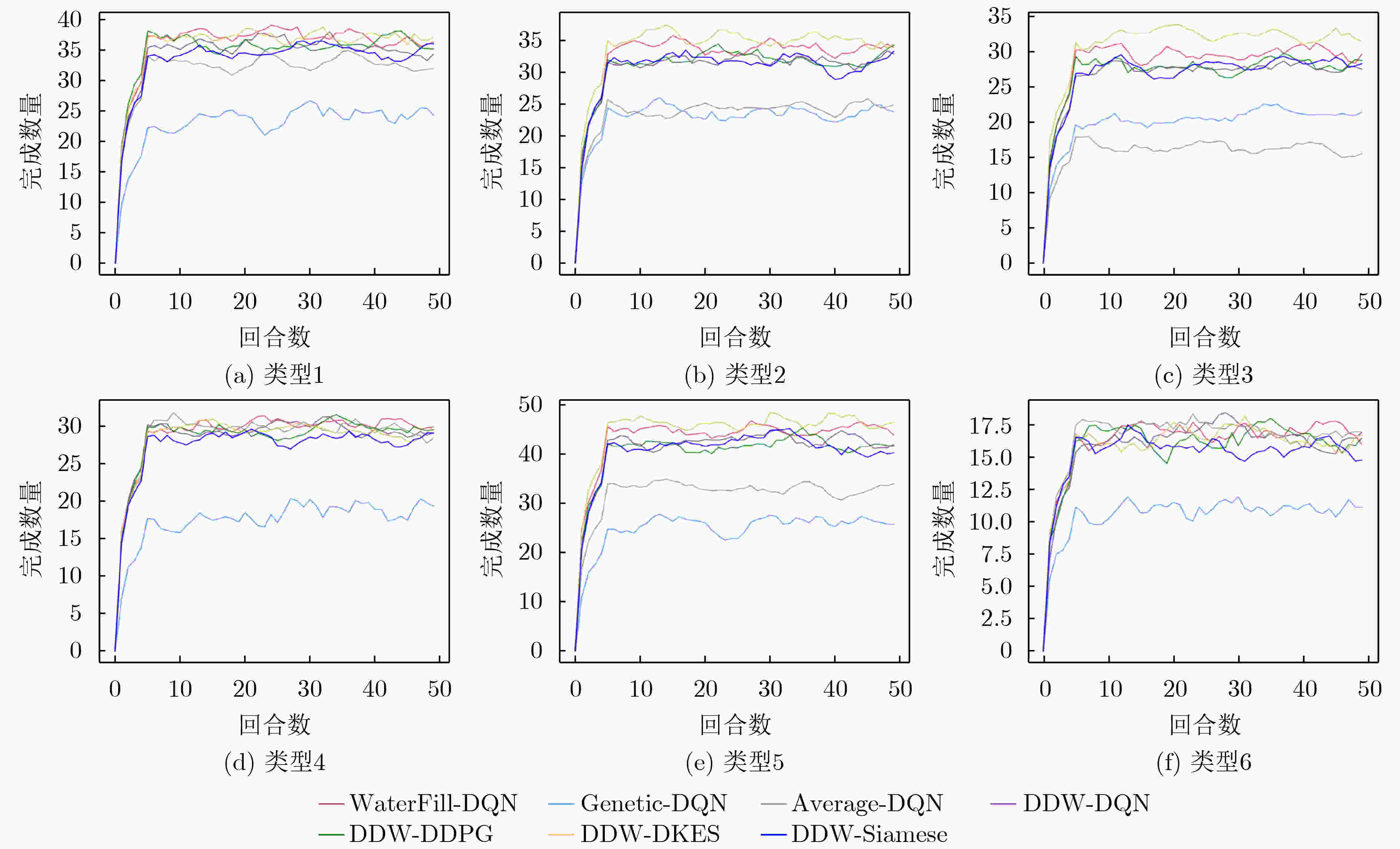

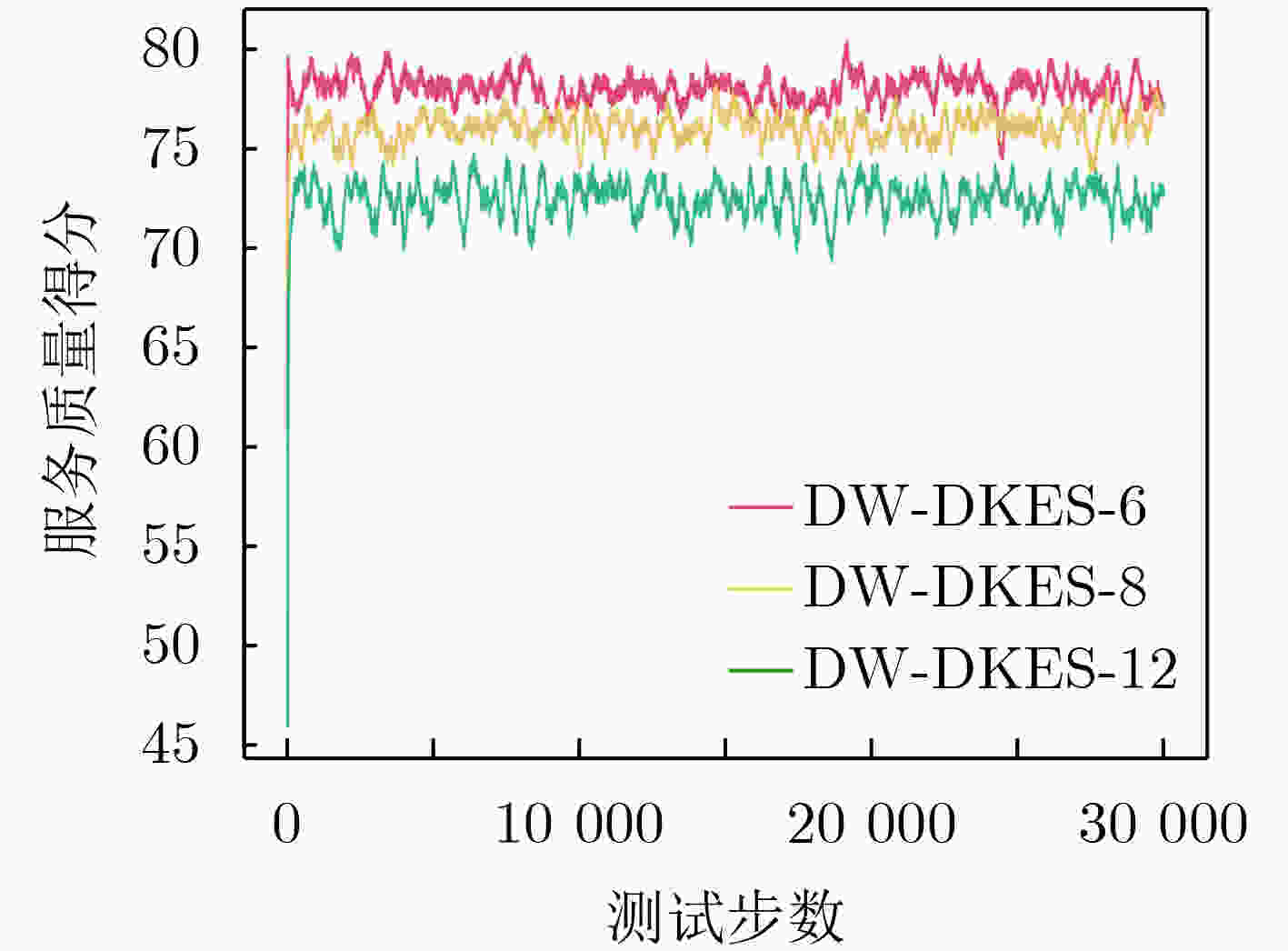

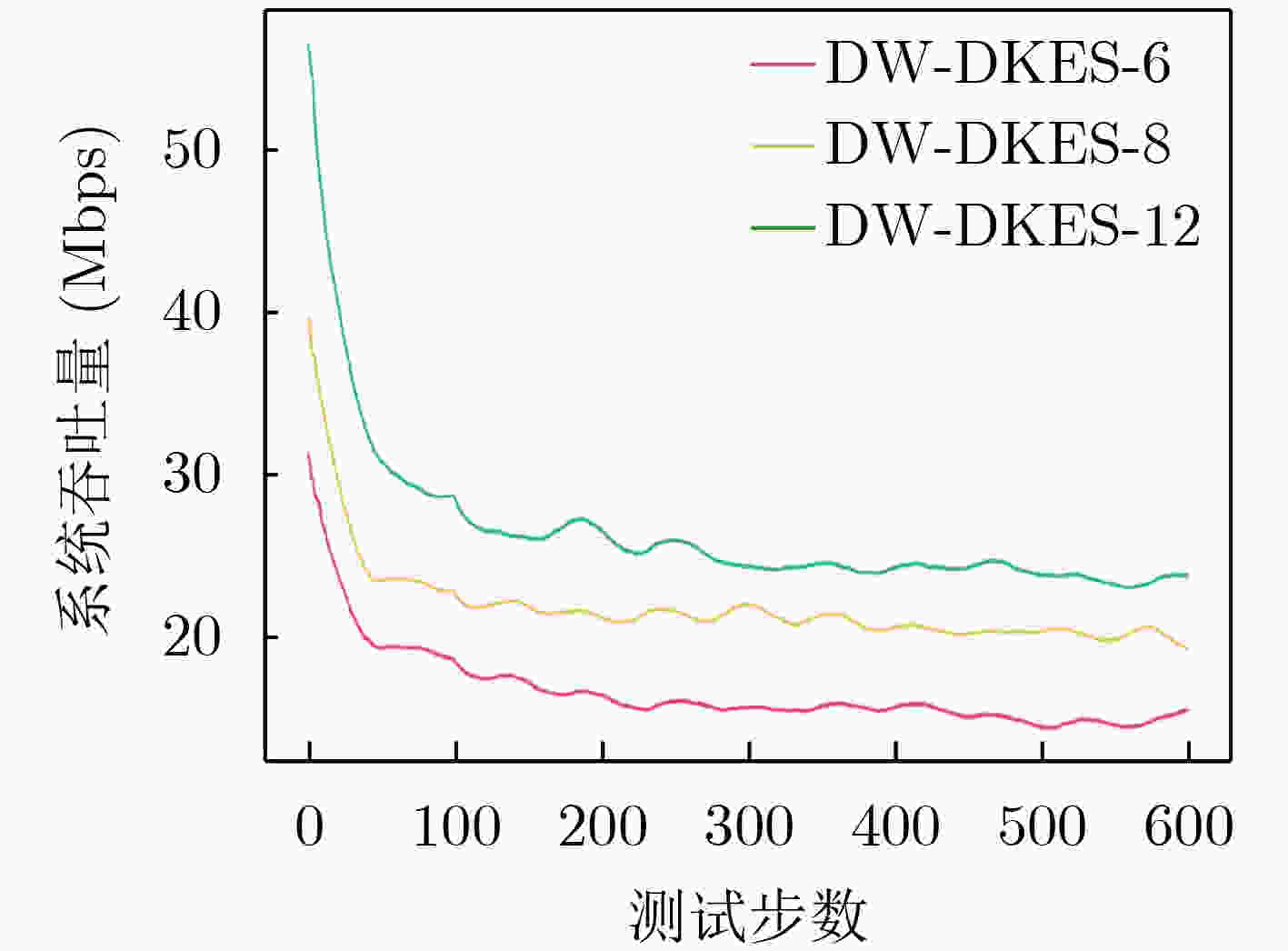

Objective: Maritime wireless communication networks face significant challenges, including dynamic topology drift, large-scale channel fading, and cross-layer resource competition. These factors hinder the effectiveness of traditional single-layer resource allocation methods, which struggle to maintain the balance between high-quality communications and heterogeneous service demands under limited network resources. This results in degraded Quality of Service (QoS) and uneven service guarantees. To address these challenges, this study proposes a cross-layer collaborative resource allocation framework that achieves balanced enhancement of system throughput and QoS assurance through closed-loop optimization, integrating physical-layer power control with network-layer service scheduling. First, a cross-layer wireless network transmission model is established based on the coupling mechanism between physical-layer channel capacity and transport-layer TCP throughput. Second, a dual-threshold water-level adjustment mechanism, incorporating both Signal-to-Noise Ratio (SNR) and QoS metrics, is introduced into the classical water-filling framework, yielding a QoS-aware dual-threshold water-filling algorithm. This approach trades a controlled throughput loss for improved QoS of high-priority services. Furthermore, a strategy optimization filter with dual-channel feature decoupling and conflict resolution is designed within a twin deep reinforcement learning framework to enable real-time, adaptive node-service matching. Simulation results demonstrate that the proposed framework improves average QoS scores by 9.51% and increases critical service completion by 1.3%, while keeping system throughput degradation within 10%.

Methods: This study proceeds through three components: theoretical modeling, algorithm design, and system implementation. First, leveraging the coupling relationship between physical-layer channel capacity and transport-layer Transmission Control Protocol (TCP) throughput, a cross-layer joint optimization model integrating power allocation and service scheduling is established. Through mathematical derivation, the model reveals the nonlinear mapping between wireless resources and service demands, unifying traditionally independent power control and service scheduling within a non-convex optimization structure and thus providing a theoretical foundation for algorithm development. Second, the proposed dynamic dual-threshold water-filling algorithm incorporates a dual-regulation mechanism based on SNR and QoS levels. A joint mapping function is designed to enable flexible, demand-driven power allocation, enhancing system adaptability. Finally, a twin deep reinforcement learning framework is constructed, which models node mobility patterns and service demand characteristics independently through a dual-channel feature decoupling mechanism. A dynamic adjustment mechanism is embedded within the strategy optimization filter, improving the allocation success rate of critical services while controlling system throughput loss. This approach strengthens system resilience to the dynamic, complex maritime environment.

Results and Discussions: Comparative ablation experiments demonstrate that the dynamic dual-threshold water-filling algorithm within the proposed framework achieves a 9.51% improvement in QoS score relative to conventional water-filling methods. Furthermore, the Domain Knowledge-Enhanced Siamese DRL (DKES-DRL) method exceeds the Siamese DRL approach by 3.25% (Fig. 6), albeit at the expense of a 9.3% reduction in the system's maximum throughput (Fig. 7). The average number of completed services exceeds that achieved by the traditional water-filling algorithm by 1.3% (Figs. 8 and 9). In addition, analysis of the effect of node density on system performance reveals that lower node density corresponds to a higher average QoS score (Fig. 10), indicating that the proposed framework maintains service quality more effectively under sparse network conditions.

Conclusions: To address the complex challenges of dynamic topology drift, multi-scale channel fading, and cross-layer resource contention in maritime wireless communication networks, this paper proposes a cross-layer collaborative joint resource allocation framework. By incorporating a closed-loop cross-layer optimization mechanism spanning the physical and network layers, the framework mitigates the imbalance between system throughput and QoS assurance that constrains traditional single-layer optimization approaches. The primary innovations of this work are reflected in three aspects: (1) Cross-layer modeling is applied to overcome the limitations of conventional hierarchical optimization, establishing a theoretical foundation for integrated power control and service scheduling. (2) A dual-dimensional water-level adjustment mechanism is proposed, extending the classical water-filling algorithm to accommodate QoS-driven resource allocation. (3) A knowledge-enhanced intelligent decision-making system is developed by integrating model-driven and data-driven methodologies within a deep reinforcement learning framework. Simulation results confirm that the proposed framework delivers robust performance in dynamic maritime channel conditions and heterogeneous traffic scenarios, demonstrating particular suitability for maritime emergency communication environments with stringent QoS requirements. Future research will focus on resolving engineering challenges associated with the practical deployment of the proposed framework.
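The classical water-filling framework that the dual-threshold algorithm extends can be sketched as follows. This is a minimal textbook version, not the paper's QoS-aware algorithm: it assumes parallel channels described only by their noise-to-gain ratios, and the names `water_filling`, `inv_snr` and `p_total` are hypothetical. It solves for the water level in closed form over the sorted channels rather than by iterative level updates.

```python
from typing import List, Tuple


def water_filling(inv_snr: List[float], p_total: float) -> Tuple[List[float], float]:
    """Classical water-filling over parallel channels.

    inv_snr[i] is the noise-to-gain ratio (reciprocal SNR at unit power) of
    channel i; p_total is the total power budget. Both names are illustrative.
    Returns (per-channel powers, water level).
    """
    if p_total <= 0 or not inv_snr:
        raise ValueError("need a positive power budget and at least one channel")
    floors = sorted(inv_snr)  # best channels (lowest floor) come first
    # Shrink the active set until the water level lies above every active floor.
    for k in range(len(floors), 0, -1):
        level = (p_total + sum(floors[:k])) / k
        if level > floors[k - 1]:
            break
    # Channels whose floor is above the water level receive no power.
    powers = [max(level - a, 0.0) for a in inv_snr]
    return powers, level


powers, level = water_filling([0.1, 0.5, 2.0], p_total=1.0)
print(powers, level)
```

In this example the third channel receives no power because its floor (2.0) lies above the resulting water level (0.8). A QoS-aware variant would then adjust these SNR-driven powers against per-service rate thresholds, which is the part this sketch leaves out.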

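Algorithm 1 Dynamic dual-threshold water-filling algorithm

Input: node channel gains $h_i$, total transmit power $P_{\max}$, number of nodes $i$

Output: QoS-aware power allocation vector $ P_{i}^{\mathrm{QoS}}=\left[p_{1}, p_{2}, \cdots, p_{i}\right] $

1. Initialize the node positions $d_x, d_y, d_z$; the service type $T_i$ executed by each node; the node packet jitter $J_i$; the node packet delay $D_i$; the node packet loss rate $L_i$; the service-type QoS threshold matrix $A$; the iteration counter $k$; and the convergence threshold $ \varepsilon $.
2. Compute the reciprocal of the equivalent SNR and sort it in ascending order to obtain $ \alpha_{\mathrm{SNR}} $.
3. Compute the initial water level $ \mu_{0} $ from the channel gains $h_i$.
4. While True do
   - Compute the power allocation $ P_{i}^{\mathrm{SNR}} $ and the total consumed power $ P_{\mathrm{t}} $.
   - If the convergence condition $|P_i^{\mathrm{SNR}}-P_{\mathrm{t}}| < \varepsilon$ holds, break.
   - Else update the water level $\mu_k= \mu_k +(P_{\mathrm{t}}-P_i^{\mathrm{SNR}})/n $.
   - $k = k + 1$.

   End While
5. Substitute to compute the theoretical transmission-rate interval of each node at the current time: $\varGamma_{i,\max/\min}^{\mathrm{T}} = \left[ I_{\mathrm{s}} \left( \overline{D_{\mathrm{s}}} + J_{\mathrm{s}} \right) \sqrt{L_{\mathrm{s}}} \right] \cdot \varGamma_{i,{\mathrm{s}},\max/\min}^{\mathrm{A}} $.
6. Compute the QoS-aware user power allocation strategy $ P^{\mathrm{QoS}} $.
7. Compute the power allocation differences $ \Delta P_{i, \max }=P_{i, \max}^{\mathrm{QoS}}-P_{i}^{\mathrm{SNR}} $ and $ \Delta P_{i, \min }=P_{i,\min}^{\mathrm{QoS}}-P_{i}^{\mathrm{SNR}} $.
8. For $i = 1, \cdots, N$ do
   - If $ \Delta P_{i, \max }>0 $: set $P_i=P_{i,\max}^{\mathrm{QoS}}$ and $x=x+ \Delta P_{i,\max} $.
   - Else: set $ P_{i}=P_{i}^{\mathrm{SNR}} $.

   End For
9. If $x > 0$: distribute $x$ proportionally among the nodes with $ \Delta P_{i, \max }<0 $, giving $ P_{i}^{\mathrm{QoS}}=P_{i}^{\mathrm{SNR}}+\Delta P_{i, \mathrm{s}} $. End If

Algorithm 2 Cross-layer collaborative joint resource allocation framework (DCJRA)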

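Input: environment state $s_t$, reward $r_t$

Output: power allocation strategy $P_i$, service allocation strategy $\tilde a_t^i $

1. Initialize the experience replay pool $D$ with capacity $N$ and the maximum replay pool size $N_r$; initialize the action-value function $Q$ with random weights $\theta$; initialize the training batch size $N_b$; initialize the target action-value function $\hat Q $ with weights $\theta^- = \theta$; initialize the target network update frequency $N^-$; initialize the greedy probability $\varepsilon$ and the action-value sensitivity $Q_s$.
2. For episode $= 1, 2, \cdots, M$ do
   - Initialize the action sequence $\varepsilon=\{x_1\}$ and the initial state sequence $\phi_1 = \phi(s_1)$.
   - For $t = 1, 2, \cdots, T$ do
     - If the greedy probability $\varepsilon$ is below the threshold, select a random action ${\boldsymbol{a}}_t^i $ with probability $\varepsilon$; otherwise, when below $Q_s$, select a service-selection action ${\boldsymbol{a}}_t^i $ with the Siamese network model.
     - Decay the greedy probability $\varepsilon$; decay the action-value sensitivity $Q_s$ with probability $\varepsilon_s$, where $\varepsilon_s$ itself decays with the time-decay coefficient $\mu $.
     - Preprocess the state with the dual-channel feature encoder: $s_t \to \tilde s_t$.
     - Update the state input of the dual-branch network: $(\tilde s^{t+1},r^{t+1})\to (\tilde s^{t+1},r^{t+1},b_m^{a_t^j}) $.
     - Reconstruct the strategy selection with the strategy optimization filter, $a_t^i \to \tilde a_t^i$, and compute the power allocation strategy $P_i$ with the dynamic dual-threshold water-filling algorithm.
     - Execute the action $\tilde a_t^i $ and the allocation strategy $P_i$; observe the reward $r_{t+1}$ and the state $s_{t+1}$.
     - Store the quadruple $(s_t, \tilde a_t^i, r_{t+1}, s_{t+1})$ in the experience replay pool $D$.
     - Sample a minibatch of quadruples $(s_t, \tilde a_t^i, r_t, s_{t+1})$ from the experience replay pool.
     - Compute the target value for each training batch of size $N_b$. Define $a^{\max}(s';\theta )={\mathrm{arg}}\;\max_{a'}Q(s',a'; \theta) $ and compute, with discount factor $\gamma$: $y_j=\left\{ \begin{aligned} & r_j, && \text{if the episode terminates at step } j+1\\ & r_j+\gamma \hat Q(s',a^{\max}(s'; \theta); \theta^-), && \text{otherwise} \end{aligned}\right. $
     - Perform a gradient-descent step on $\|y_j-Q(s,a; \theta)\|^2 $.
     - Every $N^-$ steps, update the target network parameters: $\theta^- \leftarrow \theta $.

     End For

   End For

Table 1 Simulation parameter settings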

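Table 2 Service parameter settings

| Communication type | QoS rate (Mbps) [35] | Delay (ms) [37] | Packet loss rate ($\times 10^{-4}$) [37] | Data volume (Mbps) [37] | Instantaneous value [36] | Typical application scenario [37] |
| --- | --- | --- | --- | --- | --- | --- |
| Voice service | [0.1, 0.5] | <100 | <100 | [0.0001, 0.01] | 1 | Maritime safety communications, etc. |
| Data service | [1, 5] | <300 | <100 | [1, 10] | 2.5 | Scientific data transmission, etc. |
| Video service | [5, 20] | <200 | <100 | [10, 50] | 3 | Maritime navigation surveillance, etc. |
| Messaging service | 0.1 | <500 | <10 | [0.0001, 0.001] | 1.5 | Instant information exchange, etc. |
| Low-latency service | [1, 5] | <10 | <5 | [1, 10] | 2 | Maritime automation control, etc. |
| Massive-connectivity service | [0.01, 0.1] | <500 | <50 | [0.01, 0.1] | 1.5 | Marine environmental monitoring, etc. |

Table 3 Performance comparison of the method under different node densities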

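| Name | Average QoS score | System throughput (Mbps) |
| --- | --- | --- |
| DDW-DKES-6 | 78.02 | 16.70 |
| DDW-DKES-8 | 76.02 | 21.86 |
| DDW-DKES-12 | 72.45 | 26.49 |

Table 4 Performance comparison of methods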

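| Name | Average QoS score | Energy efficiency | Completed services | Completed service value |
| --- | --- | --- | --- | --- |
| WaterFill-DQN | 69.31 | 14.31↓ | 186.54 | 5755.80 |
| Genetic-DQN | 65.79 | 22.36 | 117.70 | 3776.40 |
| Average-DQN | 67.41 | 20.55 | 147.53 | 3717.10 |
| DDW-DQN | 71.21 | 16.66 | 176.17 | 5233.02 |
| DDW-DDPG | 71.14 | 16.57 | 176.68 | 5266.33 |
| DDW-Siamese | 73.51 | 16.57 | 172.92 | 5195.62 |
| DDW-DKES | 75.90↑ | 15.03 | 188.94↑ | 6005.13↑ |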
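The headline percentages quoted in the abstract can be reproduced from the Table 4 values. The short check below is only a sketch: it assumes that the 9.51% and 1.3% gains compare DDW-DKES with WaterFill-DQN and that the 3.25% gain compares DDW-DKES with DDW-Siamese, a pairing inferred from the numbers rather than stated in the table. The names `table4` and `relative_gain` are illustrative.

```python
# Values copied from Table 4: average QoS score and number of completed services.
table4 = {
    "WaterFill-DQN": {"qos": 69.31, "completed": 186.54},
    "DDW-Siamese": {"qos": 73.51, "completed": 172.92},
    "DDW-DKES": {"qos": 75.90, "completed": 188.94},
}


def relative_gain(new: float, base: float) -> float:
    """Relative improvement of `new` over `base`, in percent."""
    return 100.0 * (new - base) / base


best = table4["DDW-DKES"]
qos_vs_waterfill = relative_gain(best["qos"], table4["WaterFill-DQN"]["qos"])
qos_vs_siamese = relative_gain(best["qos"], table4["DDW-Siamese"]["qos"])
done_vs_waterfill = relative_gain(best["completed"], table4["WaterFill-DQN"]["completed"])

print(f"QoS gain over WaterFill-DQN:       {qos_vs_waterfill:.2f}%")
print(f"QoS gain over DDW-Siamese:         {qos_vs_siamese:.2f}%")
print(f"Completed services over WaterFill: {done_vs_waterfill:.1f}%")
```

Under that assumed pairing the three ratios round to 9.51%, 3.25% and 1.3%, matching the figures reported in the abstract.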

[1] NOMIKOS N, GKONIS P K, BITHAS P S, et al. A survey on UAV-aided maritime communications: Deployment considerations, applications, and future challenges[J]. IEEE Open Journal of the Communications Society, 2023, 4: 56–78. doi: 10.1109/OJCOMS.2022.3225590.

[2] SONG Y, SHIN H, KOO S, et al. Internet of maritime things platform for remote marine water quality monitoring[J]. IEEE Internet of Things Journal, 2022, 9(16): 14355–14365. doi: 10.1109/JIOT.2021.3079931.

[3] RANI S, BABBAR H, KAUR P, et al. An optimized approach of dynamic target nodes in wireless sensor network using bio inspired algorithms for maritime rescue[J]. IEEE Transactions on Intelligent Transportation Systems, 2023, 24(2): 2548–2555. doi: 10.1109/TITS.2021.3129914.

[4] HASSAN S S, KIM D H, TUN Y K, et al. Seamless and energy-efficient maritime coverage in coordinated 6G space–air–sea non-terrestrial networks[J]. IEEE Internet of Things Journal, 2023, 10(6): 4749–4769. doi: 10.1109/JIOT.2022.3220631.

[5] NOMIKOS N, GIANNOPOULOS A, KALAFATELIS A, et al. Improving connectivity in 6G maritime communication networks with UAV swarms[J]. IEEE Access, 2024, 12: 18739–18751. doi: 10.1109/ACCESS.2024.3360133.

[6] FANG Xinran, FENG Wei, WANG Yanmin, et al. NOMA-based hybrid satellite-UAV-terrestrial networks for 6G maritime coverage[J]. IEEE Transactions on Wireless Communications, 2023, 22(1): 138–152. doi: 10.1109/TWC.2022.3191719.

[7] WU Zhao, WANG Qian. Trajectory optimization and power allocation for cell-free satellite-UAV internet of things[J]. IEEE Access, 2023, 11: 203–213. doi: 10.1109/ACCESS.2022.3232945.

[8] YU Jun, WANG Rui, and WU Jun. QoS-driven resource optimization for intelligent fog radio access network: A dynamic power allocation perspective[J]. IEEE Transactions on Cognitive Communications and Networking, 2022, 8(1): 394–407. doi: 10.1109/TCCN.2021.3094209.

[9] WU Yile, GE Le, YUAN Xiangyun, et al. Adaptive power control based on double-layer Q-learning algorithm for multi-parallel power conversion systems in energy storage station[J]. Journal of Modern Power Systems and Clean Energy, 2022, 10(6): 1714–1724. doi: 10.35833/MPCE.2020.000909.

[10] QIN Peng, ZHAO Honghao, FU Yang, et al. Energy-efficient resource allocation for space–air–ground integrated industrial power internet of things network[J]. IEEE Transactions on Industrial Informatics, 2024, 20(4): 5274–5284. doi: 10.1109/TII.2023.3331127.

[11] CHAN S, LEE H, KIM S, et al. Intelligent low complexity resource allocation method for integrated satellite-terrestrial systems[J]. IEEE Wireless Communications Letters, 2022, 11(5): 1087–1091. doi: 10.1109/LWC.2022.3157062.

[12] DAI Pengcheng, WANG He, HOU Huazhou, et al. Joint spectrum and power allocation in wireless network: A two-stage multi-agent reinforcement learning method[J]. IEEE Transactions on Emerging Topics in Computational Intelligence, 2024, 8(3): 2364–2374. doi: 10.1109/TETCI.2024.3360305.

[13] HU Xin, WANG Yin, LIU Zhijun, et al. Dynamic power allocation in high throughput satellite communications: A two-stage advanced heuristic learning approach[J]. IEEE Transactions on Vehicular Technology, 2023, 72(3): 3502–3516. doi: 10.1109/TVT.2022.3218565.

[14] PAN Yang, XIANG Chenlu, and ZHANG Shunqing. Distributed joint power and bandwidth allocation for multiagent cooperative localization[J]. IEEE Communications Letters, 2022, 26(11): 2601–2605. doi: 10.1109/LCOMM.2022.3195646.

[15] SUI Xiufeng, JIANG Ziqi, LYU Yifeng, et al. Integrating convex optimization and deep learning for downlink resource allocation in LEO satellites networks[J]. IEEE Transactions on Cognitive Communications and Networking, 2024, 10(3): 1104–1118. doi: 10.1109/TCCN.2024.3361071.

[16] DANI M N, SO D K C, TANG Jie, et al. Resource allocation for layered multicast video streaming in NOMA systems[J]. IEEE Transactions on Vehicular Technology, 2022, 71(11): 11379–11394. doi: 10.1109/TVT.2022.3193122.

[17] JI Tian, CHANG Xiaolei, CHEN Zhengjian, et al. Study on QoS routing optimization algorithms for smart ocean networks[J]. IEEE Access, 2023, 11: 86489–86508. doi: 10.1109/ACCESS.2023.3298438.

[18] LIN Yuanmo, XU Zhiyong, LI Jianhua, et al. Resource management for QoS-guaranteed marine data feedback based on space–air–ground–sea network[J]. IEEE Systems Journal, 2024, 18(3): 1741–1752. doi: 10.1109/JSYST.2024.3439343.

[19] WANG Chenlu, PENG Yuhuai, WU Jingjing, et al. Deterministic scheduling and reliable routing for smart ocean services in maritime internet of things: A cross-layer approach[J]. IEEE Transactions on Services Computing, 2024, 17(6): 3387–3399. doi: 10.1109/TSC.2024.3442471.

[20] SUN Ming, MEI Erzhuang, WANG Shumei, et al. Joint DDPG and unsupervised learning for channel allocation and power control in centralized wireless cellular networks[J]. IEEE Access, 2023, 11: 42191–42203. doi: 10.1109/ACCESS.2023.3270316.

[21] HE Yuanzhi, SHENG Biao, YIN Hao, et al. Multi-objective deep reinforcement learning based time-frequency resource allocation for multi-beam satellite communications[J]. China Communications, 2022, 19(1): 77–91. doi: 10.23919/JCC.2022.01.007.

[22] ZABETIAN N and KHALAJ B H. Hybrid QoE-based joint admission control and power allocation[J]. IEEE Transactions on Vehicular Technology, 2024, 73(1): 522–531. doi: 10.1109/TVT.2023.3300775.

[23] QIN Peng, FU Yang, ZHAO Xiongwen, et al. Optimal task offloading and resource allocation for C-NOMA heterogeneous air-ground integrated power internet of things networks[J]. IEEE Transactions on Wireless Communications, 2022, 21(11): 9276–9292. doi: 10.1109/TWC.2022.3175472.

[24] XUE Tong, ZHANG Haixia, DING Hui, et al. Joint vehicle pairing, spectrum assignment, and power control for sum-rate maximization in NOMA-based V2X underlaid cellular networks[J]. IEEE Internet of Things Journal, 2025, 12(12): 22337–22349. doi: 10.1109/JIOT.2025.3550884.

[25] ZHANG Xiaochen, ZHAO Haitao, XIONG Jun, et al. Decentralized routing and radio resource allocation in wireless Ad Hoc networks via graph reinforcement learning[J]. IEEE Transactions on Cognitive Communications and Networking, 2024, 10(3): 1146–1159. doi: 10.1109/TCCN.2024.3360517.

[26] WANG Zixin, BENNIS M, and ZHOU Yong. Graph attention-based MADRL for access control and resource allocation in wireless networked control systems[J]. IEEE Transactions on Wireless Communications, 2024, 23(11): 16076–16090. doi: 10.1109/TWC.2024.3436906.

[27] GIANG L T, TUNG N X, VIET V H, et al. HGNN: A hierarchical graph neural network architecture for joint resource management in dynamic wireless sensor networks[J]. IEEE Sensors Journal, 2024, 24(24): 42352–42364. doi: 10.1109/JSEN.2024.3485058.

[28] LI Dongyang, DING Hui, ZHANG Haixia, et al. Deep learning-enabled joint edge content caching and power allocation strategy in wireless networks[J]. IEEE Transactions on Vehicular Technology, 2024, 73(3): 3639–3651. doi: 10.1109/TVT.2023.3325036.

[29] MATHIS M, SEMKE J, MAHDAVI J, et al. The macroscopic behavior of the TCP congestion avoidance algorithm[J]. ACM SIGCOMM Computer Communication Review, 1997, 27(3): 67–82. doi: 10.1145/263932.264023.

[30] ITU Radiocommunication Sector. Radio noise: P. 372–13[R]. ITU-R P. 372–13, 2016.

[31] WANG Jue, ZHOU Haifeng, LI Ye, et al. Wireless channel models for maritime communications[J]. IEEE Access, 2018, 6: 68070–68088. doi: 10.1109/ACCESS.2018.2879902.

[32] ITU Radiocommunication Sector. End-user multimedia QoS categories[R]. G. 1010, 2001.

[33] XIA Tingting, WANG M M, ZHANG Jingjing, et al. Maritime internet of things: Challenges and solutions[J]. IEEE Wireless Communications, 2020, 27(2): 188–196. doi: 10.1109/MWC.001.1900322.

[34] XU Jiajie, KISHK M A, and ALOUINI M S. Space-air-ground-sea integrated networks: Modeling and coverage analysis[J]. IEEE Transactions on Wireless Communications, 2023, 22(9): 6298–6313. doi: 10.1109/TWC.2023.3241341.

[35] BEKKADAL F. Innovative maritime communications technologies[C]. The 18th International Conference on Microwaves, Radar and Wireless Communications, Vilnius, Lithuania, 2010: 1–4.

[36] MAO Zhongyang, ZHANG Zhilin, LU Faping, et al. Sea-based UAV network resource allocation method based on an attention mechanism[J]. Electronics, 2024, 13(18): 3686. doi: 10.3390/electronics13183686.

[37] ALQURASHI F S, TRICHILI A, SAEED N, et al. Maritime communications: A survey on enabling technologies, opportunities, and challenges[J]. IEEE Internet of Things Journal, 2023, 10(4): 3525–3547. doi: 10.1109/JIOT.2022.3219674.

[38] 张冬梅, 徐友云, 蔡跃明. OFDMA系统中线性注水功率分配算法[J]. 电子与信息学报, 2007, 29(6): 1286–1289. doi: 10.3724/SP.J.1146.2005.01345. ZHANG Dongmei, XU Youyun, and CAI Yueming. Linear water-filling power allocation algorithm in OFDMA system[J]. Journal of Electronics & Information Technology, 2007, 29(6): 1286–1289. doi: 10.3724/SP.J.1146.2005.01345.

[39] 陆音, 汪成功, 王慧如, 等. 一种基于遗传算法的NOMA功率分配方法[P]. 中国, 201811038383.6, 2019. LU Yin, WANG Chenggong, WANG Huiru, et al. NOMA power distribution method based on genetic algorithm[P]. CN, 201811038383.6, 2019.

[40] MNIH V, KAVUKCUOGLU K, SILVER D, et al. Playing Atari with deep reinforcement learning[C]. Neural Information Processing Systems, Montreal, Canada, 2015: 1–9. doi: 10.48550/arXiv.1312.5602.

[41] LILLICRAP T P, HUNT J J, PRITZEL A, et al. Continuous control with deep reinforcement learning[C]. The 4th International Conference on Learning Representations, San Juan, Puerto Rico, 2016. doi: 10.1016/S1098–3015(10)67722–4.

[42] BROMLEY J, GUYON I, LECUN Y, et al. Signature verification using a “Siamese” time delay neural network[C]. The 7th International Conference on Neural Information Processing Systems, Denver, USA, 1993: 737–744. doi: 10.5555/2987189.2987282.
