Link State Awareness Enhanced Intelligent Routing Algorithm for Tactical Communication Networks


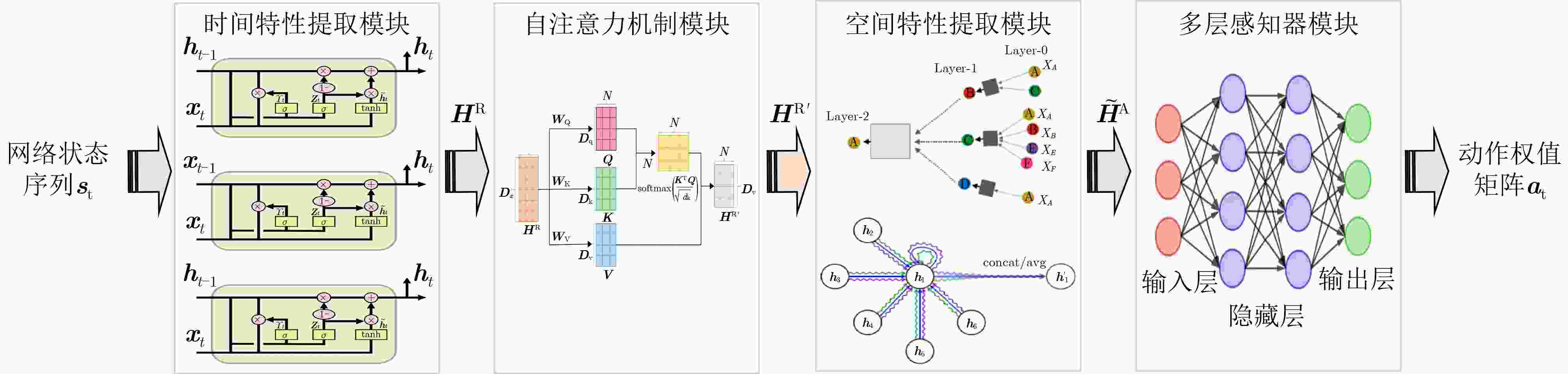

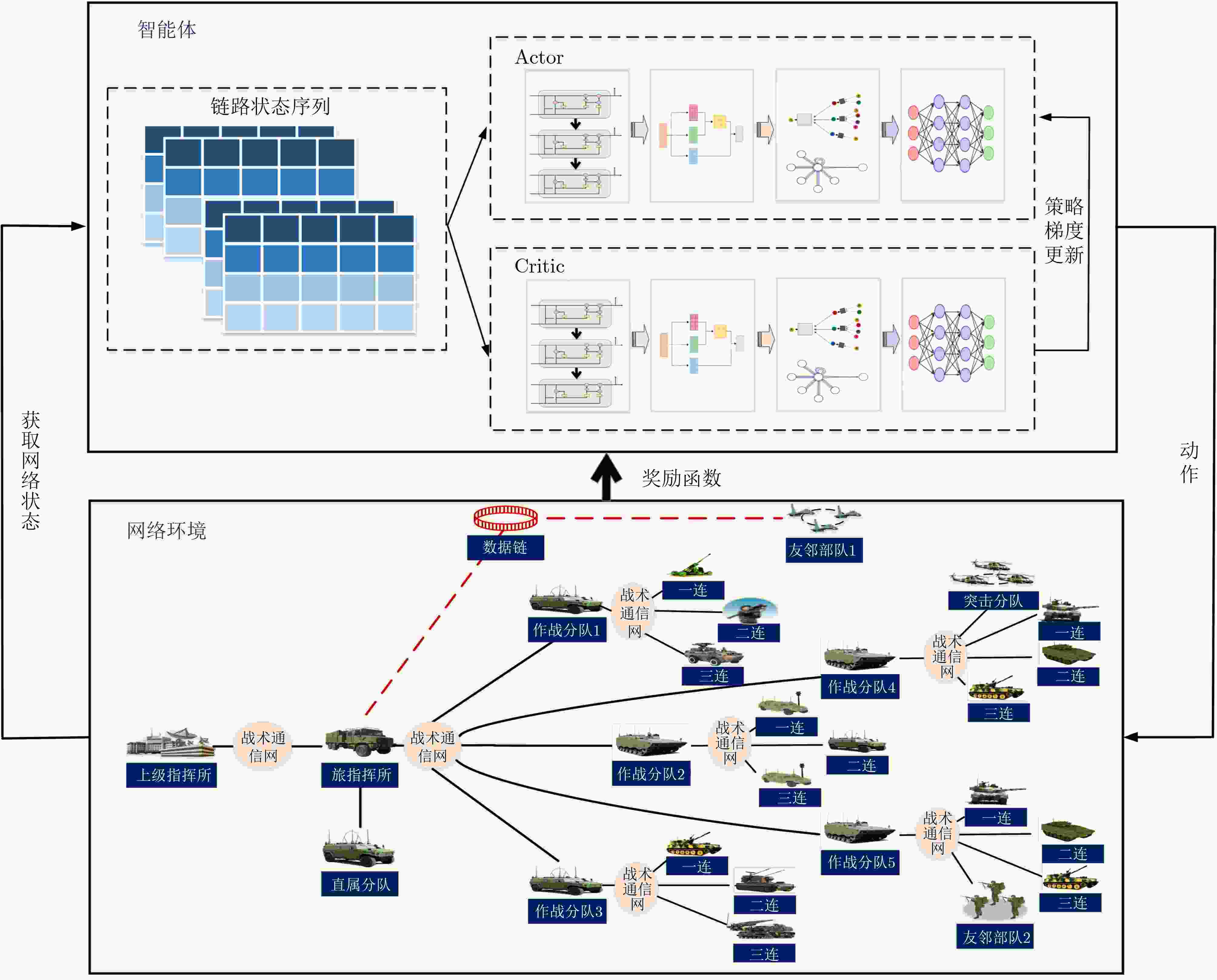

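Abstract: Existing Deep Reinforcement Learning (DRL)-based routing algorithms rely on a single neural network structure and cannot fully perceive the complex dependencies among link states, which limits the accuracy and robustness of routing decisions under time-varying network conditions. To address this problem, this paper proposes a link state perception-enhanced intelligent routing algorithm for tactical communication networks (DRL-SGA). Building on a Proximal Policy Optimization (PPO) agent that collects network state sequences, the algorithm constructs a link state perception enhancement module that replaces the Fully Connected Neural Network (FCNN) in PPO, so as to capture the spatiotemporal dependencies among network state sequences and improve the adaptability of the routing decision model to time-varying network states. Furthermore, the actions output by the link state perception enhancement module interact periodically with the network environment to explore optimal routes that satisfy the differentiated transmission requirements of heterogeneous services, such as latency-sensitive, bandwidth-sensitive, and reliability-sensitive services. Experimental results show that, compared with baseline routing algorithms including OSPF, DQN, DDPG, A3C, and DRL-ST, the proposed DRL-SGA algorithm achieves varying degrees of improvement in average end-to-end latency, average network throughput, and average packet loss rate, and adapts better to complex scenarios such as constrained bandwidth resources and dynamic topology changes.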

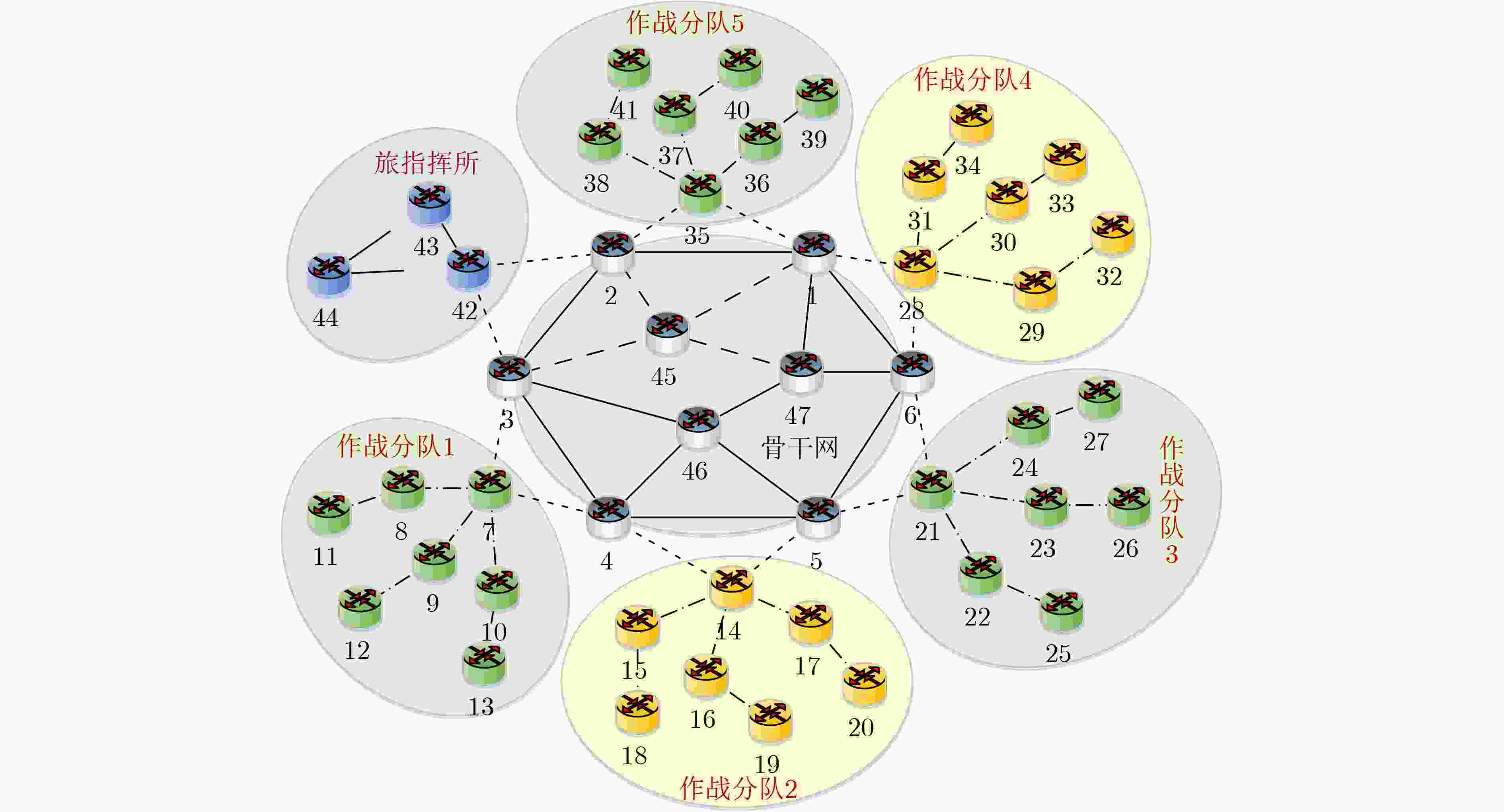

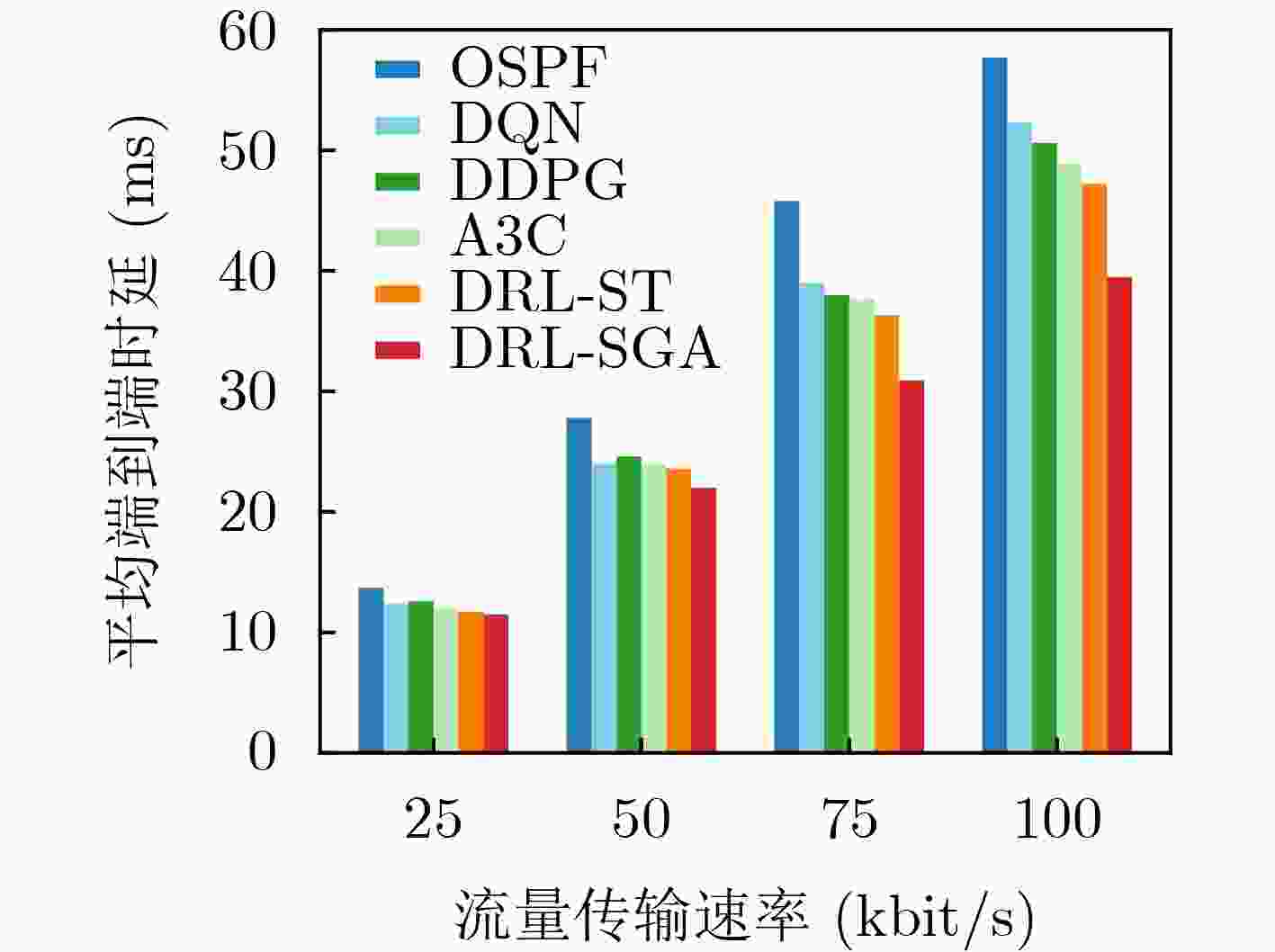

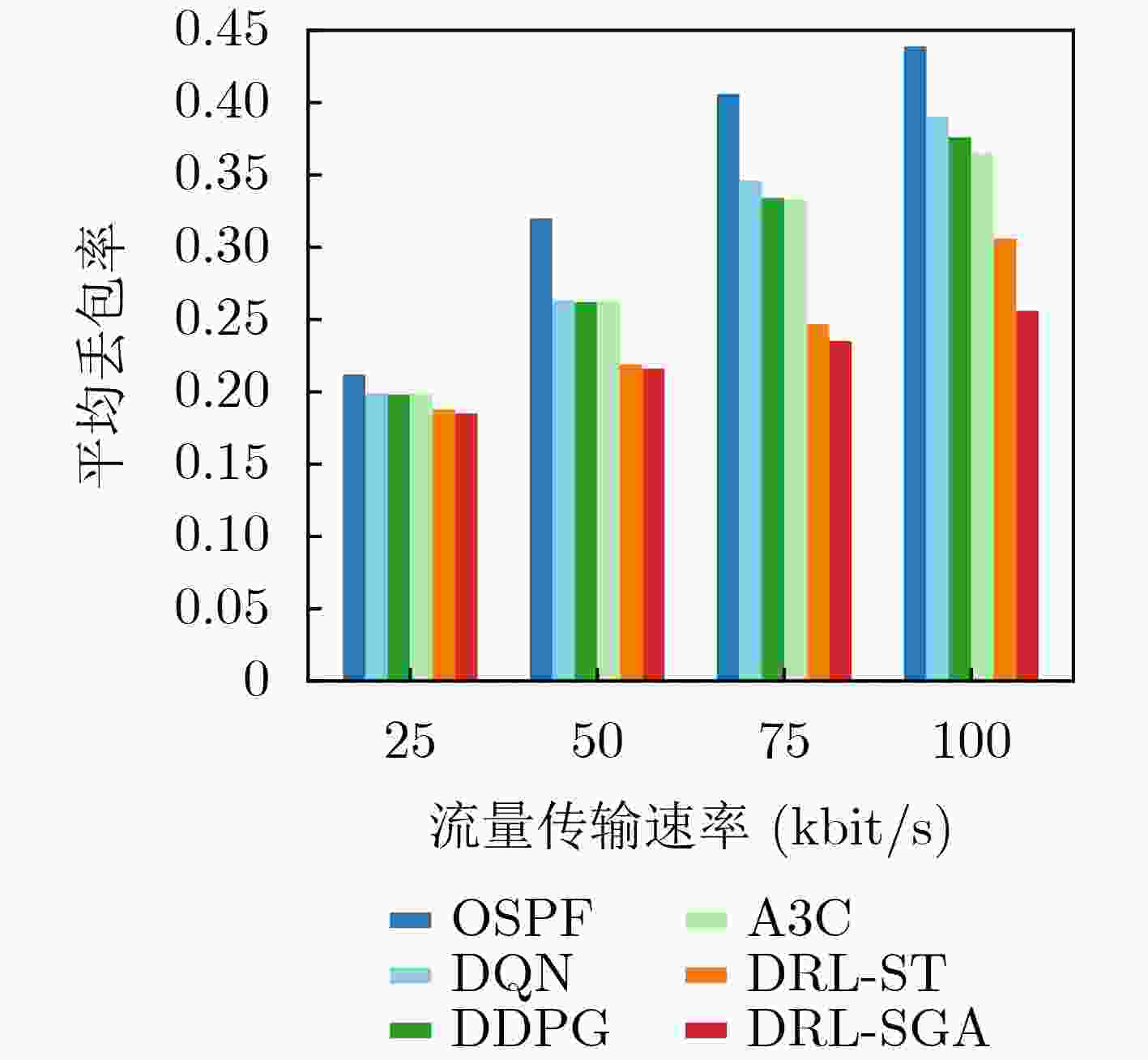

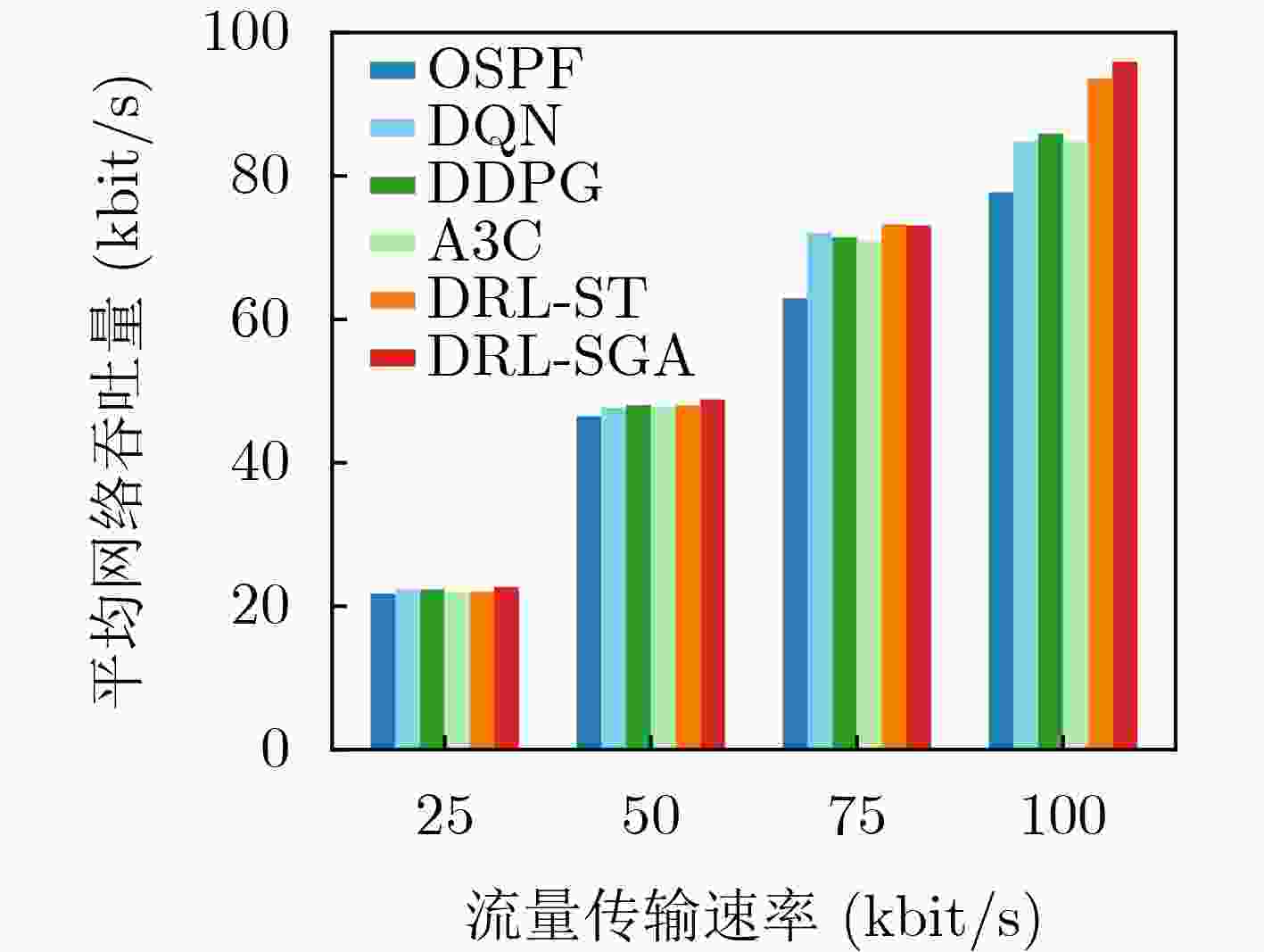

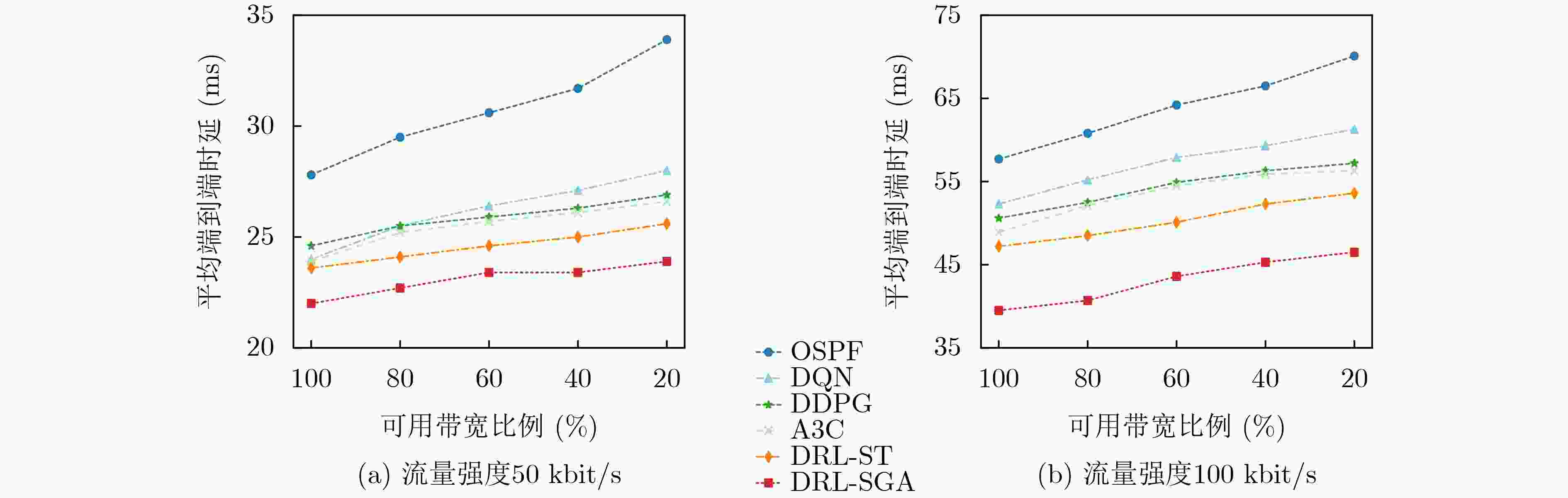

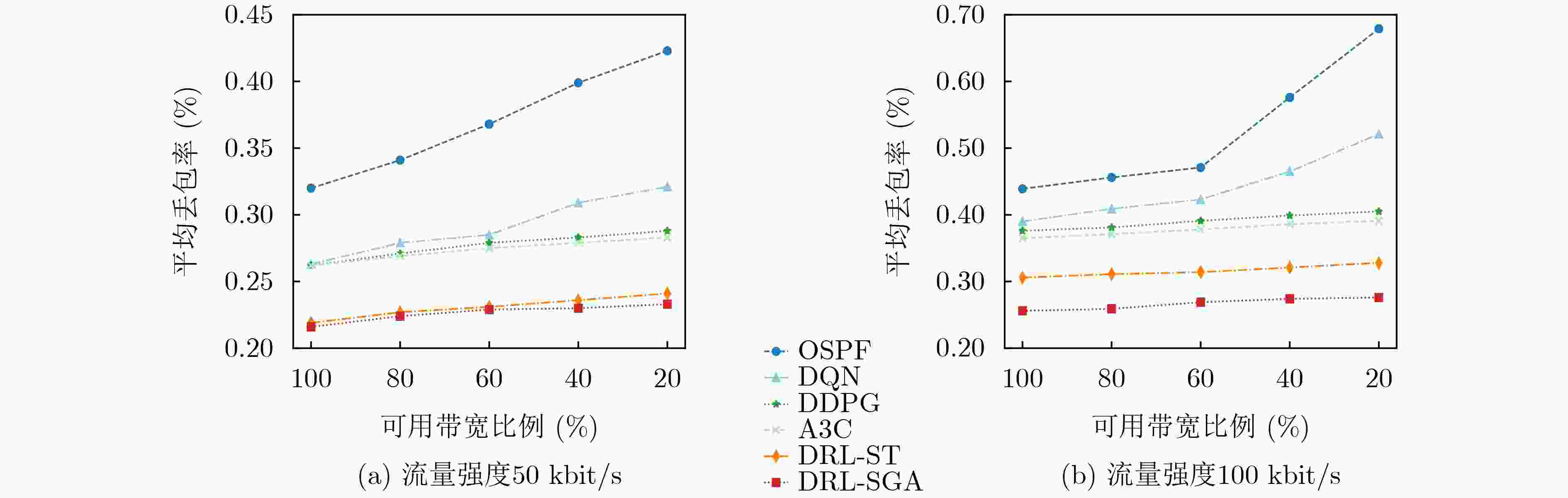

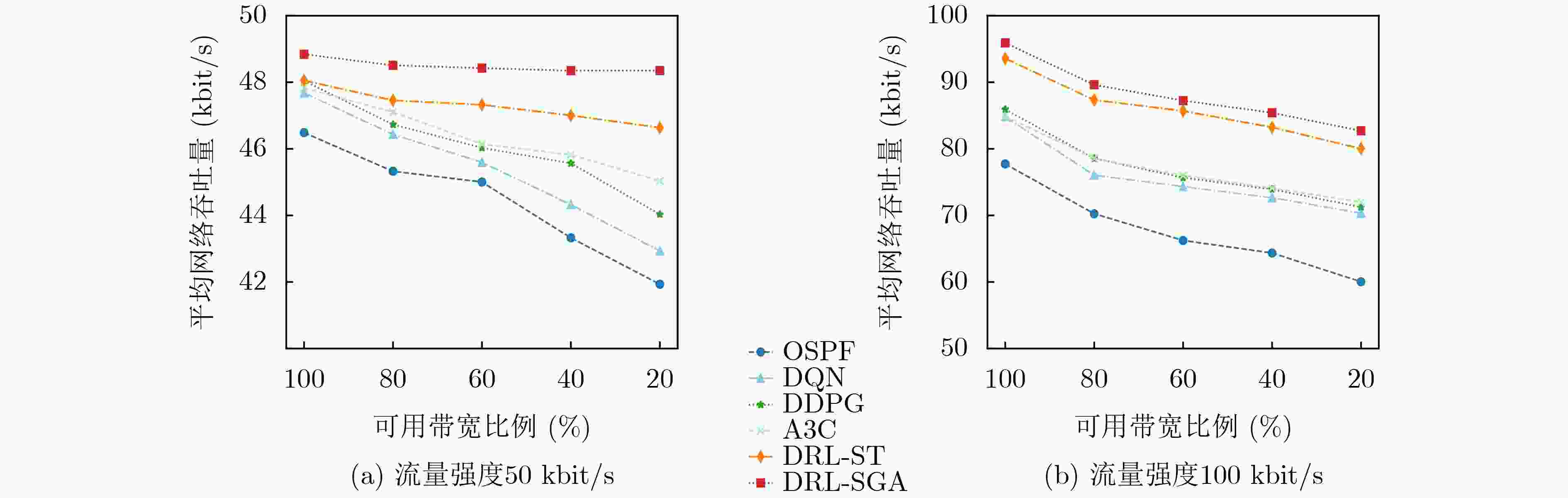

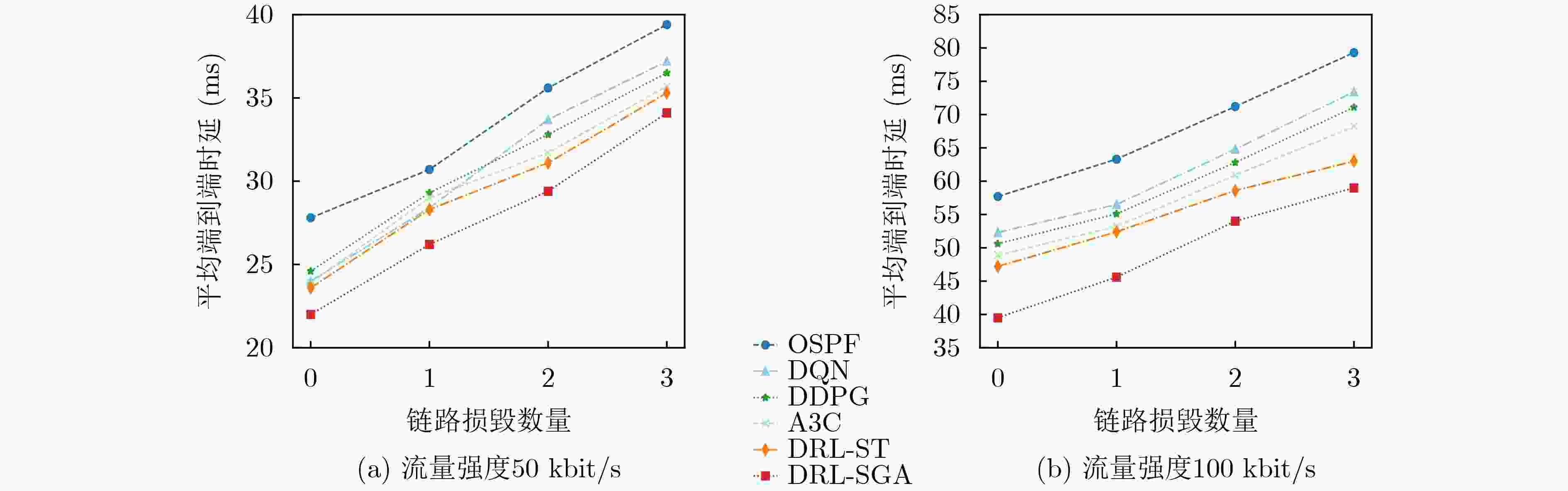

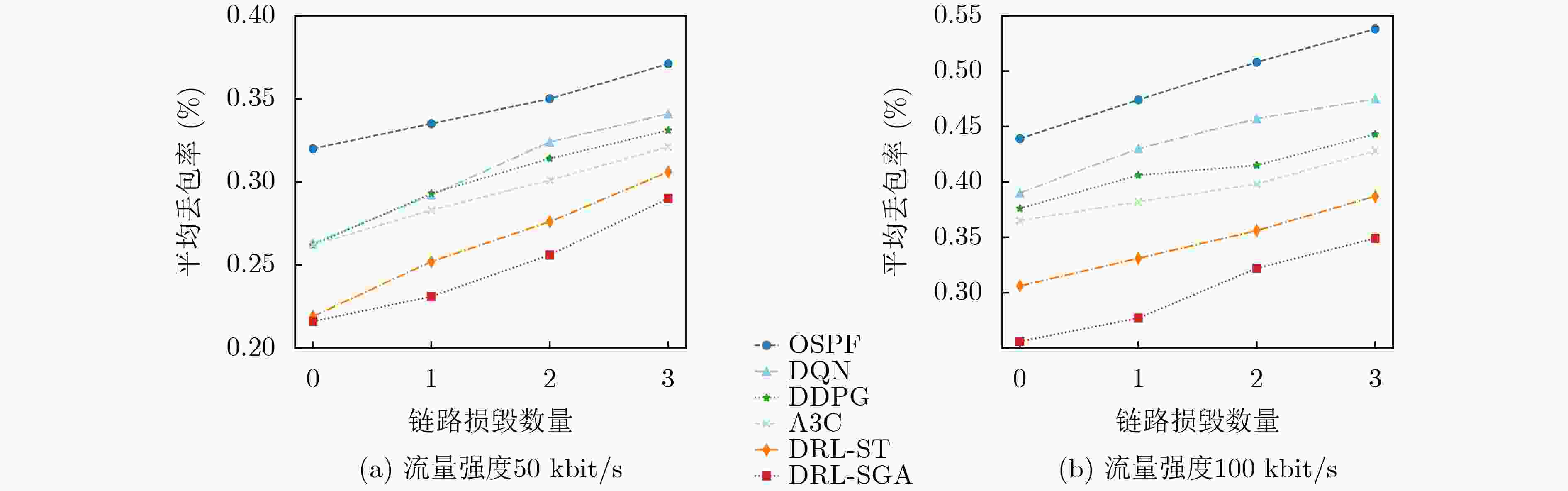

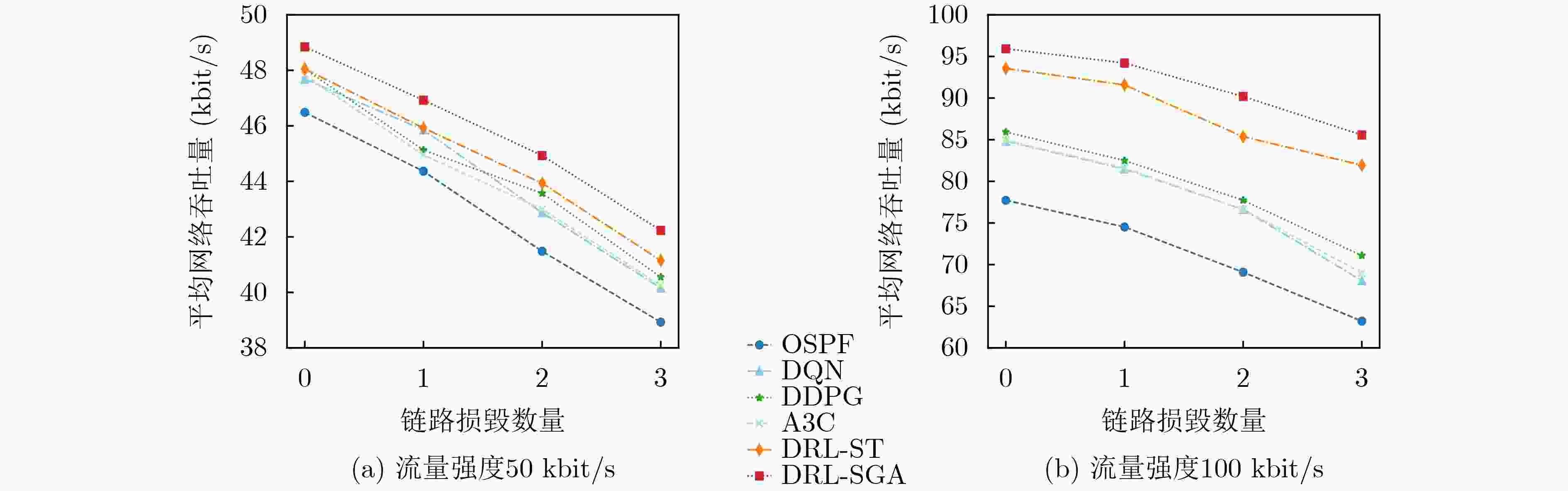

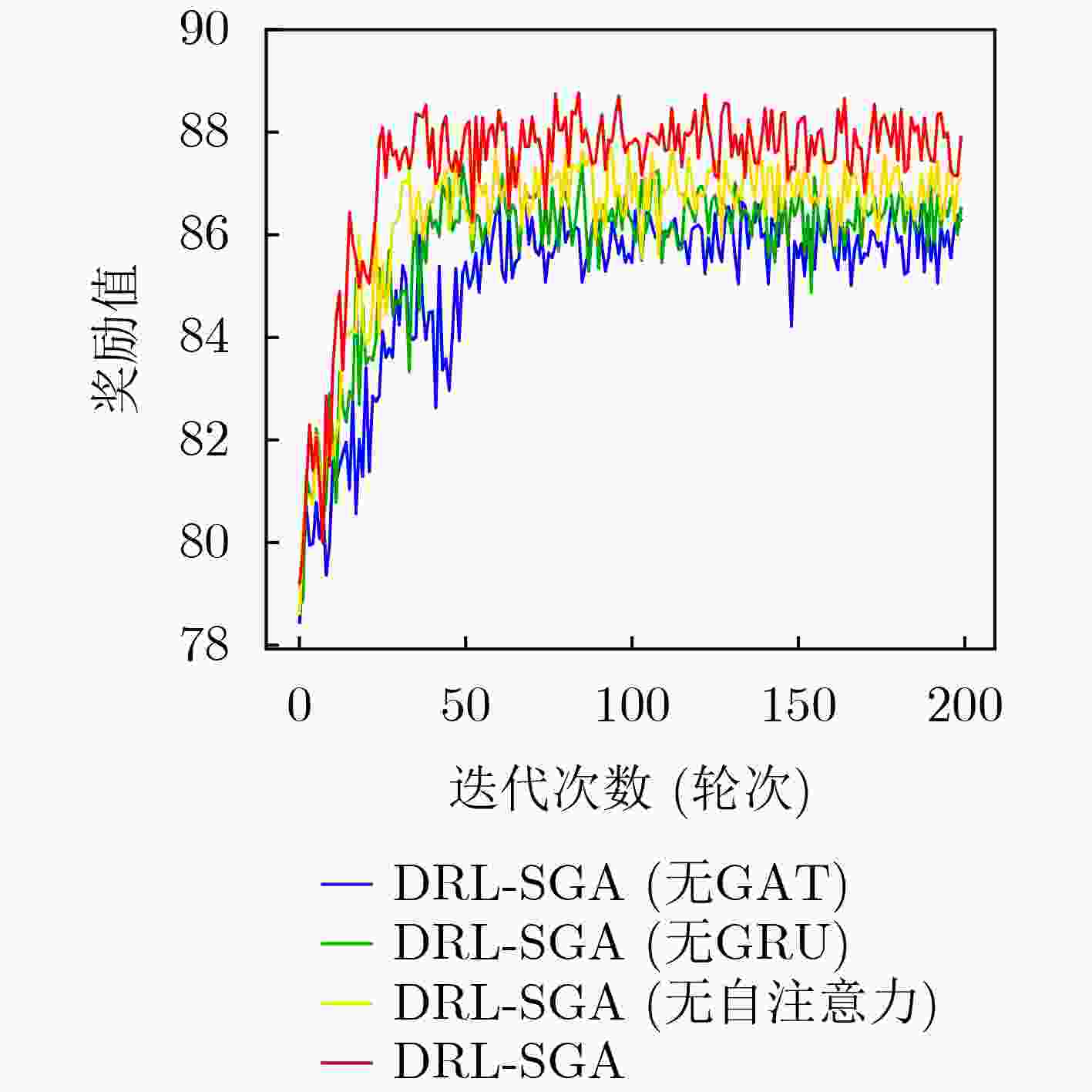

Objective: Operational concept iteration, combat style innovation, and the emergence of new combat forces are accelerating the transition of warfare toward intelligent systems. In this context, tactical communication networks must establish end-to-end transmission paths through heterogeneous links, including ultra-shortwave and satellite communications, to meet differentiated routing requirements for multi-modal services sensitive to latency, bandwidth, and reliability. Existing Deep Reinforcement Learning (DRL)-based intelligent routing algorithms primarily use single neural network architectures, which inadequately capture complex dependencies among link states. This limitation reduces the accuracy and robustness of routing decisions under time-varying network conditions. To address this, a link state perception-enhanced intelligent routing algorithm (DRL-SGA) is proposed. By capturing spatiotemporal dependencies in link state sequences, the algorithm improves the adaptability of routing decision models to dynamic network conditions and enables more effective path selection for multi-modal service transmission.

Methods: The proposed DRL-SGA algorithm incorporates a link state perception enhancement module that integrates a Graph Neural Network (GNN) and an attention mechanism into a Proximal Policy Optimization (PPO) agent framework for collecting network state sequences. This module extracts high-order features from the sequences across temporal and spatial dimensions, thereby addressing the limited global link state awareness of the PPO agent's Fully Connected Neural Network (FCNN). This enhancement improves the adaptability of the routing decision model to time-varying network conditions. The Actor-Critic framework enables periodic interaction between the agent and the network environment, while an experience replay pool continuously refines policy parameters. This process facilitates the discovery of routing paths that meet heterogeneous transmission requirements across latency-, bandwidth-, and reliability-sensitive services.

Results and Discussions: The routing decision capability of the DRL-SGA algorithm is evaluated in a simulated network comprising 47 routing nodes and 61 communication links. Its performance is compared with that of five other routing algorithms under varying traffic intensities. The results show that DRL-SGA provides superior adaptability to heterogeneous network environments. At a traffic intensity of 100 kbit/s, DRL-SGA reduces latency by 14.42% to 33.57% compared with the other algorithms (Figure 4). Network throughput increases by 2.51% to 23.41% (Figure 5). In scenarios characterized by resource constraints or topological changes, DRL-SGA consistently maintains higher service quality and greater adaptability to fluctuations in network state (Figures 7 to 12). Ablation experiments confirm the effectiveness of the individual components within the link state perception enhancement module in improving the algorithm's perception capability (Table 3).

Conclusions: A link state perception-enhanced intelligent routing algorithm (DRL-SGA) is proposed for tactical communication networks. By extracting high-order features from link state sequences across temporal and spatial dimensions, the algorithm addresses the limited global link state awareness of the PPO agent's FCNN. Through the Actor-Critic framework and periodic interactions between the agent and the network environment, DRL-SGA enables iterative optimization of routing strategies, improving decision accuracy and robustness under dynamic topology and link conditions. Experimental results show that DRL-SGA meets the differentiated transmission requirements of heterogeneous services (latency-sensitive, bandwidth-sensitive, and reliability-sensitive) while offering improved adaptability to variations in network state. However, the algorithm may exhibit delayed convergence when training samples are insufficient in rapidly changing environments. Future work will examine the integration of diffusion models to enrich training data and accelerate convergence.
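The PPO update that the Actor-Critic framework relies on can be illustrated with a minimal Python sketch. It assumes the standard PPO clipped surrogate objective and a one-step temporal-difference advantage as stand-ins for the paper's Eq. (16) and Eq. (14), which are not reproduced in this section. The function names `td_advantages` and `clipped_surrogate` are hypothetical, and the defaults for `GAMMA` and `EPSILON` follow the discount factor (0.9) and clipping factor (0.2) listed in the parameter table.

```python
# Assumption: standard PPO clipped objective and one-step TD advantage,
# used here as stand-ins for the paper's Eq. (14) and Eq. (16).

GAMMA = 0.9    # reward discount factor, as listed in the parameter table
EPSILON = 0.2  # clipping factor, as listed in the parameter table


def td_advantages(rewards, values, next_values, gamma=GAMMA):
    """One-step TD advantage per sample: A = r + gamma * V(s') - V(s)."""
    return [
        r + gamma * v_next - v
        for r, v, v_next in zip(rewards, values, next_values)
    ]


def clipped_surrogate(new_probs, old_probs, advantages, epsilon=EPSILON):
    """Batch mean of min(ratio * A, clip(ratio, 1 - eps, 1 + eps) * A).

    The Actor would maximize this quantity (or minimize its negative).
    """
    terms = []
    for p_new, p_old, adv in zip(new_probs, old_probs, advantages):
        ratio = p_new / p_old  # probability ratio between new and old policy
        clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
        terms.append(min(ratio * adv, clipped_ratio * adv))
    return sum(terms) / len(terms)


# Hypothetical single-sample example: reward 1.0, V(s) = 2.0, V(s') = 3.0,
# and a path whose selection probability doubled from 0.3 to 0.6.
adv = td_advantages([1.0], [2.0], [3.0])
objective = clipped_surrogate([0.6], [0.3], adv)
print(adv, objective)
```

With a probability ratio of 2.0 and a positive advantage, the clipped term caps the contribution at 1.2 times the advantage, which is what keeps a single update from moving the routing policy too far from the one that collected the samples.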

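Algorithm 1 DRL-SGA

Input: reward discount factor $\gamma$, Actor learning rate $\lambda_1$, Critic learning rate $\lambda_2$, total number of training episodes $T$, interaction frequency $N_{\mathrm{step}}$, experience replay pool capacity $M$, number of experience samples $D$, network state sequence $\boldsymbol{s}_t$
Output: globally optimal transmission path
1: Initialize the Actor policy network parameters $\theta$ and the Critic value network parameters $\varphi$;
2: Initialize the experience replay pool with capacity $M$;
3: for $\text{episode} = 1$ to $T$ do:
4:   The agent obtains the initial network state sequence $\boldsymbol{s}_t = [\boldsymbol{x}_{t-l+1}, \boldsymbol{x}_{t-l+2}, \cdots, \boldsymbol{x}_t]$ at time $t$;
5:   for $t = 1$ to $N_{\mathrm{step}}$:
6:     The Actor network generates the optimal path action $\boldsymbol{a}_t$ according to policy $\pi_\theta$ and executes it;
7:     The agent obtains the reward $r_t$ and the new network state sequence $\boldsymbol{s}_{t+1}$;
8:     Store the experience sample $(\boldsymbol{s}_t, \boldsymbol{a}_t, r_t, \boldsymbol{s}_{t+1})$ in the experience replay pool;
9:     Update the state sequence $\boldsymbol{s}_t \leftarrow \boldsymbol{s}_{t+1}$;
10:   end for
11:   if $\mathrm{len}(M) > D$:
12:     for $i = 1$ to $D$:
13:       Sample an experience $(\boldsymbol{s}_i, \boldsymbol{a}_i, r_i, \boldsymbol{s}_{i+1})$, feed it into the Critic network, and obtain all state values $V(\boldsymbol{s}_i)$;
14:       Compute the advantage function $\hat{A}$ according to Eq. (14) and update the Critic network parameters $\varphi$ by backpropagation;
15:       Compute the objective function $L^{\mathrm{clip}}$ according to Eq. (16) and update the Actor network parameters $\theta$ by backpropagation;
16:     end for
17:   end if
18: end for

Table 1 Link types and corresponding bandwidths in the tactical communication network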

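| Network type | Link type | Link bandwidth (Mbit/s) |
| --- | --- | --- |
| Backbone network | Microwave, troposcatter, regional broadband, satellite, HF, VHF, UHF, field wire, data link | 1 to 10 |
| Brigade command post | Field wire, VHF, UHF, regional broadband | 1 to 10 |
| | Optical fiber | 622.08 |
| Combat unit 1 | UHF, VHF | 1 |
| Combat unit 2 | UHF, VHF | 1 |
| Combat unit 3 | UHF, VHF | 1 |
| Combat unit 4 | UHF, VHF | 1 |
| Combat unit 5 | UHF, VHF | 1 |

Table 2 DRL-SGA parameter settings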

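| Parameter | Value |
| --- | --- |
| Optimizer | Adam |
| Actor learning rate $\lambda_1$ | 0.001 |
| Critic learning rate $\lambda_2$ | 0.001 |
| Reward discount factor $\gamma$ | 0.9 |
| Clipping factor $\varepsilon$ | 0.2 |
| Experience replay pool capacity $M$ | 5000 |
| Number of experience samples $D$ | 32 |
| Total number of training episodes $T$ | 200 |
| Interaction frequency $N_{\mathrm{step}}$ | 30 |
| Number of GRU units | 3 |
| Number of GAT units | 1 |
| Number of MLP hidden layers | 128 |
| Number of feasible paths $k$ | 10 |

Table 3 Algorithm performance on each metric after removing individual modules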

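| Algorithm (removed sub-module) | Mean reward | Reward variance | End-to-end latency (ms) | Network throughput (kbit/s) | Packet loss rate (%) |
| --- | --- | --- | --- | --- | --- |
| DRL-SGA (GAT) | 85.08 | 2.95 | 67.98 | 69.95 | 0.351 |
| DRL-SGA (GRU) | 85.79 | 2.37 | 73.90 | 66.53 | 0.355 |
| DRL-SGA (self-attention) | 86.33 | 2.49 | 70.94 | 75.06 | 0.352 |
| DRL-SGA (none) | 87.29 | 2.80 | 59.12 | 85.30 | 0.348 |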
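The ablation figures in Table 3 are easier to compare as relative changes against the full model. The short Python sketch below recomputes them from the tabulated values. The dictionary layout and the helper name `relative_change` are illustrative choices rather than part of the original work, and the row with no sub-module removed is taken as the full DRL-SGA baseline.

```python
# Values copied from Table 3: end-to-end latency (ms), network throughput
# (kbit/s), and packet loss rate (%). Keys name the removed sub-module.
ABLATION = {
    "GAT": {"latency": 67.98, "throughput": 69.95, "loss": 0.351},
    "GRU": {"latency": 73.90, "throughput": 66.53, "loss": 0.355},
    "self-attention": {"latency": 70.94, "throughput": 75.06, "loss": 0.352},
}

# Row with no sub-module removed, used here as the baseline.
FULL = {"latency": 59.12, "throughput": 85.30, "loss": 0.348}


def relative_change(ablated, full):
    """Percentage change of each metric relative to the full model."""
    return {
        metric: 100.0 * (ablated[metric] - full[metric]) / full[metric]
        for metric in full
    }


for removed, metrics in ABLATION.items():
    change = relative_change(metrics, FULL)
    print(
        f"without {removed}: "
        f"latency {change['latency']:+.2f}%, "
        f"throughput {change['throughput']:+.2f}%, "
        f"loss {change['loss']:+.2f}%"
    )
```

On these numbers, removing the GRU costs the most latency (about 25% higher than the full model), while removing the GAT or the GRU cuts throughput by roughly 18% and 22% respectively.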

