Patch-based Adversarial Example Generation Method for Multi-spectral Object Tracking


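Abstract (translated from the Chinese): Current research on adversarial example generation for trackers focuses mainly on the visible spectral band and cannot attack trackers effectively under multi-spectral conditions. To fill this gap, this paper proposes a patch-based adversarial example generation network for multi-spectral object tracking, which improves the attack effectiveness of adversarial examples under multi-spectral conditions. Specifically, the network comprises an adversarial texture generation module and an adversarial shape optimization strategy; it semantically interferes with the tracker’s understanding of target texture in the visible band and markedly disrupts the extraction of features associated with thermally salient targets. In addition, a mis-regression loss and a mask interference loss are designed according to the characteristics of different trackers to guide patch-based adversarial example generation for multi-spectral tracking models, causing the predicted tracking box to enlarge or drift away from the target. A maximum feature discrepancy loss is introduced to weaken the correlation between the template frame and the search frame in feature space, thereby achieving an effective attack on the tracker. Qualitative and quantitative experiments show that the proposed adversarial examples raise the attack success rate against trackers in multi-spectral environments.

Abstract: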

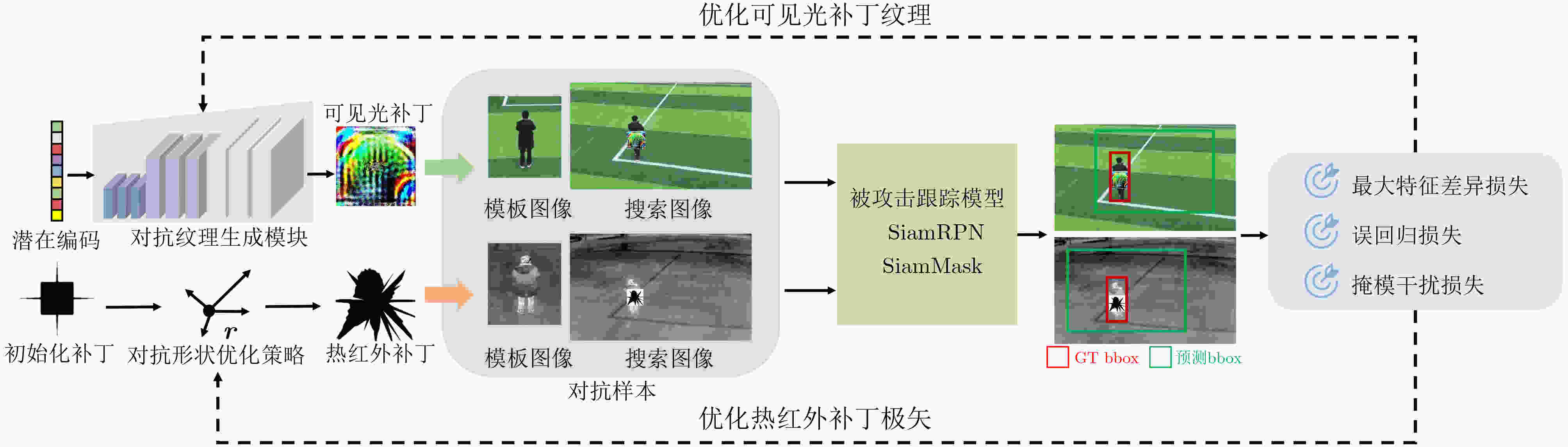

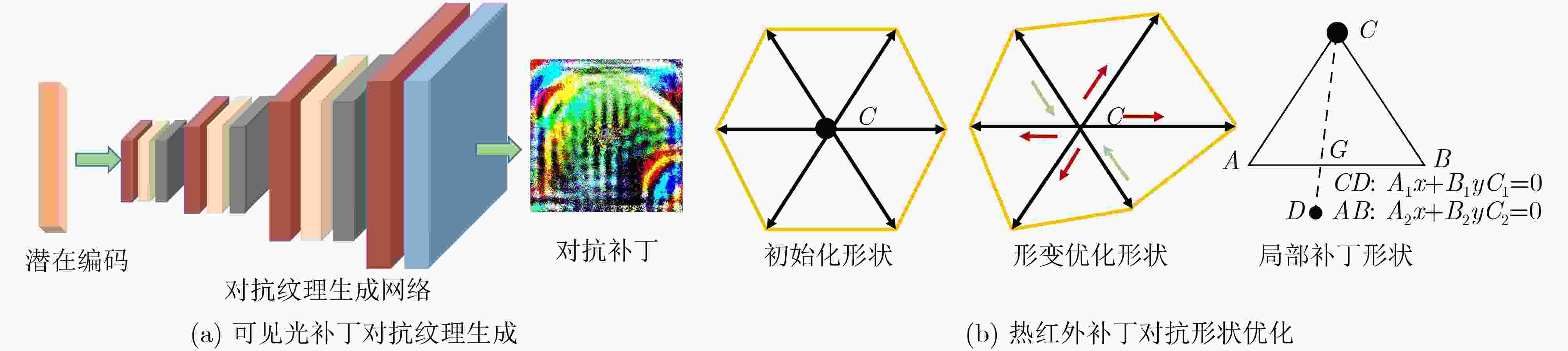

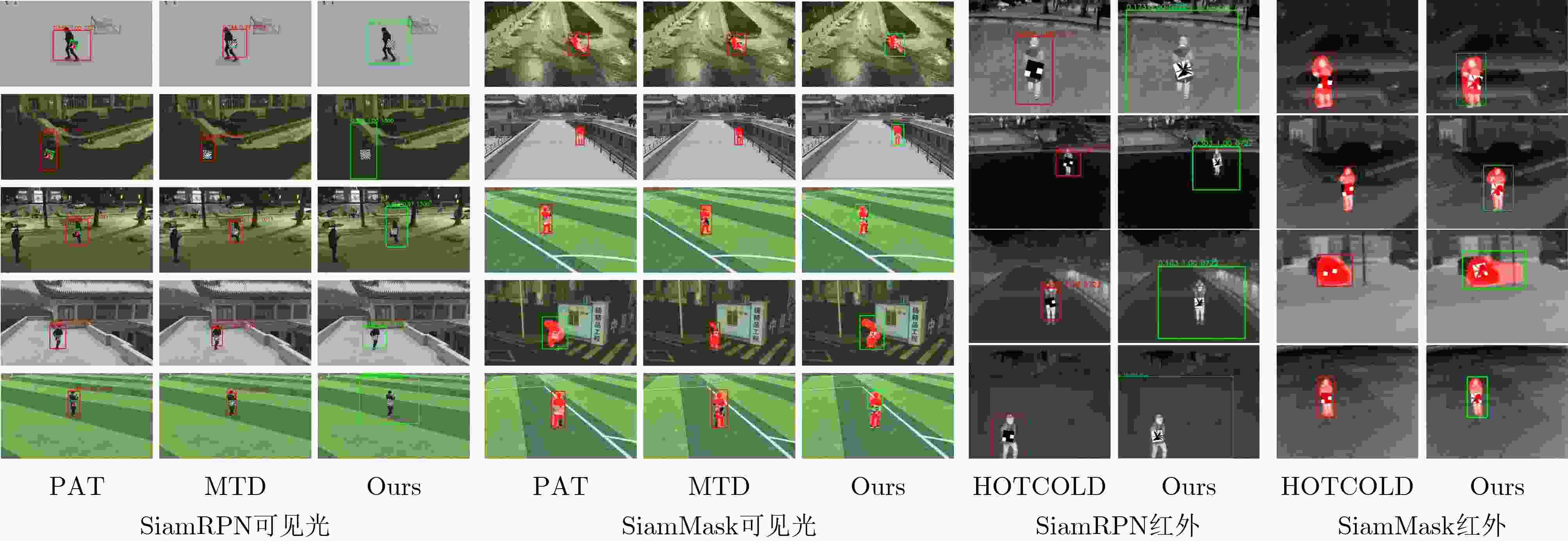

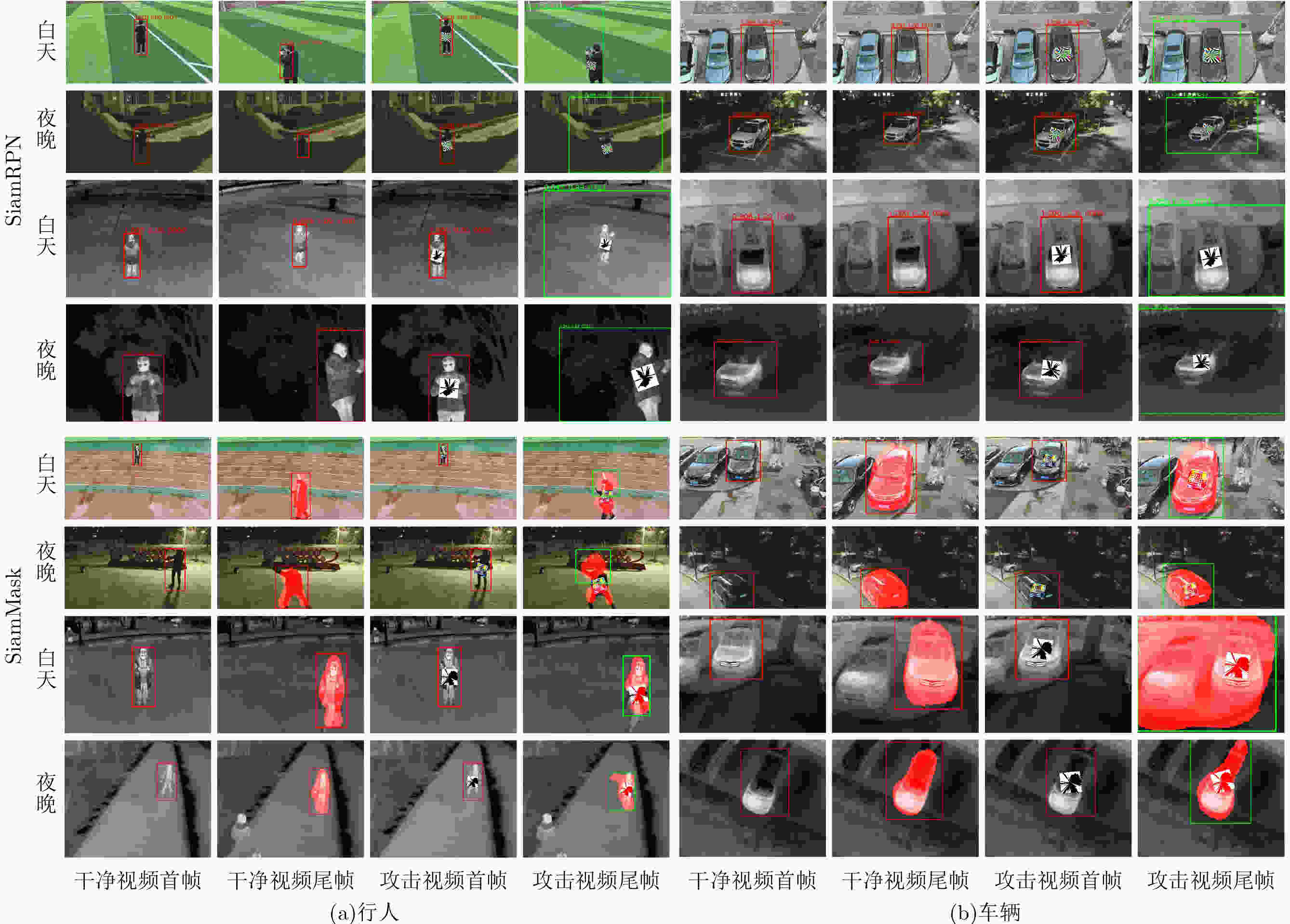

Objective: Current research on adversarial example generation for trackers focuses primarily on the visible spectral band, leaving multi-spectral conditions, and the infrared spectrum in particular, largely unaddressed. To close this gap, this study proposes a patch-based adversarial example generation framework for multi-spectral object tracking. The framework combines an adversarial texture generation module with an adversarial shape optimization strategy: the former disrupts the tracking model’s interpretation of target textures in the visible spectrum, and the latter impairs the extraction of thermal salient features in the infrared spectrum. Tailored loss functions (mis-regression loss, mask interference loss, and maximum feature discrepancy loss) guide the generation of adversarial patches, causing tracking prediction boxes to expand or drift off the target and weakening the correlation between template and search frames in the feature space. Research on adversarial example generation also supports the development of robust object tracking models that resist interference in practical scenarios.

Methods: The proposed framework integrates two key components. First, a Generative Adversarial Network (GAN) synthesizes texture-rich patches that interfere with the tracker’s semantic understanding of target appearance; this module uses upsampling layers to generate adversarial textures that disrupt the tracker’s ability to recognize and localize targets in the visible spectrum. Second, a deformable patch algorithm adjusts the patch’s geometric shape: by optimizing the lengths of radial vectors, it generates adversarial shapes that interfere with the tracker’s extraction of thermal salient features, which are critical for infrared object tracking. Tailored loss functions are designed for different trackers. Mis-regression loss guides attacks on region-proposal-based trackers (e.g., SiamRPN) by misleading their regression branches, and mask interference loss guides attacks on mask-guided trackers (e.g., SiamMask) by degrading their mask prediction accuracy. Maximum feature discrepancy loss reduces the correlation between template and search features in deep representation space, further weakening the tracker’s ability to match and track targets. The adversarial patches are generated through iterative optimization of these losses, which supports attack effectiveness across spectral bands.

Results and Discussions: Experimental results validate the method’s effectiveness. In the visible spectrum, the proposed framework achieves attack success rates of 81.57% (daytime) and 81.48% (night) against SiamRPN, outperforming the state-of-the-art methods PAT and MTD (Table 1). For SiamMask, success rates reach 53.65% (day) and 52.77% (night), demonstrating robust performance across different tracking architectures (Fig. 3). In the infrared spectrum, the method attains attack success rates of 71.43% (day) and 81.08% (night) against SiamRPN, exceeding the HOTCOLD method by more than 30 percentage points (Table 2). For SiamMask, the success rates reach 65.95% (day) and 65.85% (night), highlighting the effectiveness of the adversarial shape optimization strategy in disrupting thermal salient features. Multi-scene robustness is further demonstrated through qualitative results (Fig. 4), which show consistent attack performance across diverse environments, including roads, grasslands, and playgrounds under varying illumination conditions. Ablation studies confirm the necessity of each loss component. Combining mis-regression loss with maximum feature discrepancy loss raises the SiamRPN attack success rate to 75.95%, and combining mask interference loss with maximum feature discrepancy loss raises the SiamMask attack success rate to 65.91% (Table 3).
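
The maximum feature discrepancy loss is described here only as reducing the correlation between template and search features. The following is a minimal sketch of that idea, not the paper's formulation: it assumes the correlation is measured by cosine similarity between flattened feature vectors, so that driving the value down pushes the two representations apart. The function names `cosine_similarity` and `feature_discrepancy_loss` are hypothetical.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two flattened feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        # Convention chosen for this sketch: all-zero features count as uncorrelated.
        return 0.0
    return dot / (norm_a * norm_b)


def feature_discrepancy_loss(template_feat: Sequence[float],
                             search_feat: Sequence[float]) -> float:
    """Loss that shrinks as template and search features decorrelate.

    ASSUMPTION: cosine similarity stands in for the correlation measure;
    the source text does not state the exact form of the loss.
    """
    return cosine_similarity(template_feat, search_feat)


if __name__ == "__main__":
    template = [1.0, 2.0, 3.0]
    print(feature_discrepancy_loss(template, template))          # aligned features
    print(feature_discrepancy_loss(template, [3.0, 0.0, -1.0]))  # orthogonal features
```

Under this assumption, an attack step would update the patch so that the returned value decreases; in a real tracker the two vectors would come from the backbone's template and search branches rather than from hand-written lists.
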
Qualitative and quantitative experiments demonstrate that the adversarial examples proposed in this study effectively increase attack success rates against trackers in multi-spectral environments. These results highlight the framework’s ability to generate effective adversarial patches across both visible and infrared spectra, offering a comprehensive solution for multi-spectral object tracking security.

Conclusions: This study addresses the gap in multi-spectral adversarial attacks on object trackers by proposing a patch-based adversarial example generation framework. The method integrates a texture generation module for visible-spectrum attacks and a shape optimization strategy for thermal infrared interference, disrupting trackers’ reliance on texture semantics and thermal salient features. By designing task-specific loss functions, including mis-regression loss, mask interference loss, and maximum feature discrepancy loss, the framework enables targeted attacks on both region-proposal-based and mask-guided trackers. Experimental results demonstrate the adversarial patches’ attack effectiveness in both spectral bands and their environmental robustness, causing trackers to deviate from targets or produce excessively enlarged bounding boxes. This work advances multi-spectral adversarial attacks in object tracking and provides insights into improving model robustness against real-world perturbations. Future research will explore dynamic patch generation and extend the framework to emerging transformer-based trackers.
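
The shape optimization strategy summarized above works by adjusting the lengths of radial vectors. A minimal sketch of how such lengths could define a patch outline and a binary mask follows. It is an illustration under stated assumptions, not the paper's algorithm: the rays are assumed to be evenly spaced around a fixed center, neighbouring ray tips are joined by straight edges, and all function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def radial_polygon(center: Point, lengths: Sequence[float]) -> List[Point]:
    """Place one vertex on each of len(lengths) evenly spaced rays from center."""
    n = len(lengths)
    if n < 3:
        raise ValueError("need at least three radial vectors to form a polygon")
    cx, cy = center
    return [
        (cx + r * math.cos(2.0 * math.pi * k / n),
         cy + r * math.sin(2.0 * math.pi * k / n))
        for k, r in enumerate(lengths)
    ]


def polygon_area(vertices: Sequence[Point]) -> float:
    """Shoelace formula; vertices must be listed in order around the shape."""
    total = 0.0
    n = len(vertices)
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]
        total += x0 * y1 - x1 * y0
    return abs(total) / 2.0


def inside(point: Point, vertices: Sequence[Point]) -> bool:
    """Ray-casting point-in-polygon test."""
    px, py = point
    hit = False
    n = len(vertices)
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]
        if (y0 > py) != (y1 > py):  # edge straddles the horizontal line through the point
            x_cross = x0 + (py - y0) * (x1 - x0) / (y1 - y0)
            if px < x_cross:
                hit = not hit
    return hit


def patch_mask(center: Point, lengths: Sequence[float],
               height: int, width: int) -> List[List[int]]:
    """Binary mask whose 1-entries are the pixel centers inside the radial polygon."""
    vertices = radial_polygon(center, lengths)
    return [
        [1 if inside((x + 0.5, y + 0.5), vertices) else 0 for x in range(width)]
        for y in range(height)
    ]


if __name__ == "__main__":
    for row in patch_mask((4.0, 4.0), [2.5, 2.5, 2.5, 2.5], 8, 8):
        print("".join("#" if v else "." for v in row))
```

Lengthening or shortening a single entry of `lengths` stretches or pinches the outline along that ray, which is the degree of freedom an optimizer would adjust. A gradient-based attack would additionally need a differentiable rasterization, which this sketch does not attempt.
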

Key words:

- Adversarial example generation

- Tracker attacks

- Multi-spectral

- Adversarial patch

- Object tracking


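Table 1 Quantitative comparison of adversarial example attacks on SiamRPN/SiamMask in the visible spectrum

| Tracker | Scene | Tracking result | Clean video | PAT | PAT attack success rate (%) | MTD | MTD attack success rate (%) | Ours | Ours attack success rate (%) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| SiamRPN | Day | Success | 38 | 22 | 42.11 | 21 | 44.71 | 7 | 81.57 |
| SiamRPN | Day | Failure | 12 | 28 | | 29 | | 43 | |
| SiamRPN | Night | Success | 27 | 15 | 44.44 | 11 | 59.25 | 5 | 81.48 |
| SiamRPN | Night | Failure | 23 | 35 | | 39 | | 45 | |
| SiamMask | Day | Success | 41 | 27 | 34.15 | 36 | 12.19 | 19 | 53.65 |
| SiamMask | Day | Failure | 9 | 23 | | 14 | | 31 | |
| SiamMask | Night | Success | 36 | 21 | 41.06 | 29 | 19.44 | 17 | 52.77 |
| SiamMask | Night | Failure | 14 | 29 | | 21 | | 33 | |

Table 2 Quantitative comparison of adversarial example attacks on SiamRPN/SiamMask in the infrared spectrum

| Tracker | Scene | Tracking result | Clean video | HOTCOLD | HOTCOLD attack success rate (%) | Ours | Ours attack success rate (%) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| SiamRPN | Day | Success | 42 | 32 | 23.81 | 12 | 71.43 |
| SiamRPN | Day | Failure | 8 | 18 | | 38 | |
| SiamRPN | Night | Success | 37 | 20 | 45.94 | 7 | 81.08 |
| SiamRPN | Night | Failure | 13 | 30 | | 43 | |
| SiamMask | Day | Success | 47 | 39 | 17.02 | 16 | 65.95 |
| SiamMask | Day | Failure | 3 | 11 | | 34 | |
| SiamMask | Night | Success | 41 | 21 | 48.78 | 14 | 65.85 |
| SiamMask | Night | Failure | 9 | 29 | | 36 | |

Table 3 Loss function ablation experiments

| Tracker | Loss function | Videos tracked successfully (clean) | Videos tracked successfully (adversarial) | Attack success rate (%) |
| --- | --- | --- | --- | --- |
| SiamRPN | Mis-regression loss | 79 | 25 | 68.35 |
| SiamRPN | Maximum feature discrepancy loss | 79 | 33 | 58.23 |
| SiamRPN | Mis-regression loss + maximum feature discrepancy loss | 79 | 19 | 75.95 |
| SiamMask | Mask loss | 88 | 37 | 57.95 |
| SiamMask | Maximum feature discrepancy loss | 88 | 52 | 40.91 |
| SiamMask | Mask loss + maximum feature discrepancy loss | 88 | 30 | 65.91 |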
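
The attack success rates in the ablation table (Table 3) are consistent with the following reading of the metric: the share of videos tracked successfully on clean input that are no longer tracked successfully once the adversarial patch is applied. This is inferred from the tabulated counts rather than quoted from a definition in the text, and the function name `attack_success_rate` is hypothetical.

```python
def attack_success_rate(clean_successes: int, attacked_successes: int) -> float:
    """Percentage of cleanly tracked videos that the attack turns into failures.

    ASSUMPTION: rate = (clean successes - successes under attack) / clean successes,
    a formula inferred from the counts in Table 3.
    """
    if clean_successes <= 0:
        raise ValueError("clean_successes must be positive")
    if not 0 <= attacked_successes <= clean_successes:
        raise ValueError("attacked_successes must lie between 0 and clean_successes")
    return 100.0 * (clean_successes - attacked_successes) / clean_successes


if __name__ == "__main__":
    # SiamRPN rows of Table 3: 79 videos tracked successfully on clean input.
    for label, remaining in [("mis-regression", 25),
                             ("max feature discrepancy", 33),
                             ("combined", 19)]:
        print(f"{label}: {attack_success_rate(79, remaining):.2f}%")
```

For example, with 79 clean successes and 19 remaining successes under the combined loss, the function returns about 75.95, matching the SiamRPN row for the combined losses.
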

