Siamese Network-assisted Multi-domain Feature Fusion for Radar Active Jamming Recognition Method


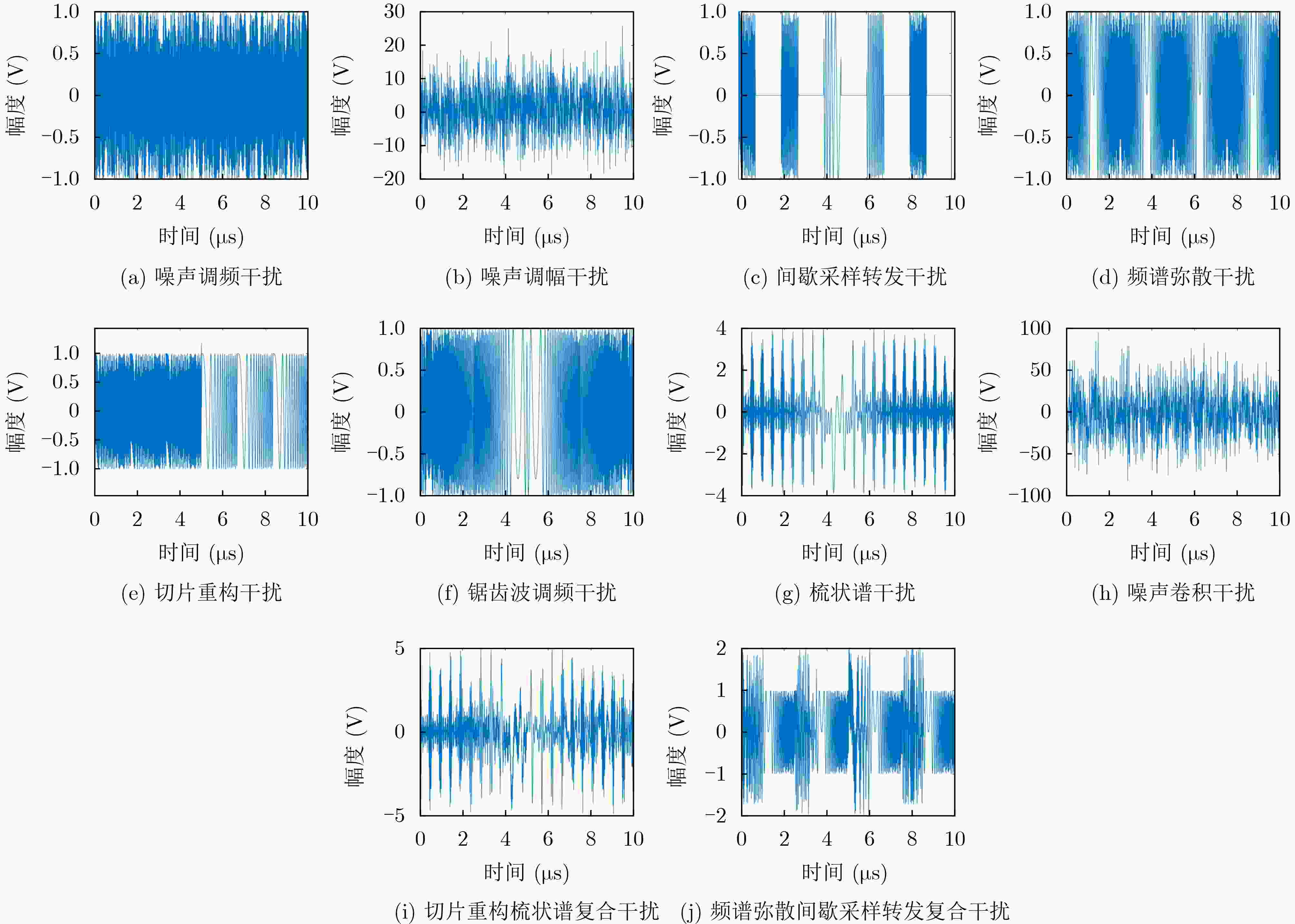

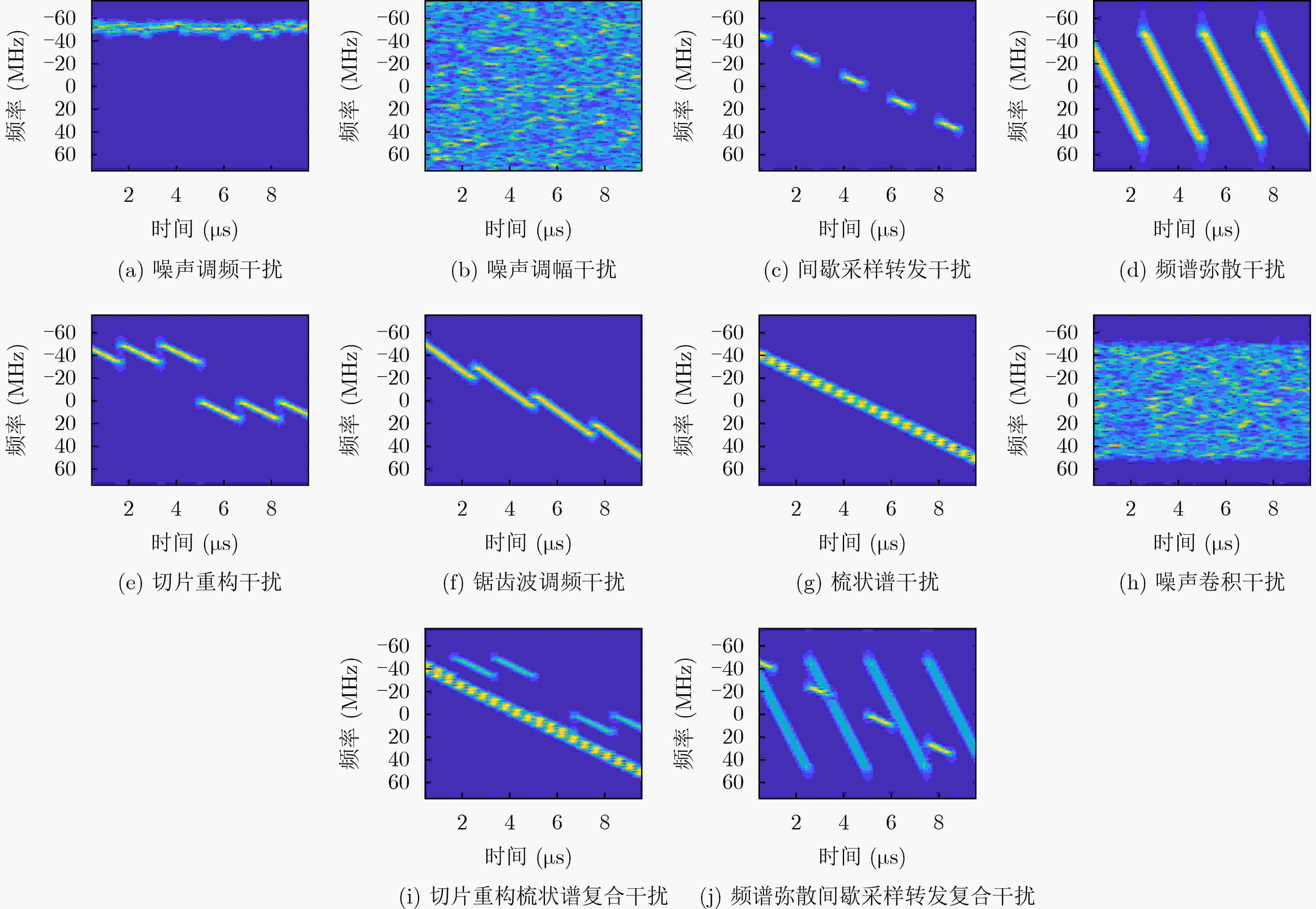

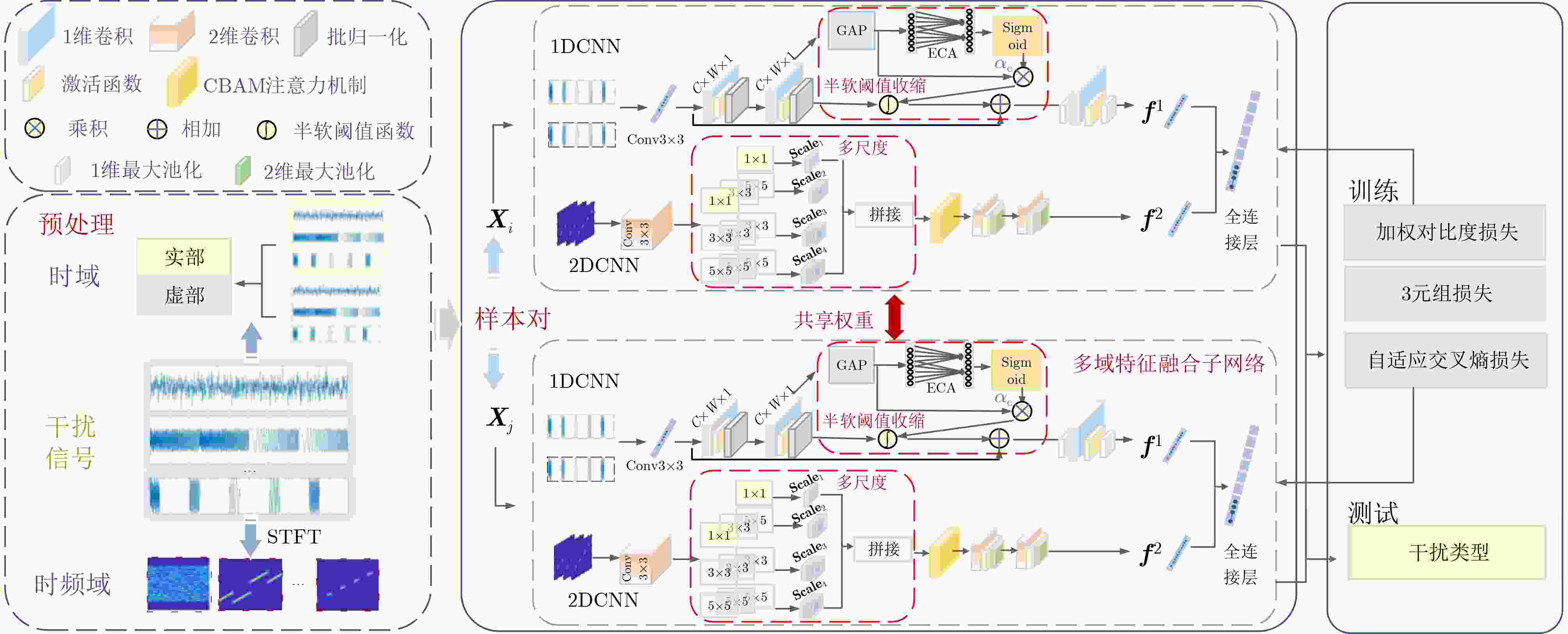

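Abstract: Existing radar active jamming recognition methods have low recognition accuracy at low Jamming-to-Noise Ratio (JNR), and their training samples are difficult to obtain efficiently. To address these problems, this paper proposes a radar active jamming recognition method based on Siamese network-assisted multi-domain feature fusion. First, to extract jamming features effectively at low JNR, a multi-domain feature fusion subnetwork is constructed. Specifically, a semi-soft thresholding shrinkage module is proposed by combining a semi-soft threshold function with an attention mechanism. This module extracts time-domain features effectively and avoids the shortcomings of manually selected thresholds. A multi-scale convolution module and an attention module are also introduced to strengthen time-frequency feature extraction. Then, to reduce the recognition model's dependence on samples, a weight-sharing Siamese network is designed. By comparing the similarity between samples, it increases the number of training iterations and thus alleviates the shortage of samples. Finally, an improved weighted contrastive loss, an adaptive cross-entropy loss, and a triplet loss are combined to achieve intra-class compactness and inter-class separation of jamming features. Experimental results show that at a JNR of –6 dB with 20 training samples per jamming type, the recognition accuracy for 10 typical types of active jamming reaches 96.88%.

Abstract: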

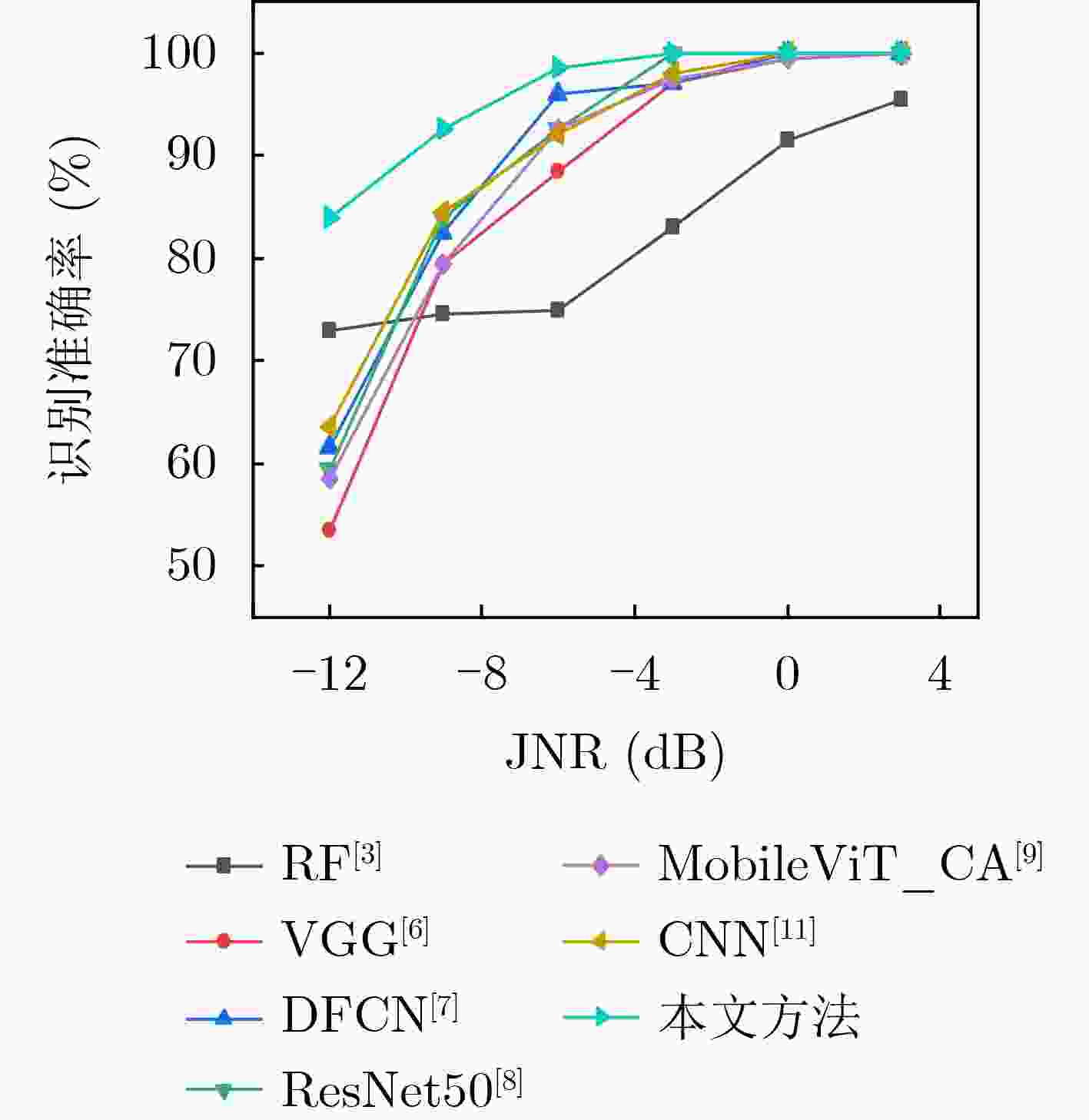

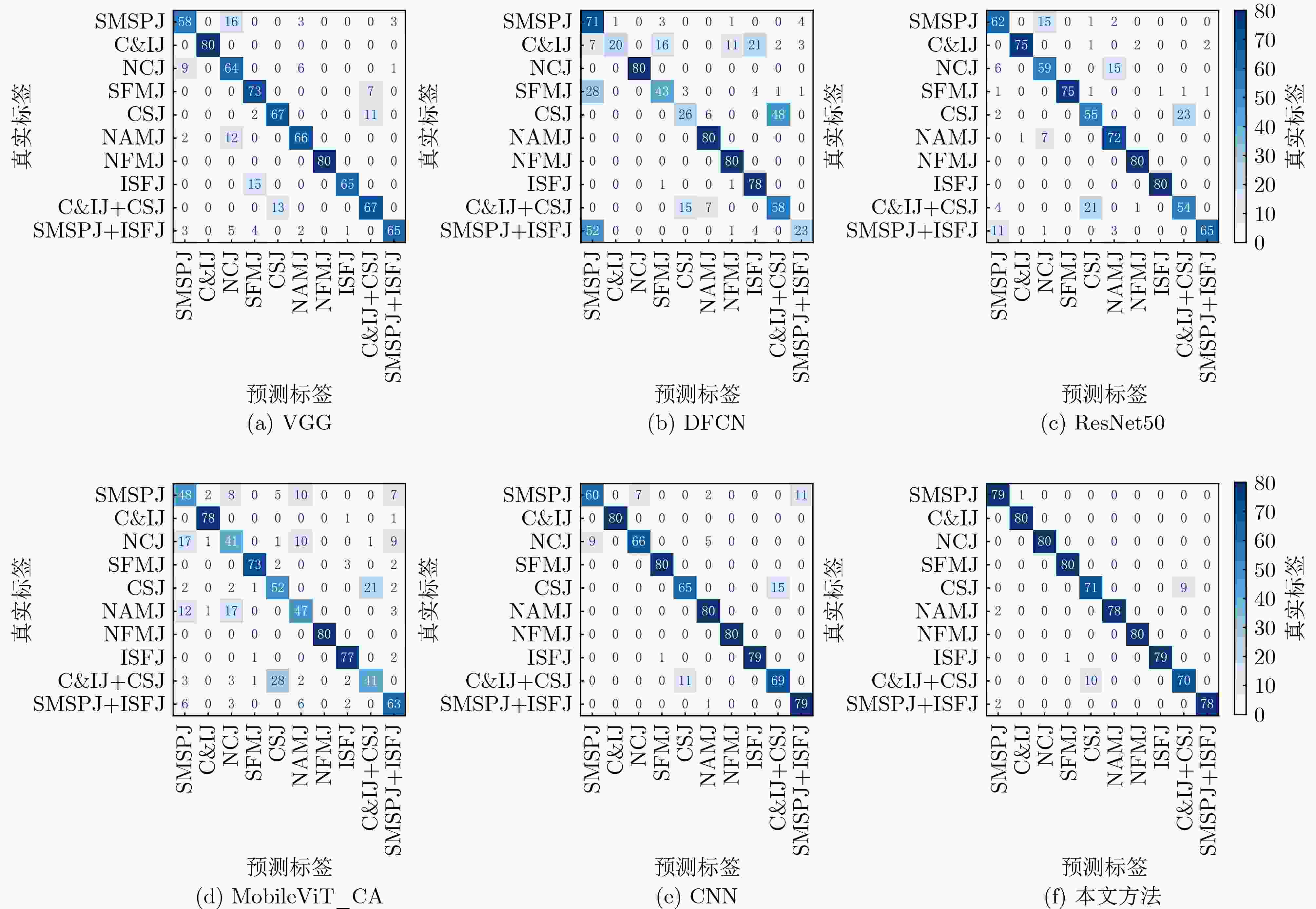

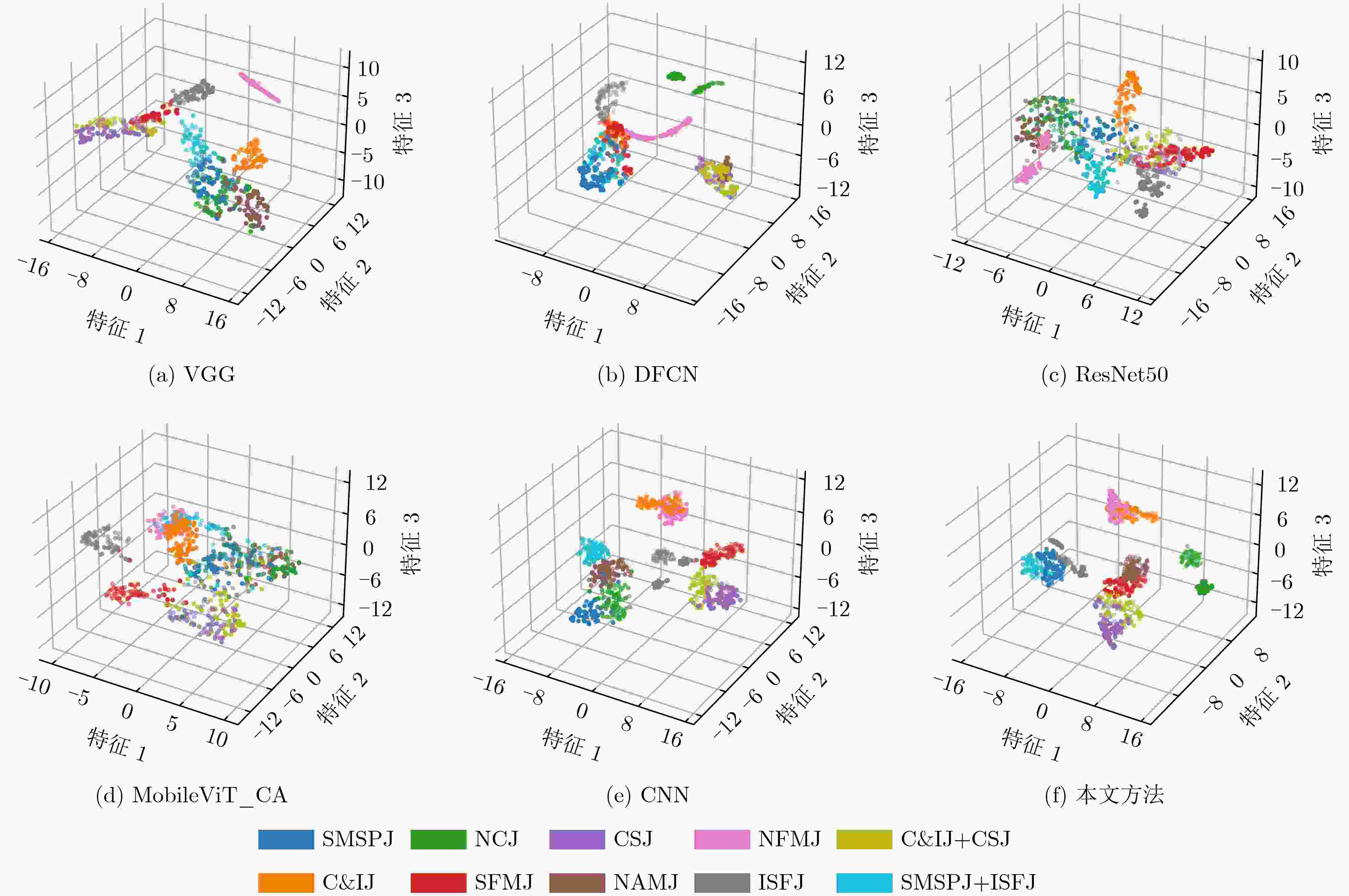

Objective

The rapid development of electronic warfare technology has created complex scenarios in which active jamming poses considerable challenges to radar systems. On modern battlefields the electromagnetic environment is highly congested, and diverse active jamming signals frequently disrupt radar operation. Although existing recognition algorithms can identify certain types of radar active jamming, their performance declines under low Jamming-to-Noise Ratio (JNR) conditions or when training data are scarce: low JNR makes jamming signals harder to detect with conventional methods, and a limited sample size further constrains recognition accuracy. Neural network-based methods have emerged as viable alternatives for addressing these challenges. This study proposes a radar active jamming recognition approach based on Siamese network-assisted multi-domain feature fusion, which improves recognition under low-JNR and small-sample conditions. The method offers an intelligent framework for jamming recognition in complex environments and provides theoretical support for battlefield awareness and the design of effective counter-jamming strategies.

Methods

The proposed method comprises a multi-domain feature fusion subnetwork, a Siamese architecture, and a joint loss design. To extract jamming features effectively under low JNR, a multi-domain feature fusion subnetwork is developed. Specifically, a semi-soft thresholding shrinkage module is proposed that integrates a semi-soft threshold function with an attention mechanism; the module extracts time-domain features efficiently and avoids the drawbacks of manually selected thresholds. To strengthen time-frequency feature extraction, a multi-scale convolution module and an additional attention mechanism are incorporated. To reduce the model's dependence on large training datasets, a weight-sharing Siamese network is constructed.
By comparing the similarity between sample pairs, this network increases the number of training iterations and thereby mitigates the limitations imposed by small sample sizes. Finally, three loss functions are applied jointly: an improved weighted contrastive loss, an adaptive cross-entropy loss, and a triplet loss. This joint strategy promotes intra-class compactness and inter-class separability of the jamming features.

Results and Discussions

When training samples are limited (Table 6), the proposed method achieves an accuracy of 96.88% at a JNR of –6 dB with only 20 training samples per jamming type, indicating its effectiveness under data-scarce conditions. When the sample size is reduced further, to only 15 training samples per jamming type, the recognition performance of the other methods declines substantially, whereas the proposed method maintains higher recognition accuracy, demonstrating better stability and robustness under low-JNR and limited-sample conditions. This advantage is attributable to three factors:
(1) Multi-domain feature fusion integrates jamming features from multiple domains, preventing the loss of discriminative information commonly observed under low JNR.
(2) The weight-sharing Siamese network increases the number of effective training iterations by evaluating sample similarities, thereby mitigating the limitations of small datasets.
(3) The combined use of the improved weighted contrastive loss, the adaptive cross-entropy loss, and the triplet loss promotes intra-class compactness and inter-class separability of jamming features, enhancing the model's generalization capability.

Conclusions

This study proposes a radar active jamming recognition method that performs effectively under low-JNR and limited-training-sample conditions.
A multi-domain feature fusion subnetwork is developed to extract representative features from both the time domain and the time-frequency domain, giving a more comprehensive and discriminative characterization of jamming signals. A weight-sharing Siamese network is then introduced to reduce reliance on large training datasets, using sample similarity comparisons to expand the number of training iterations. In addition, three loss functions (an improved weighted contrastive loss, an adaptive cross-entropy loss, and a triplet loss) are applied jointly to promote intra-class compactness and inter-class separability. Experimental results validate the effectiveness of the proposed method: at a low JNR of –6 dB with only 20 training samples per jamming type, it achieves a recognition accuracy of 96.88%, demonstrating robustness and adaptability in challenging electromagnetic environments. These findings provide technical support for the development of anti-jamming strategies and for improving the operational reliability of radar systems in complex battlefield scenarios.
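The abstract names the three building blocks of the method but not their formulas. As a rough illustration only, the Python sketch below implements textbook versions of them under explicit assumptions. The semi-soft (firm) threshold function uses two fixed thresholds t1 < t2 and is shown next to the soft threshold used in the residual shrinkage network [14]; in the proposed module the thresholds are produced by an attention mechanism, which is not reproduced here. The losses are a margin-based contrastive loss with hypothetical per-pair weights `w_pos`/`w_neg`, a standard triplet loss, and a plain cross-entropy, standing in for the improved weighted contrastive loss and adaptive cross-entropy loss whose exact definitions are not given in this section. `count_pairs` counts the sample pairs a Siamese network can compare. All function names, margins, and the equal loss weights are illustrative assumptions, not the authors' implementation.

```python
import math


def soft_threshold(x: float, t: float) -> float:
    """Soft thresholding: shrink every coefficient toward zero by t."""
    return math.copysign(max(abs(x) - t, 0.0), x)


def semi_soft_threshold(x: float, t1: float, t2: float) -> float:
    """Classic semi-soft (firm) thresholding with thresholds 0 < t1 < t2.

    |x| <= t1       -> 0 (treated as noise)
    t1 < |x| <= t2  -> shrunk linearly, continuous at both thresholds
    |x| > t2        -> passed through unchanged (no bias on strong components)
    """
    if not 0.0 < t1 < t2:
        raise ValueError("require 0 < t1 < t2")
    a = abs(x)
    if a <= t1:
        return 0.0
    if a <= t2:
        return math.copysign(t2 * (a - t1) / (t2 - t1), x)
    return x


def euclidean(u, v) -> float:
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def contrastive_loss(d: float, same_class: bool, margin: float = 1.0,
                     w_pos: float = 1.0, w_neg: float = 1.0) -> float:
    """Margin-based contrastive loss for one pair at embedding distance d.

    w_pos / w_neg are hypothetical weights standing in for the paper's
    'improved weighted' variant, whose definition is not given here.
    """
    if same_class:
        return w_pos * d ** 2                      # pull same-class pairs together
    return w_neg * max(margin - d, 0.0) ** 2       # penalize different classes closer than the margin


def triplet_loss(anchor, positive, negative, margin: float = 0.5) -> float:
    """Hinge on d(anchor, positive) - d(anchor, negative) + margin."""
    return max(euclidean(anchor, positive) - euclidean(anchor, negative) + margin, 0.0)


def cross_entropy(probs, label: int) -> float:
    """Plain cross-entropy (a stand-in for the paper's adaptive version)."""
    return -math.log(probs[label])


def joint_loss(l_con: float, l_ce: float, l_tri: float,
               weights=(1.0, 1.0, 1.0)) -> float:
    """Weighted sum of the three terms; equal weights are an assumption."""
    return weights[0] * l_con + weights[1] * l_ce + weights[2] * l_tri


def count_pairs(n_classes: int, n_per_class: int):
    """Return (all pairs, same-class pairs, different-class pairs)."""
    n = n_classes * n_per_class
    total = n * (n - 1) // 2
    same = n_classes * n_per_class * (n_per_class - 1) // 2
    return total, same, total - same


if __name__ == "__main__":
    print(semi_soft_threshold(1.5, 1.0, 2.0))  # 1.0: shrunk between the thresholds
    print(soft_threshold(3.0, 1.0))            # 2.0: soft thresholding biases large values
    print(semi_soft_threshold(3.0, 1.0, 2.0))  # 3.0: semi-soft keeps them intact
    print(count_pairs(10, 20))                 # (19900, 1900, 18000)
```

For 10 jamming types with 20 training samples each (200 samples), `count_pairs(10, 20)` gives 19,900 distinct pairs, of which 1,900 are same-class and 18,000 cross-class; this is one way to read the statement that similarity comparisons expand the number of training iterations. Firm thresholding is continuous at both t1 and t2 and leaves strong components unbiased, which is the usual argument for preferring it over soft thresholding.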

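Table 1 Parameter settings of jamming signals

| Jamming type | Parameter | Value range |
|---|---|---|
| SMSPJ | Number of replicas | 2–6 |
| C&IJ | Number of sub-pulses | 2–4 |
| | Number of time slots | 2 |
| NCJ | Gaussian white noise | Mean 0, variance 1 |
| SFMJ | Modulation coefficient | 5×10⁶ |
| | Period | 2.5 μs |
| | Number of segments | 4 |
| CSJ | Number of comb spectra | 4–8 |
| NAMJ | Gaussian white noise | Mean 0, variance 1 |
| | Amplitude | 1 |
| NFMJ | Gaussian white noise | Mean 0, variance 1 |
| | Modulation coefficient | 2×10⁸ |
| ISFJ | Number of samplings | 3–5 |
| | Number of forwardings | 1 |
| | Duty cycle | 0.4–0.6 |
| C&IJ+CSJ | Determined by the parameters of the constituent single jamming types | |
| SMSPJ+ISFJ | Determined by the parameters of the constituent single jamming types | |

Table 2 Jamming recognition results under different JNRs (%)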

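| Method | –12 dB | –9 dB | –6 dB | –3 dB | 0 dB | 3 dB |
|---|---|---|---|---|---|---|
| 1DCNN | 51.00 | 61.50 | 70.22 | 80.11 | 85.44 | 89.23 |
| 2DCNN | 61.50 | 84.00 | 92.00 | 99.50 | 100.00 | 100.00 |
| Multi-domain feature fusion subnetwork (soft thresholding shrinkage [14]) | 52.00 | 81.00 | 91.50 | 97.00 | 99.50 | 99.00 |
| Multi-domain feature fusion subnetwork (semi-soft thresholding shrinkage) | 85.50 | 93.00 | 99.00 | 100.00 | 100.00 | 100.00 |
| Siamese time-domain network | 74.88 | 77.56 | 81.11 | 85.24 | 90.33 | 92.33 |
| Siamese time-frequency domain network | 80.33 | 84.33 | 93.55 | 99.85 | 100.00 | 100.00 |
| Proposed method | 87.59 | 93.50 | 100.00 | 100.00 | 100.00 | 100.00 |

Table 3 Ablation experiments (%)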

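| Model | Semi-soft thresholding shrinkage module | Multi-scale convolution block | CBAM | –12 dB | –9 dB | –6 dB | –3 dB | 0 dB | 3 dB |
|---|---|---|---|---|---|---|---|---|---|
| Model 1 | √ | √ | × | 85.50 | 93.50 | 98.00 | 99.50 | 100.00 | 100.00 |
| Model 2 | √ | × | √ | 87.50 | 93.00 | 99.00 | 99.00 | 100.00 | 100.00 |
| Model 3 | × | √ | √ | 86.00 | 93.00 | 99.00 | 100.00 | 100.00 | 100.00 |
| Proposed method | √ | √ | √ | 87.59 | 93.50 | 100.00 | 100.00 | 100.00 | 100.00 |

Table 4 Recognition results of different recognition methods under different JNRs (%)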

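| Method | –12 dB | –9 dB | –6 dB | –3 dB | 0 dB | 3 dB |
|---|---|---|---|---|---|---|
| RF[3] | 73.00 | 74.50 | 75.00 | 83.00 | 91.50 | 95.50 |
| VGG[6] | 53.50 | 79.50 | 88.50 | 97.00 | 99.50 | 100.00 |
| DFCN[7] | 61.50 | 82.50 | 96.00 | 97.00 | 100.00 | 100.00 |
| ResNet50[8] | 59.00 | 84.00 | 92.50 | 100.00 | 100.00 | 100.00 |
| MobileViT_CA[9] | 58.50 | 79.50 | 92.50 | 97.50 | 99.50 | 100.00 |
| CNN[11] | 63.50 | 84.50 | 92.00 | 98.00 | 100.00 | 100.00 |
| Proposed method | 87.50 | 93.50 | 100.00 | 100.00 | 100.00 | 100.00 |

Table 5 Recognition results for each jamming type under different methods (%)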

| Jamming type | RF[3] | VGG[6] | DFCN[7] | ResNet50[8] | MobileViT_CA[9] | CNN[11] | Proposed method |
|---|---|---|---|---|---|---|---|
| SMSPJ | 54.16 | 75.83 | 90.00 | 80.83 | 79.10 | 84.17 | 91.67 |
| C&IJ | 50.00 | 95.00 | 90.83 | 95.00 | 97.50 | 96.67 | 98.33 |
| NCJ | 100.00 | 80.83 | 95.83 | 75.83 | 83.33 | 85.83 | 100.00 |
| SFMJ | 67.50 | 94.17 | 89.17 | 95.83 | 92.50 | 95.83 | 98.33 |
| CSJ | 75.00 | 78.33 | 76.67 | 88.33 | 85.83 | 80.00 | 95.83 |
| NAMJ | 99.17 | 75.83 | 97.50 | 90.00 | 77.50 | 81.67 | 100.00 |
| NFMJ | 100.00 | 99.17 | 100.00 | 100.00 | 100.00 | 98.33 | 100.00 |
| ISFJ | 95.00 | 91.67 | 88.33 | 94.17 | 95.83 | 96.67 | 96.67 |
| C&IJ+CSJ | 88.33 | 85.00 | 82.50 | 82.50 | 80.83 | 85.00 | 93.33 |
| SMSPJ+ISFJ | 91.67 | 87.50 | 84.17 | 90.83 | 86.67 | 92.50 | 94.17 |
| Accuracy | 82.08 | 86.33 | 89.50 | 89.33 | 87.92 | 89.67 | 96.83 |
| Computational load (GB) | – | 5.06 | 0.43 | 1.35 | 0.45 | 0.54 | 0.68 |
| Parameters (MB) | – | 65.10 | 0.99 | 23.53 | 5.07 | 6.79 | 0.26 |
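The Accuracy row of Table 5 can be cross-checked against the per-class rows: for the methods checked below it agrees, to two decimals, with the unweighted mean of the ten per-class recognition rates. That mean equals overall accuracy only when every jamming type has the same number of test samples, which is an assumption here because the test-set size is not stated in this section. A minimal Python check, with values copied from Table 5 (the helper name `mean_per_class_accuracy` is hypothetical):

```python
# Per-class recognition rates (%) copied from Table 5, in row order
# SMSPJ, C&IJ, NCJ, SFMJ, CSJ, NAMJ, NFMJ, ISFJ, C&IJ+CSJ, SMSPJ+ISFJ.
TABLE5 = {
    "RF[3]": [54.16, 50.00, 100.00, 67.50, 75.00, 99.17, 100.00, 95.00, 88.33, 91.67],
    "VGG[6]": [75.83, 95.00, 80.83, 94.17, 78.33, 75.83, 99.17, 91.67, 85.00, 87.50],
    "Proposed": [91.67, 98.33, 100.00, 98.33, 95.83, 100.00, 100.00, 96.67, 93.33, 94.17],
}


def mean_per_class_accuracy(rates):
    """Unweighted mean of per-class rates (overall accuracy if classes are balanced)."""
    return sum(rates) / len(rates)


for method, rates in TABLE5.items():
    # Prints RF[3]: 82.08, VGG[6]: 86.33, Proposed: 96.83, matching the Accuracy row.
    print(f"{method}: {mean_per_class_accuracy(rates):.2f}")
```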

[1] YAN Junkun, LIU Hongwei, JIU Bo, et al. Joint detection and tracking processing algorithm for target tracking in multiple radar system[J]. IEEE Sensors Journal, 2015, 15(11): 6534–6541. doi: 10.1109/JSEN.2015.2461435.
[2] LI Xinrui, CHEN Baixiao, CHEN Xiaoying, et al. An efficient hybrid jamming suppression method for multichannel synthetic aperture radar based on group iterative separation[J]. IEEE Transactions on Geoscience and Remote Sensing, 2024, 62: 5214316. doi: 10.1109/TGRS.2024.3409713.
[3] ZHU Cunhai. Research on radar active jamming signal disturbance classification base on feature extraction[D]. [Master dissertation], Xidian University, 2017. doi: 10.7666/d.D01385660. (in Chinese)
[4] HAO Wanbing, MA Ruofei, and HONG Wei. Radar active jamming identification based on time-frequency characteristic extraction[J]. Fire Control Radar Technology, 2017, 46(4): 11–15. doi: 10.3969/j.issn.1008-8652.2017.04.003. (in Chinese)
[5] WANG Yafeng, SUN Boye, and WANG Ning. Recognition of radar active-jamming through convolutional neural networks[J]. The Journal of Engineering, 2019, 2019(21): 7695–7697. doi: 10.1049/joe.2019.0659.
[6] LI Ming, REN Qinghua, and WU Jialong. Interference classification and identification of TDCS based on improved convolutional neural network[C]. 2020 Second International Conference on Artificial Intelligence Technologies and Application (ICAITA), Dalian, China, 2020: 012155. doi: 10.1088/1742-6596/1651/1/012155.
[7] SHAO Guangqing, CHEN Yushi, and WEI Yinsheng. Deep fusion for radar jamming signal classification based on CNN[J]. IEEE Access, 2020, 8: 117236–117244. doi: 10.1109/ACCESS.2020.3004188.
[8] WANG Jingyi, DONG Wenhao, and SONG Zhiyong. Radar active jamming recognition based on time-frequency image classification[C]. 2021 5th International Conference on Electronic Information Technology and Computer Engineering, Xiamen, China, 2021: 449–454. doi: 10.1145/3501409.3502153.
[9] ZOU Wenxu, XIE Kai, and LIN Jinjian. Light-weight deep learning method for active jamming recognition based on improved MobileViT[J]. IET Radar, Sonar & Navigation, 2023, 17(8): 1299–1311. doi: 10.1049/rsn2.12420.
[10] KOCH G, ZEMEL R, and SALAKHUTDINOV R. Siamese neural networks for one-shot image recognition[C]. The 32nd International Conference on Machine Learning, Lille, France, 2015: 1–8.
[11] WANG Pengyu, CHENG Yufan, DONG Binhong, et al. Convolutional neural network-based interference recognition[C]. 2020 IEEE 20th International Conference on Communication Technology, Nanning, China, 2020: 1296–1300. doi: 10.1109/ICCT50939.2020.9295942.
[12] ZHOU Hongping, WANG Lei, and GUO Zhongyi. Compound radar jamming recognition based on signal source separation[J]. Signal Processing, 2023, 214: 109246. doi: 10.1016/j.sigpro.2023.109246.
[13] YANG Jikai, BAI Zhiquan, HU Jiacheng, et al. Time-frequency analysis and convolutional neural network based Fuze jamming signal recognition[C]. The 25th International Conference on Advanced Communication Technology (ICACT), Pyeongchang, Korea, 2023: 277–282. doi: 10.23919/ICACT56868.2023.10079346.
[14] ZHAO Minghang, ZHONG Shisheng, FU Xuyun, et al. Deep residual shrinkage networks for fault diagnosis[J]. IEEE Transactions on Industrial Informatics, 2020, 16(7): 4681–4690. doi: 10.1109/TII.2019.2943898.
[15] LEI Sai, LU Mingming, LIN Jieqiong, et al. Remote sensing image denoising based on improved semi-soft threshold[J]. Signal, Image and Video Processing, 2021, 15(1): 73–81. doi: 10.1007/s11760-020-01722-3.
[16] HUANG Jiru, SHEN Qian, WANG Min, et al. Multiple attention siamese network for high-resolution image change detection[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5406216. doi: 10.1109/TGRS.2021.3127580.
