Multimodal Intent Recognition Method with View Reliability


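Summary: In chit-chat dialogue for human-computer interaction, accurately understanding users' multimodal intent helps machines provide intelligent and efficient chat services. Current multimodal intent recognition methods face challenges in cross-modal information interaction and model uncertainty. This paper proposes a Transformer-based trusted multimodal intent recognition method. To account for the heterogeneity of the text, video, and audio data through which users express intent, a modality-specific encoding module generates unimodal feature views. To capture complementarity and long-range dependencies across modalities, a cross-modal interaction module generates cross-modal feature views. To reduce model uncertainty, a multi-view trusted fusion module dynamically fuses subjective opinions according to the credibility of each view, and a combined optimization strategy based on the Dirichlet distribution of the subjective opinions is used for model training. Experiments are conducted on the multimodal intent recognition dataset MIntRec. The results show that, compared with the baseline models, the proposed method improves accuracy and recall by 1.73% and 1.1%, respectively. The method not only improves multimodal intent recognition but also measures the credibility of each view's prediction, which improves model interpretability.

Abstract: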

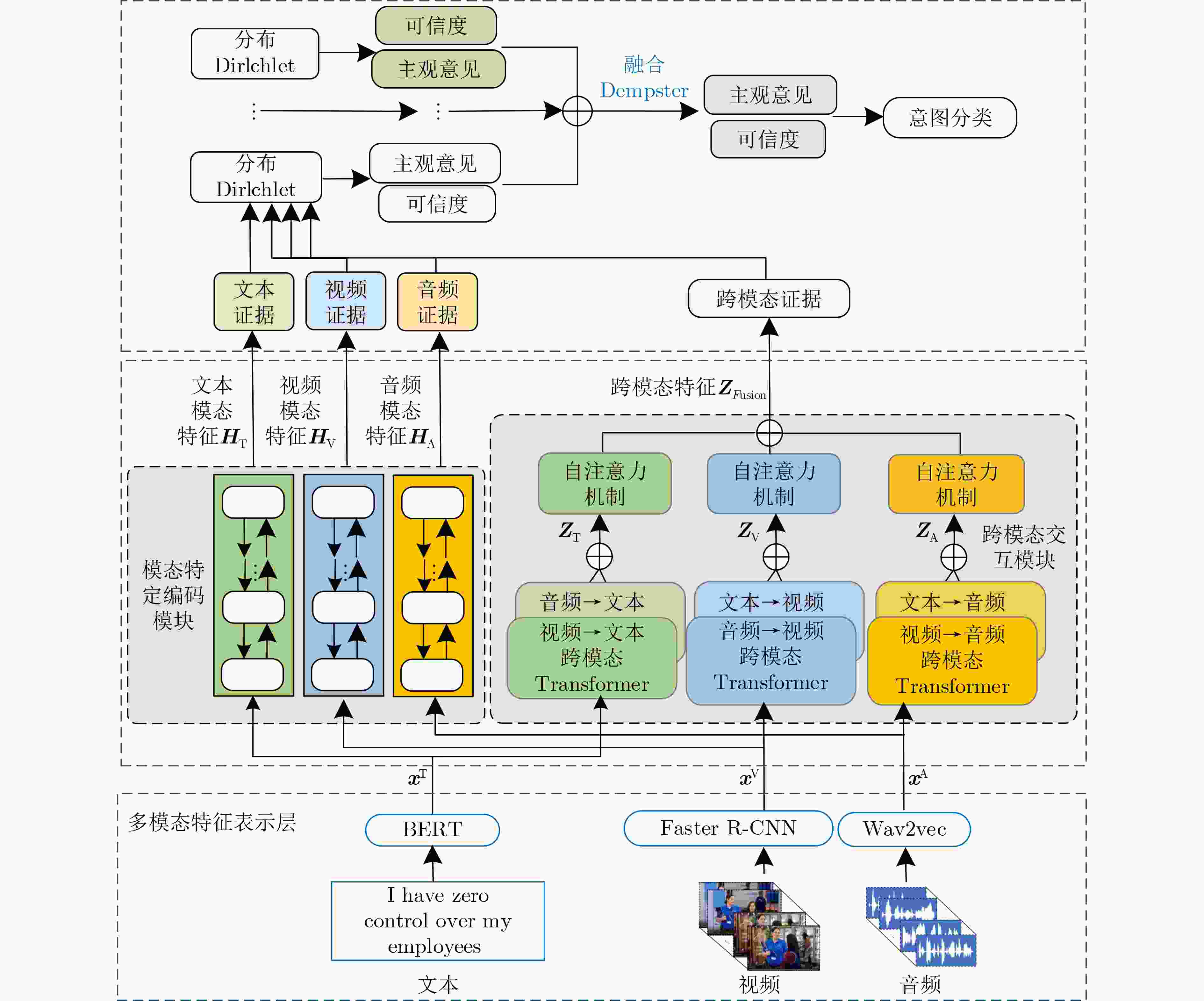

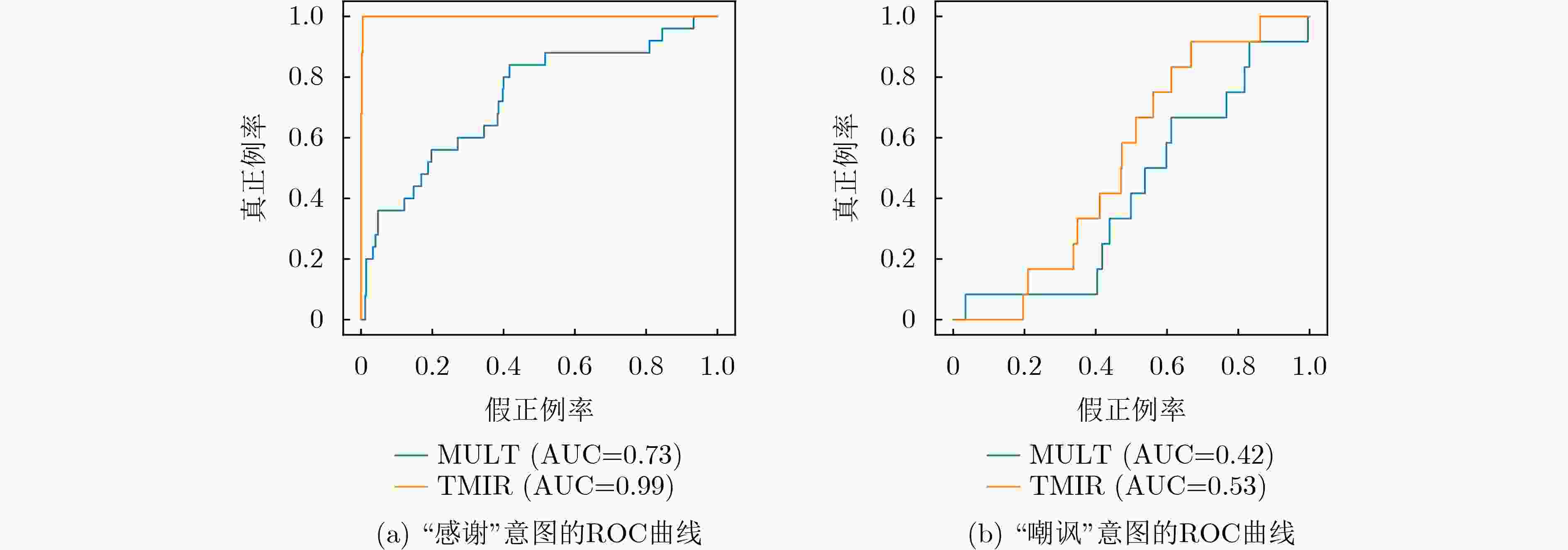

Objective: With the rapid advancement of human-computer interaction technologies, accurately recognizing users’ multimodal intentions in social chat dialogue systems has become essential. These systems must process both semantic and affective content to meet users’ informational and emotional needs. However, current approaches face two major challenges: ineffective cross-modal interaction and difficulty handling uncertainty. First, the heterogeneity of multimodal data limits the ability to leverage intermodal complementarity. Second, noise affects the reliability of each modality differently, and traditional methods often fail to account for these dynamic variations, leading to suboptimal fusion performance. To address these limitations, this study proposes a Trusted Multimodal Intent Recognition (TMIR) method. TMIR adaptively fuses multimodal information by assessing the credibility of each modality, thereby enhancing intent recognition accuracy and model interpretability. This approach supports intelligent and personalized services in open-domain conversational systems.

Methods: The TMIR method is developed to improve the accuracy and reliability of intent recognition in social chat dialogue systems. It consists of three core modules: a multimodal feature representation layer, a multi-view feature extraction layer, and a trusted fusion layer (Fig. 1). In the multimodal feature representation layer, BERT, Wav2Vec 2.0, and Faster R-CNN are used to extract features from text, audio, and video inputs, respectively. The multi-view feature extraction layer comprises a cross-modal interaction module and a modality-specific encoding module. The cross-modal interaction module applies cross-modal Transformers to generate cross-modal feature views, enabling the model to capture complementary information between modalities (e.g., text and audio). This enhances the expressiveness of the overall feature representation. The modality-specific encoding module employs Bi-LSTM to extract unimodal feature views, preserving the distinct characteristics of each modality. In the trusted fusion layer, features from each view are converted into evidence. Subjective opinions are formulated according to subjective logic theory and are fused dynamically using Dempster’s combination rules. This process yields the final intent recognition result and provides a measure of credibility. To optimize model training, a combinatorial strategy based on Dirichlet distribution expectation is applied, which reduces uncertainty and enhances recognition reliability.

Results and Discussions: The TMIR method is evaluated on the MIntRec dataset, achieving a 1.73% improvement in accuracy and a 1.1% increase in recall compared with the baseline (Table 2). Ablation studies confirm the contribution of each module: removing the cross-modal interaction and modality-specific encoding components results in a 3.82% drop in accuracy, highlighting their roles in capturing intermodal interactions and preserving unimodal features (Table 3). Excluding the multi-view trusted fusion module reduces accuracy by 1.12% and recall by 1.67%, demonstrating the effectiveness of credibility-based dynamic fusion in enhancing generalization (Table 3). Receiver Operating Characteristic (ROC) curve analysis (Fig. 2) shows that TMIR outperforms the MULT model in detecting both “thanks” and “taunt” intents, with higher Area Under the Curve (AUC) values. In terms of computational efficiency, TMIR maintains comparable FLOPs and parameter counts to existing multimodal models (Table 4), indicating its feasibility for real-world deployment. These results demonstrate that TMIR effectively balances performance and efficiency, offering a promising approach for robust multimodal intent recognition.

Conclusions: This study proposes a TMIR method. By addressing the heterogeneity and uncertainty of multimodal data (specifically text, audio, and video), the method incorporates a cross-modal interaction module, a modality-specific encoding module, and a multi-view trusted fusion module. These components collectively enhance the accuracy and interpretability of intent recognition. Experimental results demonstrate that TMIR outperforms the baseline in both accuracy and recall, and exhibits strong generalization in handling multimodal inputs. Future work will address class imbalance and the dynamic identification of emerging intent categories. The method also holds potential for broader application in domains such as healthcare and customer service, supporting its multi-domain scalability.
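The trusted fusion step described under Methods (evidence per view, subjective opinions, Dempster-style combination) can be illustrated with a minimal sketch. It assumes the reduced combination rule commonly used in trusted multi-view classification, where each view holds one belief mass per class plus a single uncertainty mass. The abstract does not give TMIR's exact equations, so the function names `evidence_to_opinion` and `combine` and the toy evidence values are illustrative only.

```python
from typing import List, Tuple

# An opinion is (belief mass per class, uncertainty mass); the masses sum to 1.
Opinion = Tuple[List[float], float]


def evidence_to_opinion(evidence: List[float]) -> Opinion:
    """Turn non-negative per-class evidence into a subjective opinion.

    Assumed mapping: Dirichlet parameters alpha_k = e_k + 1, strength
    S = sum(alpha), belief b_k = e_k / S, uncertainty u = K / S.
    """
    num_classes = len(evidence)
    alpha = [e + 1.0 for e in evidence]
    strength = sum(alpha)
    belief = [e / strength for e in evidence]
    uncertainty = num_classes / strength
    return belief, uncertainty


def combine(op1: Opinion, op2: Opinion) -> Opinion:
    """Fuse two opinions with the reduced Dempster's combination rule."""
    b1, u1 = op1
    b2, u2 = op2
    n = len(b1)
    # Conflict: belief mass that the two views assign to different classes.
    conflict = sum(b1[i] * b2[j] for i in range(n) for j in range(n) if i != j)
    scale = 1.0 / (1.0 - conflict)
    belief = [scale * (b1[i] * b2[i] + b1[i] * u2 + b2[i] * u1) for i in range(n)]
    uncertainty = scale * u1 * u2
    return belief, uncertainty


# Toy evidence for three intent classes from three hypothetical views.
text_view = evidence_to_opinion([9.0, 1.0, 0.0])   # confident view
audio_view = evidence_to_opinion([4.0, 1.0, 1.0])  # weaker but agreeing view
video_view = evidence_to_opinion([0.0, 0.0, 0.0])  # no evidence: u = 1

fused = combine(combine(text_view, audio_view), video_view)
print("fused belief:", [round(b, 4) for b in fused[0]])
print("fused uncertainty:", round(fused[1], 4))
```

Under this rule a view with no evidence (uncertainty 1) leaves the other opinion unchanged, while two views that agree produce a fused opinion with lower uncertainty than either alone. That is the sense in which the fusion weights each view by its credibility.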

Key words:

- Intent recognition
- Multimodal fusion
- Multi-view learning

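Table 1 Tunable parameter settings

| Parameter | Value |
| --- | --- |
| Vector dimension (text, video, audio) | 768, 256, 768 |
| Maximum sequence length (text, video, audio) | 48, 480, 230 |
| Learning rate | 10⁻⁵ |
| Batch size | 20 |
| CNN kernel size (text, video, audio) | 5, 10, 10 |
| CNN kernel channels (text, video, audio) | 120, 120, 120 |
| Transformer layers | 8 |
| BiLSTM hidden size (text, video, audio) | 60, 60, 60 |

Table 2 Results of the compared methods (%)

| Modality | Model | Acc | m-P | m-R | m-F1 |
| --- | --- | --- | --- | --- | --- |
| Unimodal | ConvLSTM | 70.11 | 57.90 | 60.09 | 58.38 |
| Unimodal | MDL | 70.79 | 70.38 | 66.76 | 66.89 |
| Unimodal | Wav2vec+Transformer | 24.04 | 7.31 | 12.25 | 8.72 |
| Unimodal | Faster R-CNN+Transformer | 15.28 | 7.86 | 8.12 | 5.77 |
| Multimodal | MISA | 71.91 | 70.46 | 67.82 | 68.07 |
| Multimodal | MULT | 72.52 | 70.25 | 69.24 | 69.25 |
| Multimodal | MAG-BERT | 72.65 | 69.08 | 69.28 | 68.64 |
| Multimodal | EMRFM | 72.58 | 71.90 | 70.45 | 70.46 |
| Multimodal | MAP | 72.13 | 72.50 | 68.80 | 71.80 |
| Multimodal | TMIR | 74.38 | 72.83 | 71.55 | 71.36 |

Table 3 Ablation results (%)

| Model | Acc | m-P | m-R | m-F1 |
| --- | --- | --- | --- | --- |
| w/o multi-view feature extraction layer | 70.56 | 68.70 | 66.78 | 66.94 |
| w/o audio-text Transformer | 72.58 | 72.23 | 70.26 | 70.15 |
| w/o video-text Transformer | 72.13 | 70.98 | 70.85 | 70.04 |
| w/o text-audio Transformer | 71.91 | 66.87 | 69.54 | 67.32 |
| w/o video-audio Transformer | 72.13 | 71.85 | 70.91 | 70.75 |
| w/o audio-video Transformer | 73.26 | 67.76 | 70.60 | 68.52 |
| w/o text-video Transformer | 72.58 | 70.99 | 71.18 | 70.45 |
| w/o multi-view trusted fusion module | 73.26 | 71.16 | 71.14 | 70.37 |
| TMIR | 74.38 | 72.83 | 71.55 | 71.36 |

Table 4 Comparison of floating-point operations and parameter counts

| Model | FLOPs (G) | Params (M) |
| --- | --- | --- |
| MISA | 15.58 | 115.93 |
| MULT | 16.60 | 105.03 |
| DEAN | 14.22 | 89.88 |
| MAG-BERT | 14.04 | 88.43 |
| TMIR | 17.14 | 108.24 |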
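The headline figures quoted in the abstract can be rechecked from the numbers in Tables 2 and 3. The sketch below assumes that "the baseline" means the best competing multimodal model on each metric; the abstract does not name the baseline, so this is an assumption. The dictionary and function names are illustrative, and all scores are copied from the tables.

```python
# Column order used in Tables 2 and 3.
METRICS = ("Acc", "m-P", "m-R", "m-F1")

# Multimodal baselines from Table 2 (%).
BASELINES = {
    "MISA": (71.91, 70.46, 67.82, 68.07),
    "MULT": (72.52, 70.25, 69.24, 69.25),
    "MAG-BERT": (72.65, 69.08, 69.28, 68.64),
    "EMRFM": (72.58, 71.90, 70.45, 70.46),
    "MAP": (72.13, 72.50, 68.80, 71.80),
}
TMIR = (74.38, 72.83, 71.55, 71.36)

# Two ablation rows from Table 3 (%).
WO_FEATURE_LAYER = (70.56, 68.70, 66.78, 66.94)  # w/o multi-view feature extraction layer
WO_TRUSTED_FUSION = (73.26, 71.16, 71.14, 70.37)  # w/o multi-view trusted fusion module


def gain_over_best(metric: str) -> float:
    """TMIR score minus the best baseline score on one metric (assumed baseline)."""
    i = METRICS.index(metric)
    best = max(row[i] for row in BASELINES.values())
    return round(TMIR[i] - best, 2)


def ablation_drop(ablated: tuple) -> dict:
    """Per-metric drop of an ablated variant relative to the full TMIR model."""
    return {m: round(TMIR[i] - ablated[i], 2) for i, m in enumerate(METRICS)}


print(gain_over_best("Acc"))                   # 1.73
print(gain_over_best("m-R"))                   # 1.1
print(ablation_drop(WO_FEATURE_LAYER)["Acc"])  # 3.82
print(ablation_drop(WO_TRUSTED_FUSION))        # Acc 1.12, m-P 1.67, m-R 0.41, m-F1 0.99
```

With the table values as transcribed here, removing the trusted fusion module costs 1.12 points of accuracy, 1.67 points of m-P, and 0.41 points of m-R, so the 1.67-point figure lines up with the m-P column of Table 3.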

