Scale Adaptive Fusion Network for Multimodal Remote Sensing Data Classification


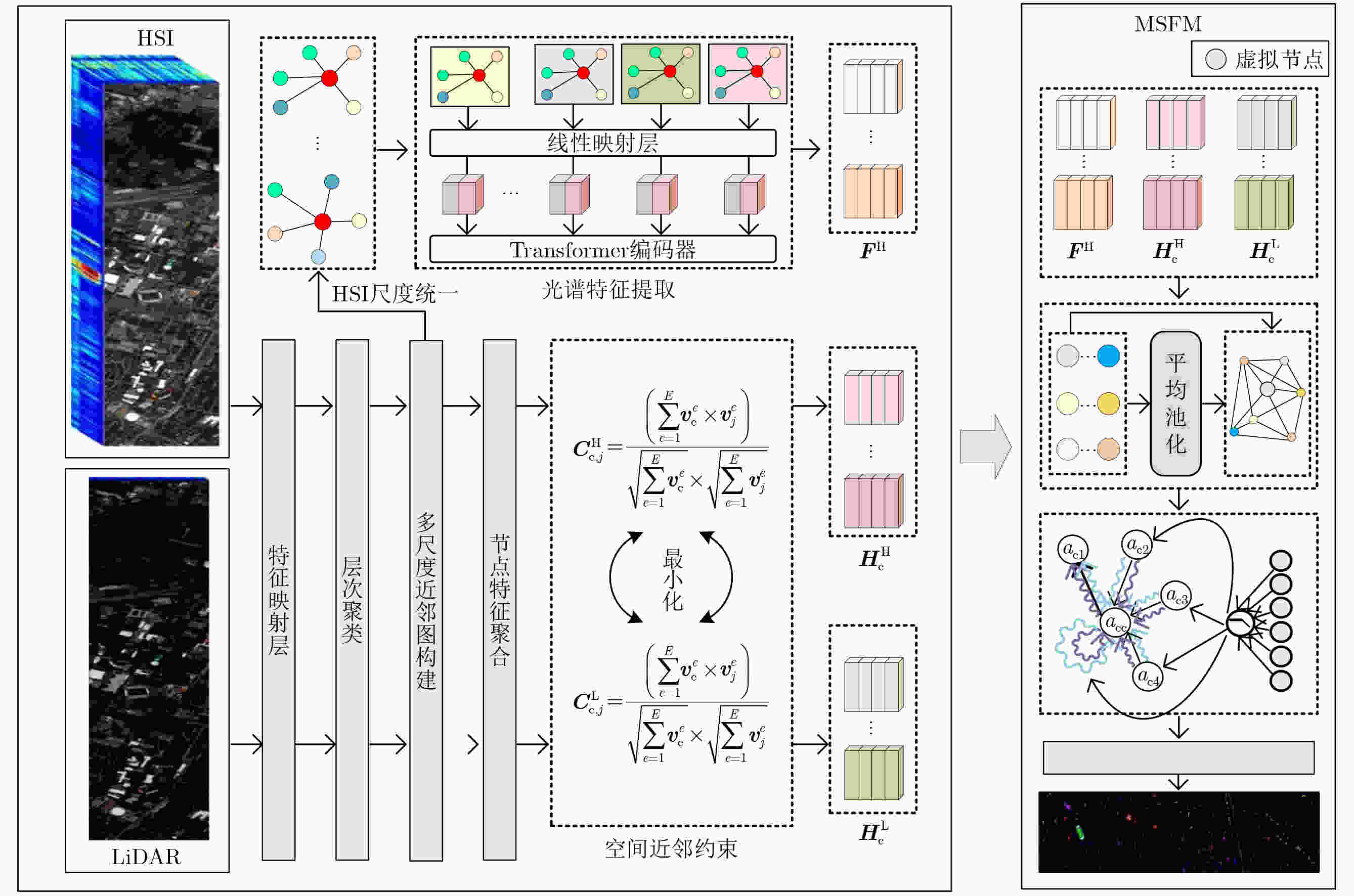

Abstract: Multimodal fusion exploits the complementary characteristics of different modalities to improve ground-object classification accuracy, and has become a research hotspot across many fields in recent years. Existing multimodal fusion methods have been successfully applied to the joint classification of HyperSpectral Image (HSI) and Light Detection And Ranging (LiDAR) data. However, existing research still faces many challenges, including the difficulty of capturing spatial dependencies among ground objects and of obtaining discriminative information from multimodal data. To address these challenges, this paper proposes a Scale Adaptive Fusion Network (SAFN) that integrates multimodal, multiscale, and multiview feature fusion into a unified framework. First, a dynamic multiscale graph module is proposed to capture the complex spatial dependencies of ground objects, improving the model's adaptability to irregular ground objects and to objects of widely differing scales. Second, the complementary properties of LiDAR and HSI are used to constrain ground objects within the same spatial neighborhood to have similar feature representations, yielding discriminative remote sensing features. Then, a multimodal spatial-spectral graph fusion module is proposed to establish information interaction among multimodal, multiscale, and multiview features and to capture the class-discriminative information they share, providing discriminative fused features for classification. Finally, the fused features are fed into a classifier to obtain class probability scores, from which the ground-object class is predicted. To verify the effectiveness of SAFN, experiments are conducted on three datasets (Houston, Trento, and MUUFL). The experimental results show that, compared with existing mainstream methods, SAFN achieves the best visual results and the highest accuracy in multi-source remote sensing data classification.
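The final step of the abstract (fused features in, class probability scores out, most probable class as the prediction) can be illustrated with a minimal sketch. This is not the SAFN implementation: plain concatenation stands in for the fusion module, the linear classifier and its `weights` and `biases` are hypothetical toy values, and the function names are invented for illustration.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(hsi_feat, lidar_feat, weights, biases):
    """Score each class from a fused feature vector and pick the most probable one.

    Assumption: concatenation is a simple stand-in for a learned fusion module.
    `weights` holds one row of coefficients per class.
    """
    fused = list(hsi_feat) + list(lidar_feat)
    scores = [
        sum(w * x for w, x in zip(row, fused)) + b
        for row, b in zip(weights, biases)
    ]
    probs = softmax(scores)
    predicted = max(range(len(probs)), key=probs.__getitem__)
    return predicted, probs


# Hypothetical toy input: 2 HSI features, 1 LiDAR feature, 3 classes.
weights = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
biases = [0.0, 0.0, 0.0]
label, probs = classify([0.2, 0.1], [2.0], weights, biases)
```

With these toy values the third class receives the largest score, so `label` is its index and `probs` holds the three probabilities.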


Table 1 Classification accuracy on the Houston dataset (%)

| Class | CNN | HRWN | EndNet | CCR-Net | FGCN | CNN-DF-S | MEDFN | SAFN |
|---|---|---|---|---|---|---|---|---|
| Healthy grass | 98.62 | 89.60 | 99.35 | 97.48 | 95.42 | 92.20 | 94.96 | 81.48 |
| Stressed grass | 94.41 | 97.08 | 99.51 | 86.14 | 92.11 | 99.35 | 83.47 | 97.25 |
| Synthetic grass | 94.39 | 99.85 | 99.85 | 97.49 | 98.92 | 98.23 | 97.49 | 99.71 |
| Trees | 98.20 | 92.08 | 90.28 | 99.10 | 96.39 | 98.20 | 96.49 | 97.30 |
| Soil | 99.02 | 99.51 | 97.71 | 99.67 | 99.74 | 99.76 | 98.53 | 97.71 |
| Water | 82.62 | 90.49 | 95.74 | 96.72 | 94.18 | 93.44 | 98.69 | 85.57 |
| Residential | 69.39 | 79.41 | 83.89 | 89.58 | 78.90 | 77.89 | 86.22 | 94.15 |
| Commercial | 67.40 | 79.49 | 51.63 | 69.85 | 80.74 | 75.49 | 83.99 | 89.30 |
| Road | 74.76 | 53.90 | 73.54 | 96.40 | 76.37 | 64.69 | 76.22 | 86.04 |
| Highway | 79.79 | 86.00 | 87.57 | 88.57 | 87.94 | 90.89 | 94.12 | 87.99 |
| Railway | 75.89 | 64.28 | 84.77 | 80.82 | 83.38 | 77.04 | 89.71 | 85.93 |
| Parking lot 1 | 62.41 | 77.41 | 73.54 | 81.62 | 78.87 | 85.24 | 94.23 | 95.30 |
| Parking lot 2 | 83.30 | 85.52 | 85.75 | 86.86 | 83.05 | 93.32 | 95.10 | 99.56 |
| Tennis court | 98.78 | 99.02 | 100 | 99.27 | 98.41 | 99.76 | 99.51 | 99.76 |
| Running track | 97.50 | 99.06 | 99.38 | 96.41 | 98.03 | 100 | 98.28 | 95.00 |
| OA | 83.75 | 84.22 | 86.30 | 87.79 | 88.32 | 87.98 | 91.11 | 92.17 |
| AA | 85.09 | 86.18 | 88.17 | 89.27 | 89.50 | 89.70 | 92.47 | 92.80 |
| Kappa | 82.45 | 82.93 | 86.21 | 86.71 | 87.36 | 87.00 | 90.39 | 91.54 |

Table 2 Classification accuracy on the Trento dataset (%)

| Class | CNN | HRWN | EndNet | CCR-Net | FGCN | CNN-DF-S | MEDFN | SAFN |
|---|---|---|---|---|---|---|---|---|
| Apple trees | 95.04 | 96.01 | 95.37 | 96.11 | 97.09 | 98.96 | 97.78 | 99.53 |
| Buildings | 77.77 | 81.93 | 94.03 | 96.53 | 92.02 | 92.08 | 97.82 | 97.85 |
| Ground | 97.82 | 100 | 99.78 | 96.95 | 97.82 | 99.47 | 99.13 | 96.30 |
| Woods | 99.78 | 99.50 | 99.53 | 99.96 | 99.98 | 99.57 | 99.97 | 99.90 |
| Vineyard | 98.57 | 98.51 | 99.04 | 99.74 | 99.95 | 98.57 | 99.96 | 99.46 |
| Roads | 79.04 | 86.21 | 81.20 | 81.26 | 87.83 | 90.05 | 94.07 | 97.78 |
| OA | 94.41 | 95.62 | 96.35 | 97.03 | 97.51 | 97.44 | 98.84 | 99.22 |
| AA | 91.34 | 93.69 | 94.82 | 95.09 | 95.78 | 96.45 | 98.12 | 98.47 |
| Kappa | 92.56 | 94.16 | 95.15 | 96.04 | 96.68 | 96.57 | 98.45 | 98.96 |

Table 3 Classification accuracy on the MUUFL dataset (%)

| Class | CNN | HRWN | EndNet | CCR-Net | FGCN | CNN-DF-S | MEDFN | SAFN |
|---|---|---|---|---|---|---|---|---|
| Trees | 81.82 | 81.85 | 80.01 | 83.16 | 82.39 | 83.63 | 80.94 | 82.63 |
| Mostly grass | 65.47 | 61.94 | 80.90 | 71.52 | 76.71 | 76.19 | 76.33 | 84.99 |
| Mixed ground surface | 55.96 | 59.40 | 57.51 | 58.18 | 67.20 | 50.25 | 71.31 | 70.72 |
| Dirt and sand | 78.78 | 79.90 | 79.62 | 86.54 | 87.22 | 72.81 | 90.77 | 86.93 |
| Road | 75.76 | 71.21 | 79.49 | 72.79 | 76.96 | 81.87 | 85.63 | 86.85 |
| Water | 98.32 | 98.08 | 92.55 | 98.56 | 97.84 | 96.86 | 91.83 | 98.21 |
| Building shadow | 82.50 | 87.36 | 84.52 | 78.24 | 77.74 | 79.49 | 82.68 | 92.23 |
| Building | 72.71 | 77.16 | 71.65 | 80.24 | 76.70 | 84.57 | 83.93 | 87.25 |
| Sidewalk | 45.62 | 63.52 | 61.65 | 67.34 | 64.49 | 59.41 | 77.90 | 73.33 |
| Yellow curb | 57.14 | 66.92 | 77.44 | 77.44 | 81.20 | 64.42 | 88.72 | 96.93 |
| Cloth panels | 98.17 | 96.35 | 95.43 | 99.09 | 99.54 | 90.76 | 99.54 | 93.57 |
| OA | 74.52 | 75.36 | 76.25 | 77.07 | 78.35 | 77.57 | 80.77 | 82.88 |
| AA | 73.81 | 76.70 | 78.43 | 79.37 | 80.73 | 76.39 | 84.51 | 86.70 |
| Kappa | 67.63 | 68.63 | 69.90 | 70.87 | 72.39 | 71.49 | 75.57 | 78.19 |

Table 4 Effect of different components on overall accuracy (%)

| Dataset | SAFN-A | SAFN-B | SAFN-C | SAFN |
|---|---|---|---|---|
| Houston 2013 | 87.89 | 89.23 | 90.35 | 92.17 |
| Trento | 96.73 | 97.54 | 98.48 | 99.22 |
| MUUFL | 78.13 | 80.15 | 81.76 | 82.88 |
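The tables report overall accuracy (OA), average accuracy (AA), and the Kappa coefficient. As a reminder of how these three scores relate, here is a minimal sketch that computes them from a confusion matrix using their standard definitions. The function name `accuracy_metrics` and the toy 2-class matrix are hypothetical, and the values come out as fractions rather than the percentages used in the tables.

```python
def accuracy_metrics(confusion):
    """Return (OA, AA, Kappa) for a square confusion matrix.

    confusion[i][j] is the number of samples of true class i
    that were predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    row_sums = [sum(confusion[i]) for i in range(n)]
    col_sums = [sum(confusion[r][j] for r in range(n)) for j in range(n)]

    # Overall accuracy: fraction of all samples classified correctly.
    oa = sum(confusion[i][i] for i in range(n)) / total

    # Average accuracy: unweighted mean of the per-class accuracies.
    per_class = [confusion[i][i] / row_sums[i] for i in range(n)]
    aa = sum(per_class) / n

    # Kappa: agreement beyond what the class marginals predict by chance.
    expected = sum(row_sums[i] * col_sums[i] for i in range(n)) / (total * total)
    kappa = (oa - expected) / (1 - expected)
    return oa, aa, kappa


# Hypothetical toy matrix: 10 samples per class, 3 misclassified in total.
toy_confusion = [
    [8, 2],
    [1, 9],
]
oa, aa, kappa = accuracy_metrics(toy_confusion)
```

Because AA is the unweighted mean of the per-class rows, it can be checked directly against the tables: the six SAFN class accuracies in Table 2 sum to 590.82, and dividing by 6 gives the reported AA of 98.47.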

