Research on Open-Set Object Detection in Remote Sensing Images Based on Adaptive Pre-Screening


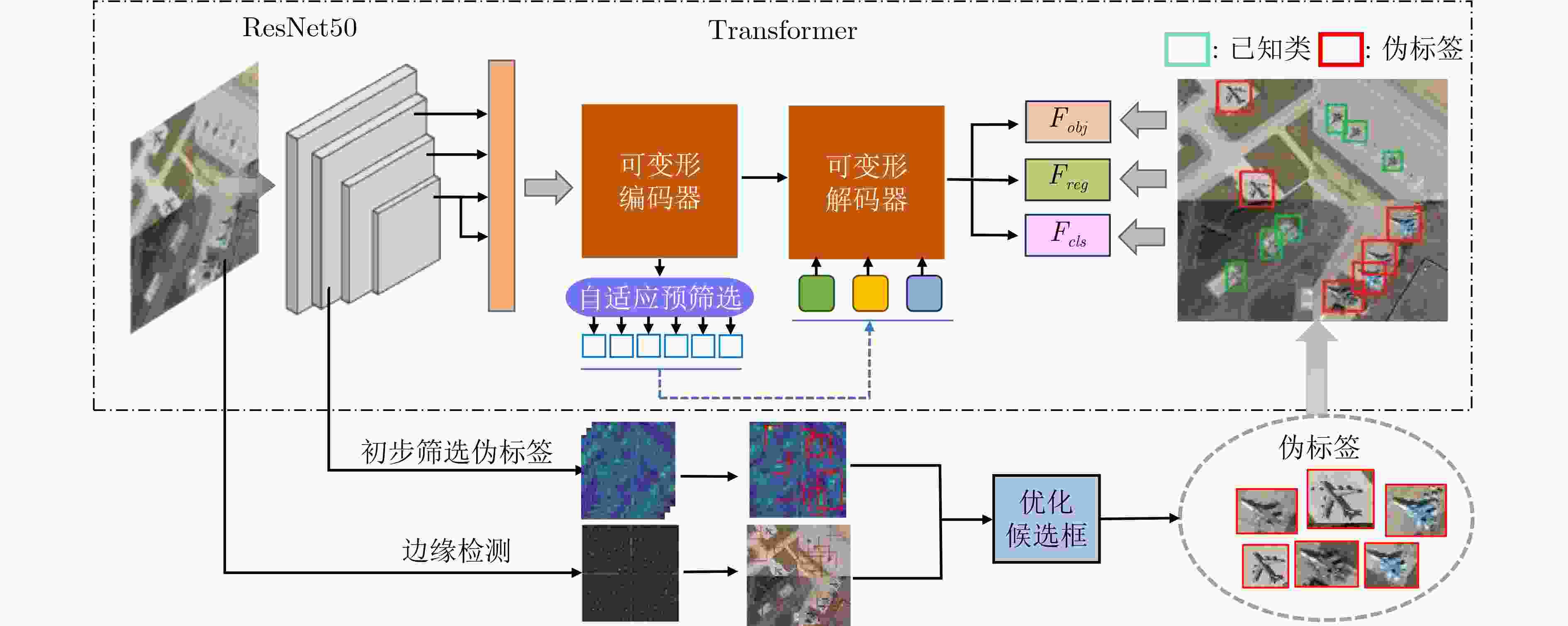

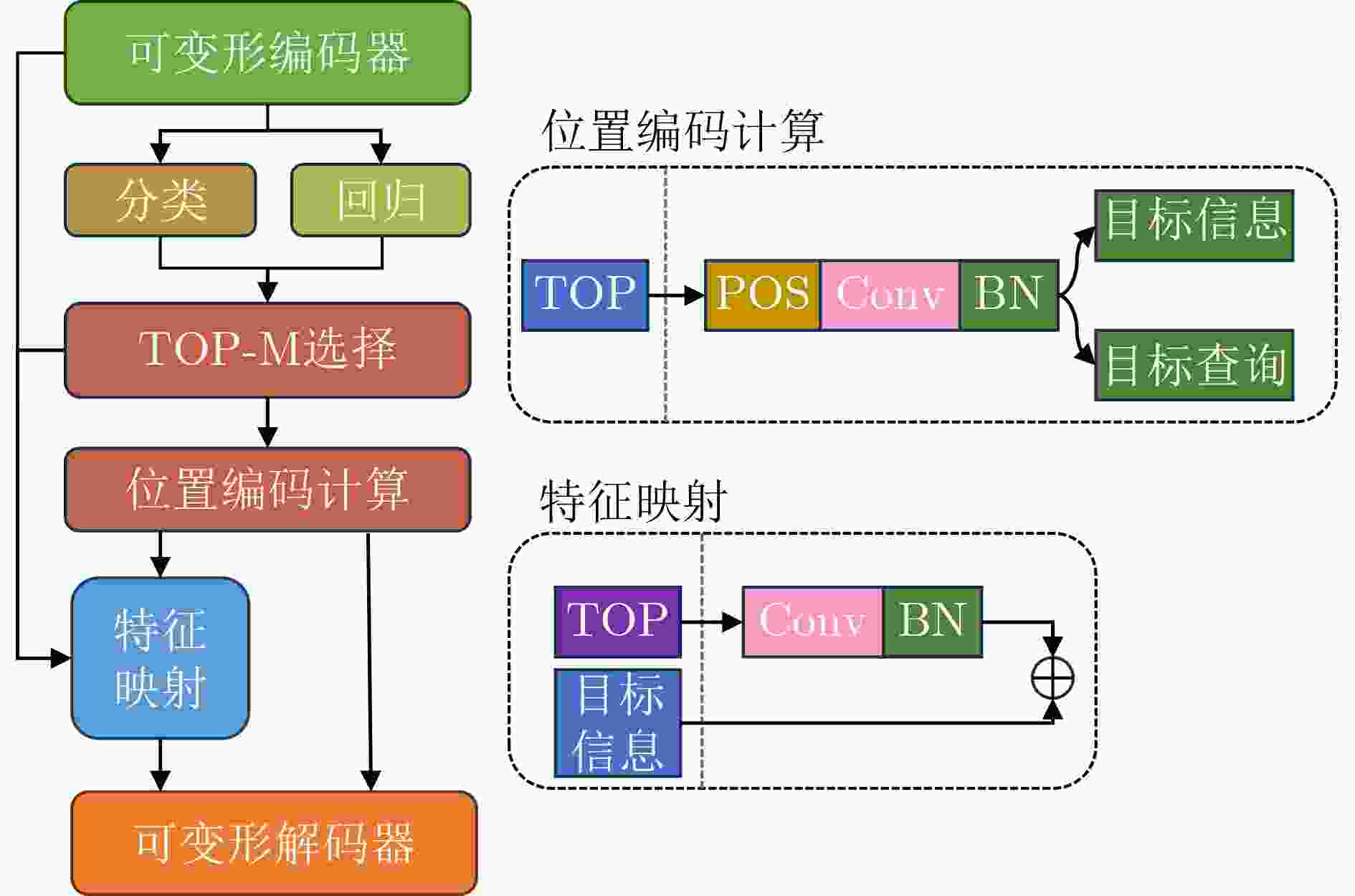

Abstract: In open, dynamic environments the set of object categories keeps growing, so remote sensing object detection cannot be limited to recognizing known classes; it must also make a sound decision on objects of unknown classes. To this end, a remote sensing open-set object detection network based on adaptive pre-screening is proposed. First, an adaptive pre-screening module for object region proposals is introduced: based on the coordinates of the selected proposals, queries carrying rich semantic information and spatial features are generated and passed to the decoder. Second, a pseudo-label selection method that draws on object edge information in the original image is devised, and loss functions are constructed for open-set classification to improve the network's ability to learn the features of unknown classes. Finally, the Military Aircraft Recognition (MAR20) dataset is used to simulate different open, dynamic remote sensing detection environments. Extensive comparative and ablation experiments show that the proposed method achieves reliable detection of known-class objects and effective detection of unknown-class objects.


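Algorithm 1 Pseudo-label selection based on image edge information

Input (at the current iteration $ t $): the corresponding feature map $ \boldsymbol{A} $; the predicted candidate boxes $ \boldsymbol{b}_{i}^{\mathrm{F}} $ left over after the DDETR matching mechanism; the candidate boxes $ \boldsymbol{b}_{j}^{\mathrm{E}} $ generated from image edge information; the loss memory queue $ L_{m} $; the fine-tuning parameters $ \lambda_{p} $ and $ \lambda_{n} $; the weight update interval $ T_{w} $ (in iterations); the weights $ w_{1} $ and $ w_{2} $; the number of pseudo-labels $ u $

Output (at the current iteration $ t $): the pseudo-labels of the image

1. while train do:
2. Use Eq. (1) to obtain the preliminary objectness score $ F\left(\boldsymbol{b}_{i}^{\mathrm{F}}\right) $ based on convolutional features;
3. Use Eq. (3) to obtain the objectness score $ S\left(\boldsymbol{b}_{i}^{\mathrm{E}}\right) $ based on low-level image edge information;
4. if $ t \,\%\, T_{w} == 0 $ then:
5. Compute $ \Delta l $ using Eq. (7) and $ L_{m} $;
6. Compute $ \Delta w $ using Eq. (8);
7. Update the weights $ w_{1} $ and $ w_{2} $ using Eq. (5);
8. end if
9. Use Eq. (4) to obtain the final objectness score $ F_{i}^{\mathrm{new}} $ of each remaining predicted candidate box $ \boldsymbol{b}_{i}^{\mathrm{F}} $;
10. Sort $ F_{i}^{\mathrm{new}} $ in descending order and label the top $ u $ candidate boxes as "unknown".

Table 1 Distribution of image counts in the MAR20 dataset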

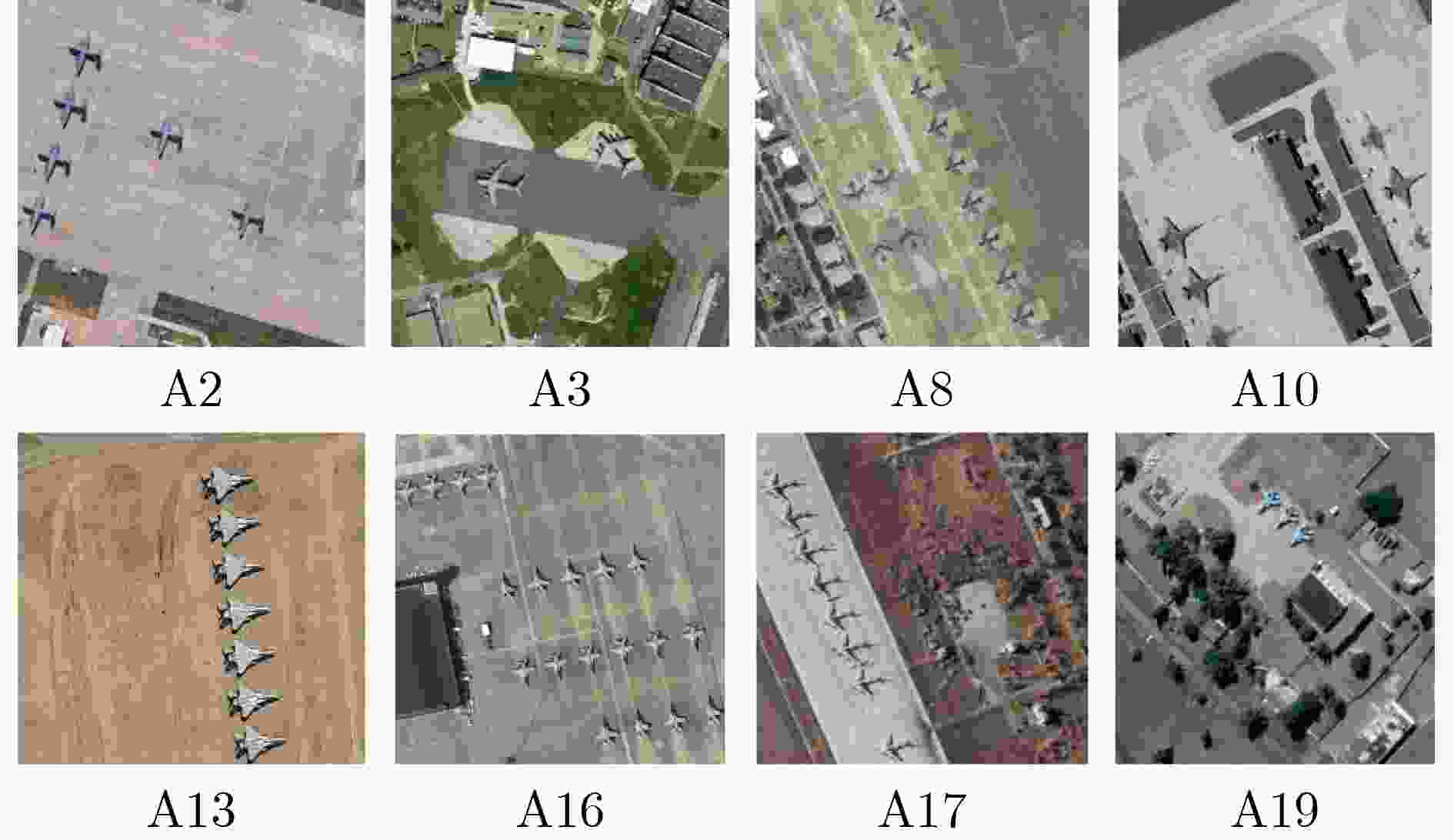

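| Class | Images | Class | Images |
|---|---|---|---|
| A1 | 168 | A11 | 86 |
| A2 | 16 | A12 | 66 |
| A3 | 150 | A13 | 212 |
| A4 | 70 | A14 | 252 |
| A5 | 247 | A15 | 108 |
| A6 | 31 | A16 | 265 |
| A7 | 100 | A17 | 173 |
| A8 | 142 | A18 | 37 |
| A9 | 146 | A19 | 129 |
| A10 | 146 | A20 | 130 |
| Images containing multiple classes | 1017 | Total | 3842 |

Table 2 Open-set object detection tasks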

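| Task | #unknown / (#known + #unknown) | Known classes | Unknown classes | Training images | Test images | Total |
|---|---|---|---|---|---|---|
| Task 1 | 0.75 | A1~A5 | A6~A20 | 644 | 161 | 805 |
| Task 2 | 0.5 | A1~A10 | A11~A20 | 736 | 185 | 921 |
| Task 3 | 0.25 | A1~A15 | A16~A20 | 764 | 192 | 956 |

Table 3 Comparison of network detection results (%)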

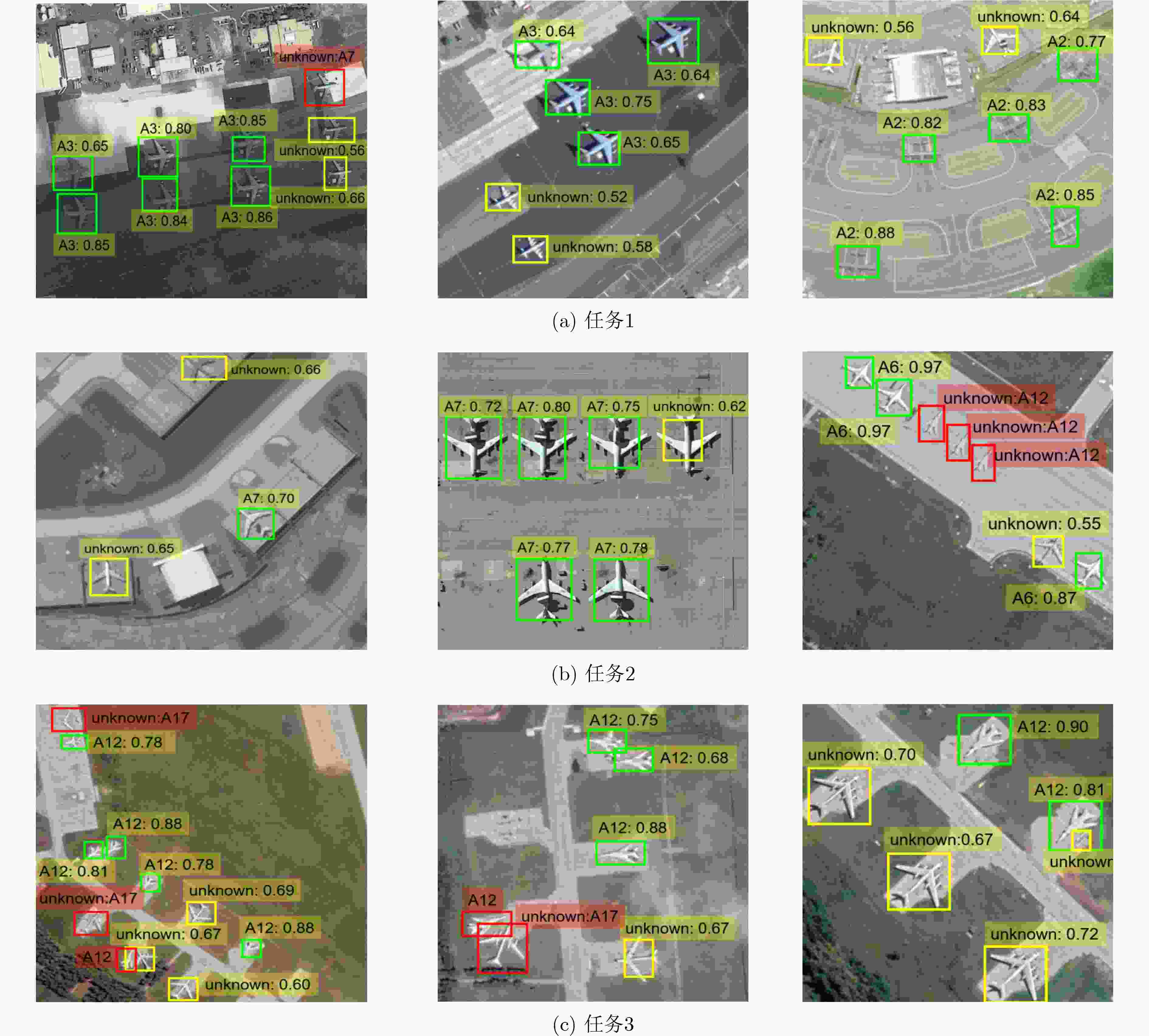

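| Method | Task 1 known-class mAP | Task 1 unknown-class recall | Task 2 known-class mAP | Task 2 unknown-class recall | Task 3 known-class mAP | Task 3 unknown-class recall |
|---|---|---|---|---|---|---|
| Faster-RCNN | 73.95 | – | 77.84 | – | 88.18 | – |
| YOLOv3 | 88.02 | – | 88.40 | – | 88.86 | – |
| DDETR | 84.30 | – | 87.60 | – | 88.95 | – |
| OW-DETR | 82.52 | 17.66 | 87.90 | 29.68 | 87.66 | 30.41 |
| CAT | 77.40 | 21.21 | 83.78 | 36.09 | 85.05 | 53.42 |
| Proposed method | 89.09 | 38.67 | 90.35 | 47.17 | 90.38 | 61.20 |

Table 4 Module validation (ablation) results (%)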

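| Baseline model | Adaptive pre-screening | Edge-based pseudo-label selection strategy | Task 1 known-class mAP | Task 1 unknown-class recall | Task 2 known-class mAP | Task 2 unknown-class recall | Task 3 known-class mAP | Task 3 unknown-class recall |
|---|---|---|---|---|---|---|---|---|
| √ | | | 82.52 | 17.66 | 87.90 | 29.68 | 87.66 | 30.41 |
| √ | √ | | 89.34 | 2.43 | 90.83 | 5.96 | 89.74 | 13.33 |
| √ | | √ | 83.28 | 45.81 | 87.54 | 63.01 | 87.69 | 54.80 |
| √ | √ | √ | 89.09 | 38.67 | 90.35 | 47.17 | 90.38 | 61.20 |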
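The control flow of Algorithm 1 (periodic weight update, score fusion, top-$ u $ selection) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: Eqs. (1), (3), (4), (5), (7) and (8) are not reproduced in this section, so the fusion in step 9 is assumed to be a plain weighted sum $ w_{1}F + w_{2}S $, the edge-based scores are assumed to be already aligned one-to-one with the remaining predicted boxes, and the weight update is left as a caller-supplied hook. The names `select_pseudo_labels`, `keep_weights` and `update_weights` are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Weights = Tuple[float, float]


def keep_weights(weights: Weights, loss_queue: Sequence[float]) -> Weights:
    """Hypothetical stand-in for Eqs. (5), (7), (8): returns the weights unchanged."""
    return weights


def select_pseudo_labels(
    feat_scores: Sequence[float],  # feature-based score of each remaining box
    edge_scores: Sequence[float],  # edge-based score, assumed aligned per box
    weights: Weights,              # (w_1, w_2)
    u: int,                        # number of pseudo-labels to select
    t: int,                        # current iteration
    t_w: int,                      # weight update interval T_w
    loss_queue: Sequence[float] = (),
    update_weights: Callable[[Weights, Sequence[float]], Weights] = keep_weights,
) -> Tuple[List[int], Weights]:
    """Return indices of the boxes labelled "unknown" and the current weights."""
    if len(feat_scores) != len(edge_scores):
        raise ValueError("feat_scores and edge_scores must have equal length")

    # Steps 4-8: refresh the weights every t_w iterations via the supplied hook.
    if t % t_w == 0:
        weights = update_weights(weights, loss_queue)
    w1, w2 = weights

    # Step 9 (assumed form of the fusion): weighted sum of the two scores.
    fused = [w1 * f + w2 * s for f, s in zip(feat_scores, edge_scores)]

    # Step 10: sort in descending order and keep the top-u box indices.
    order = sorted(range(len(fused)), key=lambda i: fused[i], reverse=True)
    return order[:u], weights


if __name__ == "__main__":
    picked, w = select_pseudo_labels(
        feat_scores=[0.9, 0.2, 0.6, 0.4],
        edge_scores=[0.3, 0.8, 0.7, 0.1],
        weights=(0.5, 0.5),
        u=2,
        t=1,
        t_w=10,
    )
    print(picked)  # [2, 0]
```

Keeping the weight update behind a hook leaves the selection logic independent of the exact update rule, which can then be swapped in without touching the ranking step.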

