Depression Intensity Recognition Based on Perceptually Locally-enhanced Global Depression Features and Fused Global-local Semantic Correlation Features on Faces


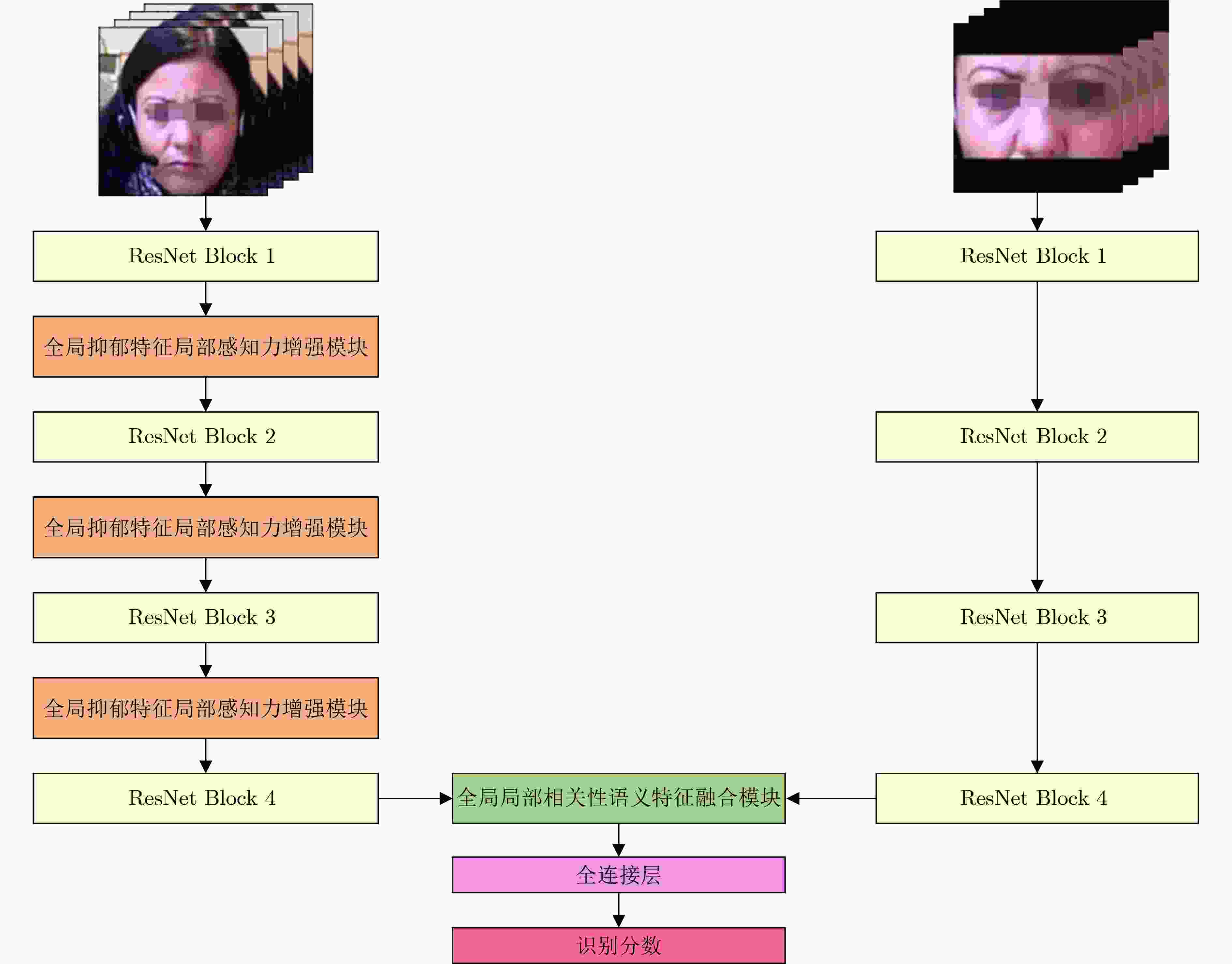

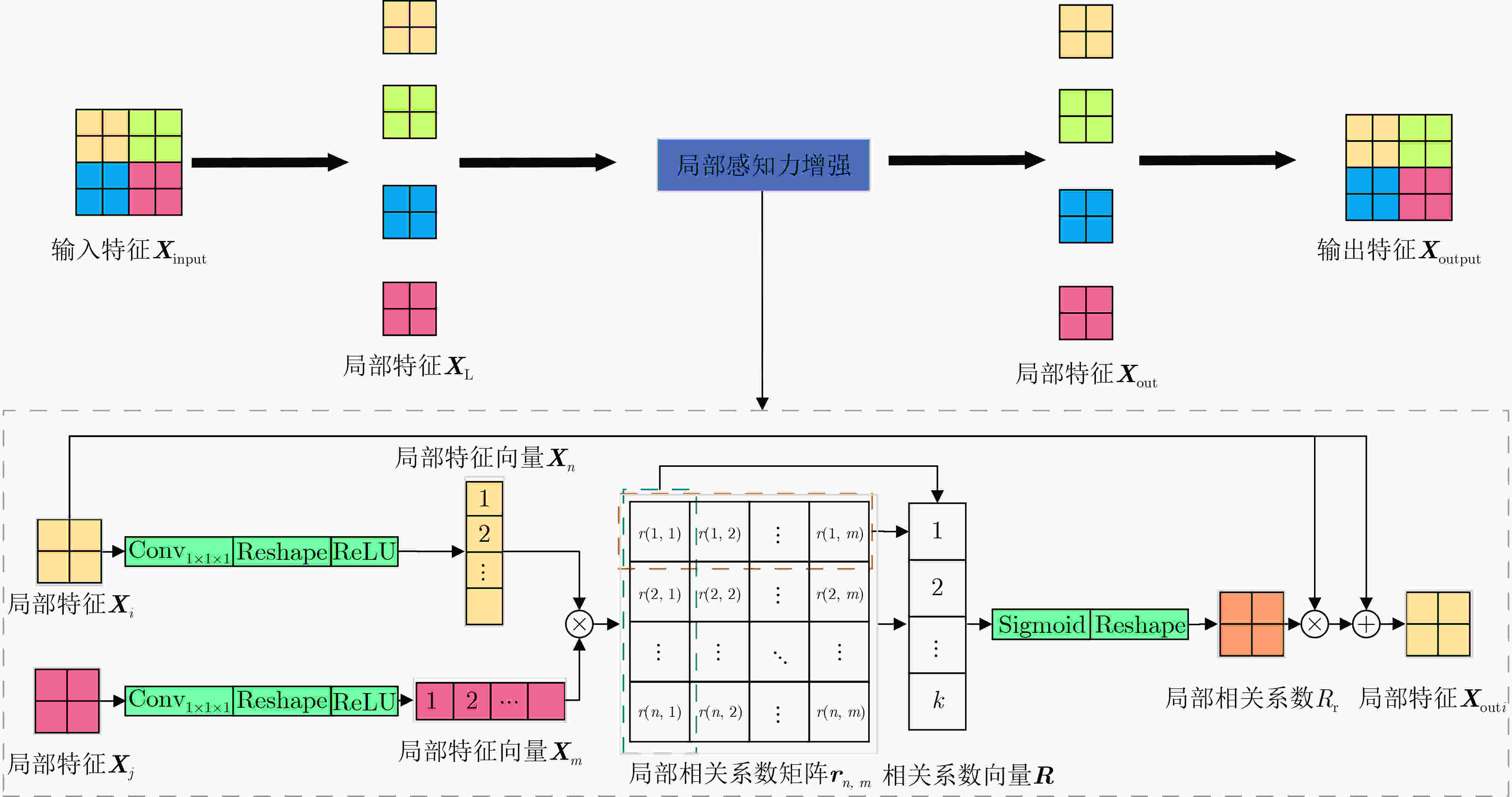

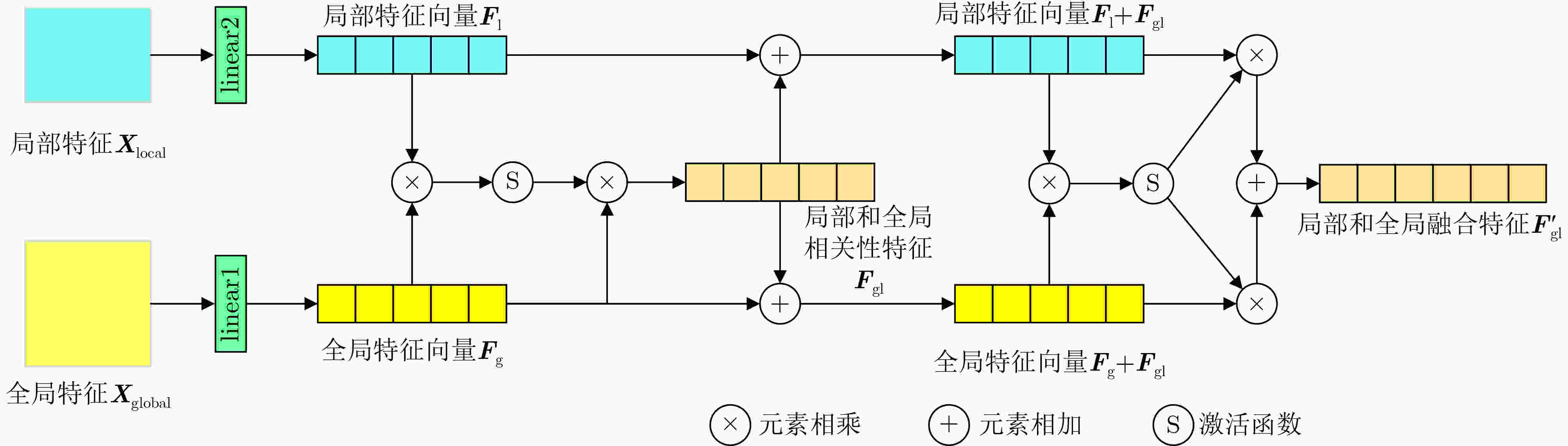

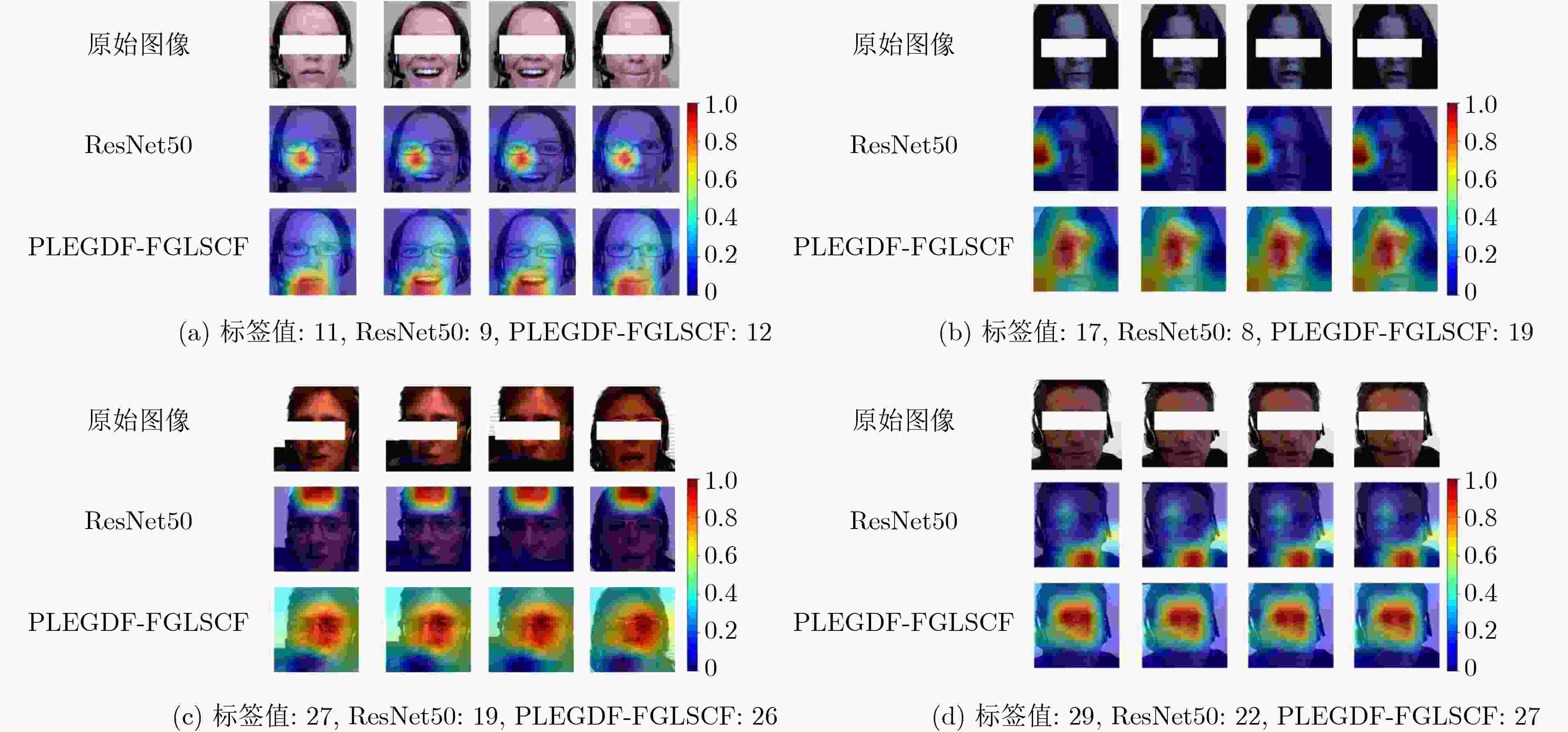

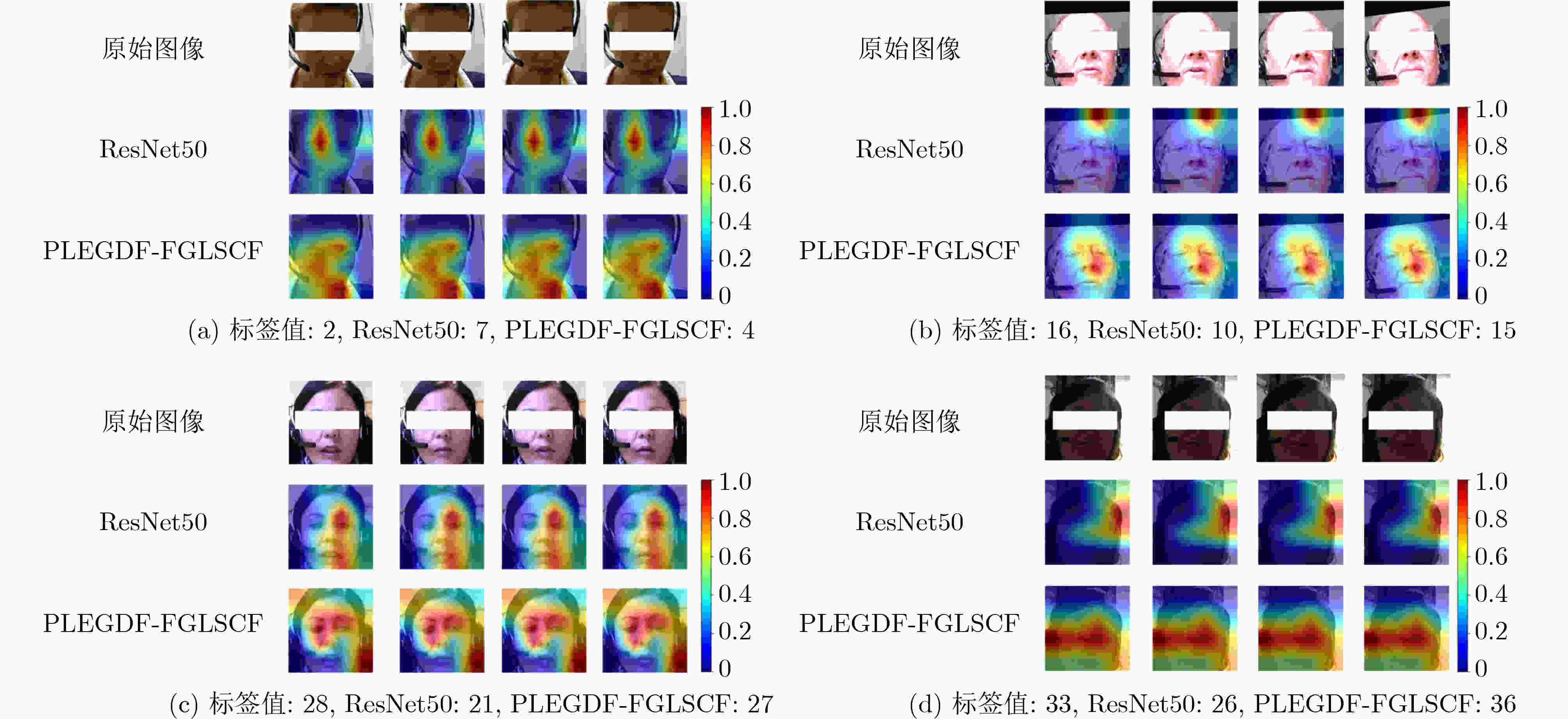

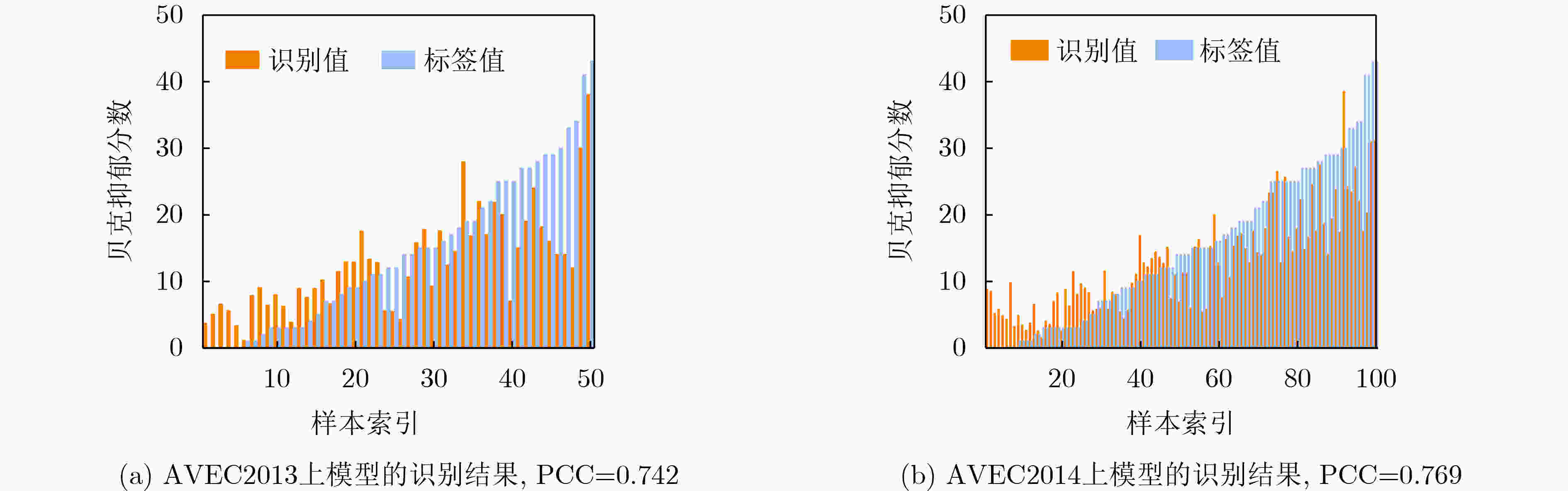

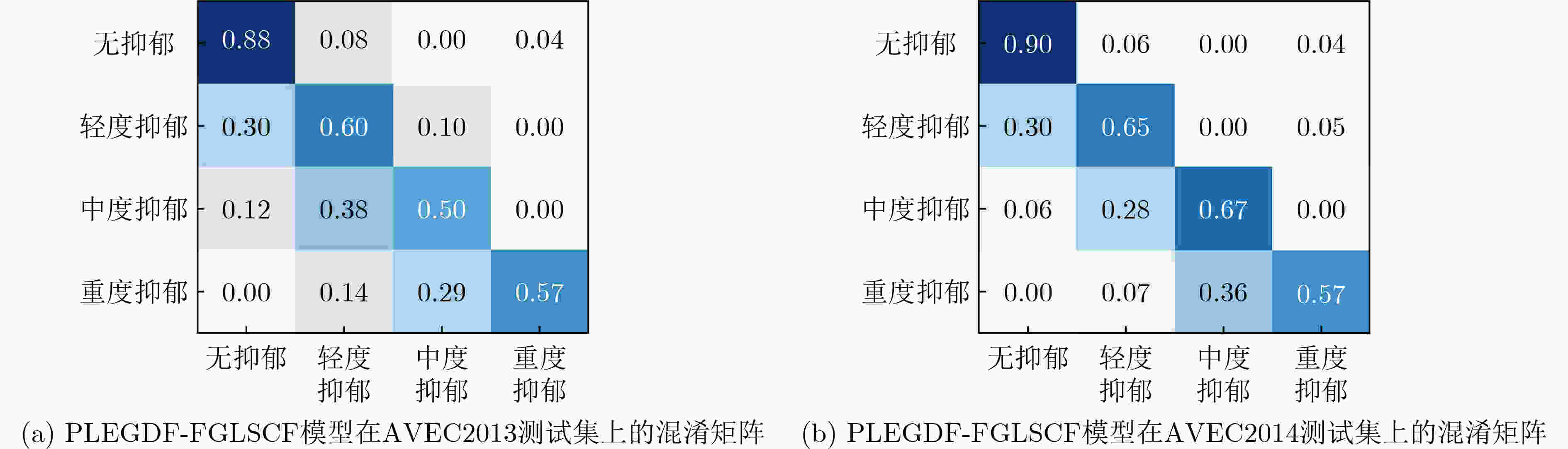

Abstract: Existing deep-learning-based methods for automatically recognizing depression intensity in patients typically face two main challenges: (1) deep models struggle to capture, from facial expressions, the global context information relevant to depression intensity, and (2) the semantic consistency between the global and local information associated with depression intensity is often ignored. To address these challenges, this paper proposes a deep neural network for depression intensity recognition that combines Perceptually Locally-Enhanced Global Depression Features and Fused Global-Local Semantic Correlation Features (PLEGDF-FGLSCF). First, the PLEGDF module is designed to extract the semantic correlations among local facial regions and to promote interaction between the depression-relevant information in different local regions, thereby enhancing the expressiveness of the global depression features driven by the local ones. Second, the FGLSCF module is proposed to capture the correlation between global and local semantic information, so that global and local depression features describe depression intensity in a semantically consistent way. Finally, on the AVEC2013 and AVEC2014 datasets, the PLEGDF-FGLSCF model achieves a Root Mean Square Error (RMSE) / Mean Absolute Error (MAE) of 7.75/5.96 and 7.49/5.99, respectively. These results are better than those of most existing benchmark methods and support the rationality and effectiveness of the approach.
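The two metrics reported in the abstract can be illustrated with a minimal sketch. The function names and the sample scores below are hypothetical and are not taken from the paper; the sketch only shows the standard definitions of RMSE and MAE applied to predicted versus ground-truth depression scores.

```python
import math


def rmse(y_true, y_pred):
    """Root mean square error between ground-truth and predicted scores."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def mae(y_true, y_pred):
    """Mean absolute error between ground-truth and predicted scores."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    absolute = [abs(t - p) for t, p in zip(y_true, y_pred)]
    return sum(absolute) / len(absolute)


# Hypothetical depression scores (illustrative only, not data from the paper).
labels = [3, 10, 22, 35]
preds = [5, 8, 25, 30]
print(f"RMSE = {rmse(labels, preds):.2f}, MAE = {mae(labels, preds):.2f}")
```

Because RMSE squares each error before averaging, it penalizes large individual errors more heavily than MAE, which is one reason the two metrics are usually reported together.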


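Table 1 Comparison of different numbers of PLEGDF modules on the AVEC2013 dataset

| Method | RMSE | MAE |
|---|---|---|
| ResNet50 | 8.57 | 6.44 |
| ResNet50 + PLEGDF embedded in layer1 | 8.11 | 6.19 |
| ResNet50 + PLEGDF embedded in layer1 and layer2 | 7.91 | 6.07 |
| PLEGDF-FGLSCF | 7.75 | 5.96 |

Table 2 Comparison of different numbers of PLEGDF modules on the AVEC2014 dataset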

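| Method | RMSE | MAE |
|---|---|---|
| ResNet50 | 8.34 | 6.37 |
| ResNet50 + PLEGDF embedded in layer1 | 8.09 | 6.16 |
| ResNet50 + PLEGDF embedded in layer1 and layer2 | 7.88 | 6.10 |
| PLEGDF-FGLSCF | 7.49 | 5.99 |

Table 3 Comparison results on the AVEC2013 dataset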

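| Method | RMSE | MAE |
|---|---|---|
| Concatenation + fully connected layer | 8.02 | 6.14 |
| Averaging the recognition scores of the branches | 8.25 | 6.28 |
| Face branch only | 8.32 | 6.46 |
| Eye-region branch only | 8.29 | 6.39 |
| PLEGDF-FGLSCF | 7.75 | 5.96 |

Table 4 Comparison results on the AVEC2014 dataset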

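| Method | RMSE | MAE |
|---|---|---|
| Concatenation + fully connected layer | 8.49 | 6.27 |
| Averaging the recognition scores of the branches | 8.36 | 6.28 |
| Face branch only | 8.28 | 6.16 |
| Eye-region branch only | 8.07 | 6.21 |
| PLEGDF-FGLSCF | 7.49 | 5.99 |

Table 5 Comparison of approaches for recognizing depression intensity on the AVEC2013 dataset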

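| Pre-trained model? | Method | RMSE | MAE |
|---|---|---|---|
| Not pre-trained | Valstar et al. [40] | 13.61 | 10.88 |
| Pre-trained | Zhu et al. [18] | 9.82 | 7.58 |
| Pre-trained | Jazaery et al. [30] | 9.28 | 7.37 |
| Pre-trained | Melo et al. [24] | 7.90 | 5.98 |
| Pre-trained | Melo et al. [25] | 7.55 | 6.24 |
| Pre-trained | Pan et al. [26] | 7.98 | 6.15 |
| Pre-trained | Zhou et al. [28] | 8.28 | 6.20 |
| Pre-trained | Melo et al. [29] | 8.25 | 6.30 |
| Pre-trained | Sun et al. [34] | 8.70 | 6.74 |
| Not pre-trained | Uddin et al. [19] | 8.93 | 7.04 |
| Not pre-trained | He et al. [31] | 8.39 | 6.59 |
| Not pre-trained | Shang et al. [35] | 8.20 | 6.38 |
| Not pre-trained | PLEGDF-FGLSCF | 7.75 | 5.96 |

Table 6 Comparison of approaches for recognizing depression intensity on the AVEC2014 dataset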

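| Pre-trained model? | Method | RMSE | MAE |
|---|---|---|---|
| Not pre-trained | Valstar et al. [41] | 10.86 | 8.86 |
| Pre-trained | Zhu et al. [18] | 9.55 | 7.47 |
| Pre-trained | Jazaery et al. [30] | 9.20 | 7.22 |
| Pre-trained | Melo et al. [24] | 7.61 | 5.82 |
| Pre-trained | Melo et al. [25] | 7.65 | 6.06 |
| Pre-trained | Pan et al. [26] | 7.75 | 6.00 |
| Pre-trained | Zhou et al. [28] | 8.39 | 6.21 |
| Pre-trained | Melo et al. [29] | 8.23 | 6.15 |
| Pre-trained | Sun et al. [34] | 8.56 | 6.65 |
| Not pre-trained | Uddin et al. [19] | 8.78 | 6.86 |
| Not pre-trained | He et al. [31] | 8.30 | 6.51 |
| Not pre-trained | Shang et al. [35] | 7.84 | 6.08 |
| Not pre-trained | PLEGDF-FGLSCF | 7.49 | 5.99 |

Table 7 PCC results produced by the model for non-depressed samples and samples with different depression levels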

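| Depression level | AVEC2013 | AVEC2014 |
|---|---|---|
| No depression (score 0–13) | 0.575 | 0.497 |
| Mild depression (score 14–19) | 0.772 | 0.689 |
| Moderate depression (score 20–28) | 0.661 | 0.782 |
| Severe depression (score 29–63) | 0.867 | 0.855 |

Table 8 Cross-dataset test results of the model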

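| Experiment | Training set | Test set | RMSE | MAE |
|---|---|---|---|---|
| Experiment 1 | AVEC2013 | AVEC2014 | 7.84 | 5.79 |
| Experiment 2 | AVEC2014 | AVEC2013 | 8.57 | 6.48 |
| Experiment 3 | AVEC2013 | Northwind task | 7.78 | 5.96 |
| Experiment 4 | AVEC2013 | Freeform task | 7.91 | 5.94 |
| Experiment 5 | Northwind task | AVEC2013 | 8.76 | 6.65 |
| Experiment 6 | Freeform task | AVEC2013 | 8.32 | 6.27 |
| Experiment 7 | Northwind task | Freeform task | 7.99 | 6.02 |
| Experiment 8 | Freeform task | Northwind task | 8.81 | 6.77 |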
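The per-level PCC analysis in Table 7 can be sketched as follows, assuming that PCC denotes the Pearson correlation coefficient between ground-truth and predicted scores and that samples are grouped by their ground-truth score using the bands listed in Table 7. The function names, the grouping rule, and the sample data are hypothetical, and the paper's exact evaluation protocol may differ.

```python
import math

# Score bands as listed in Table 7 (inclusive bounds).
BANDS = {
    "none": (0, 13),
    "mild": (14, 19),
    "moderate": (20, 28),
    "severe": (29, 63),
}


def pearson(x, y):
    """Pearson correlation coefficient; None if either input is constant."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length inputs with at least 2 samples")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if norm_x == 0 or norm_y == 0:
        return None  # correlation is undefined for constant inputs
    return cov / (norm_x * norm_y)


def pcc_by_band(labels, preds):
    """Group samples by ground-truth band and compute the PCC per band.

    Bands with fewer than two samples are skipped.
    """
    results = {}
    for name, (low, high) in BANDS.items():
        pairs = [(t, p) for t, p in zip(labels, preds) if low <= t <= high]
        if len(pairs) >= 2:
            truth, predicted = zip(*pairs)
            results[name] = pearson(truth, predicted)
    return results


# Hypothetical scores (illustrative only, not data from the paper).
labels = [0, 5, 10, 15, 18]
preds = [1, 6, 11, 20, 17]
print(pcc_by_band(labels, preds))
```

Grouping by ground-truth score keeps the bands fixed across models, so the per-band values remain comparable. The trade-off is that bands with few samples yield unstable correlations.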

[1] ETTMAN C K, ABDALLA S M, COHEN G H, et al. Prevalence of depression symptoms in US adults before and during the COVID-19 pandemic[J]. JAMA Network Open, 2020, 3(9): e2019686. doi: 10.1001/jamanetworkopen.2020.19686.

[2] HYLAND P, SHEVLIN M, MCBRIDE O, et al. Anxiety and depression in the Republic of Ireland during the COVID-19 pandemic[J]. Acta Psychiatrica Scandinavica, 2020, 142(3): 249–256. doi: 10.1111/acps.13219.

[3] CHASE T N. Apathy in neuropsychiatric disease: Diagnosis, pathophysiology, and treatment[J]. Neurotoxicity Research, 2011, 19(2): 266–278. doi: 10.1007/s12640-010-9196-9.

[4] WANG Wenya. Application research on skeleton data depression risk recognition and facial image character recognition based on gait[D]. [Master dissertation], Lanzhou University, 2023.

[5] ZIMMERMAN M, MARTINEZ J H, YOUNG D, et al. Severity classification on the Hamilton Depression Rating Scale[J]. Journal of Affective Disorders, 2013, 150(2): 384–388. doi: 10.1016/j.jad.2013.04.028.

[6] BECK A T, STEER R A, BALL R, et al. Comparison of Beck Depression Inventories-IA and -II in psychiatric outpatients[J]. Journal of Personality Assessment, 1996, 67(3): 588–597. doi: 10.1207/s15327752jpa6703_13.

[7] KROENKE K, STRINE T W, SPITZER R L, et al. The PHQ-8 as a measure of current depression in the general population[J]. Journal of Affective Disorders, 2009, 114(1/3): 163–173. doi: 10.1016/j.jad.2008.06.026.

[8] THOMBS B, TURNER K A, and SHRIER I. Defining and evaluating overdiagnosis in mental health: A meta-research review[J]. Psychotherapy and Psychosomatics, 2019, 88(4): 193–202. doi: 10.1159/000501647.

[9] QU Wei and GU Shanshan. New progress in treatment of depression[J]. Journal of Third Military Medical University, 2014, 36(11): 1113–1117. doi: 10.16016/j.1000-5404.2014.11.022.

[10] ZHAO Jian, ZHOU Liyun, WU Mengqing, et al. AI-based assisted diagnostic methods for depression[J]. Journal of Northwest University: Natural Science Edition, 2023, 53(3): 325–335. doi: 10.16152/j.cnki.xdxbzr.2023-03-002.

[11] GUO Weitong. Research on deep learning-based depression recognition from facial expression and speech[D]. [Ph.D. dissertation], Lanzhou University, 2022. doi: 10.27204/d.cnki.glzhu.2022.003611.

[12] CHEN Kunlin, HU Defeng, and CHEN Nannan. Research on depression identification based on facial expression analysis[J]. Computer Era, 2023(10): 70–74. doi: 10.16644/j.cnki.cn33-1094/tp.2023.10.015.

[13] MORTENSEN C D. Communication Theory[M]. 2nd ed. New York: Routledge, 2017: 193–200.

[14] PAMPOUCHIDOU A, SIMOS P G, MARIAS K, et al. Automatic assessment of depression based on visual cues: A systematic review[J]. IEEE Transactions on Affective Computing, 2019, 10(4): 445–470. doi: 10.1109/taffc.2017.2724035.

[15] GIRARD J M, COHN J F, MAHOOR M H, et al. Social risk and depression: Evidence from manual and automatic facial expression analysis[C]. The 2013 10th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition, Shanghai, China, 2013: 1–8. doi: 10.1109/FG.2013.6553748.

[16] GIRARD J M, COHN J F, MAHOOR M H, et al. Nonverbal social withdrawal in depression: Evidence from manual and automatic analyses[J]. Image and Vision Computing, 2014, 32(10): 641–647. doi: 10.1016/j.imavis.2013.12.007.

[17] GUR R C, ERWIN R J, GUR R E, et al. Facial emotion discrimination: II. Behavioral findings in depression[J]. Psychiatry Research, 1992, 42(3): 241–251. doi: 10.1016/0165-1781(92)90116-K.

[18] ZHU Yu, SHANG Yuanyuan, SHAO Zhuhong, et al. Automated depression diagnosis based on deep networks to encode facial appearance and dynamics[J]. IEEE Transactions on Affective Computing, 2018, 9(4): 578–584. doi: 10.1109/TAFFC.2017.2650899.

[19] UDDIN M A, JOOLEE J B, and LEE Y K. Depression level prediction using deep spatiotemporal features and multilayer Bi-LTSM[J]. IEEE Transactions on Affective Computing, 2022, 13(2): 864–870. doi: 10.1109/TAFFC.2020.2970418.

[20] DE MELO W C, GRANGER E, and LOPEZ M B. Encoding temporal information for automatic depression recognition from facial analysis[C]. ICASSP 2020 - 2020 IEEE International Conference on Acoustics, Speech and Signal Processing, Barcelona, Spain, 2020: 1080–1084. doi: 10.1109/ICASSP40776.2020.9054375.

[21] CHEN Qian, CHATURVEDI I, JI Shaoxiong, et al. Sequential fusion of facial appearance and dynamics for depression recognition[J]. Pattern Recognition Letters, 2021, 150: 115–121. doi: 10.1016/j.patrec.2021.07.005.

[22] HE Lang. Automatic depression estimation using 3D-CNN and STA-ConvLSTM from videos[J]. Journal of Capital Normal University: Natural Sciences Edition, 2021, 42(2): 17–25. doi: 10.19789/j.1004-9398.2021.02.004.

[23] NIU Mingyue, HE Lang, LI Ya, et al. Depressioner: Facial dynamic representation for automatic depression level prediction[J]. Expert Systems with Applications, 2022, 204: 117512. doi: 10.1016/j.eswa.2022.117512.

[24] DE MELO W C, GRANGER E, and HADID A. A deep multiscale spatiotemporal network for assessing depression from facial dynamics[J]. IEEE Transactions on Affective Computing, 2022, 13(3): 1581–1592. doi: 10.1109/TAFFC.2020.3021755.

[25] DE MELO W C, GRANGER E, and LÓPEZ M. MDN: A deep maximization-differentiation network for spatio-temporal depression detection[J]. IEEE Transactions on Affective Computing, 2023, 14(1): 578–590. doi: 10.1109/TAFFC.2021.3072579.

[26] PAN Yuchen, SHANG Yuanyuan, LIU Tie, et al. Spatial-temporal attention network for depression recognition from facial videos[J]. Expert Systems with Applications, 2024, 237: 121410. doi: 10.1016/j.eswa.2023.121410.

[27] AN Yi, QU Zhen, XU Ning, et al. Automatic depression estimation using facial appearance[J]. Journal of Image and Graphics, 2020, 25(11): 2415–2427. doi: 10.11834/jig.200322.

[28] ZHOU Xiuzhang, JIN Kai, SHANG Yuanyuan, et al. Visually interpretable representation learning for depression recognition from facial images[J]. IEEE Transactions on Affective Computing, 2020, 11(3): 542–552. doi: 10.1109/TAFFC.2018.2828819.

[29] DE MELO W C, GRANGER E, and HADID A. Combining global and local convolutional 3D networks for detecting depression from facial expressions[C]. The 2019 14th IEEE International Conference on Automatic Face & Gesture Recognition, Lille, France, 2019: 1–8. doi: 10.1109/FG.2019.8756568.

[30] AL JAZAERY M and GUO Guodong. Video-based depression level analysis by encoding deep spatiotemporal features[J]. IEEE Transactions on Affective Computing, 2021, 12(1): 262–268. doi: 10.1109/TAFFC.2018.2870884.

[31] HE Lang, CHAN J C W, and WANG Zhongmin. Automatic depression recognition using CNN with attention mechanism from videos[J]. Neurocomputing, 2021, 422: 165–175. doi: 10.1016/j.neucom.2020.10.015.

[32] NIU Mingyue, TAO Jianhua, and LIU Bin. Multi-scale and multi-region facial discriminative representation for automatic depression level prediction[C]. ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing, Toronto, Canada, 2021: 1325–1329. doi: 10.1109/ICASSP39728.2021.9413504.

[33] ACHARYA R and DASH S P. Automatic depression detection based on merged convolutional neural networks using facial features[C]. The 2022 IEEE International Conference on Signal Processing and Communications, Bangalore, India, 2022: 1–5. doi: 10.1109/SPCOM55316.2022.9840812.

[34] SUN Haohao, SHAO Zhuhong, SHANG Yuanyuan, et al. Channel-wise attention mechanism-relevant multi-branches convolutional network-based depressive disorder recognition[J]. Journal of Image and Graphics, 2022, 27(11): 3292–3302. doi: 10.11834/jig.210397.

[35] SHANG Yuanyuan, PAN Yuchen, JIANG Xiao, et al. LQGDNet: A local quaternion and global deep network for facial depression recognition[J]. IEEE Transactions on Affective Computing, 2023, 14(3): 2557–2563. doi: 10.1109/TAFFC.2021.3139651.

[36] JIANG Xiao, SHAO Zhuhong, SHANG Yuanyuan, et al. Depression recognition based on cascaded deep neural networks[J]. Computer Applications and Software, 2019, 36(10): 117–122, 150. doi: 10.3969/j.issn.1000-386x.2019.10.021.

[37] SANCHEZ A, VAZQUEZ C, MARKER C, et al. Attentional disengagement predicts stress recovery in depression: An eye-tracking study[J]. Journal of Abnormal Psychology, 2013, 122(2): 303–313. doi: 10.1037/a0031529.

[38] EISENBARTH H and ALPERS G W. Happy mouth and sad eyes: Scanning emotional facial expressions[J]. Emotion, 2011, 11(4): 860–865. doi: 10.1037/a0022758.

[39] SCHWARTZ G E, FAIR P L, SALT P, et al. Facial muscle patterning to affective imagery in depressed and nondepressed subjects[J]. Science, 1976, 192(4238): 489–491. doi: 10.1126/science.1257786.

[40] VALSTAR M, SCHULLER B, SMITH K, et al. AVEC 2013: The continuous audio/visual emotion and depression recognition challenge[C]. The 3rd ACM International Workshop on Audio/Visual Emotion Challenge, Barcelona, Spain, 2013: 3–10. doi: 10.1145/2512530.2512533.

[41] VALSTAR M, SCHULLER B, SMITH K, et al. AVEC 2014: 3D dimensional affect and depression recognition challenge[C]. Proceedings of the 4th International Workshop on Audio/Visual Emotion Challenge, Orlando, USA, 2014: 3–10. doi: 10.1145/2661806.2661807.

[42] SELVARAJU R R, COGSWELL M, DAS A, et al. Grad-CAM: Visual explanations from deep networks via gradient-based localization[C]. The 2017 IEEE International Conference on Computer Vision, Venice, Italy, 2017: 618–626. doi: 10.1109/ICCV.2017.74.

[43] LUO Wenjie, LI Yujia, URTASUN R, et al. Understanding the effective receptive field in deep convolutional neural networks[C]. The 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 2016: 4905–4913.

[44] BORJI A and ITTI L. State-of-the-art in visual attention modeling[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2013, 35(1): 185–207. doi: 10.1109/TPAMI.2012.89.

[45] BALTRUSAITIS T, ZADEH A, LIM Y C, et al. OpenFace 2.0: Facial behavior analysis toolkit[C]. 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition, Xi’an, China, 2018: 59–66. doi: 10.1109/FG.2018.00019.

[46] ZHANG Kaipeng, ZHANG Zhanpeng, LI Zhifeng, et al. Joint face detection and alignment using multitask cascaded convolutional networks[J]. IEEE Signal Processing Letters, 2016, 23(10): 1499–1503. doi: 10.1109/LSP.2016.2603342.

[47] WANG Fei, JIANG Mengqing, QIAN Chen, et al. Residual attention network for image classification[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 6450–6458. doi: 10.1109/CVPR.2017.683.
