Bimodal Emotion Recognition With Adaptive Integration of Multi-level Spatial-Temporal Features and Specific-Shared Feature Fusion


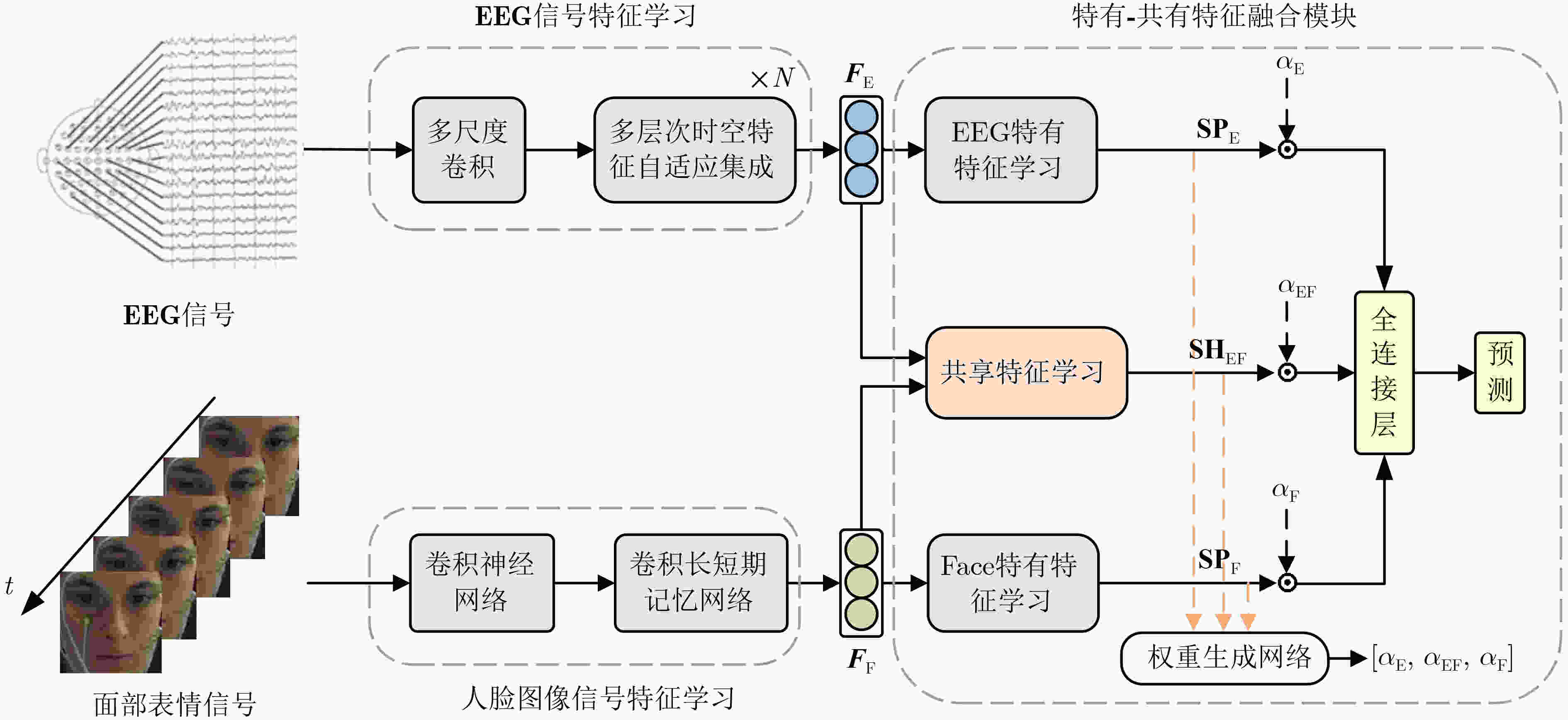

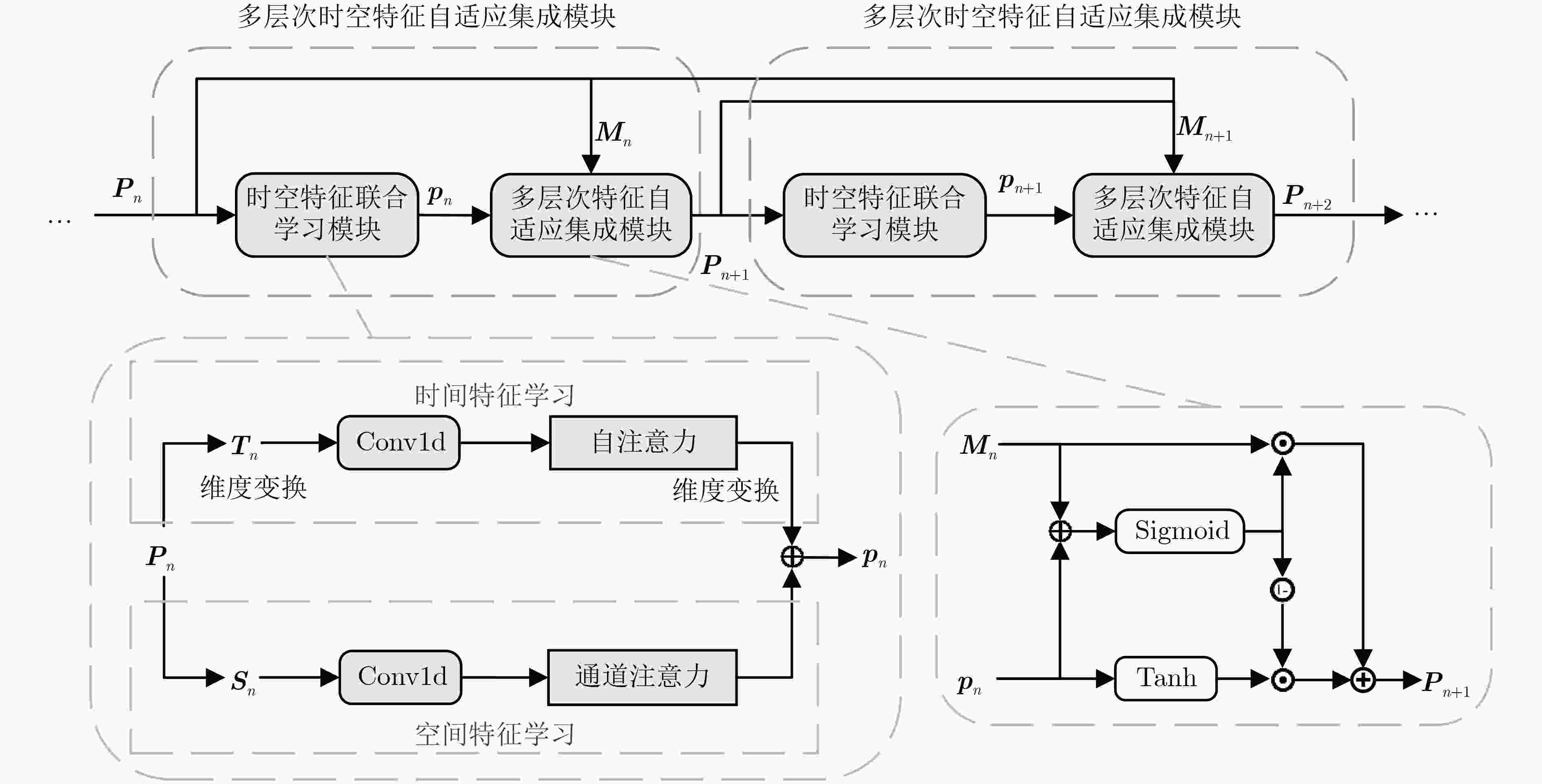

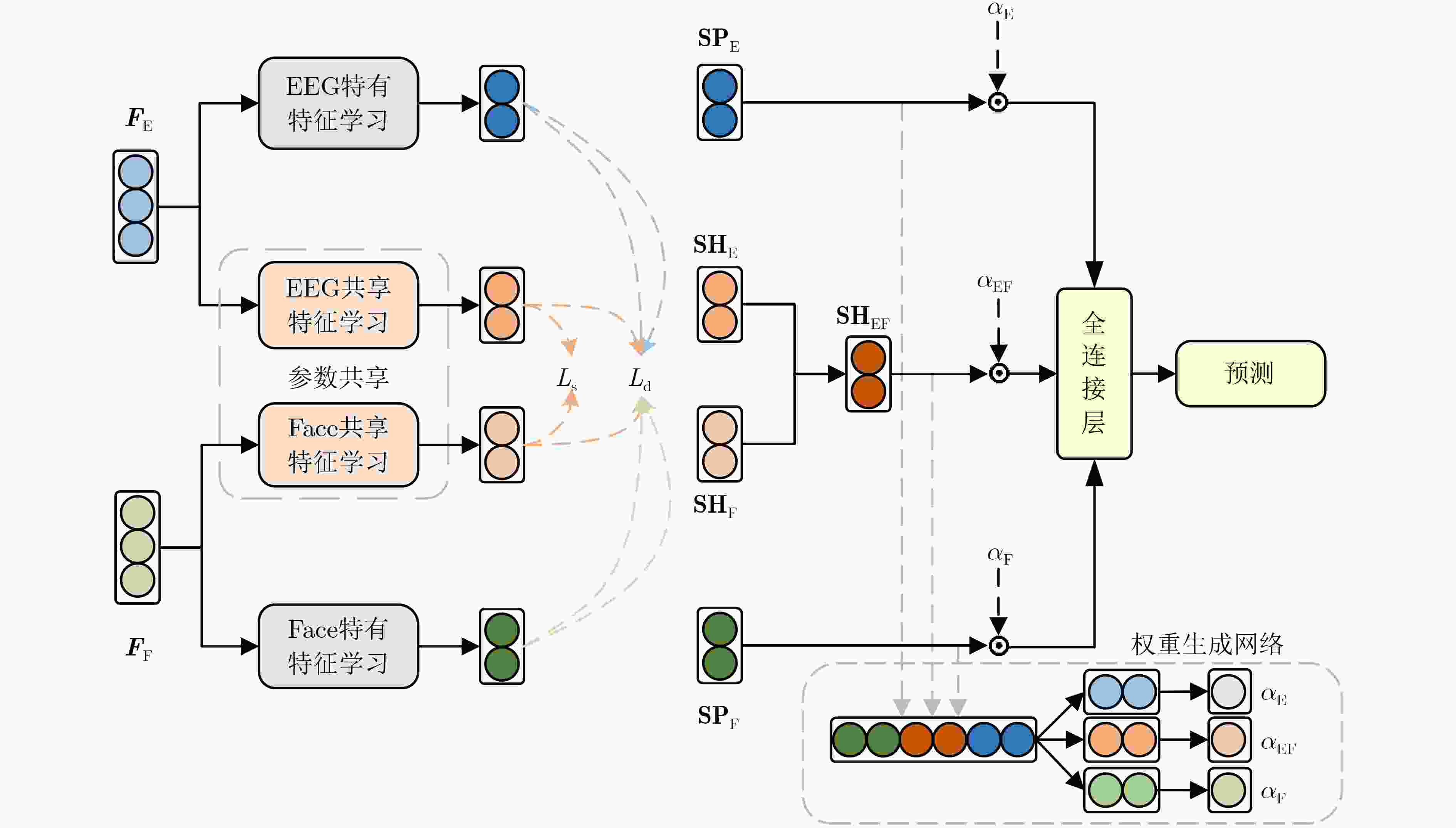

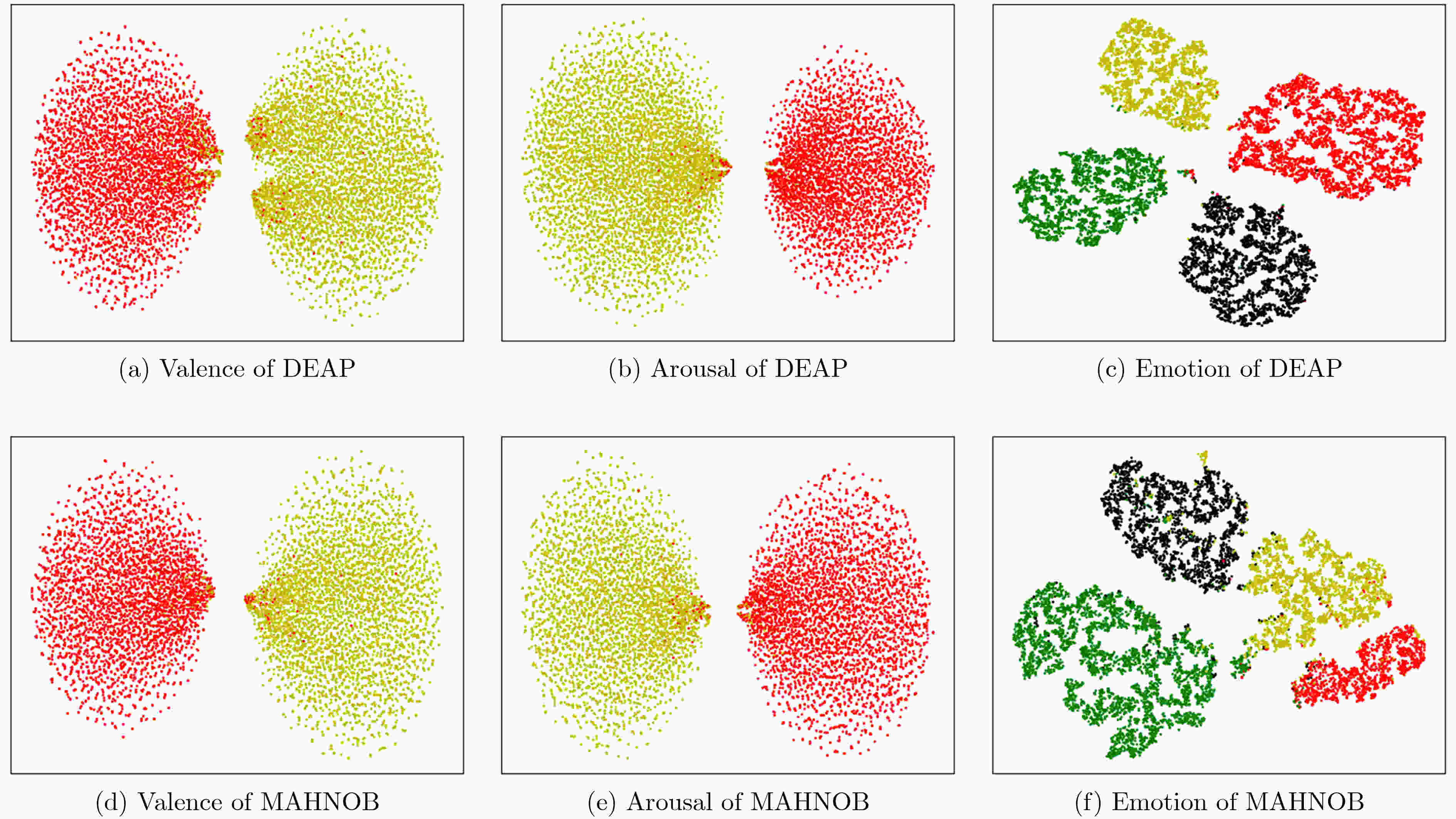

Abstract: Bimodal emotion recognition that combines electroencephalogram (EEG) signals with facial images faces two main challenges: (1) how to learn more salient emotional semantic features from EEG signals in an end-to-end manner; and (2) how to integrate the two modalities effectively so as to capture both the consistency and the complementarity of emotional semantics across the bimodal features. To address them, this paper proposes a bimodal emotion recognition model based on the adaptive integration of multi-level spatial-temporal features and the fusion of specific-shared features. First, to obtain more salient emotional semantic features from EEG signals, a module for the adaptive integration of multi-level spatial-temporal features is designed. It captures the spatial-temporal features of EEG signals with a dual-stream structure, integrates the features from each level using weights derived from feature similarity, and then uses a gating mechanism to adaptively learn the relatively important emotional features at each level. Second, to exploit the consistency and complementarity of emotional semantics between EEG signals and facial images, a Specific-Shared Feature Fusion (SSFF) module is designed. It learns emotional semantic features jointly through two branches, specific-feature learning and shared-feature learning, and is combined with loss functions so that the semantic information specific to each modality and the semantic information shared between the modalities are extracted automatically. The model is evaluated on the DEAP and MAHNOB-HCI datasets under two protocols, cross-experimental verification and 5-fold cross-validation. The results show that the model is competitive with existing methods; on DEAP under 5-fold cross-validation, for example, it reaches 98.21% accuracy for valence and 98.59% for arousal (Table 3). The model thus provides an effective solution for bimodal emotion recognition based on EEG signals and facial images.
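The abstract describes the multi-level integration step only at a high level (similarity-derived weights followed by a gate). The sketch below illustrates one way such a step could look. It is not the authors' implementation: the use of cosine similarity, a softmax over the similarities, and a single scalar sigmoid gate are all assumptions, and the names `integrate_levels`, `gate_weights`, and `gate_bias` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-12)


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def integrate_levels(current, previous_levels, gate_weights, gate_bias):
    """Fuse the current-level feature with earlier levels (assumed scheme).

    1. Weight each earlier level by its similarity to the current level.
    2. Sum the earlier levels with those weights.
    3. Mix the current feature and the weighted sum through a scalar gate.
    """
    sims = [cosine(current, p) for p in previous_levels]
    weights = softmax(sims)  # assumption: softmax normalisation
    dim = len(current)
    integrated = [
        sum(w * p[i] for w, p in zip(weights, previous_levels))
        for i in range(dim)
    ]
    # Assumption: one scalar gate computed from [current; integrated].
    z = sum(g * x for g, x in zip(gate_weights, current + integrated)) + gate_bias
    gate = 1.0 / (1.0 + math.exp(-z))
    fused = [gate * c + (1.0 - gate) * h for c, h in zip(current, integrated)]
    return fused, weights, gate
```

With zero gate parameters the gate is exactly 0.5, so the output is the plain average of the current feature and the similarity-weighted sum. In a trained model the gate parameters would be learned, and the gate could equally be a vector (one value per feature dimension) rather than a scalar.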


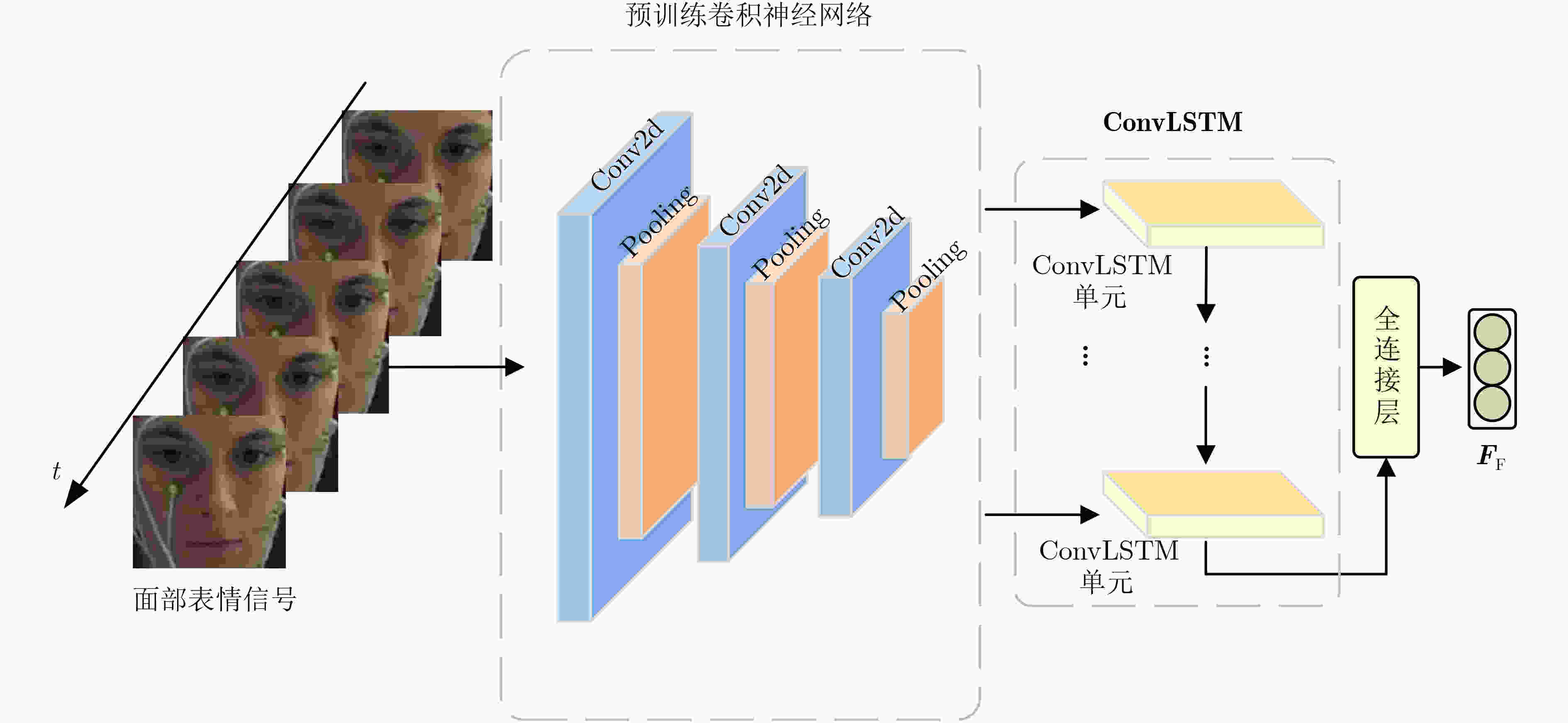

Figure 3. Structure of the method of Zhang et al. [14]

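Table 1. Detailed structural parameters of the pretrained CNN used by Zhang et al. [14]

| Layer | Parameters | Stride | Activation | Output size |
| --- | --- | --- | --- | --- |
| Input | - | - | - | $3 \times 64 \times 64$ |
| Convolution 1 | $32@3 \times 3$ | 1 | ReLU | $32 \times 62 \times 62$ |
| Max pooling 1 | $3 \times 3$ | 2 | - | $32 \times 30 \times 30$ |
| Convolution 2 | $64@3 \times 3$ | 1 | ReLU | $64 \times 28 \times 28$ |
| Max pooling 2 | $3 \times 3$ | 2 | - | $64 \times 13 \times 13$ |
| Convolution 3 | $128@3 \times 3$ | 1 | ReLU | $128 \times 13 \times 13$ |
| Max pooling 3 | $3 \times 3$ | 2 | - | $128 \times 6 \times 6$ |

Table 2. Detailed structural parameters of the proposed model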

| Model component | Module | Input feature dimension | Output feature dimension |
| --- | --- | --- | --- |
| EEG feature learning | EEG input | - | $32 \times 128$ |
| | Multi-scale convolution | $32 \times 128$ | $32 \times 136$ |
| | [Joint spatial-temporal feature learning | $32 \times 136$ | $32 \times 136$ |
| | Adaptive integration of multi-level features] $\times 6$ | $32 \times 136$, $32 \times 136$ | $32 \times 136$ |
| | Flatten | $32 \times 136$ | $1 \times 4352$ |
| | Fully connected layer | $1 \times 4352$ | $1 \times 128$ |
| Face feature learning | Face input | - | $5 \times 3 \times 64 \times 64$ |
| | Pretrained CNN | $3 \times 64 \times 64$ | $32 \times 30 \times 30$ |
| | | $32 \times 30 \times 30$ | $64 \times 13 \times 13$ |
| | | $64 \times 13 \times 13$ | $128 \times 6 \times 6$ |
| | ConvLSTM | $5 \times 128 \times 6 \times 6$ | $128 \times 6 \times 6$ |
| | Flatten | $128 \times 6 \times 6$ | $1 \times 4608$ |
| | Fully connected layer | $1 \times 4608$ | $1 \times 128$ |
| SSFF | EEG specific-feature learning | $1 \times 128$ | $1 \times 64$ |
| | | $1 \times 64$ | $1 \times 64$ |
| | | $1 \times 64$ | $1 \times 32$ |
| | Face specific-feature learning | $1 \times 128$ | $1 \times 64$ |
| | | $1 \times 64$ | $1 \times 64$ |
| | | $1 \times 64$ | $1 \times 32$ |
| | EEG shared-feature learning | $1 \times 128$ | $1 \times 64$ |
| | | $1 \times 64$ | $1 \times 64$ |
| | | $1 \times 64$ | $1 \times 32$ |
| | Face shared-feature learning | $1 \times 128$ | $1 \times 64$ |
| | | $1 \times 64$ | $1 \times 64$ |
| | | $1 \times 64$ | $1 \times 32$ |
| | Fully connected layer | $1 \times 32$, $1 \times 32$ | $1 \times 32$ |
| | Weight generation network | $1 \times 32$, $1 \times 32$, $1 \times 32$ | $1 \times 32$ |
| | | $1 \times 32$ | $1 \times 1$ |
| | | $1 \times 32$ | $1 \times 1$ |
| | | $1 \times 32$ | $1 \times 1$ |
| | Fully connected layer, dropout layer | $1 \times 32$, $1 \times 32$, $1 \times 32$ | $1 \times 48$ |
| | Fully connected layer, dropout layer | $1 \times 48$ | $1 \times 48$ |
| | Fully connected layer | $1 \times 48$ | $1 \times 2$ or $1 \times 4$ |

Note: the two bracketed modules form one block that is stacked 6 times.

Table 3. Performance comparison between the proposed model and other methods

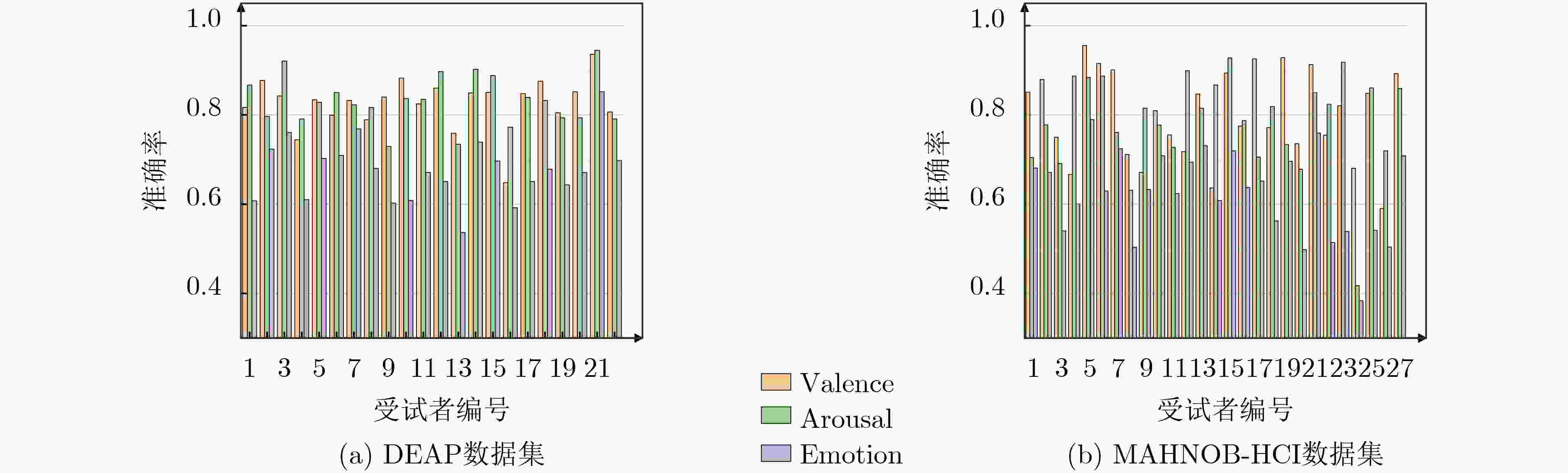

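| Dataset | Validation method | Authors | Modalities | Valence accuracy (%) | Arousal accuracy (%) | Emotion accuracy (%) |
| --- | --- | --- | --- | --- | --- | --- |
| DEAP | Cross-experimental verification | Huang et al. [39] | EEG, Face | 80.30 | 74.23 | - |
| DEAP | Cross-experimental verification | Zhu et al. [40] | EEG, Face | 72.20 | 78.84 | - |
| DEAP | Cross-experimental verification | Li et al. [41] | EEG, Face | 71.00 | 58.75 | - |
| DEAP | Cross-experimental verification | Zhang et al. [14] | EEG, Face | 77.63* | 79.21* | 60.63* |
| DEAP | Cross-experimental verification | Proposed method | EEG, Face | 82.60 | 83.09 | 67.50 |
| DEAP | 5-fold cross-validation | Zhang et al. [42] | EEG, PPS | 90.04 | 93.22 | - |
| DEAP | 5-fold cross-validation | Chen et al. [43] | EEG, PPS | 93.19 | 91.82 | - |
| DEAP | 5-fold cross-validation | Wu et al. [44] | EEG, Face | 95.30 | 94.94 | - |
| DEAP | 5-fold cross-validation | Wang et al. [45] | EEG, Face | 96.63 | 97.15 | - |
| DEAP | 5-fold cross-validation | Zhang et al. [14] | EEG, Face, PPS | 95.56 | 95.33 | - |
| DEAP | 5-fold cross-validation | Proposed method | EEG, Face | 98.21 | 98.59 | 90.56 |
| MAHNOB-HCI | Cross-experimental verification | Huang et al. [39] | EEG, Face | 75.63 | 74.17 | - |
| MAHNOB-HCI | Cross-experimental verification | Li et al. [41] | EEG, Face | 70.04 | 72.14 | - |
| MAHNOB-HCI | Cross-experimental verification | Zhang et al. [14] | EEG, Face | 73.55* | 74.34* | 54.21* |
| MAHNOB-HCI | Cross-experimental verification | Proposed method | EEG, Face | 79.99 | 78.60 | 62.42 |
| MAHNOB-HCI | 5-fold cross-validation | Salama et al. [12] | EEG, Face | 96.13 | 96.79 | - |
| MAHNOB-HCI | 5-fold cross-validation | Zhang et al. [14] | EEG, Face, PPS | 96.82 | 97.79 | - |
| MAHNOB-HCI | 5-fold cross-validation | Chen et al. [43] | EEG, PPS | 89.96 | 90.37 | - |
| MAHNOB-HCI | 5-fold cross-validation | Wang et al. [45] | EEG, Face | 96.69 | 96.26 | - |
| MAHNOB-HCI | 5-fold cross-validation | Proposed method | EEG, Face | 97.02 | 97.36 | 88.77 |

Note: PPS = Peripheral Physiological Signal.

Table 4. Accuracy (%) of the model on the DEAP dataset under different modality configurations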

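| Modality | Valence | Arousal | Emotion |
| --- | --- | --- | --- |
| EEG | 80.77 | 80.44 | 64.43 |
| Face | 79.19 | 79.02 | 63.22 |
| EEG+Face | 82.60 | 83.09 | 67.50 |

Table 5. Ablation results (%) for the SSFF module on the DEAP dataset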

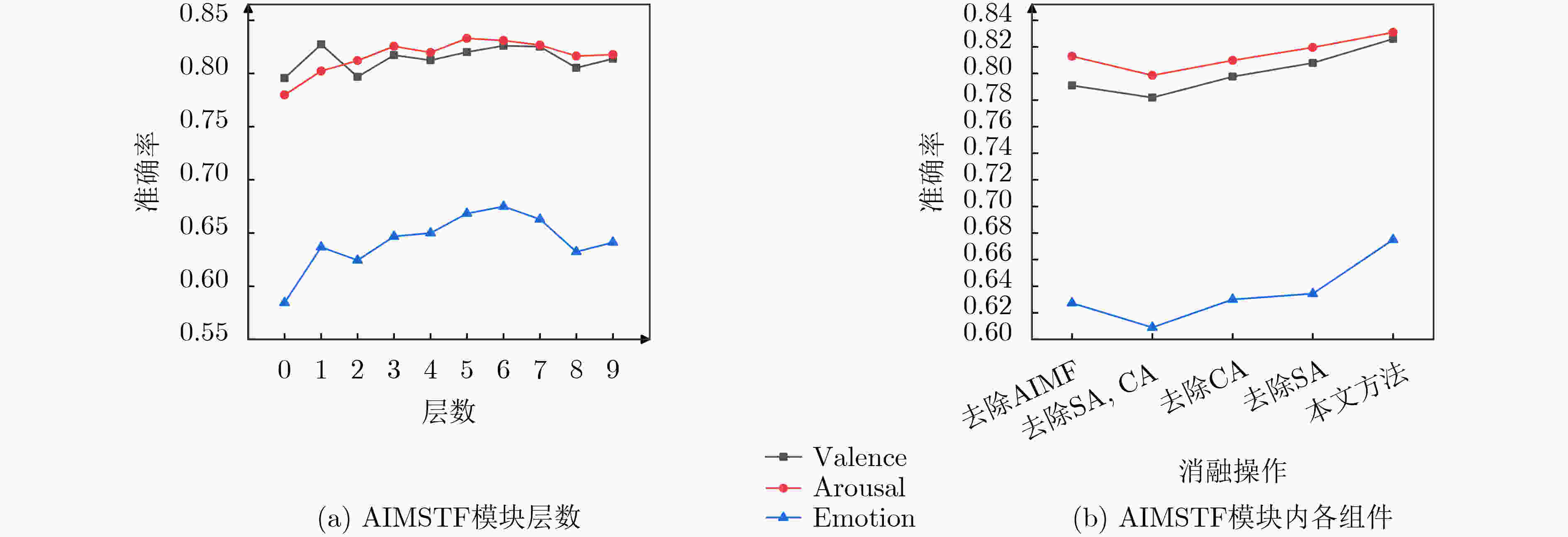

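| Ablation | Valence | Arousal | Emotion |
| --- | --- | --- | --- |
| Without SSFF | 77.81 | 79.68 | 59.78 |
| Without the shared-feature learning branch | 80.35 | 80.93 | 62.13 |
| Without the specific-feature learning branch | 81.52 | 81.22 | 64.46 |
| Without the weight generation network | 81.79 | 82.37 | 66.25 |
| Proposed method | 82.60 | 83.09 | 67.50 |

Table 6. Ablation results (%) for the loss functions used in model training on the DEAP dataset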

| Ablation | Valence | Arousal | Emotion |
| --- | --- | --- | --- |
| Without $L_{\text{d}}$ | 81.68 | 82.20 | 64.07 |
| Without $L_{\text{s}}$ | 82.01 | 82.43 | 64.53 |
| Without $L_{\text{s}}$ and $L_{\text{d}}$ | 81.23 | 81.71 | 63.12 |
| Proposed method | 82.60 | 83.09 | 67.50 |
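The output sizes listed in Tables 1 and 2 can be checked with the standard output-size formula for convolution and pooling layers, floor((n + 2p - k) / s) + 1. The sketch below is only a consistency check under stated assumptions: the tables do not give padding values, so zero padding is assumed everywhere except convolution 3, where a padding of 1 is assumed because Table 1 keeps the size at 13 × 13. The names `out_size`, `LAYERS`, and `trace_shapes` are hypothetical.

```python
import math


def out_size(n, kernel, stride=1, padding=0):
    """Output length along one spatial axis of a conv/pool layer (floor mode)."""
    return (n + 2 * padding - kernel) // stride + 1


# (name, output channels, kernel, stride, padding)
# Padding values are assumptions; Table 1 does not list them.
LAYERS = [
    ("conv1", 32, 3, 1, 0),
    ("pool1", 32, 3, 2, 0),
    ("conv2", 64, 3, 1, 0),
    ("pool2", 64, 3, 2, 0),
    ("conv3", 128, 3, 1, 1),  # padding 1 assumed so that 13x13 is preserved
    ("pool3", 128, 3, 2, 0),
]


def trace_shapes(size=64):
    """Propagate a square input of side `size` through LAYERS."""
    shapes = []
    for name, channels, kernel, stride, padding in LAYERS:
        size = out_size(size, kernel, stride, padding)
        shapes.append((name, channels, size, size))
    return shapes


if __name__ == "__main__":
    for name, c, h, w in trace_shapes():
        print(f"{name}: {c} x {h} x {w}")
    # Flatten sizes from Table 2
    print("EEG flatten:", math.prod((32, 136)))
    print("Face flatten:", math.prod((128, 6, 6)))
```

Under these assumptions the trace reproduces every output size in Table 1, and the two flatten lengths match the 1 × 4352 and 1 × 4608 entries in Table 2.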

[1] LI Wei, HUAN Wei, HOU Bowen, et al. Can emotion be transferred? A review on transfer learning for EEG-based emotion recognition[J]. IEEE Transactions on Cognitive and Developmental Systems, 2022, 14(3): 833–846. doi: 10.1109/TCDS.2021.3098842.

[2] WEI Wei. Research on modeling approaches of emotion recognition based on weighted fusion strategy[D]. [Ph.D. dissertation], Beijing University of Posts and Telecommunications, 2019.

[3] ZHANG Yidie. Research on deep learning emotion recognition method based on attention mechanism[D]. [Master dissertation], Liaoning Normal University, 2022. doi: 10.27212/d.cnki.glnsu.2022.000484.

[4] YAO Hongxun, DENG Weihong, LIU Honghai, et al. An overview of research development of affective computing and understanding[J]. Journal of Image and Graphics, 2022, 27(6): 2008–2035. doi: 10.11834/jig.220085.

[5] GONG Shu, XING Kaibo, CICHOCKI A, et al. Deep learning in EEG: Advance of the last ten-year critical period[J]. IEEE Transactions on Cognitive and Developmental Systems, 2022, 14(2): 348–365. doi: 10.1109/TCDS.2021.3079712.

[6] LIU Changyuan, LI Wenqiang, and BI Xiaojun. Research on EEG emotion recognition based on RCNN-LSTM[J]. Acta Automatica Sinica, 2022, 48(3): 917–925. doi: 10.16383/j.aas.c190357.

[7] DU Xiaobing, MA Cuixia, ZHANG Guanhua, et al. An efficient LSTM network for emotion recognition from multichannel EEG signals[J]. IEEE Transactions on Affective Computing, 2022, 13(3): 1528–1540. doi: 10.1109/TAFFC.2020.3013711.

[8] HOU Fazheng, LIU Junjie, BAI Zhongli, et al. EEG-based emotion recognition for hearing impaired and normal individuals with residual feature pyramids network based on time–frequency–spatial features[J]. IEEE Transactions on Instrumentation and Measurement, 2023, 72: 2505011. doi: 10.1109/TIM.2023.3240230.

[9] LIU Jiamin, SU Yuanqi, WEI Ping, et al. Video-EEG based collaborative emotion recognition using LSTM and information-attention[J]. Acta Automatica Sinica, 2020, 46(10): 2137–2147. doi: 10.16383/j.aas.c180107.

[10] WANG Mei, HUANG Ziyang, LI Yuancheng, et al. Maximum weight multi-modal information fusion algorithm of electroencephalographs and face images for emotion recognition[J]. Computers & Electrical Engineering, 2021, 94: 107319. doi: 10.1016/j.compeleceng.2021.107319.

[11] NGAI W K, XIE H R, ZOU D, et al. Emotion recognition based on convolutional neural networks and heterogeneous bio-signal data sources[J]. Information Fusion, 2022, 77: 107–117. doi: 10.1016/j.inffus.2021.07.007.

[12] SALAMA E S, EL-KHORIBI R A, SHOMAN M E, et al. A 3D-convolutional neural network framework with ensemble learning techniques for multi-modal emotion recognition[J]. Egyptian Informatics Journal, 2021, 22(2): 167–176. doi: 10.1016/j.eij.2020.07.005.

[13] YANG Yang, ZHAN Dechuan, JIANG Yuan, et al. Reliable multi-modal learning: A survey[J]. Journal of Software, 2021, 32(4): 1067–1081. doi: 10.13328/j.cnki.jos.006167.

[14] ZHANG Yuhao, HOSSAIN M Z, and RAHMAN S. DeepVANet: A deep end-to-end network for multi-modal emotion recognition[C]. The 18th IFIP TC 13 International Conference on Human-Computer Interaction, Bari, Italy, 2021: 227–237. doi: 10.1007/978-3-030-85613-7_16.

[15] RAYATDOOST S, RUDRAUF D, and SOLEYMANI M. Multimodal gated information fusion for emotion recognition from EEG signals and facial behaviors[C]. 2020 International Conference on Multimodal Interaction, Utrecht, The Netherlands, 2020: 655–659. doi: 10.1145/3382507.3418867.

[16] FANG Yuchun, RONG Ruru, and HUANG Jun. Hierarchical fusion of visual and physiological signals for emotion recognition[J]. Multidimensional Systems and Signal Processing, 2021, 32(4): 1103–1121. doi: 10.1007/s11045-021-00774-z.

[17] CHOI D Y, KIM D H, and SONG B C. Multimodal attention network for continuous-time emotion recognition using video and EEG signals[J]. IEEE Access, 2020, 8: 203814–203826. doi: 10.1109/ACCESS.2020.3036877.

[18] ZHAO Yifeng and CHEN Deyun. Expression EEG multimodal emotion recognition method based on the bidirectional LSTM and attention mechanism[J]. Computational and Mathematical Methods in Medicine, 2021, 2021: 9967592. doi: 10.1155/2021/9967592.

[19] HE Yu, SUN Licai, LIAN Zheng, et al. Multimodal temporal attention in sentiment analysis[C]. The 3rd International on Multimodal Sentiment Analysis Workshop and Challenge, Lisboa, Portugal, 2022: 61–66. doi: 10.1145/3551876.3554811.

[20] BOUSMALIS K, TRIGEORGIS G, SILBERMAN N, et al. Domain separation networks[C]. The 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 2016: 343–351. doi: 10.5555/3157096.3157135.

[21] LIU Dongjun, DAI Weichen, ZHANG Hangkui, et al. Brain-Machine coupled learning method for facial emotion recognition[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023, 45(9): 10703–10717. doi: 10.1109/TPAMI.2023.3257846.

[22] LI Youjun, HUANG Jiajin, WANG Haiyuan, et al. Study of emotion recognition based on fusion multi-modal bio-signal with SAE and LSTM recurrent neural network[J]. Journal on Communications, 2017, 38(12): 109–120. doi: 10.11959/j.issn.1000-436x.2017294.

[23] YANG Yi, GAO Qiang, SONG Yu, et al. Investigating of deaf emotion cognition pattern by EEG and facial expression combination[J]. IEEE Journal of Biomedical and Health Informatics, 2022, 26(2): 589–599. doi: 10.1109/JBHI.2021.3092412.

[24] WANG Fei, WU Shichao, LIU Shaolin, et al. Driver fatigue detection through deep transfer learning in an electroencephalogram-based system[J]. Journal of Electronics & Information Technology, 2019, 41(9): 2264–2272. doi: 10.11999/JEIT180900.

[25] LI Dahua, LIU Jiayin, YANG Yi, et al. Emotion recognition of subjects with hearing impairment based on fusion of facial expression and EEG topographic map[J]. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 2023, 31: 437–445. doi: 10.1109/TNSRE.2022.3225948.

[26] SIDDHARTH, JUNG T P, and SEJNOWSKI T J. Utilizing deep learning towards multi-modal bio-sensing and vision-based affective computing[J]. IEEE Transactions on Affective Computing, 2022, 13(1): 96–107. doi: 10.1109/TAFFC.2019.2916015.

[27] YANG Jun, MA Zhengmin, SHEN Tao, et al. Multichannel MI-EEG feature decoding based on deep learning[J]. Journal of Electronics & Information Technology, 2021, 43(1): 196–203. doi: 10.11999/JEIT190300.

[28] AN Yi, XU Ning, and QU Zhen. Leveraging spatial-temporal convolutional features for EEG-based emotion recognition[J]. Biomedical Signal Processing and Control, 2021, 69: 102743. doi: 10.1016/j.bspc.2021.102743.

[29] CHEN Jingxia, HAO Wei, ZHANG Pengwei, et al. Emotion classification of spatiotemporal EEG features using hybrid neural networks[J]. Journal of Software, 2021, 32(12): 3869–3883. doi: 10.13328/j.cnki.jos.006123.

[30] COMAS J, ASPANDI D, and BINEFA X. End-to-end facial and physiological model for affective computing and applications[C]. The 15th IEEE International Conference on Automatic Face and Gesture Recognition, Buenos Aires, Argentina, 2020: 93–100. doi: 10.1109/FG47880.2020.00001.

[31] KUMAR A, SHARMA K, and SHARMA A. MEmoR: A multimodal emotion recognition using affective biomarkers for smart prediction of emotional health for people analytics in smart industries[J]. Image and Vision Computing, 2022, 123: 104483. doi: 10.1016/j.imavis.2022.104483.

[32] LI Jia, ZHANG Ziyang, LANG Junjie, et al. Hybrid multimodal feature extraction, mining and fusion for sentiment analysis[C]. The 3rd International on Multimodal Sentiment Analysis Workshop and Challenge, Lisboa, Portugal, 2022: 81–88. doi: 10.1145/3551876.3554809.

[33] DING Yi, ROBINSON N, ZHANG Su, et al. TSception: Capturing temporal dynamics and spatial asymmetry from EEG for emotion recognition[J]. IEEE Transactions on Affective Computing, 2023, 14(3): 2238–2250. doi: 10.1109/TAFFC.2022.3169001.

[34] KULLBACK S and LEIBLER R A. On information and sufficiency[J]. The Annals of Mathematical Statistics, 1951, 22(1): 79–86. doi: 10.1214/aoms/1177729694.

[35] GRETTON A, BORGWARDT K M, RASCH M J, et al. A kernel two-sample test[J]. The Journal of Machine Learning Research, 2012, 13: 723–773. doi: 10.5555/2188385.2188410.

[36] ZELLINGER W, MOSER B A, GRUBINGER T, et al. Robust unsupervised domain adaptation for neural networks via moment alignment[J]. Information Sciences, 2019, 483: 174–191. doi: 10.1016/j.ins.2019.01.025.

[37] KOELSTRA S, MUHL C, SOLEYMANI M, et al. DEAP: A database for emotion analysis using physiological signals[J]. IEEE Transactions on Affective Computing, 2012, 3(1): 18–31. doi: 10.1109/T-AFFC.2011.15.

[38] SOLEYMANI M, LICHTENAUER J, PUN T, et al. A multimodal database for affect recognition and implicit tagging[J]. IEEE Transactions on Affective Computing, 2012, 3(1): 42–55. doi: 10.1109/T-AFFC.2011.25.

[39] HUANG Yongrui, YANG Jianhao, LIU Siyu, et al. Combining facial expressions and electroencephalography to enhance emotion recognition[J]. Future Internet, 2019, 11(5): 105. doi: 10.3390/fi11050105.

[40] ZHU Qingyang, LU Guanming, and YAN Jingjie. Valence-arousal model based emotion recognition using EEG, peripheral physiological signals and facial expression[C]. The 4th International Conference on Machine Learning and Soft Computing, Haiphong City, Vietnam, 2020: 81–85. doi: 10.1145/3380688.3380694.

[41] LI Ruixin, LIANG Tan, LIU Xiaojian, et al. MindLink-Eumpy: An open-source python toolbox for multimodal emotion recognition[J]. Frontiers in Human Neuroscience, 2021, 15: 621493. doi: 10.3389/fnhum.2021.621493.

[42] ZHANG Yong, CHENG Cheng, WANG Shuai, et al. Emotion recognition using heterogeneous convolutional neural networks combined with multimodal factorized bilinear pooling[J]. Biomedical Signal Processing and Control, 2022, 77: 103877. doi: 10.1016/j.bspc.2022.103877.

[43] CHEN Jingxia, LIU Yang, XUE Wen, et al. Multimodal EEG emotion recognition based on the attention recurrent graph convolutional network[J]. Information, 2022, 13(11): 550. doi: 10.3390/info13110550.

[44] WU Yongzhen and LI Jinhua. Multi-modal emotion identification fusing facial expression and EEG[J]. Multimedia Tools and Applications, 2023, 82(7): 10901–10919. doi: 10.1007/s11042-022-13711-4.

[45] WANG Shuai, QU Jingzi, ZHANG Yong, et al. Multimodal emotion recognition from EEG signals and facial expressions[J]. IEEE Access, 2023, 11: 33061–33068. doi: 10.1109/ACCESS.2023.3263670.
