Infrared and Visible Image Fusion Network with Multi-Relation Perception

-

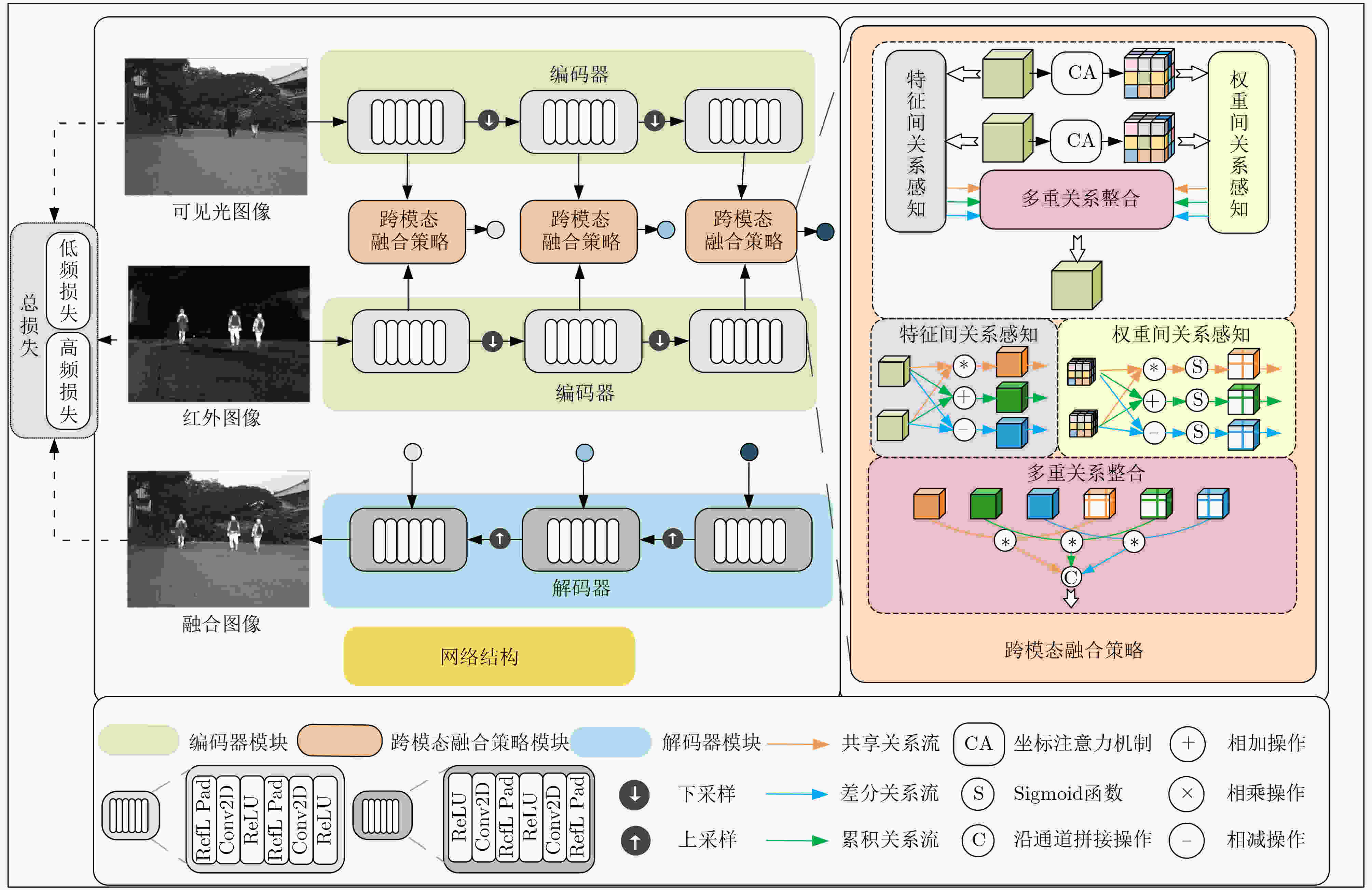

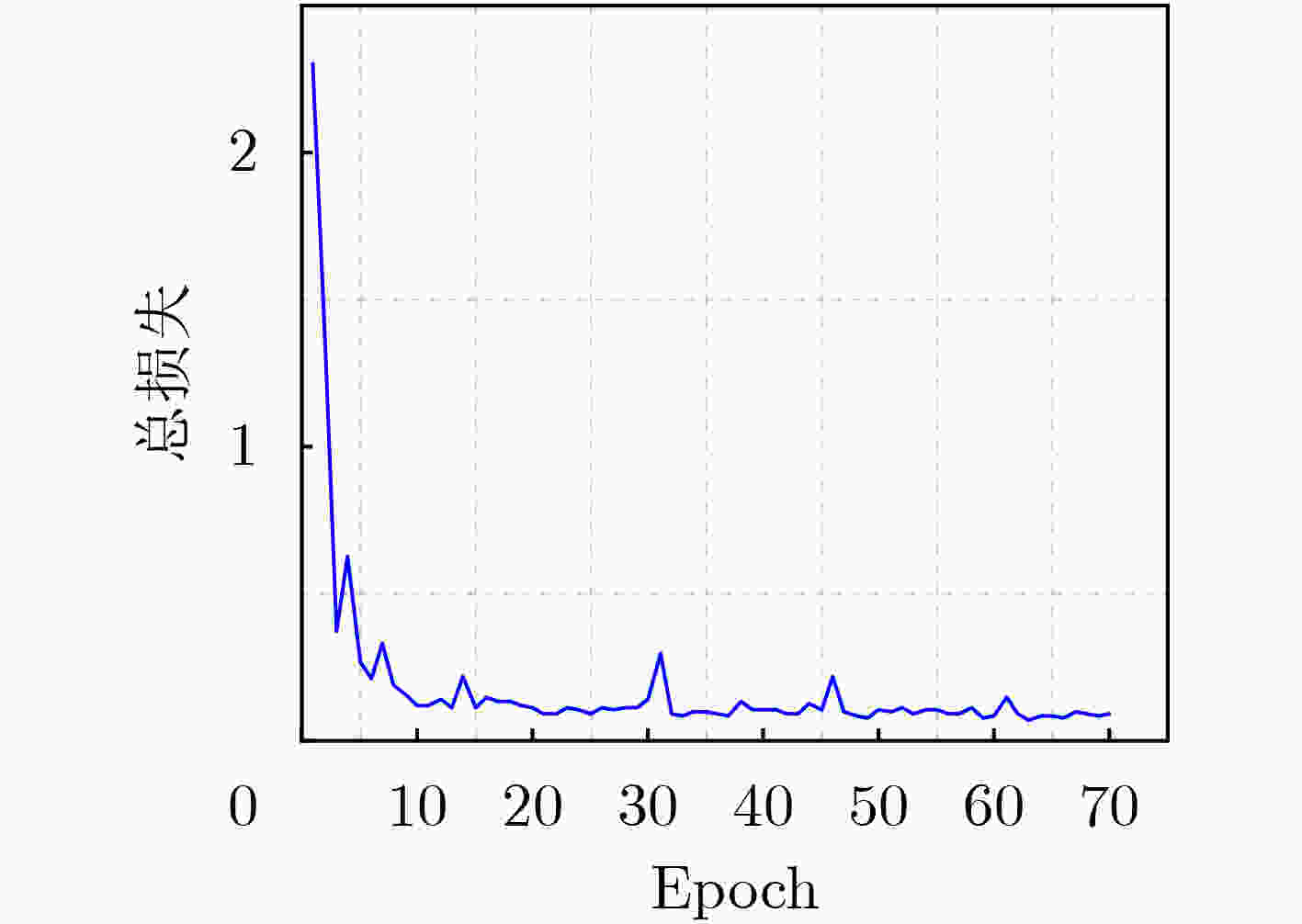

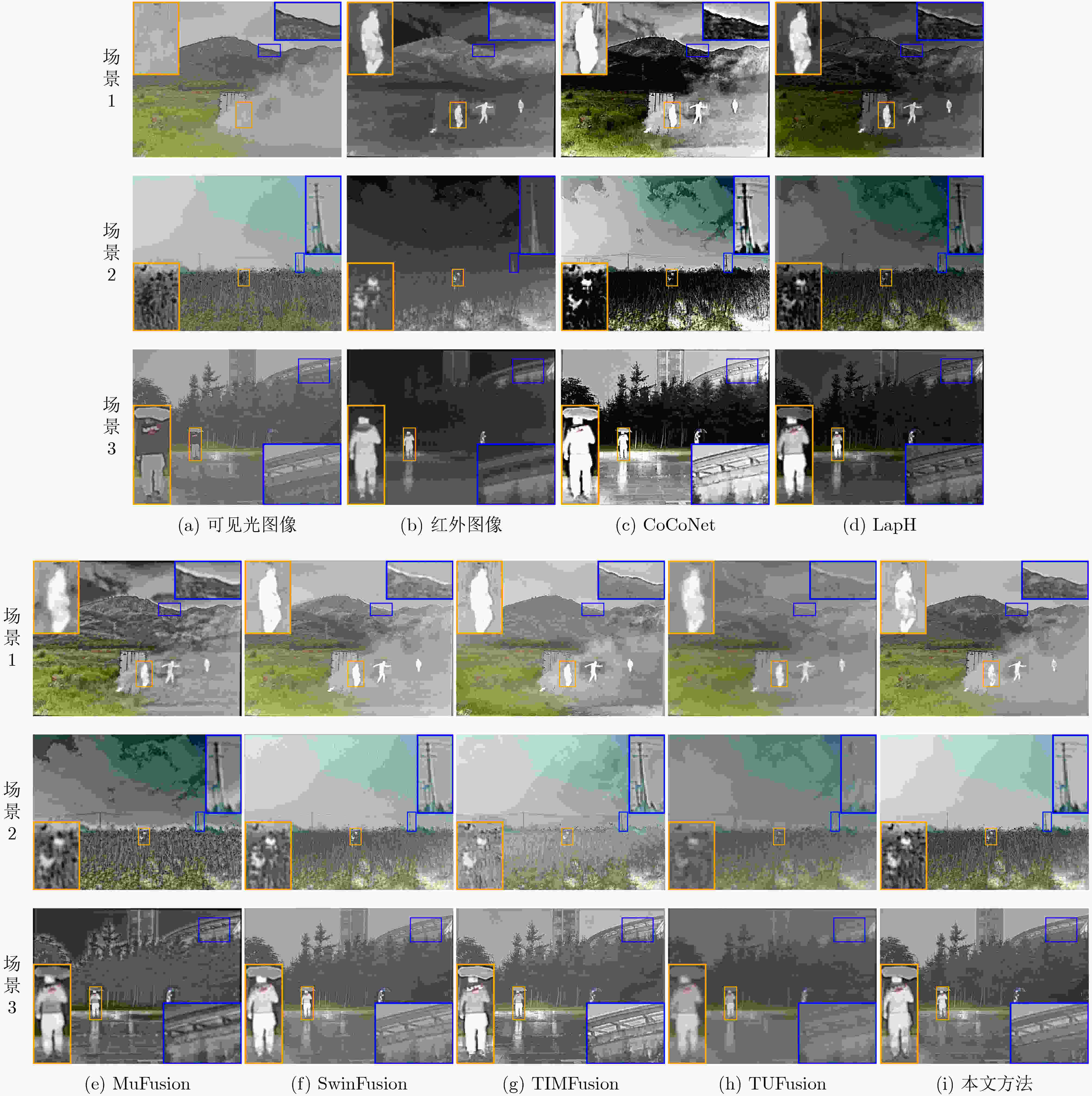

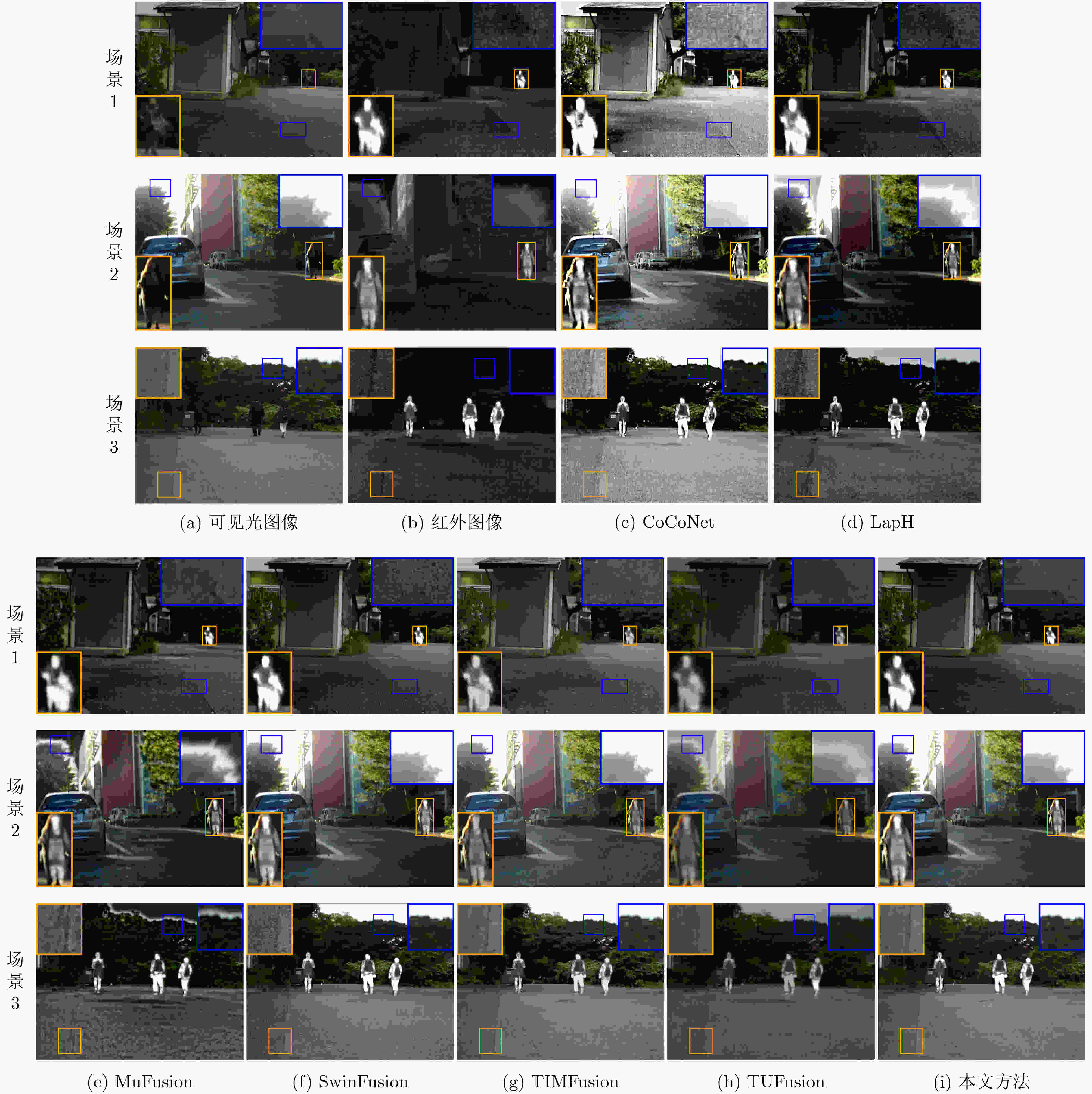

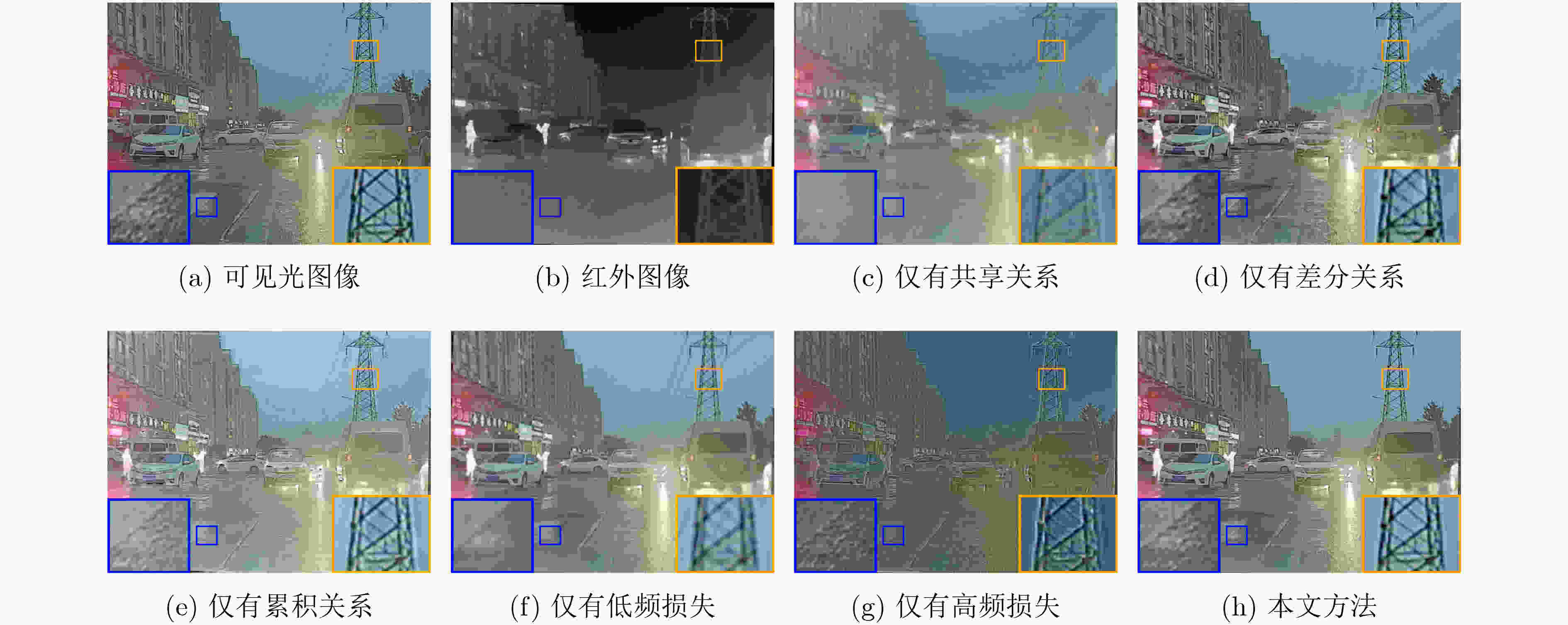

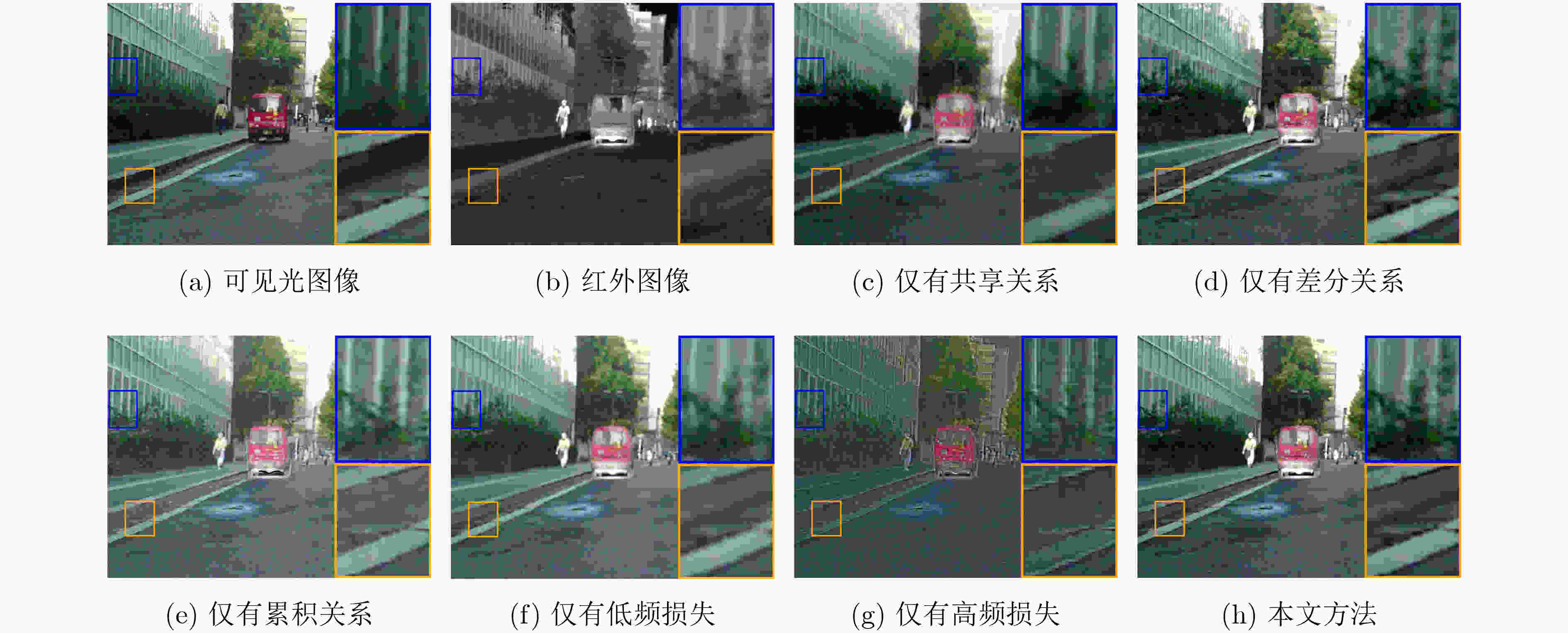

摘要: 为充分整合红外与可见光图像间的一致特征和互补特征,该文提出一种基于多重关系感知的红外与可见光图像融合方法。该方法首先利用双分支编码器提取源图像特征,然后将提取的源图像特征输入设计的基于多重关系感知的跨模态融合策略,最后利用解码器重建融合特征生成最终的融合图像。该融合策略通过构建特征间关系感知和权重间关系感知,利用不同模态间的共享关系、差分关系和累积关系的相互作用,实现源图像一致特征和互补特征的充分整合,以得到融合特征。为约束网络训练以保留源图像的固有特征,设计了一种基于小波变换的损失函数,以辅助融合过程对源图像低频分量和高频分量的保留。实验结果表明,与目前基于深度学习的图像融合方法相比,该文方法能够充分整合源图像的一致特征和互补特征,能够有效保留可见光图像的背景信息和红外图像的热目标,整体融合效果优于对比方法。Abstract: A multi-relation perception network for infrared and visible image fusion is proposed in this paper to fully integrate consistent features and complementary features between infrared and visible images. First, a dual-branch encoder module is used to extract features from the source images. The extracted features are then fed into the fusion strategy module based on multi-relation perception. Finally, a decoder module is used to reconstruct the fused features and generate the final fused image. In this fusion strategy module, the feature relationship perception and the weight relationship perception are constructed by exploring the interactions between the shared relationship, the differential relationship, and the cumulative relationship across different modalities, so as to integrate consistent features and complementary features between different modalities and obtain fused features. To constrain network training and preserve the intrinsic features of the source images, a wavelet transform-based loss function is developed to assist in preserving low-frequency components and high-frequency components of the source images during the fusion process. Experiments indicate that, compared to the state-of-the-art deep learning-based image fusion methods, the proposed method can fully integrate consistent features and complementary features between source images, thereby successfully preserving the background information of visible images and the thermal targets of infrared images. Overall, the fusion performance of the proposed method surpasses that of the compared methods.

-

Key words:

- Image fusion /

- Infrared image /

- Visible image /

- Multi-relation perception /

- Wavelet transform

-

1 跨模态融合策略伪代码

输入:红外图像特征$ {{\boldsymbol{F}}_{{\text{ir}}}} $,可见光图像特征$ {{\boldsymbol{F}}_{{\text{vis}}}} $ 输出:融合特征$ {{\boldsymbol{F}}_{{\text{fuse}}}} $ do (1) 步骤1计算共享特征、差分特征和累积特征: (2) $ {\hat {\boldsymbol{F}}_{\text{s}}} \leftarrow {{\boldsymbol{F}}_{{\text{ir}}}}*{{\boldsymbol{F}}_{{\text{vis}}}} $ (3) $ {\hat {\boldsymbol{F}}_{\text{d}}} \leftarrow {F_{{\text{ir}}}} - {{\boldsymbol{F}}_{{\text{vis}}}} $ (4) $ {\hat {\boldsymbol{F}}_{\text{a}}} \leftarrow {{\boldsymbol{F}}_{{\text{ir}}}} + {{\boldsymbol{F}}_{{\text{vis}}}} $ (5) 步骤2计算基于坐标注意力机制不同模态的加权特征表示: (6) $ {{\boldsymbol{W}}_{{\text{ir}}}} \leftarrow {{\mathrm{CA}}} \left( {{{\boldsymbol{F}}_{{\text{ir}}}}} \right) $ (7) $ {{\boldsymbol{W}}_{{\text{vis}}}} \leftarrow {{\mathrm{CA}}} \left( {{{\boldsymbol{F}}_{{\text{vis}}}}} \right) $ (8) 步骤3计算共享权重、差分权重和累积权重: (9) $ {\hat {\boldsymbol{W}}_{\text{s}}} \leftarrow {{\mathrm{Sigmoid}}} \left( {{{\boldsymbol{W}}_{{\text{ir}}}}*{{\boldsymbol{W}}_{{\text{vis}}}}} \right) $ (10) $ {\hat {\boldsymbol{W}}_{\text{d}}} \leftarrow {{\mathrm{Sigmoid}}} \left( {{{\boldsymbol{W}}_{{\text{ir}}}} - {{\boldsymbol{W}}_{{\text{vis}}}}} \right) $ (11) $ {\hat {\boldsymbol{W}}_{\text{a}}} \leftarrow {{\mathrm{Sigmoid}}} \left( {{{\boldsymbol{W}}_{{\text{ir}}}} + {{\boldsymbol{W}}_{{\text{vis}}}}} \right) $ (12) 步骤4沿通道维度拼接,获取融合特征: (13) $ {{\boldsymbol{F}}_{{\text{fuse}}}} \leftarrow {{\mathrm{Cat}}} \left( {{{\hat {\boldsymbol{W}}}_{\text{s}}} * {{\hat {\boldsymbol{F}}}_{\text{s}}},{{\hat {\boldsymbol{W}}}_{\text{d}}} * {{\hat {\boldsymbol{F}}}_{\text{d}}},{{\hat {\boldsymbol{W}}}_{\text{a}}} * {{\hat {\boldsymbol{F}}}_{\text{a}}}} \right) $ return $ {{\boldsymbol{F}}_{{\text{fuse}}}} $ 表 1 不同图像融合方法在M3FD数据集和MSRS数据集上的定量结果比较

方法 M3FD MSRS MI↑ $ {Q_{\text{p}}} $↑ $ {Q_{\text{w}}} $↑ $ {Q_{{\text{CV}}}} $↓ MI↑ $ {Q_{\text{p}}} $↑ $ {Q_{\text{w}}} $↑ $ {Q_{{\text{CV}}}} $↓ CoCoNet[19] 2.779 5 0.329 2 0.992 2 778.539 5 2.575 7 0.327 0 0.989 2 847.188 9 LapH[16] 2.628 4 0.378 5 0.992 3 728.642 3 2.169 2 0.385 6 0.996 6 436.233 3 MuFusion[17] 2.348 0 0.240 1 0.994 6 875.821 7 1.617 6 0.256 4 0.996 4 1 203.265 8 SwinFusion[15] 3.391 4 0.373 3 0.992 0 520.361 2 3.478 5 0.425 5 0.996 8 283.761 4 TIMFusion[18] 3.036 7 0.209 5 0.991 4 653.178 7 3.202 3 0.369 0 0.996 4 314.666 0 TUFusion[20] 2.882 1 0.186 4 0.995 6 611.821 8 2.504 4 0.250 7 0.997 3 664.691 8 本文方法 4.458 2 0.383 5 0.991 9 547.978 5 4.296 3 0.477 9 0.996 6 241.200 4 表 2 不同融合策略和不同损失函数在M3FD数据集和MSRS数据集上的定量结果比较

类型 M3FD MSRS MI↑ $ {Q_{\text{p}}} $↑ $ {Q_{\text{w}}} $↑ $ {Q_{{\text{CV}}}} $↓ MI↑ $ {Q_{\text{p}}} $↑ $ {Q_{\text{w}}} $↑ $ {Q_{{\text{CV}}}} $↓ 融合策略 仅有共享关系 2.549 0 0.198 2 0.993 5 604.322 8 2.865 5 0.259 5 0.996 9 357.813 6 仅有差分关系 2.956 0 0.212 6 0.991 0 615.008 8 2.637 3 0.300 2 0.996 3 333.188 2 仅有累积关系 2.737 9 0.278 6 0.990 4 794.850 3 2.904 0 0.361 1 0.996 4 632.420 0 本文方法 4.458 2 0.383 5 0.991 9 547.978 5 4.296 3 0.477 9 0.996 6 241.200 4 损失函数 仅有低频损失 3.738 7 0.200 8 0.990 3 564.404 9 3.773 1 0.346 8 0.996 4 266.584 4 仅有高频损失 1.633 7 0.142 4 0.994 7 1 030.369 4 1.268 7 0.173 2 0.995 6 2 393.834 2 本文方法 4.458 2 0.383 5 0.991 9 547.978 5 4.296 3 0.477 9 0.996 6 241.200 4 表 3 不同图像融合方法的模型复杂度和运行时间比较

CoCoNet LapH MuFusion SwinFusion TIMFusion TUFusion 本文方法 参数量(M) 9.130 0.134 2.124 0.974 0.127 76.282 14.727 FLOPs(G) 63.447 16.087 179.166 259.045 45.166 272.992 25.537 时间(s) M3FD 0.131 0.062 0.670 2.471 0.208 0.234 0.196 MSRS 0.129 0.062 0.683 2.529 0.211 0.233 0.203 -

[1] 杨莘, 田立凡, 梁佳明, 等. 改进双路径生成对抗网络的红外与可见光图像融合[J]. 电子与信息学报, 2023, 45(8): 3012–3021. doi: 10.11999/JEIT220819.YANG Shen, TIAN Lifan, LIANG Jiaming, et al. Infrared and visible image fusion based on improved dual path generation adversarial network[J]. Journal of Electronics & Information Technology, 2023, 45(8): 3012–3021. doi: 10.11999/JEIT220819. [2] 高绍兵, 詹宗逸, 匡梅. 视觉多通路机制启发的多场景感知红外与可见光图像融合框架[J]. 电子与信息学报, 2023, 45(8): 2749–2758. doi: 10.11999/JEIT221361.GAO Shaobing, ZHAN Zongyi, and KUANG Mei. Multi-scenario aware infrared and visible image fusion framework based on visual multi-pathway mechanism[J]. Journal of Electronics & Information Technology, 2023, 45(8): 2749–2758. doi: 10.11999/JEIT221361. [3] XU Guoxia, HE Chunming, WANG Hao, et al. DM-Fusion: Deep model-driven network for heterogeneous image fusion[J]. IEEE Transactions on Neural Networks and Learning Systems, 2023: 1–15. doi: 10.1109/TNNLS.2023.3238511. [4] MA Jiayi, YU Wei, LIANG Pengwei, et al. FusionGAN: A generative adversarial network for infrared and visible image fusion[J]. Information Fusion, 2019, 48: 11–26. doi: 10.1016/j.inffus.2018.09.004. [5] TANG Wei, HE Fazhi, and LIU Yu. YDTR: Infrared and visible image fusion via Y-shape dynamic transformer[J]. IEEE Transactions on Multimedia, 2023, 25: 5413–5428. doi: 10.1109/TMM.2022.3192661. [6] LI Hui and WU Xiaojun. DenseFuse: A fusion approach to infrared and visible images[J]. IEEE Transactions on Image Processing, 2019, 28(5): 2614–2623. doi: 10.1109/TIP.2018.2887342. [7] XU Han, ZHANG Hao, and MA Jiayi. Classification saliency-based rule for visible and infrared image fusion[J]. IEEE Transactions on Computational Imaging, 2021, 7: 824–836. doi: 10.1109/TCI.2021.3100986. [8] QU Linhao, LIU Shaolei, WANG Manning, et al. TransMEF: A transformer-based multi-exposure image fusion framework using self-supervised multi-task learning[C]. The 36th AAAI Conference on Artificial Intelligence, Tel Aviv, Israel, 2022: 2126–2134. doi: 10.1609/aaai.v36i2.20109. [9] QU Linhao, LIU Shaolei, WANG Manning, et al. TransFuse: A unified transformer-based image fusion framework using self-supervised learning[EB/OL]. https://arxiv.org/abs/2201.07451, 2022. doi: 10.48550/arXiv.2201.07451. [10] LI Hui, WU Xiaojun, and KITTLER J. RFN-Nest: An end-to-end residual fusion network for infrared and visible images[J]. Information Fusion, 2021, 73: 72–86. doi: 10.1016/j.inffus.2021.02.023. [11] LI Junwu, LI Binhua, JIANG Yaoxi, et al. MrFDDGAN: Multireceptive field feature transfer and dual discriminator-driven generative adversarial network for infrared and color visible image fusion[J]. IEEE Transactions on Instrumentation and Measurement, 2023, 72: 5006228. doi: 10.1109/TIM.2023.3241999. [12] HOU Qibin, ZHOU Daquan, and FENG Jiashi. Coordinate attention for efficient mobile network design[C]. The IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 13708–13717. doi: 10.1109/cvpr46437.2021.01350. [13] ZHANG Pengyu, ZHAO Jie, WANG Dong, et al. Visible-thermal UAV tracking: A large-scale benchmark and new baseline[C]. The IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 8876–8885. doi: 10.1109/cvpr52688.2022.00868. [14] LIU Jinyuan, FAN Xin, HUANG Zhanbo, et al. Target-aware dual adversarial learning and a multi-scenario multi-modality benchmark to fuse infrared and visible for object detection[C]. The IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 5792–5801. doi: 10.1109/cvpr52688.2022.00571. [15] MA Jiayi, TANG Linfeng, FAN Fan, et al. SwinFusion: Cross-domain long-range learning for general image fusion via swin transformer[J]. IEEE/CAA Journal of Automatica Sinica, 2022, 9(7): 1200–1217. doi: 10.1109/JAS.2022.105686. [16] LUO Xing, FU Guizhong, YANG Jiangxin, et al. Multi-modal image fusion via deep laplacian pyramid hybrid network[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2023, 33(12): 7354–7369. doi: 10.1109/TCSVT.2023.3281462. [17] CHENG Chunyang, XU Tianyang, and WU Xiaojun. MUFusion: A general unsupervised image fusion network based on memory unit[J]. Information Fusion, 2023, 92: 80–92. doi: 10.1016/j.inffus.2022.11.010. [18] LIU Risheng, LIU Zhu, LIU Jinyuan, et al. A task-guided, implicitly-searched and meta-initialized deep model for image fusion[EB/OL].https://arxiv.org/abs/2305.15862, 2023. doi: 10.48550/arXiv.2305.15862. [19] LIU Jinyuan, LIN Runjia, WU Guanyao, et al. CoCoNet: Coupled contrastive learning network with multi-level feature ensemble for multi-modality image fusion[J]. International Journal of Computer Vision, 2023. doi: 10.1007/s11263-023-01952-1. [20] ZHAO Yangyang, ZHENG Qingchun, ZHU Peihao, et al. TUFusion: A transformer-based universal fusion algorithm for multimodal images[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2024, 34(3): 1712–1725. doi: 10.1109/TCSVT.2023.3296745. [21] QU Guihong, ZHANG Dali, YAN Pingfan, et al. Information measure for performance of image fusion[J]. Electronics Letters, 2002, 38(7): 313–315. doi: 10.1049/el:20020212. [22] ZHAO Jiying, LAGANIERE R, and LIU Zheng. Performance assessment of combinative pixel-level image fusion based on an absolute feature measurement[J]. International Journal of Innovative Computing, Information and Control, 2007, 3(6(A)): 1433–1447. [23] PIELLA G and HEIJMANS H. A new quality metric for image fusion[C]. The 2003 International Conference on Image Processing, Barcelona, Spain, 2003: 173–176. doi: 10.1109/ICIP.2003.1247209. [24] CHEN Hao and VARSHNEY P K. A human perception inspired quality metric for image fusion based on regional information[J]. Information Fusion, 2007, 8(2): 193–207. doi: 10.1016/j.inffus.2005.10.001. [25] ZHANG Xingchen. Deep learning-based multi-focus image fusion: A survey and a comparative study[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(9): 4819–4838. doi: 10.1109/TPAMI.2021.3078906. -

下载:

下载:

下载:

下载: