A Dual-stream Network Based on Body Contour Deformation Field for Gait Recognition


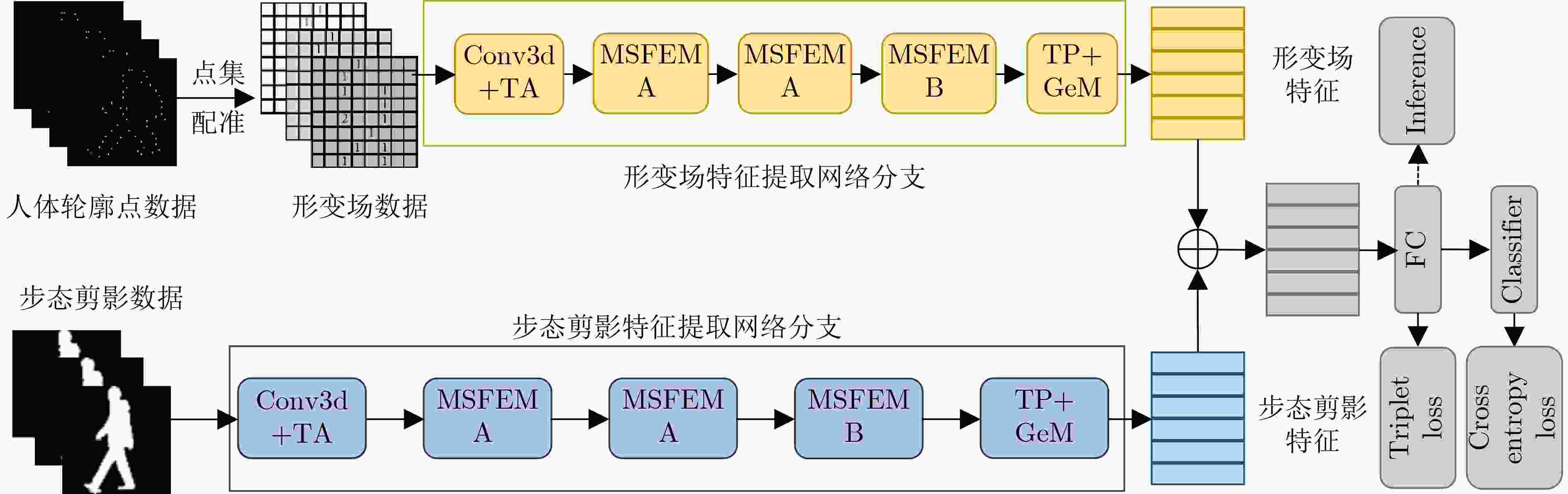

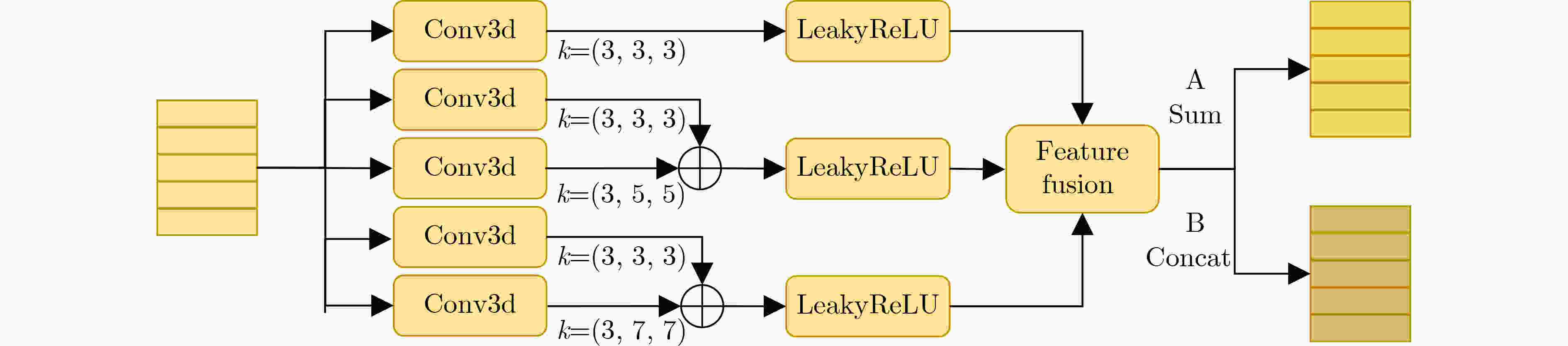

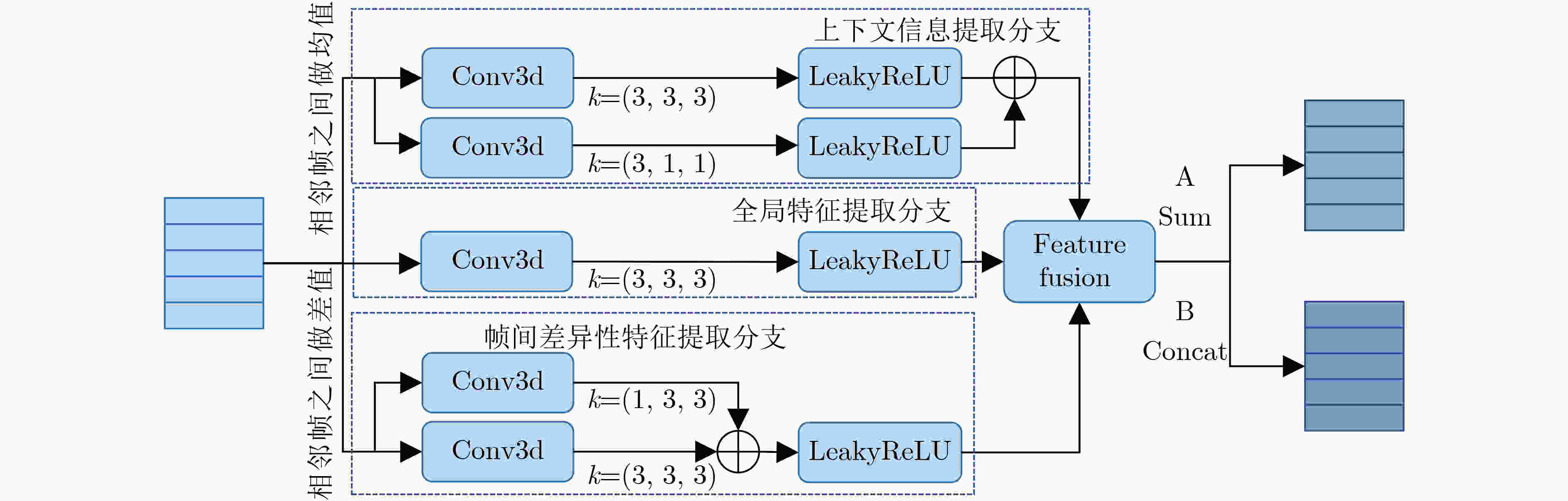

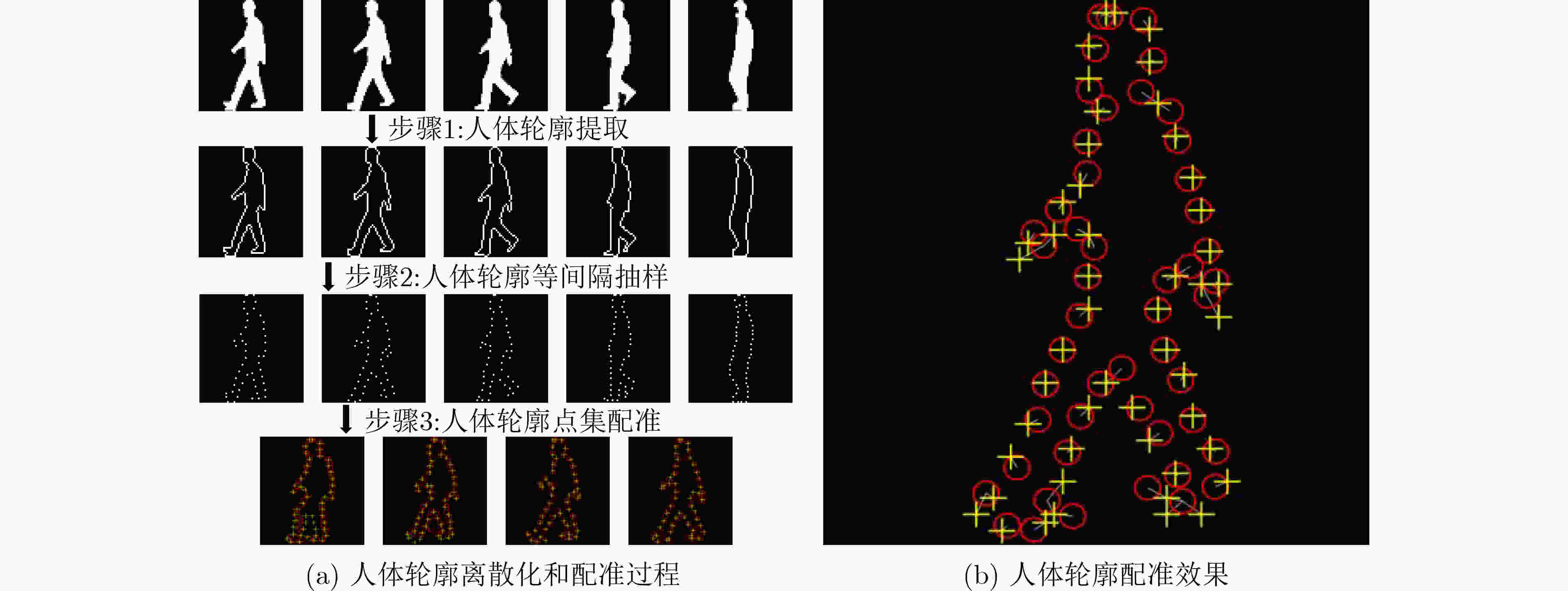

Abstract: Gait recognition performance degrades under external factors such as camera viewpoint, clothing, and carried objects. To address this, non-rigid point set registration is introduced into gait recognition. The deformation field between adjacent gait frames is used to represent the displacement of the human contour during walking, which improves the model's dynamic perception of changes in body shape. On this basis, this paper proposes GaitDef, a dual-stream convolutional neural network based on the human contour deformation field. It consists of two feature-extraction branches: one for the deformation field and one for the gait silhouettes. To cope with the sparsity of deformation-field data, a multi-scale feature extraction module is designed to capture multi-level spatial structure information of the deformation field. For the gait silhouettes, a dynamic difference capture module and a context information enhancement module are proposed, which capture the changing characteristics of dynamic regions and use context information to strengthen the gait representation. The output features of the two branches are fused to obtain the final gait representation. Extensive experiments verify the effectiveness of the proposed method: GaitDef achieves average Rank-1 accuracies of 93.5% and 68.3% on the CASIA-B and CCPG datasets, respectively.
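The deformation-field representation summarized in the abstract can be illustrated with a small numpy sketch. It is not GaitDef's registration procedure; it only shows the shape of such a field under stated assumptions. Contour pixels are extracted from two adjacent binary silhouettes, and each contour point of frame t receives a displacement toward a softly matched point of frame t+1, using the softassign idea from RPM [24] at a single fixed temperature, with no thin-plate-spline transform and no annealing. The function names and the `temperature` parameter are hypothetical. Because displacements are stored only at contour pixels, the resulting (H, W, 2) field is mostly zeros, which is the sparsity the abstract refers to.

```python
import numpy as np


def contour_points(silhouette: np.ndarray) -> np.ndarray:
    """Return an (N, 2) float array of (row, col) contour pixels of a binary mask.

    A foreground pixel counts as contour if at least one 4-neighbour is background.
    """
    fg = silhouette.astype(bool)
    padded = np.pad(fg, 1, constant_values=False)
    interior = (
        padded[:-2, 1:-1] & padded[2:, 1:-1]      # up and down neighbours
        & padded[1:-1, :-2] & padded[1:-1, 2:]    # left and right neighbours
    )
    return np.argwhere(fg & ~interior).astype(float)


def soft_displacement(src: np.ndarray, dst: np.ndarray, temperature: float) -> np.ndarray:
    """Hypothetical helper: move each src point toward a softmax-weighted average of dst points."""
    d2 = ((src[:, None, :] - dst[None, :, :]) ** 2).sum(axis=-1)  # (N, M) squared distances
    logits = -d2 / temperature
    logits -= logits.max(axis=1, keepdims=True)                    # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=1, keepdims=True)                  # each row sums to 1
    return weights @ dst - src                                     # (N, 2) displacements


def deformation_field(sil_t: np.ndarray, sil_next: np.ndarray, temperature: float = 0.5) -> np.ndarray:
    """Sparse (H, W, 2) field: displacements stored only at contour pixels of sil_t."""
    field = np.zeros(sil_t.shape + (2,))
    src, dst = contour_points(sil_t), contour_points(sil_next)
    if len(src) == 0 or len(dst) == 0:
        return field
    disp = soft_displacement(src, dst, temperature)
    rows, cols = src[:, 0].astype(int), src[:, 1].astype(int)
    field[rows, cols] = disp
    return field


# Toy usage: a rectangular "body" that moves one pixel to the right between frames.
frame_a = np.zeros((16, 12), dtype=np.uint8)
frame_a[3:13, 4:8] = 1
frame_b = np.roll(frame_a, 1, axis=1)
field = deformation_field(frame_a, frame_b)
print(field.shape)  # (16, 12, 2)
```

Lowering `temperature` makes the soft matching approach nearest-neighbour matching; the registration used in the paper may impose smoothness constraints that this sketch omits.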

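The abstract states that the multi-scale feature extraction module (MSFEM) is designed for the sparsity of the deformation field, and Table 3 compares MSFEM variants built from 3D convolutions with kernel sizes (3,3,3), (3,5,5) and (3,7,7). The numpy sketch below illustrates only the general multi-scale pattern, under its own assumptions: a single input channel, fixed rather than learned kernels, parallel branches stacked along a new channel axis, and no normalization or activation. The function names are hypothetical, and this section does not give MSFEM's actual layout, channel widths or fusion rule. The intuition, presumably, is that larger spatial kernels let each output position gather displacements from more of the sparse contour.

```python
from typing import Sequence

import numpy as np


def conv3d_same(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-channel 3D cross-correlation over (T, H, W) with zero 'same' padding.

    Kernel sizes are assumed odd so that the output keeps the input shape.
    """
    kt, kh, kw = kernel.shape
    padded = np.pad(x, ((kt // 2, kt // 2), (kh // 2, kh // 2), (kw // 2, kw // 2)))
    t, h, w = x.shape
    out = np.zeros(x.shape, dtype=float)
    # Accumulate one weighted, shifted view of the input per kernel tap.
    for a in range(kt):
        for b in range(kh):
            for c in range(kw):
                out += kernel[a, b, c] * padded[a:a + t, b:b + h, c:c + w]
    return out


def multi_scale_block(x: np.ndarray, kernels: Sequence[np.ndarray]) -> np.ndarray:
    """Hypothetical block: parallel convolutions of different spatial extents, stacked as channels."""
    return np.stack([conv3d_same(x, k) for k in kernels])


kernel_sizes = [(3, 3, 3), (3, 5, 5), (3, 7, 7)]  # (time, height, width), as listed in Table 3
rng = np.random.default_rng(0)
kernels = [rng.standard_normal(size) / np.prod(size) for size in kernel_sizes]
toy_field = rng.standard_normal((8, 32, 22))  # one displacement channel, (T, H, W)
features = multi_scale_block(toy_field, kernels)
print(features.shape)  # (3, 8, 32, 22)
```

In a trained network the kernels would be learned parameters over many channels; the loop-over-taps formulation is chosen here only because it is short and easy to verify.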

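Table 1 Rank-1 accuracy comparison on the CASIA-B dataset, excluding identical-view cases (%)

Gallery: NM#1-4, 0° to 180°.

| Probe | Method | Venue | 0° | 18° | 36° | 54° | 72° | 90° | 108° | 126° | 144° | 162° | 180° | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| NM#5-6 | GaitSet | AAAI19 | 90.8 | 97.9 | 99.4 | 96.9 | 93.6 | 91.7 | 95.0 | 97.8 | 98.9 | 96.8 | 85.8 | 95.0 |
| | GaitPart | CVPR20 | 94.1 | 98.6 | 99.3 | 98.5 | 94.0 | 92.3 | 95.9 | 98.4 | 99.2 | 97.8 | 90.4 | 96.2 |
| | GaitGL | ICCV21 | 96.0 | 98.3 | 99.0 | 97.9 | 96.9 | 95.4 | 97.0 | 98.9 | 99.3 | 98.8 | 94.0 | 97.4 |
| | CSTL | ICCV21 | 97.2 | 99.0 | 99.2 | 98.1 | 96.2 | 95.5 | 97.7 | 98.7 | 99.2 | 98.9 | 96.5 | 97.8 |
| | Lagrange | CVPR22 | 95.2 | 97.8 | 99.0 | 98.0 | 96.9 | 94.6 | 96.9 | 98.8 | 98.9 | 98.0 | 91.5 | 96.9 |
| | MetaGait | ECCV22 | 97.3 | 99.2 | 99.5 | 99.1 | 97.2 | 95.5 | 97.6 | 99.1 | 99.3 | 99.1 | 96.7 | 98.1 |
| | GaitGCI-T | CVPR23 | – | – | – | – | – | – | – | – | – | – | – | 97.9 |
| | GaitDef | Ours | 95.3 | 98.1 | 99.2 | 98.0 | 96.7 | 96.0 | 98.6 | 99.4 | 99.2 | 99.1 | 94.1 | 97.6 |
| BG#1-2 | GaitSet | AAAI19 | 83.8 | 91.2 | 91.8 | 88.8 | 83.3 | 81.0 | 84.1 | 90.0 | 92.2 | 94.4 | 79.0 | 87.2 |
| | GaitPart | CVPR20 | 89.1 | 94.8 | 96.7 | 95.1 | 88.3 | 94.9 | 89.0 | 93.5 | 96.1 | 93.8 | 85.8 | 91.5 |
| | GaitGL | ICCV21 | 92.6 | 96.6 | 96.8 | 95.5 | 93.5 | 89.3 | 92.2 | 96.5 | 98.2 | 96.9 | 91.5 | 94.5 |
| | CSTL | ICCV21 | 91.7 | 96.5 | 97.0 | 95.4 | 90.9 | 88.0 | 91.5 | 95.8 | 97.0 | 95.5 | 90.3 | 93.6 |
| | Lagrange | CVPR22 | 89.9 | 94.5 | 95.9 | 94.6 | 93.9 | 88.0 | 91.1 | 96.3 | 98.1 | 97.3 | 88.9 | 93.5 |
| | MetaGait | ECCV22 | 92.9 | 96.7 | 97.1 | 96.4 | 94.7 | 90.4 | 92.9 | 97.2 | 98.5 | 98.1 | 92.3 | 95.2 |
| | GaitGCI-T | CVPR23 | – | – | – | – | – | – | – | – | – | – | – | 95.0 |
| | GaitDef | Ours | 93.8 | 97.0 | 97.1 | 96.7 | 95.8 | 92.5 | 95.2 | 97.5 | 98.3 | 97.0 | 92.0 | 95.7 |
| CL#1-2 | GaitSet | AAAI19 | 61.4 | 75.4 | 80.7 | 77.3 | 72.1 | 70.1 | 71.5 | 73.5 | 73.5 | 68.4 | 50.0 | 70.4 |
| | GaitPart | CVPR20 | 70.7 | 85.5 | 86.9 | 83.3 | 77.1 | 72.5 | 76.9 | 82.2 | 83.8 | 80.2 | 66.5 | 78.7 |
| | GaitGL | ICCV21 | 76.6 | 90.0 | 90.3 | 87.1 | 84.5 | 79.0 | 84.1 | 87.0 | 87.3 | 84.4 | 69.5 | 83.6 |
| | CSTL | ICCV21 | 78.1 | 89.4 | 91.6 | 86.6 | 82.1 | 79.9 | 81.8 | 86.3 | 88.7 | 86.6 | 75.3 | 84.2 |
| | Lagrange | CVPR22 | 81.6 | 91.0 | 94.8 | 92.2 | 85.5 | 82.1 | 86.0 | 89.8 | 90.6 | 86.0 | 73.5 | 86.6 |
| | MetaGait | ECCV22 | 80.0 | 91.8 | 93.0 | 87.8 | 86.5 | 82.9 | 85.2 | 90.0 | 90.8 | 89.3 | 78.4 | 86.9 |
| | GaitGCI-T | CVPR23 | – | – | – | – | – | – | – | – | – | – | – | 86.4 |
| | GaitDef | Ours | 77.8 | 92.8 | 94.2 | 91.0 | 87.7 | 82.7 | 86.4 | 90.1 | 91.9 | 88.5 | 75.6 | 87.2 |

Table 2 Rank-1 accuracy comparison on the CCPG dataset, excluding identical-view cases (%)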

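| Probe | Method | Venue | Cam 1 | Cam 2 | Cam 3 | Cam 4 | Cam 5 | Cam 6 | Cam 7 | Cam 8 | Cam 9 | Cam 10 | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CL-FULL | GaitSet | AAAI19 | 50.6 | 44.7 | 57.0 | 63.8 | 59.2 | 61.4 | 58.3 | 65.9 | 62.5 | 67.4 | 59.1 |
| | GaitPart | CVPR20 | 49.8 | 42.4 | 56.5 | 60.3 | 58.8 | 62.4 | 56.1 | 63.7 | 62.1 | 66.1 | 57.8 |
| | GaitGL | ICCV21 | 56.0 | 47.9 | 60.9 | 65.8 | 60.7 | 64.9 | 58.2 | 67.8 | 68.2 | 65.7 | 61.6 |
| | GaitDef | Ours | 59.3 | 52.3 | 65.4 | 66.5 | 66.3 | 70.3 | 62.9 | 70.1 | 68.5 | 72.3 | 65.4 |
| CL-UP | GaitSet | AAAI19 | 59.2 | 56.0 | 64.2 | 65.2 | 66.8 | 70.7 | 66.0 | 66.3 | 64.5 | 72.2 | 65.1 |
| | GaitPart | CVPR20 | 58.6 | 52.3 | 62.4 | 65.1 | 65.9 | 68.3 | 61.8 | 65.8 | 64.4 | 67.6 | 63.2 |
| | GaitGL | ICCV21 | 61.8 | 59.1 | 67.4 | 68.9 | 68.6 | 72.3 | 65.0 | 71.6 | 73.9 | 69.8 | 67.8 |
| | GaitDef | Ours | 66.1 | 62.4 | 71.2 | 71.2 | 72.7 | 76.8 | 69.3 | 72.9 | 73.0 | 75.6 | 71.1 |
| CL-DN | GaitSet | AAAI19 | 59.9 | 52.9 | 62.7 | 68.0 | 65.1 | 66.3 | 63.7 | 69.6 | 67.6 | 72.4 | 64.8 |
| | GaitPart | CVPR20 | 58.2 | 49.6 | 61.1 | 65.5 | 64.9 | 68.0 | 60.8 | 66.2 | 69.4 | 69.4 | 63.3 |
| | GaitGL | ICCV21 | 63.4 | 51.7 | 63.7 | 65.1 | 63.4 | 67.1 | 59.3 | 68.3 | 71.6 | 66.9 | 64.1 |
| | GaitDef | Ours | 63.8 | 51.2 | 62.5 | 62.5 | 66.8 | 68.9 | 61.2 | 69.1 | 70.0 | 69.4 | 64.5 |
| BG | GaitSet | AAAI19 | 64.3 | 54.8 | 69.9 | 74.1 | 69.6 | 73.3 | 67.5 | 67.7 | 66.2 | 73.6 | 68.1 |
| | GaitPart | CVPR20 | 62.7 | 56.0 | 67.1 | 68.3 | 70.1 | 72.8 | 63.4 | 67.4 | 65.0 | 72.9 | 66.6 |
| | GaitGL | ICCV21 | 64.7 | 55.0 | 71.6 | 72.6 | 67.3 | 74.9 | 66.0 | 74.1 | 73.1 | 75.4 | 69.5 |
| | GaitDef | Ours | 67.6 | 55.2 | 74.1 | 76.0 | 72.3 | 77.0 | 71.2 | 75.2 | 74.6 | 77.8 | 72.1 |

Table 3 Rank-1 accuracy of different branch network structures on the CASIA-B dataset, excluding identical-view cases (%)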

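| Network branch | Feature-extraction module configuration | NM | BG | CL | Mean |
|---|---|---|---|---|---|
| Deformation-field branch | MSFEM with (3,3,3) kernels only | 88.9 | 80.2 | 58.1 | 75.7 |
| | MSFEM with (3,5,5) kernels only | 92.4 | 85.3 | 66.9 | 81.5 |
| | MSFEM with (3,7,7) kernels only | 92.4 | 84.8 | 67.3 | 81.5 |
| | MSFEM with (3,3,3) and (3,5,5) kernels | 92.5 | 85.7 | 67.6 | 81.9 |
| | MSFEM with (3,3,3) and (3,7,7) kernels | 92.8 | 85.4 | 67.3 | 81.8 |
| | MSFEM with (3,5,5) and (7,7,7) kernels | 93.1 | 85.7 | 67.9 | 82.2 |
| | MSFEM | 93.0 | 86.4 | 69.2 | 82.9 |
| Gait-silhouette branch | ACFEM with the global feature branch only | 96.8 | 94.1 | 84.1 | 91.7 |
| | ACFEM with the inter-frame difference feature extraction branch only | 97.0 | 94.6 | 84.6 | 92.1 |
| | ACFEM with the context feature extraction branch only | 96.6 | 94.0 | 83.7 | 91.4 |
| | ACFEM with the global feature and inter-frame difference branches | 97.2 | 95.3 | 86.1 | 92.9 |
| | ACFEM with the global feature and context branches | 97.2 | 94.7 | 85.2 | 92.4 |
| | ACFEM with the inter-frame difference and context branches | 97.1 | 95.1 | 86.4 | 92.9 |
| | ACFEM | 97.5 | 95.4 | 86.6 | 93.2 |
| Feature fusion | Deformation-field branch (MSFEM) + gait-silhouette branch (ACFEM) | 97.6 | 95.7 | 87.2 | 93.5 |

Table 4 Rank-1 accuracy of different branch network structures on the CCPG dataset, excluding identical-view cases (%)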

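| Network branch | Feature-extraction module configuration | CL-FULL | CL-UP | CL-DN | BG | Mean |
|---|---|---|---|---|---|---|
| Deformation-field branch | MSFEM | 50.5 | 59.8 | 57.5 | 61.4 | 57.3 |
| Gait-silhouette branch | ACFEM | 62.0 | 67.5 | 62.8 | 68.2 | 65.1 |
| Feature fusion | Deformation-field branch (MSFEM) + gait-silhouette branch (ACFEM) | 65.4 | 71.1 | 64.5 | 72.1 | 68.3 |

Table 5 Rank-1 accuracy on the CASIA-B dataset for different margin values m in the triplet loss, excluding identical-view cases (%)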

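| m | NM | BG | CL | Mean |
|---|---|---|---|---|
| 0.1 | 97.3 | 95.3 | 85.9 | 92.8 |
| 0.2 | 97.6 | 95.7 | 87.2 | 93.5 |
| 0.3 | 97.3 | 94.9 | 84.9 | 92.4 |
| 0.4 | 97.3 | 94.9 | 85.2 | 92.5 |
| 0.5 | 97.5 | 95.0 | 84.6 | 92.4 |

Table 6 Comparison of average Rank-1 accuracy (%), number of parameters (M) and FLOPs (G) on the CASIA-B dataset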

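| Method | Average Rank-1 accuracy (%) | Parameters (M) | FLOPs (G) |
|---|---|---|---|
| GaitSet | 84.2 | 2.59 | 6.54 |
| GaitPart | 88.8 | 1.20 | 113.92 |
| GaitGL | 91.8 | 2.49 | 25.24 |
| GaitDef (deformation-field branch) | 82.9 | 8.07 | 136.45 |
| GaitDef (gait-silhouette branch) | 93.2 | 2.48 | 55.91 |
| GaitDef (deformation-field branch + gait-silhouette branch) | 93.5 | 10.55 | 178.38 |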
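All of the accuracy tables report Rank-1 accuracy "excluding identical-view cases". Under the usual CASIA-B cross-view protocol, each probe-view entry is the nearest-neighbour Rank-1 accuracy against a single gallery view, averaged over every gallery view that differs from the probe view, and the Mean column averages those entries over probe views (for example, the eleven GaitSet NM#5-6 entries in Table 1 average to 95.0). The numpy sketch below computes this under its own assumptions: Euclidean distance between already-extracted feature vectors, plus a hypothetical function name and argument layout. It is not the paper's evaluation code and ignores dataset-specific details such as the CCPG gallery/probe splits.

```python
import numpy as np


def rank1_excluding_same_view(gal_feat, gal_id, gal_view, prb_feat, prb_id, prb_view):
    """Return {probe_view: Rank-1 accuracy in %}, averaged over gallery views != probe view.

    All arguments are numpy arrays: features are (N, D); ids and views are (N,).
    """
    result = {}
    for pv in np.unique(prb_view):
        p_mask = prb_view == pv
        accs = []
        for gv in np.unique(gal_view):
            if gv == pv:
                continue  # identical-view pairs are excluded from the protocol
            g_mask = gal_view == gv
            # Euclidean distance from every probe sample to every gallery sample of view gv.
            dist = np.linalg.norm(
                prb_feat[p_mask][:, None, :] - gal_feat[g_mask][None, :, :], axis=-1
            )
            predicted = gal_id[g_mask][dist.argmin(axis=1)]  # identity of nearest neighbour
            accs.append(100.0 * np.mean(predicted == prb_id[p_mask]))
        result[pv.item()] = float(np.mean(accs))
    return result


# Toy usage: 5 well-separated identities, 3 views, features = identity centre + small noise.
rng = np.random.default_rng(0)
centres = 10.0 * rng.standard_normal((5, 16))
ids = np.repeat(np.arange(5), 3)
views = np.tile(np.array([0, 90, 180]), 5)
gallery = centres[ids] + 0.1 * rng.standard_normal((15, 16))
probe = centres[ids] + 0.1 * rng.standard_normal((15, 16))
per_view = rank1_excluding_same_view(gallery, ids, views, probe, ids, views)
mean_column = sum(per_view.values()) / len(per_view)  # corresponds to the "Mean" column
print(per_view, mean_column)
```

Evaluating each gallery view separately, rather than pooling all other views into one gallery, is what makes every table column a cross-view score.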

[1] YANG Qi and XUE Dingyu. Gait recognition based on two-scale dynamic Bayesian network and more information fusion[J]. Journal of Electronics & Information Technology, 2012, 34(5): 1148–1153. doi: 10.3724/SP.J.1146.2011.01012.

[2] WANG Qian, CAI Jing, GUO Baidong, et al. Research on gait recognition technology for public security[J]. Journal of People's Public Security University of China: Science and Technology, 2023, 29(1): 68–76. doi: 10.3969/j.issn.1007-1784.2023.01.009.

[3] LIAO Rijun, YU Shiqi, AN Weizhi, et al. A model-based gait recognition method with body pose and human prior knowledge[J]. Pattern Recognition, 2020, 98: 107069. doi: 10.1016/j.patcog.2019.107069.

[4] CAO Zhe, SIMON T, WEI S E, et al. Realtime multi-person 2D pose estimation using part affinity fields[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 1302–1310. doi: 10.1109/CVPR.2017.143.

[5] TEEPE T, GILG J, HERZOG F, et al. Towards a deeper understanding of skeleton-based gait recognition[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, New Orleans, USA, 2022: 1568–1576. doi: 10.1109/CVPRW56347.2022.00163.

[6] AN Weizhi, YU Shiqi, MAKIHARA Y, et al. Performance evaluation of model-based gait on multi-view very large population database with pose sequences[J]. IEEE Transactions on Biometrics, Behavior, and Identity Science, 2020, 2(4): 421–430. doi: 10.1109/tbiom.2020.3008862.

[7] WANG Likai, CHEN Jinyan, and LIU Yuxin. Frame-level refinement networks for skeleton-based gait recognition[J]. Computer Vision and Image Understanding, 2022, 222: 103500. doi: 10.1016/j.cviu.2022.103500.

[8] TEEPE T, KHAN A, GILG J, et al. GaitGraph: Graph convolutional network for skeleton-based gait recognition[C]. 2021 IEEE International Conference on Image Processing, Anchorage, USA, 2021: 2314–2318. doi: 10.1109/icip42928.2021.9506717.

[9] LIAO Rijun, CAO Chunshui, GARCIA E B, et al. Pose-based temporal-spatial network (PTSN) for gait recognition with carrying and clothing variations[C]. The 12th Chinese Conference on Biometric Recognition, Shenzhen, China, 2017: 474–483. doi: 10.1007/978-3-319-69923-3_51.

[10] LI Xiang, MAKIHARA Y, XU Chi, et al. End-to-end model-based gait recognition[C]. The 15th Asian Conference on Computer Vision, Kyoto, Japan, 2020: 3–20. doi: 10.1007/978-3-030-69535-4_1.

[11] HAN Ju and BHANU B. Individual recognition using gait energy image[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2006, 28(2): 316–322. doi: 10.1109/TPAMI.2006.38.

[12] LIU Jianyi and ZHENG Nanning. Gait history image: A novel temporal template for gait recognition[C]. 2007 IEEE International Conference on Multimedia and Expo, Beijing, China, 2007: 663–666. doi: 10.1109/ICME.2007.4284737.

[13] CHEN Changhong, LIANG Jimin, ZHAO Heng, et al. Frame difference energy image for gait recognition with incomplete silhouettes[J]. Pattern Recognition Letters, 2009, 30(11): 977–984. doi: 10.1016/j.patrec.2009.04.012.

[14] CHAO Hanqing, HE Yiwei, ZHANG Junping, et al. GaitSet: Regarding gait as a set for cross-view gait recognition[C]. The 33rd AAAI Conference on Artificial Intelligence, Honolulu, USA, 2019: 8126–8133. doi: 10.1609/aaai.v33i01.33018126.

[15] HOU Saihui, CAO Chunshui, LIU Xu, et al. Gait lateral network: Learning discriminative and compact representations for gait recognition[C]. The 16th European Conference on Computer Vision, Glasgow, UK, 2020: 382–398. doi: 10.1007/978-3-030-58545-7_22.

[16] LIN Beibei, ZHANG Shunli, and YU Xin. Gait recognition via effective global-local feature representation and local temporal aggregation[C]. 2021 IEEE/CVF International Conference on Computer Vision, Montreal, Canada, 2021: 14628–14636. doi: 10.1109/ICCV48922.2021.01438.

[17] WANG Ming, LIN Beibei, GUO Xianda, et al. GaitStrip: Gait recognition via effective strip-based feature representations and multi-level framework[C]. The 16th Asian Conference on Computer Vision, Macao, China, 2023: 711–727. doi: 10.1007/978-3-031-26316-3_42.

[18] LI Huakang, QIU Yidan, ZHAO Huimin, et al. GaitSlice: A gait recognition model based on spatio-temporal slice features[J]. Pattern Recognition, 2022, 124: 108453. doi: 10.1016/j.patcog.2021.108453.

[19] FAN Chao, PENG Yunjie, CAO Chunshui, et al. GaitPart: Temporal part-based model for gait recognition[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 14213–14221. doi: 10.1109/CVPR42600.2020.01423.

[20] HUANG Xiaohu, ZHU Duowang, WANG Hao, et al. Context-sensitive temporal feature learning for gait recognition[C]. 2021 IEEE/CVF International Conference on Computer Vision, Montreal, Canada, 2021: 12889–12898. doi: 10.1109/ICCV48922.2021.01267.

[21] LIN Beibei, ZHANG Shunli, and BAO Feng. Gait recognition with multiple-temporal-scale 3D convolutional neural network[C]. The 28th ACM International Conference on Multimedia, Seattle, USA, 2020: 3054–3062. doi: 10.1145/3394171.3413861.

[22] CHAI Tianrui, LI Annan, ZHANG Shaoxiong, et al. Lagrange motion analysis and view embeddings for improved gait recognition[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 20217–20226. doi: 10.1109/CVPR52688.2022.01961.

[23] LIANG Junhao, FAN Chao, HOU Saihui, et al. GaitEdge: Beyond plain end-to-end gait recognition for better practicality[C]. The 17th European Conference on Computer Vision, Tel Aviv, Israel, 2022: 375–390. doi: 10.1007/978-3-031-20065-6_22.

[24] CHUI H and RANGARAJAN A. A new point matching algorithm for non-rigid registration[J]. Computer Vision and Image Understanding, 2003, 89(2/3): 114–141. doi: 10.1016/s1077-3142(03)00009-2.

[25] YU Shiqi, TAN Daoliang, and TAN Tieniu. A framework for evaluating the effect of view angle, clothing and carrying condition on gait recognition[C]. The 18th International Conference on Pattern Recognition, Hong Kong, China, 2006: 441–444. doi: 10.1109/icpr.2006.67.

[26] LI Weijia, HOU Saihui, ZHANG Chunjie, et al. An in-depth exploration of person re-identification and gait recognition in cloth-changing conditions[C]. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, Canada, 2023: 13824–13833. doi: 10.1109/CVPR52729.2023.01328.

[27] DOU Huanzhang, ZHANG Pengyi, SU Wei, et al. MetaGait: Learning to learn an Omni sample adaptive representation for gait recognition[C]. The 17th European Conference on Computer Vision, Tel Aviv, Israel, 2022: 357–374. doi: 10.1007/978-3-031-20065-6_21.

[28] DOU Huanzhang, ZHANG Pengyi, SU Wei, et al. GaitGCI: Generative counterfactual intervention for gait recognition[C]. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, Canada, 2023: 5578–5588. doi: 10.1109/cvpr52729.2023.00540.
