FPGA-Based Unified Accelerator for Convolutional Neural Network and Vision Transformer


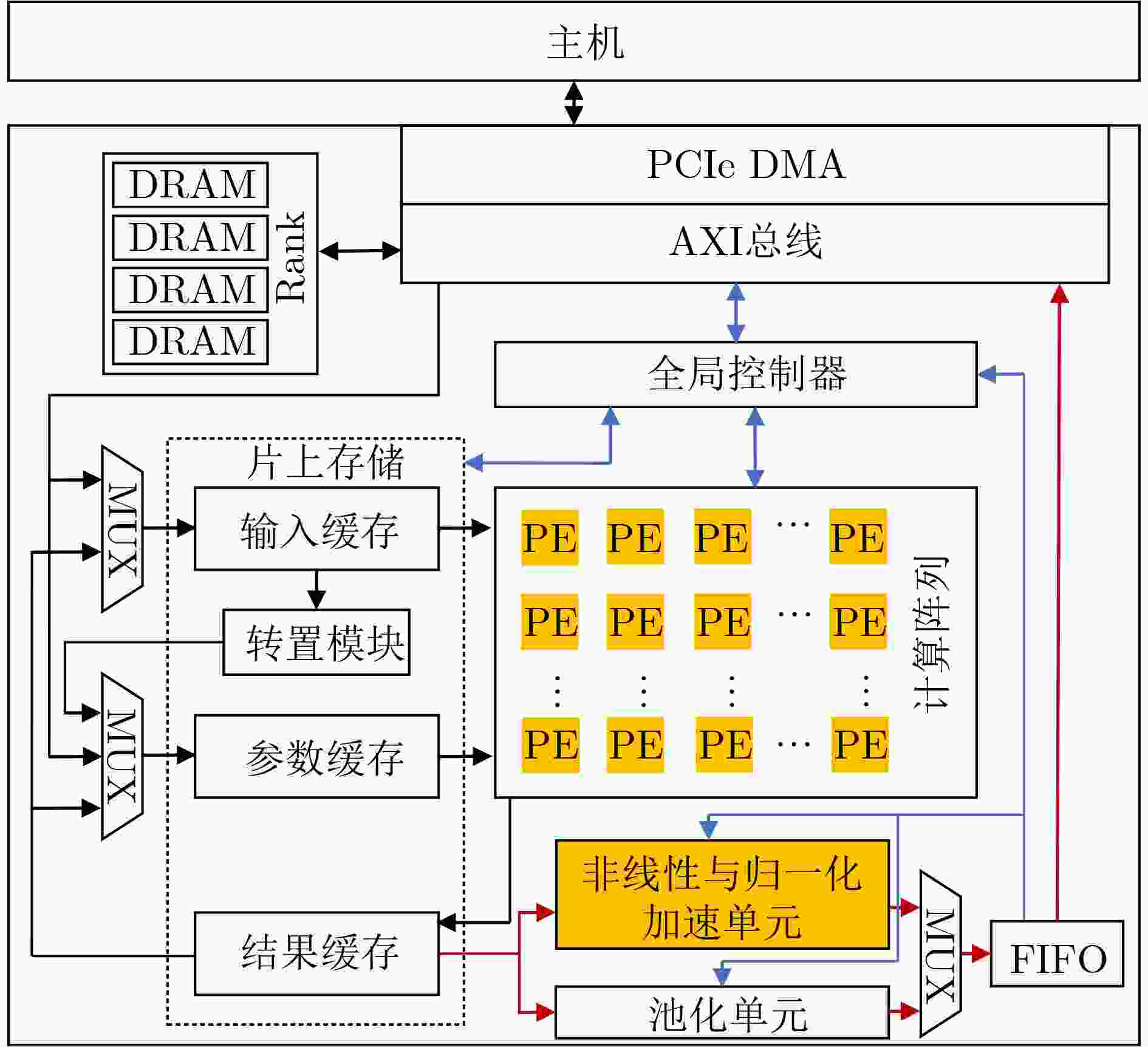

Abstract: Traditional Field Programmable Gate Array (FPGA)-based Convolutional Neural Network (CNN) accelerators in computer vision are not well suited to Vision Transformer networks. To address this, a unified FPGA accelerator for CNNs and Transformers is proposed. First, a general computation mapping method for FPGAs is proposed based on the computational characteristics of convolution and attention mechanisms. Second, a nonlinear and normalization acceleration unit is proposed to accelerate the various nonlinear and normalization operations found in computer vision neural network models. The accelerator design is then implemented on a Xilinx XCVU37P FPGA. Experimental results show that the proposed nonlinear and normalization acceleration unit improves throughput while causing only a small accuracy loss. ResNet-50 and ViT-B/16 achieve 589.94 GOPS and 564.76 GOPS, respectively, on the proposed accelerator. Compared with the GPU implementation, energy efficiency is improved by factors of 5.19 and 7.17, respectively. Compared with other large-scale FPGA-based accelerator designs, energy efficiency is markedly higher, and computing efficiency is 8.02% to 177.53% higher than that of the FPGA accelerators used for comparison.

Keywords:

- Computer vision

- Convolutional neural network

- Transformer

- FPGA

- Hardware accelerator
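The throughput figures quoted in the abstract can be cross-checked against the operation counts (GOP) and latencies that Table 4 lists for this work, assuming throughput is simply operations per inference divided by latency. The sketch below is only such a sanity check; the function names are hypothetical and are not taken from the paper.

```python
# Values copied from the "this work" columns of Table 4:
# operations per inference (GOP) and computation latency (ms).
TABLE4_THIS_WORK = {
    "ResNet-50": {"gop": 7.74, "latency_ms": 13.12},
    "ViT-B/16": {"gop": 17.56, "latency_ms": 31.09},
}


def throughput_gops(gop: float, latency_ms: float) -> float:
    """Throughput in GOPS, assumed to be GOP divided by latency in seconds."""
    return gop / (latency_ms / 1000.0)


def frames_per_second(latency_ms: float) -> float:
    """Frame rate for one image per inference pass."""
    return 1000.0 / latency_ms


for model, row in TABLE4_THIS_WORK.items():
    gops = throughput_gops(row["gop"], row["latency_ms"])
    fps = frames_per_second(row["latency_ms"])
    print(f"{model}: {gops:.2f} GOPS, {fps:.2f} FPS")
```

For ResNet-50 this reproduces the reported 589.94 GOPS and 76.22 FPS. For ViT-B/16 the frame rate matches (32.16 FPS), while the throughput comes out a few hundredths of a GOPS above the reported 564.76, presumably because the table values are rounded.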

Algorithm 1: Exhaustive search of the on-chip model computation amount

Input: neural network model Model; number of multipliers $\mathrm{Mult}_{\mathrm{num}}$

Output: design space exploration results $(N_{\mathrm{i}}, N_{\mathrm{o}})$; on-chip model computation amounts $\mathrm{Comp}_{\mathrm{num}}$

(1) Initialize $(N_{\mathrm{i}}, N_{\mathrm{o}})$, $\mathrm{Comp}_{\mathrm{num}}$
(2) for $i = 0$; $i < \left\lfloor \sqrt{\mathrm{Mult}_{\mathrm{num}}} \right\rfloor$; $i{+}{+}$ do
(3)   $n_{\mathrm{i}} = i + 1$
(4)   $n_{\mathrm{o}} = \left\lfloor \dfrac{\mathrm{Mult}_{\mathrm{num}}}{n_{\mathrm{i}}} \right\rfloor$
(5)   if $\operatorname{mod}(n_{\mathrm{o}}, n_{\mathrm{i}}) == 0$ then
(6)     $(N_{\mathrm{i}}, N_{\mathrm{o}})$.append$(n_{\mathrm{i}}, n_{\mathrm{o}})$
(7)   end if
(8) end for
(9) for each $(n_{\mathrm{i}}, n_{\mathrm{o}})$ in $(N_{\mathrm{i}}, N_{\mathrm{o}})$ do
(10)   $\mathrm{comp}_{\mathrm{total}} = 0$
(11)   for each layer in Model do
(12)     $\mathrm{ic}, \mathrm{oc}, \mathrm{madd}_{\mathrm{num}}$ = layer.configuration
(13)     $n_{\mathrm{iu}} = \operatorname{mod}(\mathrm{ic}, n_{\mathrm{i}}) == 0 \;?\; 1 : \dfrac{\operatorname{mod}(\mathrm{ic}, n_{\mathrm{i}})}{n_{\mathrm{i}}}$
(14)     $n_{\mathrm{ou}} = \operatorname{mod}(\mathrm{oc}, n_{\mathrm{o}}) == 0 \;?\; 1 : \dfrac{\operatorname{mod}(\mathrm{oc}, n_{\mathrm{o}})}{n_{\mathrm{o}}}$
(15)     $\mathrm{comp}_{\mathrm{total}} \mathrel{+}= \mathrm{madd}_{\mathrm{num}} / (n_{\mathrm{iu}} \times n_{\mathrm{ou}})$
(16)   end for
(17)   $\mathrm{Comp}_{\mathrm{num}}$.append($\mathrm{comp}_{\mathrm{total}}$)
(18) end for
(19) return $(N_{\mathrm{i}}, N_{\mathrm{o}})$, $\mathrm{Comp}_{\mathrm{num}}$

Table 1: Valid configurations of the compute array

| $(N_{\mathrm{i}}, N_{\mathrm{o}})$ | 256 | 512 | 1024 | 2048 |
|---|---|---|---|---|
| 1 | (1, 256) | (1, 512) | (1, 1024) | (1, 2048) |
| 2 | (2, 128) | (2, 256) | (2, 512) | (2, 1024) |
| 3 | (4, 64) | (4, 128) | (4, 256) | (4, 512) |
| 4 | (6, 42) | (8, 64) | (8, 128) | (8, 256) |
| 5 | (8, 32) | (13, 39) | (13, 78) | (16, 128) |
| 6 | (16, 16) | (16, 32) | (16, 64) | (26, 78) |
| 7 | / | / | (32, 32) | (32, 64) |
| 8 | / | / | / | (45, 45) |

Table 2: Model accuracy ablation experiments (%)

| Model | Mode | Data type | Top-1 Accuracy | Top-5 Accuracy | Top-1 Diff | Top-5 Diff |
|---|---|---|---|---|---|---|
| DeiT-S/16 | Baseline | FP32 | 79.834 | 94.950 | / | / |
| | OS | FP16 | 79.814 | 94.968 | -0.020 | +0.018 |
| | OG | FP16 | 79.812 | 94.952 | -0.022 | +0.002 |
| | OL | FP16 | 79.816 | 94.948 | -0.018 | -0.002 |
| | ALL | FP16 | 79.814 | 94.984 | -0.020 | +0.034 |
| DeiT-B/16 | Baseline | FP32 | 81.798 | 95.594 | / | / |
| | OS | FP16 | 81.842 | 95.620 | +0.044 | +0.026 |
| | OG | FP16 | 81.832 | 95.610 | +0.034 | +0.016 |
| | OL | FP16 | 81.828 | 95.600 | +0.030 | +0.006 |
| | ALL | FP16 | 81.824 | 95.634 | +0.026 | +0.040 |
| ViT-B/16 | Baseline | FP32 | 84.528 | 97.294 | / | / |
| | OS | FP16 | 84.522 | 97.262 | -0.006 | -0.032 |
| | OG | FP16 | 84.520 | 97.306 | -0.008 | +0.012 |
| | OL | FP16 | 84.526 | 97.292 | -0.002 | -0.002 |
| | ALL | FP16 | 84.524 | 97.262 | -0.004 | -0.032 |
| ViT-L/16 | Baseline | FP32 | 85.840 | 97.818 | / | / |
| | OS | FP16 | 85.800 | 97.816 | -0.040 | -0.002 |
| | OG | FP16 | 85.818 | 97.818 | -0.022 | 0.000 |
| | OL | FP16 | 85.820 | 97.818 | -0.020 | 0.000 |
| | ALL | FP16 | 85.784 | 97.81 | -0.056 | -0.008 |
| Swin-T | Baseline | FP32 | 81.172 | 95.320 | / | / |
| | OS | FP16 | 81.152 | 95.304 | -0.020 | -0.016 |
| | OG | FP16 | 81.156 | 95.320 | -0.016 | 0.000 |
| | OL | FP16 | 81.164 | 95.322 | -0.008 | 0.002 |
| | ALL | FP16 | 81.148 | 95.300 | -0.024 | -0.02 |
| Swin-S | Baseline | FP32 | 83.648 | 97.05 | / | / |
| | OS | FP16 | 83.642 | 97.02 | -0.006 | -0.030 |
| | OG | FP16 | 83.646 | 97.08 | -0.002 | 0.030 |
| | OL | FP16 | 83.638 | 97.04 | -0.010 | -0.010 |
| | ALL | FP16 | 83.636 | 96.966 | -0.012 | -0.084 |

Table 3: Comparison with related FPGA accelerators

| Category | Item | Ref. [20] | Ref. [21] | Ref. [22] | Ref. [23] | This work |
|---|---|---|---|---|---|---|
| Flexibility | Softmax | √ | √ | √ | √ | √ |
| | GELU | × | × | × | √ | √ |
| | Layer normalization | × | × | × | × | √ |
| Resource usage | LUT | 17870 | 2564 | 2229 | 324 | 52639 |
| | Slice Register | 16400 | 2794 | 224 | 318 | 24403 |
| | DSP | 0 | 0 | 8 | 1 | 0 |
| FPGA device | | ZYNQ-7000 | – | Kintex-7 KC705 | Zynq-7000 ZC706 | Kintex XCKU15P |
| Frequency (MHz) | | 150 | 436 | 154 | 410 | 200 |
| Data precision (bit) | | 32 | 16 | 16 | 9+12 | 16+32 |
| Throughput (Gbit/s) | | 2.4 | 3.49 | 1.2 | 0.41 | 34.13 |
| TPL at 100 MHz (Mbit/s) | | 0.089 | 0.312 | 0.349 | 0.309 | 0.324 |

Table 4: Comparison with the GPU implementation and FPGA accelerators from other references

| | GPU | GPU | Ref. [13] | Ref. [24] | Ref. [9] | Ref. [10] | This work | This work |
|---|---|---|---|---|---|---|---|---|
| Model | ResNet-50 | ViT-B/16 | ResNet-50 | ResNet-50 | Swin-T | ViT-B/16 | ResNet-50 | ViT-B/16 |
| Computing platform | Nvidia V100 | Nvidia V100 | Xilinx KCU1500 | Xilinx XCVU9P | Xilinx Alveo U50 | Xilinx ZC7020 | Xilinx XCVU37P | Xilinx XCVU37P |
| Process (nm) | 12 | 12 | 20 | 16 | 16 | 28 | 16 | 16 |
| Frequency | 1.46 GHz | 1.46 GHz | 200 MHz | 125 MHz | 300 MHz | 150 MHz | 200/400 MHz | 200/400 MHz |
| Data type | FP32 | FP32 | INT8 | INT8 | FP16 | INT8 | INT8 | INT8+FP16 |
| Input size | 224×224 | 224×224 | 256×256 | 224×224 | 224×224 | 224×224 | 224×224 | 224×224 |
| GOP | 7.74 | 17.56 | 11.76 | 7.74 | – | 17.56 | 7.74 | 17.56 |
| DSP | – | – | 2240 | 6005 | 2420 | 220 | 608 | 608 |
| Computation latency (ms) | 6.32 | 17.74 | 11.69 | 28.90 | – | 363.64 | 13.12 | 31.09 |
| Frame rate (FPS) | 158.19 | 56.37 | 85.54 | 34.60 | – | 2.75 | 76.22 | 32.16 |
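Algorithm 1 can be sketched in Python as follows. This is a minimal sketch rather than the authors' implementation: it assumes the divisibility test in step (5) applies to the candidate pair, i.e. $\operatorname{mod}(n_{\mathrm{o}}, n_{\mathrm{i}}) = 0$, which is the reading that reproduces Table 1, and it represents each layer as a plain `(ic, oc, madd_num)` tuple. The function names and the two example layers are hypothetical.

```python
from math import isqrt


def enumerate_array_configs(mult_num: int) -> list[tuple[int, int]]:
    """Steps (1)-(8): list the (n_i, n_o) pairs where n_i divides n_o."""
    configs = []
    for i in range(isqrt(mult_num)):   # i < floor(sqrt(mult_num))
        n_i = i + 1
        n_o = mult_num // n_i          # floor(mult_num / n_i)
        if n_o % n_i == 0:             # assumed reading of step (5)
            configs.append((n_i, n_o))
    return configs


def utilization(channels: int, parallelism: int) -> float:
    """Steps (13)-(14): 1 if the channels tile evenly, else remainder / parallelism."""
    remainder = channels % parallelism
    return 1.0 if remainder == 0 else remainder / parallelism


def on_chip_computation(layers, configs) -> list[float]:
    """Steps (9)-(18): accumulate madd_num / (n_iu * n_ou) for every config."""
    totals = []
    for n_i, n_o in configs:
        comp_total = 0.0
        for ic, oc, madd_num in layers:
            comp_total += madd_num / (utilization(ic, n_i) * utilization(oc, n_o))
        totals.append(comp_total)
    return totals


# Enumerate the array shapes for the multiplier budgets used in Table 1.
for budget in (256, 512, 1024, 2048):
    print(budget, enumerate_array_configs(budget))

# Hypothetical two-layer model, for illustration only: (ic, oc, madd_num).
example_layers = [(3, 64, 1000), (64, 128, 2000)]
example_configs = enumerate_array_configs(256)
example_totals = on_chip_computation(example_layers, example_configs)
```

With a budget of 256 multipliers the enumeration yields (1, 256), (2, 128), (4, 64), (6, 42), (8, 32) and (16, 16), matching the first column of Table 1. The configuration with the smallest accumulated total is the one that wastes the least computation on partially filled tiles for the given model.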

[1] SIMONYAN K and ZISSERMAN A. Very deep convolutional networks for large-scale image recognition[C]. 3rd International Conference on Learning Representations, San Diego, USA, 2015.

[2] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770–778.

[3] SZEGEDY C, LIU Wei, JIA Yangqing, et al. Going deeper with convolutions[C]. 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, USA, 2015: 1–9.

[4] VASWANI A, SHAZEER N, PARMAR N, et al. Attention is all you need[C]. The 31st International Conference on Neural Information Processing Systems, Long Beach, USA, 2017: 6000–6010.

[5] CARION N, MASSA F, SYNNAEVE G, et al. End-to-end object detection with transformers[C]. The 16th European Conference on Computer Vision, Glasgow, UK, 2020: 213–229.

[6] CHEN Ying and KUANG Cheng. Pedestrian re-identification based on CNN and Transformer multi-scale learning[J]. Journal of Electronics & Information Technology, 2023, 45(6): 2256–2263. doi: 10.11999/JEIT220601.

[7] ZHAI Xiaohua, KOLESNIKOV A, HOULSBY N, et al. Scaling vision transformers[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 1204–1213.

[8] DOSOVITSKIY A, BEYER L, KOLESNIKOV A, et al. An image is worth 16x16 words: transformers for image recognition at scale[C]. 9th International Conference on Learning Representations, 2021.

[9] WANG Teng, GONG Lei, WANG Chao, et al. ViA: A novel vision-transformer accelerator based on FPGA[J]. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 2022, 41(11): 4088–4099. doi: 10.1109/TCAD.2022.3197489.

[10] NAG S, DATTA G, KUNDU S, et al. ViTA: A vision transformer inference accelerator for edge applications[C]. 2023 IEEE International Symposium on Circuits and Systems, Monterey, USA, 2023: 1–5.

[11] LI Zhengang, SUN Mengshu, LU A, et al. Auto-ViT-Acc: an FPGA-aware automatic acceleration framework for vision transformer with mixed-scheme quantization[C]. 2022 32nd International Conference on Field-Programmable Logic and Applications, Belfast, UK, 2022: 109–116.

[12] WU Ruidong, LIU Bing, FU Ping, et al. Convolutional neural network accelerator architecture design for ultimate edge computing scenario[J]. Journal of Electronics & Information Technology, 2023, 45(6): 1933–1943. doi: 10.11999/JEIT220130.

[13] NGUYEN D T, JE H, NGUYEN T N, et al. ShortcutFusion: from tensorflow to FPGA-based accelerator with a reuse-aware memory allocation for shortcut data[J]. IEEE Transactions on Circuits and Systems I: Regular Papers, 2022, 69(6): 2477–2489. doi: 10.1109/TCSI.2022.3153288.

[14] LI Tianyang, ZHANG Fan, FAN Xitian, et al. Unified accelerator for attention and convolution in inference based on FPGA[C]. 2023 IEEE International Symposium on Circuits and Systems, Monterey, USA, 2023: 1–5.

[15] LOMONT C. Fast inverse square root[EB/OL]. http://lomont.org/papers/2003/InvSqrt.pdf, 2023.

[16] WU E, ZHANG Xiaoqian, BERMAN D, et al. A high-throughput reconfigurable processing array for neural networks[C]. 27th International Conference on Field Programmable Logic and Applications, Ghent, Belgium, 2017: 1–4.

[17] FU Yao, WU E, SIRASAO A, et al. Deep learning with INT8 optimization on Xilinx devices[EB/OL]. Xilinx. https://www.origin.xilinx.com/content/dam/xilinx/support/documents/white_papers/wp486-deep-learning-int8.pdf, 2017.

[18] ZHU Feng, GONG Ruihao, YU Fengwei, et al. Towards unified INT8 training for convolutional neural network[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 1966–1976.

[19] JACOB B, KLIGYS S, CHEN Bo, et al. Quantization and training of neural networks for efficient integer-arithmetic-only inference[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 2704–2713.

[20] SUN Qiwei, DI Zhixiong, LV Zhengyang, et al. A high speed SoftMax VLSI architecture based on basic-split[C]. 2018 14th IEEE International Conference on Solid-State and Integrated Circuit Technology, Qingdao, China, 2018: 1–3.

[21] WANG Meiqi, LU Siyuan, ZHU Danyang, et al. A high-speed and low-complexity architecture for softmax function in deep learning[C]. 2018 IEEE Asia Pacific Conference on Circuits and Systems, Chengdu, China, 2018: 223–226.

[22] GAO Yue, LIU Weiqiang, and LOMBARDI F. Design and implementation of an approximate softmax layer for deep neural networks[C]. 2020 IEEE International Symposium on Circuits and Systems, Seville, Spain, 2020: 1–5.

[23] LI Yue, CAO Wei, ZHOU Xuegong, et al. A low-cost reconfigurable nonlinear core for embedded DNN applications[C]. 2020 International Conference on Field-Programmable Technology, Maui, USA, 2020: 35–38.

[24] HADJIS S and OLUKOTUN K. TensorFlow to cloud FPGAs: Tradeoffs for accelerating deep neural networks[C]. 29th International Conference on Field Programmable Logic and Applications, Barcelona, Spain, 2019: 360–366.
