Joint Internal and External Parameters Calibration of Optical Compound Eye Based on Random Noise Calibration Pattern


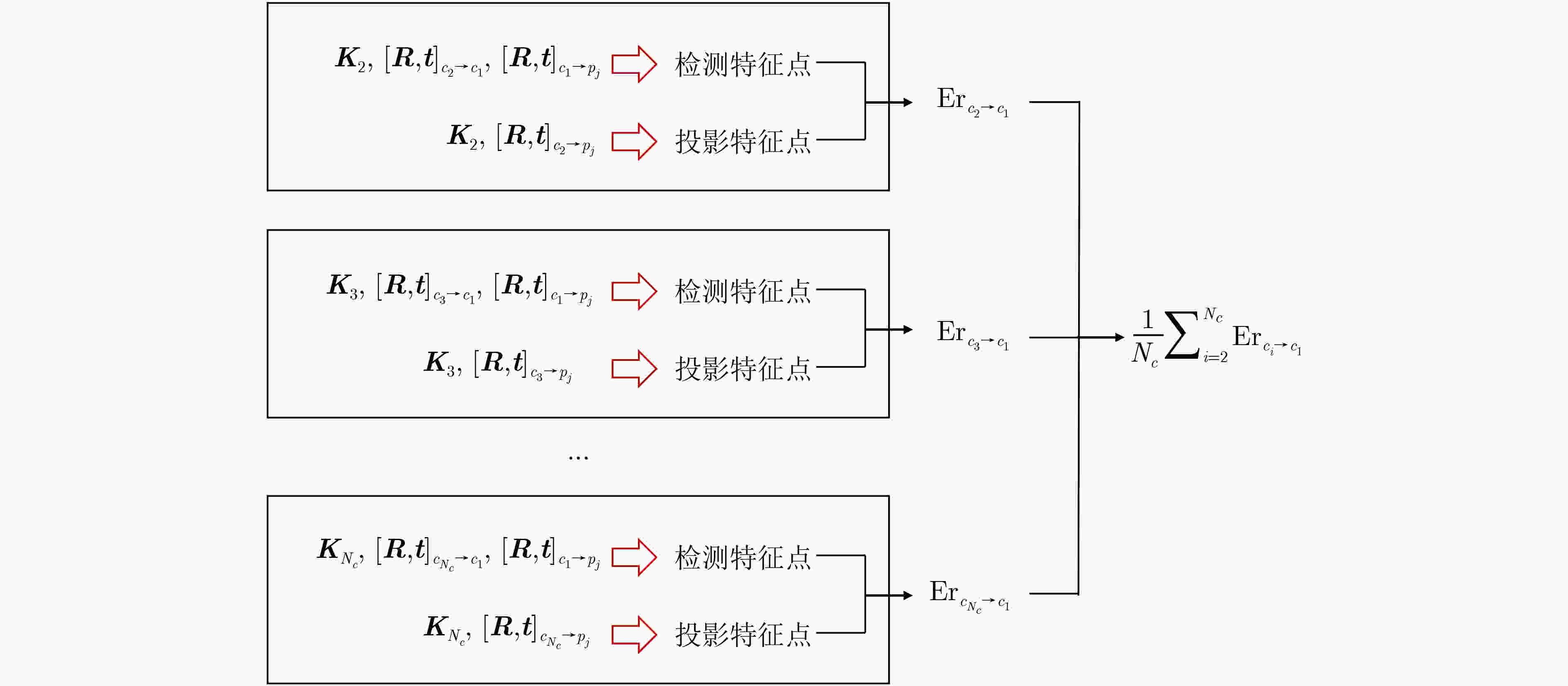

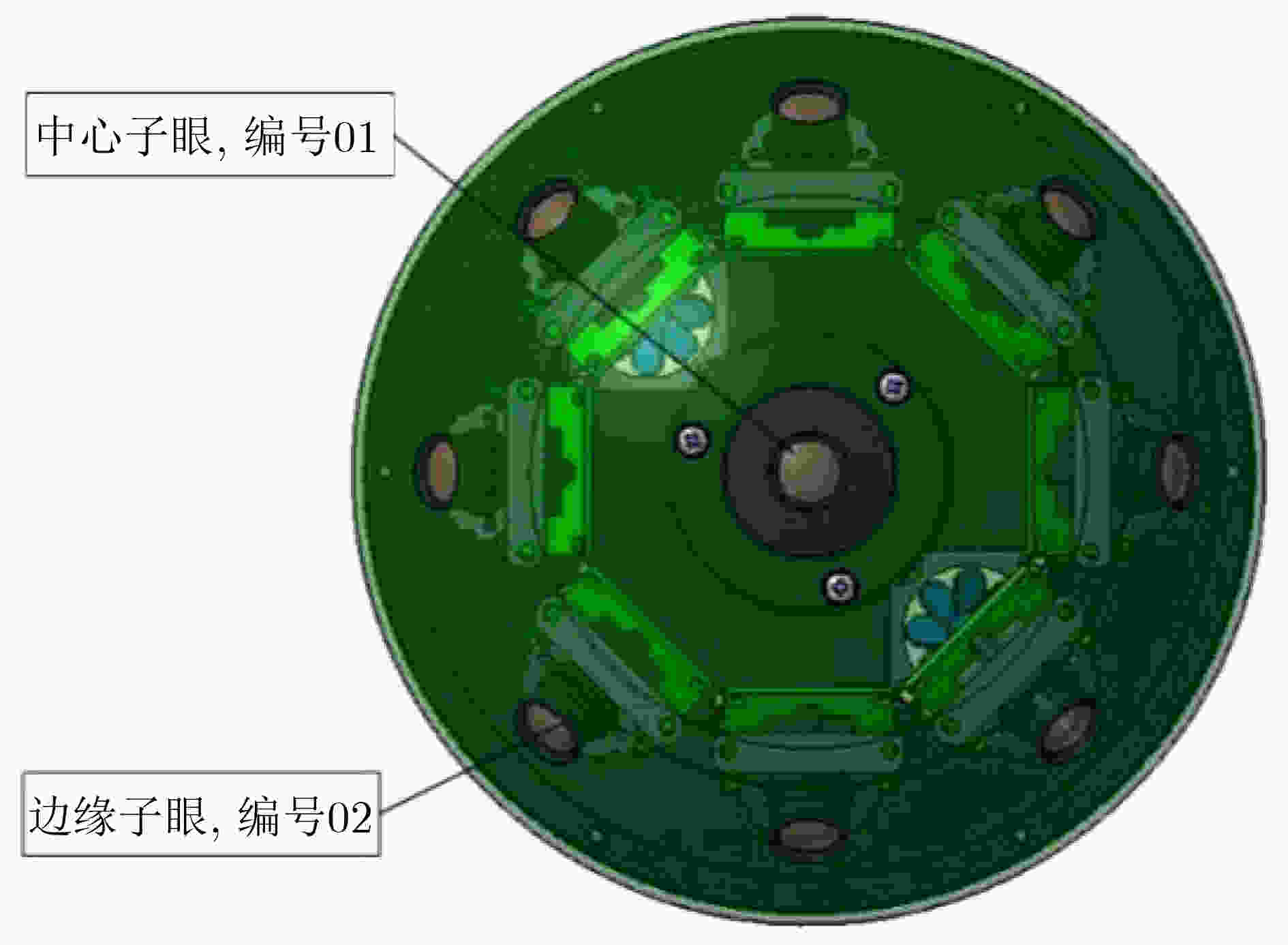

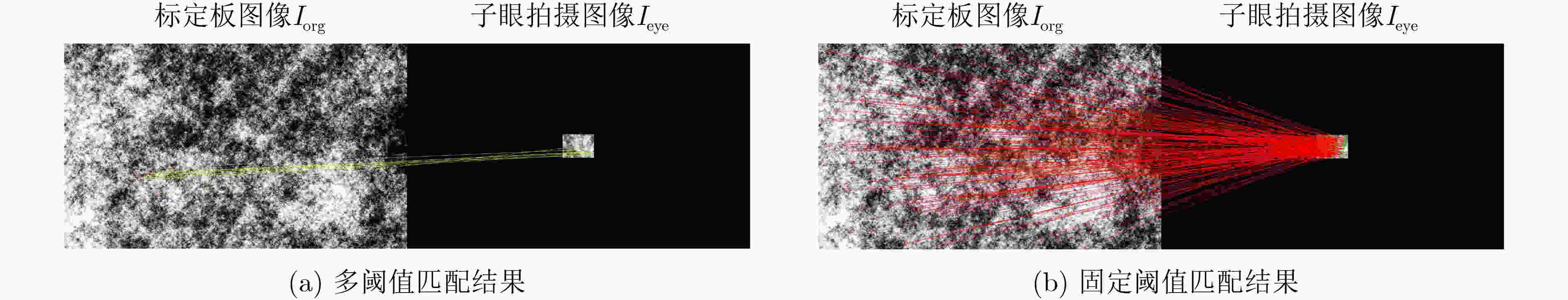

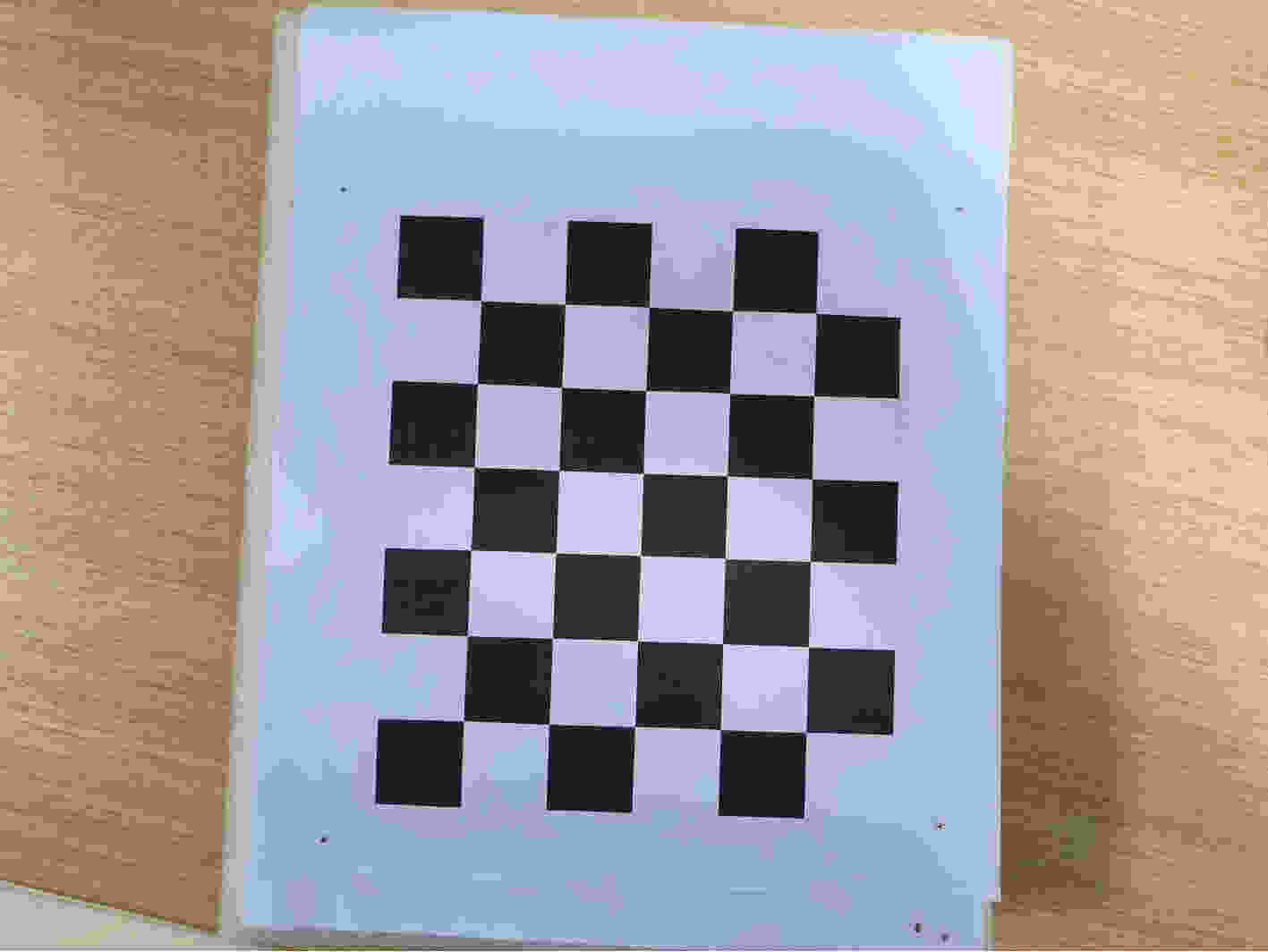

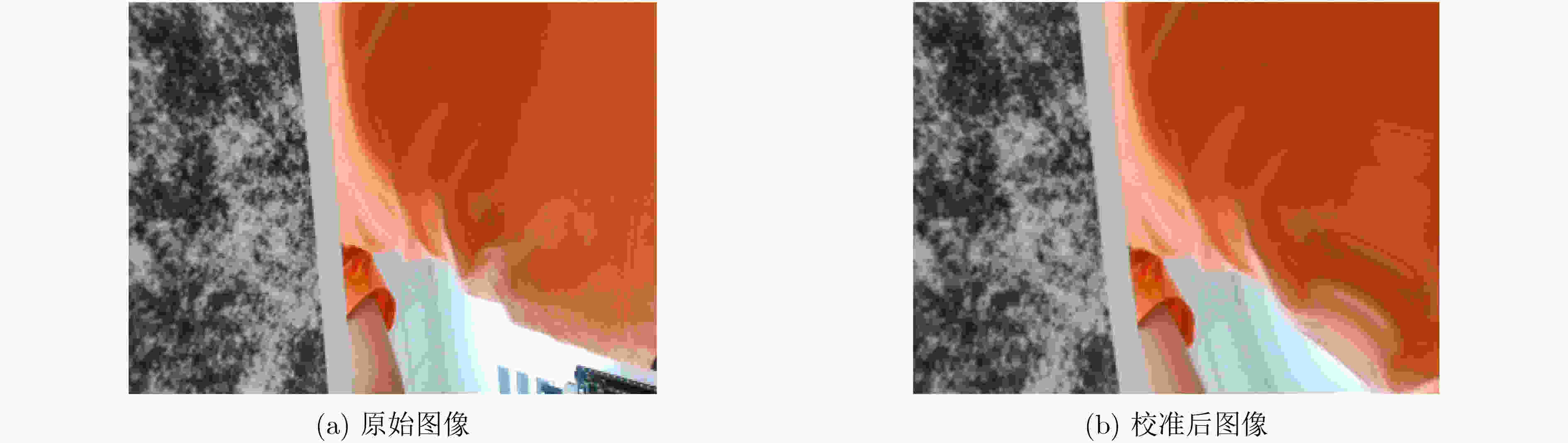

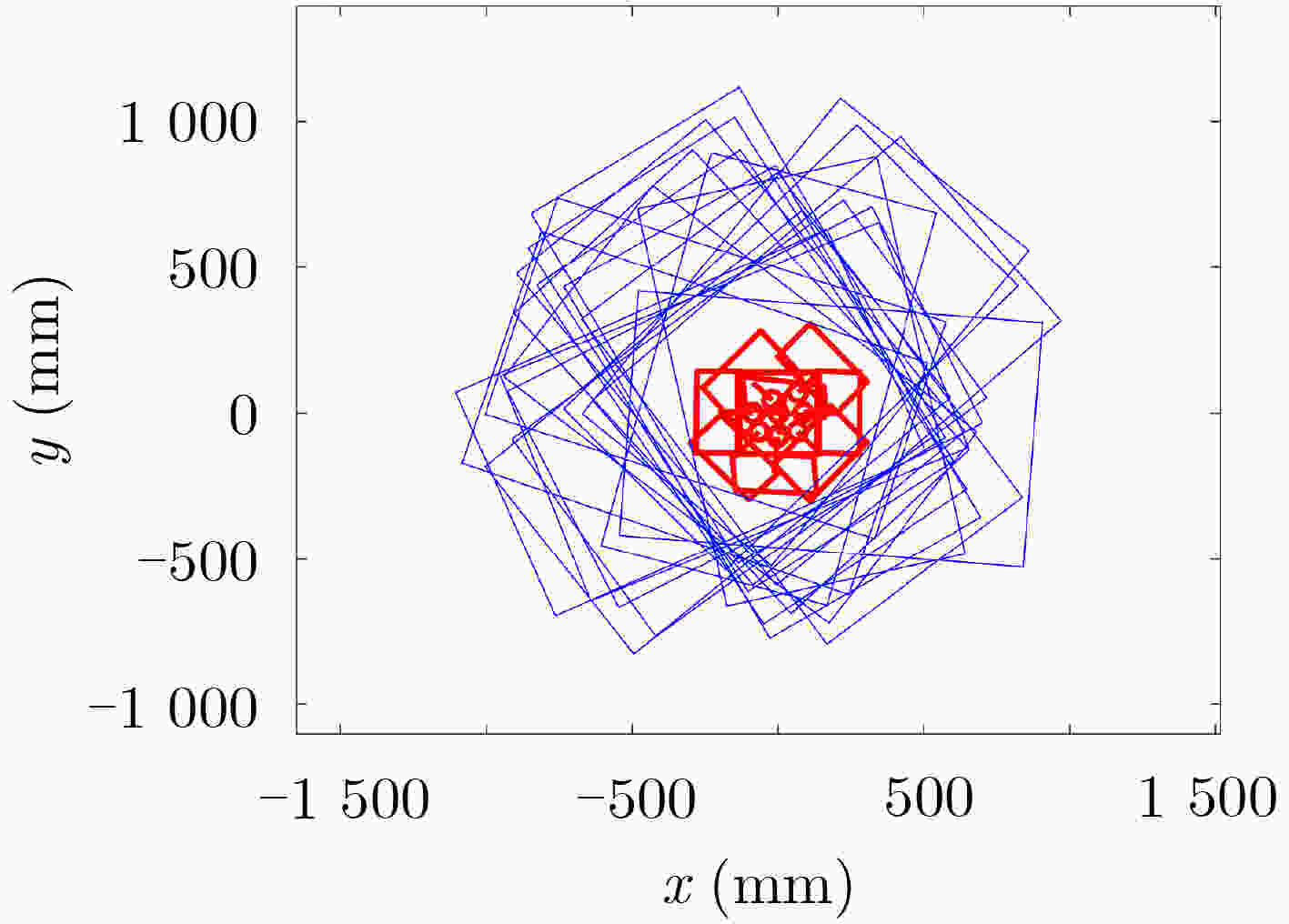

Abstract: Optical compound eyes are increasingly used in unmanned systems for tasks such as precise guidance and obstacle-avoidance navigation, and high-accuracy calibration of the compound eye is a prerequisite for performing these tasks well. The classical Zhang's chessboard calibration method requires every ommatidium of the optical compound eye to observe the complete chessboard; however, the structural complexity of optical compound eyes makes this requirement difficult to satisfy in practice. To overcome this limitation, this paper proposes a joint internal and external parameter calibration algorithm for optical compound eyes based on a random noise calibration pattern. Each ommatidium only needs to capture local information of the random noise pattern, so optical compound eyes of arbitrary configuration and with any number of ommatidia can be calibrated simply and quickly. To improve calibration stability, a multi-threshold matching mechanism is introduced to prevent image-matching failures caused by the sparse feature points within an ommatidium's field of view. In addition, an error model for the joint internal and external parameter calibration is presented to evaluate the accuracy of the proposed algorithm. Experimental comparisons with Zhang's chessboard method demonstrate the stability and robustness of the proposed algorithm, and experiments on a physical optical compound-eye system verify its high calibration accuracy.
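The abstract does not spell out the multi-threshold matching rule. The sketch below is one plausible reading, offered only as an illustration: it assumes feature descriptors have already been extracted from two images of the random noise pattern (the detector is not specified in the source) and applies a nearest-neighbour ratio test whose threshold is relaxed step by step until enough matches survive. The function names, the threshold ladder `(0.6, 0.7, 0.8)` and `min_matches` are hypothetical choices, not the authors' values.

```python
import numpy as np


def ratio_test_matches(desc_a, desc_b, ratio):
    """Nearest-neighbour ratio test: keep (i, j) when the closest descriptor j
    in desc_b is clearly closer to desc_a[i] than the second-closest one."""
    if len(desc_b) < 2:
        return []  # the ratio test needs at least two candidates
    # Pairwise Euclidean distances, shape (len(desc_a), len(desc_b)).
    d = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    order = np.argsort(d, axis=1)
    matches = []
    for i in range(d.shape[0]):
        best, second = order[i, 0], order[i, 1]
        if d[i, best] < ratio * d[i, second]:
            matches.append((i, int(best)))
    return matches


def multi_threshold_match(desc_a, desc_b, ratios=(0.6, 0.7, 0.8), min_matches=8):
    """Hypothetical multi-threshold scheme: start with a strict ratio and relax it
    only while too few matches survive (sparse features in a small field of view).
    Returns (matches, ratio_used); ratio_used is None if every threshold failed."""
    matches = []
    for r in ratios:
        matches = ratio_test_matches(desc_a, desc_b, r)
        if len(matches) >= min_matches:
            return matches, r
    return matches, None
```

Starting strict keeps false matches out when features are plentiful; the looser thresholds are reached only when a sparse view would otherwise produce too few correspondences.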


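Table 1 Comparison of extrinsic parameters for selected ommatidium pairs (°/pixel)

| Ommatidium pair | Proposed method | Zhang's chessboard | Proposed method (noise) | Zhang's chessboard (noise) |
|---|---|---|---|---|
| 01-05 | 54.97/0.23 | 53.42/0.27 | 54.44/0.22 | 57.72/0.58 |
| 01-09 | 175.20/0.23 | 178.92/0.26 | 177.63/0.22 | 177.63/0.44 |
| 02-09 | 48.71/0.23 | 47.38/0.22 | 49.13/0.22 | 46.48/0.35 |
| 06-07 | 54.12/0.23 | 54.33/0.31 | 55.26/0.22 | 54.97/0.46 |

Table 2 Intrinsic parameters of the ommatidia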

| Ommatidium | $f_x, f_y$ | $o_u, o_v$ | $k_1, k_2, p_1, p_2$ | Mean reprojection error (pixel) |
|---|---|---|---|---|
| 01 | 177.89, 236.81 | 159.70, 125.26 | 0.0445, -0.0387, 0.0011, -0.0008 | 0.29 |
| 02 | 176.12, 227.397 | 171.23, 128.01 | 0.0324, -0.0396, 0.0066, -0.0091 | 0.33 |
| 03 | 172.933, 254.3033 | 186.32, 113.92 | 0.1419, -0.0760, -0.0187, 0.0142 | 0.34 |
| 04 | 191.69, 259.85 | 178.81, 120.80 | 0.0260, -0.03523, -0.0001, -0.0077 | 0.38 |
| 05 | 191.14, 276.22 | 160.46, 137.70 | 0.1469, -0.0580, 0.0182, 0.0252 | 0.33 |
| 06 | 171.740, 237.4745 | 182.34, 114.06 | -0.0024, -0.0082, 0.0098, -0.0013 | 0.31 |
| 07 | 202.45, 261.1675 | 153.47, 132.50 | 0.1506, -0.2704, -0.0066, 0.0021 | 0.32 |
| 08 | 187.401, 250.6387 | 184.73, 115.53 | 0.0742, -0.1270, 0.0005, -0.0453 | 0.30 |
| 09 | 200.72, 270.78 | 159.59, 122.97 | -0.0193, -0.0491, -0.0114, -0.0291 | 0.31 |

Table 3 Extrinsic parameters of the ommatidia

| Extrinsics (pair) | $\boldsymbol{T}$ (mm) | $\boldsymbol{R}_{\mathrm{om}}$ (rad) |
|---|---|---|
| 01-02 | 1210.27, -174.98, 118.74 | -0.28863, -0.77946, 2.0756 |
| 01-03 | 216.34, 122.55, -56.82 | -0.31501, -0.24960, 1.3377 |
| 01-04 | -1132.90, -419.11, -408.43 | 0.55101, -0.34772, 0.81899 |
| 01-05 | -111.479, 48.309, -519.68 | -0.5102, -0.8016, 0.1326 |
| 01-06 | -208.97, -399.07, 394.80 | -0.00237, 0.52474, -0.5904 |
| 01-07 | -167.19, 1142.11, -255.55 | 0.7406, -0.20962, -1.48558 |
| 01-08 | 198.78, 701.92, 1170.49 | 1.00398, 0.98246, -1.99923 |
| 01-09 | -476.94, 101.36, 45.59 | 0.64625, 0.83267, -2.87042 |
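The parameter names in Tables 2 and 3 ($f_x, f_y$, $o_u, o_v$, $k_1, k_2, p_1, p_2$, $\boldsymbol{R}_{\mathrm{om}}$) look like the Bouguet toolbox / OpenCV convention. The sketch below assumes that convention: $\boldsymbol{R}_{\mathrm{om}}$ is a rotation vector (unit axis times angle in rad), $k_1, k_2$ are radial and $p_1, p_2$ tangential distortion coefficients, and skew is zero. Under this assumption, the norm of $\boldsymbol{R}_{\mathrm{om}}$ for pairs 01-05 and 01-09 (about 0.9594 rad and 3.0578 rad) converts to 54.97° and 175.20°. These match the first "Proposed method" values in Table 1, which suggests that column reports the relative rotation angle. The source does not state this explicitly. The helper names and the mean-reprojection-error definition (mean Euclidean pixel distance) are illustrative assumptions, not the paper's error model.

```python
import numpy as np


def rodrigues(om):
    """Rotation vector (axis * angle, rad) -> 3x3 rotation matrix (Rodrigues formula)."""
    om = np.asarray(om, dtype=float)
    theta = np.linalg.norm(om)
    if theta < 1e-12:
        return np.eye(3)
    k = om / theta
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])  # skew-symmetric cross-product matrix of the axis
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * K @ K


def rotation_angle_deg(om):
    """Rotation angle encoded by a rotation vector, in degrees."""
    return float(np.degrees(np.linalg.norm(om)))


def project(X, fx, fy, ou, ov, k1, k2, p1, p2):
    """Pinhole projection with radial (k1, k2) and tangential (p1, p2) distortion, zero skew.
    X is a 3D point in the ommatidium's camera frame (Z > 0)."""
    x, y = X[0] / X[2], X[1] / X[2]
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return fx * xd + ou, fy * yd + ov


def mean_reprojection_error(observed, projected):
    """Mean Euclidean distance (pixel) between observed and reprojected points."""
    diff = np.asarray(observed, float) - np.asarray(projected, float)
    return float(np.mean(np.linalg.norm(diff, axis=1)))


# Values copied from Table 2 (ommatidium 01) and Table 3 (pair 01-05).
INTRINSICS_01 = (177.89, 236.81, 159.70, 125.26, 0.0445, -0.0387, 0.0011, -0.0008)
OM_01_05 = (-0.5102, -0.8016, 0.1326)

if __name__ == "__main__":
    print(round(rotation_angle_deg(OM_01_05), 2))   # about 54.97, cf. Table 1
    print(project((0.1, 0.0, 1.0), *INTRINSICS_01))  # distorted pixel coordinates
```

Because each value in Table 3 is given relative to ommatidium 01, the rotation between two other ommatidia would have to be composed from two such matrices; the sketch leaves this out because the source does not state the direction convention of $\boldsymbol{R}_{\mathrm{om}}$ and $\boldsymbol{T}$.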

