Tracklet Generation Method by Submodular Optimization for Multi-Object Tracking


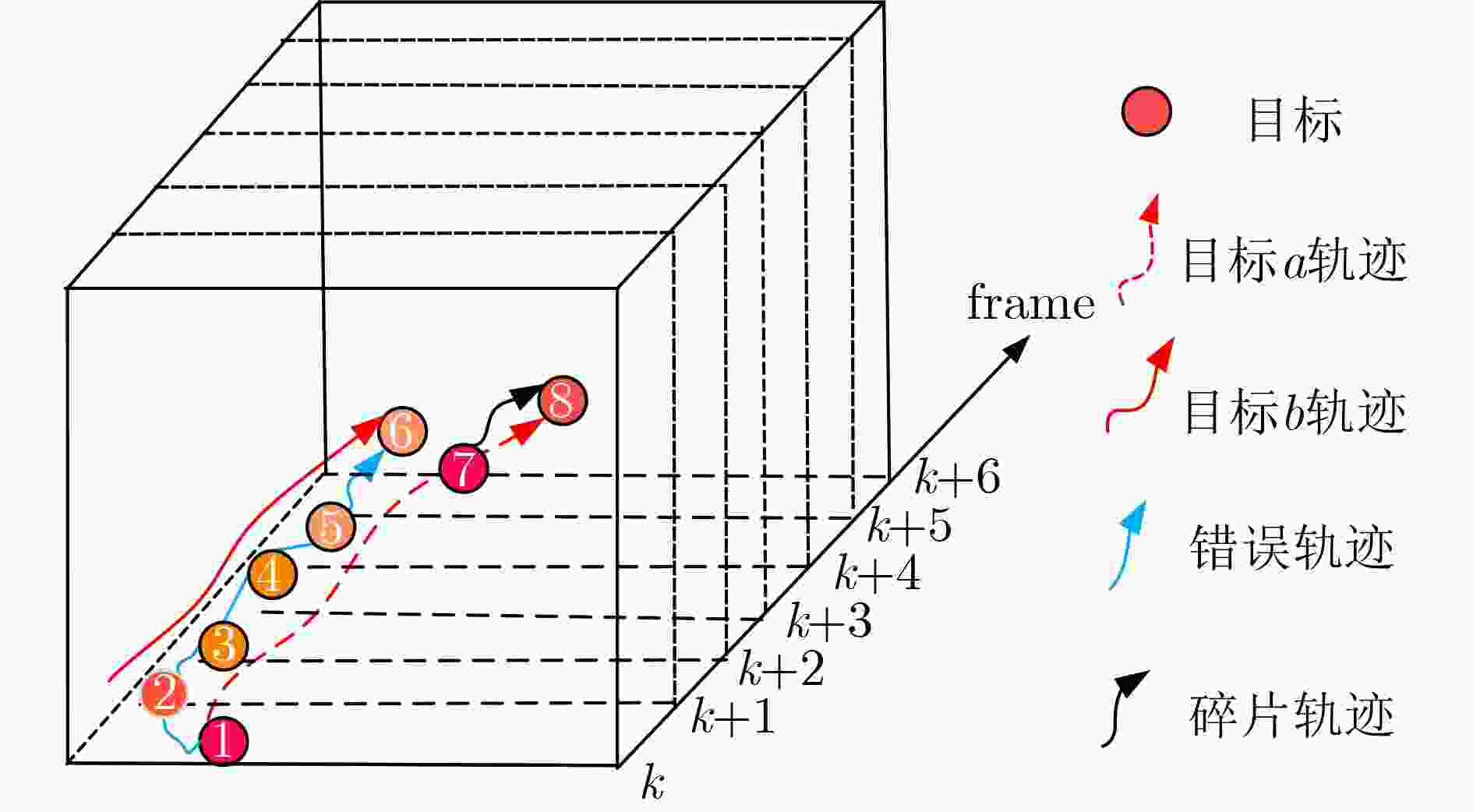

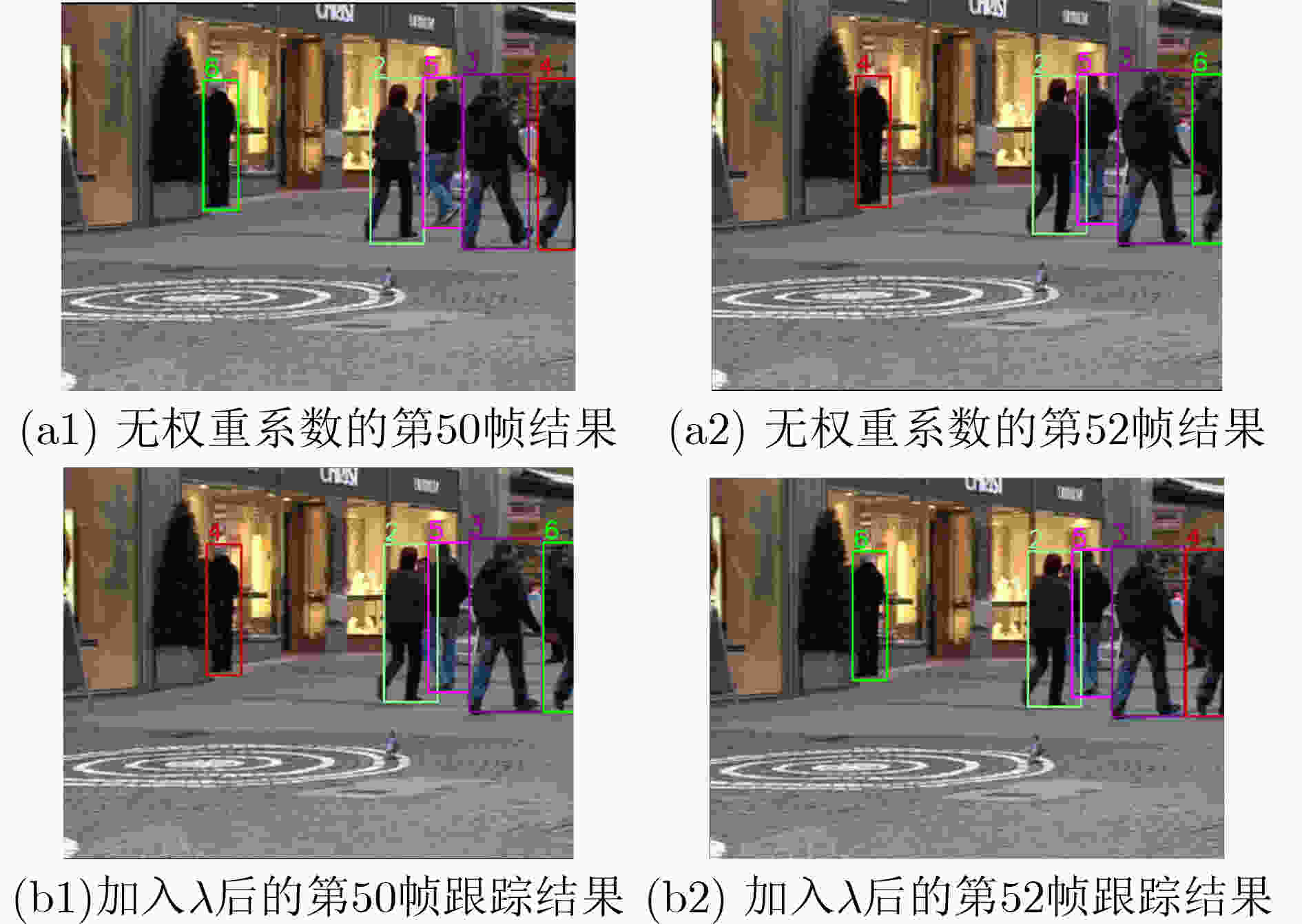

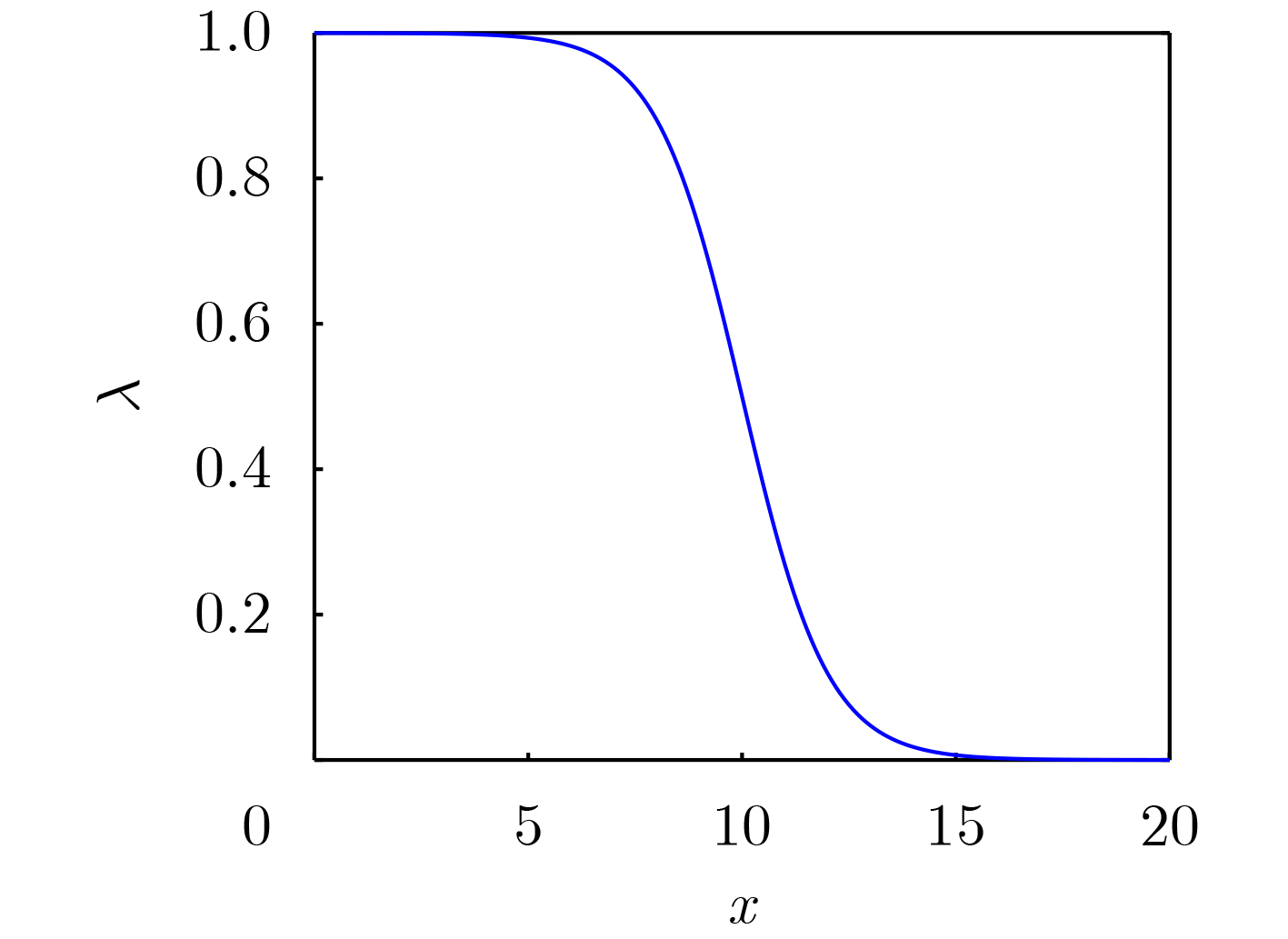

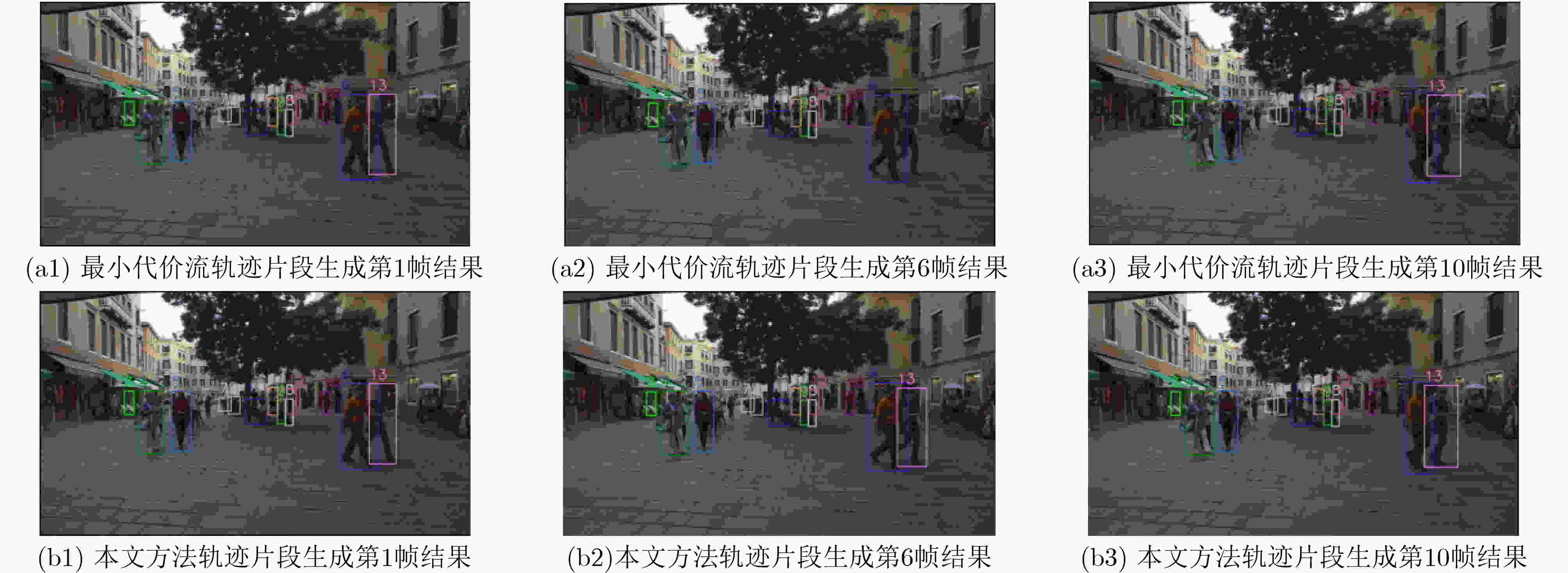

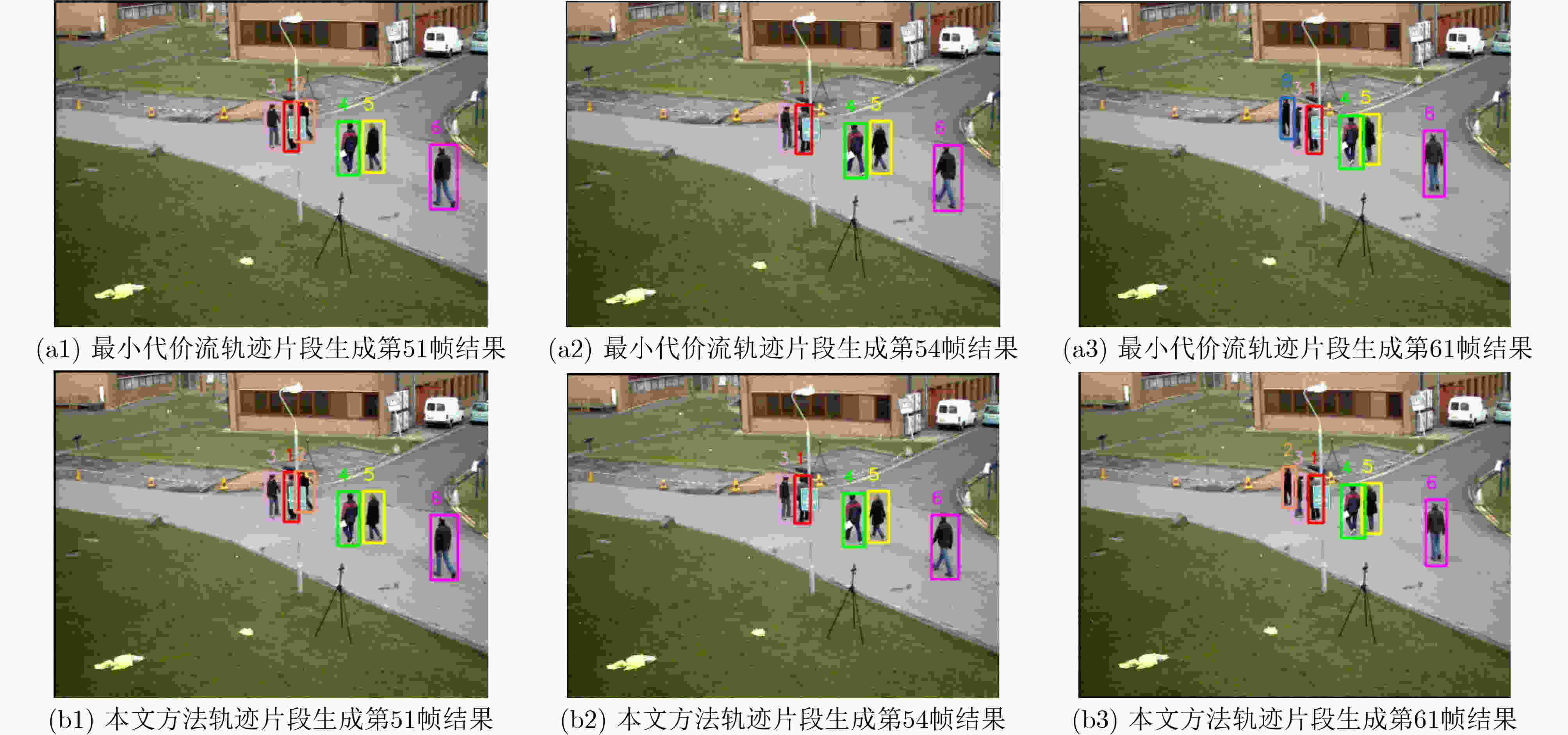

Abstract: As the foundation of many intelligent vision tasks, Multi-Object Tracking (MOT) remains a challenging problem in computer vision. Occlusion is a major factor degrading tracking accuracy. To address it, this paper adopts the tracking-by-detection strategy and obtains the complete trajectories of targets by associating tracklets. To improve tracking robustness, tracklet generation is cast as the facility location problem from operations research, and a tracklet generation method based on submodular optimization is proposed. The method fuses two complementary features, Histogram of Oriented Gradient (HOG) and Color Name (CN), to represent target appearance, and designs a weighting coefficient from motion information to improve matching accuracy. Finally, a constrained submodular maximization algorithm performs data association at the global level to generate the tracklets. Comparative experiments on several benchmark datasets show that the proposed method handles occlusion effectively while maintaining tracking performance.
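To make the facility location view concrete, here is a minimal sketch of the textbook facility location function, F(A) = Σ_i max_{j∈A} s_ij, maximized greedily under a cardinality constraint. It is an illustration only, not the paper's formulation: the similarity matrix `sim`, the `budget` parameter, and both function names are assumptions introduced for this example.

```python
import numpy as np


def facility_location_value(sim, selected):
    """Textbook facility location value: F(A) = sum_i max_{j in A} sim[i, j]."""
    if not selected:
        return 0.0
    return float(sim[:, sorted(selected)].max(axis=1).sum())


def greedy_select(sim, budget):
    """Pick up to `budget` columns, each time taking the largest marginal gain."""
    selected = []
    remaining = set(range(sim.shape[1]))
    current = 0.0
    for _ in range(budget):
        best_j, best_gain = None, 0.0
        for j in sorted(remaining):
            gain = facility_location_value(sim, selected + [j]) - current
            if gain > best_gain:
                best_j, best_gain = j, gain
        if best_j is None:  # no candidate improves the objective
            break
        selected.append(best_j)
        remaining.remove(best_j)
        current += best_gain
    return selected, current


# Hypothetical similarities: rows are "clients", columns are candidate "facilities".
sim = np.array([
    [0.9, 0.1, 0.2],
    [0.8, 0.2, 0.1],
    [0.1, 0.7, 0.3],
])
chosen, value = greedy_select(sim, budget=2)
print(chosen, round(value, 2))
```

The function is monotone and submodular: adding column 1 to the empty set gains 1.0, while adding it after column 0 has been chosen gains only 0.6. This diminishing-returns property is what lets a greedy procedure give approximation guarantees for constrained maximization.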


Key words:

- Multi-Object Tracking (MOT)

- Tracklet

- Data association

- Submodular optimization


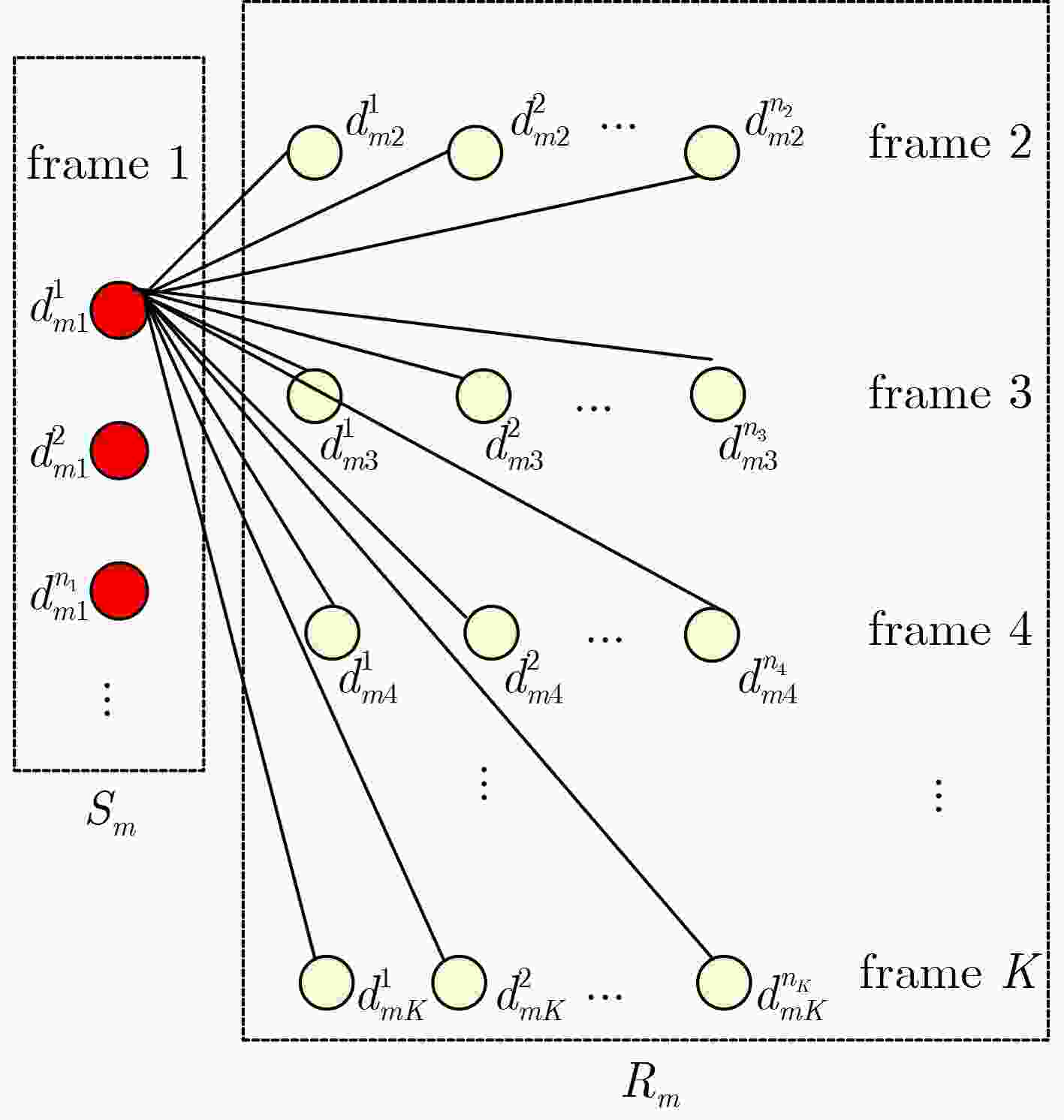

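Algorithm 1 Tracklet generation based on submodular optimization

Input: video segment $ V_m $, containing K image frames
Output: set of generated tracklets $ T_m $ = {$ t_1^m $, $ t_2^m $, ···}
Initialization: i=1, j=1, k=1, α=0.85; $ T_m $ = Ø; detection set $ D_m = S_m \cup R_m $, where the initial target set is $ {S_m} = \{ d_{mk}^1,d_{mk}^2, \cdots ,d_{mk}^{{n_k}}\} $ and the candidate target set is ${R_m} = \{ d_{m(k + 1)}^1, \cdots ,d_{m(k + 1)}^{{n_{k + 1}}}, \cdots ,d_{mK}^1, \cdots ,d_{mK}^{{n_K}}\} $
Procedure:
(1) Extract the HOG and CN features of every detection in $ D_m $;
(2) while k<=K
(3) Compute the similarity between the targets in the initial target set $ S_m $ and those in the candidate target set $ R_m $ according to Eq. (14)
(4) while i<=$ n_i $
(5) $ t^i_m $ = Ø
(6) while j<K
(7) In the candidate target set $ R_m $, select the target $ d^p_{mr} $ with the highest similarity to the initial target $ d^i_{mk} $; denote this similarity by $ s_{ip} $
(8) if ($ s_{ip} $ > w)
(9) $ t^i_m $ ← {$ d^p_{mr}$}∪ $t^i_m $
(10) Remove from the candidate target set the other targets in frame r, the frame containing $ d^p_{mr} $
(11) j++
(12) end if
(13) end while
(14) i++
(15) $ T_m $ ← {$ t^i_m $}∪ $ T_m $
(16) end while
(17) k=k+1
(18) Form the initial target set $ {S_m} = \{ d_{mk}^1,d_{mk}^2, \cdots ,d_{mk}^{{n_k}}\} $ from the targets in frame k that have not been matched
(19) end while

Table 1 Tracking performance comparison on the PETS09-S2L1 and TUD datasets

| Dataset | Method | MOTA(%)↑ | MOTP(%)↑ | MT(%)↑ | ML(%)↓ | IDS↓ |
| --- | --- | --- | --- | --- | --- | --- |
| PETS09-S2L1 | Intra Track[29] | 81.6 | 79.4 | – | – | 684 |
| PETS09-S2L1 | R1TA Track[30] | 96.0 | 82.0 | 100.0 | 0 | 14 |
| PETS09-S2L1 | DSC[31] | 90.0 | 56.8 | 89.5 | 0 | 15 |
| PETS09-S2L1 | Proposed method | 96.3 | 72.3 | 96.2 | 0 | 12 |
| TUD-Stadtmitte | GMMCP[12] | 82.4 | 73.9 | – | 0 | 3 |
| TUD-Stadtmitte | CNNTCM[27] | 80.8 | – | 90.0 | 0 | – |
| TUD-Stadtmitte | R1TA Track[30] | 84.8 | 89.6 | 70.0 | – | – |
| TUD-Stadtmitte | DSC[31] | 72.4 | 52.6 | 60.0 | 0 | 10 |
| TUD-Stadtmitte | Proposed method | 90.6 | 87.6 | 90.0 | 0 | 0 |
| TUD-Crossing | GMMCP[12] | 91.9 | 70.0 | – | 0 | 7 |
| TUD-Crossing | SUBM[16] | 60.2 | 77.2 | 15.4 | 7.7 | 32 |
| TUD-Crossing | Proposed method | 92.4 | 75.6 | 18.6 | 0 | 2 |

Table 2 Tracking performance comparison on the MOT17 dataset

| Method | MOTA(%)↑ | IDF1↑ | MOTP(%)↑ | MT(%)↑ | ML(%)↓ | IDS↓ |
| --- | --- | --- | --- | --- | --- | --- |
| IOU[11] | 45.5 | 39.4 | 76.9 | 15.7 | 40.5 | 5988 |
| EDMT[32] | 50.0 | 51.3 | 77.3 | 21.6 | 36.3 | 2264 |
| jCC[33] | 51.2 | 54.5 | 75.9 | 20.9 | 37.0 | 1802 |
| LPT[9] | 57.3 | 57.7 | – | 23.3 | 36.9 | 1424 |
| MPNTrack[34] | 58.8 | 61.7 | – | 28.8 | 33.5 | 1185 |
| JBNOT[35] | 52.6 | 50.8 | 77.1 | 19.7 | 35.8 | 3050 |
| TT17[36] | 54.9 | 63.1 | – | 24.4 | 38.1 | 1088 |
| Deep-TAMA[37] | 50.3 | 53.5 | – | 19.2 | 37.5 | 2192 |
| Proposed method | 56.4 | 58.2 | 78.1 | 21.1 | 32.8 | 1097 |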
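Steps (5) to (13) of Algorithm 1, which grow one tracklet from a single initial target, can be sketched roughly as follows. This is a simplified illustration under several assumptions, not the authors' implementation. The similarities are passed in as a precomputed dictionary, because Eq. (14) is not reproduced in this section. The threshold value 0.5 is hypothetical. The seed detection is kept as the first element of the tracklet. The loop stops as soon as no remaining candidate exceeds the threshold. The name `grow_tracklet` and the `(frame, index)` encoding of detections are invented for the example.

```python
from typing import Dict, List, Tuple

# Hypothetical encoding: (frame index, detection index within that frame).
Detection = Tuple[int, int]


def grow_tracklet(seed: Detection,
                  similarity: Dict[Detection, float],
                  threshold: float) -> List[Detection]:
    """Greedily extend one tracklet starting from `seed`.

    `similarity` maps each candidate detection to its similarity with the seed.
    """
    tracklet = [seed]
    pool = dict(similarity)  # work on a copy so the caller's dict is untouched
    while pool:
        best = max(pool, key=pool.get)
        if pool[best] <= threshold:
            break  # assumption: stop when no candidate clears the threshold
        tracklet.append(best)
        frame = best[0]
        # At most one detection per frame: drop all candidates of the chosen frame.
        pool = {d: s for d, s in pool.items() if d[0] != frame}
    return sorted(tracklet)


seed = (1, 0)
similarity = {
    (2, 0): 0.91, (2, 1): 0.40,
    (3, 0): 0.35, (3, 1): 0.88,
    (4, 0): 0.30, (4, 1): 0.20,
}
tracklet = grow_tracklet(seed, similarity, threshold=0.5)
print(tracklet)
```

In this toy input the target is matched in frames 2 and 3 but not in frame 4, where both candidates fall below the threshold, so the tracklet ends early. In the full algorithm, unmatched detections then seed new tracklets in later iterations of the outer loop.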

[1] ZHAO Hangyue, ZHANG Hongpu, and ZHAO Yanyun. YOLOv7-sea: Object detection of maritime UAV images based on improved YOLOv7[C]. 2023 IEEE/CVF Winter Conference on Applications of Computer Vision Workshops (WACVW), Waikoloa, USA, 2023: 233–238. doi: 10.1109/WACVW58289.2023.00029.

[2] GIRSHICK R. Fast R-CNN[C]. 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 2015: 1440–1448. doi: 10.1109/ICCV.2015.169.

[3] ZHONG Xionghu, TAY W P, LENG Mei, et al. TDOA-FDOA based multiple target detection and tracking in the presence of measurement errors and biases[C]. 2016 IEEE 17th International Workshop on Signal Processing Advances in Wireless Communications, Edinburgh, UK, 2016: 1–6. doi: 10.1109/SPAWC.2016.7536786.

[4] BEWLEY A, GE Zongyuan, OTT L, et al. Simple online and realtime tracking[C]. 2016 IEEE International Conference on Image Processing, Phoenix, USA, 2016: 3464–3468. doi: 10.1109/ICIP.2016.7533003.

[5] WU Huiling and LI Weihai. Robust online multi-object tracking based on KCF trackers and reassignment[C]. 2016 IEEE Global Conference on Signal and Information Processing, Washington, USA, 2016: 124–128. doi: 10.1109/GlobalSIP.2016.7905816.

[6] LIU Huajun, ZHANG Hui, and MERTZ C. DeepDA: LSTM-based deep data association network for multi-targets tracking in clutter[C]. 2019 22th International Conference on Information Fusion, Ottawa, Canada, 2019: 1–8. doi: 10.23919/FUSION43075.2019.9011217.

[7] LENZ P, GEIGER A, and URTASUN R. Followme: Efficient online min-cost flow tracking with bounded memory and computation[C]. 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 2015: 4364–4372. doi: 10.1109/ICCV.2015.496.

[8] SCHULTER S, VERNAZA P, CHOI W, et al. Deep network flow for multi-object tracking[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition, Hawaii, USA, 2017: 2730–2739. doi: 10.1109/CVPR.2017.292.

[9] LI Shuai, KONG Yu, and REZATOFIGHI H. Learning of global objective for network flow in multi-object tracking[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 8845–8855. doi: 10.1109/CVPR52688.2022.00865.

[10] LIU Yating, WANG Kunfeng, and WANG Feiyue. Tracklet association-based visual object tracking: The state of the art and beyond[J]. Acta Automatica Sinica, 2017, 43(11): 1869–1885. doi: 10.16383/j.aas.2017.c170117.

[11] BOCHINSKI E, EISELEIN V, and SIKORA T. High-speed tracking-by-detection without using image information[C]. 2017 14th IEEE International Conference on Advanced Video and Signal based Surveillance, Lecce, Italy, 2017: 1–6. doi: 10.1109/AVSS.2017.8078516.

[12] DEHGHAN A, ASSARI S M, and SHAH M. GMMCP tracker: Globally optimal generalized maximum multi clique problem for multiple object tracking[C]. 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, USA, 2015: 4091–4099. doi: 10.1109/CVPR.2015.7299036.

[13] ZAMIR A R, DEHGHAN A, and SHAH M. GMCP-tracker: Global multi-object tracking using generalized minimum clique graphs[C]. 12th European Conference on Computer Vision, Florence, Italy, 2012: 343–356. doi: 10.1007/978-3-642-33709-3_25.

[14] WEN Longyin, LI Wenbo, YAN Junjie, et al. Multiple target tracking based on undirected hierarchical relation hypergraph[C]. 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, USA, 2014: 1282–1289. doi: 10.1109/CVPR.2014.167.

[15] CHOI W. Near-online multi-target tracking with aggregated local flow descriptor[C]. 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 2015: 3029–3037. doi: 10.1109/ICCV.2015.347.

[16] SHEN Jianbing, LIANG Zhiyuan, LIU Jianhong, et al. Multiobject tracking by submodular optimization[J]. IEEE Transactions on Cybernetics, 2019, 49(6): 1990–2001. doi: 10.1109/TCYB.2018.2803217.

[17] NAHON R, BILODEAU G A, and PESANT G. Improving tracking with a tracklet associator[C]. 2022 9th Conference on Robots and Vision, Toronto, Canada, 2022: 175–182. doi: 10.1109/CRV55824.2022.00030.

[18] WU Hai, LI Qing, WEN Chenglu, et al. Tracklet proposal network for multi-object tracking on point clouds[C]. The Thirtieth International Joint Conference on Artificial Intelligence, Montreal, Canada, 2021: 1165–1171. doi: 10.24963/ijcai.2021/161.

[19] DAI Peng, WENG Renliang, CHOI W, et al. Learning a proposal classifier for multiple object tracking[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 2443–2452. doi: 10.1109/CVPR46437.2021.00247.

[20] GALVÃO R D. Uncapacitated facility location problems: Contributions[J]. Pesquisa Operacional, 2004, 24(1): 7–38. doi: 10.1590/S0101-74382004000100003.

[21] JIANG Zhuolin and DAVIS L S. Submodular salient region detection[C]. 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, USA, 2013: 2043–2050. doi: 10.1109/CVPR.2013.266.

[22] LAZIC N, GIVONI I, FREY B, et al. FLoSS: Facility location for subspace segmentation[C]. 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 2009: 825–832. doi: 10.1109/ICCV.2009.5459302.

[23] VERTER V. Uncapacitated and capacitated facility location problems[M]. EISELT H and MARIANOV V. Foundations of Location Analysis. New York: Springer, 2011: 25–37. doi: 10.1007/978-1-4419-7572-0_2.

[24] FELDMAN M, HARSHAW C, and KARBASI A. Greed is good: Near-optimal submodular maximization via greedy optimization[C]. The 30th Conference on Learning Theory, Amsterdam, Netherlands, 2017: 758–784.

[25] NEMHAUSER G L and WOLSEY L A. Best algorithms for approximating the maximum of a submodular set function[J]. Mathematics of Operations Research, 1978, 3(3): 177–264. doi: 10.1287/moor.3.3.177.

[26] BALKANSKI E, QIAN S, and SINGER Y. Instance specific approximations for submodular maximization[C]. The 38th International Conference on Machine Learning, Chongqing, China, 2021: 609–618.

[27] WOJKE N, BEWLEY A, and PAULUS D. Simple online and realtime tracking with a deep association metric[C]. 2017 IEEE International Conference on Image Processing, Beijing, China, 2017: 3645–3649. doi: 10.1109/ICIP.2017.8296962.

[28] BERNARDIN K and STIEFELHAGEN R. Evaluating multiple object tracking performance: The clear mot metrics[J]. EURASIP Journal on Image and Video Processing, 2008, 2008: 246309. doi: 10.1155/2008/246309.

[29] DADGAR A, BALEGHI Y, and EZOJI M. Multi-View data fusion in multi-object tracking with probability density-based ordered weighted aggregation[J]. Optik, 2022, 262: 169279. doi: 10.1016/j.ijleo.2022.169279.

[30] SHI Xinchu, LING Haibin, PANG Yu, et al. Rank-1 tensor approximation for high-order association in multi-target tracking[J]. International Journal of Computer Vision, 2019, 127(8): 1063–1083. doi: 10.1007/s11263-018-01147-z.

[31] TESFAYE Y T, ZEMENE E, PELILLO M, et al. Multi-object tracking using dominant sets[J]. IET Computer Vision, 2016, 10(4): 289–298. doi: 10.1049/iet-cvi.2015.0297.

[32] CHEN Jiahui, SHENG Hao, ZHANG Yang, et al. Enhancing detection model for multiple hypothesis tracking[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, USA, 2017: 2143–2152. doi: 10.1109/CVPRW.2017.266.

[33] KEUPER M, TANG Siyu, ANDRES B, et al. Motion segmentation & multiple object tracking by correlation co-clustering[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020, 42(1): 140–153. doi: 10.1109/TPAMI.2018.2876253.

[34] BRASÓ G and LEAL-TAIXÉ L. Learning a neural solver for multiple object tracking[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Washington, USA, 2020: 6246–6256. doi: 10.1109/CVPR42600.2020.00628.

[35] HENSCHEL R, ZOU Yunzhe, and ROSENHAHN B. Multiple people tracking using body and joint detections[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, USA, 2019: 770–779. doi: 10.1109/CVPRW.2019.00105.

[36] ZHANG Yang, SHENG Hao, WU Yubin, et al. Long-term tracking with deep tracklet association[J]. IEEE Transactions on Image Processing, 2020, 29: 6694–6706. doi: 10.1109/TIP.2020.2993073.

[37] YOON Y C, KIM D Y, and SONG Y M. Online multiple pedestrians tracking using deep temporal appearance matching association[J]. Information Sciences, 2021, 561: 326–351. doi: 10.1016/j.ins.2020.10.002.
