Visualization Analysis and Kernel Pruning of Convolutional Neural Network for Ship-Radiated Noise Classification


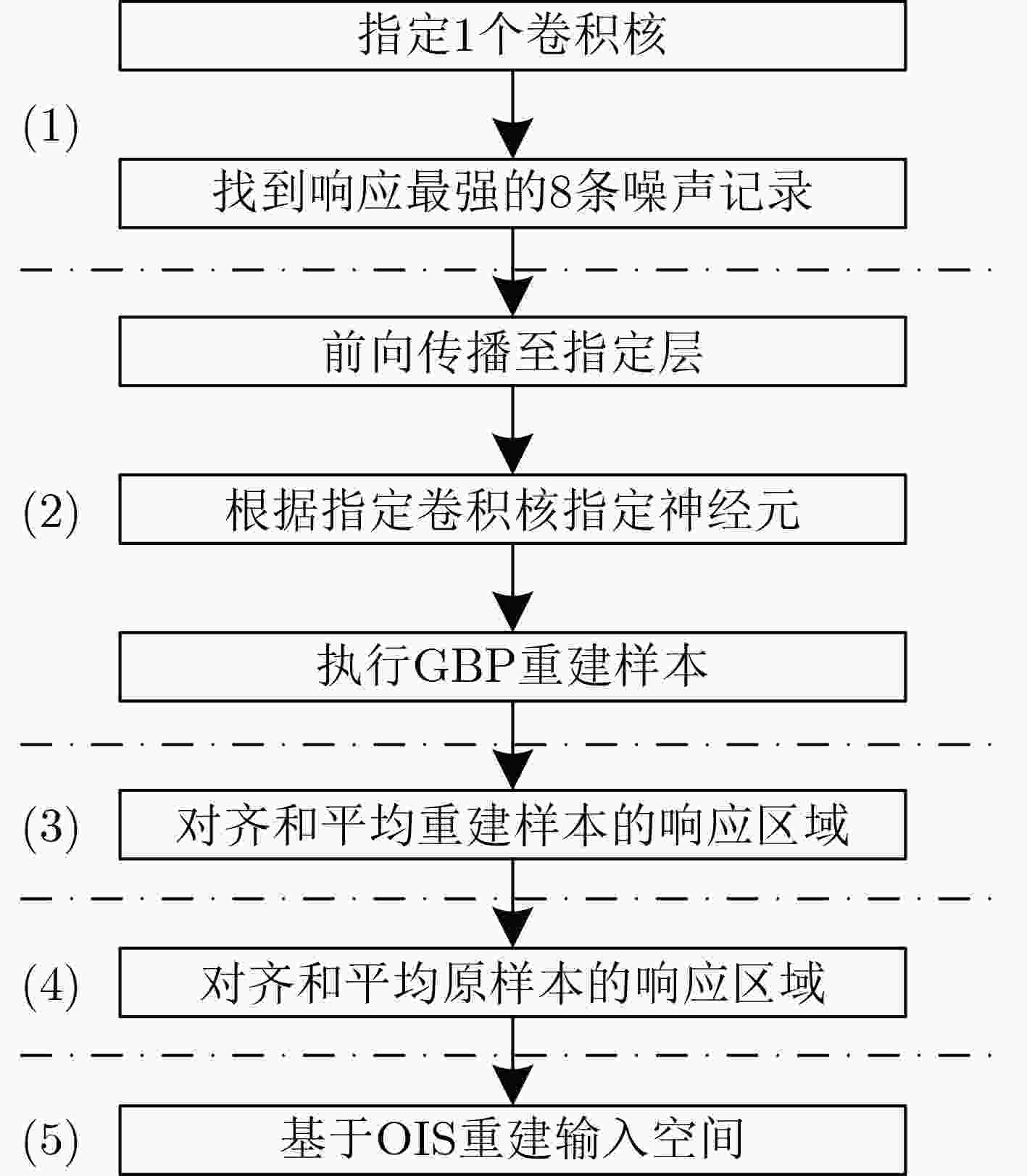

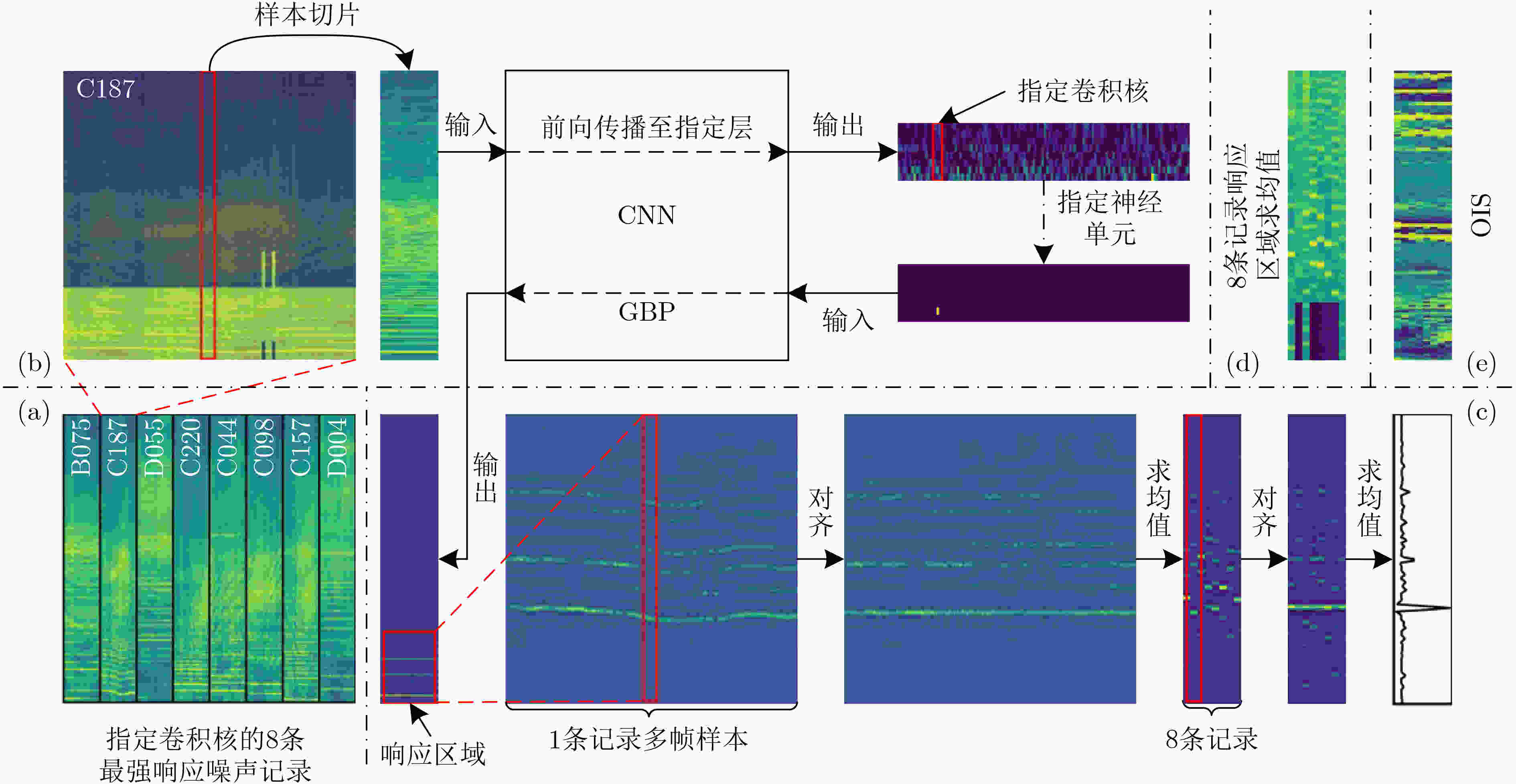

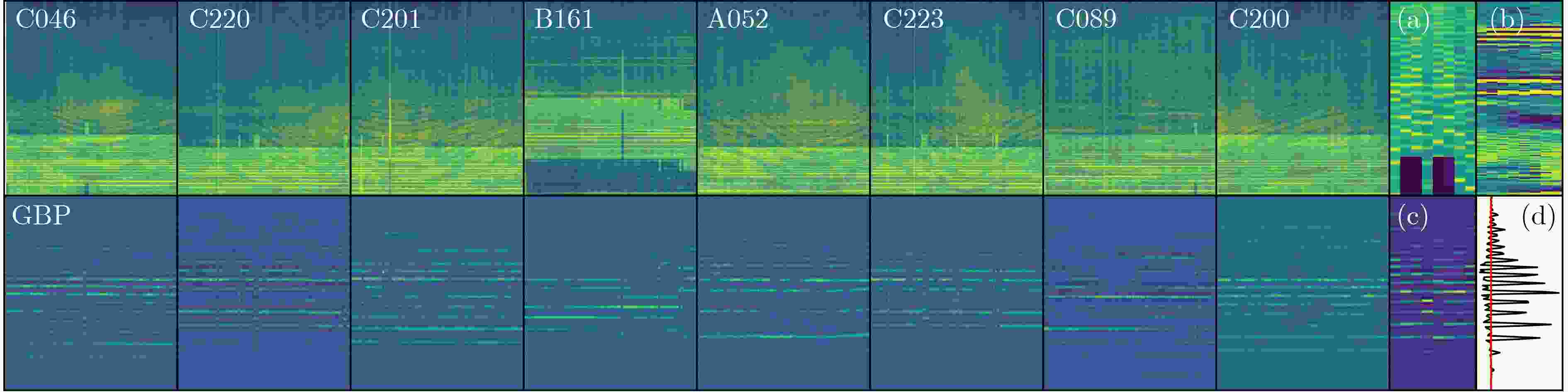

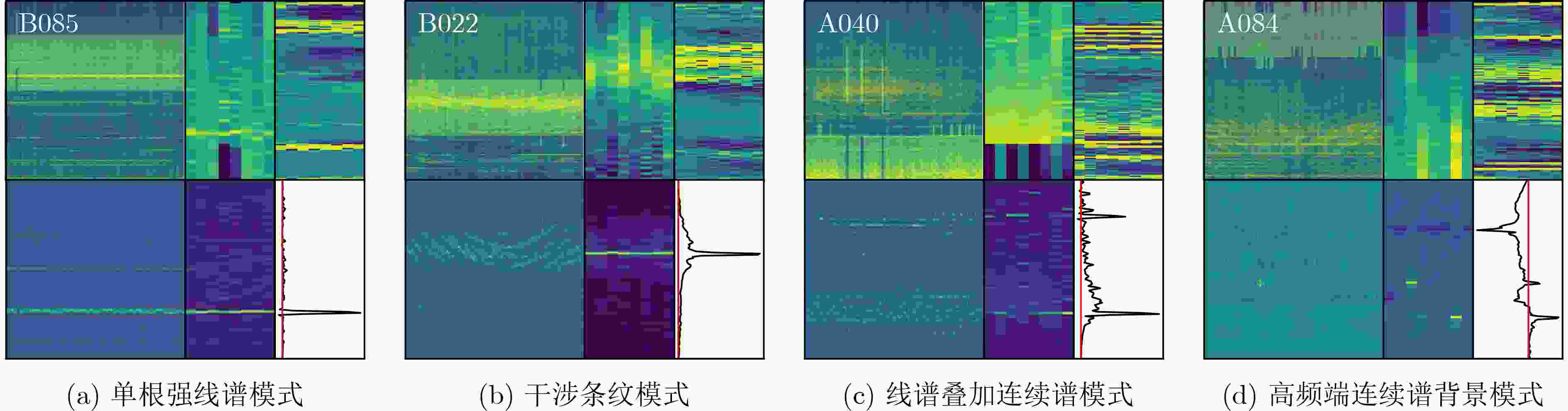

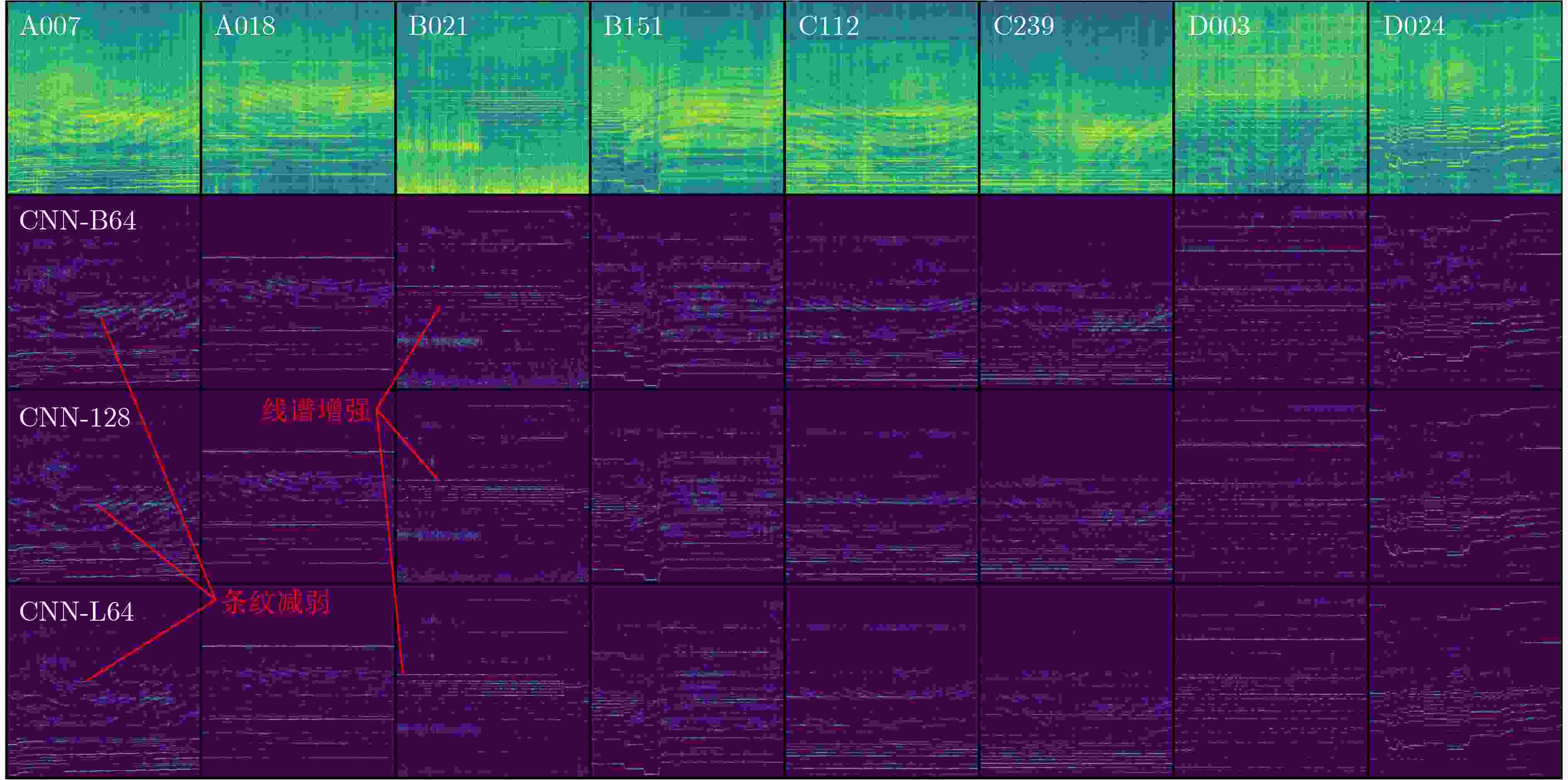

Abstract: Current research on ship-radiated noise classification with deep neural networks focuses mainly on classification performance and pays little attention to model interpretability. To address this, a Convolutional Neural Network (CNN) that takes the logarithmic-scale spectrum as input is first built for ship-radiated noise classification on the DeepShip dataset, and a visualization analysis method for this CNN is proposed using guided backpropagation and input space optimization. The results show that the multiframe feature alignment algorithm improves the visualization, and that the deep convolutional kernels detect two types of features: line spectrum and background. Second, based on the prior knowledge that the line spectrum is a robust feature for ship classification, a convolutional kernel pruning method is proposed, which not only improves the classification performance of the CNN but also makes the training process more stable. Guided backpropagation visualizations indicate that the pruned CNN pays more attention to line spectrum information.
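The abstract names guided backpropagation without stating its rule. As the technique is commonly described, it differs from ordinary backpropagation only at ReLU units: a gradient is passed back only where the forward input was positive and the incoming gradient is also positive. The following is a minimal sketch of that rule on plain Python lists. The function name `relu_backward` and the toy values are illustrative assumptions, not the authors' implementation.

```python
def relu_backward(x, grad_out, mode="guided"):
    """Backward pass of y = max(x, 0) for lists of floats.

    mode="backprop":  pass the gradient where the forward input was positive.
    mode="deconvnet": pass the gradient where the incoming gradient is positive.
    mode="guided":    pass the gradient only where both conditions hold.
    """
    grads = []
    for xi, gi in zip(x, grad_out):
        forward_ok = xi > 0
        backward_ok = gi > 0
        if mode == "backprop":
            keep = forward_ok
        elif mode == "deconvnet":
            keep = backward_ok
        elif mode == "guided":
            keep = forward_ok and backward_ok
        else:
            raise ValueError(f"unknown mode: {mode}")
        grads.append(gi if keep else 0.0)
    return grads


x = [1.0, -2.0, 3.0, 0.5]         # toy forward inputs to the ReLU
grad_out = [0.4, 0.7, -0.2, 0.1]  # toy gradient arriving from the layer above

print(relu_backward(x, grad_out, "backprop"))  # [0.4, 0.0, -0.2, 0.1]
print(relu_backward(x, grad_out, "guided"))    # [0.4, 0.0, 0.0, 0.1]
```

Suppressing negative gradients in this way keeps only the input components that increase the visualized activation, which is why the resulting maps tend to be cleaner than plain gradient maps.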


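Table 1 CNN model parameters

| Layer no. | Layer | Parameters |
|---|---|---|
| 0 | Input | 1×8×512 |
| 1 | Conv2d | (16, 3×3) |
| 2 | Conv2d+MaxPool2d | (32, 3×3), 2×2 |
| 3 | Conv2d+MaxPool2d | (64, 1×3), 2×2 |
| 4 | Conv2d+MaxPool2d | (64, 1×3), 2×2 |
| 5 | Conv2d+MaxPool2d | (64, 1×3), 1×2 |
| 6 | Conv2d+MaxPool2d | (64, 1×3), 1×2 |
| 7 | Conv2d+MaxPool2d | (128, 1×3), 1×2 |
| 8 | FC | 256 |
| 9 | FC | 64 |
| 10 | FC | 4 |

Table 2 Sample split of the DeepShip dataset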

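| Ship class | Training recordings | Test recordings | Training samples | Test samples |
|---|---|---|---|---|
| Cargo ship (A) | 88 | 21 | 7658 | 1922 |
| Passenger ship (B) | 153 | 38 | 8720 | 2824 |
| Tanker (C) | 192 | 48 | 9042 | 2006 |
| Tug (D) | 56 | 13 | 8142 | 1970 |
| Total | 489 | 120 | 33562 | 8722 |

Algorithm 1 Multiframe feature alignment algorithm

Input: features ${\boldsymbol{M}}_{160 \times N}$ with $N$ columns of 160 frequency points; maximum number of iterations $i_{\max}$; maximum shift of each column $s_{\max}$.

Initialization: $s_{j}=0, \quad j=1, 2, \cdots, N$

- for $i=1, 2, \cdots, i_{\max}$
  - for $j=1, 2, \cdots, N$
    - Compute the correlation between ${\boldsymbol{M}}_j$ and ${\boldsymbol{M}}_{-j}$: ${\rm{corr}}\left({\boldsymbol{M}}_{-j}, {\boldsymbol{M}}_{j}\right)$
    - Determine the shift $s \in \{-1, 0, 1\}$ of ${\boldsymbol{M}}_j$
    - if ${\rm{abs}}(s_j + s) \le s_{\max}$
      - Shift ${\boldsymbol{M}}_j$ by $s$ units (zero-padding the end of the column): ${\rm{shift}}\left({\boldsymbol{M}}_{j}, s\right)$
      - $s_j \leftarrow s_j + s$

Output: ${\boldsymbol{M}}_{160 \times N}$

Note: ${\boldsymbol{M}}_{j}$ denotes the $j$-th column of ${\boldsymbol{M}}_{160 \times N}$; ${\boldsymbol{M}}_{-j}$ denotes the mean of all columns of ${\boldsymbol{M}}_{160 \times N}$ except the $j$-th. Both are 160-dimensional vectors.

Table 3 Classification accuracy of each CNN model (%)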

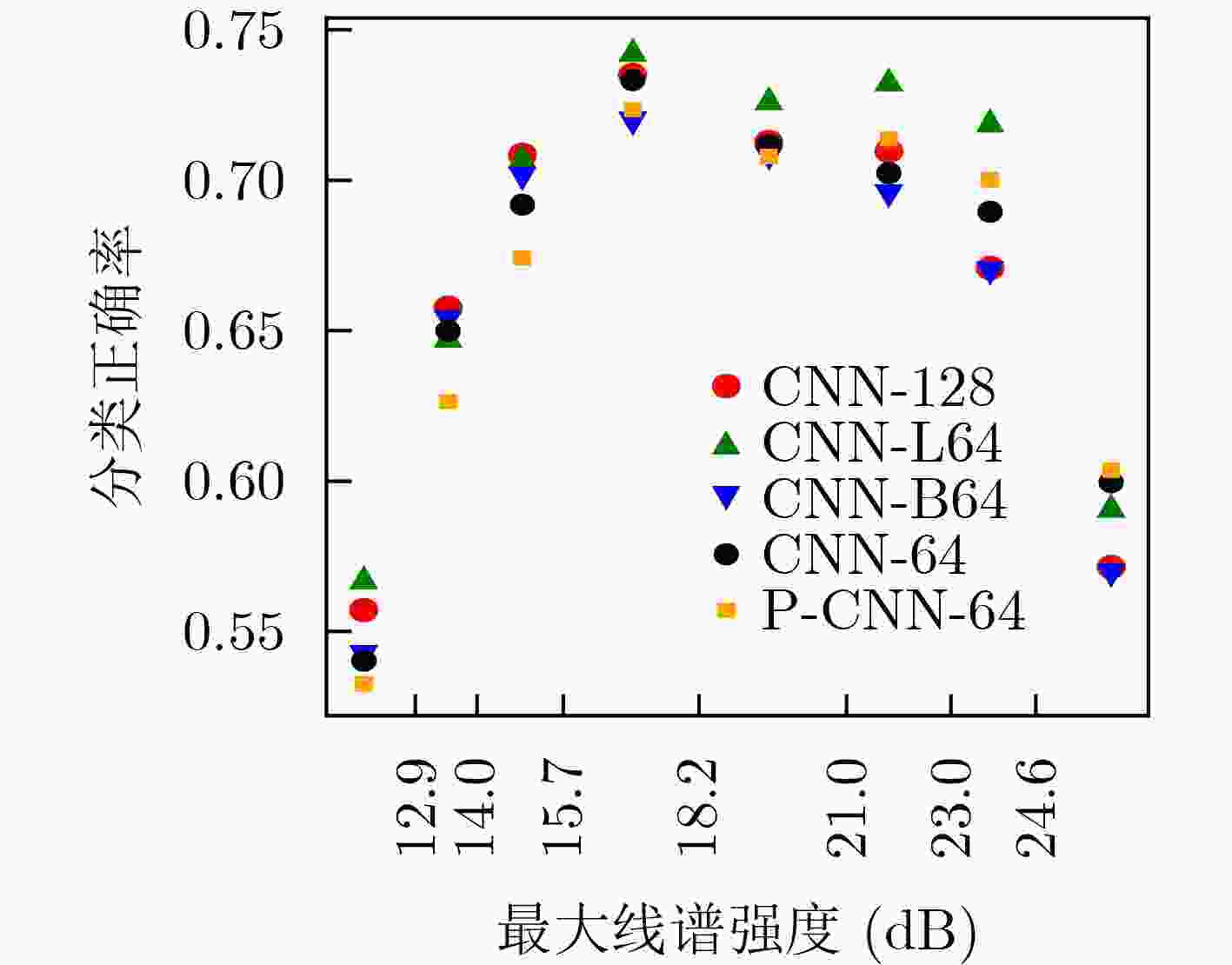

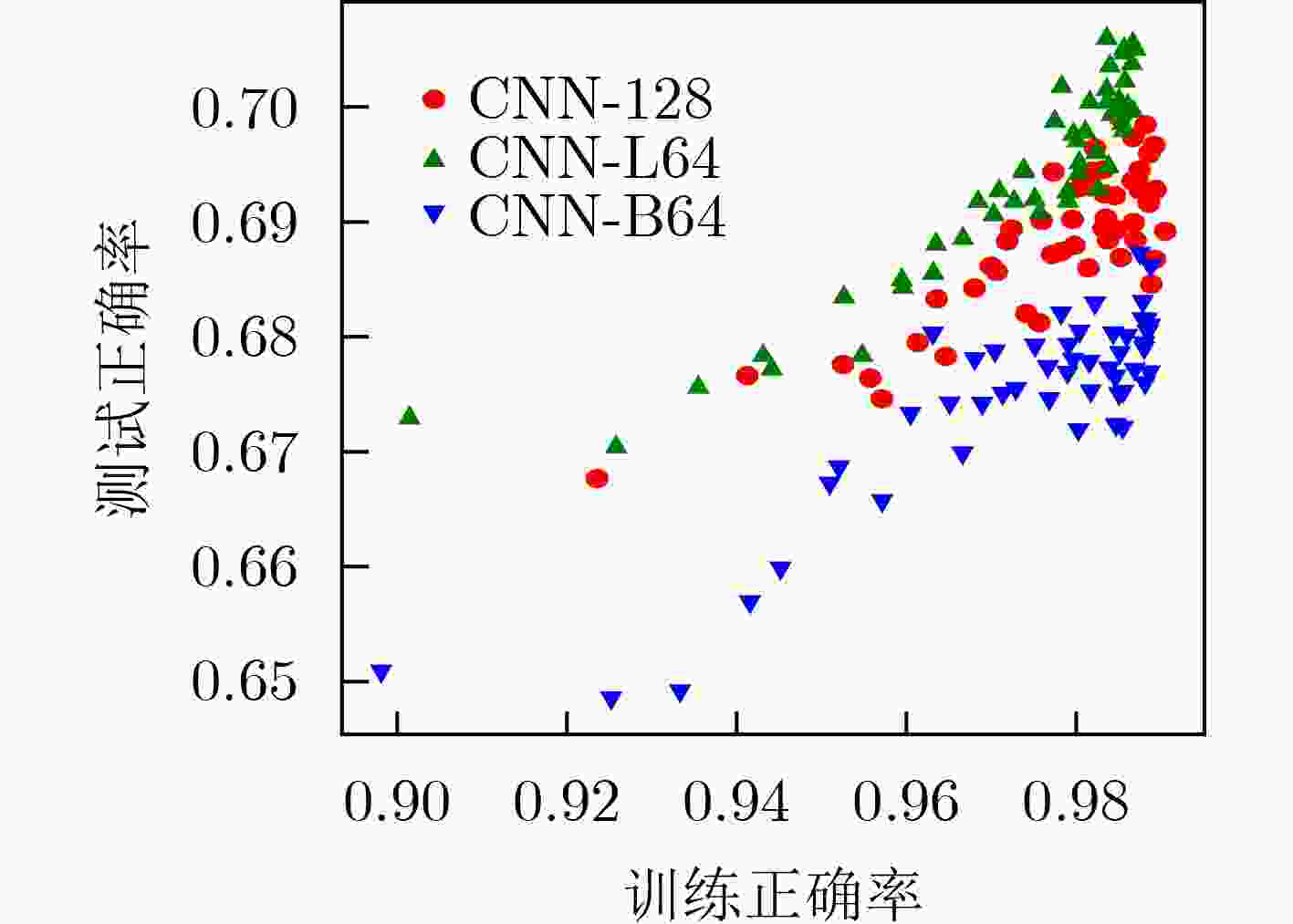

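| Model | Training set | Test set |
|---|---|---|
| CNN-128 | 98.94 | 69.28 |
| CNN-L64 | 98.67 | 70.58 |
| CNN-B64 | 98.78 | 68.28 |
| CNN-64 | 98.48 | 69.05 |
| P-CNN-64 | 98.37 | 68.53 |

Table 4 Classification accuracy (%) of the CNN models under different maximum line spectrum intensities (dB)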

| Model | <12.9 | 12.9~14.0 | 14.0~15.7 | 15.7~18.2 | 18.2~21.0 | 21.0~23.0 | 23.0~24.6 | >24.6 |
|---|---|---|---|---|---|---|---|---|
| CNN-128 | 55.71 | 65.76 | 70.84 | 73.50 | 71.28 | 70.97 | 67.08 | 57.14 |
| CNN-L64 | 56.81 | 64.84 | 70.81 | 74.34 | 72.73 | 73.36 | 72.00 | 59.19 |
| CNN-B64 | 54.14 | 65.28 | 70.06 | 71.88 | 70.72 | 69.47 | 66.90 | 56.85 |
| CNN-64 | 54.03 | 64.99 | 69.19 | 73.33 | 71.18 | 70.24 | 68.95 | 59.96 |
| P-CNN-64 | 53.26 | 62.64 | 67.41 | 72.35 | 70.80 | 71.38 | 70.02 | 60.37 |
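The layer list in Table 1 can be sanity-checked by tracing tensor shapes through the network. The sketch below assumes stride-1 convolutions with "same" padding and non-overlapping max pooling. Table 1 does not state these settings; they are assumptions, chosen because they let the 8-row input pass through the three 2×2 poolings. The names `CONV_LAYERS` and `trace_shapes` are hypothetical.

```python
# Conv blocks transcribed from Table 1:
# (output channels, kernel (h, w), pooling window (h, w) or None).
CONV_LAYERS = [
    (16, (3, 3), None),
    (32, (3, 3), (2, 2)),
    (64, (1, 3), (2, 2)),
    (64, (1, 3), (2, 2)),
    (64, (1, 3), (1, 2)),
    (64, (1, 3), (1, 2)),
    (128, (1, 3), (1, 2)),
]


def trace_shapes(input_shape=(1, 8, 512)):
    """Return the (channels, height, width) shape after each conv block.

    Assumption: convolutions use stride 1 and 'same' padding, so the kernel
    size does not change the spatial size and only pooling shrinks it.
    """
    _, height, width = input_shape
    shapes = []
    for out_channels, _kernel, pool in CONV_LAYERS:
        if pool is not None:
            height //= pool[0]
            width //= pool[1]
        shapes.append((out_channels, height, width))
    return shapes


shapes = trace_shapes()
for layer_no, shape in enumerate(shapes, start=1):
    print(f"layer {layer_no}: {shape}")

channels, height, width = shapes[-1]
flat_features = channels * height * width
print("features entering the first FC layer:", flat_features)
```

Under these assumptions the last convolutional block yields a 128×1×8 map, so 1024 features would enter the 256-unit fully connected layer. A different padding choice would change these numbers.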

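Algorithm 1 can be illustrated with a short pure-Python sketch. The listing does not say how the shift $s$ is chosen, so this version assumes it is the lag in {-1, 0, 1} that maximizes the inner product between the shifted column and the mean of the other columns, with ties resolved in favor of no shift. The function names and the toy three-point "line" used as test data are hypothetical and do not come from the paper.

```python
def shift_column(col, s):
    """Shift a column by s positions, zero-filling the vacated end."""
    n = len(col)
    if s > 0:
        return [0.0] * s + col[:n - s]
    if s < 0:
        return col[-s:] + [0.0] * (-s)
    return list(col)


def dot(a, b):
    """Inner product, used here as the correlation score (assumption)."""
    return sum(x * y for x, y in zip(a, b))


def align_frames(columns, i_max=10, s_max=5):
    """Align N >= 2 feature columns; returns (aligned_columns, shifts)."""
    cols = [[float(v) for v in c] for c in columns]  # work on a copy
    n_cols, n_rows = len(cols), len(cols[0])
    shifts = [0] * n_cols
    for _ in range(i_max):
        for j in range(n_cols):
            # M_{-j}: mean of every column except column j
            others = [
                sum(cols[k][r] for k in range(n_cols) if k != j) / (n_cols - 1)
                for r in range(n_rows)
            ]
            # Candidate order (0, -1, 1) makes max() keep 0 on ties.
            s = max((0, -1, 1),
                    key=lambda lag: dot(others, shift_column(cols[j], lag)))
            # Apply the step only if the accumulated shift stays within s_max.
            if s != 0 and abs(shifts[j] + s) <= s_max:
                cols[j] = shift_column(cols[j], s)
                shifts[j] += s
    return cols, shifts


def peak(center, n_rows=160):
    """Toy 'line': a three-point triangular peak centered at the given row."""
    col = [0.0] * n_rows
    col[center - 1], col[center], col[center + 1] = 0.5, 1.0, 0.5
    return col


frames = [peak(50), peak(50), peak(50), peak(52)]
aligned, shifts = align_frames(frames, i_max=5, s_max=3)
print(shifts)  # [0, 0, 0, -2]
```

In this toy case the fourth frame is moved two bins, one bin per outer iteration, until its peak coincides with the others. Lowering `s_max` to 1 would stop it after a single step, which is the role of the `abs(s_j + s) <= s_max` check in Algorithm 1.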
