Overview of Immersive Video Coding


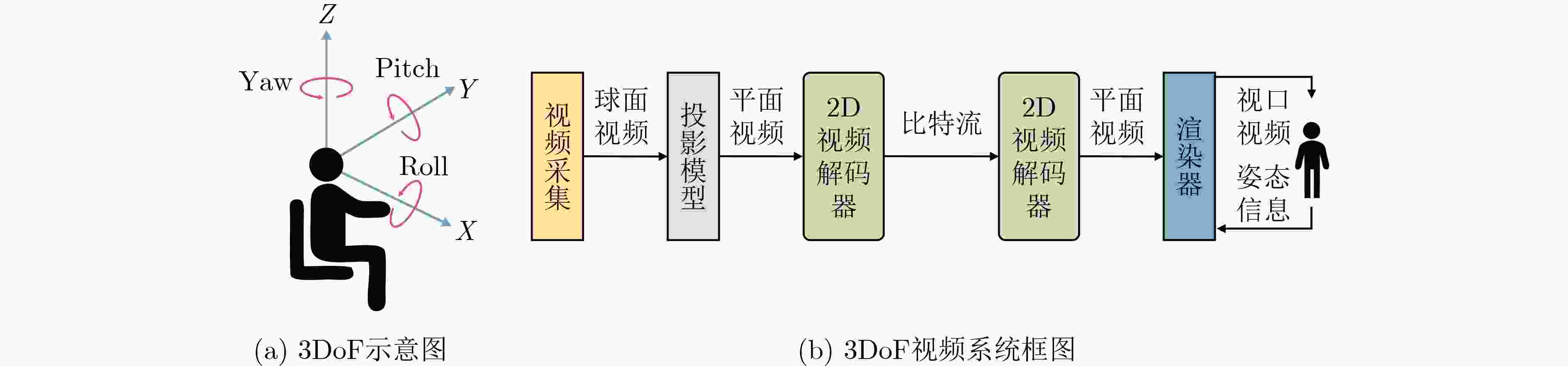

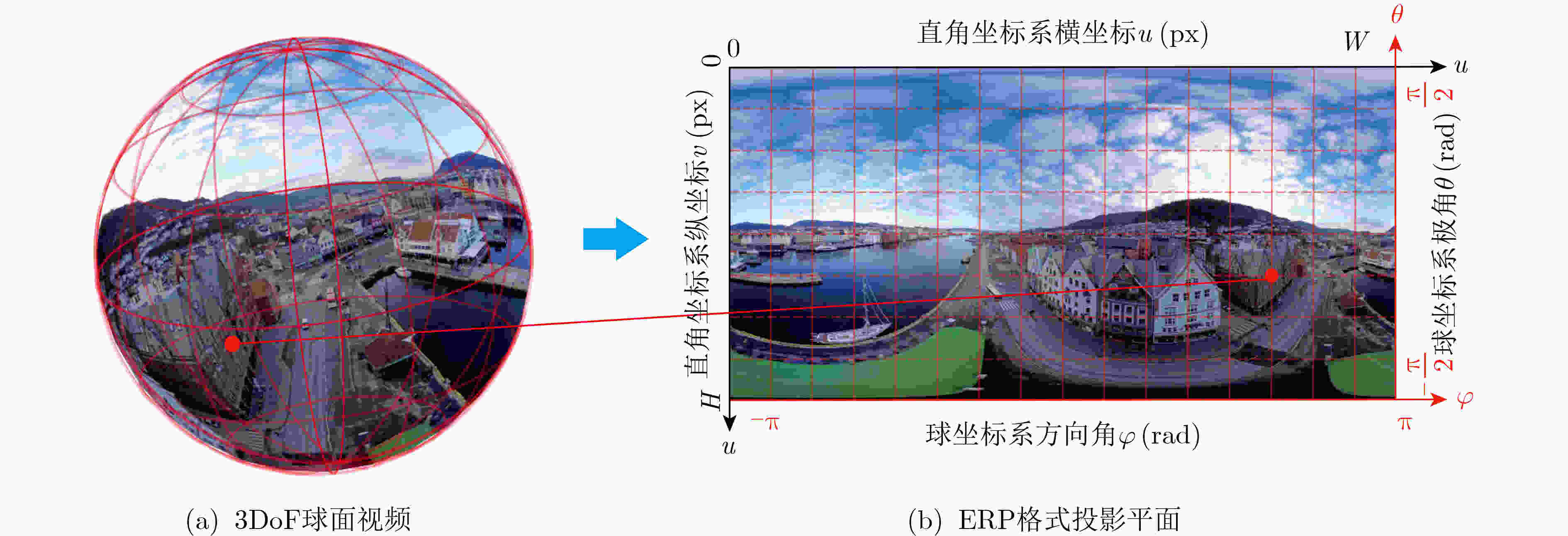

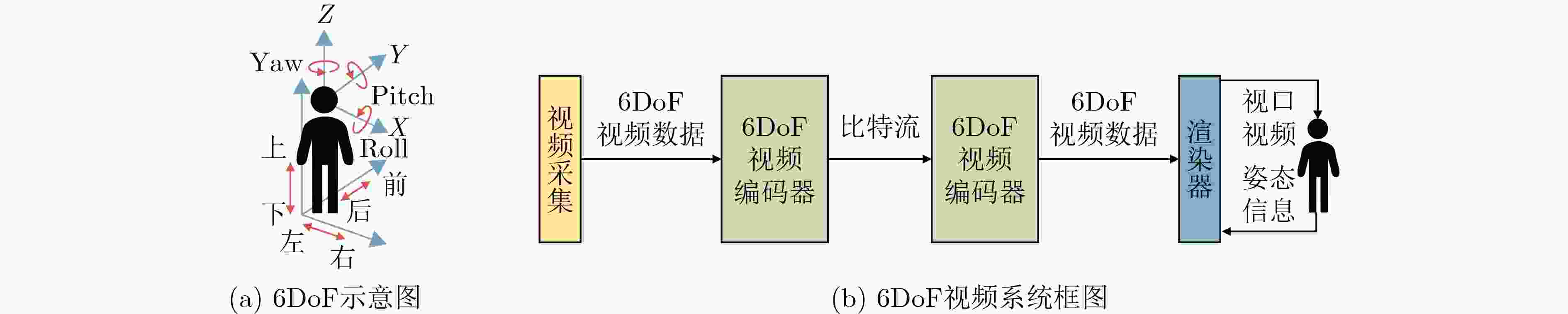

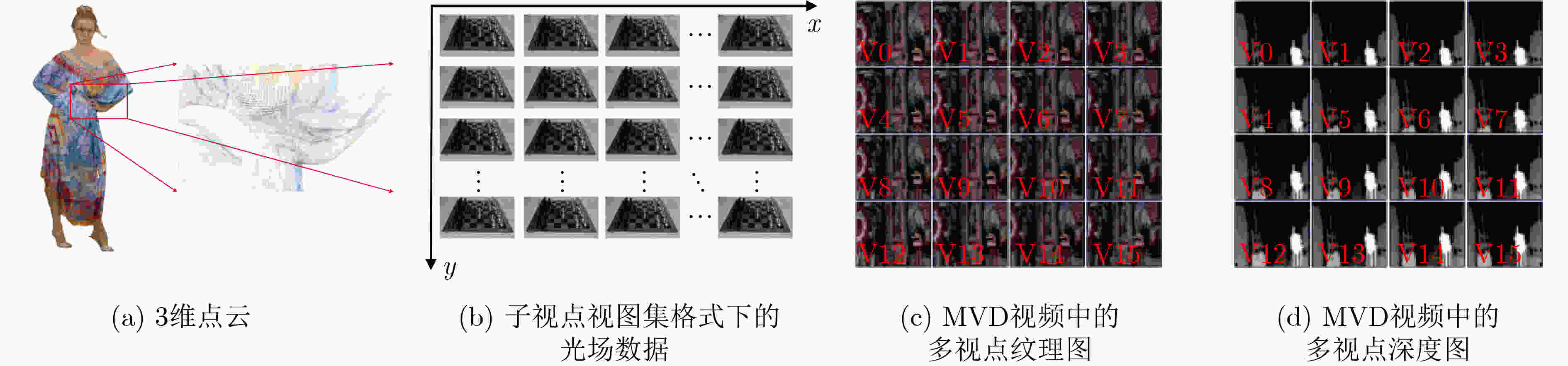

Abstract: With the development of immersive media technologies such as virtual reality and augmented reality, the representation, storage, transmission, and display of immersive video have attracted wide attention from both research and industry. The more complex characteristics and much larger data volume of immersive video challenge traditional video coding techniques, and new coding techniques have emerged in response. Taking the video Degree of Freedom (DoF) as the starting point, this paper reviews recent progress in immersive video coding for 3DoF and 6DoF video. For 3DoF video, the coding techniques covered are projection models, motion estimation models, and 3DoF video coding standards. For 6DoF video, they are video representation formats, virtual viewpoint synthesis techniques, 6DoF video coding techniques, and the Moving Picture Experts Group (MPEG) Immersive Video (MIV) coding standard. Finally, the development of immersive video and its coding technology is summarized and future directions are discussed.


Key words:

- Video coding

- Immersive video

- Panoramic video

- Free-view video


Figure 3. Segmented sphere projection model [7]

Figure 4. CMP model [9]
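As a rough illustration of the cubemap projection (CMP) named in the caption above, the sketch below maps a viewing direction on the sphere to a cube face and a pair of in-face coordinates. The function name `sphere_to_cube`, the face labels, and the axis convention are assumptions made for this example; they do not reproduce the face layout or orientation used by any particular standard or reference software.

```python
import math

def sphere_to_cube(lon, lat):
    """Map a sphere direction (longitude, latitude in radians) to (face, u, v).

    u and v lie in [-1, 1] on the selected cube face.
    Axis convention (an assumption for this sketch): +X is lon = 0, +Y is up.
    """
    # Unit direction vector for the given longitude and latitude.
    x = math.cos(lat) * math.cos(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.sin(lon)

    ax, ay, az = abs(x), abs(y), abs(z)
    # The dominant axis selects the face; dividing by its magnitude
    # projects the direction onto that face of the unit cube.
    if ax >= ay and ax >= az:
        face = "+X" if x > 0 else "-X"
        u, v = z / ax, y / ax
    elif ay >= az:
        face = "+Y" if y > 0 else "-Y"
        u, v = x / ay, z / ay
    else:
        face = "+Z" if z > 0 else "-Z"
        u, v = x / az, y / az
    return face, u, v
```

Calling `sphere_to_cube(0.0, 0.0)` selects the `+X` face at its centre, while a latitude of `math.pi / 2` selects the `+Y` (top) face. A real codec would additionally resample pixels and pack the six faces into a single frame.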


[1] BOYCE J M, DORÉ R, DZIEMBOWSKI A, et al. MPEG immersive video coding standard[J]. Proceedings of the IEEE, 2021, 109(9): 1521–1536. doi: 10.1109/JPROC.2021.3062590.

[2] IEEE. 1857.9-2021 IEEE standard for immersive visual content coding[S]. New York: The Institute of Electrical and Electronics Engineers, 2022. doi: 10.1109/IEEESTD.2022.9726138.

[3] CHEN Zhenzhong, LI Yiming, and ZHANG Yingxue. Recent advances in omnidirectional video coding for virtual reality: Projection and evaluation[J]. Signal Processing, 2018, 146: 66–78. doi: 10.1016/j.sigpro.2018.01.004.

[4] YE Chengying, LI Jianwei, and CHEN Sixi. Research progress of VR panoramic video transmission[J]. Application Research of Computers, 2022, 39(6): 1601–1607, 1621. doi: 10.19734/j.issn.1001-3695.2021.11.0623. (in Chinese)

[5] YU M, LAKSHMAN H, and GIROD B. Content adaptive representations of omnidirectional videos for cinematic virtual reality[C]. The 3rd International Workshop on Immersive Media Experiences, Brisbane, Australia, 2015: 1–6. doi: 10.1145/2814347.2814348.

[6] LI Jisheng, WEN Ziyu, LI Sihan, et al. Novel tile segmentation scheme for omnidirectional video[C]. 2016 IEEE International Conference on Image Processing, Phoenix, USA, 2016: 370–374. doi: 10.1109/ICIP.2016.7532381.

[7] ZHANG C, LU Y, LI J, et al. AhG8: Segmented sphere projection (SSP) for 360-degree video content[C]. Joint Video Exploration Team of ITU-T SG16 WP3 and ISO/IEC JTC1/SC29/WG11, 4th Meeting, Geneva, Switzerland, 2016.

[8] LAN Chengdong, RAO Yingjie, SONG Caixia, et al. Adaptive streaming of stereoscopic panoramic video based on reinforcement learning[J]. Journal of Electronics & Information Technology, 2022, 44(4): 1461–1468. doi: 10.11999/JEIT200908. (in Chinese)

[9] GREENE N. Environment mapping and other applications of world projections[J]. IEEE Computer Graphics and Applications, 1986, 6(11): 21–29. doi: 10.1109/MCG.1986.276658.

[10] FU C W, WAN Liang, WONG T T, et al. The rhombic dodecahedron map: An efficient scheme for encoding panoramic video[J]. IEEE Transactions on Multimedia, 2009, 11(4): 634–644. doi: 10.1109/TMM.2009.2017626.

[11] LIN H C, LI C Y, LIN Jianliang, et al. AHG8: An efficient compact layout for octahedron format[C]. Joint Video Exploration Team of ITU-T SG16 WP3 and ISO/IEC JTC1/SC29/WG11, Chengdu, China, 2016.

[12] LIN H C, HUANG C C, LI C Y, et al. AHG8: An improvement on the compact OHP layout[C]. Joint Video Exploration Team of ITU-T SG16 WP3 and ISO/IEC JTC1/SC29/WG11, 5th Meeting, Geneva, Switzerland, 2017.

[13] AKULA S N, SINGH A, KK R, et al. AHG8: Efficient frame packing method for icosahedral projection (ISP)[C]. Joint Video Exploration of ITU-T SG16 WP3 and ISO/IEC JTC1/SC29/WG11 7th Meeting, Torino, Italy, 2017: JVET-G0156.

[14] COBAN M, AUWERA G V D, and KARCZEWICZ M. AHG8: Adjusted cubemap projection for 360-degree video[C]. Joint Video Exploration Team of ITU-T SG16 WP3 and ISO/IEC JTC1/SC29/WG11 6th Meeting, Hobart, Australia, 2017: JVET-F0025.

[15] ZHOU M. AHG8: A study on equi-angular cubemap projection (EAC)[C]. Joint Video Exploration Team of ITU-T SG16 WP3 and ISO/IEC JTC1/SC29/WG11, Geneva, Switzerland, 2017.

[16] LIN Jianliang, LEE Y H, SHIH C H, et al. Efficient projection and coding tools for 360° video[J]. IEEE Journal on Emerging and Selected Topics in Circuits and Systems, 2019, 9(1): 84–97. doi: 10.1109/JETCAS.2019.2899660.

[17] HE Yuwen, XIU Xiaoyu, HANHART P, et al. Content-adaptive 360-degree video coding using hybrid cubemap projection[C]. 2018 Picture Coding Symposium, San Francisco, USA, 2018: 313–317. doi: 10.1109/PCS.2018.8456280.

[18] PI Jinyong, ZHANG Yun, ZHU Linwei, et al. Texture-aware spherical rotation for high efficiency omnidirectional intra video coding[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2022, 32(12): 8768–8780. doi: 10.1109/TCSVT.2022.3192665.

[19] SU Yuchuan and GRAUMAN K. Learning compressible 360° video isomers[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 7824–7833. doi: 10.1109/CVPR.2018.00816.

[20] HUANG Han, WOODS J W, ZHAO Yao, et al. Control-point representation and differential coding affine-motion compensation[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2013, 23(10): 1651–1660. doi: 10.1109/TCSVT.2013.2254977.

[21] DE SIMONE F, FROSSARD P, BIRKBECK N, et al. Deformable block-based motion estimation in omnidirectional image sequences[C]. 2017 IEEE 19th International Workshop on Multimedia Signal Processing, Luton, United Kingdom, 2017: 1–6. doi: 10.1109/MMSP.2017.8122254.

[22] MARIE A, BIDGOLI N M, MAUGEY T, et al. Rate-distortion optimized motion estimation for on-the-sphere compression of 360 videos[C]. 2021 IEEE International Conference on Acoustics, Speech and Signal Processing, Toronto, Canada, 2021: 1570–1574. doi: 10.1109/ICASSP39728.2021.9413681.

[23] VISHWANATH B, NANJUNDASWAMY T, and ROSE K. A geodesic translation model for spherical video compression[J]. IEEE Transactions on Image Processing, 2022, 31: 2136–2147. doi: 10.1109/TIP.2022.3152059.

[24] VISHWANATH B, NANJUNDASWAMY T, and ROSE K. Rotational motion model for temporal prediction in 360 video coding[C]. 2017 IEEE 19th International Workshop on Multimedia Signal Processing, Luton, United Kingdom, 2017: 1–6. doi: 10.1109/MMSP.2017.8122231.

[25] VISHWANATH B, ROSE K, HE Yuwen, et al. Rotational motion compensated prediction in HEVC based omnidirectional video coding[C]. 2018 Picture Coding Symposium, San Francisco, USA, 2018: 323–327. doi: 10.1109/PCS.2018.8456296.

[26] WANG Yefei, LIU Dong, MA Siwei, et al. Spherical coordinates transform-based motion model for panoramic video coding[J]. IEEE Journal on Emerging and Selected Topics in Circuits and Systems, 2019, 9(1): 98–109. doi: 10.1109/JETCAS.2019.2896265.

[27] VISHWANATH B and ROSE K. Spherical video coding with geometry and region adaptive transform domain temporal prediction[C]. The ICASSP 2020 IEEE International Conference on Acoustics, Speech and Signal Processing, Barcelona, Spain, 2020: 2043–2047. doi: 10.1109/ICASSP40776.2020.9054211.

[28] VISHWANATH B, NANJUNDASWAMY T, and ROSE K. Effective prediction modes design for adaptive compression with application in video coding[J]. IEEE Transactions on Image Processing, 2022, 31: 636–647. doi: 10.1109/TIP.2021.3134454.

[29] ISO/IEC 23090-2: 2019 Information technology - Coded representation of immersive media - Part 2: Omnidirectional media format[S]. 2019.

[30] HERRE J, HILPERT J, KUNTZ A, et al. MPEG-H 3D audio - The new standard for coding of immersive spatial audio[J]. IEEE Journal of Selected Topics in Signal Processing, 2015, 9(5): 770–779. doi: 10.1109/JSTSP.2015.2411578.

[31] YE Yan, ALSHINA E, and BOYCE J M. Algorithm descriptions of projection format conversion and video quality metrics in 360Lib[C]. Joint Video Exploration Team (JVET) of ITU-T SG 16 WP 3 and ISO/IEC JTC 1/SC 29/WG 11 8th Meeting, Macao, China, 2017.

[32] ITU-T Rec. H.265 and ISO/IEC 23008-2. High efficiency video coding[S]. 2020.

[33] ZHU Xiuchang and TANG Guijin. H.266/VVC: Versatile video coding international standard[J]. Journal of Nanjing University of Posts and Telecommunications: Natural Science Edition, 2021, 41(2): 1–11. doi: 10.14132/j.cnki.1673-5439.2021.02.001. (in Chinese)

[34] ITU-T. ITU-T Rec. H.266 and ISO/IEC 23090-3 versatile video coding[S]. 2021.

[35] HE Y, BOYCE J, CHOI K, et al. JVET common test conditions and evaluation procedures for 360° video[C]. Joint Video Exploration Team of ITU-T SG16 WP3 and ISO/IEC JTC1/SC29, 2021: JVET-U2012.

[36] YU M, LAKSHMAN H, and GIROD B. A framework to evaluate omnidirectional video coding schemes[C]. 2015 IEEE International Symposium on Mixed and Augmented Reality, Fukuoka, Japan, 2015: 31–36. doi: 10.1109/ISMAR.2015.12.

[37] SUN Yule, LU Ang, and YU Lu. Weighted-to-spherically-uniform quality evaluation for omnidirectional video[J]. IEEE Signal Processing Letters, 2017, 24(9): 1408–1412. doi: 10.1109/LSP.2017.2720693.

[38] ZAKHARCHENKO V, CHOI K P, and PARK J H. Quality metric for spherical panoramic video[C]. SPIE 9970, Optics and Photonics for Information Processing X, San Diego, USA, 2016. doi: 10.1117/12.2235885.

[39] ADELSON E H and BERGEN J R. The plenoptic function and the elements of early vision[J]. Computational Models of Visual Processing, 1991, 1: 43–54.

[40] MCMILLAN L and BISHOP G. Plenoptic modeling: An image-based rendering system[C]. The 22nd Annual Conference on Computer Graphics and Interactive Techniques, Los Angeles, USA, 1995: 39–46. doi: 10.1145/218380.218398.

[41] LEVOY M and HANRAHAN P. Light field rendering[C]. The 23rd Annual Conference on Computer Graphics and Interactive Techniques, New Orleans, USA, 1996: 31–42. doi: 10.1145/237170.237199.

[42] MING Yue, MENG Xuyang, FAN Chunxiao, et al. Deep learning for monocular depth estimation: A review[J]. Neurocomputing, 2021, 438: 14–33. doi: 10.1016/j.neucom.2020.12.089.

[43] TEWARI A, THIES J, MILDENHALL B, et al. Advances in neural rendering[J]. Computer Graphics Forum, 2022, 41(2): 703–735. doi: 10.1111/cgf.14507.

[44] LV Chenlei, LIN Weisi, and ZHAO Baoquan. Voxel structure-based mesh reconstruction from a 3D point cloud[J]. IEEE Transactions on Multimedia, 2022, 24: 1815–1829. doi: 10.1109/TMM.2021.3073265.

[45] XU Yusheng, TONG Xiaohua, and STILLA U. Voxel-based representation of 3D point clouds: Methods, applications, and its potential use in the construction industry[J]. Automation in Construction, 2021, 126: 103675. doi: 10.1016/j.autcon.2021.103675.

[46] CHAN S C, SHUM H Y, and NG K T. Image-based rendering and synthesis[J]. IEEE Signal Processing Magazine, 2007, 24(6): 22–33. doi: 10.1109/MSP.2007.905702.

[47] TIAN Shishun, ZHANG Lu, ZOU Wenbin, et al. Quality assessment of DIBR-synthesized views: An overview[J]. Neurocomputing, 2021, 423: 158–178. doi: 10.1016/j.neucom.2020.09.062.

[48] HEDMAN P, PHILIP J, PRICE T, et al. Deep blending for free-viewpoint image-based rendering[J]. ACM Transactions on Graphics, 2018, 37(6): 257. doi: 10.1145/3272127.3275084.

[49] NGUYEN-PHUOC T, LI Chuan, BALABAN S, et al. RenderNet: A deep convolutional network for differentiable rendering from 3D shapes[C]. The 31st International Conference on Neural Information Processing Systems, Montréal, Canada, 2018.

[50] TEWARI A, FRIED O, THIES J, et al. State of the art on neural rendering[J]. Computer Graphics Forum, 2020, 39(2): 701–727. doi: 10.1111/cgf.14022.

[51] OECHSLE M, MESCHEDER L, NIEMEYER M, et al. Texture fields: Learning texture representations in function space[C]. 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 2019: 4531–4540. doi: 10.1109/ICCV.2019.00463.

[52] SITZMANN V, THIES J, HEIDE F, et al. DeepVoxels: Learning persistent 3D feature embeddings[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 2437–2446. doi: 10.1109/CVPR.2019.00254.

[53] MILDENHALL B, SRINIVASAN P P, TANCIK M, et al. NeRF: Representing scenes as neural radiance fields for view synthesis[J]. Communications of the ACM, 2022, 65(1): 99–106. doi: 10.1145/3503250.

[54] PUMAROLA A, CORONA E, PONS-MOLL G, et al. D-NeRF: Neural radiance fields for dynamic scenes[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 10318–10327. doi: 10.1109/CVPR46437.2021.01018.

[55] XIE Yiheng, TAKIKAWA T, SAITO S, et al. Neural fields in visual computing and beyond[J]. Computer Graphics Forum, 2022, 41(2): 641–676. doi: 10.1111/cgf.14505.

[56] QUACH M, PANG Jiahao, TIAN Dong, et al. Survey on deep learning-based point cloud compression[J]. Frontiers in Signal Processing, 2022, 2: 846972. doi: 10.3389/frsip.2022.846972.

[57] SCHAEFER R. Call for proposals for point cloud compression V2[C]. ISO/IEC JTC1 SC29/WG11 MPEG, 117th Meeting, Hobart, TAS, 2017: document N16763.

[58] ISO/IEC 23090-9: 2023 Information technology - Coded representation of immersive media - Part 9: Geometry-based point cloud compression[S]. International Organization for Standardization, 2023.

[59] ISO/IEC 23090-5 Video-based point cloud compression[S]. International Organization for Standardization, 2021.

[60] CONTI C, SOARES L D, and NUNES P. Dense light field coding: A survey[J]. IEEE Access, 2020, 8: 49244–49284. doi: 10.1109/ACCESS.2020.2977767.

[61] LIU Yuyang, ZHU Ce, and GUO Hongwei. Survey of light field data compression[J]. Journal of Image and Graphics, 2019, 24(11): 1842–1859. doi: 10.11834/jig.190035. (in Chinese)

[62] PERRA C, MAHMOUDPOUR S, and PAGLIARI C. JPEG pleno light field: Current standard and future directions[C]. SPIE 12138, Optics, Photonics and Digital Technologies for Imaging Applications VII, Strasbourg, France, 2022: 153–156. doi: 10.1117/12.2624083.

[63] TECH G, CHEN Ying, MÜLLER K, et al. Overview of the multiview and 3D extensions of high efficiency video coding[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2016, 26(1): 35–49. doi: 10.1109/TCSVT.2015.2477935.

[64] SALAHIEH B, JUNG J, and DZIEMBOWSKI A. Test model for immersive video[C]. ISO/IEC JTC1 SC29/WG11 MPEG, 136th Meeting, 2021: document N0142.

[65] JUNG J and KROON B. Common test conditions for MPEG immersive video[C]. ISO/IEC JTC 1/SC 29/WG 04 MPEG, 137th Meeting, 2022: document N0169.

[66] DZIEMBOWSKI A, MIELOCH D, STANKOWSKI J, et al. IV-PSNR - the objective quality metric for immersive video applications[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2022, 32(11): 7575–7591. doi: 10.1109/TCSVT.2022.3179575.

[67] ISO/IEC 23090-10: 2022 Information technology - Coded representation of immersive media - Part 10: Carriage of visual volumetric video-based coding data[S]. International Organization for Standardization, 2022.

[68] ISO/IEC FDIS 23090-12: 2023 Information technology - Coded representation of immersive media - Part 12: MPEG immersive video[S]. 2023.

[69] MILOVANOVIĆ M, HENRY F, CAGNAZZO M, et al. Patch decoder-side depth estimation in MPEG immersive video[C]. 2021 IEEE International Conference on Acoustics, Speech and Signal Processing, Toronto, Canada, 2021: 1945–1949. doi: 10.1109/ICASSP39728.2021.9414056.

[70] BROSS B, WANG Yekui, YE Yan, et al. Overview of the Versatile Video Coding (VVC) standard and its applications[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2021, 31(10): 3736–3764. doi: 10.1109/TCSVT.2021.3101953.

[71] WIECKOWSKI A, BRANDENBURG J, HINZ T, et al. VVenC: An open and optimized VVC encoder implementation[C]. 2021 IEEE International Conference on Multimedia & Expo Workshops, Shenzhen, China, 2021: 1–2. doi: 10.1109/ICMEW53276.2021.9455944.

[72] Reference view synthesizer (RVS) manual[C]. ISO/IEC JTC 1/SC 29/WG 04 MPEG, 124th Meeting, Macao, China, 2018: N18068.
