| [1] |

GOODALE M A. Lessons from human vision for robotic design[J]. Autonomous Intelligent Systems, 2021, 1(1): 2. doi: 10.1007/s43684-021-00002-2

|

| [2] |

GOLLISCH T and MEISTER M. Eye smarter than scientists believed: Neural computations in circuits of the retina[J]. Neuron, 2010, 65(2): 150–164. doi: 10.1016/j.neuron.2009.12.009

|

| [3] |

JADZINSKY P D and BACCUS S A. Transformation of visual signals by inhibitory interneurons in retinal circuits[J]. Annual Review of Neuroscience, 2013, 36: 403–428. doi: 10.1146/annurev-neuro-062012-170315

|

| [4] |

WENSEL T G. Phosphoinositides in retinal function and disease[J]. Cells, 2020, 9(4): 866. doi: 10.3390/cells9040866

|

| [5] |

O’BRIEN J and BLOOMFIELD S A. Plasticity of retinal gap junctions: Roles in synaptic physiology and disease[J]. Annual Review of Vision Science, 2018, 4: 79–100. doi: 10.1146/annurev-vision-091517-034133

|

| [6] |

TULI S, DASGUPTA I, GRANT E, et al. Are convolutional neural networks or transformers more like human vision?[EB/OL]. https://arxiv.org/abs/2105.07197, 2021.

|

| [7] |

HU Yueyu, YANG Shuai, YANG Wenhan, et al. Towards coding for human and machine vision: A scalable image coding approach[C]. Proceedings of 2020 IEEE International Conference on Multimedia and Expo, London, United Kingdom, 2020: 1–6.

|

| [8] |

LIU J K and GOLLISCH T. Spike-triggered covariance analysis reveals phenomenological diversity of contrast adaptation in the retina[J]. PLoS Computational Biology, 2015, 11(7): e1004425. doi: 10.1371/journal.pcbi.1004425

|

| [9] |

LIU J K, SCHREYER H M, ONKEN A, et al. Inference of neuronal functional circuitry with spike-triggered non-negative matrix factorization[J]. Nature Communications, 2017, 8(1): 149. doi: 10.1038/s41467-017-00156-9

|

| [10] |

LIU J K, KARAMANLIS D, and GOLLISCH T. Simple model for encoding natural images by retinal ganglion cells with nonlinear spatial integration[J]. bioRxiv, 2021.

|

| [11] |

MEYER A F, WILLIAMSON R S, LINDEN J F, et al. Models of neuronal stimulus-response functions: Elaboration, estimation, and evaluation[J]. Frontiers in Systems Neuroscience, 2017, 10: 109. doi: 10.3389/fnsys.2016.00109

|

| [12] |

PILLOW J W, SHLENS J, PANINSKI L, et al. Spatio-temporal correlations and visual signalling in a complete neuronal population[J]. Nature, 2008, 454(7207): 995–999. doi: 10.1038/nature07140

|

| [13] |

YAN Boyuan and NIRENBERG S. An embedded real-time processing platform for optogenetic neuroprosthetic applications[J]. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 2018, 26(1): 233–243. doi: 10.1109/tnsre.2017.2763130

|

| [14] |

YAN Qi, ZHENG Yajing, JIA Shanshan, et al. Revealing fine structures of the retinal receptive field by deep-learning networks[J]. IEEE Transactions on Cybernetics, 2022, 52(1): 39–50. doi: 10.1109/tcyb.2020.2972983

|

| [15] |

SAHANI M and LINDEN J F. How linear are auditory cortical responses?[C]. Proceedings of the 15th International Conference on Neural Information Processing Systems, Vancouver, Canada, 2002: 125–132.

|

| [16] |

MCFARLAND J M, CUI Yuwei, and BUTTS D A. Inferring nonlinear neuronal computation based on physiologically plausible inputs[J]. PLoS Computational Biology, 2013, 9(7): e1003143. doi: 10.1371/journal.pcbi.1003143

|

| [17] |

LINDSAY G W. Convolutional neural networks as a model of the visual system: Past, present, and future[J]. Journal of Cognitive Neuroscience, 2021, 33(10): 2017–2031. doi: 10.1162/jocn_a_01544

|

| [18] |

MCINTOSH L T, MAHESWARANATHAN N, NAYEBI A, et al. Deep learning models of the retinal response to natural scenes[C]. Proceedings of the 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 2016: 1361–1369.

|

| [19] |

RICHARDS B A, LILLICRAP T P, BEAUDOIN P, et al. A deep learning framework for neuroscience[J]. Nature Neuroscience, 2019, 22(11): 1761–1770. doi: 10.1038/s41593-019-0520-2

|

| [20] |

BATTY E, MEREL J, BRACKBILL N, et al. Multilayer recurrent network models of primate retinal ganglion cell responses[C]. Proceedings of the 5th International Conference on Learning Representations, Toulon, France, 2017.

|

| [21] |

MAHESWARANATHAN N, MCINTOSH L, KASTNER D B, et al. Deep learning models reveal internal structure and diverse computations in the retina under natural scenes[J]. bioRxiv, 2018: 340943.

|

| [22] |

YAMINS D L K and DICARLO J J. Using goal-driven deep learning models to understand sensory cortex[J]. Nature Neuroscience, 2016, 19(3): 356–365. doi: 10.1038/nn.4244

|

| [23] |

YANG Jun, MA Zhengmin, SHEN Tao, et al. Multichannel MI-EEG feature decoding based on deep learning[J]. Journal of Electronics & Information Technology, 2021, 43(1): 196–203. doi: 10.11999/JEIT190300

|

| [24] |

CADENA S A, DENFIELD G H, WALKER E Y, et al. Deep convolutional models improve predictions of macaque V1 responses to natural images[J]. PLoS Computational Biology, 2019, 15(4): e1006897. doi: 10.1371/journal.pcbi.1006897

|

| [25] |

YAMINS D L K, HONG Ha, CADIEU C F, et al. Performance-optimized hierarchical models predict neural responses in higher visual cortex[J]. Proceedings of the National Academy of Sciences of the United States of America, 2014, 111(23): 8619–8624. doi: 10.1073/pnas.1403112111

|

| [26] |

ZHANG Yijun, YU Zhaofei, LIU J K, et al. Neural decoding of visual information across different neural recording modalities and approaches[J]. Machine Intelligence Research, 2022, 19(5): 350–365. doi: 10.1007/s11633-022-1335-2

|

| [27] |

BRACKBILL N, RHOADES C, KLING A, et al. Reconstruction of natural images from responses of primate retinal ganglion cells[J]. eLife, 2020, 9: e58516. doi: 10.7554/eLife.58516

|

| [28] |

WARLAND D K, REINAGEL P, and MEISTER M. Decoding visual information from a population of retinal ganglion cells[J]. Journal of Neurophysiology, 1997, 78(5): 2336–2350. doi: 10.1152/jn.1997.78.5.2336

|

| [29] |

ZHANG Yichen, JIA Shanshan, ZHENG Yajing, et al. Reconstruction of natural visual scenes from neural spikes with deep neural networks[J]. Neural Networks, 2020, 125: 19–30. doi: 10.1016/j.neunet.2020.01.033

|

| [30] |

ZHANG Yijun, BU Tong, ZHANG Jiyuan, et al. Decoding pixel-level image features from two-photon calcium signals of macaque visual cortex[J]. Neural Computation, 2022, 34(6): 1369–1397. doi: 10.1162/neco_a_01498

|

| [31] |

XU Qi, SHEN Jiangrong, RAN Xuming, et al. Robust transcoding sensory information with neural spikes[J]. IEEE Transactions on Neural Networks and Learning Systems, 2022, 33(5): 1935–1946. doi: 10.1109/tnnls.2021.3107449

|

| [32] |

ZHANG Yimeng, LEE T S, LI Ming, et al. Convolutional neural network models of V1 responses to complex patterns[J]. Journal of Computational Neuroscience, 2019, 46(1): 33–54. doi: 10.1007/s10827-018-0687-7

|

| [33] |

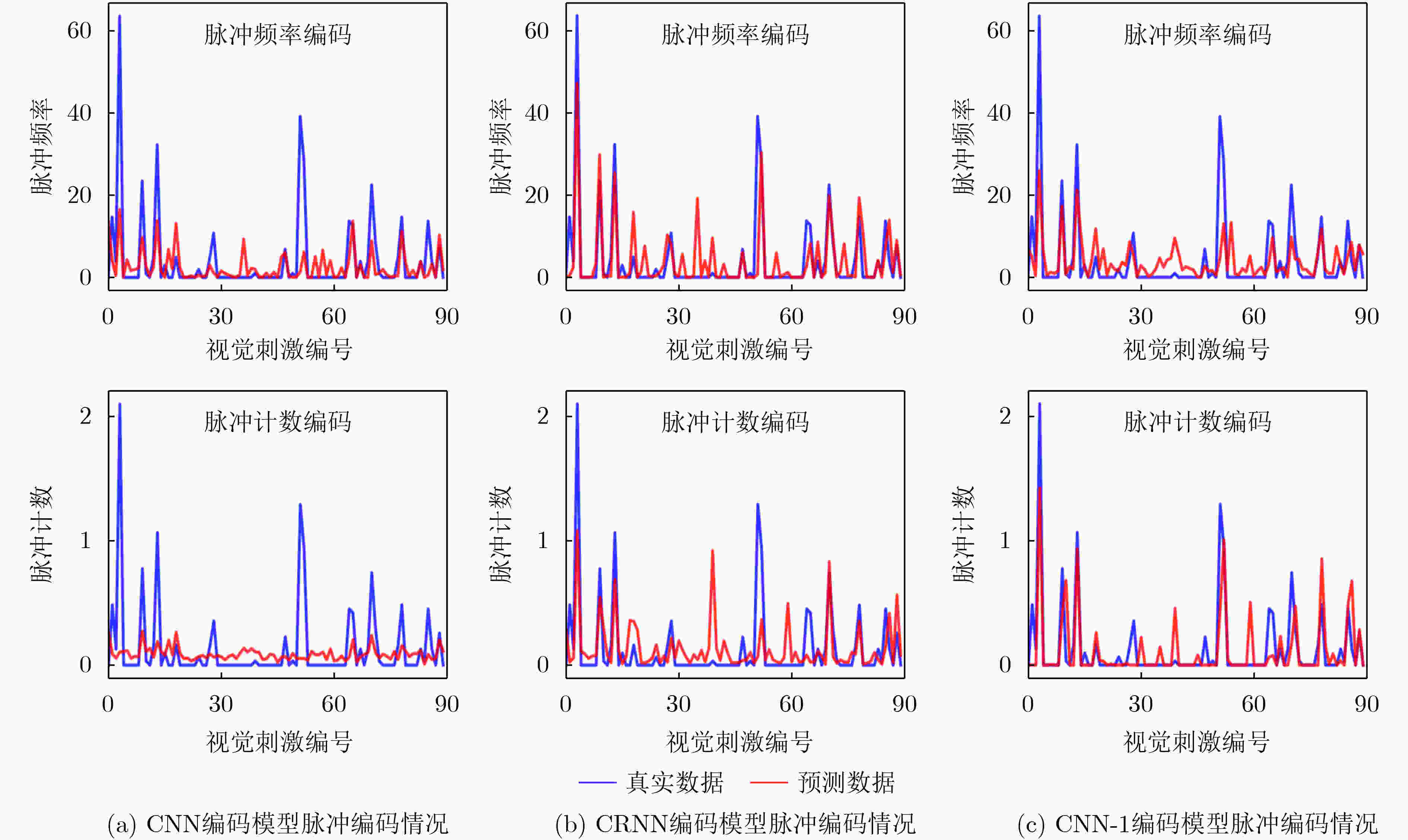

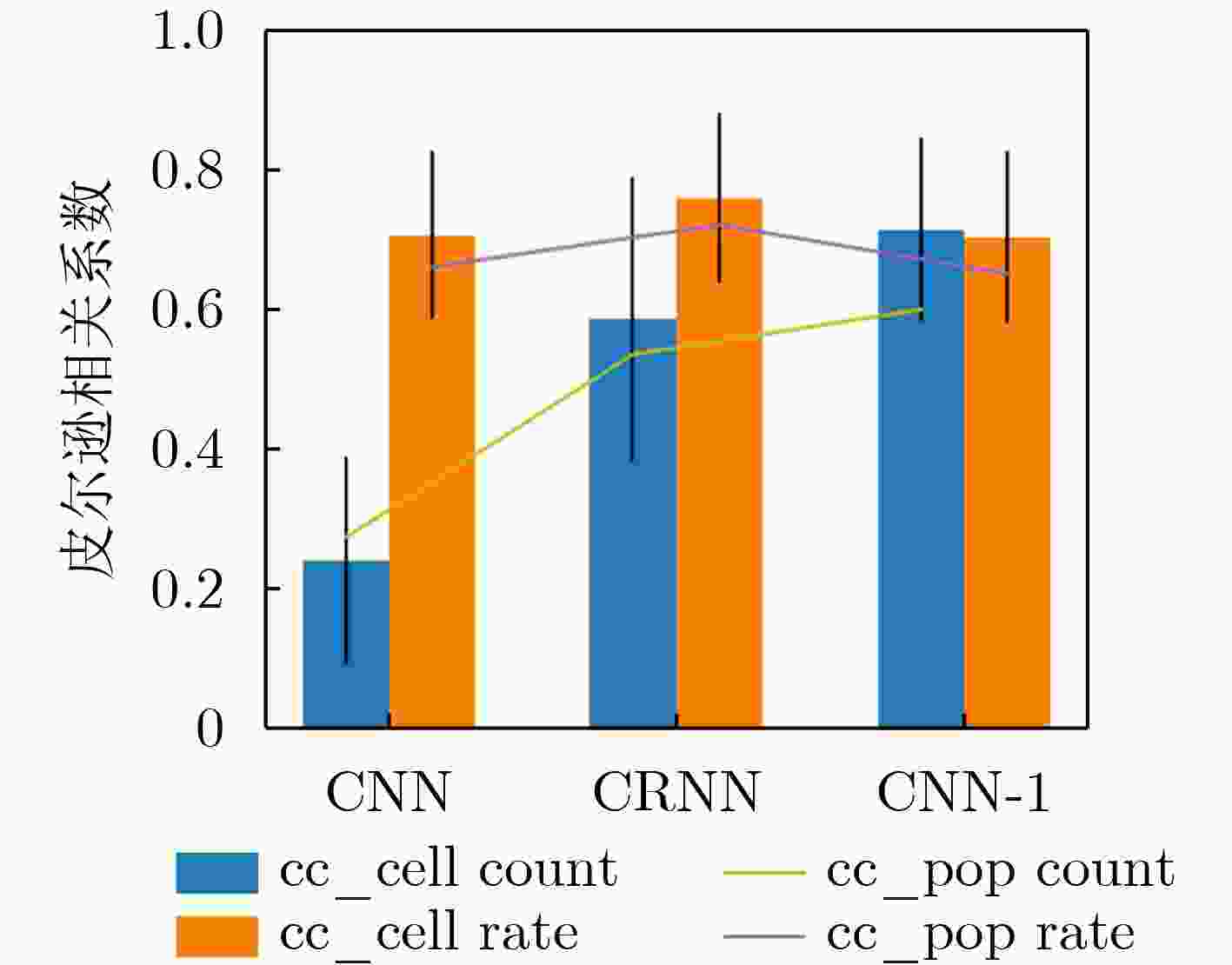

ZHENG Yajing, JIA Shanshan, YU Zhaofei, et al. Unraveling neural coding of dynamic natural visual scenes via convolutional recurrent neural networks[J]. Patterns, 2021, 2(10): 100350. doi: 10.1016/j.patter.2021.100350

|

| [34] |

SHAH N P, BRACKBILL N, RHOADES C, et al. Inference of nonlinear receptive field subunits with spike-triggered clustering[J]. eLife, 2020, 9: e45743. doi: 10.7554/elife.45743

|

| [35] |

HOCHREITER S and SCHMIDHUBER J. Long short-term memory[J]. Neural Computation, 1997, 9(8): 1735–1780. doi: 10.1162/neco.1997.9.8.1735

|

| [36] |

SHAH N P, BRACKBILL N, SAMARAKOON R, et al. Individual variability of neural computations in the primate retina[J]. Neuron, 2022, 110(4): 698–708.e5. doi: 10.1016/j.neuron.2021.11.026

|

| [37] |

DUMOULIN V, SHLENS J, and KUDLUR M. A learned representation for artistic style[C]. Proceedings of the 5th International Conference on Learning Representations, Toulon, France, 2017.

|

| [38] |

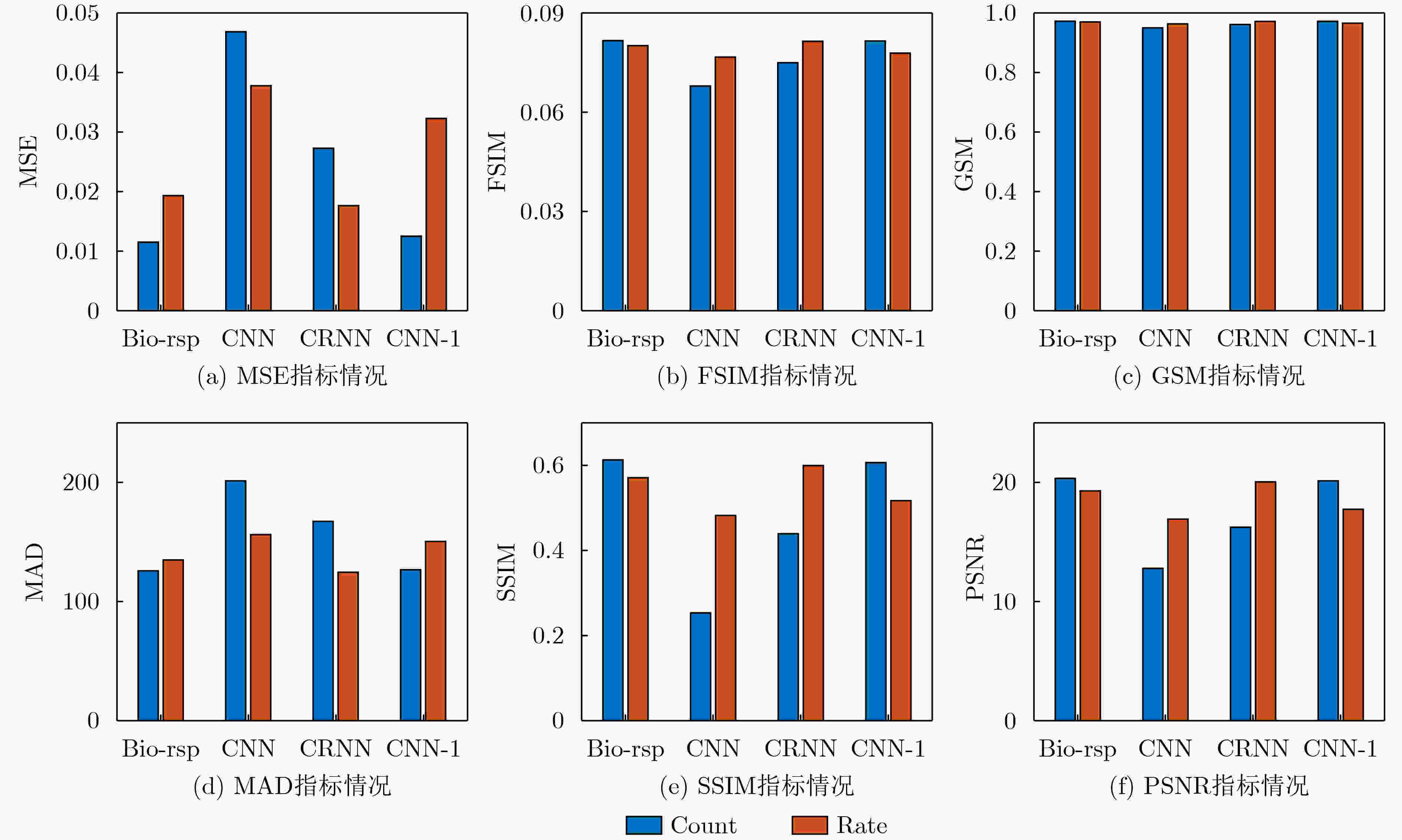

WANG Zhou, BOVIK A C, SHEIKH H R, et al. Image quality assessment: From error visibility to structural similarity[J]. IEEE Transactions on Image Processing, 2004, 13(4): 600–612. doi: 10.1109/tip.2003.819861

|

| [39] |

LARSON E C and CHANDLER D M. Most apparent distortion: Full-reference image quality assessment and the role of strategy[J]. Journal of Electronic Imaging, 2010, 19(1): 011006. doi: 10.1117/1.3267105

|

| [40] |

ZHANG Lin, ZHANG Lei, MOU Xuanqin, et al. FSIM: A feature similarity index for image quality assessment[J]. IEEE Transactions on Image Processing, 2011, 20(8): 2378–2386. doi: 10.1109/tip.2011.2109730

|

| [41] |

MORRONE M C, ROSS J, BURR D C, et al. Mach bands are phase dependent[J]. Nature, 1986, 324(6094): 250–253. doi: 10.1038/324250a0

|

| [42] |

LIU Anmin, LIN Weisi, and NARWARIA M. Image quality assessment based on gradient similarity[J]. IEEE Transactions on Image Processing, 2012, 21(4): 1500–1512. doi: 10.1109/tip.2011.2175935

|
