Research on Bidirectional Attenuation Loss Method for Rotating Object Detection in Remote Sensing Image

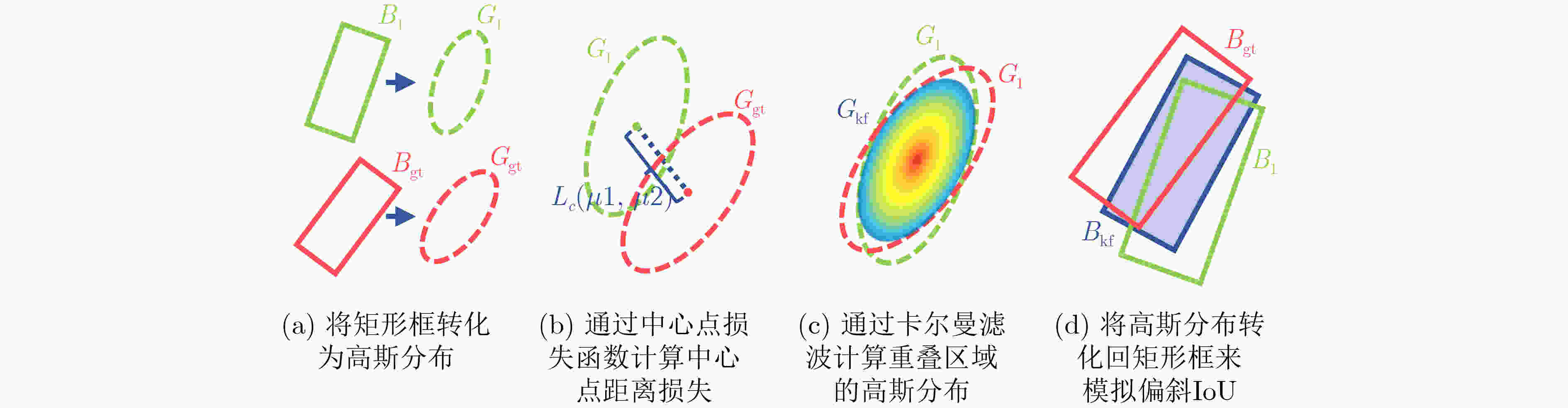

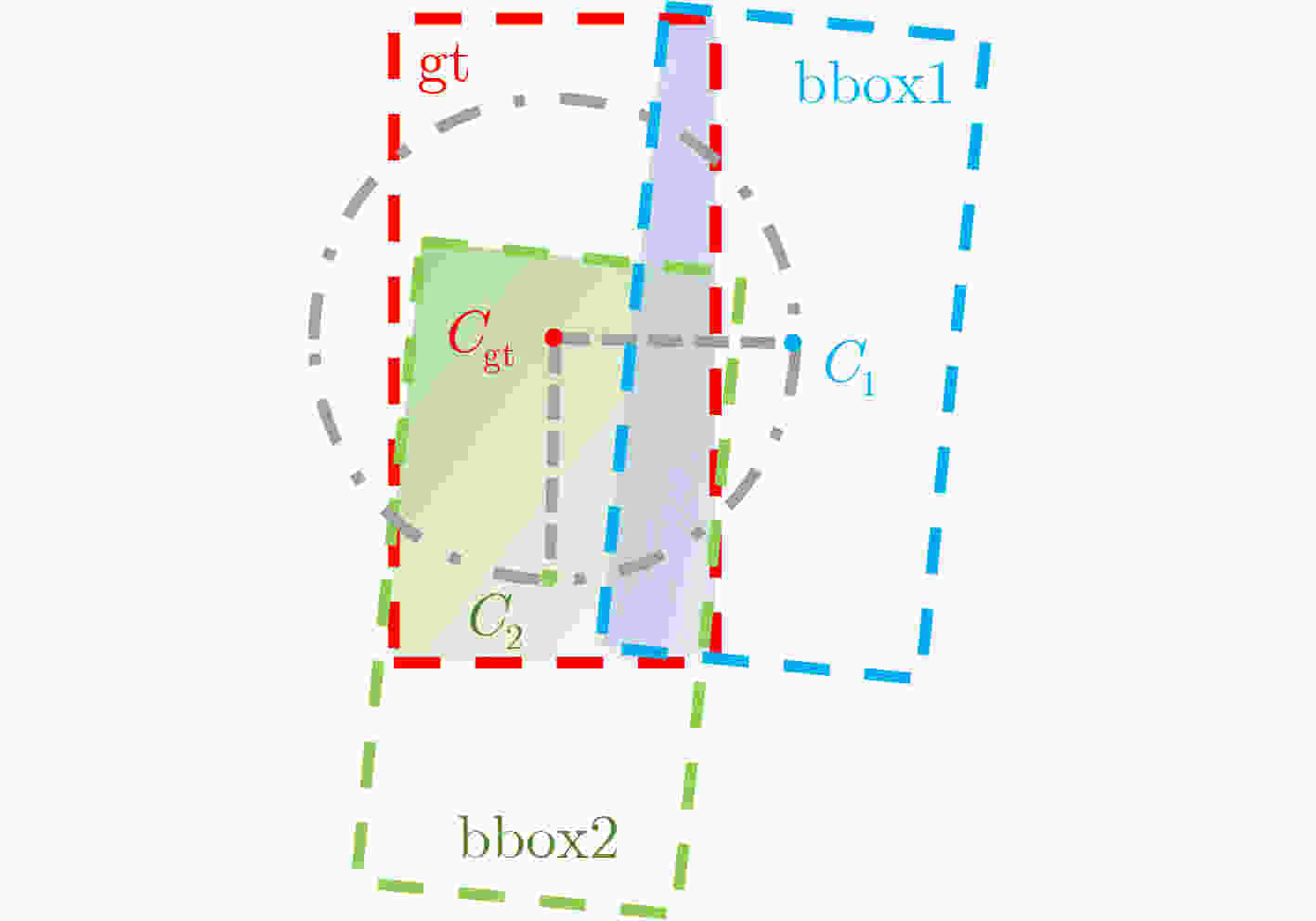

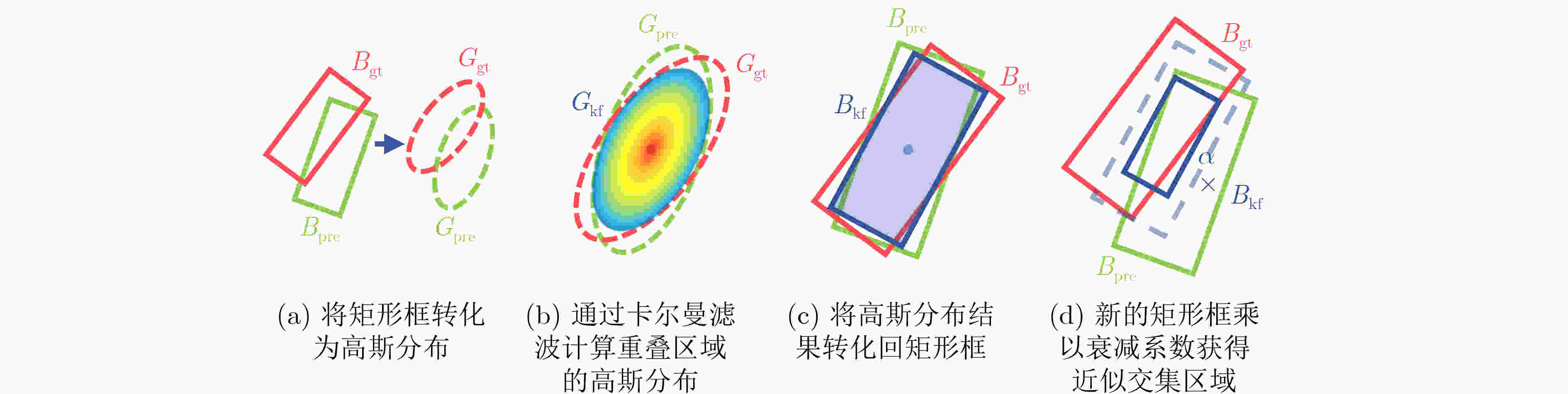

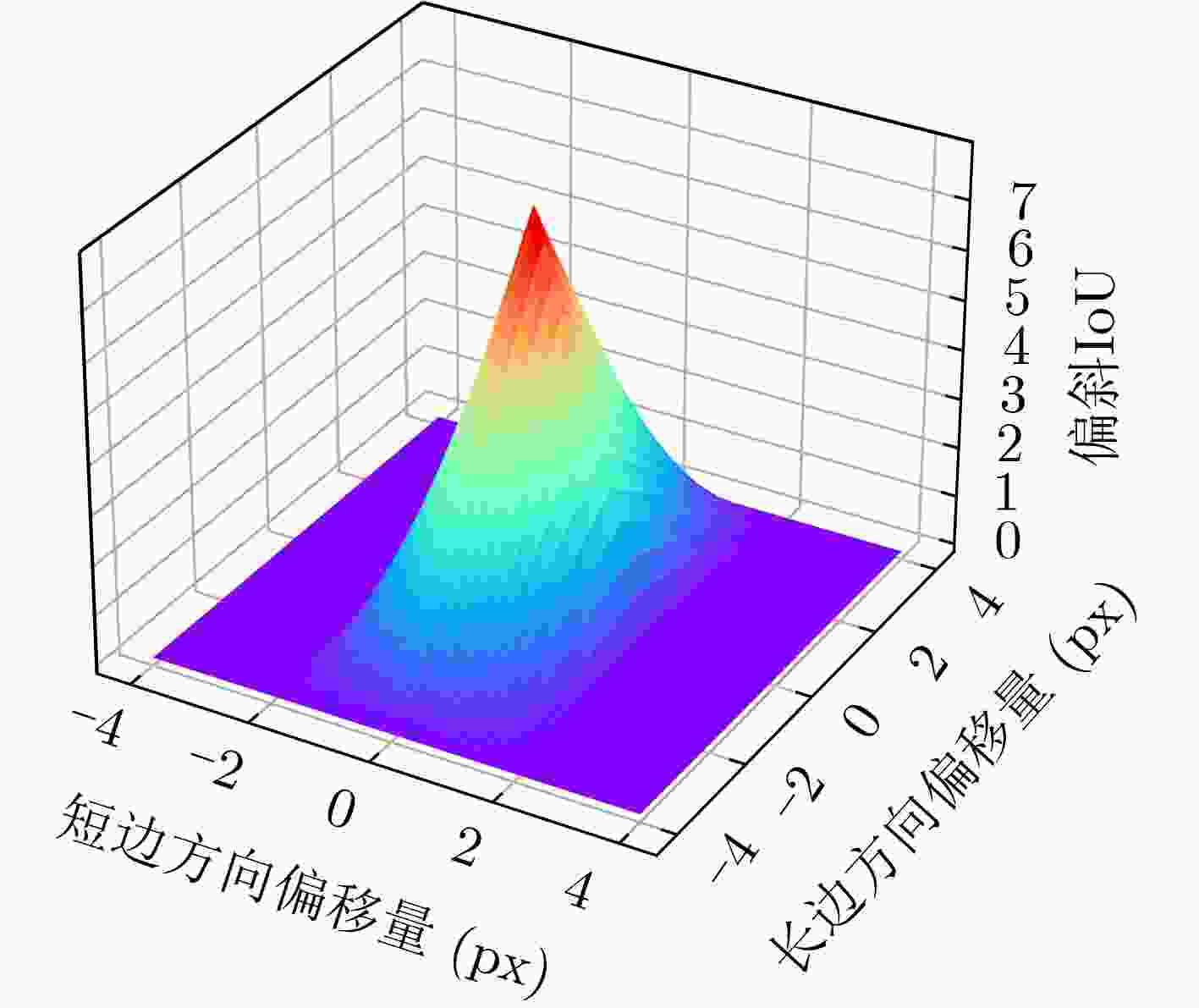

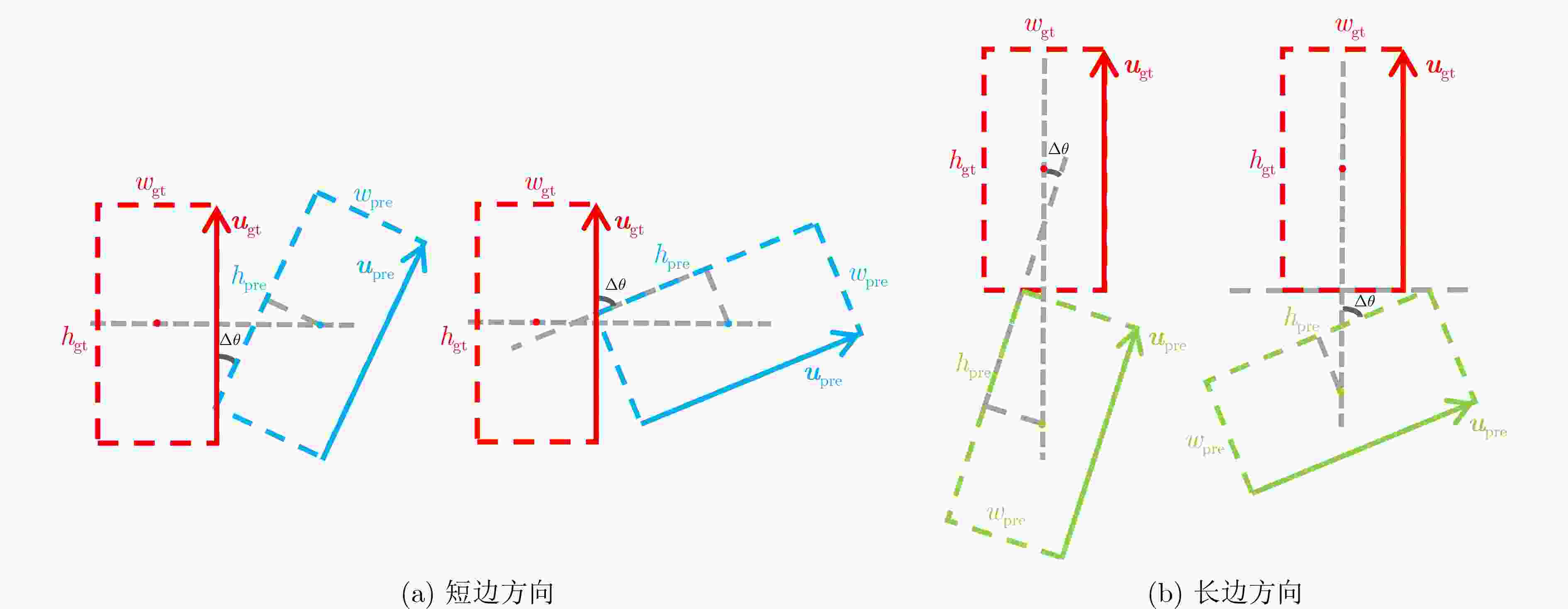

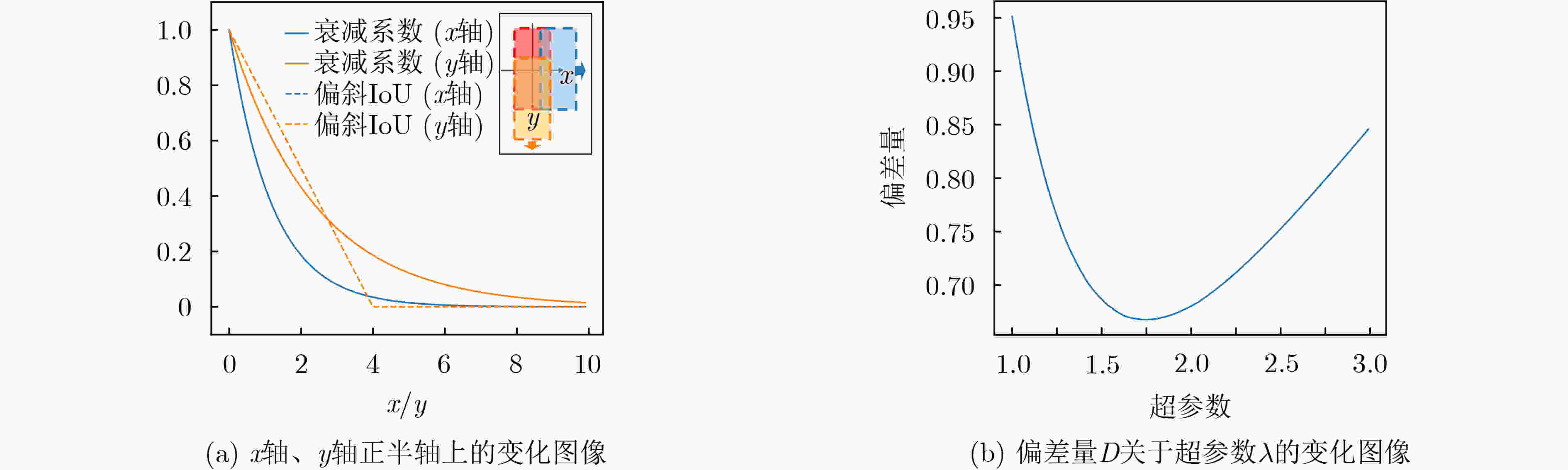

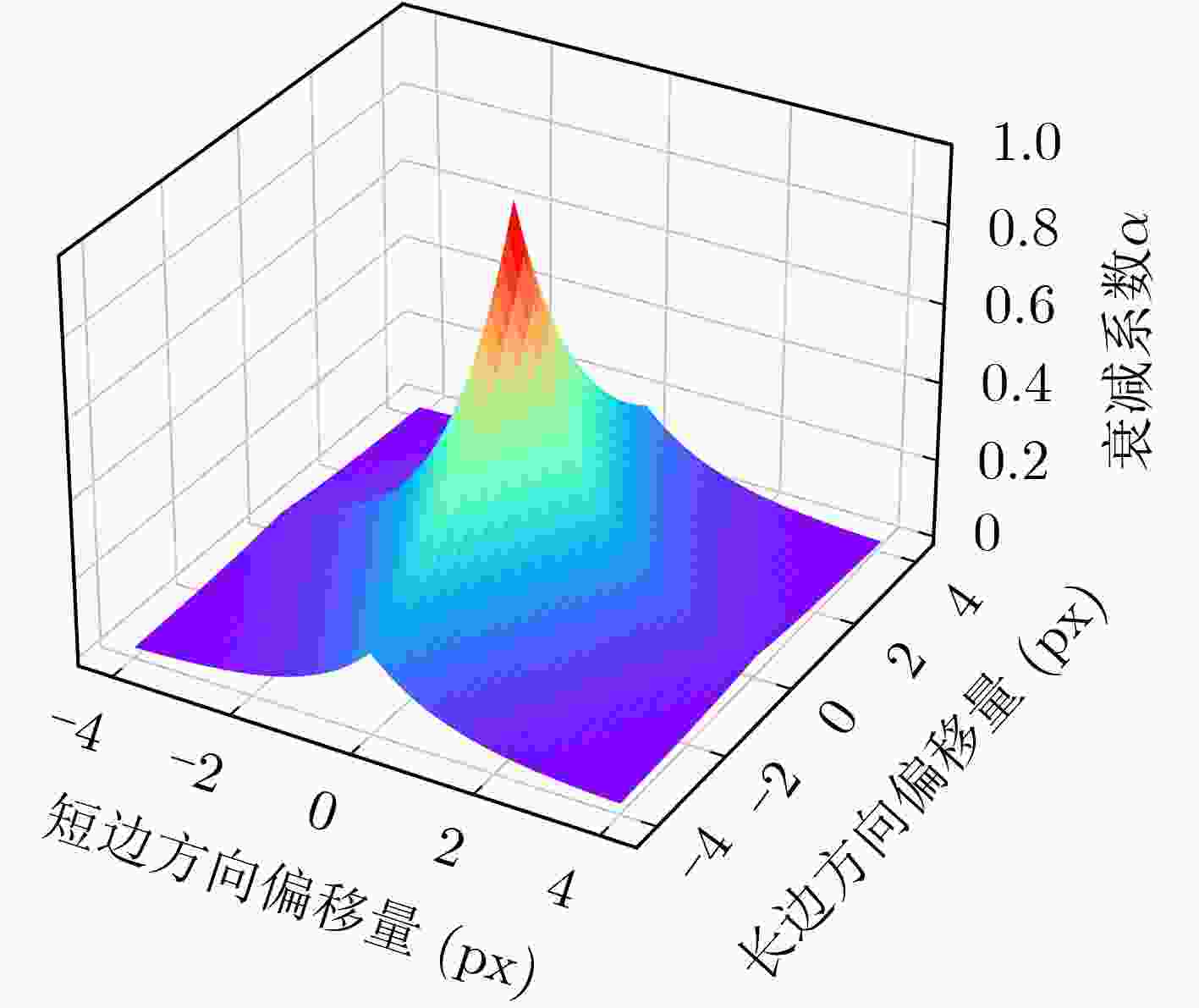

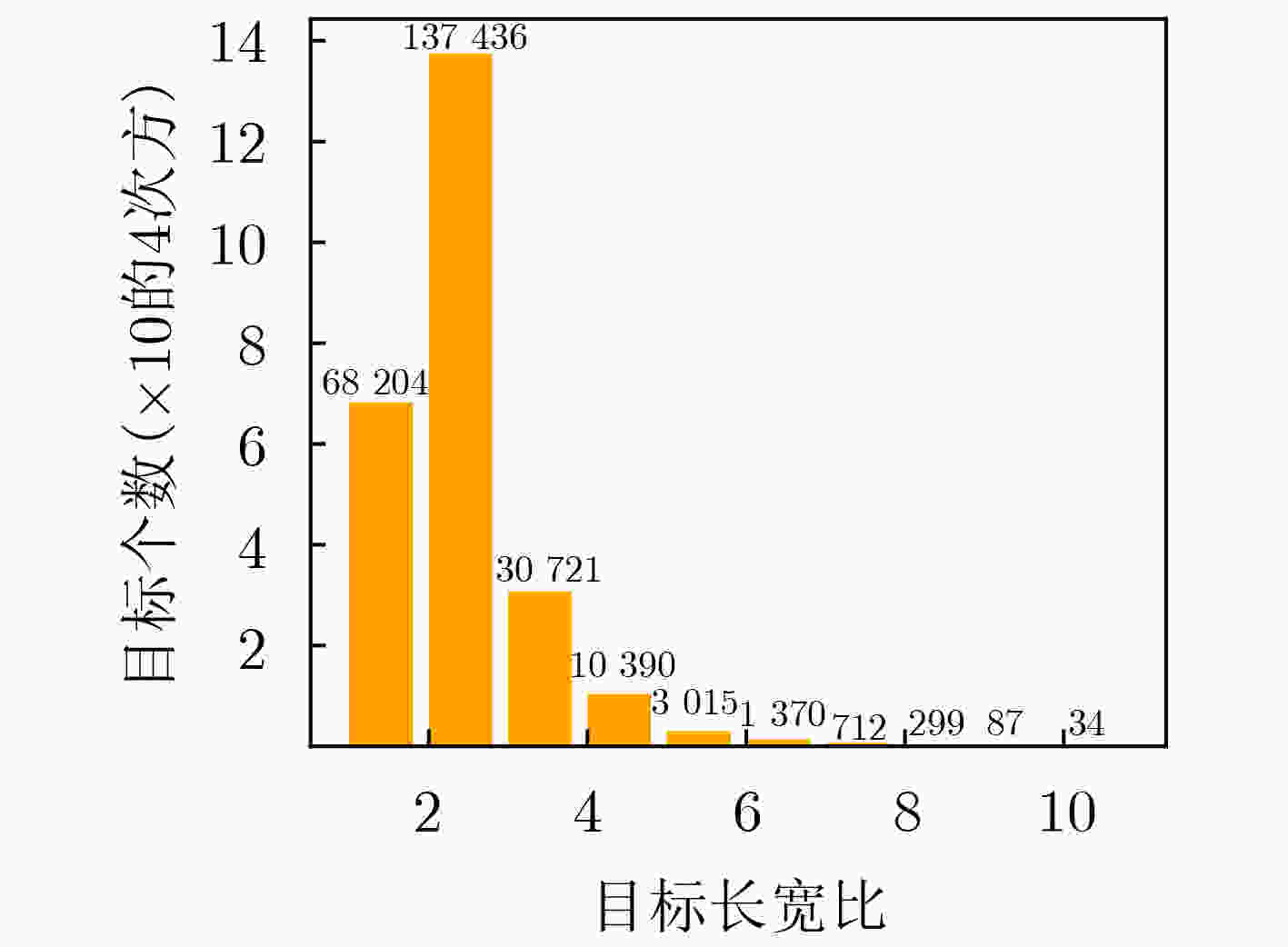

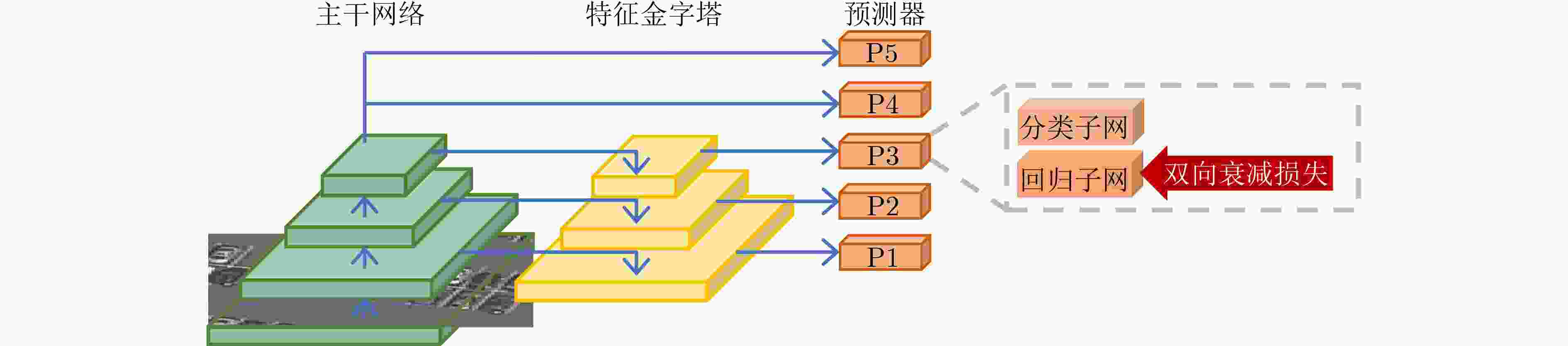

Abstract: Object detection in remote sensing images is an active research topic in computer vision. To cope with complex backgrounds and arbitrarily oriented objects in remote sensing images, mainstream object detection models adopt rotation detection methods. However, the localization losses used for rotation detection often vary in a way that is inconsistent with the actual skew Intersection-over-Union (SkewIoU). To address this problem, this paper proposes a new bidirectional attenuation loss for rotating object detection. Specifically, the method approximates SkewIoU with a Gaussian product and attenuates that product from two directions according to the deviation of the predicted position. Because the bidirectional attenuation loss reflects the change in SkewIoU caused by position deviation, its trend is more consistent with that of SkewIoU, and it outperforms related methods. Experiments on the DOTAv1.0 dataset show that the proposed method is effective across several base loss forms and under different accuracy conditions.
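To make the Gaussian-product idea in the abstract more concrete, the following is a minimal sketch in the style of the KFIoU construction [20], not the bidirectional attenuation loss itself (its attenuation terms are not given in this section). It assumes a rotated box `(cx, cy, w, h, theta)` is modeled as a 2-D Gaussian whose covariance is the rotated `diag(w^2/4, h^2/4)`; the function names are hypothetical.

```python
import math

def box_covariance(w, h, theta):
    """Covariance (a, b, d) of the Gaussian assumed for a rotated box.

    Represents the symmetric matrix [[a, b], [b, d]] = R diag(w^2/4, h^2/4) R^T.
    """
    c, s = math.cos(theta), math.sin(theta)
    sw, sh = w * w / 4.0, h * h / 4.0
    return (c * c * sw + s * s * sh, c * s * (sw - sh), s * s * sw + c * c * sh)

def det2(m):
    """Determinant of a symmetric 2x2 matrix stored as (a, b, d)."""
    a, b, d = m
    return a * d - b * b

def gaussian_product_overlap(box_a, box_b):
    """KFIoU-style overlap score between two boxes (cx, cy, w, h, theta).

    The product of two Gaussians has covariance S3 = S1 (S1 + S2)^-1 S2,
    so det(S3) = det(S1) * det(S2) / det(S1 + S2). Areas are taken as
    proportional to sqrt(det); the constant factor cancels in the ratio.
    """
    s1 = box_covariance(*box_a[2:])
    s2 = box_covariance(*box_b[2:])
    s_sum = tuple(p + q for p, q in zip(s1, s2))
    det3 = det2(s1) * det2(s2) / det2(s_sum)
    v1, v2, v3 = math.sqrt(det2(s1)), math.sqrt(det2(s2)), math.sqrt(det3)
    return v3 / (v1 + v2 - v3)

if __name__ == "__main__":
    ref = (0.0, 0.0, 4.0, 2.0, 0.0)
    print(gaussian_product_overlap(ref, ref))                         # identical boxes
    print(gaussian_product_overlap(ref, (10.0, 5.0, 4.0, 2.0, 0.0)))  # shifted centre
```

Under these assumptions, two identical boxes score 1/3, and shifting one box's centre does not change the score, because the covariance of a Gaussian product does not depend on the means. This insensitivity to position is the kind of gap that an attenuation driven by position deviation is meant to close.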

Key words:

- Remote sensing image
- Object detection
- Deep neural network
- Loss function

表 1 不同$\lambda $取值对RetinaNet网络检测性能的影响

$\lambda $ mAP(%) 1.60 70.59 1.70 70.71 1.75 71.24 1.80 71.22 1.90 70.12 表 2 基于不同损失计算方式的目标检测精度比较

自变量x $ - \ln \left( {x + \varepsilon } \right)$ $1 - x$ ${{\rm{e}}^{1 - x} } - 1$ KFIoU 69.35 70.07 70.30 BAIoU 71.01(+1.66) 70.93(+0.86) 71.24(+0.94) 表 3 基于不同损失函数的目标检测精度比较

损失函数 AP50 AP75 AP50:95 KFIoU 70.30 33.16 34.45 BAIoU 71.24(+0.94) 36.58(+3.42) 37.06(+2.61) 表 4 双向衰减损失与经典水平损失函数的检测精度比较

损失函数 mAP SmoothL1 55.03 GIoU 55.54 BAIoU 55.44 表 5 不同损失函数在DOTAv1.0中5类典型目标的检测结果对比(%)

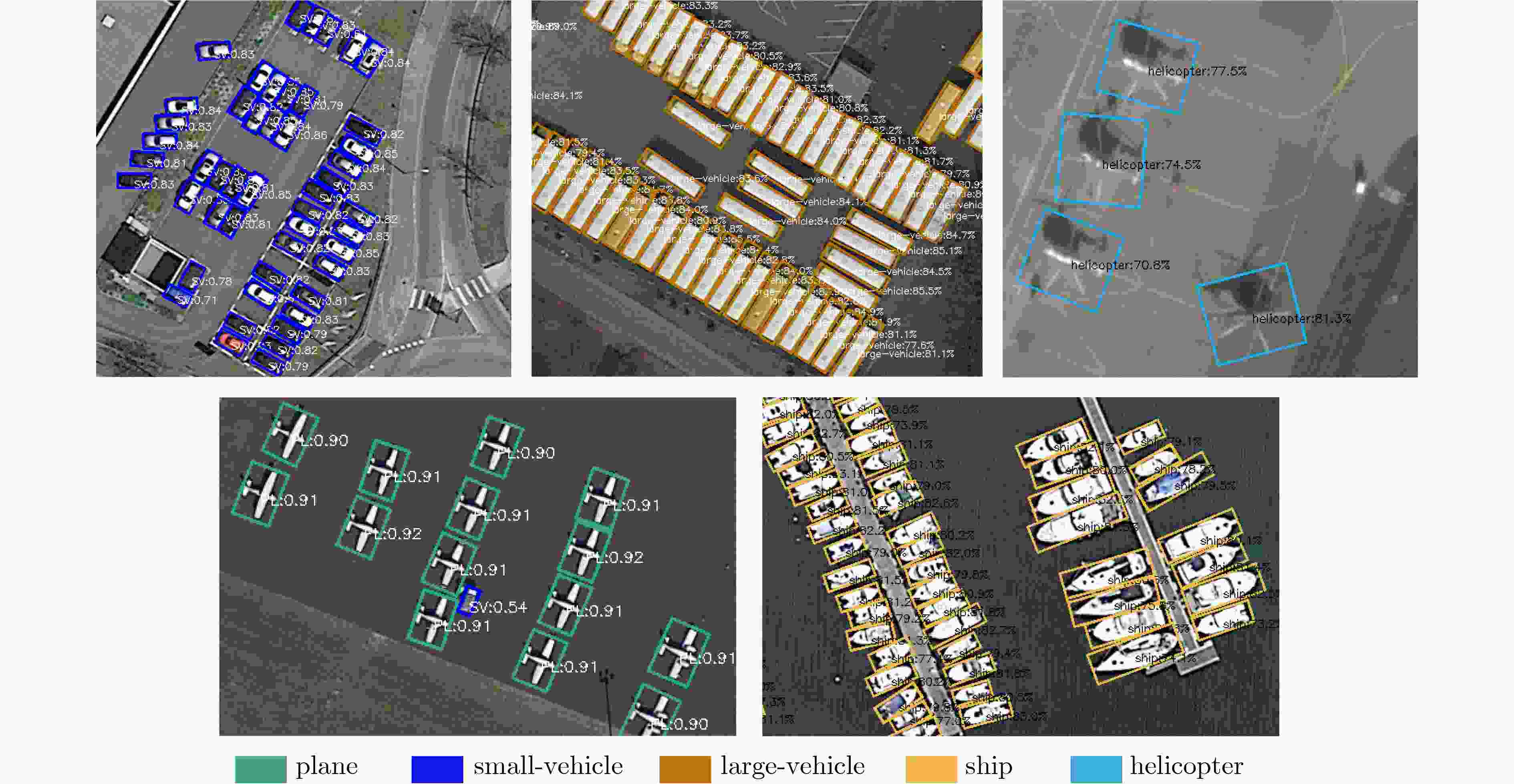

网络模型 损失函数 飞机 小型汽车 大型汽车 船 直升机 mAP RetinaNet SmoothL1[7] 70.90 56.30 48.40 64.80 40.31 56.14 GWD[18] 79.98 68.55 66.53 74.18 52.75 68.39 KFIoU 83.56 74.46 67.69 76.43 59.36 70.30 BAIoU 85.13 74.01 70.29 76.02 60.77 71.24 R3Det DCL[16] 85.14 71.02 79.56 85.62 54.45 75.15 KLD[19] 87.41 73.43 83.07 87.00 60.73 78.32 KFIoU 87.61 75.65 84.06 88.38 62.01 79.54 BAIoU 87.70 76.45 85.02 89.22 62.34 80.14 -
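Table 2 compares three ways of turning an IoU-like score $x$ into a loss. The sketch below simply writes those three forms as functions, assuming $x$ lies in $(0, 1]$ with larger values meaning better overlap; the value of `EPS` and the function names are assumptions made for illustration, not taken from the paper.

```python
import math

EPS = 1e-6  # assumed small constant; the paper's value of epsilon is not given here

def loss_neg_log(x, eps=EPS):
    """-ln(x + eps): penalizes low overlap very strongly."""
    return -math.log(x + eps)

def loss_linear(x):
    """1 - x: constant gradient with respect to x."""
    return 1.0 - x

def loss_exp(x):
    """e^(1 - x) - 1: steeper than the linear form at low overlap."""
    return math.exp(1.0 - x) - 1.0

if __name__ == "__main__":
    for x in (0.2, 0.5, 0.8, 1.0):
        print(x, loss_neg_log(x), loss_linear(x), loss_exp(x))
```

All three forms decrease as $x$ grows and vanish (up to the `EPS` term) at $x = 1$; they differ in how sharply they penalize poorly overlapping predictions.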

[1] LI Xiaobo, SUN Wenfang, and LI Li. Ocean moving ship detection method for remote sensing satellite in geostationary orbit[J]. Journal of Electronics & Information Technology, 2015, 37(8): 1862–1867. doi: 10.11999/JEIT141615

[2] CHEN Qi, LU Jun, ZHAO Lingjun, et al. Harbor detection method of SAR remote sensing images based on feature[J]. Journal of Electronics & Information Technology, 2010, 32(12): 2873–2878. doi: 10.3724/SP.J.1146.2010.00079

[3] LI Xuan and LIU Yunqing. Oil tank detection in optical remote sensing imagery based on quasi-circular shadow[J]. Journal of Electronics & Information Technology, 2016, 38(6): 1489–1495. doi: 10.11999/JEIT151334

[4] ZHANG Zheng, MIAO Chunle, LIU Chang’an, et al. DCS-TransUperNet: Road segmentation network based on CSwin transformer with dual resolution[J]. Applied Sciences, 2022, 12(7): 3511. doi: 10.3390/app12073511

[5] ZHANG Zheng, XU Zhiwei, LIU Chang’an, et al. Cloudformer: Supplementary aggregation feature and mask-classification network for cloud detection[J]. Applied Sciences, 2022, 12(7): 3221. doi: 10.3390/app12073221

[6] GIRSHICK R, DONAHUE J, DARRELL T, et al. Rich feature hierarchies for accurate object detection and semantic segmentation[C]. 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, USA, 2014: 580–587.

[7] GIRSHICK R. Fast R-CNN[C]. 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 2015: 1440–1448.

[8] REN Shaoqing, HE Kaiming, GIRSHICK R, et al. Faster R-CNN: Towards real-time object detection with region proposal networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(6): 1137–1149. doi: 10.1109/TPAMI.2016.2577031

[9] REDMON J and FARHADI A. YOLOv3: An incremental improvement[J]. arXiv preprint arXiv:1804.02767, 2018.

[10] BOCHKOVSKIY A, WANG C Y, and LIAO H Y M. YOLOv4: Optimal speed and accuracy of object detection[J]. arXiv preprint arXiv:2004.10934, 2020.

[11] SHAO Yanhua, ZHANG Duo, CHU Hongyu, et al. A review of YOLO object detection based on deep learning[J]. Journal of Electronics & Information Technology, 2022, 44(10): 3697–3708. doi: 10.11999/JEIT210790

[12] LIU Wei, ANGUELOV D, ERHAN D, et al. SSD: Single shot MultiBox detector[C]. 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 21–37.

[13] LIN T Y, GOYAL P, GIRSHICK R, et al. Focal loss for dense object detection[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020, 42(2): 318–327. doi: 10.1109/TPAMI.2018.2858826

[14] DING Jian, XUE Nan, LONG Yang, et al. Learning RoI transformer for oriented object detection in aerial images[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, USA, 2019: 2844–2853.

[15] YANG Xue, YAN Junchi, FENG Ziming, et al. R3Det: Refined single-stage detector with feature refinement for rotating object[C]. Proceedings of the 35th AAAI Conference on Artificial Intelligence, 2021: 3163–3171.

[16] YANG Xue, HOU Liping, ZHOU Yue, et al. Dense label encoding for boundary discontinuity free rotation detection[C]. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 15814–15824.

[17] CHEN Zhiming, CHEN Ke’an, LIN Weiyao, et al. PIoU loss: Towards accurate oriented object detection in complex environments[C]. 16th European Conference on Computer Vision, Glasgow, UK, 2020: 195–211.

[18] YANG Xue, YAN Junchi, MING Qi, et al. Rethinking rotated object detection with Gaussian Wasserstein distance loss[C]. Proceedings of the 38th International Conference on Machine Learning, 2021: 11830–11841.

[19] YANG Xue, YANG Xiaojiang, YANG Jirui, et al. Learning high-precision bounding box for rotated object detection via Kullback-Leibler divergence[C]. 35th Conference on Neural Information Processing Systems, 2021.

[20] YANG Xue, ZHOU Yue, ZHANG Gefan, et al. The KFIoU loss for rotated object detection[J]. arXiv preprint arXiv:2201.12558, 2022.

[21] XIA Guisong, BAI Xiang, DING Jian, et al. DOTA: A large-scale dataset for object detection in aerial images[C]. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 3974–3983.

[22] EVERINGHAM M, VAN GOOL L, WILLIAMS C K I, et al. The PASCAL visual object classes (VOC) challenge[J]. International Journal of Computer Vision, 2010, 88(2): 303–338. doi: 10.1007/s11263-009-0275-4

[23] LIN T Y, MAIRE M, BELONGIE S, et al. Microsoft COCO: Common objects in context[C]. 13th European Conference on Computer Vision, Zurich, Switzerland, 2014: 740–755.
