Energy Consumption Optimization of Unmanned Aerial Vehicle Assisted Mobile Edge Computing Systems Based on Deep Reinforcement Learning


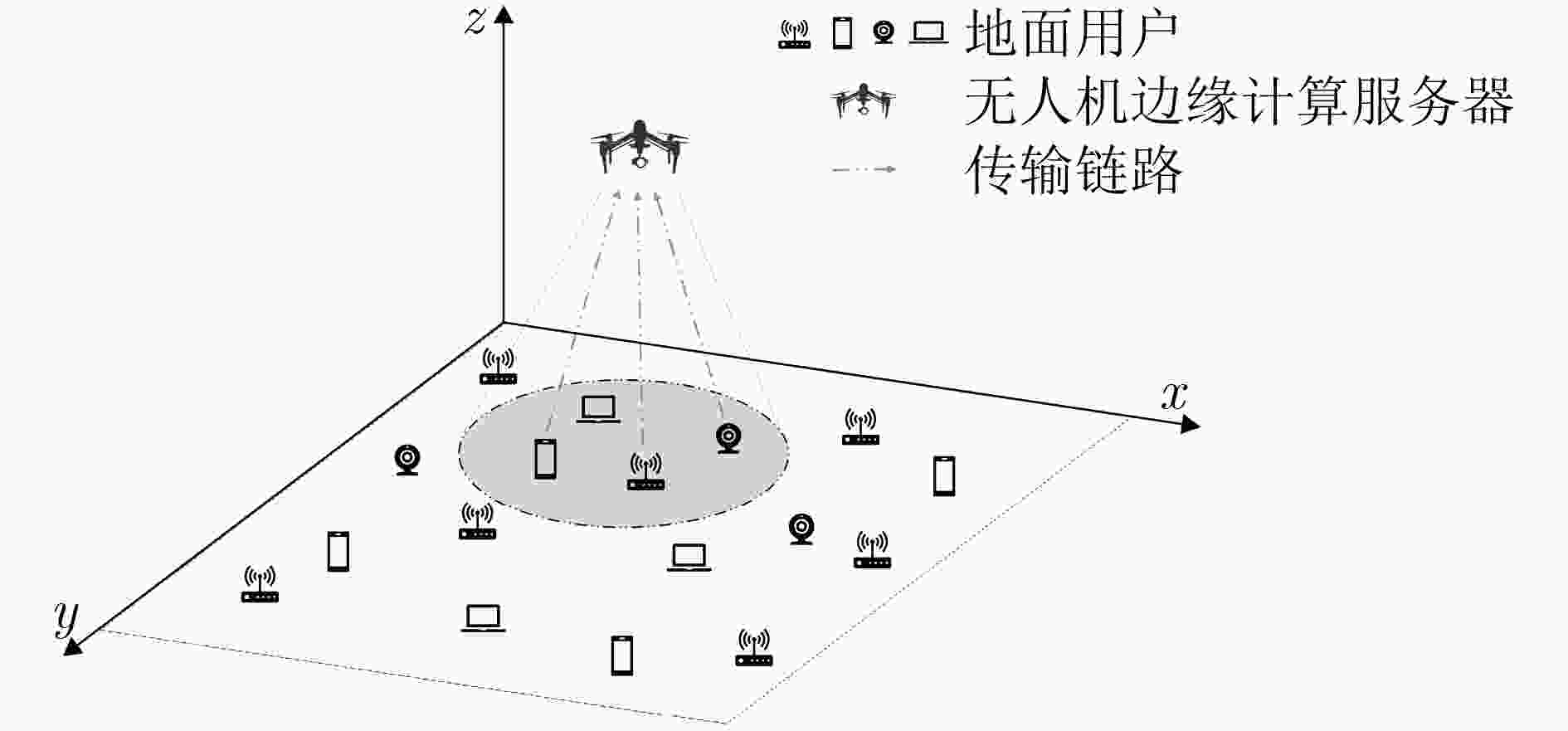

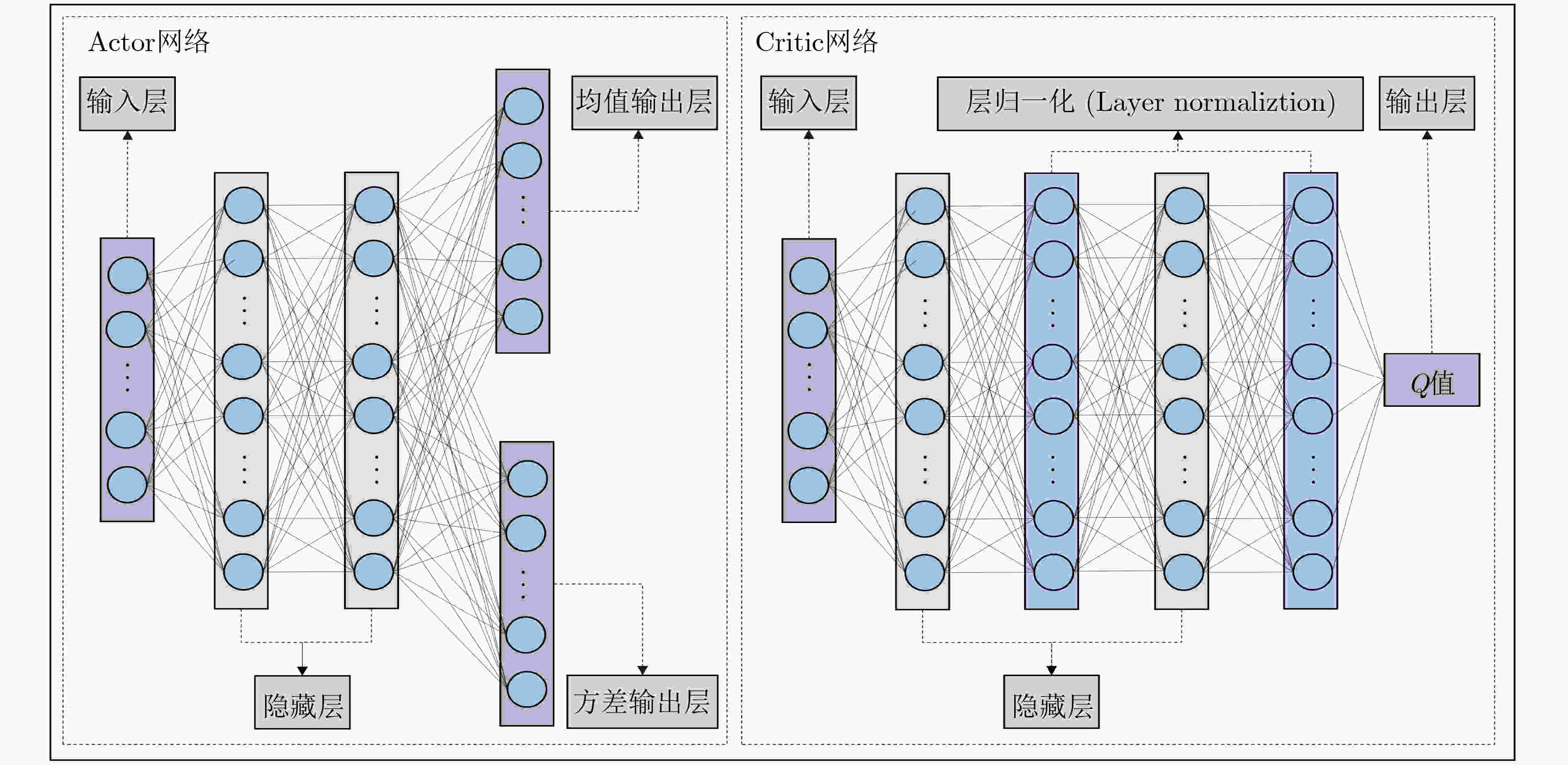

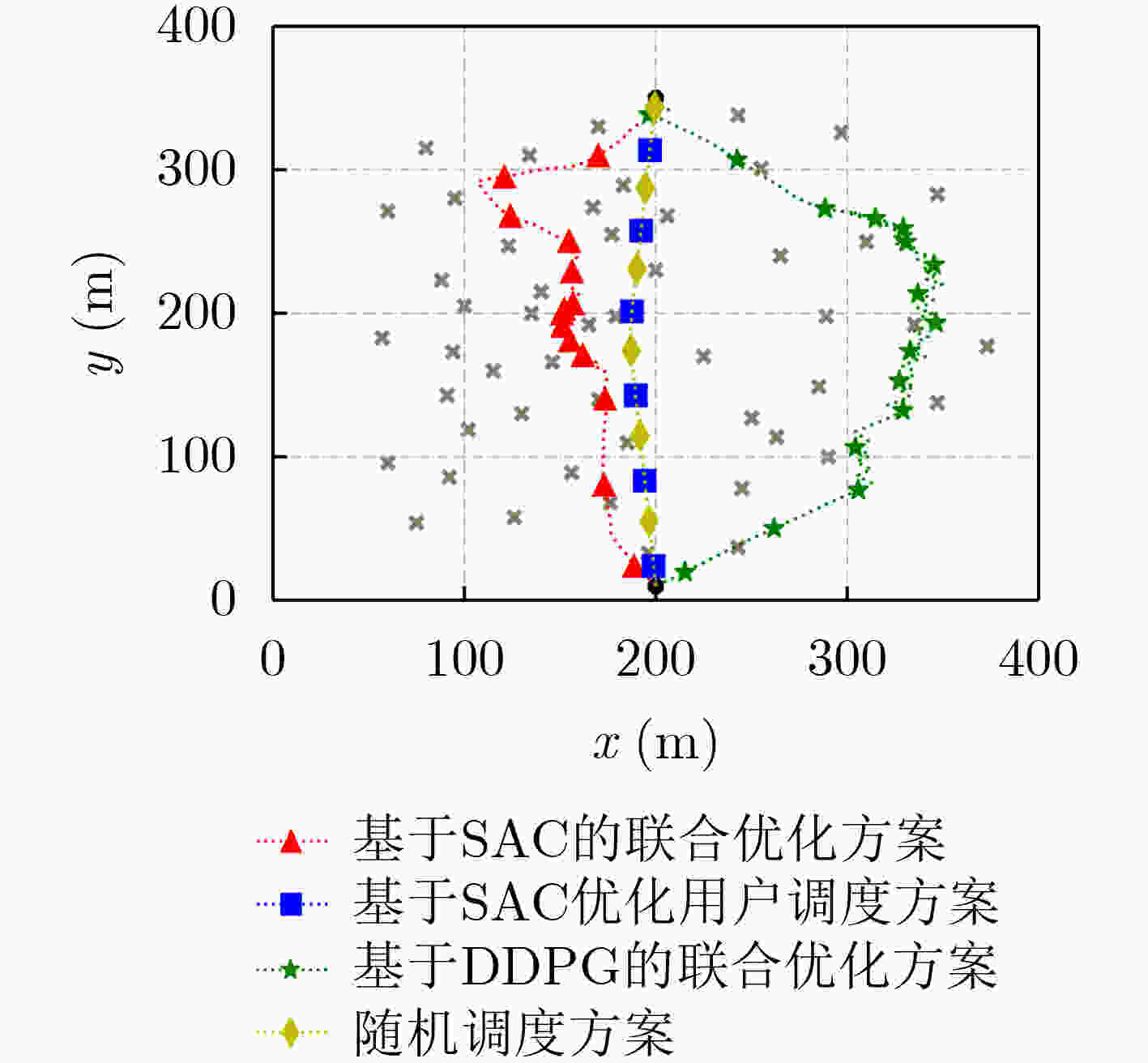

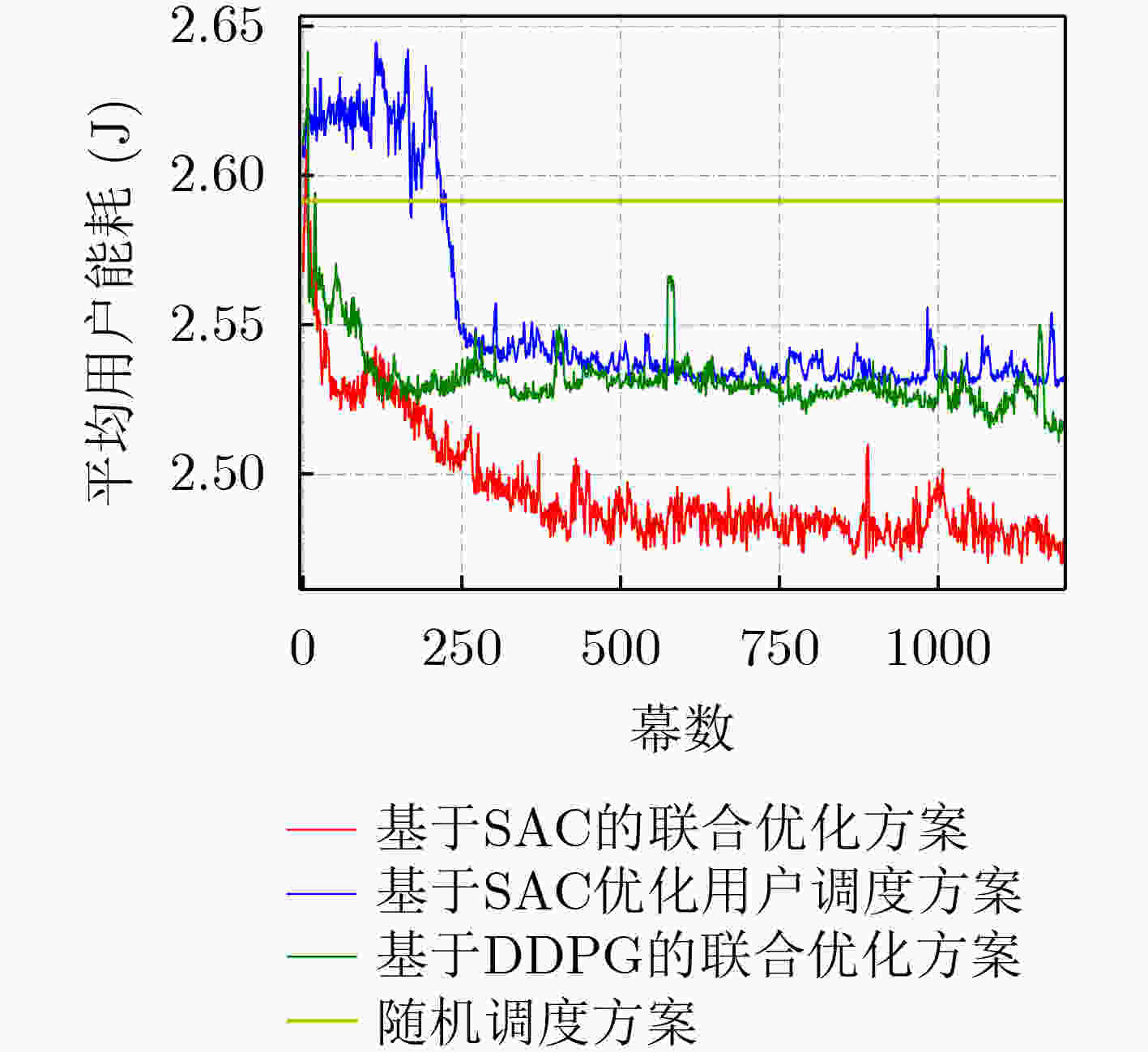

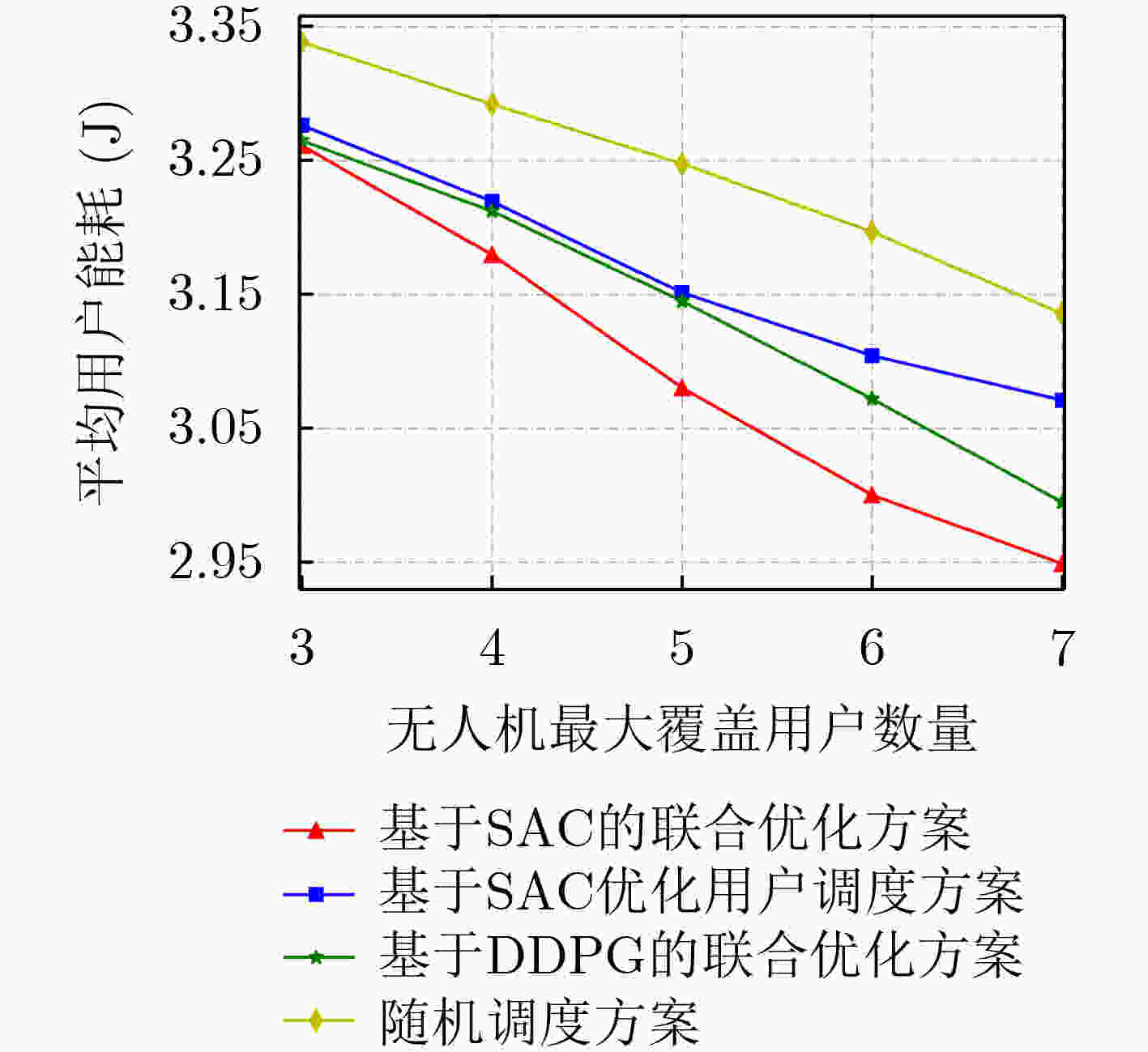

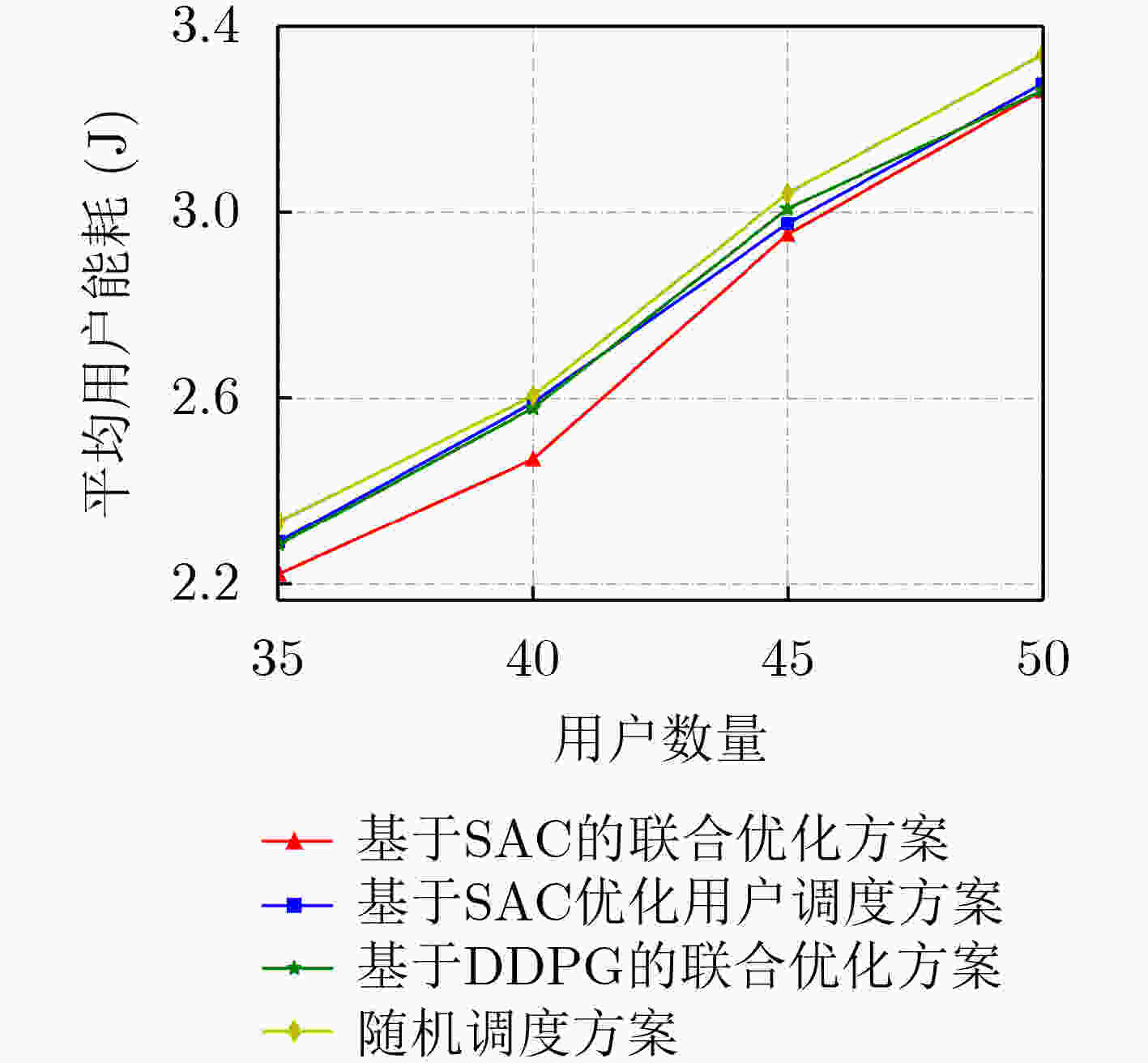

Abstract: In recent years, deploying Unmanned Aerial Vehicles (UAVs) equipped with Mobile Edge Computing (MEC) servers to provide computing resources to ground users has become an emerging technique. For a UAV-assisted multi-user MEC system, a model is formulated that minimizes the average energy consumption of the users by jointly optimizing the UAV's flight trajectory and the scheduling of the users' computation strategies over the UAV's entire flight duration. The energy consumption optimization problem is solved with Deep Reinforcement Learning (DRL), and an optimization algorithm based on Soft Actor-Critic (SAC) is proposed. The algorithm applies the maximum-entropy principle to explore the optimal policy and obtains it through efficient iterative updates; by retaining all policies with high reward values, it strengthens exploration and speeds up convergence during training. Simulation results show that, compared with existing baseline algorithms, the proposed algorithm effectively reduces the users' average energy consumption and exhibits good stability and convergence.
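For orientation, the maximum-entropy principle mentioned in the abstract is usually written as an entropy-regularized return. The form below is the standard SAC objective, expressed in this page's notation ($ s\left( t \right) $, $ a\left( t \right) $, $ r\left( t \right) $, $ {\pi _\phi } $, $ \alpha $); it is an assumption that the paper's own formulation matches it term for term, since the corresponding equations are not reproduced here.

$$
J\left( {{\pi _\phi }} \right) = \sum\limits_{t = 1}^T {\mathbb{E}\left[ {r\left( t \right) + \alpha \mathcal{H}\left( {{\pi _\phi }\left( { \cdot |s\left( t \right)} \right)} \right)} \right]} ,\quad \mathcal{H}\left( {{\pi _\phi }\left( { \cdot |s} \right)} \right) =  - {\mathbb{E}_{a \sim {\pi _\phi }}}\left[ {\log {\pi _\phi }\left( {a|s} \right)} \right]
$$

A larger $ \alpha $ weights the entropy term more heavily and so favours exploration; as $ \alpha \to 0 $ the objective reduces to the ordinary expected return.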


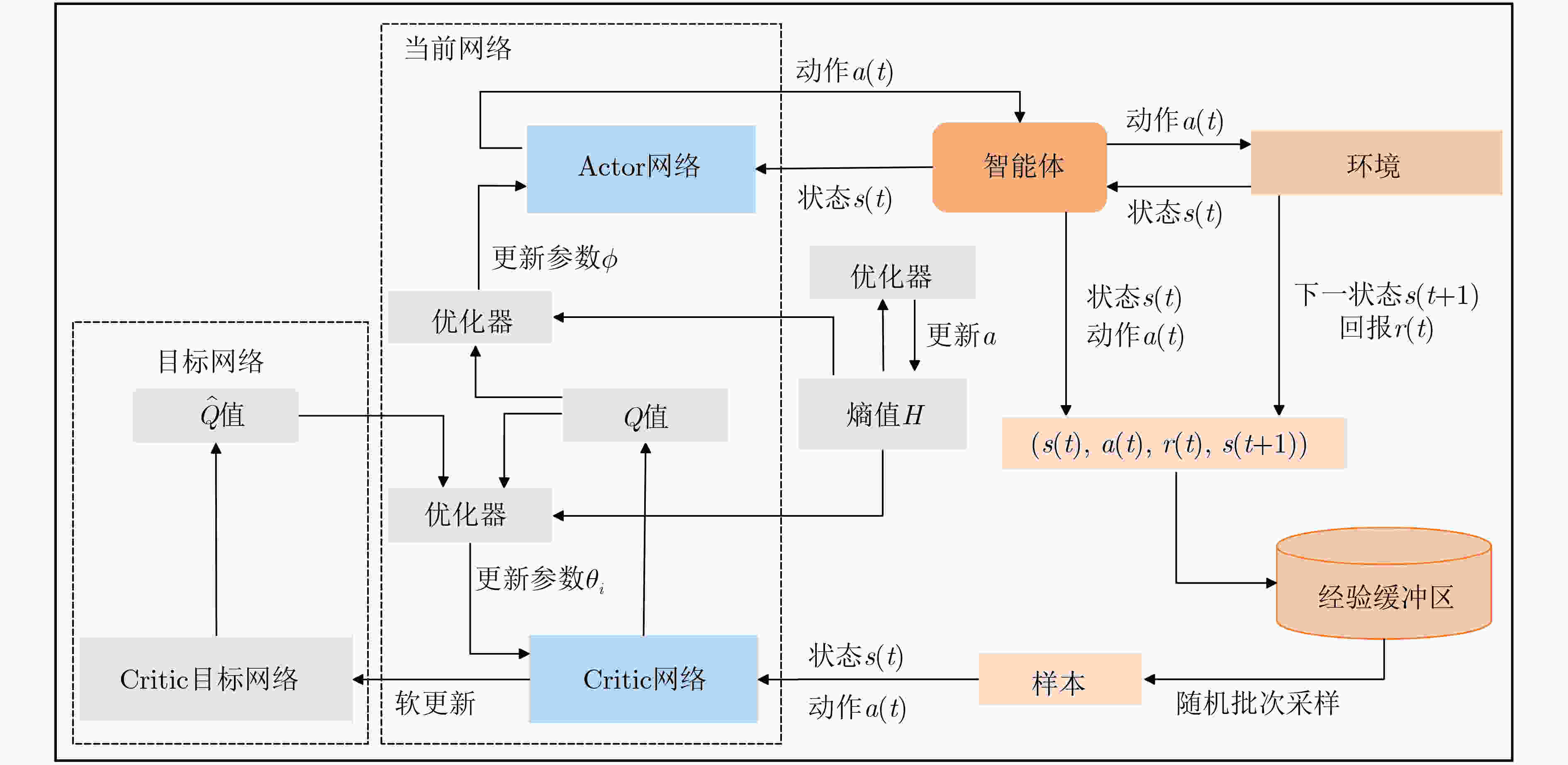

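Algorithm 1 SAC-based algorithm for minimizing the average energy consumption of user devices

(1) Initialize the experience buffer, the Actor network, the Critic networks, and the target networks; initialize the UAV's start and destination coordinates; randomly generate the user coordinates and computation tasks.

(2) For each training episode, Episode = 1,2,···,$ M $:

(3) Reset the UAV's start coordinates and the initial state $ s\left( 0 \right) $.

(4) For each time step, Time = 1,2,···,$ T $:

(5) From state $ s\left( t \right) $, select action $ a\left( t \right) $ according to policy $ {\pi _\phi } $.

(6) The UAV executes action $ a\left( t \right) $ in state $ s\left( t \right) $, enters the next state $ s\left( {t + 1} \right) $, and updates its coordinates $ \left[ {X\left( t \right),Y\left( t \right),H} \right] $; obtain the reward $ r\left( t \right) $ from Eq. (24) and compute the energy consumption of all users $ \sum\nolimits_{i = 1}^N {{E_i}\left( t \right)} $ from Eq. (8).

(7) Store $ \left[ {s\left( t \right),a\left( t \right),r\left( t \right),s\left( {t + 1} \right)} \right] $ in the experience buffer.

(8) Update the state, $ s\left( t \right) = s\left( {t + 1} \right) $.

(9) Randomly sample a batch of experiences from the buffer; compute the loss functions $ {L_{{{\text{C}}_i}}}\left( {{\theta _i}} \right) $, $ {L_{\text{A}}}\left( \phi \right) $, and $ L\left( \alpha \right) $ from Eqs. (21), (22), and (23), respectively; update the Critic network parameters $ {\theta _i} $, the Actor network parameters $ \phi $, and the entropy regularization coefficient $ \alpha $; update the Critic target network parameters, $ {\hat \theta _i} \leftarrow \tau {\theta _i} + \left( {1 - \tau } \right){\hat \theta _i} $.

(10) Until Time = $ T $.

(11) Until Episode = $ M $.

(12) Output the UAV flight trajectory and the average energy consumption of the users.

Table 1 Simulation parameters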

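| Parameter | Symbol | Value |
| --- | --- | --- |
| Maximum number of users covered by the UAV | $ {K_{\max }} $ | 3 |
| Maximum delay (s) | $ {T_{\max }} $ | 1 |
| Maximum UAV flight distance (m) | $ {d_{\max }} $ | 10 |
| User transmit power (W) | $ {P^{{\text{Tr}}}} $ | 0.1 |
| Total UAV bandwidth (MHz) | $ W $ | 6 |
| Maximum reception angle of the UAV receive antenna | $ \theta $ | $ \pi /4 $ |
| Channel power gain at the reference distance (1 m) | $ {g_0} $ | $ 1.42 \times {10^{ - 4}} $ |
| Noise power (dBm) | $ {n^2} $ | –90 |
| CPU frequency provided by the UAV (Hz) | $ {f^{\text{U}}} $ | $ 5 \times {10^9} $ |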
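The bookkeeping parts of Algorithm 1 (experience buffer, state transition, and the soft target update in step (9)) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the state is reduced to the UAV's horizontal position, `policy` and `reward_fn` are hypothetical stand-ins for the Actor network and Eq. (24), the value of `TAU` is assumed, and $ {d_{\max }} $ from Table 1 is interpreted as a per-step movement limit. The network losses of Eqs. (21) to (23) are not reproduced.

```python
import math
import random
from collections import deque

D_MAX = 10.0  # d_max from Table 1 (m); treated here as a per-step limit (assumption)
TAU = 0.005   # soft-update rate tau; assumed value, not given in the source


class ReplayBuffer:
    """Experience buffer of step (1); stores [s(t), a(t), r(t), s(t+1)] tuples."""

    def __init__(self, capacity):
        self.data = deque(maxlen=capacity)  # oldest samples are dropped when full

    def store(self, s, a, r, s_next):
        self.data.append((s, a, r, s_next))

    def sample(self, batch_size):
        # Uniform random mini-batch without replacement, as in step (9).
        return random.sample(list(self.data), batch_size)

    def __len__(self):
        return len(self.data)


def soft_update(target, online, tau=TAU):
    """Step (9): theta_hat <- tau * theta + (1 - tau) * theta_hat, element-wise."""
    return [tau * w + (1.0 - tau) * w_hat for w, w_hat in zip(online, target)]


def clip_move(dx, dy, d_max=D_MAX):
    """Scale a horizontal displacement so that its length does not exceed d_max."""
    dist = math.hypot(dx, dy)
    if dist <= d_max:
        return dx, dy
    scale = d_max / dist
    return dx * scale, dy * scale


def run_episode(policy, reward_fn, start_xy, buffer, n_steps):
    """Steps (3) to (8) for one episode; returns the list of visited positions."""
    s = tuple(start_xy)                          # step (3): reset the state
    path = [s]
    for _ in range(n_steps):                     # step (4): loop over time steps
        a = clip_move(*policy(s))                # step (5): choose an action
        s_next = (s[0] + a[0], s[1] + a[1])      # step (6): move the UAV
        r = reward_fn(s, a, s_next)              # step (6): placeholder reward
        buffer.store(s, a, r, s_next)            # step (7): store the transition
        s = s_next                               # step (8): advance the state
        path.append(s)
    return path
```

In a full SAC implementation, the gradient updates of the Critic, Actor, and $ \alpha $ would run on each sampled mini-batch before `soft_update` is applied to the target Critic parameters.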

