Lightweight GB-YOLOv5m State Detection Method for Power Switchgear


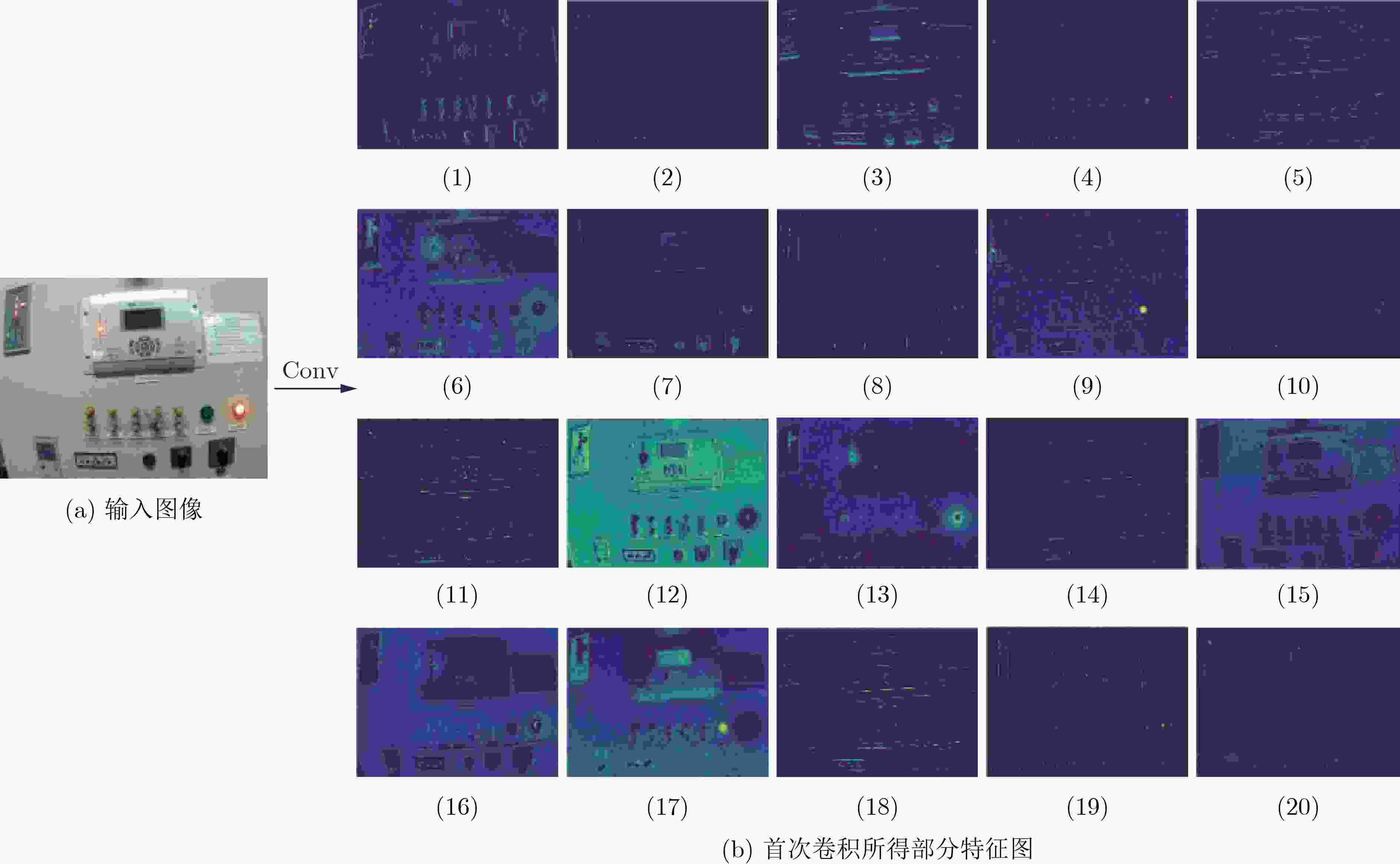

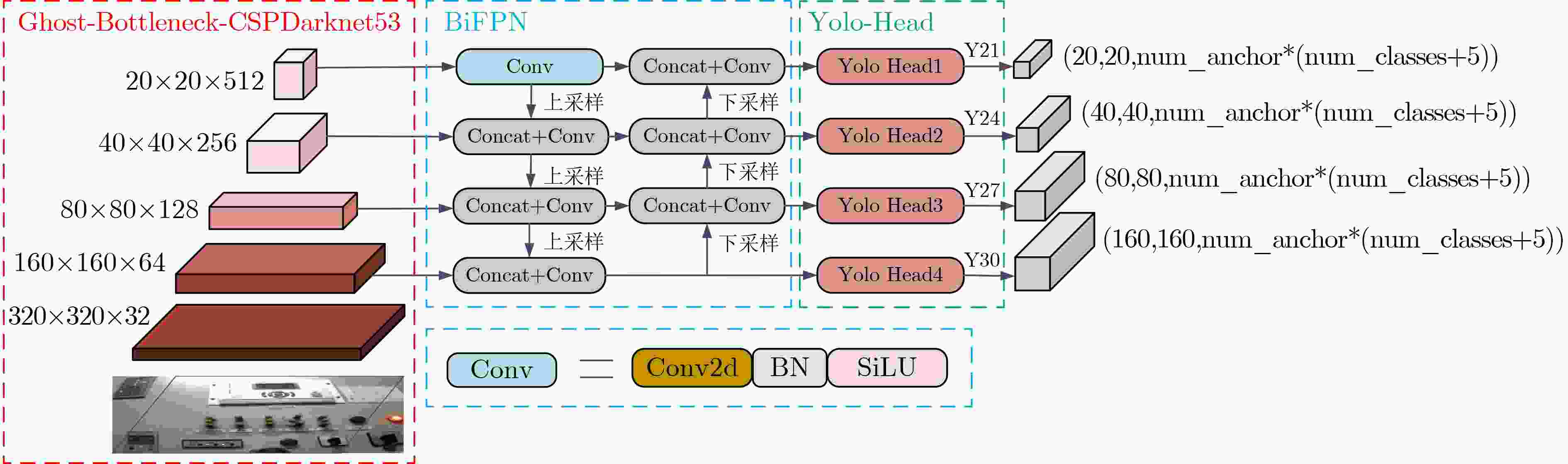

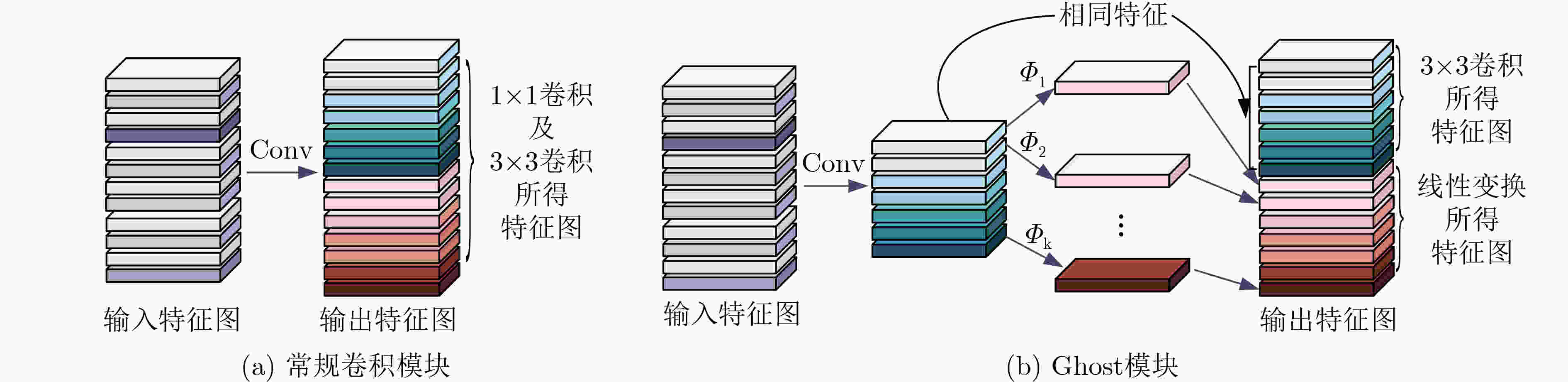

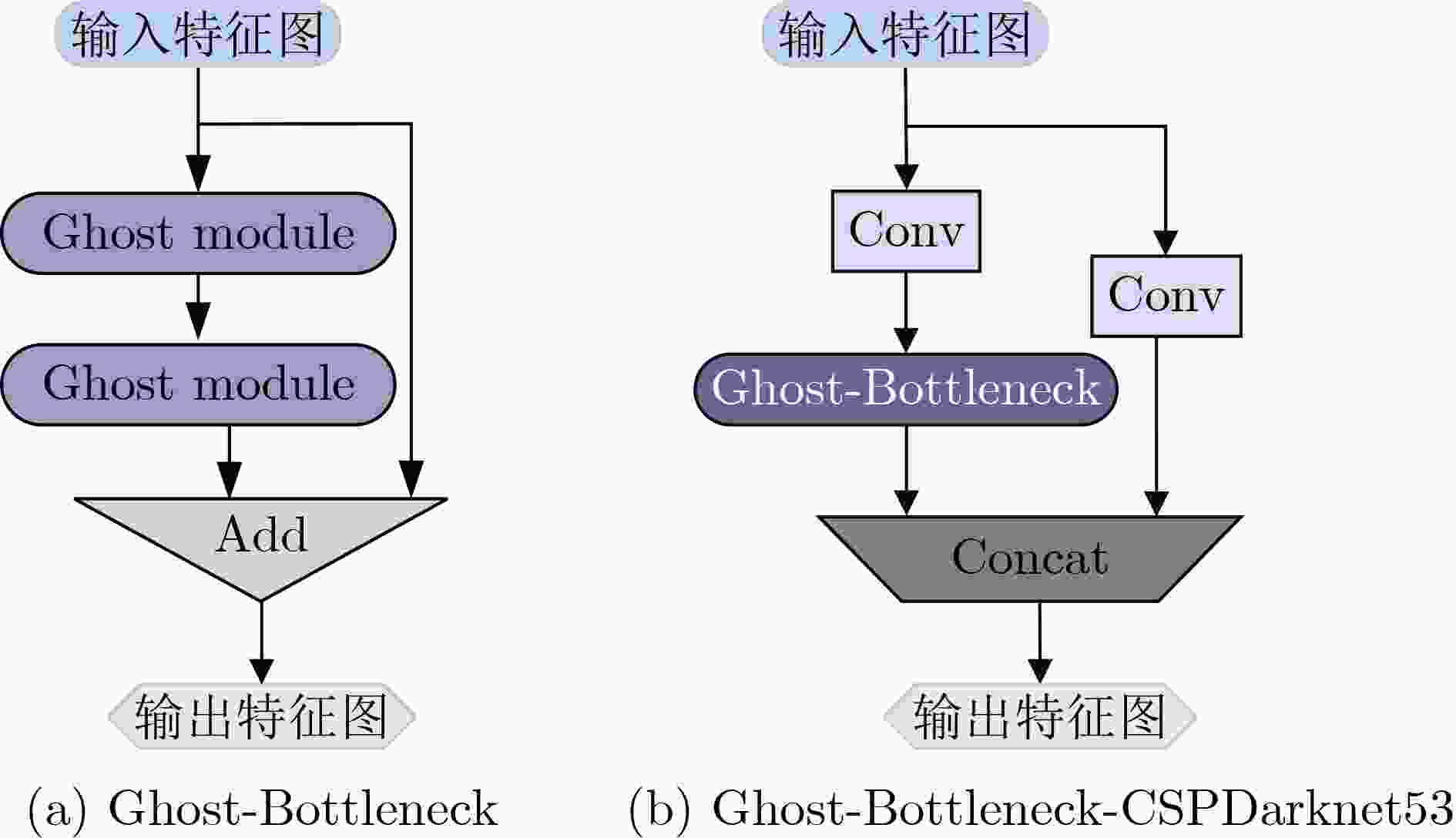

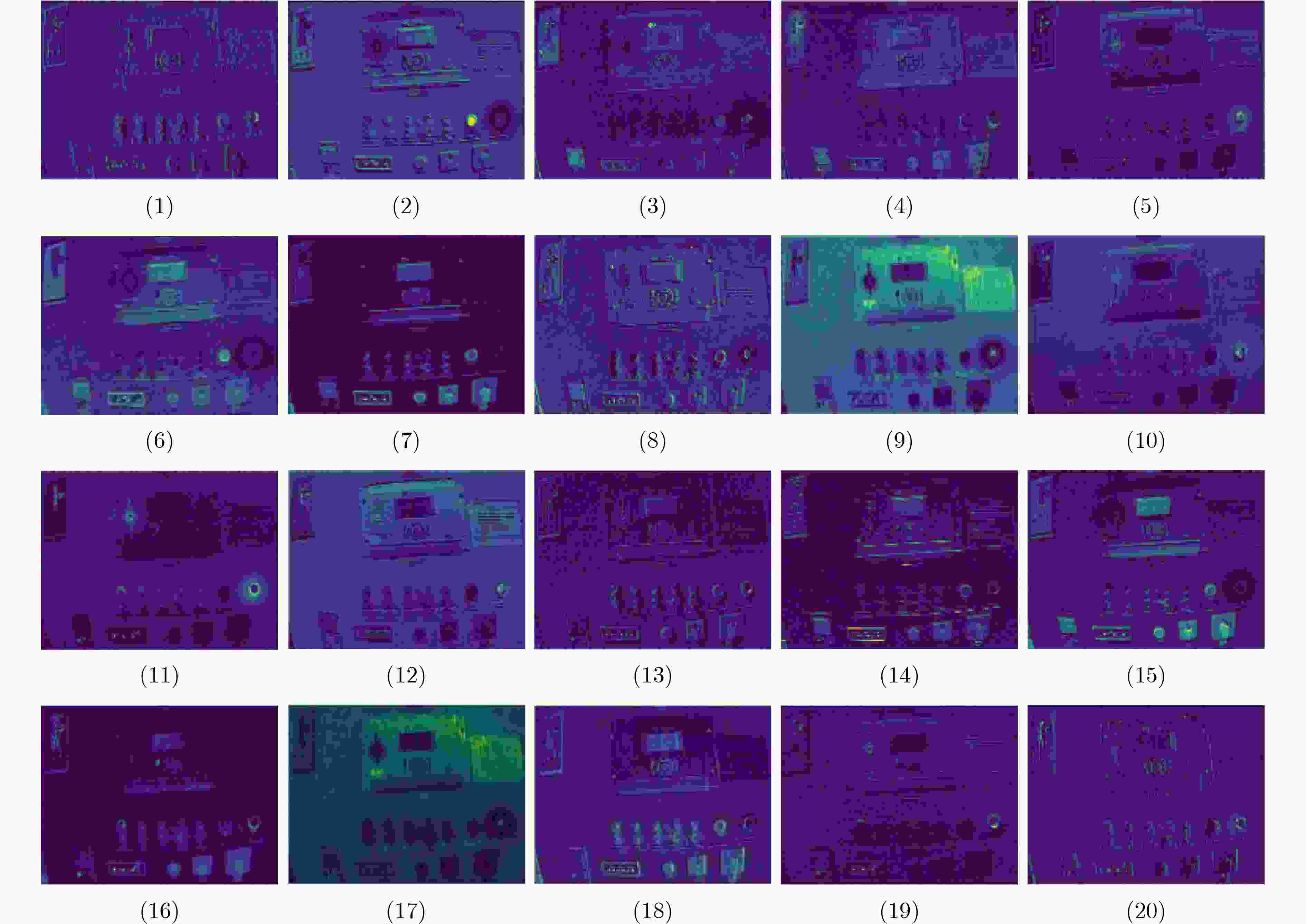

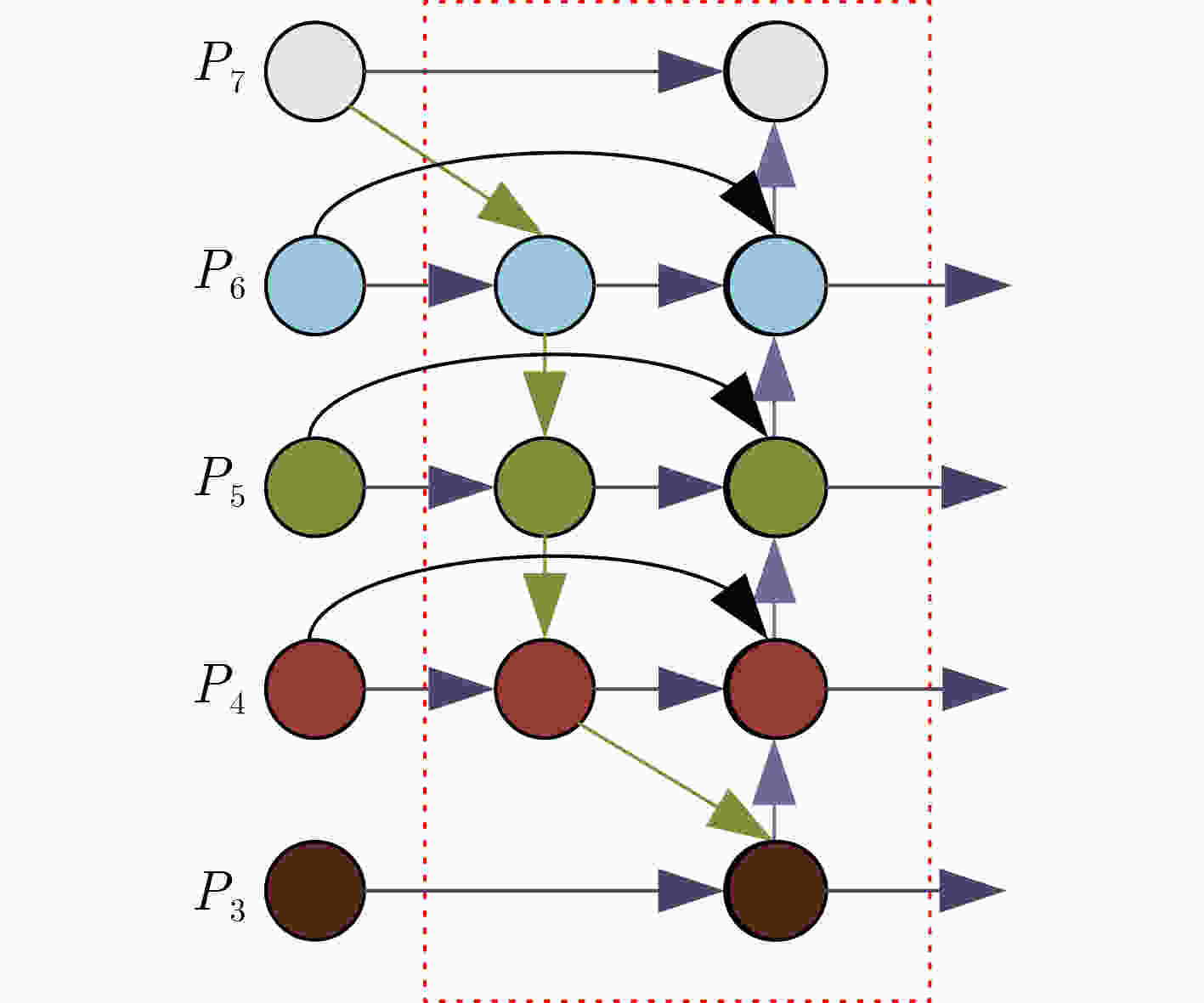

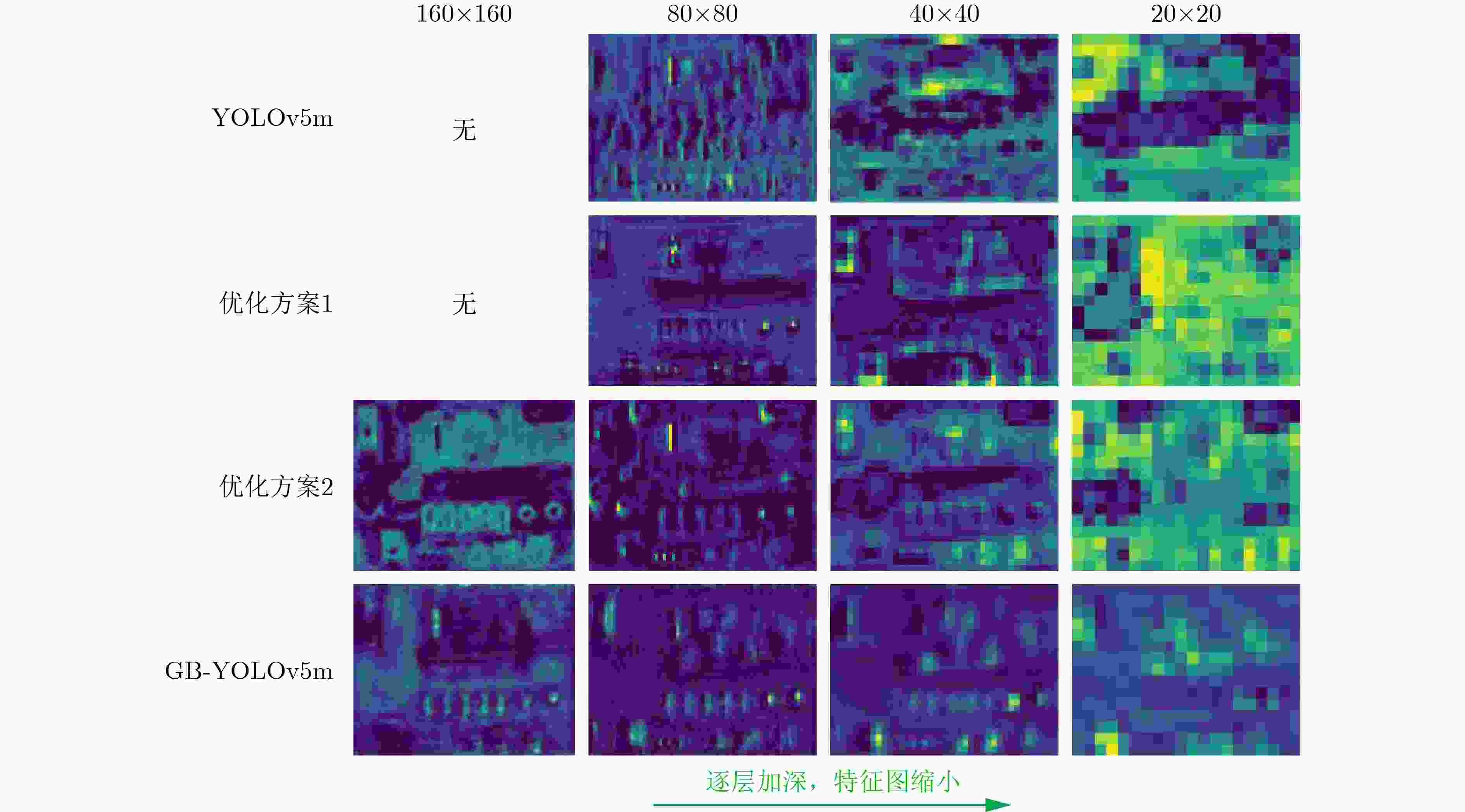

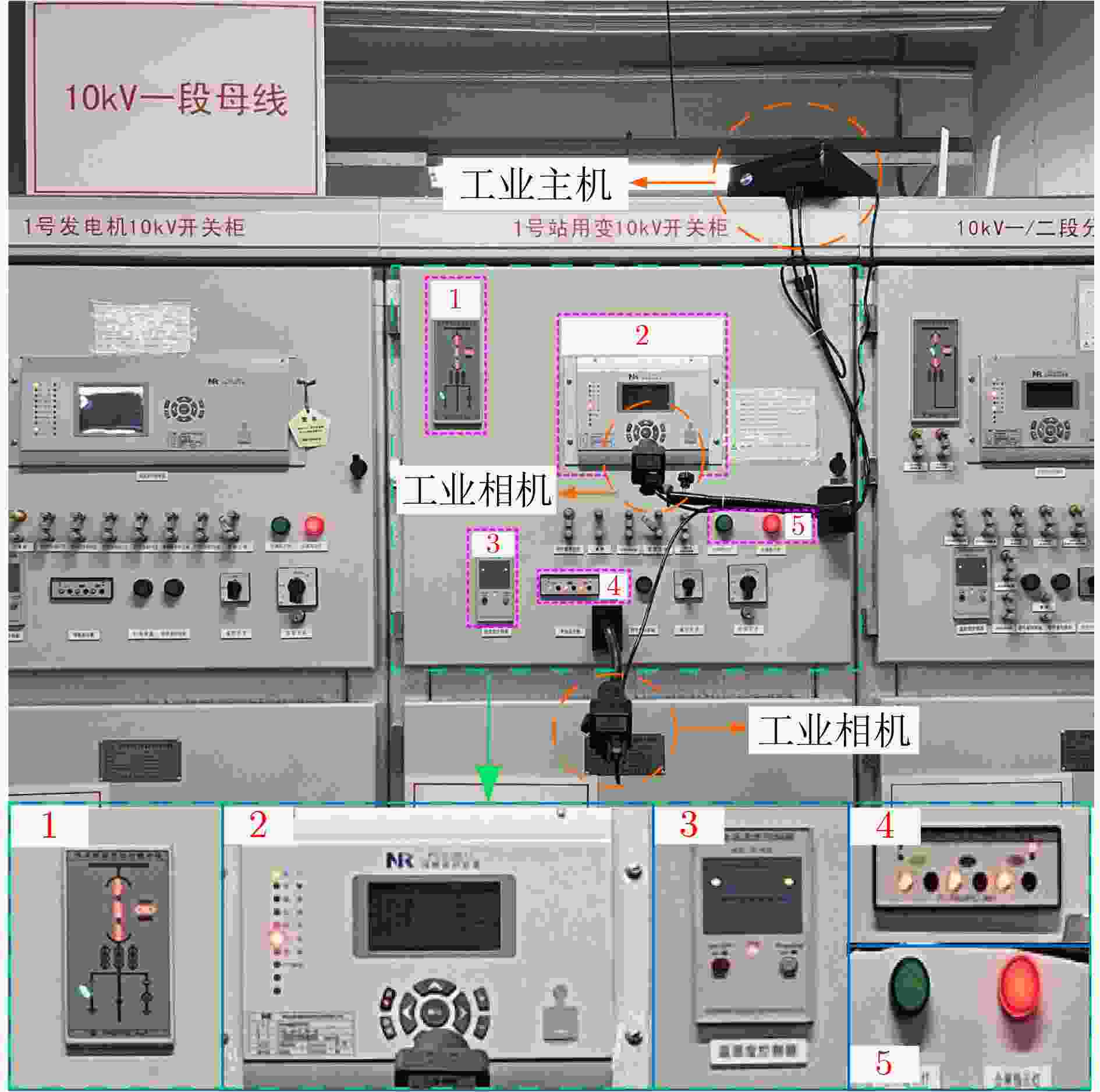

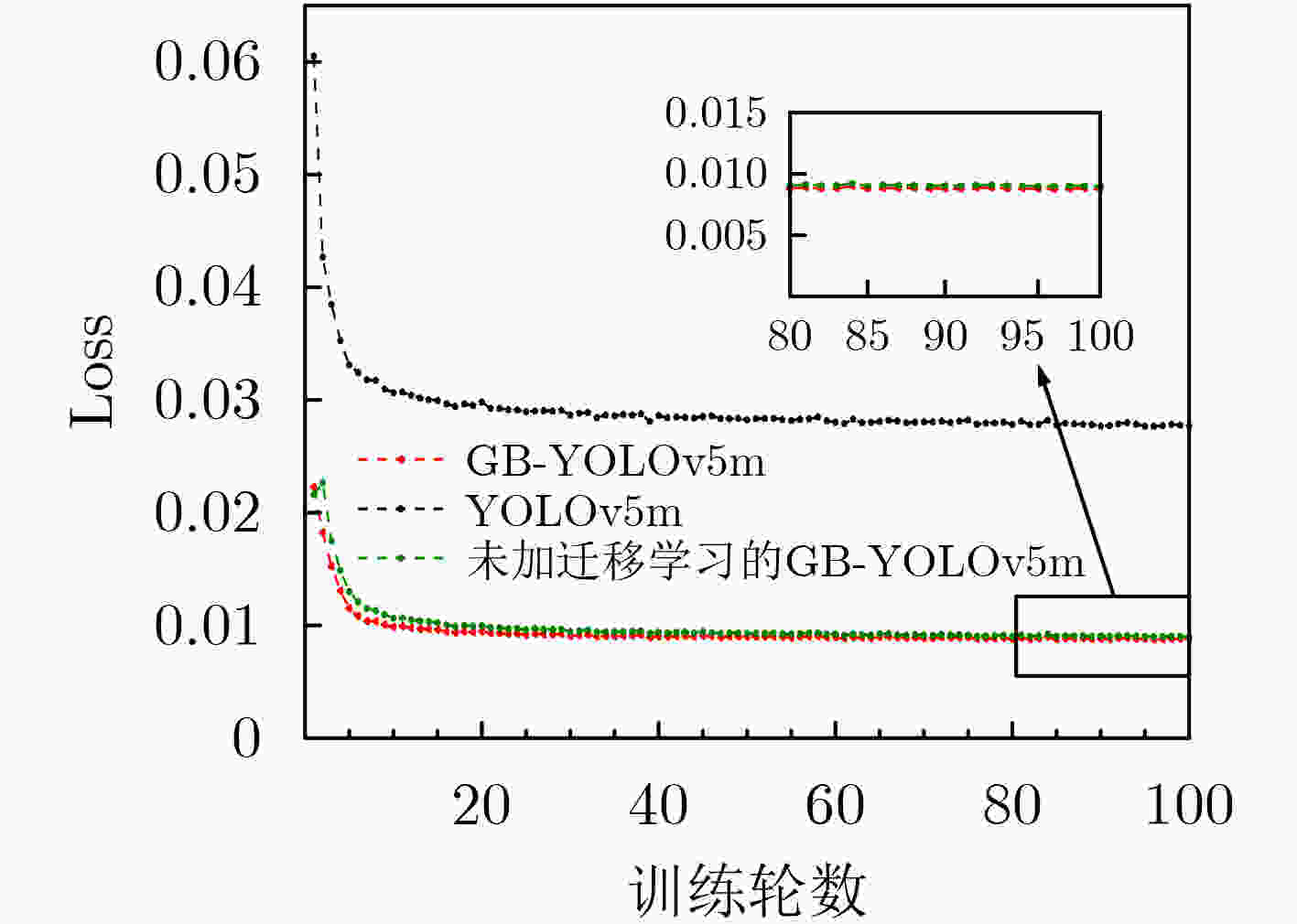

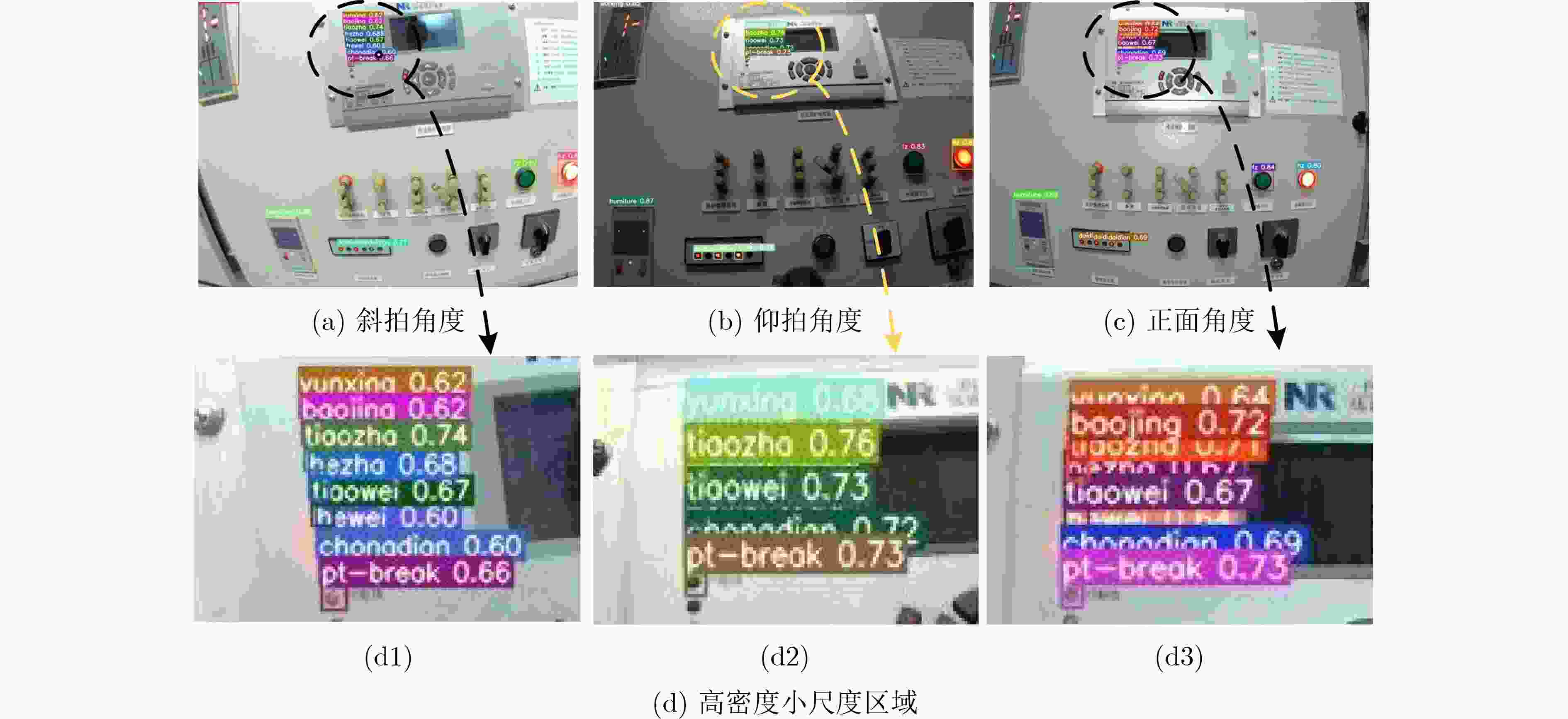

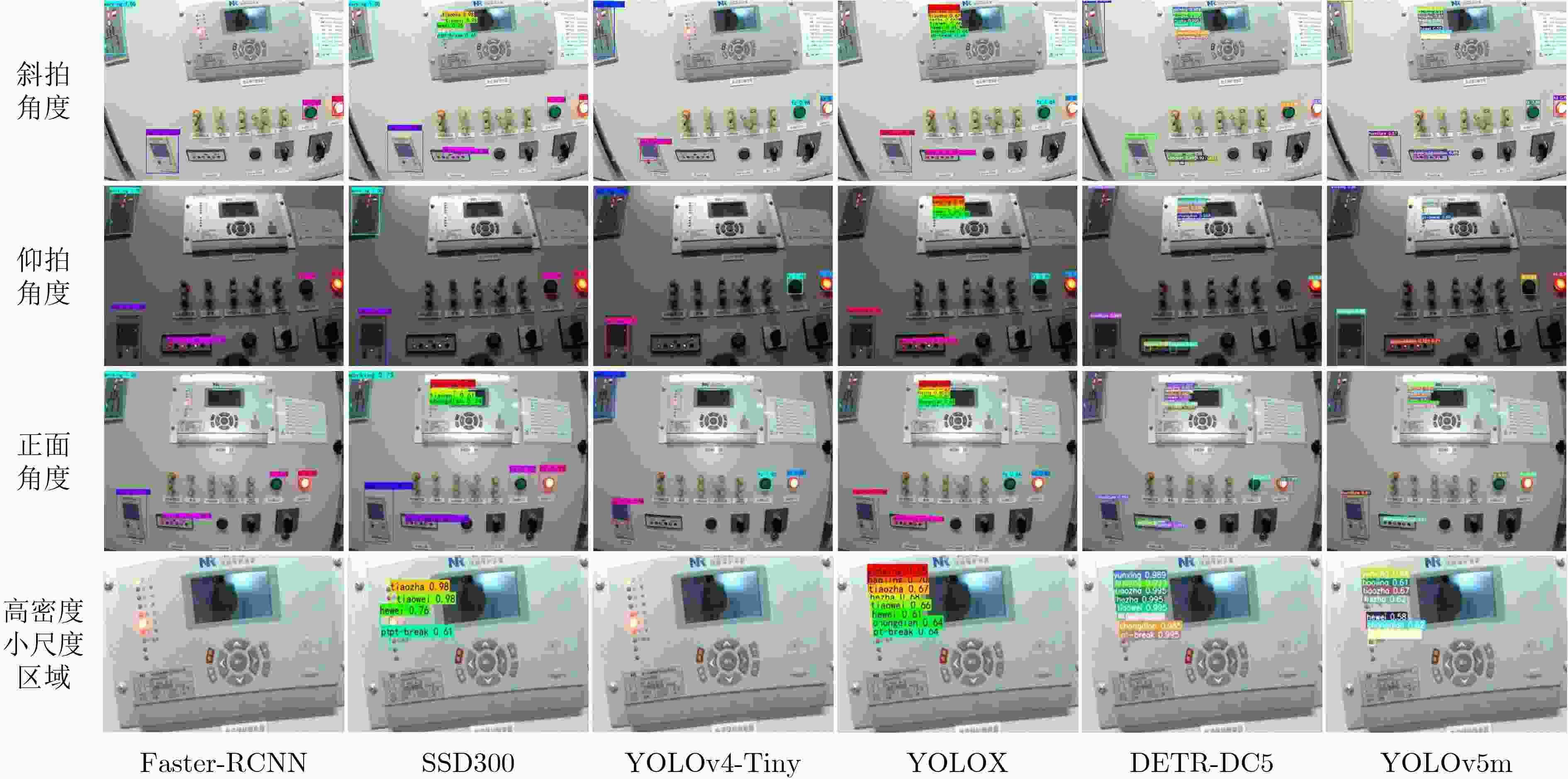

Abstract: The status lights and instruments on power switchgear are densely laid out, and many visually identical items appear at different positions on the panel. This places higher demands on edge-side image processing, both in detecting basic features such as target shape and color contrast and in performing recognition with a lightweight model. To address this, a Ghost-BiFPN-YOLOv5m (GB-YOLOv5m) method is proposed. First, a weighted Bi-directional Feature Pyramid Network (BiFPN) structure assigns different weights to the feature layers so that more useful feature information is propagated. Second, an additional detection-layer scale is introduced to improve the network's accuracy on small targets, addressing the difficulty of recognizing the small targets produced by the densely packed status lights. Third, the Ghost-Bottleneck structure replaces the more complex Bottleneck structure in the original backbone, making the model lightweight and thus better suited to edge deployment. Finally, image augmentation expands the characteristic features of the status lights and instruments available in the limited sample set, and transfer learning enables fast convergence. Tests on 10 kV switchgear show that the algorithm recognizes 16 classes of cabinet status lights and instruments with high accuracy, achieving a mean Average Precision (mAP) of 97.3% at 37.533 frames per second (fps). Compared with YOLOv5m, the model size is reduced by 37.04% while mAP increases by 10.2 percentage points. These results indicate that the proposed method substantially improves the detection of status lights and instruments and markedly improves lightweight recognition efficiency, which is of practical value for real-time verification of switchgear power states and for information exchange with digital twins.


Keywords:

- Small target detection
- Lightweight
- YOLOv5
- Instrument and status light detection
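The weighted BiFPN fusion described in the abstract can be illustrated with the "fast normalized fusion" rule from EfficientDet, where BiFPN was introduced: each input to a fusion node receives a learnable scalar weight that is clamped to be non-negative and normalized by the sum of all weights. The sketch below assumes that formulation (the abstract does not spell out the formula), treats each feature map as a flat list of floats already resized to a common shape, and uses an assumed stabilizer `eps=1e-4`. It shows the arithmetic only, not a trainable layer.

```python
from typing import List, Sequence


def fast_normalized_fusion(
    features: Sequence[Sequence[float]],
    weights: Sequence[float],
    eps: float = 1e-4,  # assumed small constant that keeps the denominator non-zero
) -> List[float]:
    """Fuse same-shaped feature maps with non-negative, normalized weights.

    O = sum_i w_i * I_i / (eps + sum_j w_j), with every w_i clamped to >= 0.
    """
    if not features or len(features) != len(weights):
        raise ValueError("need at least one input and exactly one weight per input")
    length = len(features[0])
    if any(len(f) != length for f in features):
        raise ValueError("inputs must already be resized to the same shape")
    clamped = [max(0.0, w) for w in weights]  # ReLU on the learnable weights
    denom = eps + sum(clamped)
    norm = [w / denom for w in clamped]  # each normalized weight lies in [0, 1)
    # Weighted element-wise sum across the input feature maps.
    return [sum(n * f[k] for n, f in zip(norm, features)) for k in range(length)]


# Two inputs, e.g. a same-level lateral feature and an upsampled top-down feature.
equal = fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], [1.0, 1.0])
skewed = fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], [3.0, 1.0])
print(equal)   # close to [2.0, 3.0]
print(skewed)  # close to [1.5, 2.5]
```

Because the weights are normalized, a fusion node can learn to lean on whichever resolution carries more useful detail, which is what the abstract means by giving the feature layers different weights.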
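The lightweighting step swaps Bottleneck blocks for Ghost-Bottleneck blocks. Their building block, the Ghost module from GhostNet, computes only a fraction of the output channels with an ordinary convolution and derives the rest with cheap depthwise operations. The parameter count below is a sketch under assumed settings (ratio `s=2`, depthwise kernel `d=3`, bias and BatchNorm ignored). The paper's exact layer configuration is not given here, so the numbers illustrate the mechanism rather than reproduce the reported model sizes.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a standard k x k convolution (bias and BatchNorm ignored)."""
    return c_in * c_out * k * k


def ghost_params(c_in: int, c_out: int, k: int, s: int = 2, d: int = 3) -> int:
    """Weights of a Ghost module that outputs c_out channels.

    A primary k x k convolution produces c_out // s "intrinsic" maps; a depthwise
    d x d convolution (one filter per generated map) produces the other
    (s - 1) * (c_out // s) "ghost" maps, and the two sets are concatenated.
    """
    if c_out % s:
        raise ValueError("c_out must be divisible by s")
    intrinsic = c_out // s
    primary = conv_params(c_in, intrinsic, k)
    cheap = (s - 1) * intrinsic * d * d
    return primary + cheap


plain = conv_params(256, 256, 3)
ghost = ghost_params(256, 256, 3)
print(plain, ghost, round(plain / ghost, 2))  # 589824 296064 1.99
```

For large channel counts the saving approaches a factor of `s`, because the depthwise term is tiny compared with the primary convolution.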
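The extra detection scale can be made concrete by counting grid cells. Stock YOLOv5 predicts at strides 8, 16 and 32. The abstract and Table 3 state that one more scale is added ("4 detection layers") but not which one, so this sketch assumes the common choice of a stride-4 (P2) head, a 640 x 640 input, and 3 anchors per cell. Finer cells give small status lights more candidate boxes, at the cost of more predictions per image.

```python
from typing import Dict, Sequence

DEFAULT_STRIDES = (8, 16, 32)       # stock YOLOv5 heads (P3, P4, P5)
FOUR_HEAD_STRIDES = (4, 8, 16, 32)  # assumed extra stride-4 (P2) head


def grid_sizes(img_size: int, strides: Sequence[int]) -> Dict[int, int]:
    """Side length of each head's prediction grid for a square input."""
    if any(img_size % s for s in strides):
        raise ValueError("input size must be divisible by every stride")
    return {s: img_size // s for s in strides}


def num_predictions(img_size: int, strides: Sequence[int], anchors: int = 3) -> int:
    """Candidate boxes per image: one per anchor per grid cell, summed over heads."""
    return sum(anchors * g * g for g in grid_sizes(img_size, strides).values())


print(grid_sizes(640, FOUR_HEAD_STRIDES))       # {4: 160, 8: 80, 16: 40, 32: 20}
print(num_predictions(640, DEFAULT_STRIDES))    # 25200
print(num_predictions(640, FOUR_HEAD_STRIDES))  # 102000
```

Under these assumptions the candidate count roughly quadruples, which is consistent with the fps drop from 43.243 to 37.355 that Table 3 reports when the fourth detection layer is added; the real cost also depends on the extra neck layers that feed the new head.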


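Table 1 Comparison of GB-YOLOv5m with other algorithms

| No. | Algorithm | P (%) | R (%) | mAP (%) | Param (MB) | fps |
|---|---|---|---|---|---|---|
| 1 | Faster R-CNN | 39.760 | 44.690 | 42.91 | 25.56 | 11.220 |
| 2 | SSD300 | 38.405 | 37.633 | 39.99 | 99.76 | 23.270 |
| 3 | YOLOv4-Tiny | 43.600 | 37.800 | 43.60 | 5.91 | 33.707 |
| 4 | YOLOX | 82.324 | 83.033 | 84.49 | 34.21 | 14.633 |
| 5 | DETR-DC5 | 28.622 | 35.411 | 29.18 | 60.22 | 6.970 |
| 6 | YOLOv5m | 88.900 | 91.000 | 87.10 | 21.11 | 43.243 |
| 7 | GB-YOLOv5m | 88.900 | 99.000 | 97.30 | 13.29 | 37.553 |

Table 2 Detection accuracy of GB-YOLOv5m and other algorithms on targets of different scales

| No. | Algorithm | AP_S (%) | AP_M (%) | AP_L (%) |
|---|---|---|---|---|
| 1 | SSD300 | 14.560 | 40.400 | 96.893 |
| 2 | Faster R-CNN | 0.784 | 93.980 | 99.370 |
| 3 | YOLOv4-Tiny | 2.624 | 66.583 | 98.728 |
| 4 | YOLOX | 74.560 | 98.987 | 97.346 |
| 5 | DETR-DC5 | 30.611 | 28.942 | 25.440 |
| 6 | YOLOv5m | 77.600 | 99.533 | 99.225 |
| 7 | GB-YOLOv5m | 95.822 | 99.400 | 99.200 |

Table 3 Ablation experiments based on the YOLOv5m model

| No. | Configuration | P (%) | R (%) | AP_S (%) | AP_M (%) | AP_L (%) | mAP (%) | Param (MB) | fps |
|---|---|---|---|---|---|---|---|---|---|
| 1 | YOLOv5m | 88.9 | 91 | 77.600 | 99.533 | 99.225 | 87.1 | 21.11 | 43.243 |
| 2 | YOLOv5m + Ghost | 88.9 | 90 | 76.056 | 99.533 | 99.050 | 86.2 | 11.24 | 46.180 |
| 3 | YOLOv5m + BiFPN | 90.8 | 91 | 79.010 | 99.400 | 99.400 | 87.9 | 23.62 | 42.517 |
| 4 | YOLOv5m + 4 detection layers | 88.5 | 99 | 94.880 | 99.466 | 98.900 | 96.7 | 23.17 | 37.355 |
| 5 | YOLOv5m + Ghost + BiFPN | 89.8 | 91 | 78.733 | 99.433 | 99.525 | 87.8 | 12.82 | 43.975 |
| 6 | YOLOv5m + Ghost + 4 detection layers | 88.1 | 99 | 94.611 | 99.433 | 99.150 | 96.6 | 12.09 | 39.124 |
| 7 | YOLOv5m + BiFPN + 4 detection layers | 90.8 | 99 | 96.200 | 99.400 | 99.275 | 97.6 | 25.49 | 36.364 |
| 8 | YOLOv5m + Ghost + BiFPN + 4 detection layers (GB-YOLOv5m) | 88.9 | 99 | 95.822 | 99.400 | 99.200 | 97.3 | 13.29 | 37.553 |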
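The headline comparisons in the abstract can be re-derived from Table 1. The short calculation below uses only the YOLOv5m and GB-YOLOv5m rows. It confirms the 37.04% size reduction, shows that the 10.2 mAP gain is an absolute difference in percentage points (about 11.7% in relative terms), and converts fps into per-frame latency. The variable names are illustrative.

```python
def pct_change(old: float, new: float) -> float:
    """Signed relative change from old to new, in percent."""
    return (new - old) / old * 100.0


def ms_per_frame(fps: float) -> float:
    """Per-frame latency implied by a throughput in frames per second."""
    return 1000.0 / fps


# Rows copied from Table 1.
yolov5m = {"map": 87.10, "size_mb": 21.11, "fps": 43.243}
gb_yolov5m = {"map": 97.30, "size_mb": 13.29, "fps": 37.553}

size_reduction = -pct_change(yolov5m["size_mb"], gb_yolov5m["size_mb"])
map_gain_points = gb_yolov5m["map"] - yolov5m["map"]
map_gain_relative = pct_change(yolov5m["map"], gb_yolov5m["map"])

print(round(size_reduction, 2))     # 37.04
print(round(map_gain_points, 1))    # 10.2
print(round(map_gain_relative, 1))  # 11.7
print(round(ms_per_frame(yolov5m["fps"]), 1), round(ms_per_frame(gb_yolov5m["fps"]), 1))  # 23.1 26.6
```

The roughly 3.5 ms increase in per-frame latency is the trade-off for the accuracy gain.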
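Tables 2 and 3 split AP by object size (AP_S, AP_M, AP_L) without stating the size thresholds. A common convention, used by the COCO evaluation protocol, classifies a box by its pixel area: small below 32², medium below 96², large otherwise. The sketch assumes those thresholds and plain bounding-box area (COCO itself uses the annotation's area field and handles the exact boundaries slightly differently), and shows how ground-truth boxes would be routed to each bucket. The example boxes are hypothetical.

```python
from collections import Counter
from typing import Iterable, Tuple

SMALL_MAX_AREA = 32 ** 2   # assumed COCO-style threshold
MEDIUM_MAX_AREA = 96 ** 2  # assumed COCO-style threshold


def size_bucket(width: float, height: float) -> str:
    """Size class of a box from its pixel area."""
    area = width * height
    if area < SMALL_MAX_AREA:
        return "small"
    if area < MEDIUM_MAX_AREA:
        return "medium"
    return "large"


def count_buckets(boxes: Iterable[Tuple[float, float]]) -> Counter:
    """Count (width, height) boxes per size class."""
    return Counter(size_bucket(w, h) for w, h in boxes)


# Hypothetical boxes: two small indicator lights, one meter face, one large panel.
print(count_buckets([(12, 12), (20, 18), (60, 60), (150, 120)]))
```

In Table 3, AP_S is the metric that changes most (77.600 to 95.822), which fits the abstract's claim that the extra detection layer targets the small status lights.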
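The mAP column is the mean, over the 16 target classes, of each class's average precision (AP), the area under its precision-recall curve. The sketch below computes AP with all-point interpolation (the PASCAL VOC 2010+ convention), assuming detections have already been matched to ground truth at some IoU threshold so that each one is flagged as a true or false positive. The paper does not state its IoU threshold or interpolation scheme, and detection codebases differ in how they integrate the curve, so this illustrates the metric rather than reproducing the tables. The example class and detections are hypothetical.

```python
from typing import List, Sequence


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class.

    scores: confidence of each detection; is_tp: whether it matched a ground truth.
    """
    if num_gt <= 0 or len(scores) != len(is_tp):
        raise ValueError("need num_gt > 0 and one TP/FP flag per detection")
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: best precision achievable at this recall or higher.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum rectangle areas wherever recall increases.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_ap(per_class_ap: Sequence[float]) -> float:
    """mAP: unweighted mean of per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


# Hypothetical class: 2 ground-truth lights, 3 detections (TP, FP, TP by confidence).
ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
print(round(ap, 4))  # 0.8333
```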

