Building Extraction from Satellite Imagery Based on Footprint Map and Bidirectional Connection Map


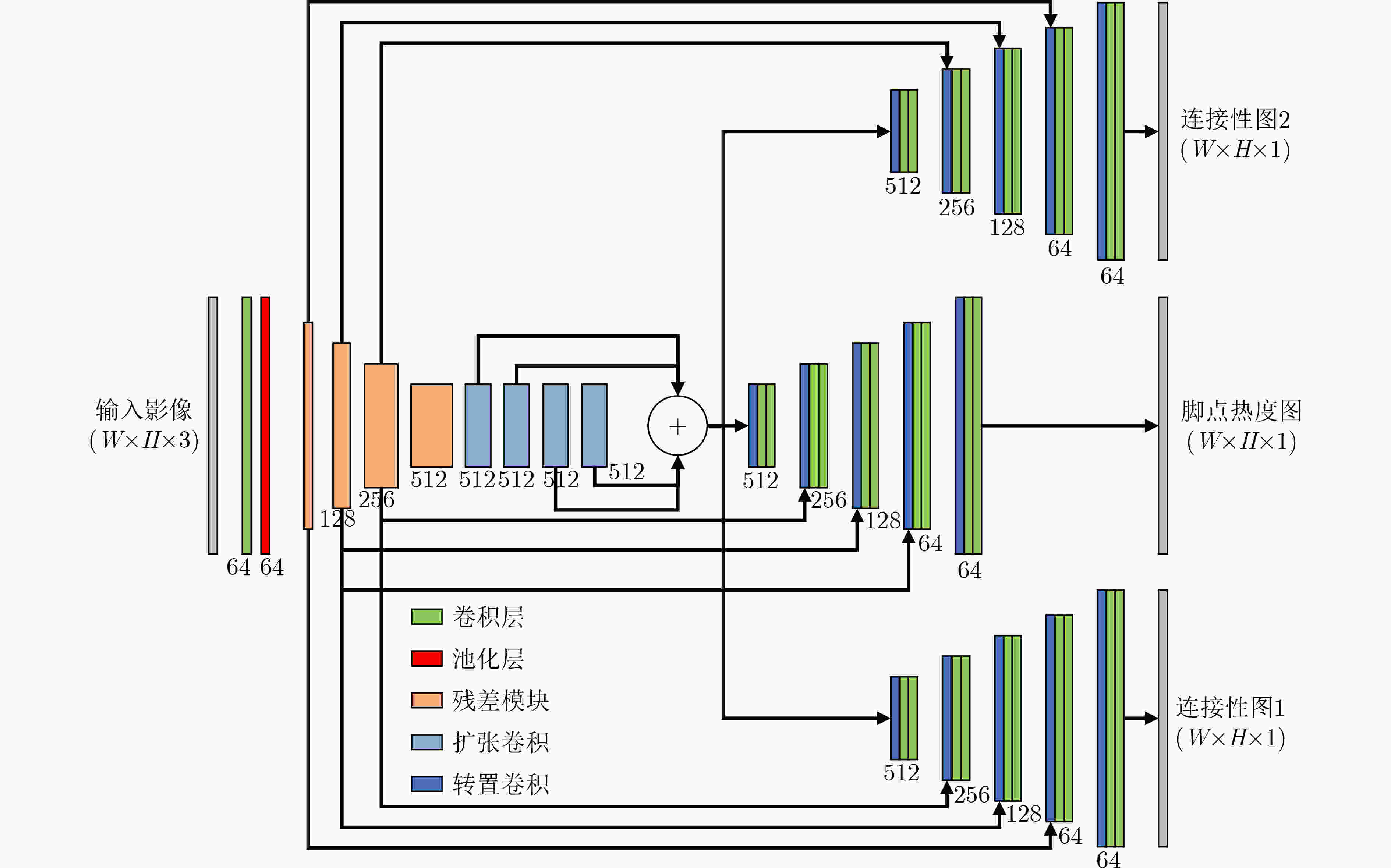

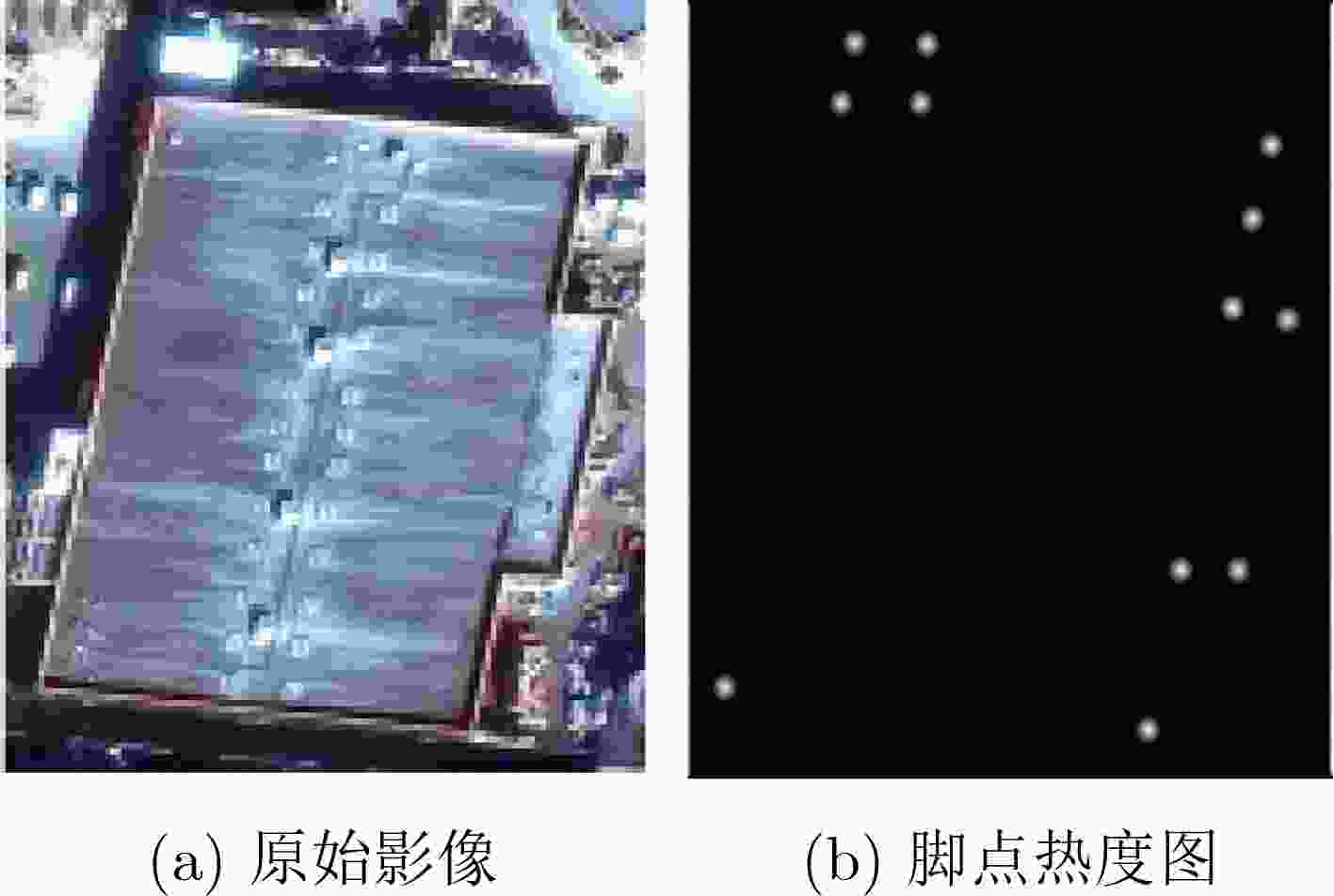

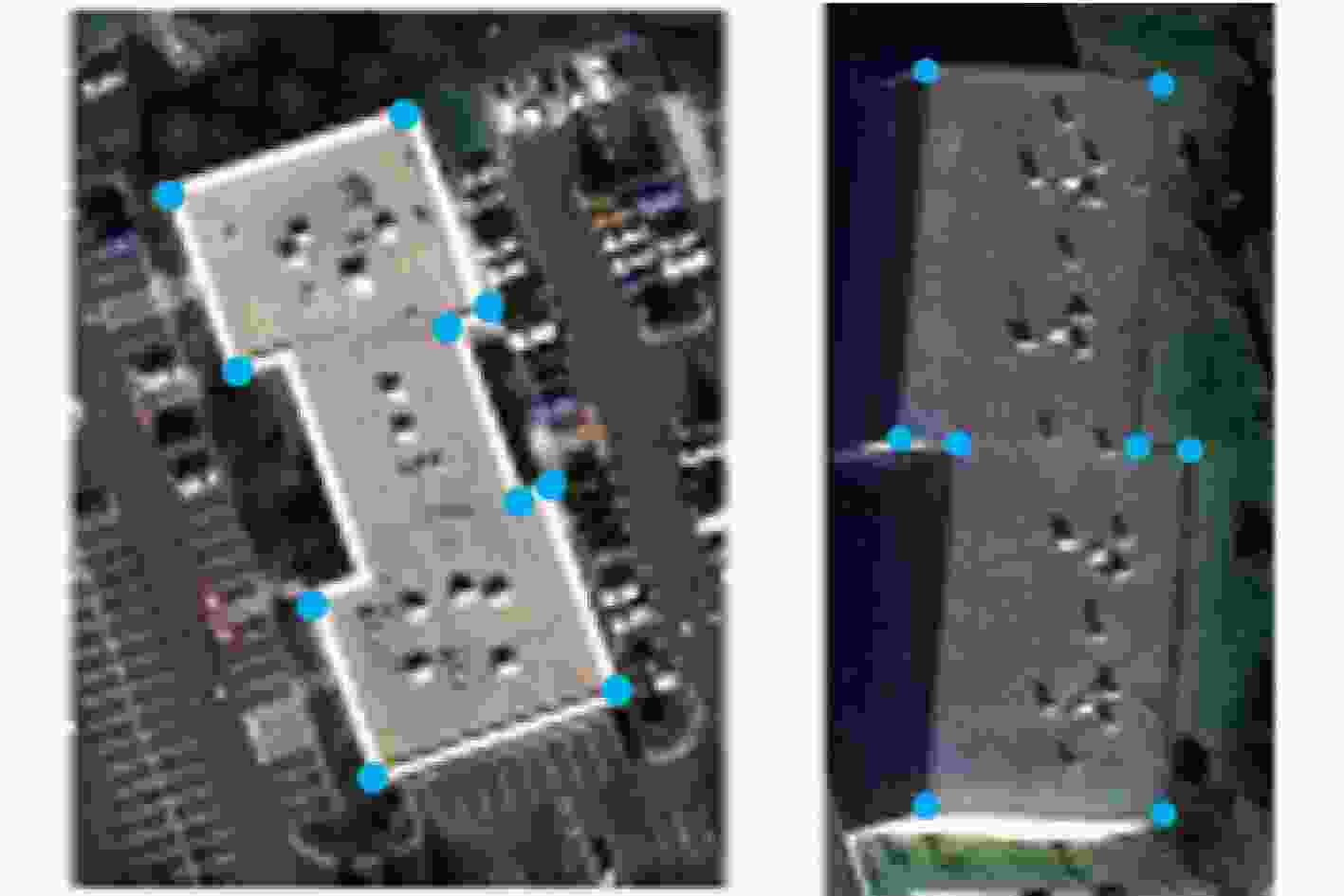

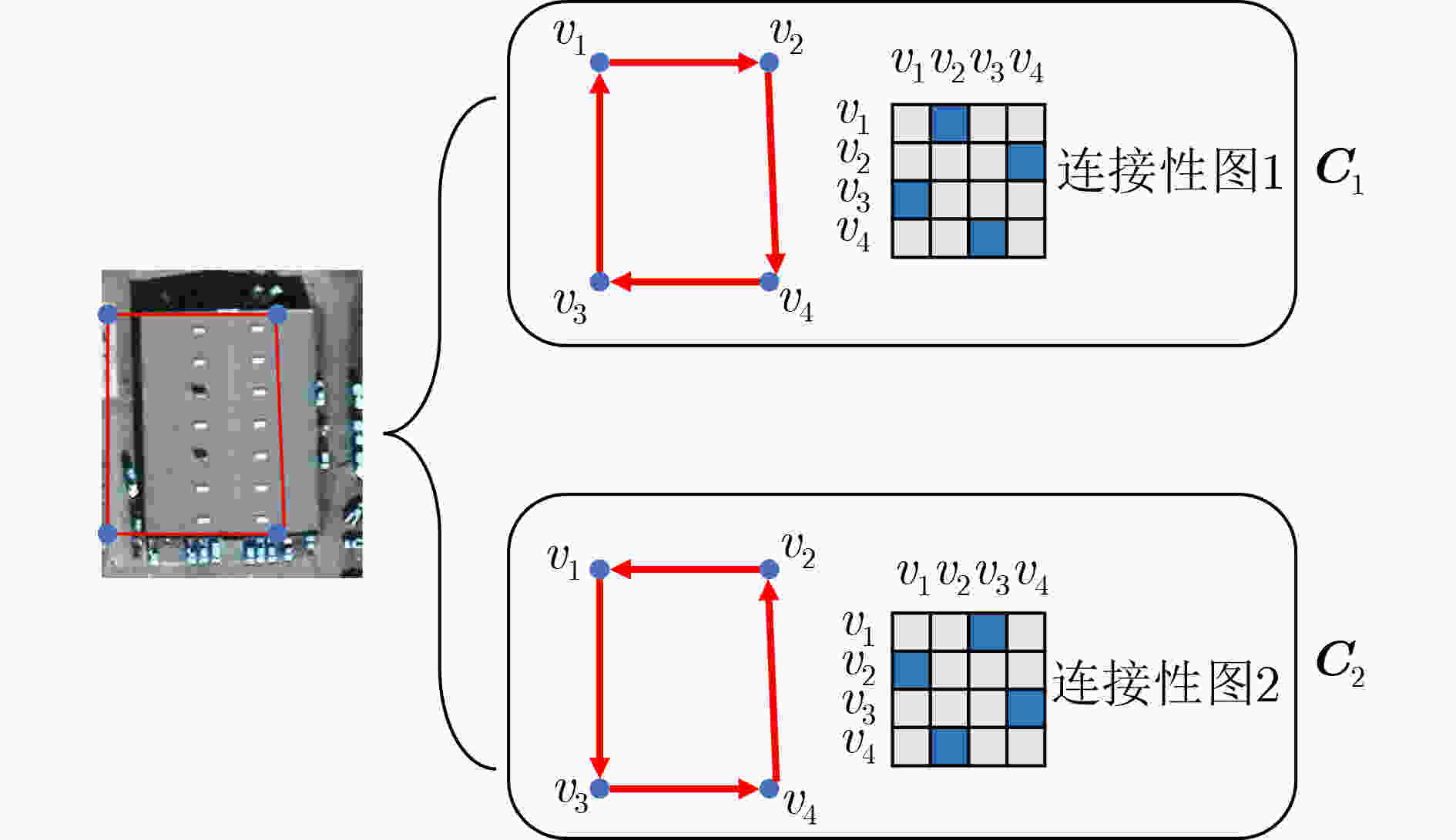

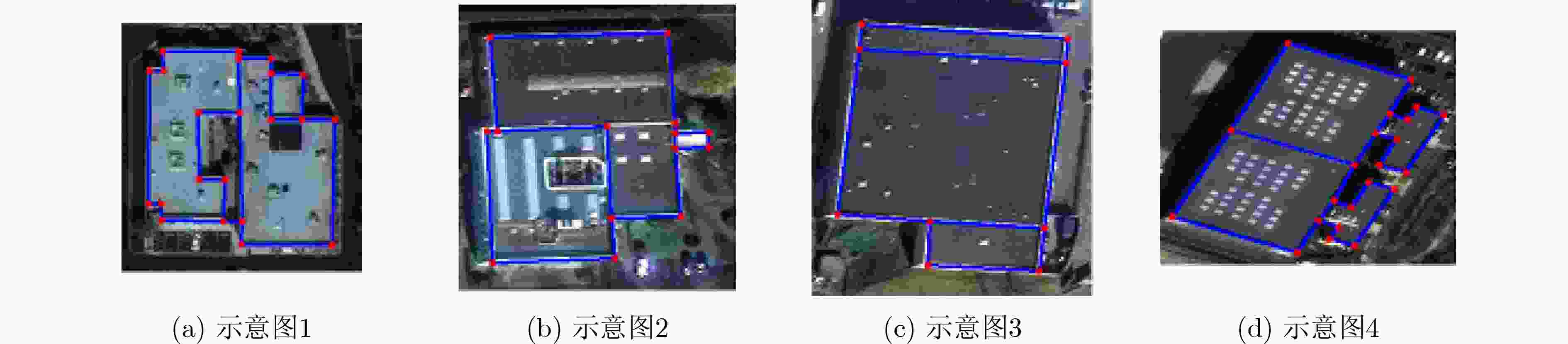

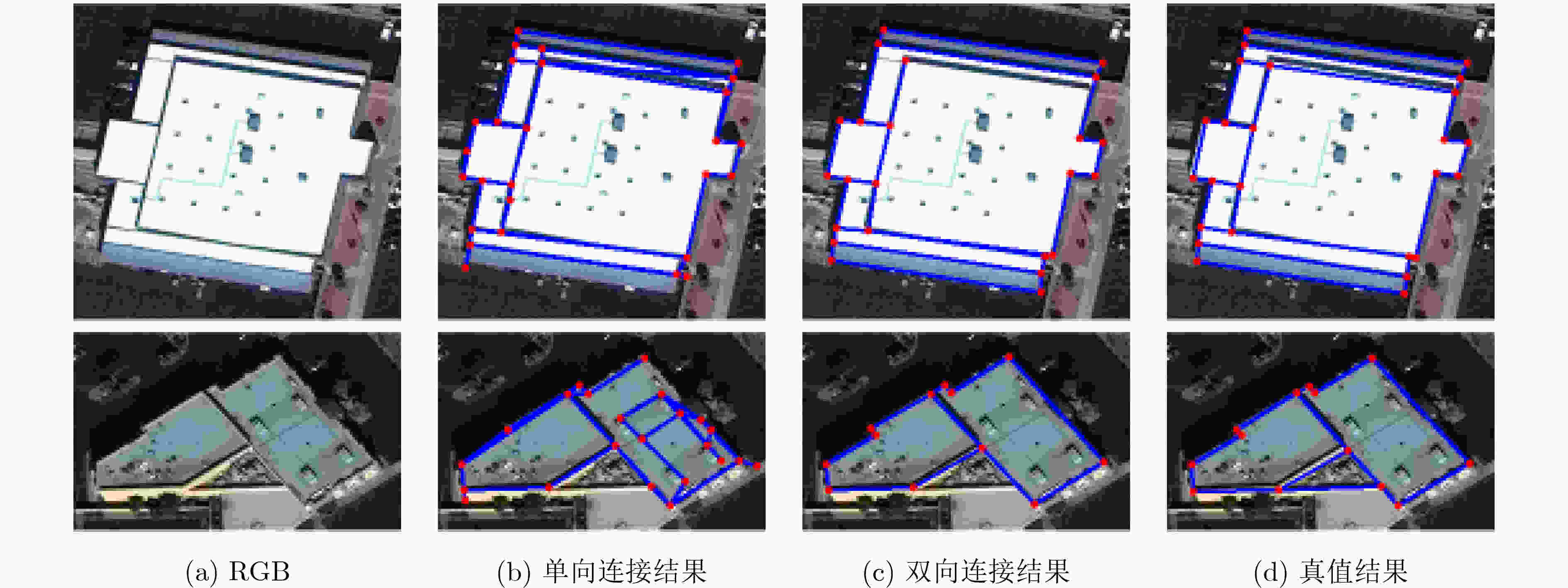

Abstract: Most current deep-learning methods for extracting buildings from satellite imagery rely on semantic segmentation, classifying each pixel as building or background. Because these methods ignore the geometric properties of buildings, they struggle to extract buildings precisely. To incorporate this geometric information, this paper proposes a building outline extraction method based on a footprint heatmap and a bidirectional connection map. The method is a multi-branch deep convolutional network that predicts the footprint points of buildings and the connectivity between them. One branch predicts the footprint heatmap, and the Non-Maximum Suppression (NMS) algorithm is applied to it to obtain the pixel coordinates of the footprint points. Two further branches predict the forward and backward connectivity between footprint points. This bidirectional connection map is used to decide whether each pair of footprint points is connected, and linking the connected pairs yields the final building outline. Experiments on the Buildings2Vec dataset show that the proposed method has an advantage over previous methods in building extraction from satellite imagery.
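The two post-processing steps named in the abstract (NMS on the footprint heatmap, then a connectivity decision from two directional maps) can be illustrated with a minimal pure-Python sketch. The abstract does not say how the connection maps are encoded or how the two directions are combined, so everything below is an assumption made for illustration: the names `heatmap_nms`, `mean_along_segment`, and `connect_points` are hypothetical, each connection map is assumed to be a per-pixel score map, and a pair is assumed to be linked only when the average score along the segment reaches a threshold in both directions.

```python
from typing import List, Tuple

Grid = List[List[float]]   # 2-D map indexed as grid[row][col]
Point = Tuple[int, int]    # (row, col)


def heatmap_nms(heatmap: Grid, threshold: float = 0.5, radius: int = 1) -> List[Point]:
    """Keep pixels that reach `threshold` and are the maximum of their local window.

    Ties inside a window are all kept; a real pipeline would break them.
    """
    rows, cols = len(heatmap), len(heatmap[0])
    peaks: List[Point] = []
    for r in range(rows):
        for c in range(cols):
            value = heatmap[r][c]
            if value < threshold:
                continue
            window_max = max(
                heatmap[rr][cc]
                for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                for cc in range(max(0, c - radius), min(cols, c + radius + 1))
            )
            if value >= window_max:
                peaks.append((r, c))
    return peaks


def mean_along_segment(conn_map: Grid, start: Point, end: Point, samples: int = 10) -> float:
    """Average the map values sampled on the straight segment from start to end."""
    total = 0.0
    for i in range(samples + 1):
        t = i / samples
        r = round(start[0] + t * (end[0] - start[0]))
        c = round(start[1] + t * (end[1] - start[1]))
        total += conn_map[r][c]
    return total / (samples + 1)


def connect_points(
    points: List[Point],
    forward_map: Grid,
    backward_map: Grid,
    threshold: float = 0.5,
) -> List[Tuple[int, int]]:
    """Link a pair only if BOTH directions support it (assumed decision rule)."""
    edges = []
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            forward = mean_along_segment(forward_map, points[i], points[j])
            backward = mean_along_segment(backward_map, points[j], points[i])
            if forward >= threshold and backward >= threshold:
                edges.append((i, j))
    return edges
```

Requiring agreement in both directions is one plausible reason a bidirectional map could reject spurious links that a single map would accept. The thresholds, window radius, and sampling density are placeholders, not values from the paper.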


Key words:

- Satellite imagery

- Deep learning

- Footprint prediction

- Bidirectional connection map


表 1 双向连接性效果定量指标

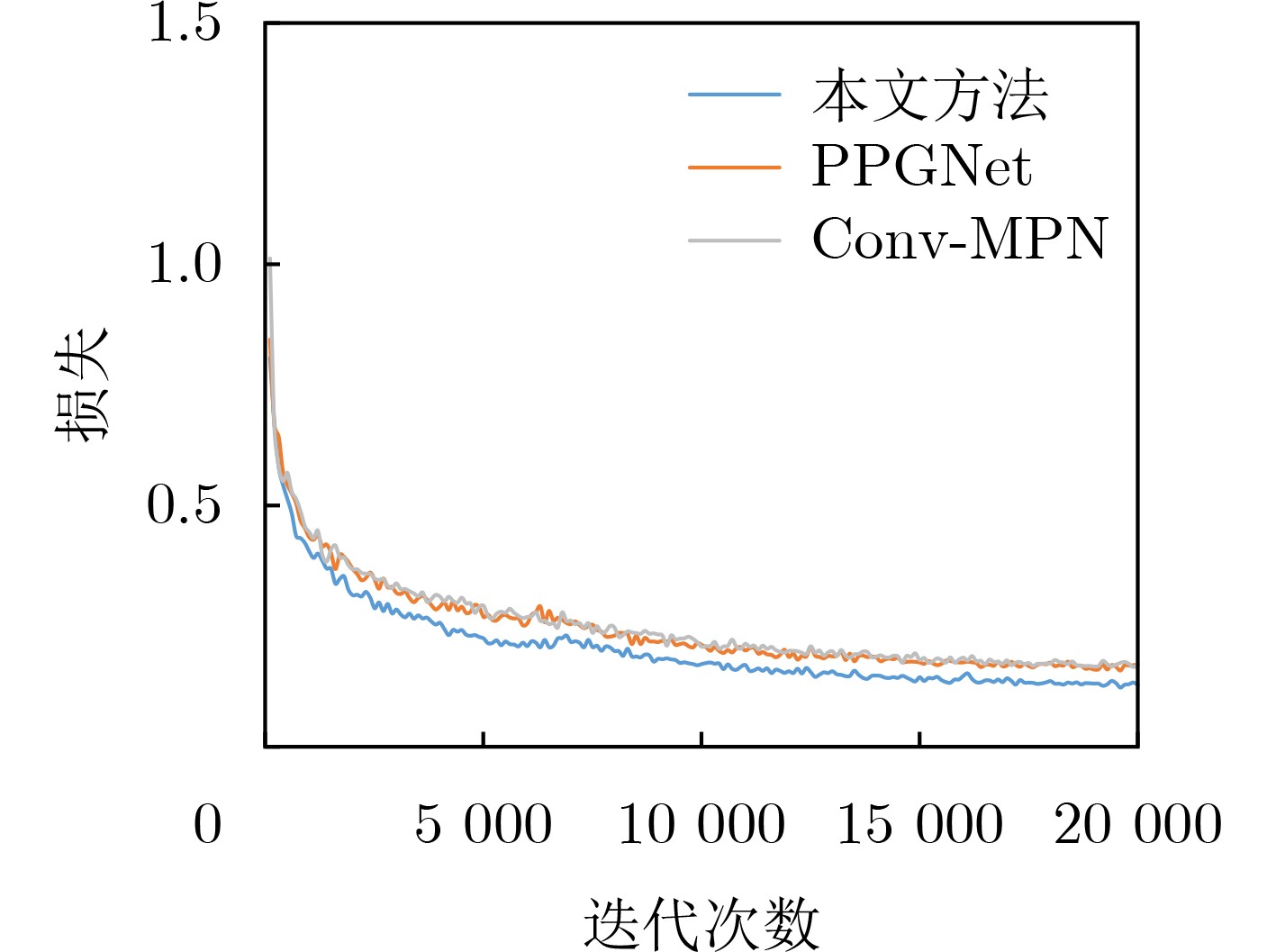

方法 ${P}_{{\rm{c}}}$ $ {R}_{{\rm{c}}} $ $ {{\rm{F}}1}_{{\rm{c}}} $ $ {P}_{{\rm{e}}} $ $ {R}_{{\rm{e}}} $ $ {{\rm{F}}1}_{{\rm{e}}} $ 单向连接性效果 0.790 0.832 0.810 0.575 0.661 0.615 双向连接性效果 0.803 0.846 0.824 0.591 0.682 0.633 表 2 本文方法与其他方法定量化对比

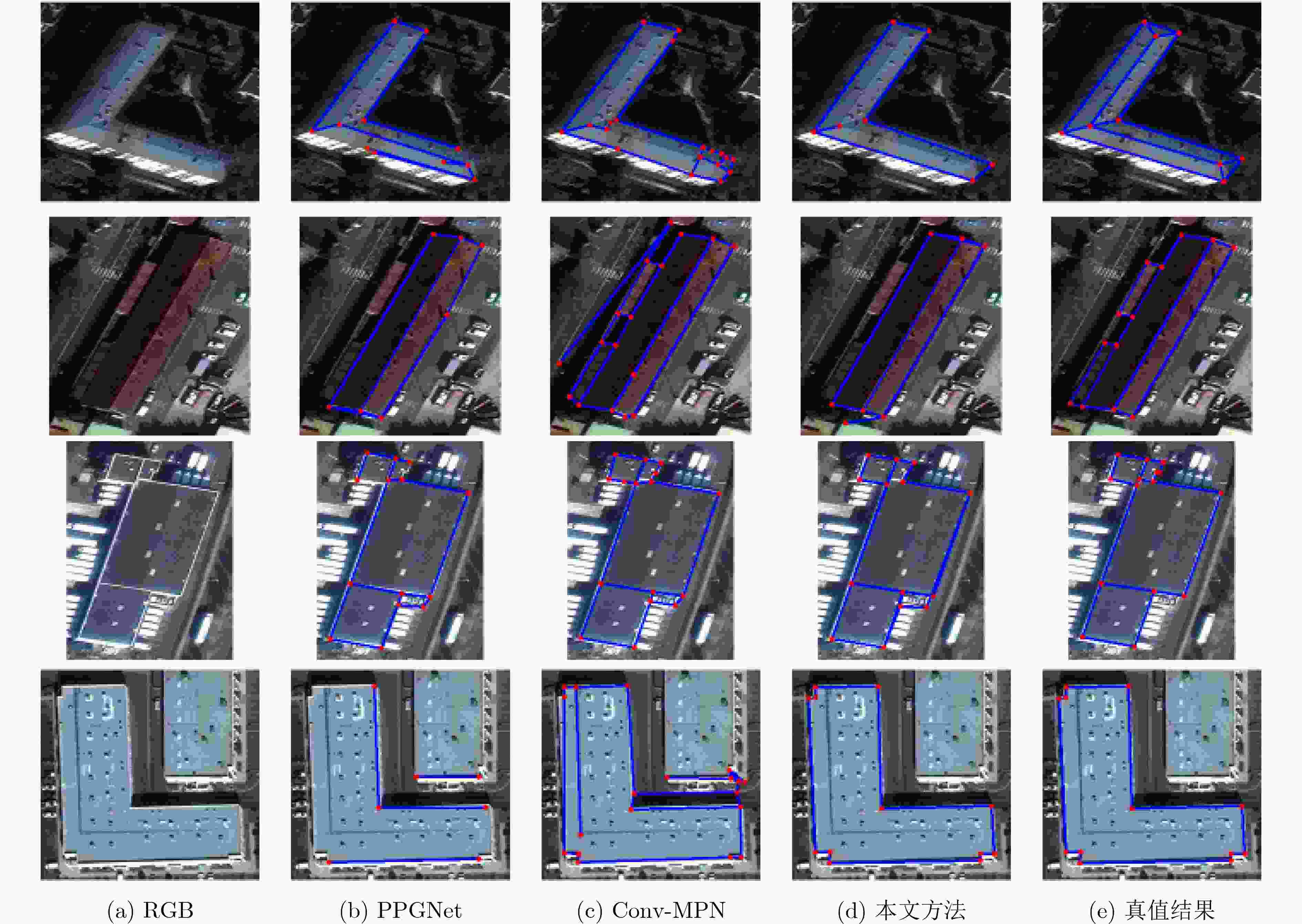

方法 $ {P}_{{\rm{c}}} $ $ {R}_{{\rm{c}}} $ $ {{\rm{F}}1}_{{\rm{c}}} $ $ {P}_{{\rm{e}}} $ $ {R}_{{\rm{e}}} $ $ {{\rm{F}}1}_{{\rm{e}}} $ PPGNet 0.893 0.694 0.781 0.737 0.501 0.596 Conv-MPN 0.779 0.802 0.790 0.569 0.607 0.587 本文方法 0.803 0.846 0.824 0.591 0.682 0.633 -
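The F1 columns in the two tables above can be cross-checked against the precision and recall columns. The sketch below assumes F1 is the usual harmonic mean of precision and recall, which the page does not state explicitly. The helper name `f1_score` and the dictionary layout are illustrative only, and the subscripts c and e are carried over from the tables without interpretation.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Rows copied from Tables 1 and 2: (P_c, R_c, F1_c, P_e, R_e, F1_e).
reported = {
    "Unidirectional": (0.790, 0.832, 0.810, 0.575, 0.661, 0.615),
    "Bidirectional / proposed": (0.803, 0.846, 0.824, 0.591, 0.682, 0.633),
    "PPGNet": (0.893, 0.694, 0.781, 0.737, 0.501, 0.596),
    "Conv-MPN": (0.779, 0.802, 0.790, 0.569, 0.607, 0.587),
}

# Largest gap between a recomputed F1 and the value printed in the tables.
# P and R are themselves rounded to three decimals, so a gap of up to
# about 0.001 is expected from rounding alone.
max_gap = 0.0
for name, (p_c, r_c, f1_c, p_e, r_e, f1_e) in reported.items():
    gap_c = abs(f1_score(p_c, r_c) - f1_c)
    gap_e = abs(f1_score(p_e, r_e) - f1_e)
    max_gap = max(max_gap, gap_c, gap_e)
    print(f"{name}: gap_c={gap_c:.4f}, gap_e={gap_e:.4f}")
```

Under this assumption the recomputed values stay within rounding distance of the reported ones. The tables also show that PPGNet has the highest precision in both groups, while the proposed method has the highest recall and F1.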

[1] XU Wang, YOU Xiong, ZHANG Weiwei, et al. Method of building scene structure extraction based on 2D map and its application in urban augmented reality[J]. Acta Geodaetica et Cartographica Sinica, 2020, 49(12): 1619–1629. (in Chinese)

[2] SUN Yao, MONTAZERI S, WANG Yuanyuan, et al. Automatic registration of a single SAR image and GIS building footprints in a large-scale urban area[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2020, 170: 1–14. doi: 10.1016/j.isprsjprs.2020.09.016

[3] WANG Jun, YANG Xiucheng, QIN Xuebin, et al. An efficient approach for automatic rectangular building extraction from very high resolution optical satellite imagery[J]. IEEE Geoscience and Remote Sensing Letters, 2015, 12(3): 487–491. doi: 10.1109/LGRS.2014.2347332

[4] COTE M and SAEEDI P. Automatic rooftop extraction in nadir aerial imagery of suburban regions using corners and variational level set evolution[J]. IEEE Transactions on Geoscience and Remote Sensing, 2013, 51(1): 313–328. doi: 10.1109/TGRS.2012.2200689

[5] MANNO-KOVÁCS A and OK A O. Building detection from monocular VHR images by integrated urban area knowledge[J]. IEEE Geoscience and Remote Sensing Letters, 2015, 12(10): 2140–2144. doi: 10.1109/LGRS.2015.2452962

[6] NGO T T, COLLET C, and MAZET V. Automatic rectangular building detection from VHR aerial imagery using shadow and image segmentation[C]. 2015 IEEE International Conference on Image Processing, Quebec City, Canada, 2015: 1483–1487.

[7] SUN Yao, HUA Yuansheng, MOU Lichao, et al. CG-Net: Conditional GIS-aware network for individual building segmentation in VHR SAR images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5201215. doi: 10.1109/TGRS.2020.3043089

[8] CHEN Ziyi, LI Dilong, FAN Wentao, et al. Self-attention in reconstruction bias U-Net for semantic segmentation of building rooftops in optical remote sensing images[J]. Remote Sensing, 2021, 13(13): 2524. doi: 10.3390/rs13132524

[9] BOONPOOK W, TAN Yumin, and XU Bo. Deep learning-based multi-feature semantic segmentation in building extraction from images of UAV photogrammetry[J]. International Journal of Remote Sensing, 2021, 42(1): 1–19. doi: 10.1080/01431161.2020.1788742

[10] DING Cheng, WENG Liguo, XIA Min, et al. Non-local feature search network for building and road segmentation of remote sensing image[J]. ISPRS International Journal of Geo-Information, 2021, 10(4): 245. doi: 10.3390/ijgi10040245

[11] NOSRATI M S and SAEEDI P. A novel approach for polygonal rooftop detection in satellite/aerial imageries[C]. The 16th IEEE International Conference on Image Processing, Cairo, Egypt, 2009: 1709–1712.

[12] IZADI M and SAEEDI P. Three-dimensional polygonal building model estimation from single satellite images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2012, 50(6): 2254–2272. doi: 10.1109/TGRS.2011.2172995

[13] BALUYAN H, JOSHI B, AL HINAI A, et al. Novel approach for rooftop detection using support vector machine[J]. ISRN Machine Vision, 2013, 2013: 819768. doi: 10.1155/2013/819768

[14] LI Er, FEMIANI J, XU Shibiao, et al. Robust rooftop extraction from visible band images using higher order CRF[J]. IEEE Transactions on Geoscience and Remote Sensing, 2015, 53(8): 4483–4495. doi: 10.1109/TGRS.2015.2400462

[15] HOSSEINPOUR H, SAMADZADEGAN F, and JAVAN F D. CMGFNet: A deep cross-modal gated fusion network for building extraction from very high-resolution remote sensing images[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2022, 184: 96–115. doi: 10.1016/j.isprsjprs.2021.12.007

[16] YANG M Y, KUMAAR S, LYU Ye, et al. Real-time semantic segmentation with context aggregation network[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2021, 178: 124–134. doi: 10.1016/j.isprsjprs.2021.06.006

[17] LI Kun and HU Xiangyun. A deep interactive framework for building extraction in remotely sensed images via a coarse-to-fine strategy[C]. 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 2021: 4039–4042.

[18] LI Kun, HU Xiangyun, JIANG Huiwei, et al. Attention-guided multi-scale segmentation neural network for interactive extraction of region objects from high-resolution satellite imagery[J]. Remote Sensing, 2020, 12(5): 789. doi: 10.3390/rs12050789

[19] DAS P and CHAND S. AttentionBuildNet for building extraction from aerial imagery[C]. 2021 International Conference on Computing, Communication, and Intelligent Systems (ICCCIS), Greater Noida, India, 2021: 576–580.

[20] CHENG D, LIAO Renjie, FIDLER S, et al. DARNet: Deep active ray network for building segmentation[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, USA, 2020: 7423–7431.

[21] HE Hao, WANG Shuyang, ZHAO Qian, et al. Building extraction based on U-net and conditional random fields[C]. The 2021 6th International Conference on Image, Vision and Computing (ICIVC), Qingdao, China, 2021: 273–277.

[22] GUO Wen and ZHANG Qiao. Building extraction using high-resolution satellite imagery based on an attention enhanced full convolution neural network[J]. Remote Sensing for Land & Resources, 2021, 33(2): 100–107. doi: 10.6046/gtzyyg.2020230

[23] LIU Dexiang, ZHANG Hairong, CHENG Dayu, et al. A building extraction method combined attention mechanism[J]. Remote Sensing Information, 2021, 36(4): 119–124. doi: 10.3969/j.issn.1000-3177.2021.04.016 (in Chinese)

[24] ZHU Qing, LIAO Cheng, HU Han, et al. MAP-Net: Multiple attending path neural network for building footprint extraction from remote sensed imagery[J]. IEEE Transactions on Geoscience and Remote Sensing, 2021, 59(7): 6169–6181. doi: 10.1109/TGRS.2020.3026051

[25] CHEN Keyan, ZOU Zhengxia, and SHI Zhewei. Building extraction from remote sensing images with sparse token transformers[J]. Remote Sensing, 2021, 13(21): 4441. doi: 10.3390/rs13214441

[26] TOMPSON J, JAIN A, LECUN Y, et al. Joint training of a convolutional network and a graphical model for human pose estimation[C]. The 27th International Conference on Neural Information Processing Systems, Montreal, Canada, 2014: 1799–1807.

[27] TOSHEV A and SZEGEDY C. DeepPose: Human pose estimation via deep neural networks[C]. 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, USA, 2014: 1653–1660.

[28] CHEN Yilun, WANG Zhicheng, PENG Yuxiang, et al. Cascaded pyramid network for multi-person pose estimation[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 7103–7112.

[29] NAUATA N and FURUKAWA Y. Vectorizing world buildings: Planar graph reconstruction by primitive detection and relationship inference[C]. The 16th European Conference on Computer Vision, Glasgow, UK, 2020: 711–726.

[30] ZHANG Ziheng, LI Zhengxin, BI Ning, et al. PPGNet: Learning point-pair graph for line segment detection[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Los Angeles, USA, 2019: 7098–7107.

[31] ZHANG Fuyang, NAUATA N, and FURUKAWA Y. Conv-MPN: Convolutional message passing neural network for structured outdoor architecture reconstruction[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 2795–2804.

[32] JI Shunping, WEI Shiqing, and LU Meng. Fully convolutional networks for multisource building extraction from an open aerial and satellite imagery data set[J]. IEEE Transactions on Geoscience and Remote Sensing, 2019, 57(1): 574–586. doi: 10.1109/TGRS.2018.2858817
