Indoor Localization of UAV Using Monocular Vision


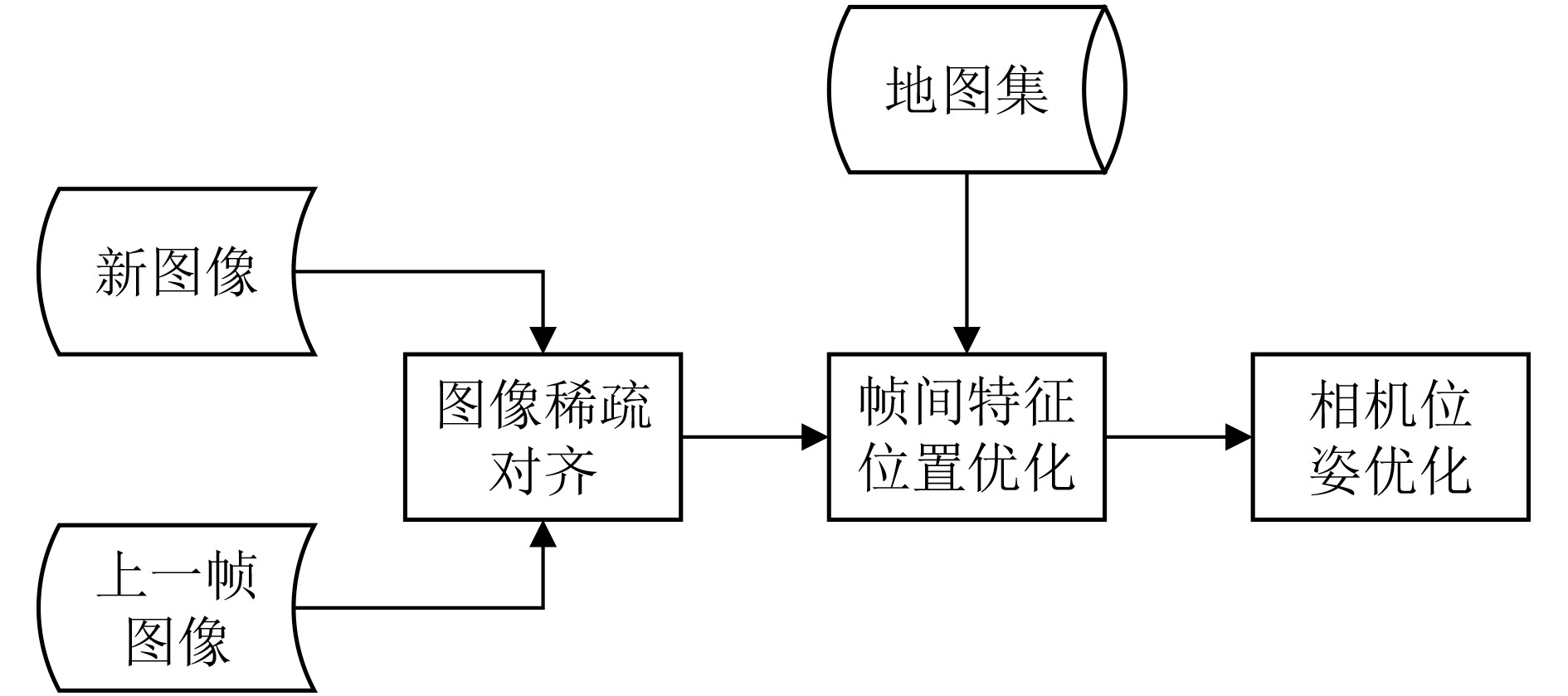

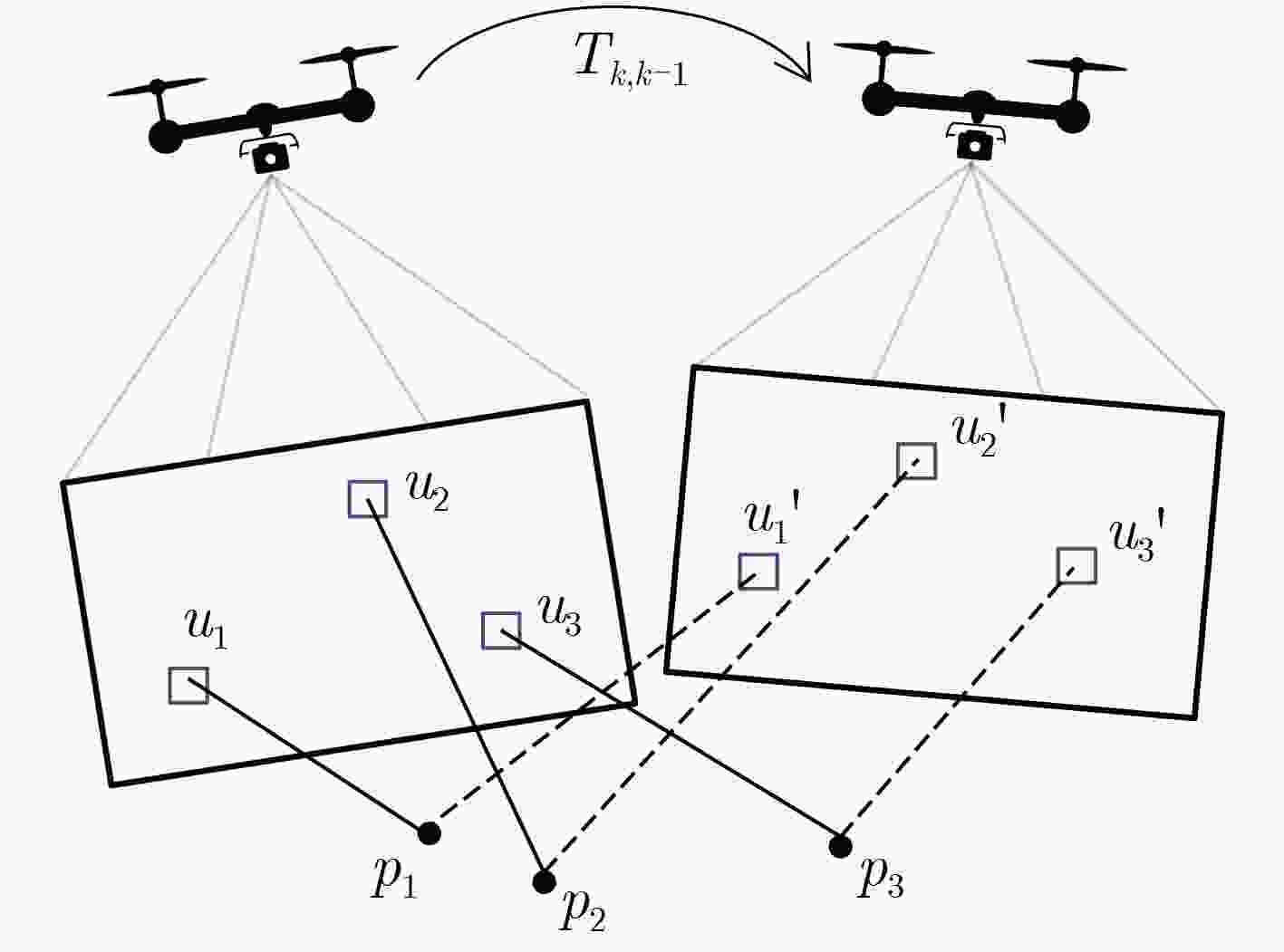

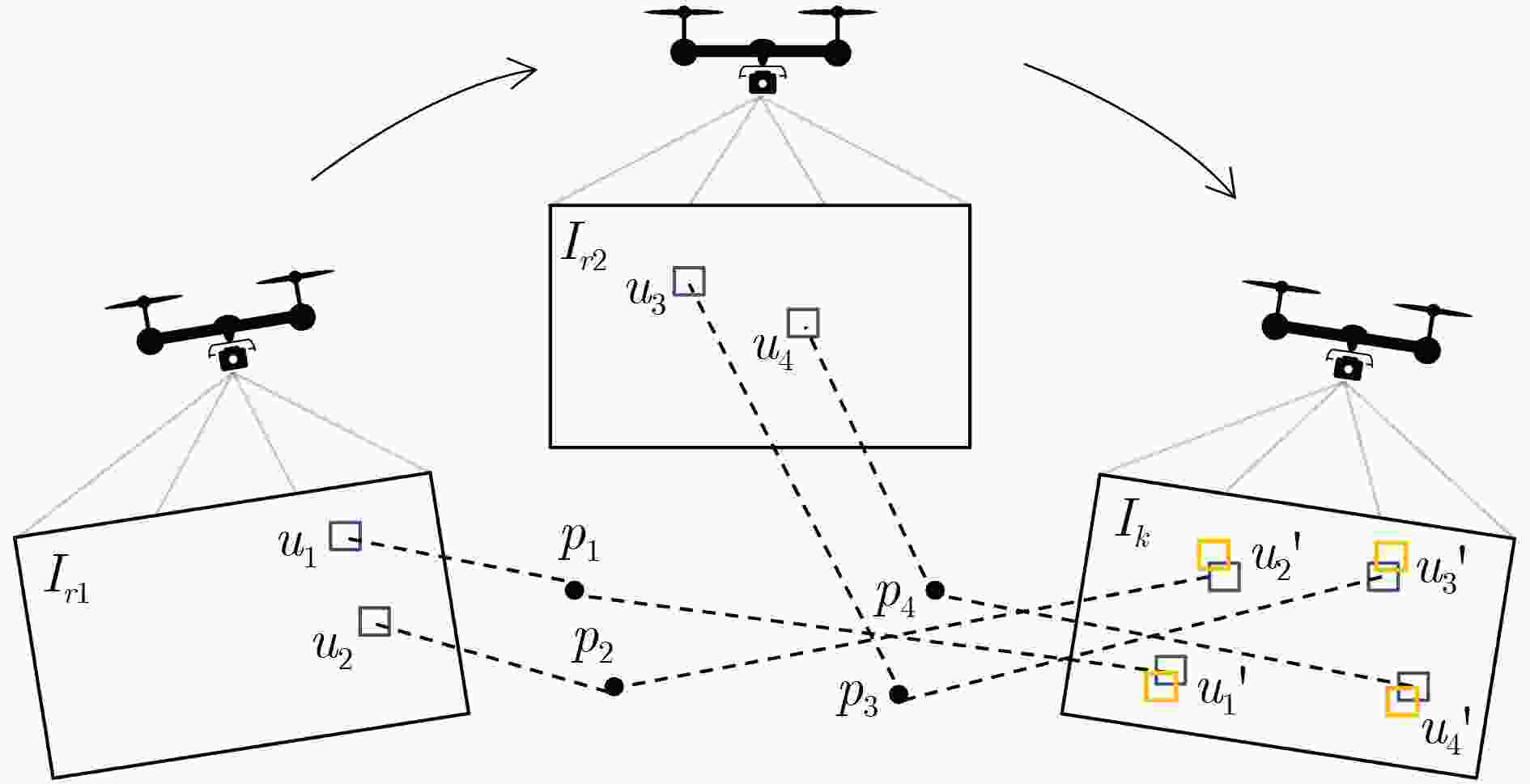

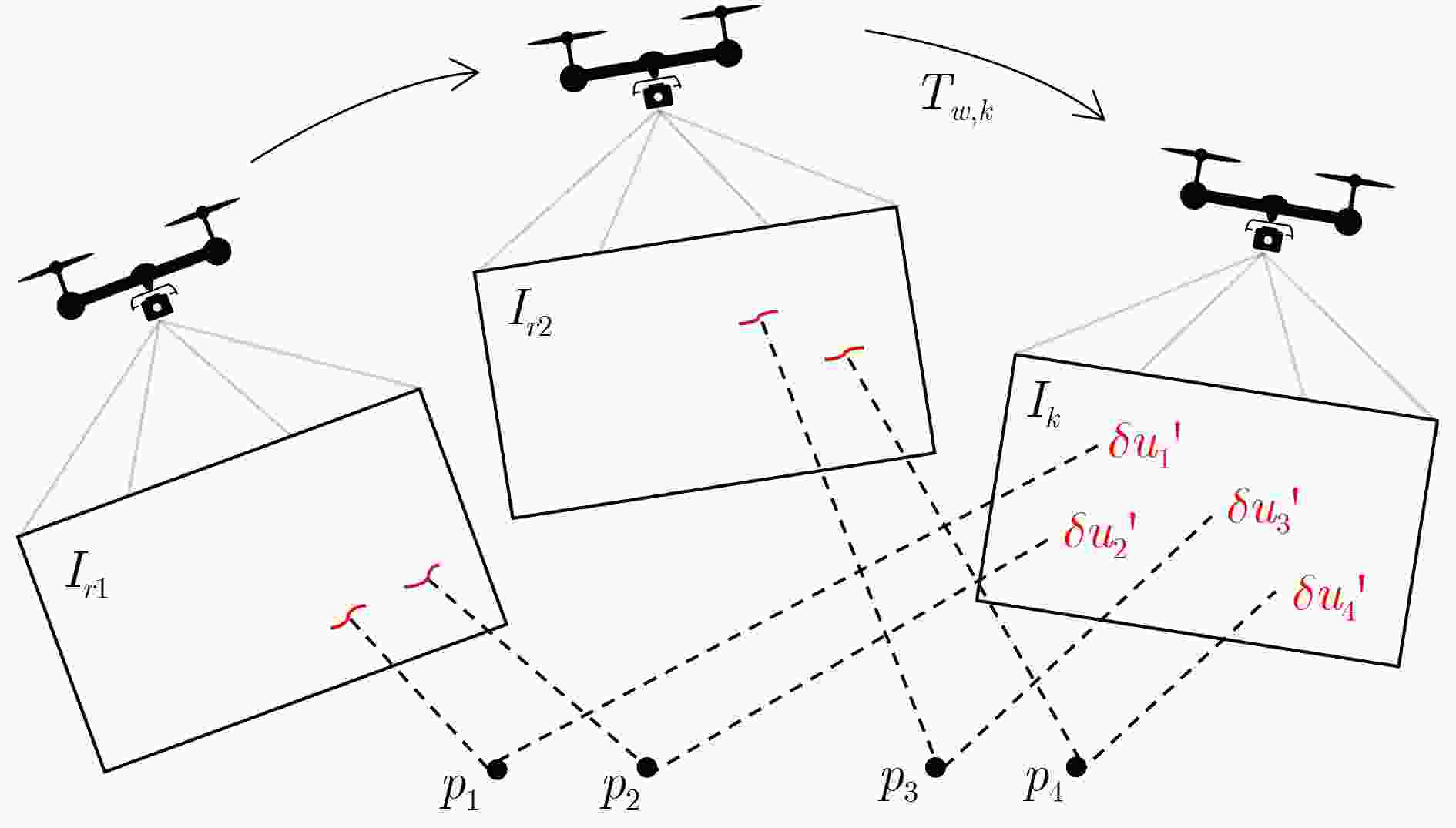

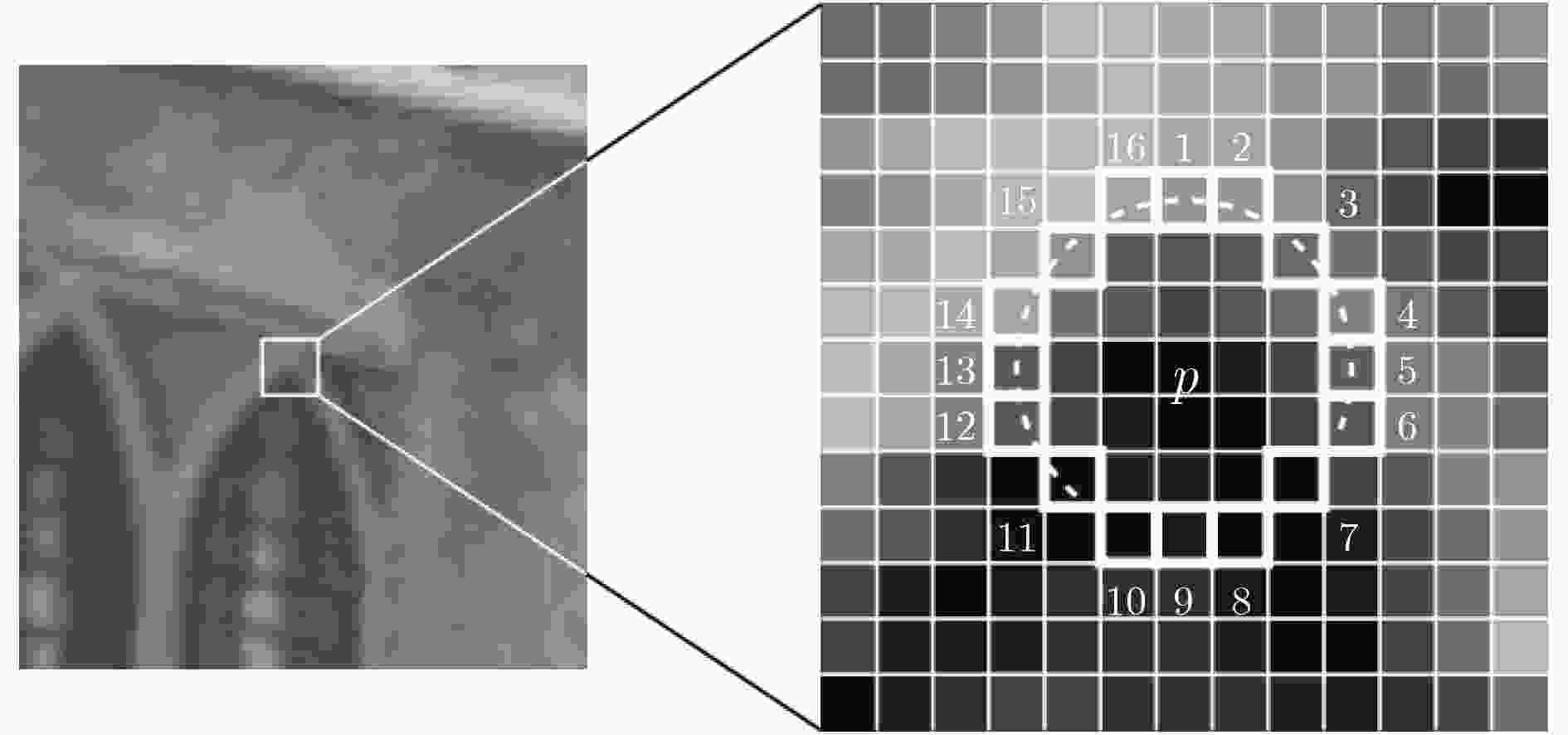

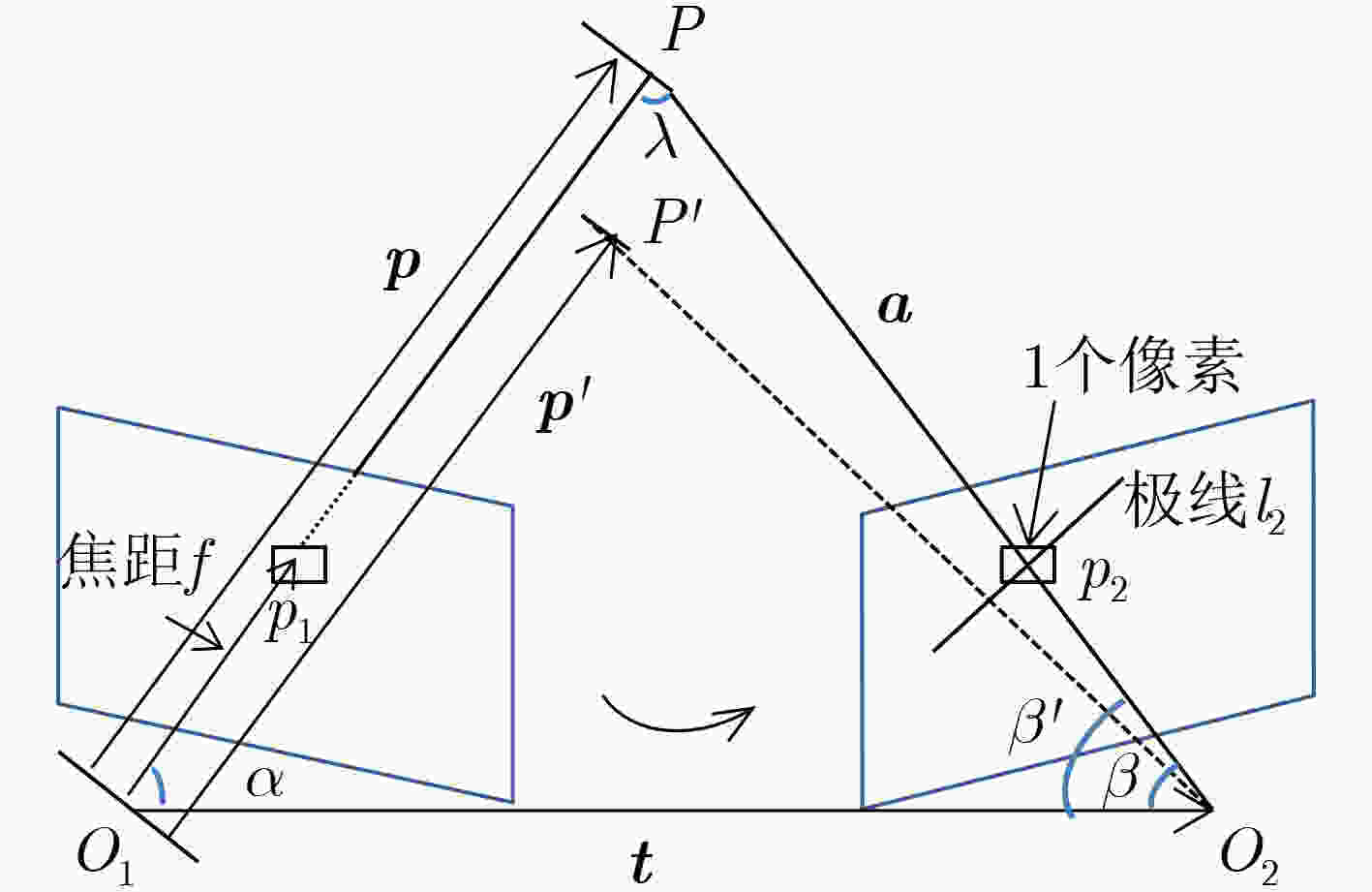

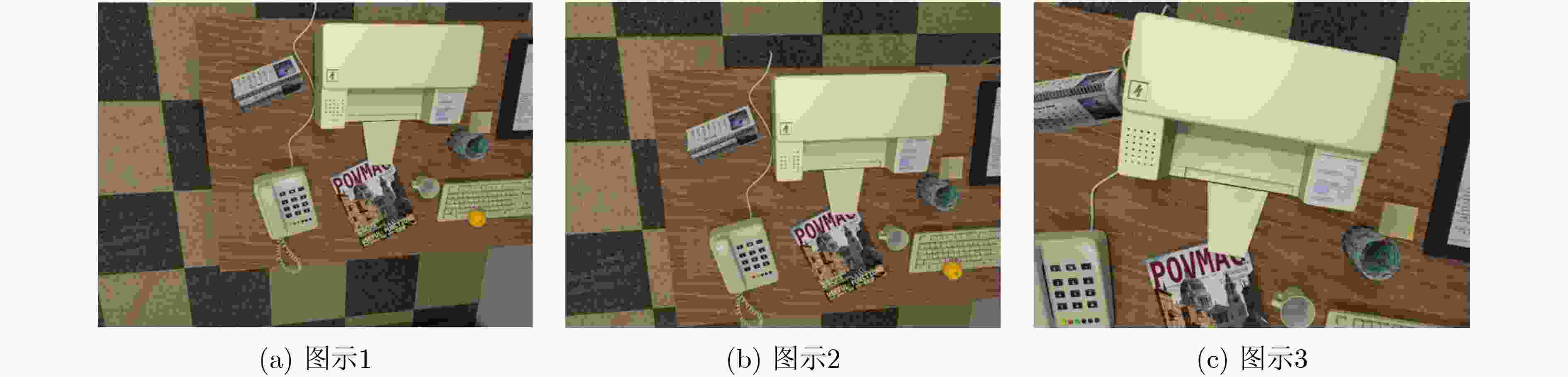

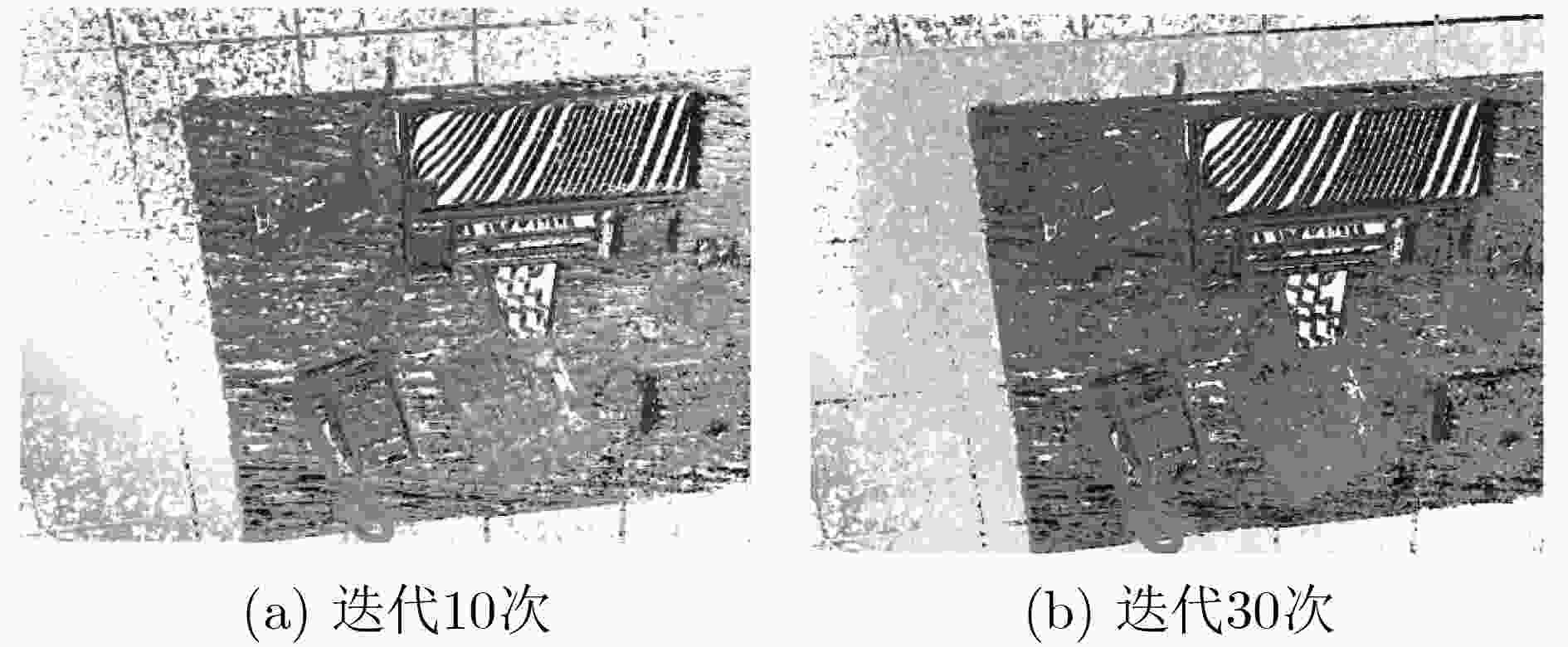

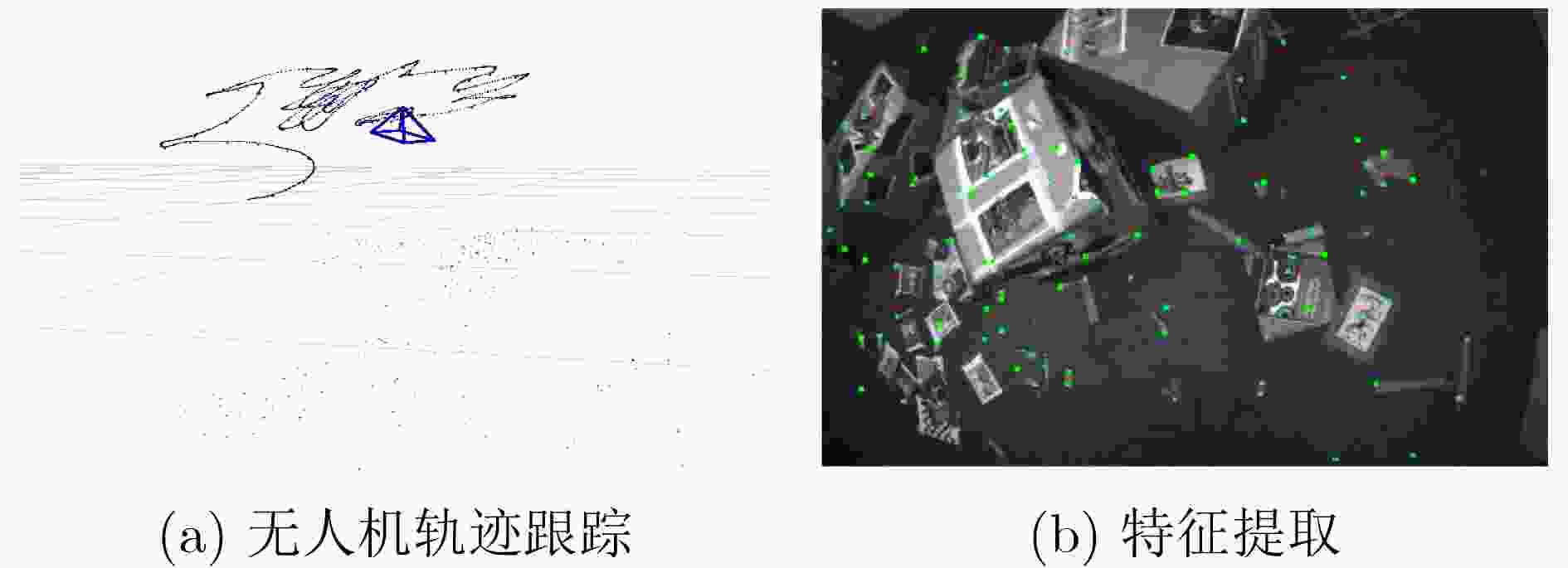

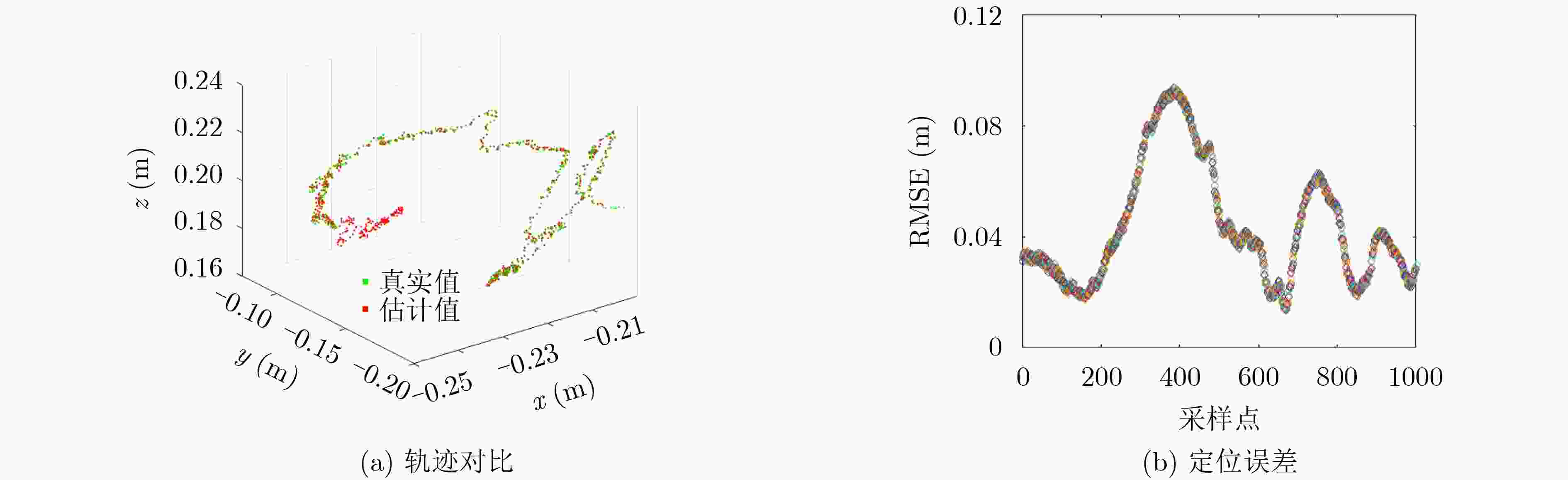

Abstract: Unmanned Aerial Vehicle (UAV) positioning currently relies mainly on satellite positioning systems, typified by the Global Positioning System (GPS), which makes localization difficult indoors and in other places where GPS signals are unavailable. Traditional indoor positioning fuses Bluetooth, WiFi, base-station positioning, and similar techniques into a single positioning system, but such methods are strongly affected by the environment and often require deploying multiple devices. They also provide only near/far (range) information and cannot recover the device's pose in space. This paper proposes an indoor UAV localization method based on monocular vision. Images captured by the UAV's onboard camera are processed by combining the feature-point method with the direct method: feature points are tracked first, and the direct method then performs patch matching around the key points to estimate the camera pose. A depth filter then estimates the depth of the feature points, and a sparse map of the current environment is built. Finally, the real environment is simulated with RVIZ, the 3D visualization tool of the Robot Operating System (ROS). The simulation results show that the proposed method performs well in indoor environments, with a positioning accuracy of 0.04 m.
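The depth-filter step in the abstract can be illustrated with a minimal sketch. It assumes a purely Gaussian depth model in which each new triangulated depth is fused with the current belief; the filter actually used in the paper is not specified here and may differ (for example, by also modelling outliers). The names `DepthEstimate`, `fuse`, and `has_converged`, as well as the measurement values, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DepthEstimate:
    """Gaussian belief over the depth of one feature point (metres)."""
    mean: float
    variance: float


def fuse(prior: DepthEstimate, measured_depth: float,
         measured_variance: float) -> DepthEstimate:
    """Fuse one new depth measurement into the prior.

    This is the product of two Gaussians: the result is weighted toward
    whichever of the prior and the measurement has the smaller variance.
    """
    if prior.variance <= 0 or measured_variance <= 0:
        raise ValueError("variances must be positive")
    total = prior.variance + measured_variance
    mean = (measured_variance * prior.mean
            + prior.variance * measured_depth) / total
    variance = prior.variance * measured_variance / total
    return DepthEstimate(mean, variance)


def has_converged(estimate: DepthEstimate, threshold: float = 0.01) -> bool:
    """Treat a feature as ready for the sparse map once its variance is small.

    The threshold is an illustrative assumption, not a value from the paper.
    """
    return estimate.variance < threshold


# Hypothetical data: a vague initial guess refined by four measurements.
estimate = DepthEstimate(mean=2.0, variance=1.0)
for depth in (1.6, 1.5, 1.55, 1.52):
    estimate = fuse(estimate, depth, measured_variance=0.04)

print(f"depth = {estimate.mean:.3f} m, variance = {estimate.variance:.4f}")
print("converged:", has_converged(estimate))
```

Each fusion shrinks the variance, so a feature observed from several frames gradually settles on a stable depth before it is added to the sparse map.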


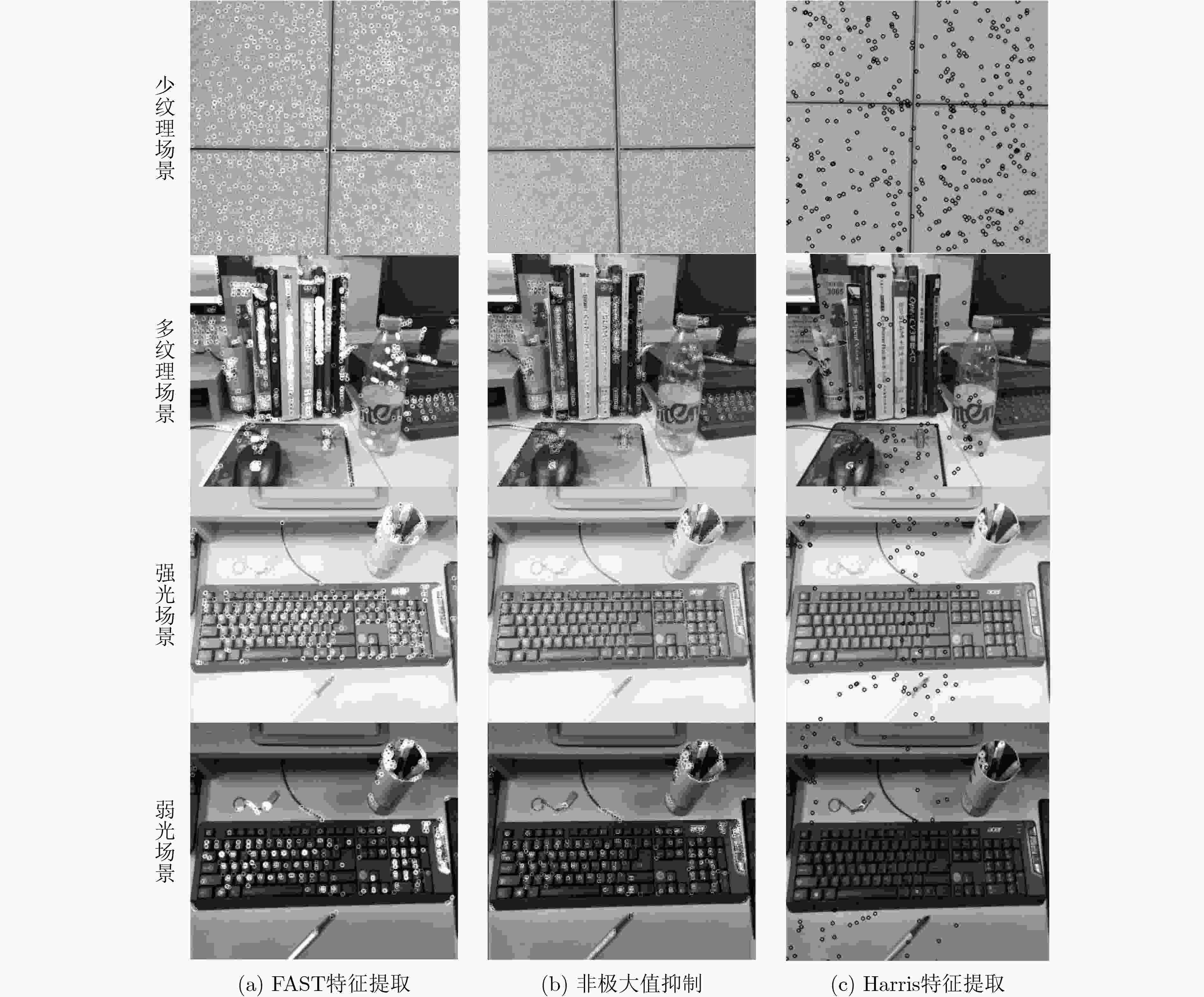

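Table 1 Comparison of the two algorithms under different environments

| Algorithm | Low texture: feature points | Low texture: time (ms) | Rich texture: feature points | Rich texture: time (ms) | Strong light: feature points | Strong light: time (ms) | Weak light: feature points | Weak light: time (ms) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| FAST | 332 | 204 | 384 | 208 | 213 | 201 | 192 | 207 |
| Harris | 262 | 1492 | 73 | 1558 | 42 | 1680 | 57 | 1528 |

Table 2 Comparison of the proposed algorithm with PTAM

| Dataset | Proposed: RMSE (m) | Proposed: time (s) | PTAM: RMSE (m) | PTAM: time (s) |
| --- | --- | --- | --- | --- |
| fr1/desk | 0.053 | 9.32 | 0.057 | 13.77 |
| fr1/desk2 | 0.047 | 7.45 | 0.051 | 11.54 |
| fr1/room | 0.041 | 10.20 | 0.049 | 15.68 |
| fr2/xyz | 0.056 | 9.89 | 0.062 | 12.42 |
| fr3/office | 0.042 | 9.55 | 0.048 | 12.78 |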
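The RMSE figures in Table 2 measure how far the estimated trajectory lies from the ground truth. The sketch below shows one common way to compute such a value, assuming the two trajectories are already time-associated and expressed in the same frame; no alignment is performed, and the paper's exact evaluation procedure is not stated here. The function name `position_rmse` and the sample trajectory are hypothetical, while the per-dataset values are copied from Table 2.

```python
import math


def position_rmse(estimated, ground_truth):
    """Root-mean-square position error between two matched trajectories.

    Both arguments are sequences of (x, y, z) positions in metres, with
    estimated[i] corresponding to ground_truth[i].
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and equally long")
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(est, gt))
        for est, gt in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical three-pose trajectory, for illustration only.
estimated = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
ground_truth = [(0.0, 0.0, 0.03), (1.0, 0.04, 0.0), (2.0, 0.0, 0.0)]
print(f"RMSE = {position_rmse(estimated, ground_truth):.4f} m")

# Per-dataset RMSE values (m) copied from Table 2.
proposed = [0.053, 0.047, 0.041, 0.056, 0.042]
ptam = [0.057, 0.051, 0.049, 0.062, 0.048]
mean_proposed = sum(proposed) / len(proposed)
mean_ptam = sum(ptam) / len(ptam)
print(f"mean RMSE: proposed = {mean_proposed:.4f} m, PTAM = {mean_ptam:.4f} m")
```

Averaging the Table 2 columns this way gives a single summary number per algorithm, which makes the comparison across the five sequences easier to read.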

