Deblurring and Restoration of Gastroscopy Image Based on Gradient-guidance Generative Adversarial Networks


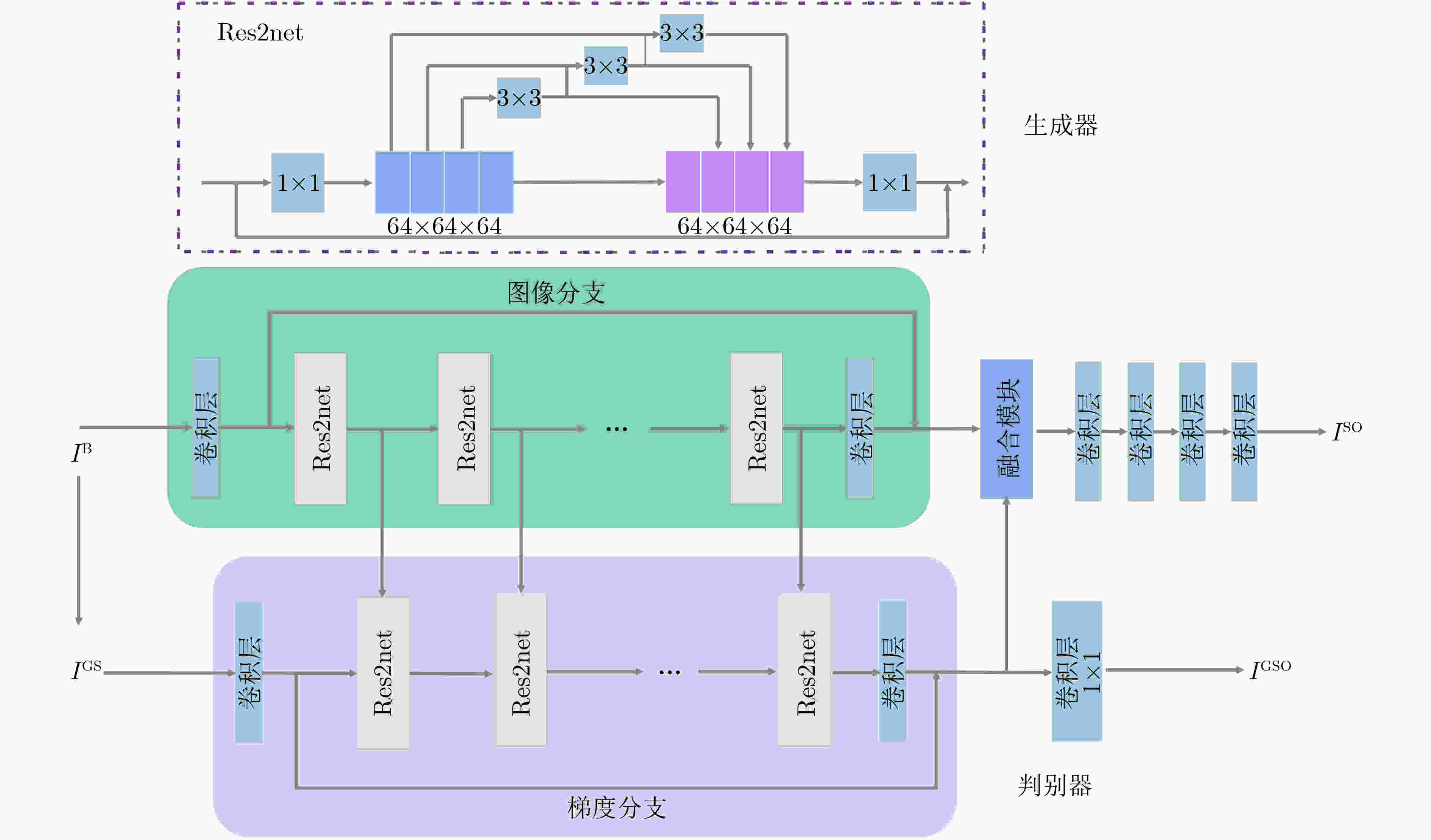

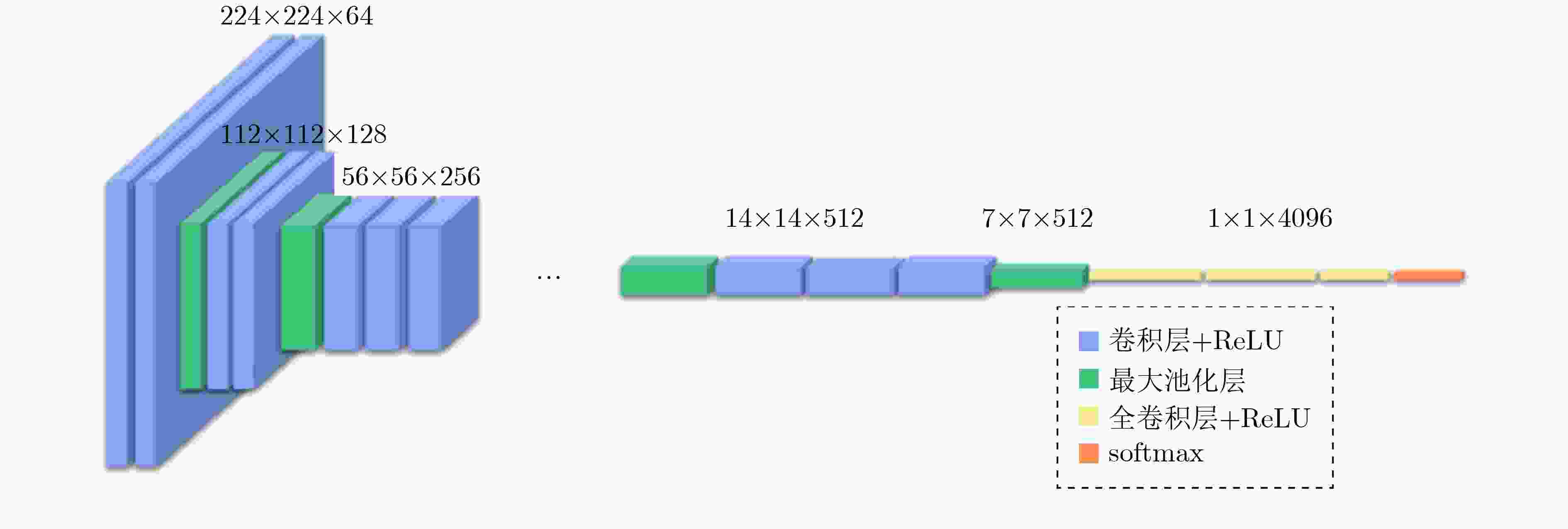

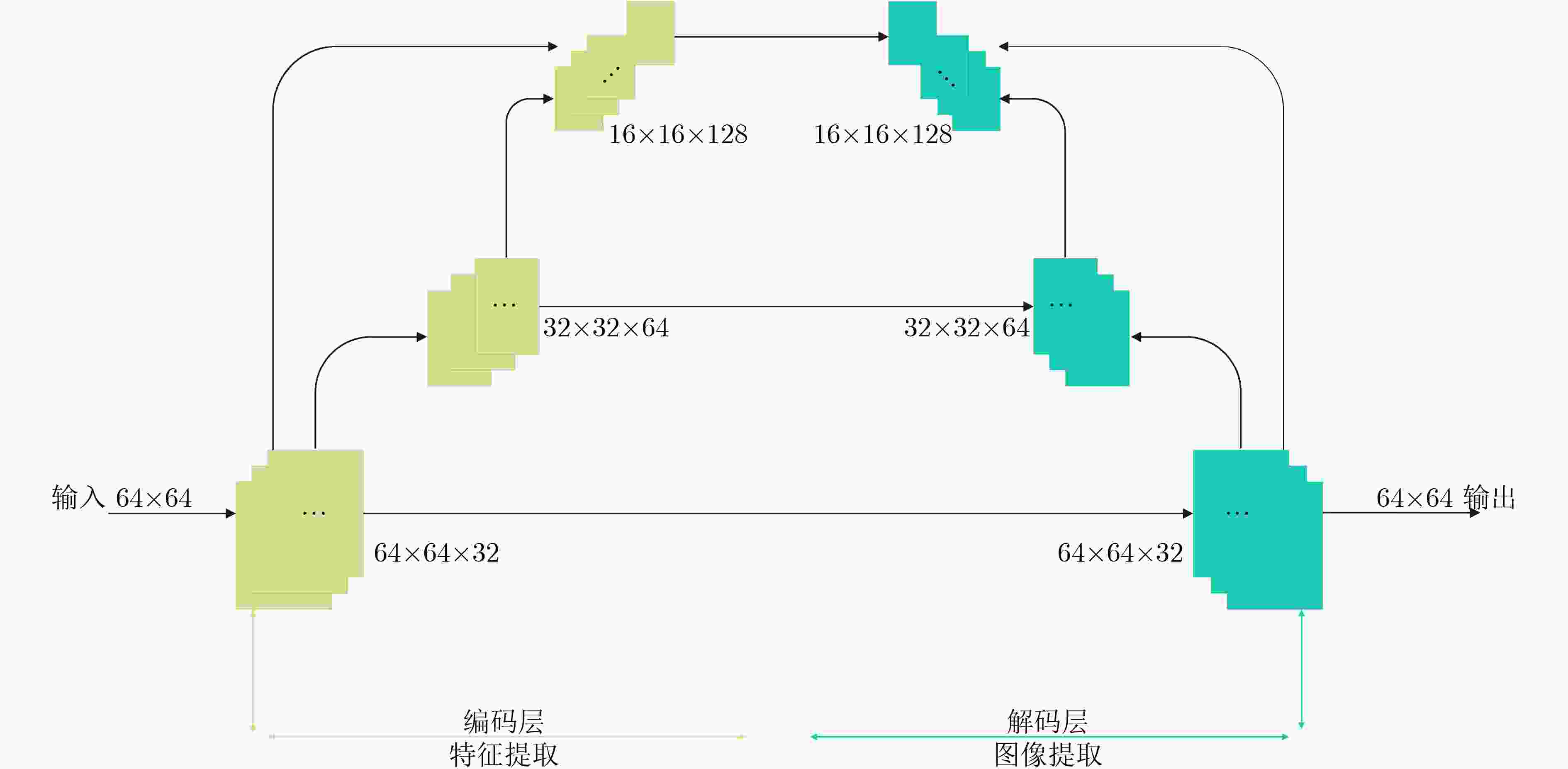

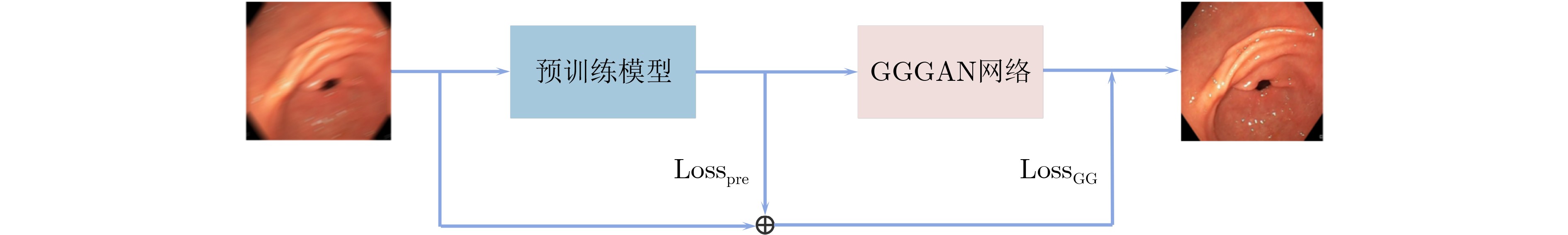

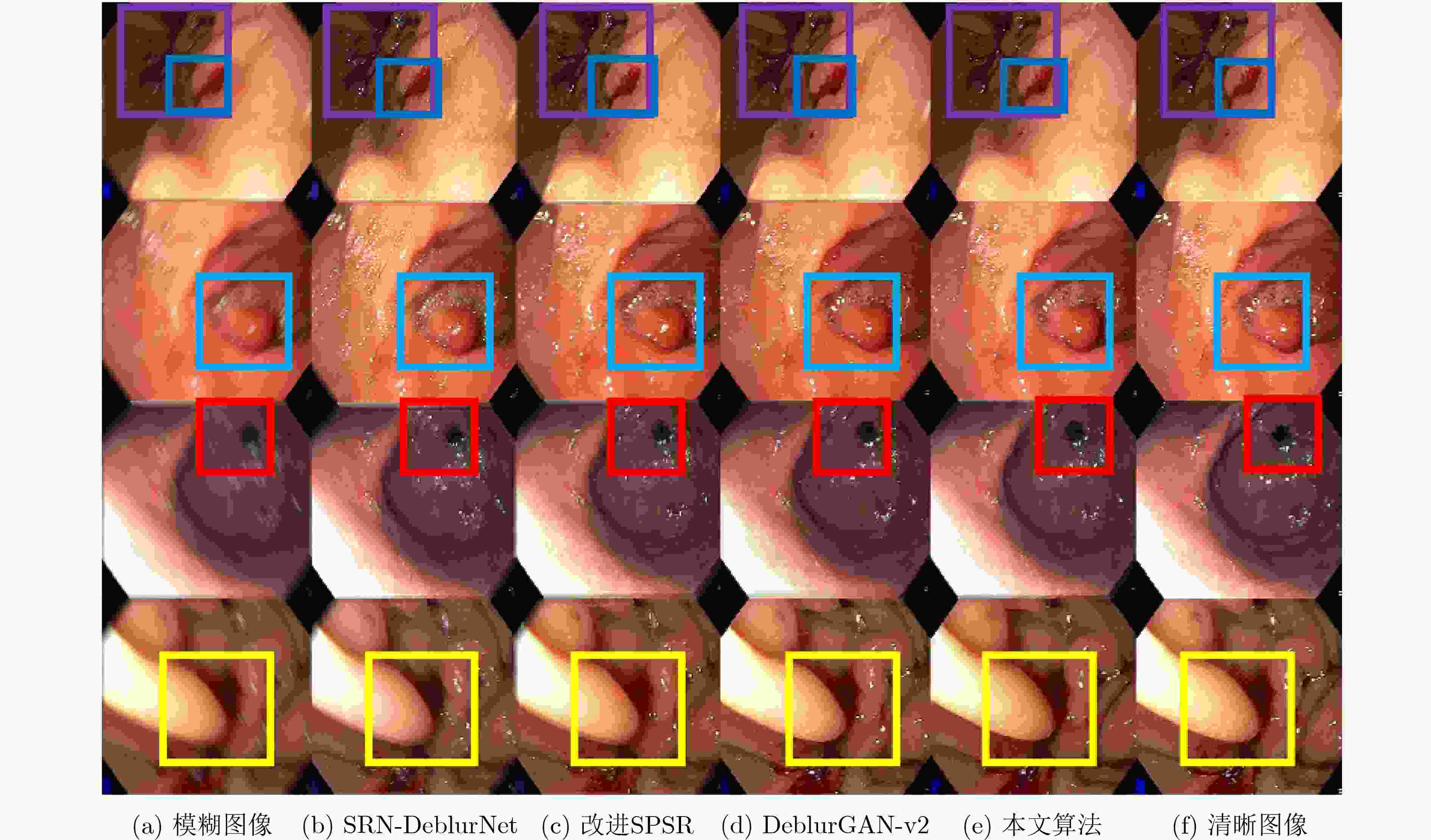

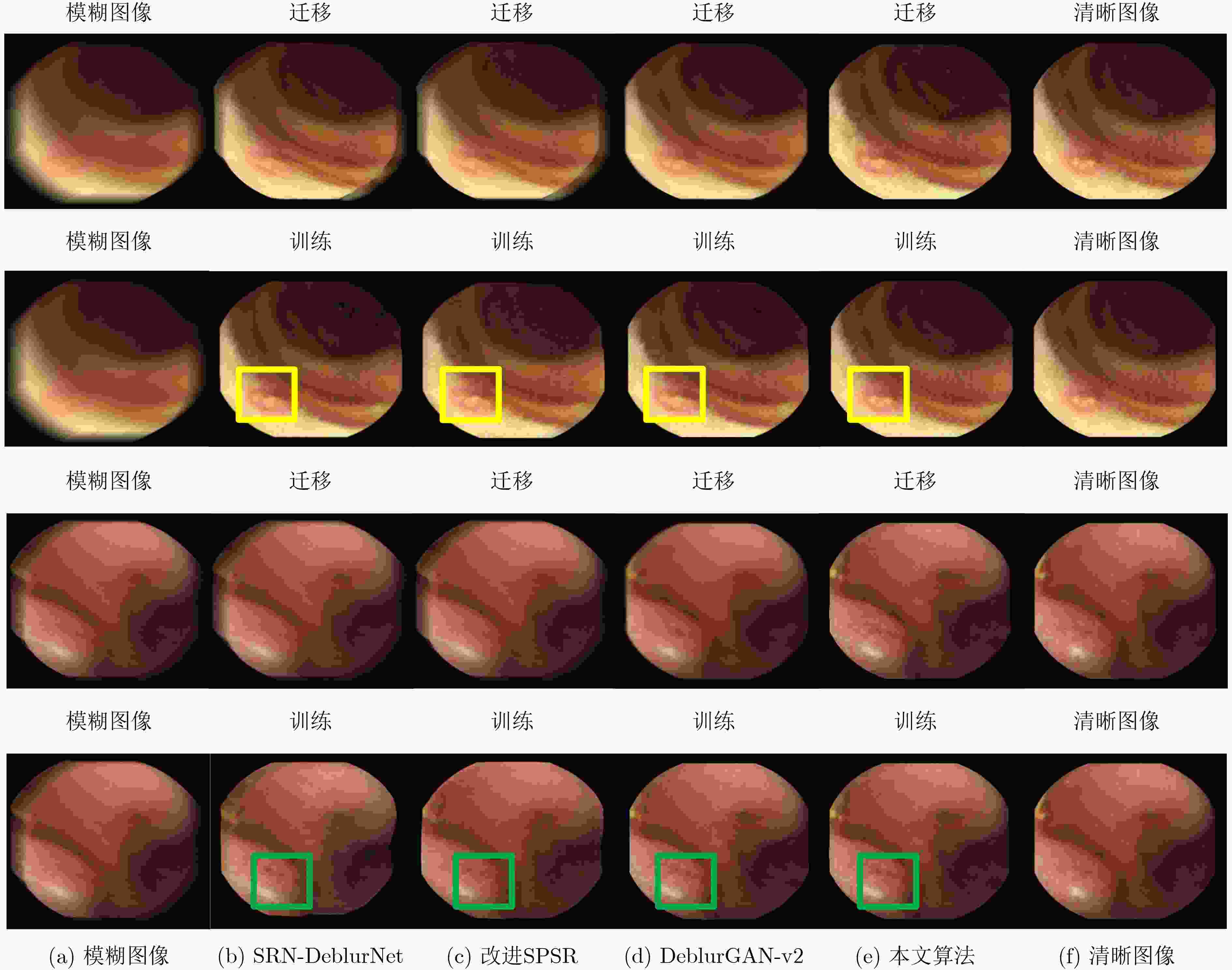

Abstract: Gastrointestinal endoscopy plays a critical role in the examination and diagnosis of upper gastrointestinal diseases. Motion blur in endoscopic images can interfere with both a doctor's judgment and machine-assisted diagnosis. Because existing deblurring networks pay little attention to structural information, they tend to produce artifacts and structural distortion when processing endoscopic images. To solve this problem and improve gastroscopy image quality, this paper proposes a gradient-guided generative adversarial network. The network uses the multi-scale residual network (Res2Net) structure as its basic module and contains two interacting branches: an image (intensity) branch and a gradient branch. The gradient branch guides the deblurring and reconstruction performed in the image branch, so that more of the image's structural information is preserved, artifacts are removed, and structural deformation is alleviated. A quasi-lightweight preprocessing network is designed to correct excessive blur and improve training efficiency. Experiments are conducted on a traditional gastroscopy dataset and a capsule gastroscopy dataset. The results show that the proposed algorithm outperforms the comparison algorithms in both Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity (SSIM), and that the restored images look visibly better, with no obvious artifacts or structural deformation.


Key words:

- Gastroscopy image

- Deblurring

- Generative adversarial network

- Gradient guidance
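The abstract describes a gradient branch that guides reconstruction, but it does not state how the gradient map is obtained. As a rough illustration only, the sketch below computes a gradient-magnitude map from a grayscale image using central differences with replicated borders. The function name `gradient_magnitude`, the nested-list image format, and the choice of central differences are all assumptions made for this example, not details taken from the paper.

```python
import math


def gradient_magnitude(image):
    """Return the per-pixel gradient magnitude of a 2-D grayscale image.

    `image` is a non-empty list of equal-length rows of numbers.
    Assumption: central differences with replicated (clamped) borders;
    the paper's actual gradient operator is not given in the abstract.
    """
    height = len(image)
    width = len(image[0])
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            # Clamp neighbour indices so border pixels reuse their own value.
            left = image[y][max(x - 1, 0)]
            right = image[y][min(x + 1, width - 1)]
            up = image[max(y - 1, 0)][x]
            down = image[min(y + 1, height - 1)][x]
            # Magnitude of the (horizontal, vertical) difference vector.
            row.append(math.hypot(right - left, down - up))
        result.append(row)
    return result


# A vertical step edge: the response is non-zero only around the edge.
step_edge = [[0, 0, 1, 1] for _ in range(3)]
edge_map = gradient_magnitude(step_edge)
```

A map like `edge_map` highlights where intensity changes sharply, which is the kind of structural cue a gradient branch could use to keep edges intact during deblurring.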


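Table 1 Metric test results of different algorithms on the traditional gastroscopy dataset

| Metric | SRN-DeblurNet | Improved SPSR | DeblurGAN-v2 | Proposed algorithm |
| --- | --- | --- | --- | --- |
| PSNR (dB) | 26.40 | 26.47 | 26.55 | 26.89 |
| SSIM | 0.799 | 0.815 | 0.818 | 0.849 |

Table 2 Metric test results of different algorithms on the capsule gastroscopy dataset

| Metric | Setting | SRN-DeblurNet | Improved SPSR | DeblurGAN-v2 | Proposed algorithm |
| --- | --- | --- | --- | --- | --- |
| PSNR (dB) | Transfer | 26.93 | 28.33 | 28.79 | 28.83 |
| PSNR (dB) | Training | 28.01 | 28.70 | 28.81 | 29.04 |
| SSIM | Transfer | 0.777 | 0.767 | 0.783 | 0.801 |
| SSIM | Training | 0.795 | 0.771 | 0.813 | 0.826 |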
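For readers unfamiliar with the PSNR figures reported in the tables, the following is a minimal sketch of the standard definition, PSNR = 10·log10(MAX² / MSE). The function name `psnr`, the nested-list image format, and the default peak value of 255 (8-bit images) are assumptions for illustration; the evaluation code used in the paper is not shown in this section.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-sized 2-D images.

    Both images are lists of rows of numbers. `max_value` is the largest
    possible pixel value (assumed 255 here, i.e. 8-bit images).
    """
    if len(reference) != len(restored) or any(
        len(a) != len(b) for a, b in zip(reference, restored)
    ):
        raise ValueError("images must have the same shape")

    squared_error = 0.0
    count = 0
    for ref_row, res_row in zip(reference, restored):
        for a, b in zip(ref_row, res_row):
            squared_error += (a - b) ** 2
            count += 1
    if count == 0:
        raise ValueError("images must not be empty")

    mse = squared_error / count
    if mse == 0:
        # Identical images: PSNR is unbounded.
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


# Every pixel is off by exactly 1 grey level, so MSE = 1.
sharp = [[10, 20], [30, 40]]
restored = [[11, 21], [31, 41]]
score = psnr(sharp, restored)
```

Higher values mean the restored image is closer to the sharp reference. Because the scale is logarithmic, differences of a few tenths of a dB, like those between the methods in the tables, correspond to small changes in mean squared error.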

