Dynamic Resource Allocation Based on K-armed Bandit for Multi-UAV Air-Ground Network


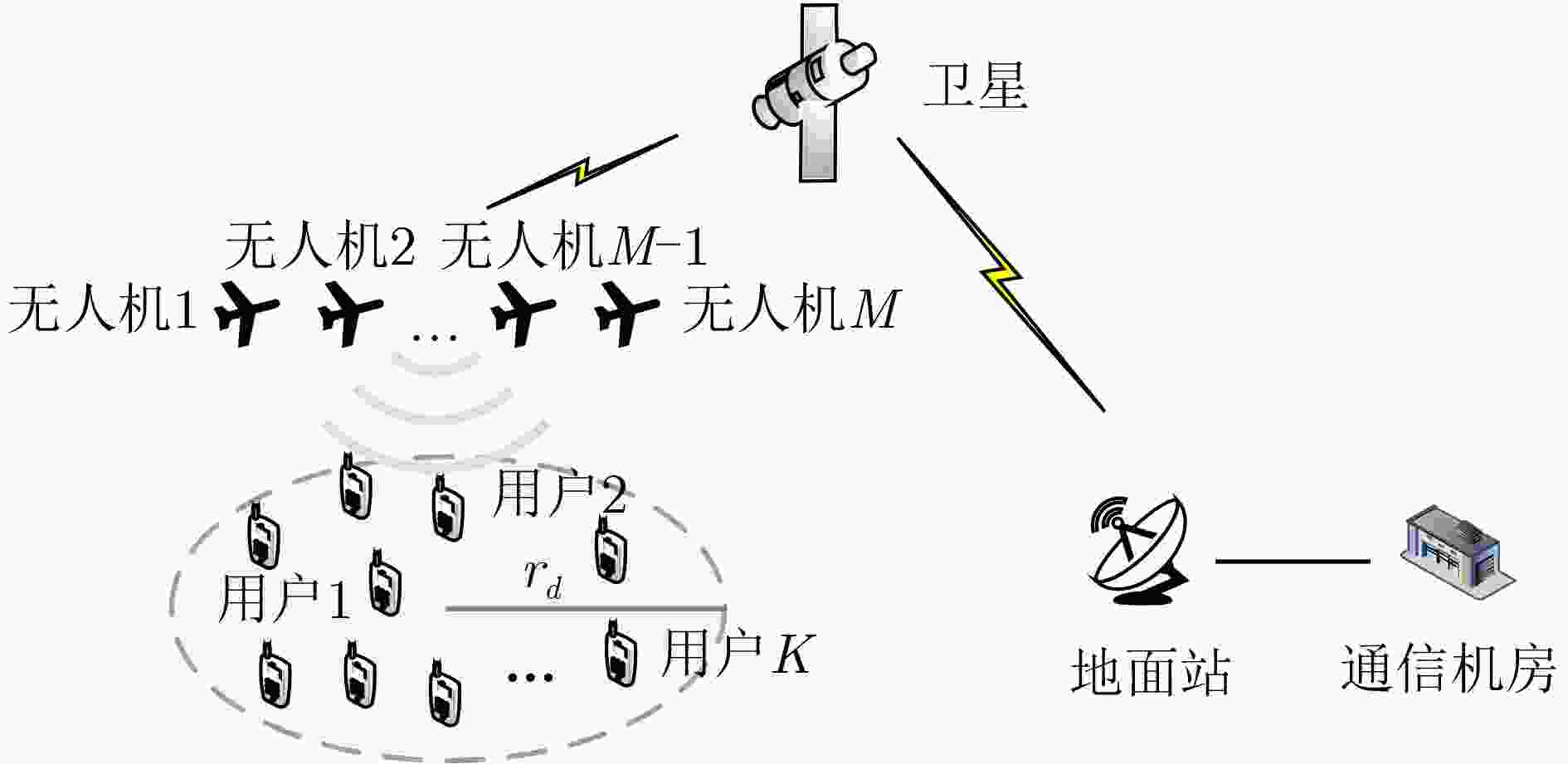

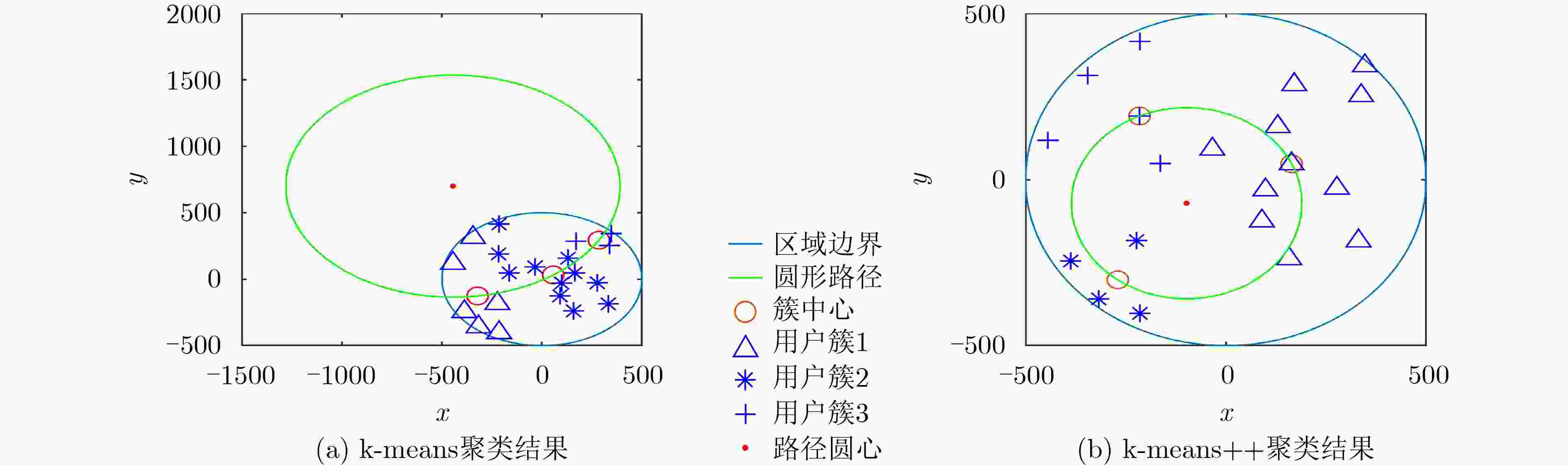

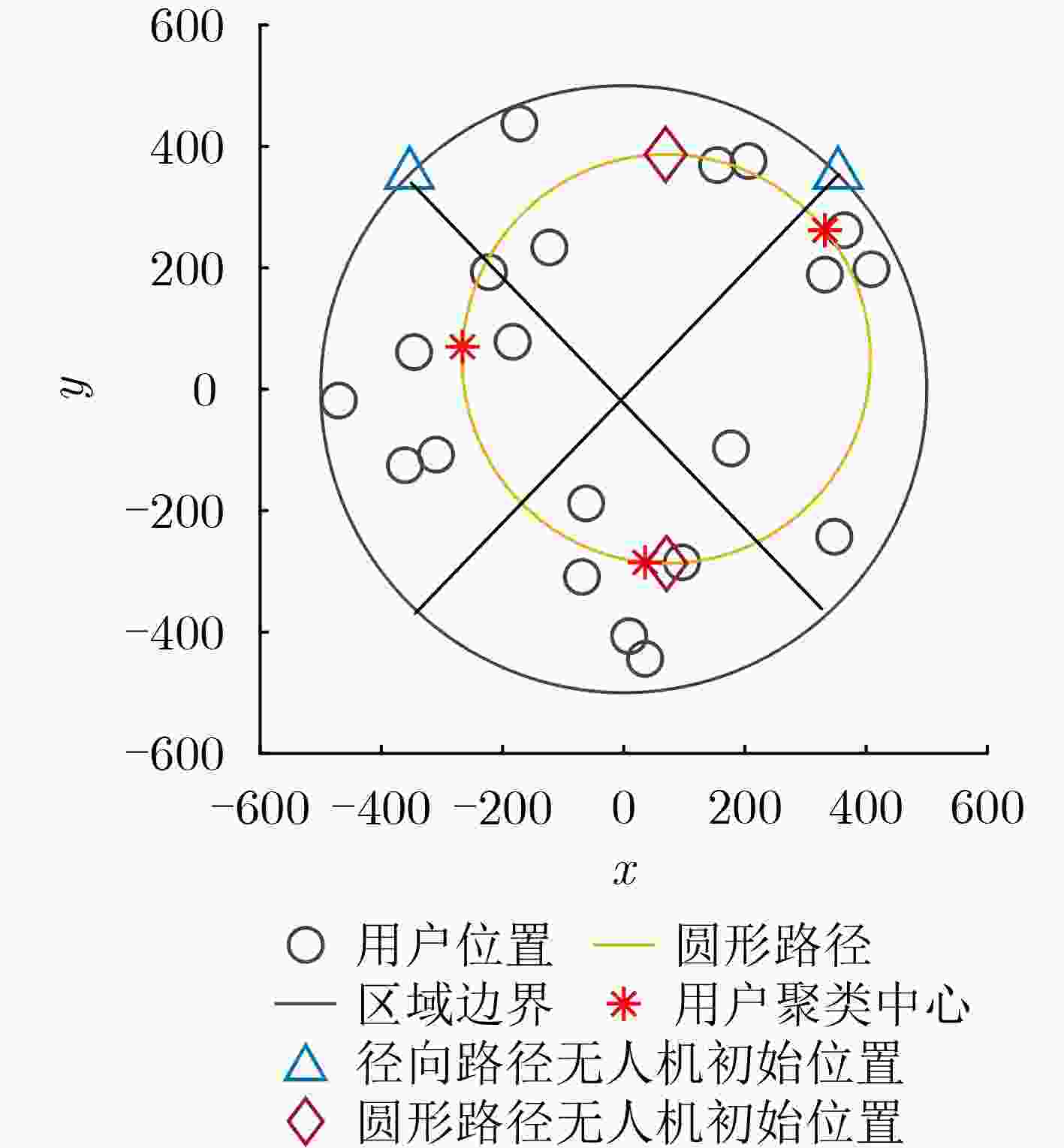

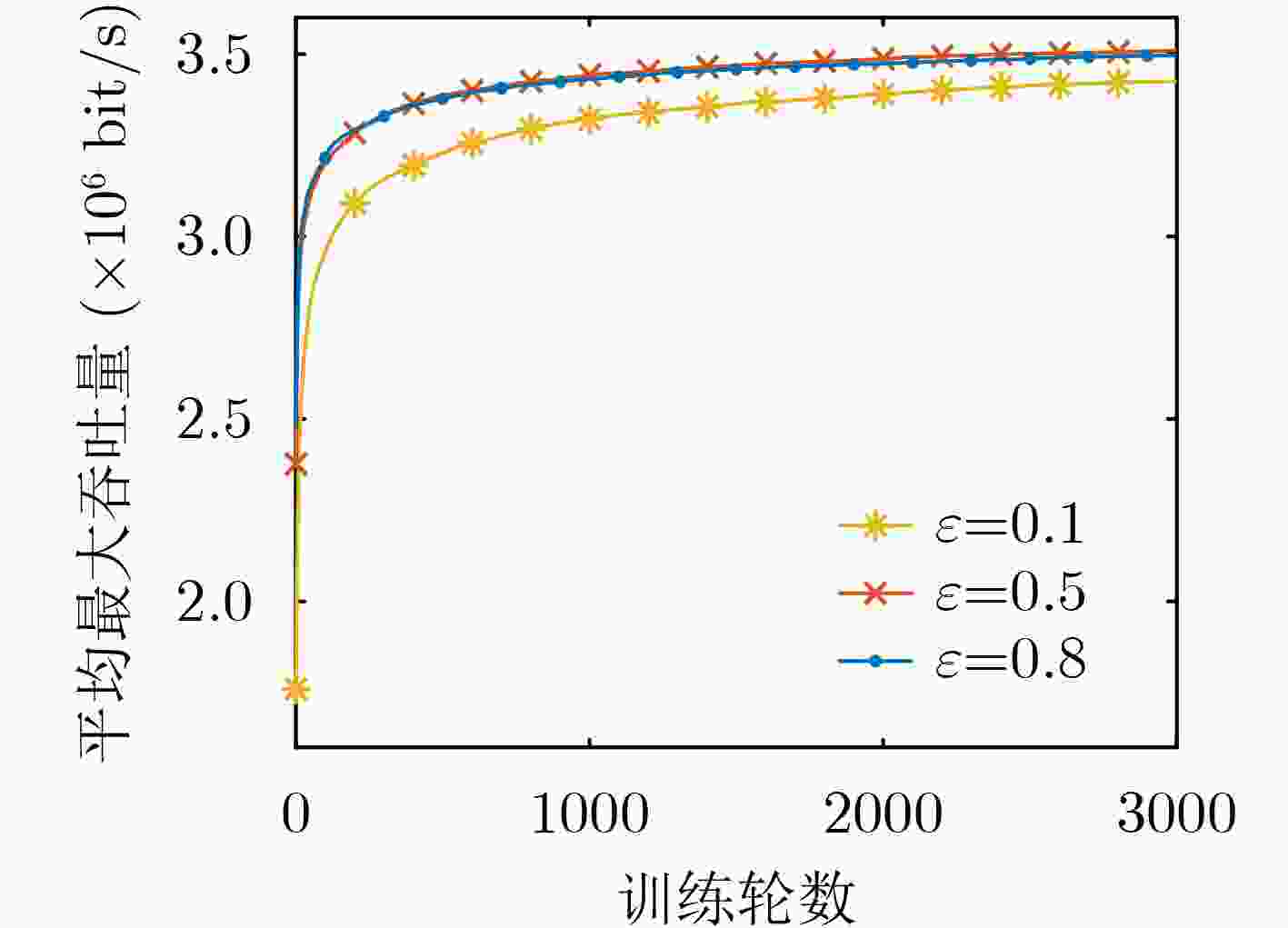

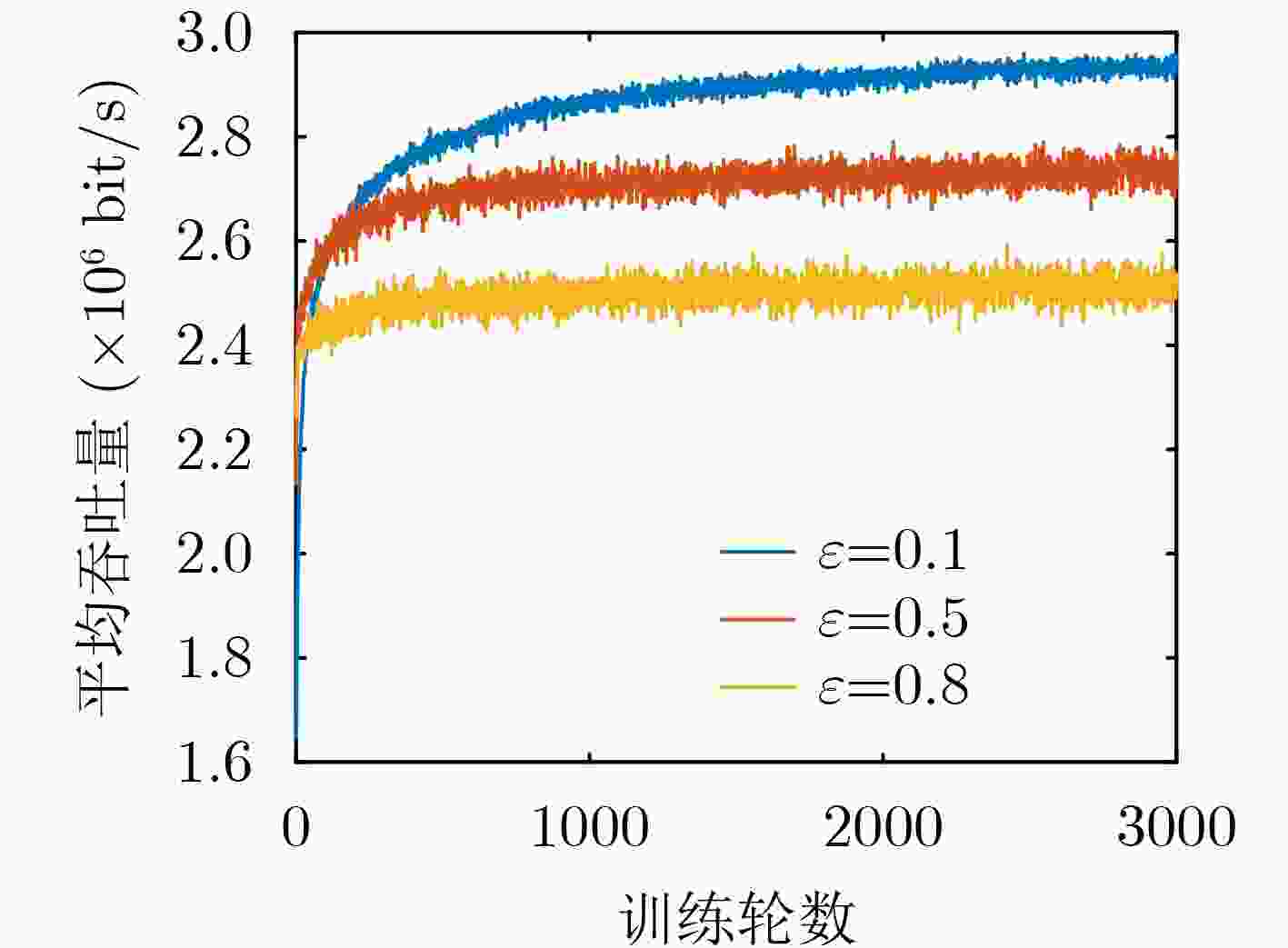

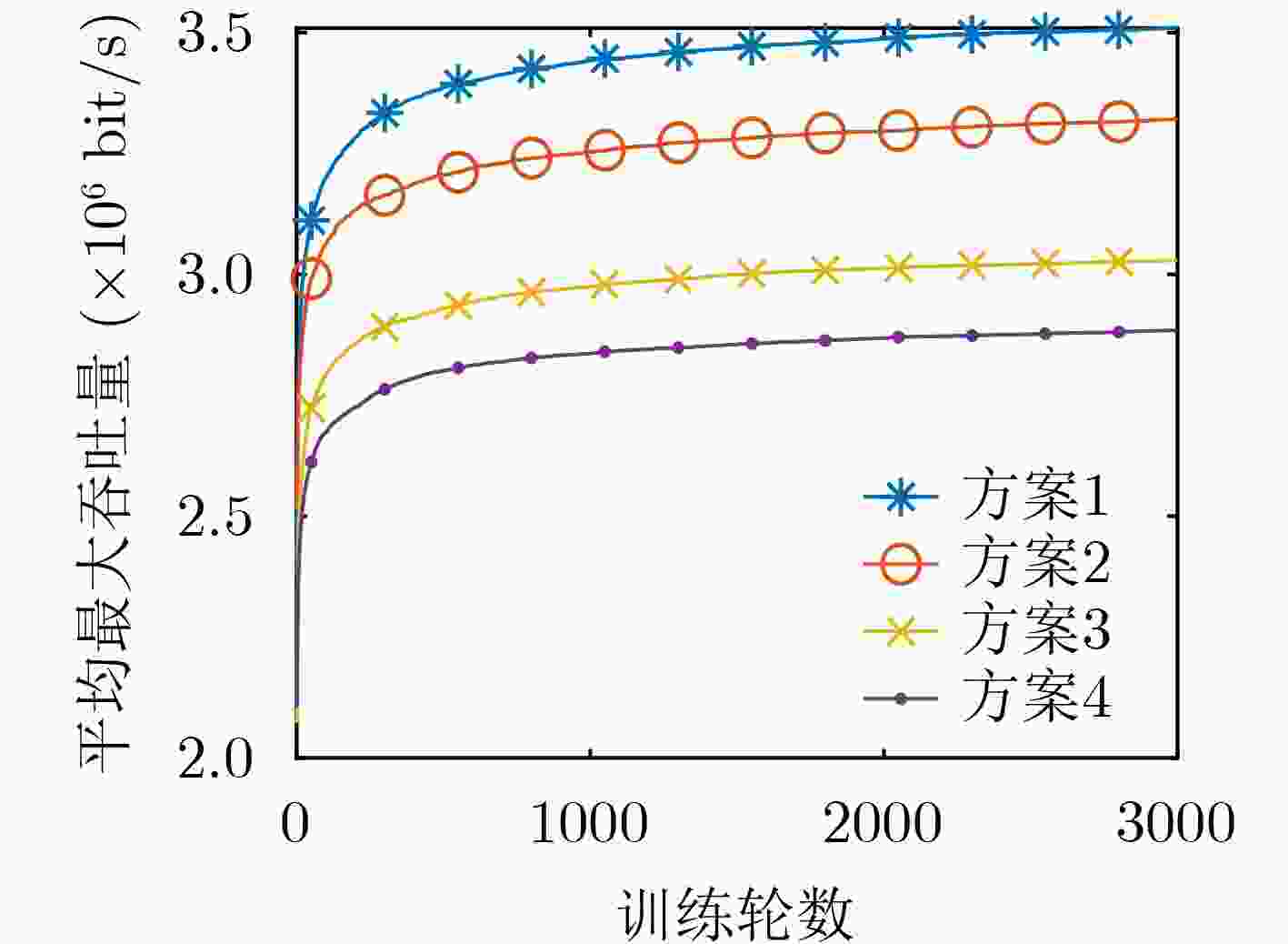

Abstract: To address dynamic resource allocation in multi-UAV (Unmanned Aerial Vehicle) air-ground networks equipped with massive MIMO, this paper proposes a K-armed bandit-based reinforcement learning algorithm that jointly optimizes the user selection and power allocation strategies of multiple UAVs, with the goal of maximizing system throughput. First, users are clustered according to their geographic location, and the cluster center nodes are used to plan the UAV flight paths. Second, without considering UAV-to-UAV communication links, the multi-UAV resource allocation problem is transformed into multiple mutually independent single-agent reinforcement learning problems. Finally, an episodic multi-agent multi-state K-armed bandit algorithm is proposed to jointly optimize user selection and power allocation. Defining the position index of the UAV at each time step as the state space allows each UAV to adapt dynamically to changes in its own position and in the channel. Simulation results show that the proposed scheme autonomously adjusts its resource allocation strategy as the environment state changes and improves total system throughput compared with existing schemes.


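Table 1 Cluster center selection algorithm based on k-means++

Initialization: number of clusters ${k_{\text{c}}}$.

(1) Randomly select the first cluster center from all users and denote it ${c_1}$;

(2) Compute the horizontal distance from every other user to ${c_1}$, denoted $d({{\mathbf{v}}_k},{c_1})$;

(3) Select the second cluster center ${c_2}$ from all users, where the $m$-th user is chosen with probability

$ \dfrac{{{d^2}({{\mathbf{v}}_m},{c_1})}}{{\displaystyle\sum\limits_{j = 1}^K {{d^2}({{\mathbf{v}}_j},{c_1})} }} $ (12)

(4) To select center $j$, perform the following:

(a) Compute the distance from each observation to every cluster center node, and assign each observation to its nearest cluster;

(b) For $m = 1,2, \cdots ,K$ and $p = 1,2, \cdots ,{k_{\text{c}}} - 1$, randomly select center $j$ from all users with probability

$ \dfrac{{{d^2}({{\mathbf{v}}_m},{c_p})}}{{\displaystyle\sum\limits_{\{ h;{{\mathbf{v}}_h} \in {C_p}\} } {{d^2}({{\mathbf{v}}_h},{c_p})} }} $ (13)

where ${C_p}$ is the set of all users closest to cluster center node ${c_p}$, and ${{\mathbf{v}}_m} \in {C_p}$. That is, each subsequent center is selected with a probability proportional to its squared distance from the nearest center already chosen;

(5) Repeat step (4) until ${k_{\text{c}}}$ centers have been selected.

Table 2 Episodic multi-agent multi-state K-armed bandit algorithm for user selection and power allocation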

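Initialization: exploration parameter $\varepsilon $, maximum number of training episodes ${N_{{\text{epi}}}}$, state-action value function $ Q_m^1(s,a) = 0 $, $\forall m \in \mathcal{M}$.

(1) For every UAV, set the initial state ${s_m}(0)$;

(2) ${N_{{\text{epi}}}} = {N_{{\text{epi}}}} - 1$;

(3) For each step of the episode, $t = 1,2, \cdots ,T$, each UAV independently performs the following:

(a) Select action ${a_m}(t)$ according to policy $ \pi _m^\varepsilon $;

(b) Execute action ${a_m}(t)$, obtain the immediate reward ${R_m}(t + 1)$, and move to state ${s_m}(t + 1)$;

(c) Update the state-action value function $ Q_m^{t + 1}(s,a) = Q_m^t(s,a) + \alpha (R_m^t - Q_m^t(s,a)) $;

(4) Repeat steps (1) to (3) until ${N_{{\text{epi}}}} = 0$.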
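The seeding procedure of Table 1 can be illustrated with a minimal Python sketch. It is not the authors' code. It uses the standard k-means++ $D^2$ sampling, in which the probability of picking a user is its squared distance to the nearest chosen center, normalized over all users; the per-cluster normalization in (13) is therefore simplified here. The function name `kmeanspp_centers`, the 2-D `positions` array and the `rng` argument are assumptions made for illustration.

```python
import numpy as np

def kmeanspp_centers(positions, k_c, rng=None):
    """Return indices of k_c users chosen as cluster centers (D^2 sampling).

    positions: (K, 2) array of horizontal user coordinates (assumed layout).
    """
    rng = np.random.default_rng(rng)
    positions = np.asarray(positions, dtype=float)
    num_users = positions.shape[0]
    if not 1 <= k_c <= num_users:
        raise ValueError("k_c must be between 1 and the number of users")

    # Step (1): the first center is drawn uniformly from all users.
    chosen = [int(rng.integers(num_users))]
    # Step (2): squared horizontal distance of every user to that center.
    d2 = np.sum((positions - positions[chosen[0]]) ** 2, axis=1)

    while len(chosen) < k_c:
        total = d2.sum()
        if total == 0.0:
            raise ValueError("fewer distinct positions than requested centers")
        # Steps (3)-(4): sample the next center with probability
        # proportional to the squared distance to the nearest chosen center.
        nxt = int(rng.choice(num_users, p=d2 / total))
        chosen.append(nxt)
        # Keep, for every user, the distance to its nearest center so far.
        d2 = np.minimum(d2, np.sum((positions - positions[nxt]) ** 2, axis=1))
    return np.array(chosen)

# Usage with made-up coordinates: three loose groups of ground users.
users = np.array([[0, 0], [0, 1], [10, 10], [10, 11], [20, 0], [21, 0]], dtype=float)
center_idx = kmeanspp_centers(users, k_c=3, rng=0)
print(users[center_idx])
```

Because a user that is already a center has zero distance to itself, it gets zero probability and cannot be selected twice.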
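The training loop of Table 2 can be sketched in the same hedged way. The sketch assumes that $ \pi _m^\varepsilon $ is an $\varepsilon$-greedy policy, that the state is simply the position index visited at step $t$ (one state per time step), and that the reward comes from a hypothetical `toy_reward` function standing in for the throughput obtained from a user-selection and power-allocation action. All names (`train_bandits`, `toy_reward`) and parameter values are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def train_bandits(reward_fn, num_uavs, num_states, num_actions,
                  num_episodes=2000, epsilon=0.1, alpha=0.1, seed=0):
    """Episodic, independent epsilon-greedy bandits, one Q-table per UAV."""
    rng = np.random.default_rng(seed)
    # Initialization: Q_m(s, a) = 0 for every UAV m.
    q = np.zeros((num_uavs, num_states, num_actions))
    for _ in range(num_episodes):              # steps (2) and (4): episode loop
        for s in range(num_states):            # step (3): state = position index
            for m in range(num_uavs):          # each UAV acts independently
                # (a) epsilon-greedy action selection
                if rng.random() < epsilon:
                    a = int(rng.integers(num_actions))
                else:
                    a = int(np.argmax(q[m, s]))
                # (b) execute the action and observe the immediate reward
                r = reward_fn(m, s, a, rng)
                # (c) constant step-size update Q <- Q + alpha * (R - Q)
                q[m, s, a] += alpha * (r - q[m, s, a])
    return q

def toy_reward(m, s, a, rng):
    """Hypothetical reward: the best action depends on the UAV and its state."""
    best = (s + m) % 4
    return (1.0 if a == best else 0.2) + 0.05 * rng.standard_normal()

q_values = train_bandits(toy_reward, num_uavs=2, num_states=3, num_actions=4)
print(np.argmax(q_values, axis=2))  # greedy action per UAV and position index
```

Keeping a separate row of values for each position index is what lets the learned action change as the UAV moves, while the absence of any shared quantity between the per-UAV tables reflects the assumption that UAVs do not exchange information.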

