Siamese Network Visual Tracking Based on Asymmetric Convolution


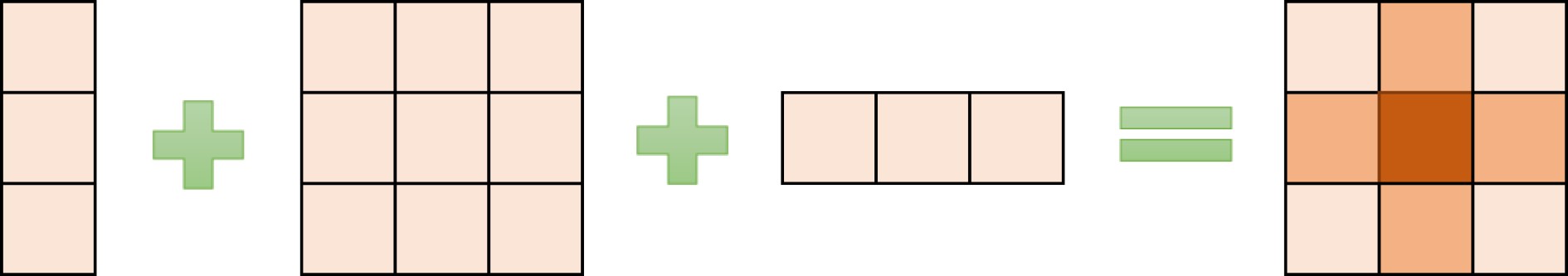

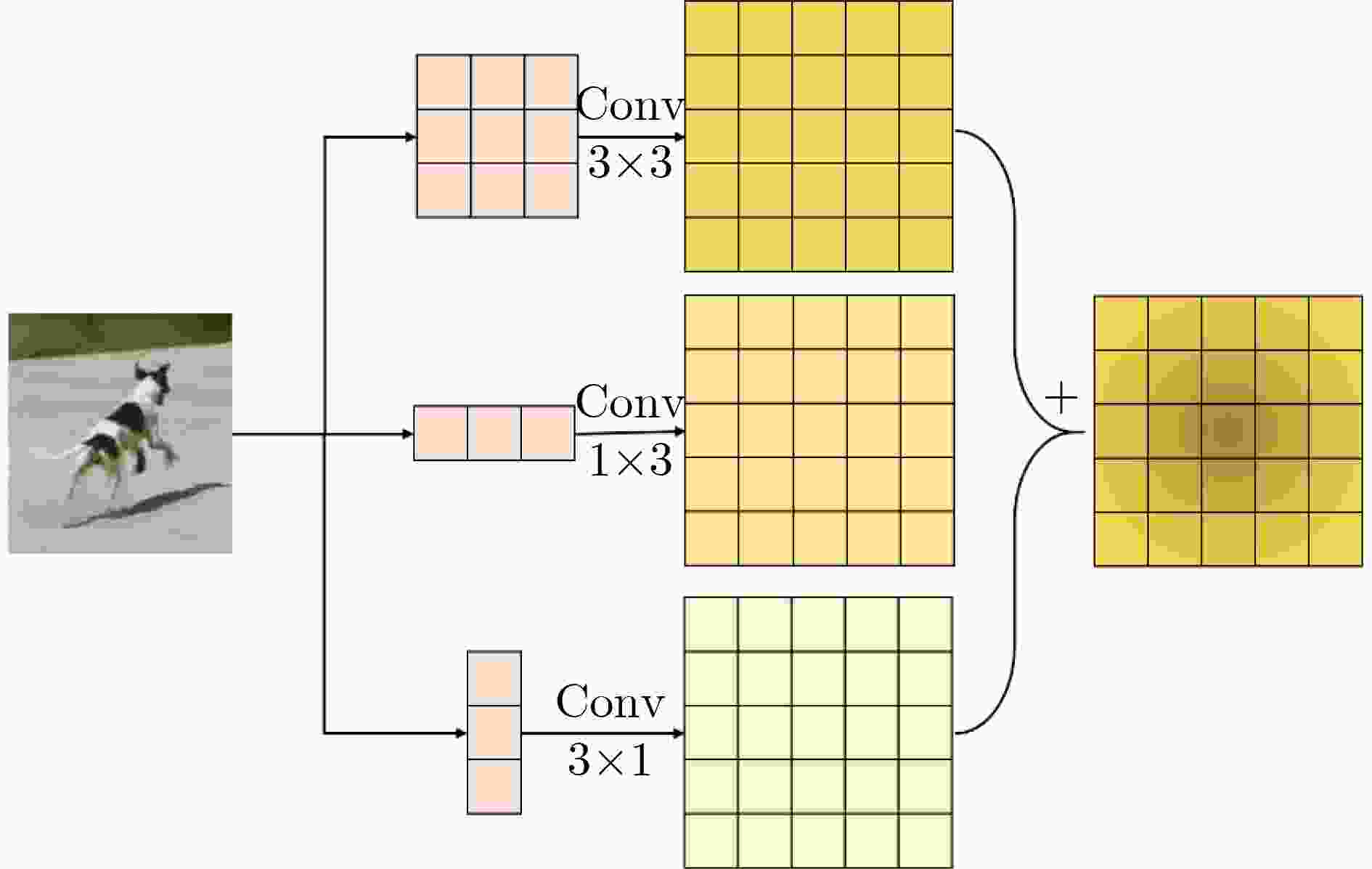

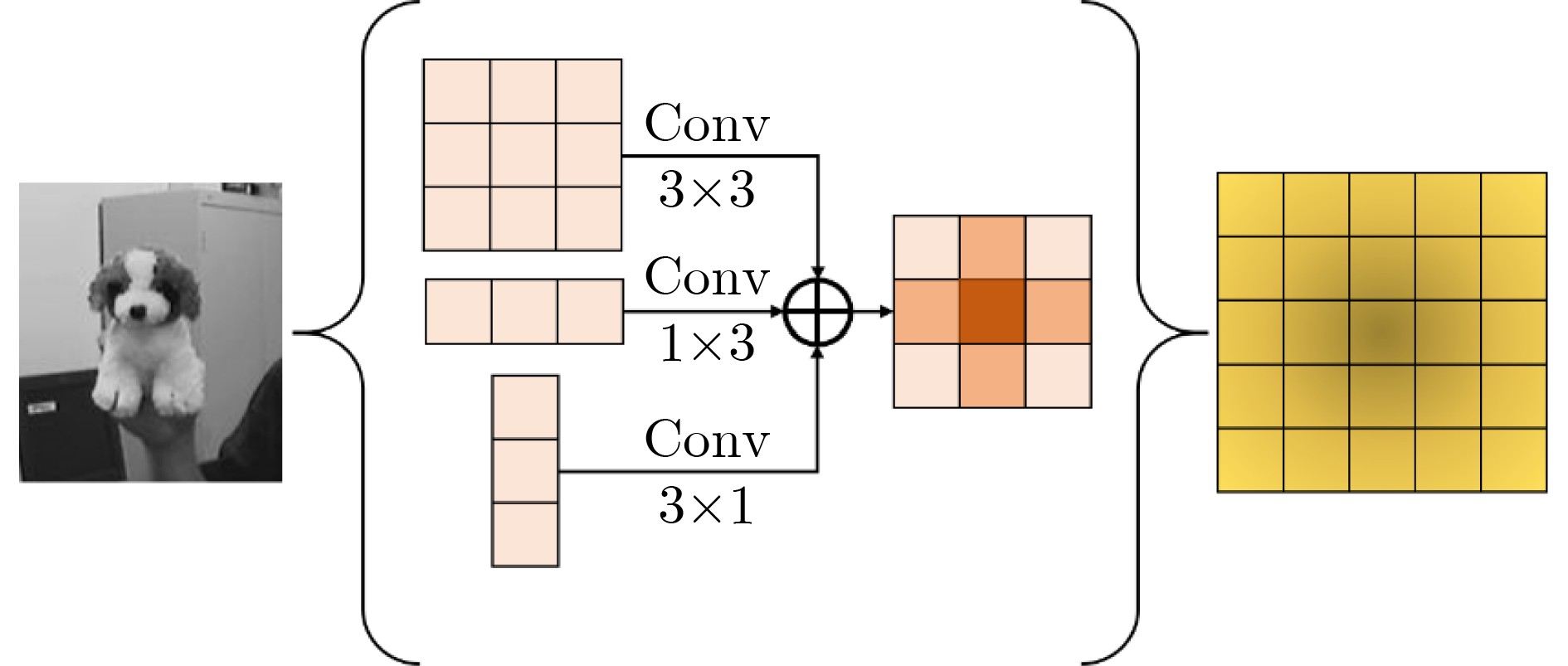

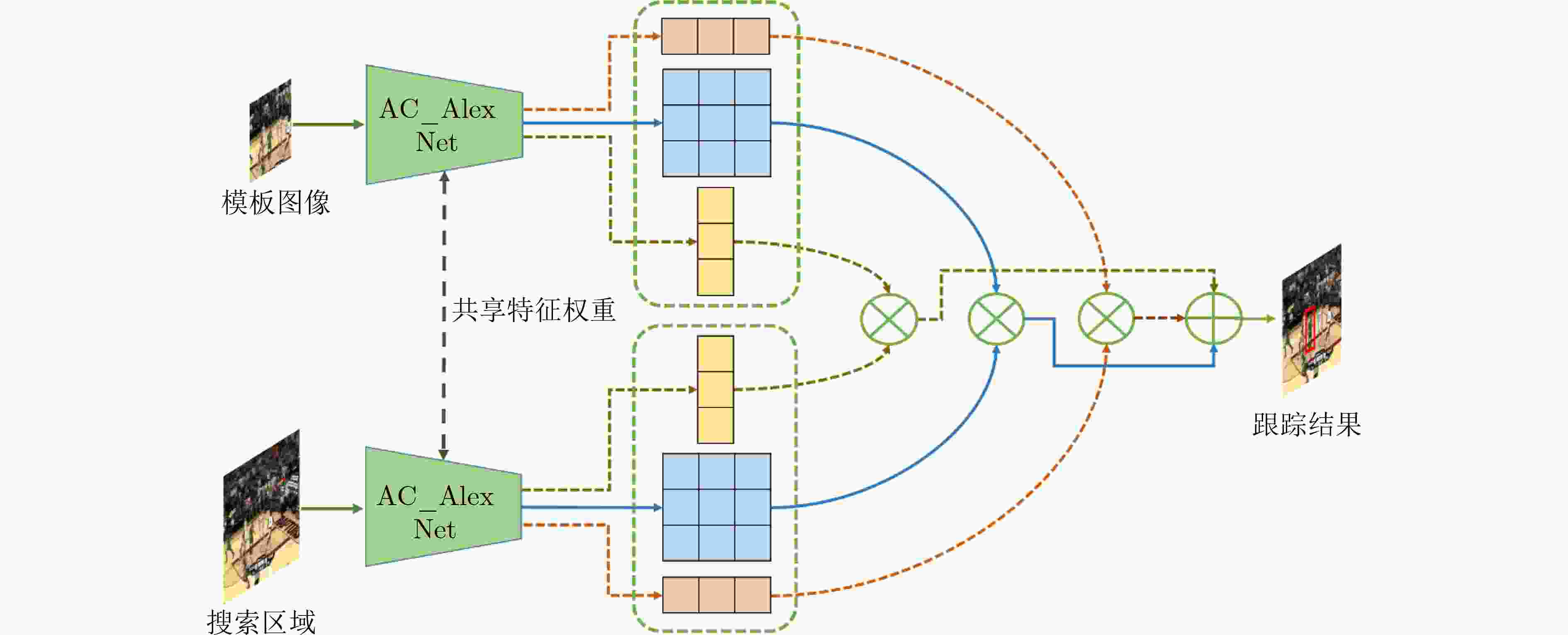

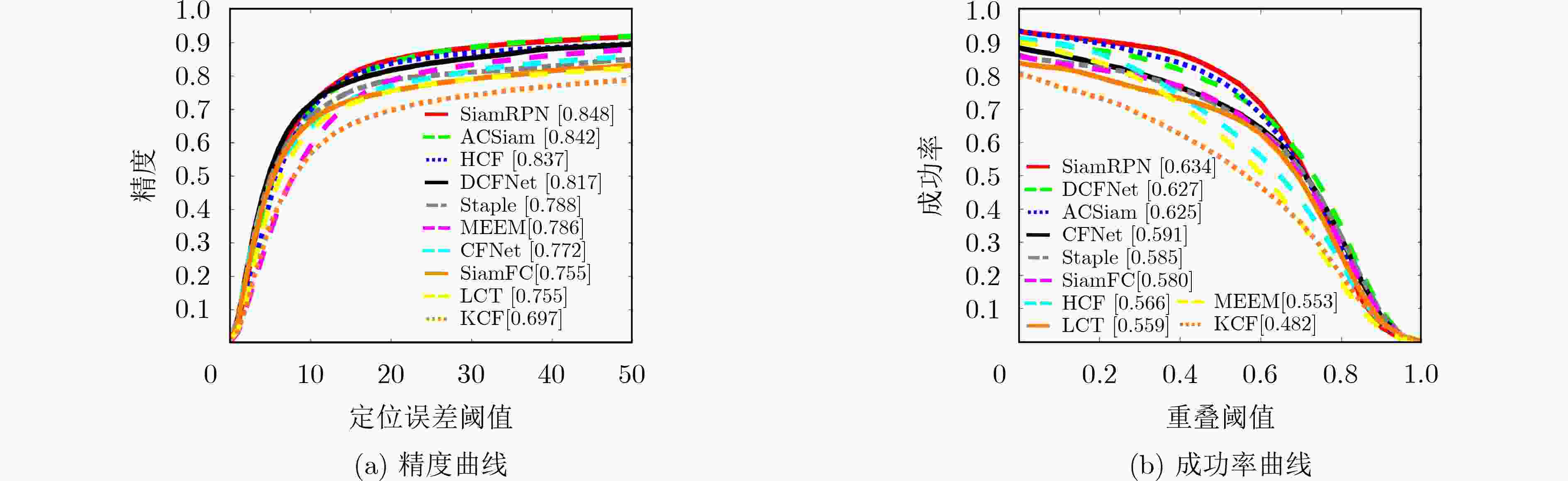

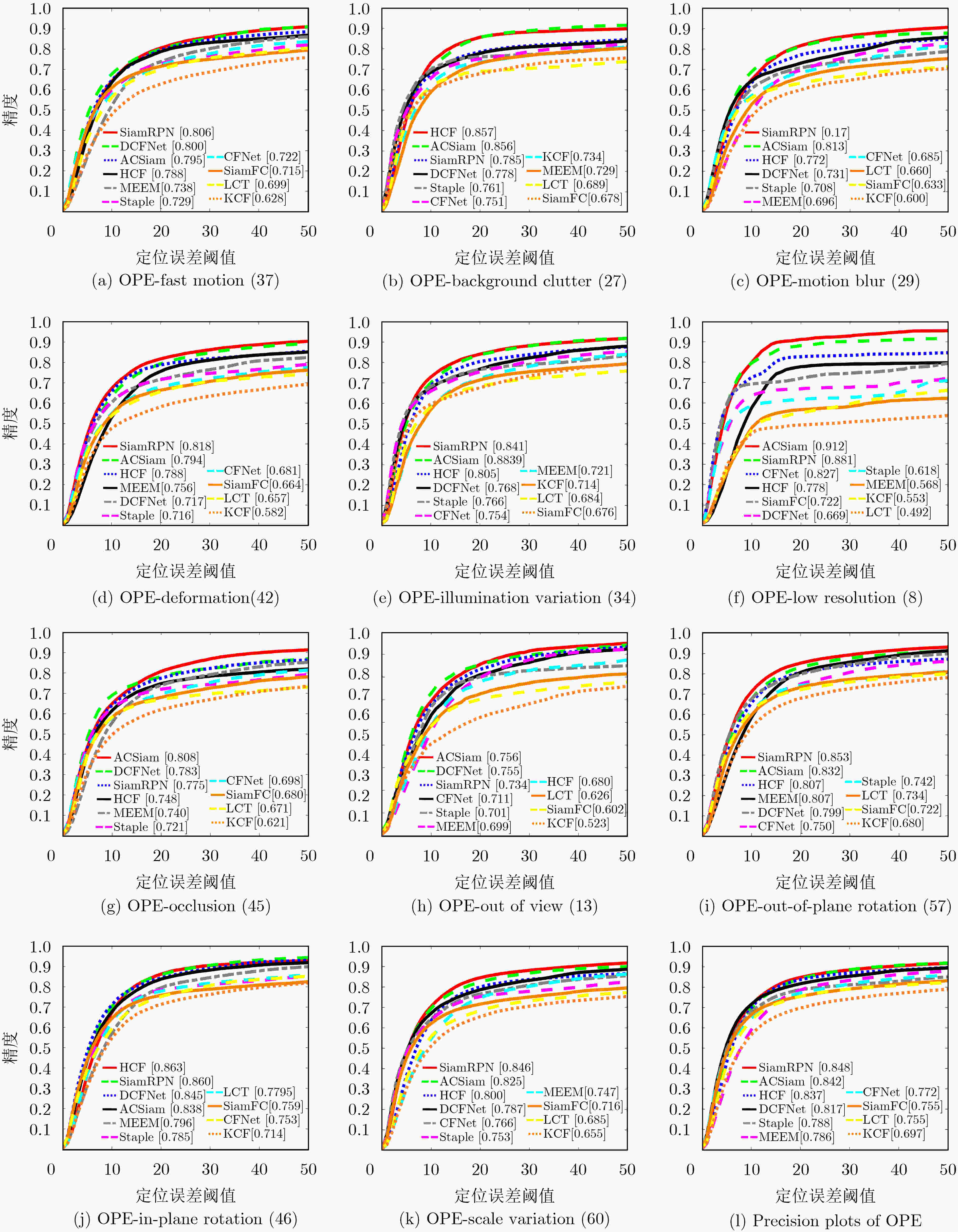

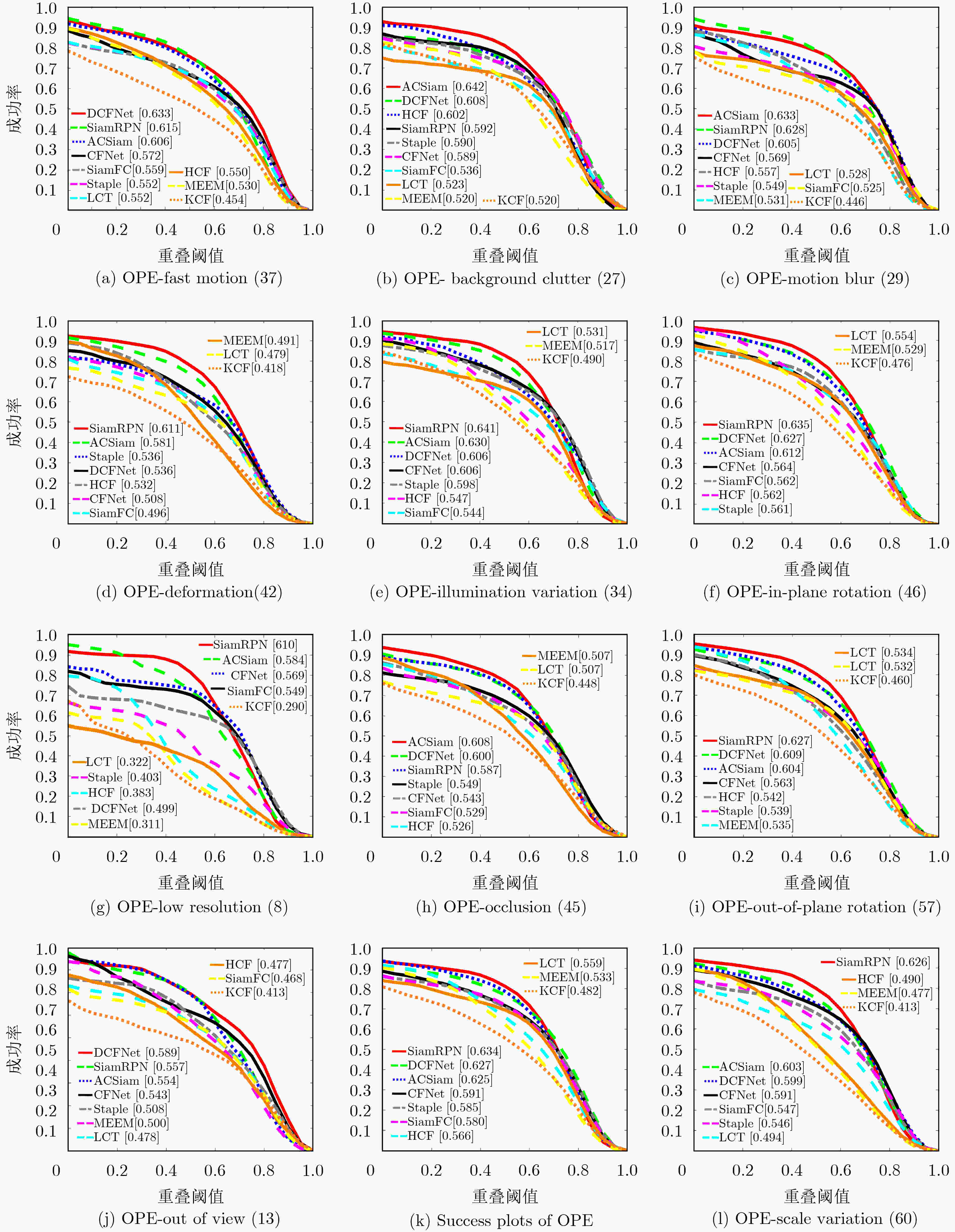

Abstract: To address the insufficient feature representation of Siamese networks for targets undergoing rotation, this paper proposes a Siamese network tracking algorithm based on asymmetric convolution. First, a group of asymmetric convolution kernels is constructed by exploiting the additivity of convolution kernels; the group can be applied to existing network structures with any kernel size. Then, within the Siamese tracking framework, the convolution modules of AlexNet are replaced with this group, and the network is designed separately for the training and tracking stages. Finally, three asymmetric convolution kernels are added in parallel at the end of the network. Each branch yields a response map through the correlation operation, the three response maps are fused by weighting, and the location of the maximum of the fused map is taken as the target position. Experimental results on the OTB2015 dataset show that, compared with SiamFC, accuracy improves by 8.7% and success rate by 4.5%.
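The final step described in the abstract (weighted fusion of three response maps, then taking the maximum) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names `fuse_responses` and `locate_peak`, the equal weights, the synthetic response maps, and the 17×17 map size (what a 6×6 template feature correlated with a 22×22 search feature would give) are all assumptions made for the example.

```python
import numpy as np

def fuse_responses(responses, weights):
    """Weighted sum of same-sized response maps; weights are normalized to sum to 1."""
    stacked = np.stack(responses).astype(float)      # shape (n, H, W)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    return np.tensordot(w, stacked, axes=1)          # shape (H, W)

def locate_peak(response):
    """Return (row, col) of the maximum value of a 2-D response map."""
    return np.unravel_index(np.argmax(response), response.shape)

# Synthetic response maps standing in for the three branch outputs (assumed data).
rng = np.random.default_rng(0)
maps = [rng.random((17, 17)) * 0.1 for _ in range(3)]
maps[0][8, 9] += 1.0   # two branches agree on the target position
maps[1][8, 9] += 1.0
maps[2][3, 3] += 1.0   # one branch is distracted elsewhere

fused = fuse_responses(maps, weights=[1.0, 1.0, 1.0])  # equal weights are an assumption
peak = locate_peak(fused)
print("fused peak at (row, col):", tuple(int(i) for i in peak))
```

In this toy case the fused peak follows the two agreeing branches, which is the intuition behind fusing several responses rather than trusting a single one. How the weights are actually chosen is not stated in the abstract.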


Key words:

- Visual tracking
- Siamese network
- Asymmetric convolution
- Rotation


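Table 1 Network structure of ACSiam in the training stage

| Layer | Kernel | Stride | Channels | Template size | Search size |
| --- | --- | --- | --- | --- | --- |
| Input | | | 3 | 127×127 | 255×255 |
| Conv1 | 11×11, 1×11, 11×1 | 2 | 96 | 59×59 | 123×123 |
| Pool1 | 3×3 | 2 | 96 | 29×29 | 61×61 |
| Conv2 | 5×5, 1×5, 5×1 | 1 | 256 | 25×25 | 57×57 |
| Pool2 | 3×3 | 2 | 256 | 12×12 | 28×28 |
| Conv3 | 3×3, 1×3, 3×1 | 1 | 192 | 10×10 | 26×26 |
| Conv4 | 3×3, 1×3, 3×1 | 1 | 192 | 8×8 | 24×24 |
| Conv5 | 3×3, 1×3, 3×1, multi-branch output | 1 | 128 | 6×6 | 22×22 |

Table 2 Network structure of ACSiam in the tracking stage

| Layer | Kernel | Stride | Channels | Template size | Search size |
| --- | --- | --- | --- | --- | --- |
| Input | | | 3 | 127×127 | 255×255 |
| Conv1 | 11×11 | 2 | 96 | 59×59 | 123×123 |
| Pool1 | 3×3 | 2 | 96 | 29×29 | 61×61 |
| Conv2 | 5×5 | 1 | 256 | 25×25 | 57×57 |
| Pool2 | 3×3 | 2 | 256 | 12×12 | 28×28 |
| Conv3 | 3×3 | 1 | 192 | 10×10 | 26×26 |
| Conv4 | 3×3 | 1 | 192 | 8×8 | 24×24 |
| Conv5 | 3×3, 1×3, 3×1, multi-branch output | 1 | 128 | 6×6 | 22×22 |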
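The two tables differ in that Conv1 to Conv4 use a square kernel plus a 1×N and an N×1 kernel during training but a single square kernel during tracking. The kernel additivity mentioned in the abstract is what makes this collapse possible. The sketch below checks that property numerically for a 3×3 group. It rests on several assumptions: a single channel, no batch normalization or bias, unpadded ("valid") correlation, and aligning the 1×3 and 3×1 branches with the 3×3 output by cropping the input. The helper names `correlate_valid` and `fuse_kernels` are hypothetical and not taken from the paper.

```python
import numpy as np

def correlate_valid(x, k):
    """2-D 'valid' cross-correlation of a single-channel image x with kernel k."""
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(kh):
        for j in range(kw):
            out += k[i, j] * x[i:i + oh, j:j + ow]
    return out

def fuse_kernels(k_square, k_row, k_col):
    """Add a 1xN kernel onto the middle row and an Nx1 kernel onto the middle column."""
    c = k_square.shape[0] // 2
    fused = k_square.copy()
    fused[c, :] += k_row[0, :]
    fused[:, c] += k_col[:, 0]
    return fused

rng = np.random.default_rng(0)
x = rng.standard_normal((10, 10))
k33 = rng.standard_normal((3, 3))
k13 = rng.standard_normal((1, 3))
k31 = rng.standard_normal((3, 1))

# Training-style computation: three parallel branches, outputs summed.
# The input is cropped by one row/column so all three outputs are 8x8 and aligned.
three_branch = (correlate_valid(x, k33)
                + correlate_valid(x[1:-1, :], k13)
                + correlate_valid(x[:, 1:-1], k31))

# Tracking-style computation: one pass with the fused 3x3 kernel.
single = correlate_valid(x, fuse_kernels(k33, k13, k31))

max_abs_diff = float(np.max(np.abs(three_branch - single)))
print("max |three_branch - single| =", max_abs_diff)
```

Because the two computations agree up to floating-point error, the extra branches add no inference cost once fused. Conv5 keeps its three kernels in both tables, consistent with the multi-branch output that produces the three response maps.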

