Control System of Robotic Arm Based on Steady-State Visual Evoked Potentials in Augmented Reality Scenarios


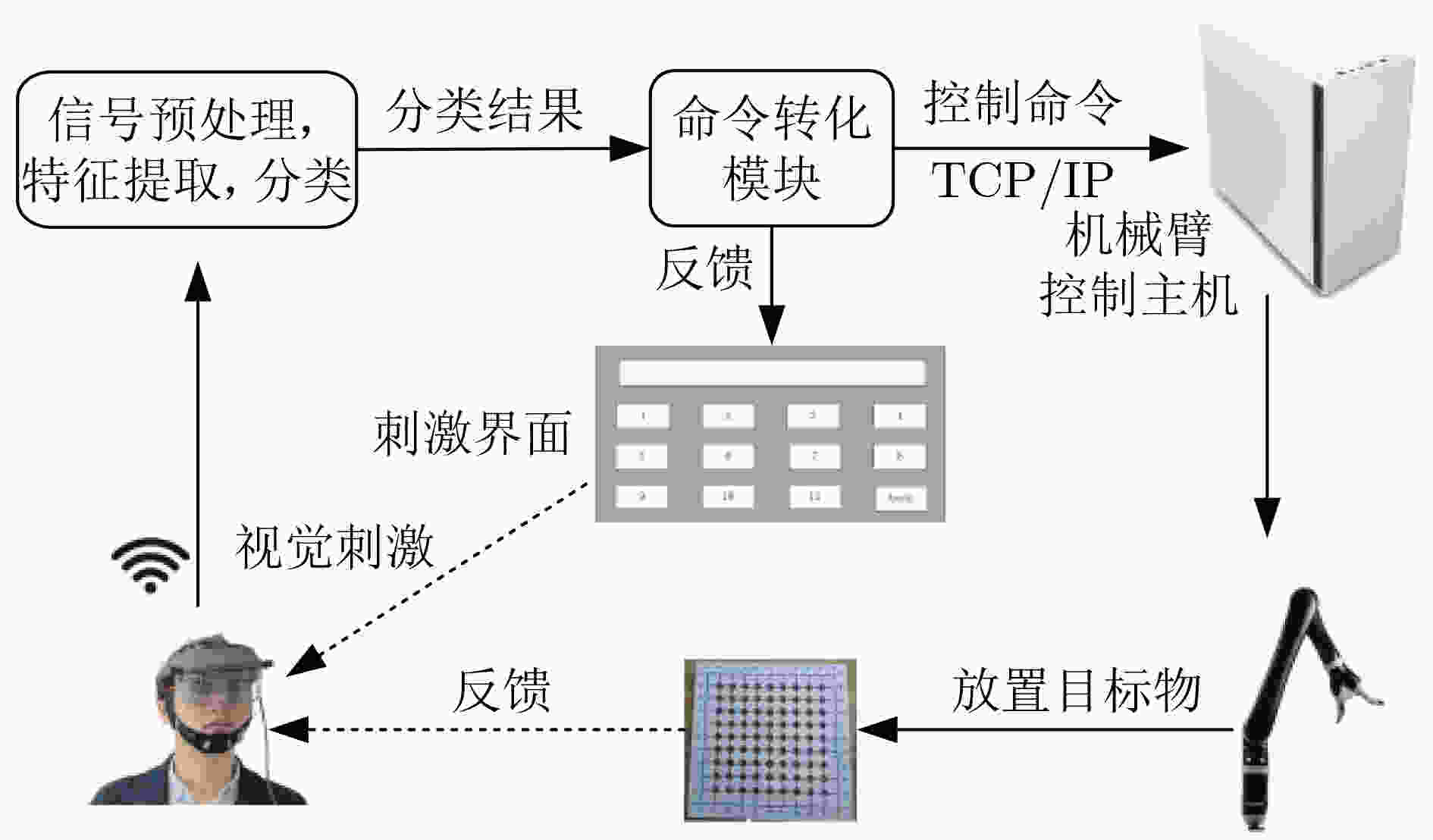

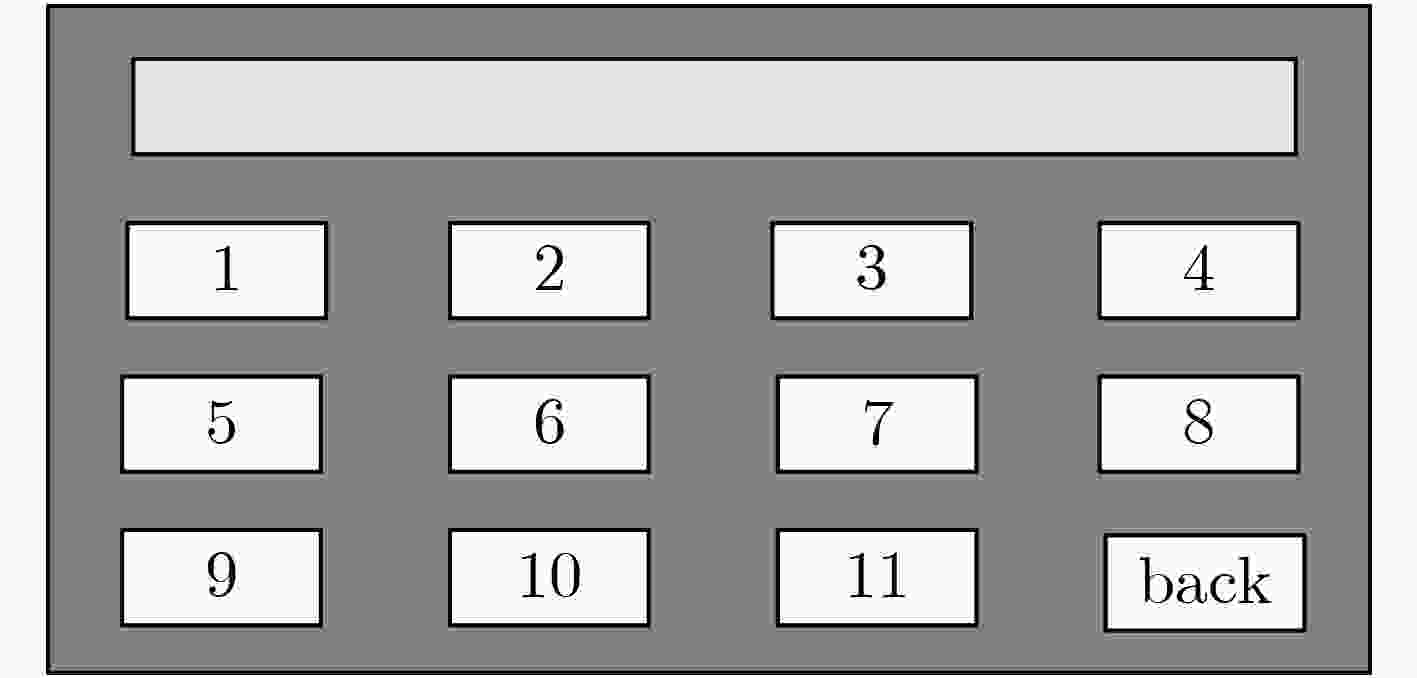

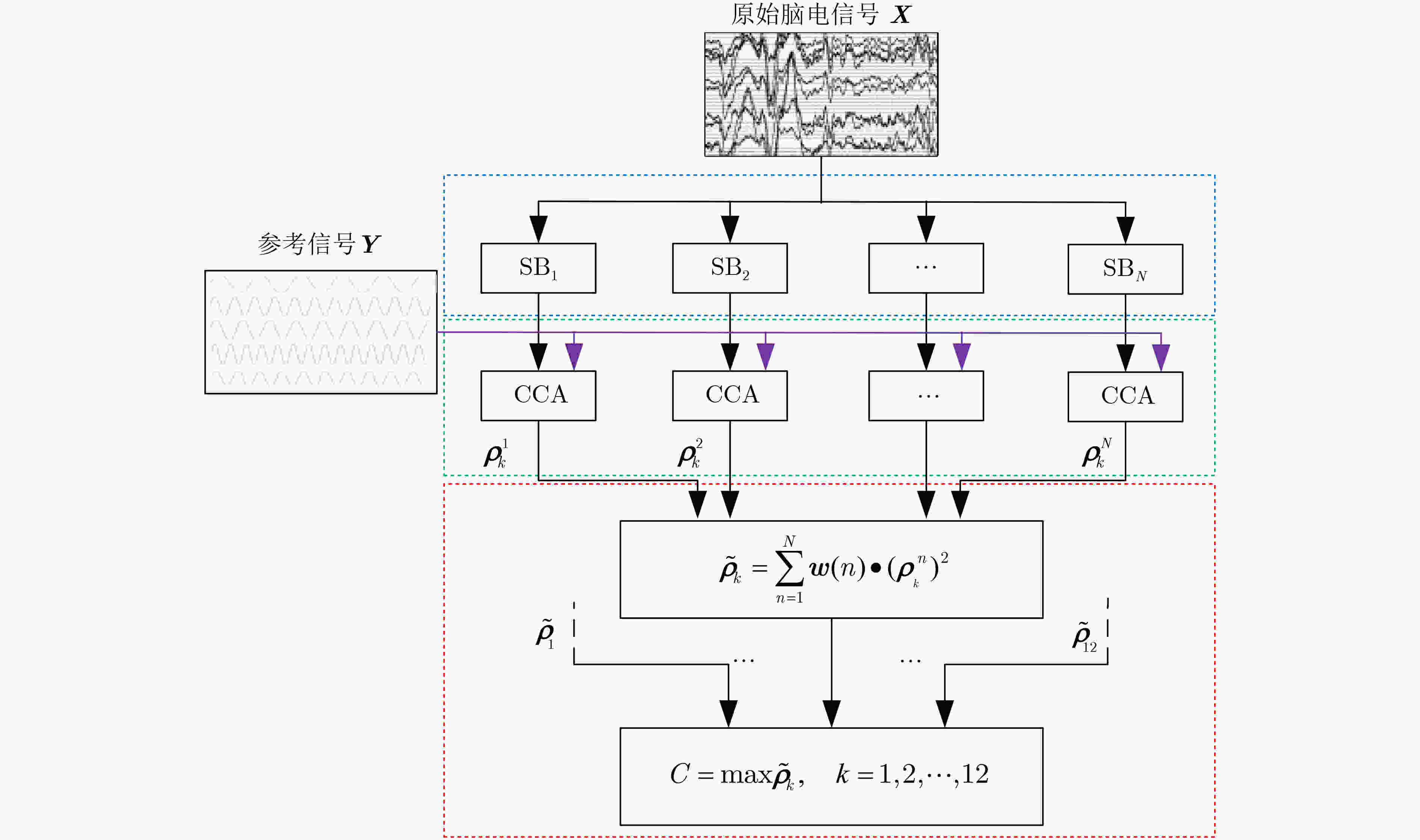

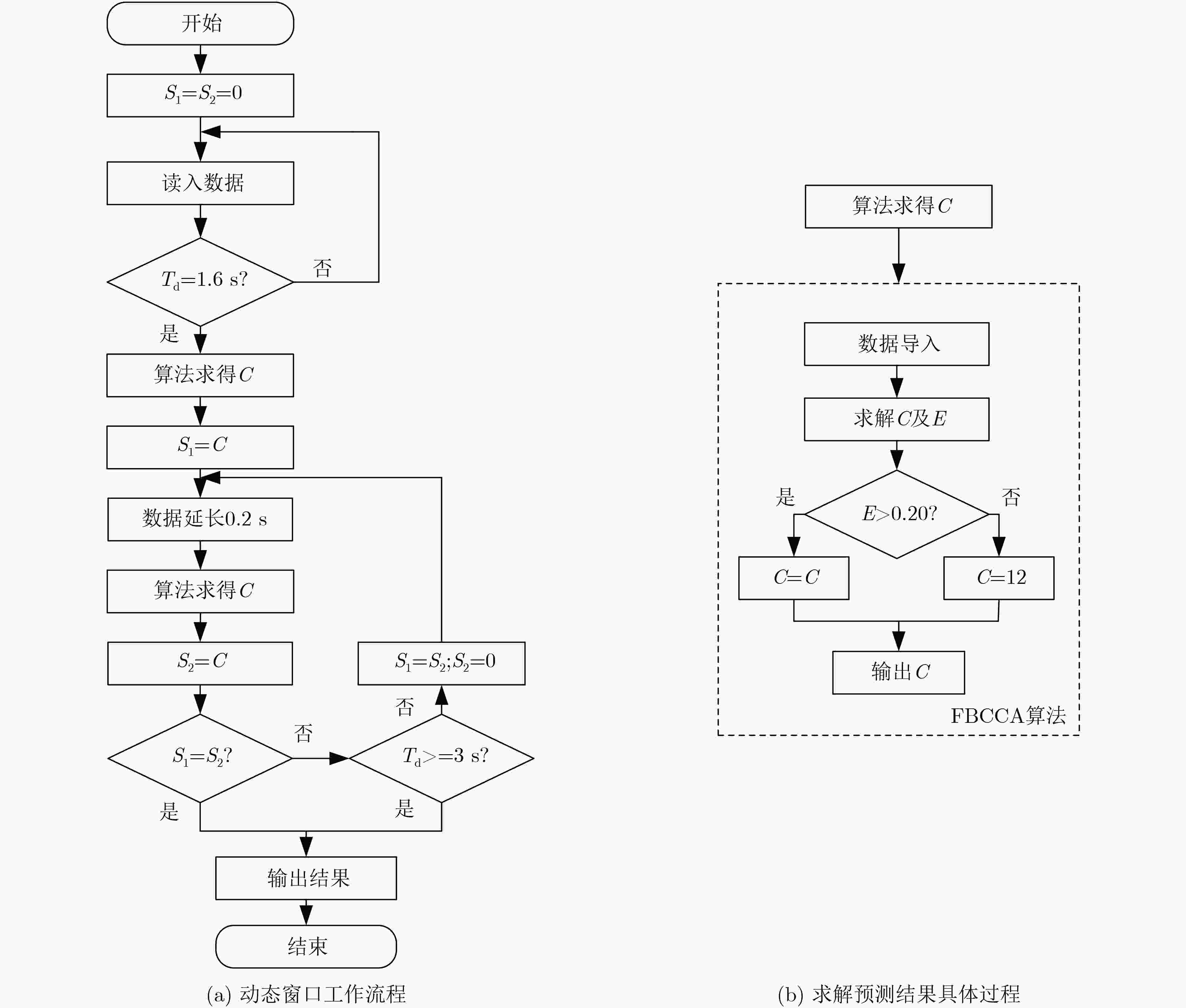

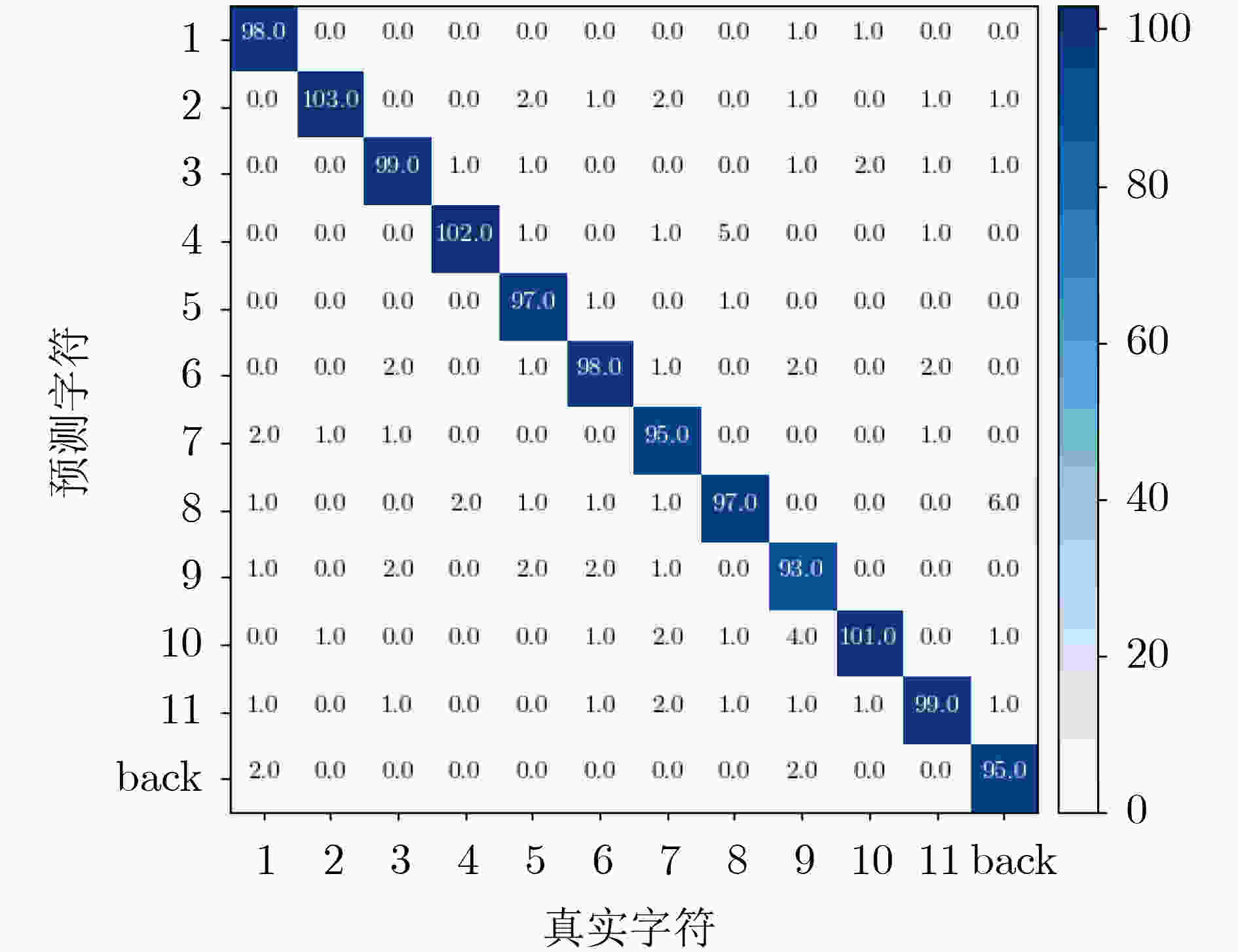

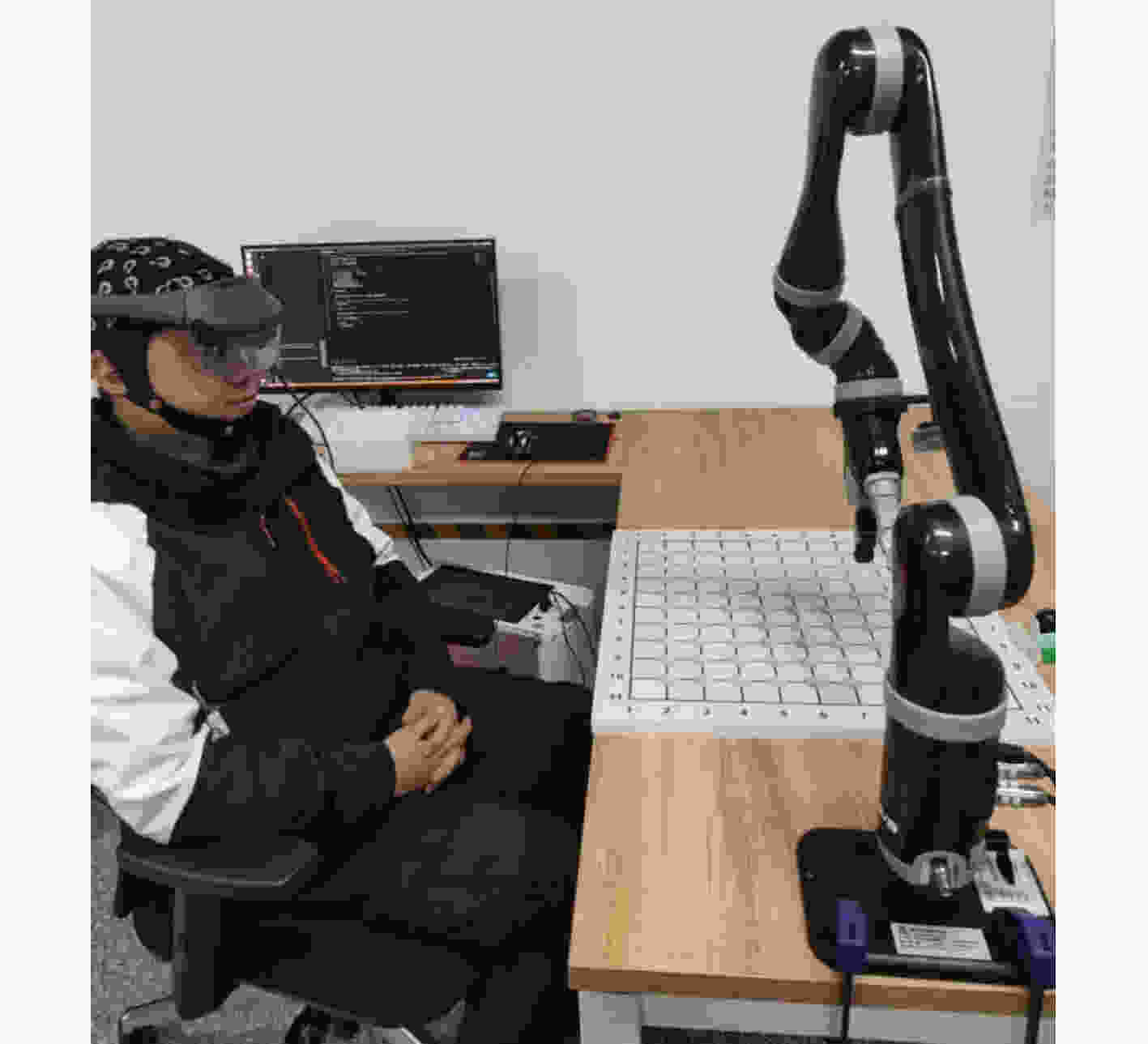

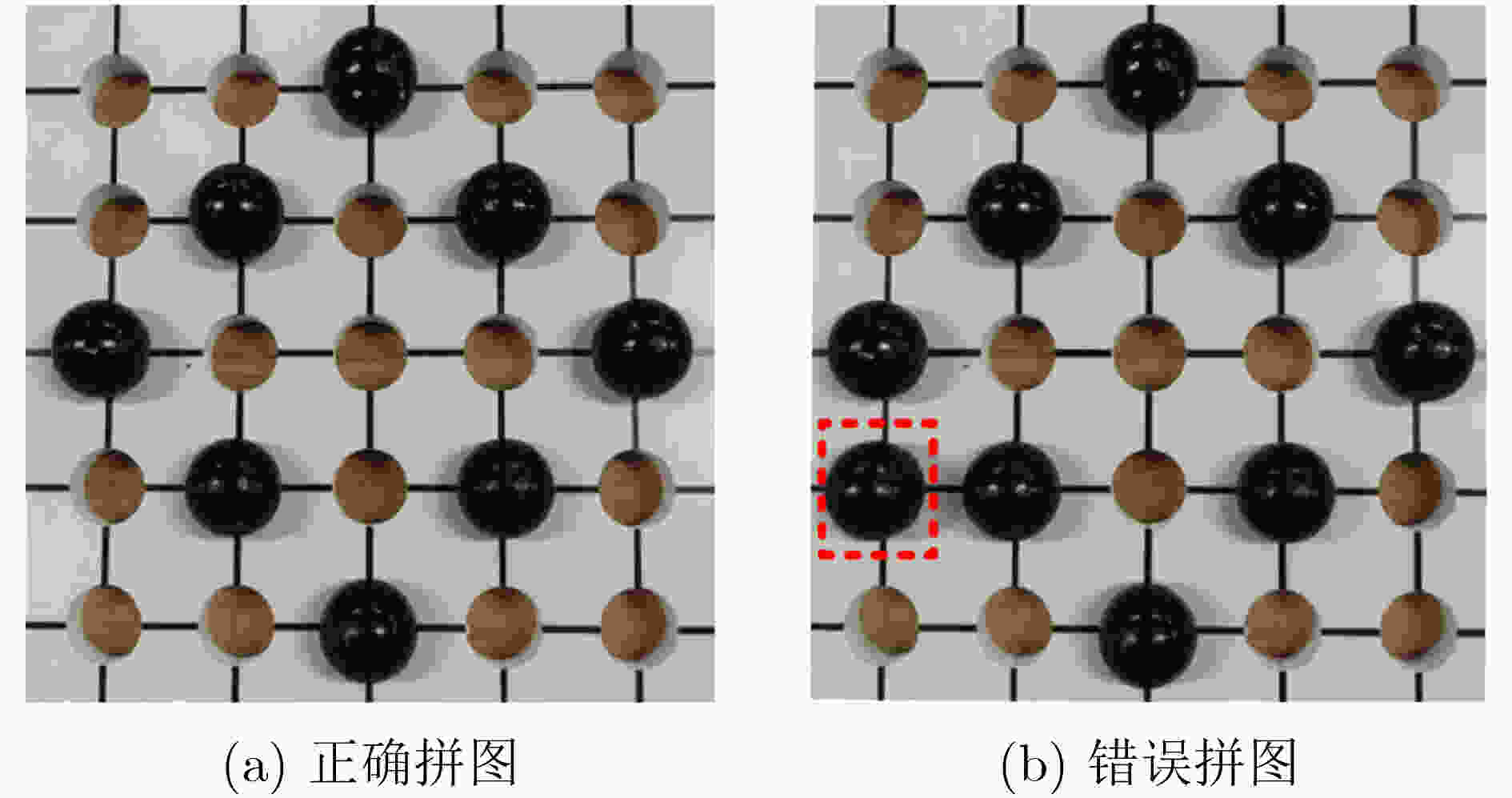

Abstract: Brain-controlled robotic arms have shown broad application prospects in many fields, such as medical rehabilitation, but they still suffer from poor flexibility and user fatigue. To address these shortcomings, an asynchronous robotic-arm control system based on Steady-State Visual Evoked Potentials (SSVEP) in an Augmented Reality (AR) environment is designed. Filter Bank Canonical Correlation Analysis (FBCCA) is used to identify 12 targets. A dynamic window based on a voting strategy and difference prediction is proposed to adjust the stimulus duration adaptively. The robotic arm is controlled asynchronously through a pseudo-key to complete a jigsaw puzzle task. The experimental results show that the dynamic window automatically adjusts the stimulus duration according to the subject's state. The average offline accuracy is (93.11±5.85)%, and the average offline information transfer rate (ITR) is (59.69±8.11) bit·min⁻¹. Online, the average selection time for a single command is 2.18 s, which effectively reduces the subjects' visual fatigue. Every subject completed the puzzle task quickly, demonstrating the feasibility of this human-computer interaction method.
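The abstract says FBCCA identifies the 12 targets but does not give the filter-bank settings here. The following is a minimal sketch, assuming a commonly used FBCCA configuration rather than this paper's own: five Chebyshev type I sub-bands whose lower edges are 8, 16, 24, 32 and 40 Hz with a shared 88 Hz upper edge, sub-band weights w(n) = n^(-1.25) + 0.25, and sine-cosine references with five harmonics. Each target's score is the weighted sum of squared maximum canonical correlations across sub-bands, and the target with the largest score wins. The stimulus frequencies (8.0 to 13.5 Hz in 0.5 Hz steps), the sampling rate, and all function names are hypothetical.

```python
import numpy as np
from scipy.signal import cheby1, sosfiltfilt


def max_canonical_corr(x, y):
    """Largest canonical correlation between x (samples x p) and y (samples x q)."""
    qx, _ = np.linalg.qr(x - x.mean(axis=0))
    qy, _ = np.linalg.qr(y - y.mean(axis=0))
    # The singular values of Qx^T Qy are the canonical correlations.
    return float(np.linalg.svd(qx.T @ qy, compute_uv=False)[0])


def sine_cosine_refs(freq, fs, n_samples, n_harmonics=5):
    """Reference matrix of sin/cos at freq and its harmonics (samples x 2*n_harmonics)."""
    t = np.arange(n_samples) / fs
    cols = []
    for h in range(1, n_harmonics + 1):
        cols += [np.sin(2 * np.pi * h * freq * t), np.cos(2 * np.pi * h * freq * t)]
    return np.column_stack(cols)


def fbcca_classify(eeg, fs, freqs, n_bands=5, a=1.25, b=0.25, n_harmonics=5):
    """Return (index of detected target, FBCCA score per target).

    eeg: array of shape (samples, channels). Assumed sub-band n spans [8n, 88] Hz.
    """
    if fs <= 2 * 88:
        raise ValueError("sampling rate must exceed 176 Hz for an 88 Hz upper edge")
    n_samples = eeg.shape[0]
    weights = np.arange(1, n_bands + 1, dtype=float) ** (-a) + b
    refs = [sine_cosine_refs(f, fs, n_samples, n_harmonics) for f in freqs]
    scores = np.zeros(len(freqs))
    for n in range(1, n_bands + 1):
        sos = cheby1(4, 0.5, [8.0 * n, 88.0], btype="bandpass", fs=fs, output="sos")
        sub = sosfiltfilt(sos, eeg, axis=0)  # zero-phase sub-band signal
        for k, ref in enumerate(refs):
            # Weighted squared correlation, summed over sub-bands
            scores[k] += weights[n - 1] * max_canonical_corr(sub, ref) ** 2
    return int(np.argmax(scores)), scores
```

In use, `eeg` would be one epoch of occipital channels and `freqs` the 12 flicker frequencies shown in the AR headset; the returned index maps to the corresponding robotic-arm command.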

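The abstract names the dynamic window only by its ingredients (a voting strategy and difference prediction); the actual update rule is not given in this section. The sketch below is a hypothetical stand-in, not the authors' algorithm: it grows the analysis window in fixed steps, asks a classifier for per-target scores at each length, and stops once the same target has won `n_votes` consecutive times and leads the runner-up by at least `margin`; otherwise it forces a decision at `max_len`. The top-two score gap is a simple substitute for the paper's difference-prediction step, and `n_votes`, `margin`, and the step sizes are illustrative values.

```python
from collections import deque
from typing import Callable, Sequence, Tuple

import numpy as np


def dynamic_window_decide(
    score_fn: Callable[[float], Sequence[float]],
    start: float,
    step: float,
    max_len: float,
    n_votes: int = 3,
    margin: float = 0.05,
) -> Tuple[int, float]:
    """Grow the window until the decision is stable; return (target, window length in s).

    Hypothetical rule: stop when the last `n_votes` window lengths all picked the
    same target AND that target's score leads the runner-up by at least `margin`.
    """
    if max_len < start or step <= 0:
        raise ValueError("need step > 0 and max_len >= start")
    n_steps = int(round((max_len - start) / step))
    history = deque(maxlen=n_votes)  # most recent winning targets (the "votes")
    for i in range(n_steps + 1):
        length = start + i * step  # recomputed each time to avoid float drift
        scores = np.asarray(score_fn(length), dtype=float)
        order = np.argsort(scores)[::-1]
        best, gap = int(order[0]), float(scores[order[0]] - scores[order[1]])
        history.append(best)
        if len(history) == n_votes and len(set(history)) == 1 and gap >= margin:
            return best, length
    return best, length  # no early stop: forced decision at the longest window
```

`score_fn` could wrap any per-target classifier evaluated on the first `length` seconds of the epoch; a clear, consistent response then ends stimulation early, while an ambiguous one runs to `max_len`.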

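Table 1 Results of offline experiment 1

| Subject | Classification accuracy (%) | ITR (bit·min⁻¹) |
|---|---|---|
| S1 | 99.44 | 70.31 |
| S2* | 94.17 | 61.25 |
| S3 | 90.83 | 56.55 |
| S4* | 86.67 | 51.14 |
| S5* | 93.33 | 60.02 |
| S6 | 100.00 | 71.70 |
| S7 | 91.67 | 57.66 |
| S8* | 76.39 | 40.22 |
| S9* | 98.33 | 71.70 |
| S10 | 90.00 | 55.40 |
| Mean±SD | 92.08±7.02 | 59.59±9.90 |

(*: subject taking part in a BCI experiment for the first time; Mean: mean value; SD: standard deviation)

Table 2 Results of offline experiment 2

| Subject | Classification accuracy (%) | ITR (bit·min⁻¹) |
|---|---|---|
| S1 | 99.44 | 52.74 |
| S2* | 95.00 | 46.88 |
| S3 | 97.50 | 49.95 |
| S4* | 92.50 | 44.12 |
| S5* | 95.83 | 47.86 |
| S6 | 100.00 | 53.77 |
| S7 | 96.21 | 48.32 |
| S8* | 86.81 | 38.49 |
| S9* | 99.17 | 71.70 |
| S10 | 91.67 | 43.24 |
| Mean±SD | 95.41±4.13 | 47.87±4.92 |

Table 3 Results of offline experiment 3

| Subject | Classification accuracy (%) | ITR (bit·min⁻¹) | Stimulation time (s) |
|---|---|---|---|
| S1 | 99.44 | 70.24 | 2.01 |
| S2* | 94.17 | 61.08 | 2.01 |
| S3 | 92.50 | 58.24 | 2.03 |
| S4* | 88.33 | 53.05 | 2.01 |
| S5* | 94.17 | 61.04 | 2.01 |
| S6 | 100.00 | 67.15 | 2.01 |
| S7 | 91.67 | 57.21 | 2.02 |
| S8* | 80.83 | 43.38 | 2.07 |
| S9* | 99.17 | 69.28 | 2.02 |
| S10 | 90.83 | 56.24 | 2.02 |
| Mean±SD | 93.11±5.85 | 59.69±8.11 | 2.02±0.02 |

Table 4 Results of the control-command selection experiment

| Subject | Window type | Completion time (s) | Command selection time (s) | Total commands | Erroneous commands | Recognition accuracy (%) | Final execution errors |
|---|---|---|---|---|---|---|---|
| S1 | Fixed | 745 | 3 | 36 | 4 | 88.89 | 1 |
| | Dynamic | 689 | 2.17 | 35 | 3 | 91.43 | 1 |
| S2* | Fixed | 725 | 3 | 35 | 3 | 91.43 | 0 |
| | Dynamic | 673 | 2.19 | 34 | 2 | 94.12 | 0 |
| S3 | Fixed | 721 | 3 | 35 | 3 | 91.43 | 0 |
| | Dynamic | 685 | 2.21 | 36 | 4 | 88.89 | 1 |
| S4* | Fixed | 730 | 3 | 36 | 4 | 88.89 | 1 |
| | Dynamic | 674 | 2.16 | 35 | 3 | 91.43 | 0 |
| S5* | Fixed | 707 | 3 | 32 | 0 | 100 | 0 |
| | Dynamic | 669 | 2.16 | 33 | 1 | 96.97 | 0 |
| S6 | Fixed | 712 | 3 | 34 | 2 | 94.12 | 1 |
| | Dynamic | 677 | 2.17 | 35 | 3 | 91.43 | 0 |
| S7 | Fixed | 726 | 3 | 36 | 4 | 88.89 | 0 |
| | Dynamic | 689 | 2.18 | 35 | 3 | 91.43 | 0 |
| S8* | Fixed | 735 | 3 | 34 | 2 | 94.12 | 0 |
| | Dynamic | 680 | 2.20 | 35 | 3 | 91.43 | 0 |
| S9* | Fixed | 731 | 3 | 35 | 3 | 91.43 | 1 |
| | Dynamic | 702 | 2.18 | 37 | 5 | 86.49 | 2 |
| S10 | Fixed | 722 | 3 | 33 | 1 | 96.67 | 0 |
| | Dynamic | 679 | 2.17 | 34 | 2 | 91.42 | 0 |
| Mean | Fixed | 725.4 | 3 | 34.6 | 2.6 | 92.59 | 0.4 |
| | Dynamic | 681.7 | 2.18 | 34.9 | 2.9 | 91.50 | 0.4 |

Table 5 Results of the control-command selection experiment

| Subject | Window type | Total commands | Erroneous commands | Recognition accuracy (%) |
|---|---|---|---|---|
| S1 | Fixed | 43 | 2 | 95.35 |
| | Dynamic | 41 | 1 | 97.56 |
| S2* | Fixed | 44 | 3 | 93.18 |
| | Dynamic | 42 | 3 | 92.86 |
| S3 | Fixed | 39 | 2 | 94.87 |
| | Dynamic | 38 | 2 | 94.74 |
| S4* | Fixed | 40 | 0 | 100 |
| | Dynamic | 44 | 1 | 97.73 |
| S5* | Fixed | 39 | 0 | 100 |
| | Dynamic | 37 | 1 | 97.30 |
| S6 | Fixed | 42 | 1 | 97.62 |
| | Dynamic | 45 | 2 | 95.56 |
| S7 | Fixed | 36 | 3 | 91.67 |
| | Dynamic | 37 | 2 | 94.60 |
| S8* | Fixed | 41 | 2 | 95.12 |
| | Dynamic | 40 | 0 | 100 |
| S9* | Fixed | 45 | 4 | 91.11 |
| | Dynamic | 43 | 3 | 93.02 |
| S10 | Fixed | 41 | 2 | 95.12 |
| | Dynamic | 38 | 1 | 97.37 |
| Mean | Fixed | 41.0 | 1.9 | 95.40 |
| | Dynamic | 40.5 | 1.6 | 96.07 |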
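Tables 1 to 3 report ITR in bit·min⁻¹, but this section does not restate the formula. The sketch below assumes the standard Wolpaw definition: with N targets, accuracy P, and T seconds per selection, ITR = (60/T)·[log2 N + P·log2 P + (1 − P)·log2((1 − P)/(N − 1))]. With N = 12, the 100% rows reproduce the tabulated 71.70 (Table 1) with T = 3 s and 53.77 (Table 2) with T = 4 s; those T values are inferred from the numbers, not stated in this section. The function name is hypothetical.

```python
import math


def itr_bits_per_min(n_targets: int, accuracy: float, seconds_per_selection: float) -> float:
    """Wolpaw ITR: bits per selection scaled to bits per minute."""
    if n_targets < 2 or not 0.0 <= accuracy <= 1.0 or seconds_per_selection <= 0:
        raise ValueError("invalid ITR arguments")
    n, p = n_targets, accuracy
    bits = math.log2(n)
    if p > 0:  # p*log2(p) tends to 0 as p -> 0
        bits += p * math.log2(p)
    if p < 1:  # (1-p)*log2(...) tends to 0 as p -> 1
        bits += (1 - p) * math.log2((1 - p) / (n - 1))
    return bits * 60.0 / seconds_per_selection


# 12 targets, 99.44% accuracy, assumed 3 s per selection (Table 1, S1)
print(f"{itr_bits_per_min(12, 0.9944, 3.0):.2f}")  # 70.31
```

The special cases for P = 0 and P = 1 avoid evaluating log2(0); both limiting terms are zero, so the formula stays continuous at the ends of the range.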

