Dense Crowd Counting Algorithm Based on New Multi-scale Attention Mechanism


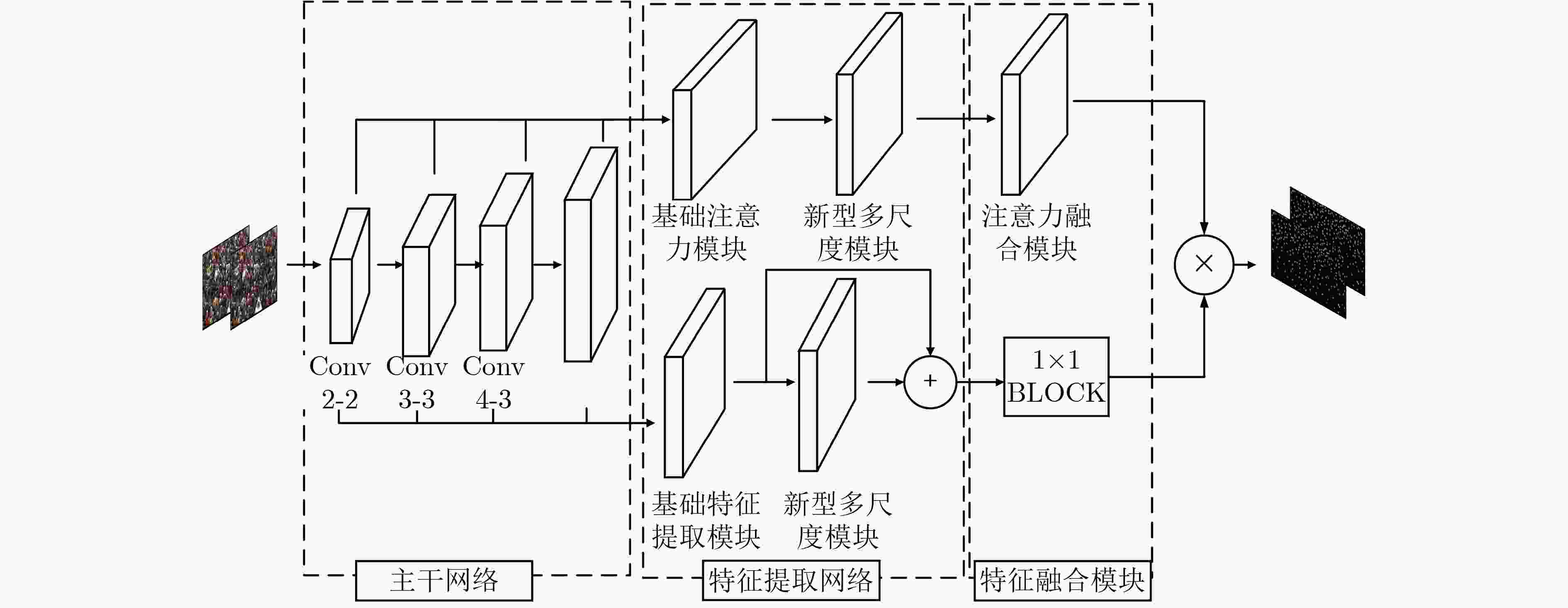

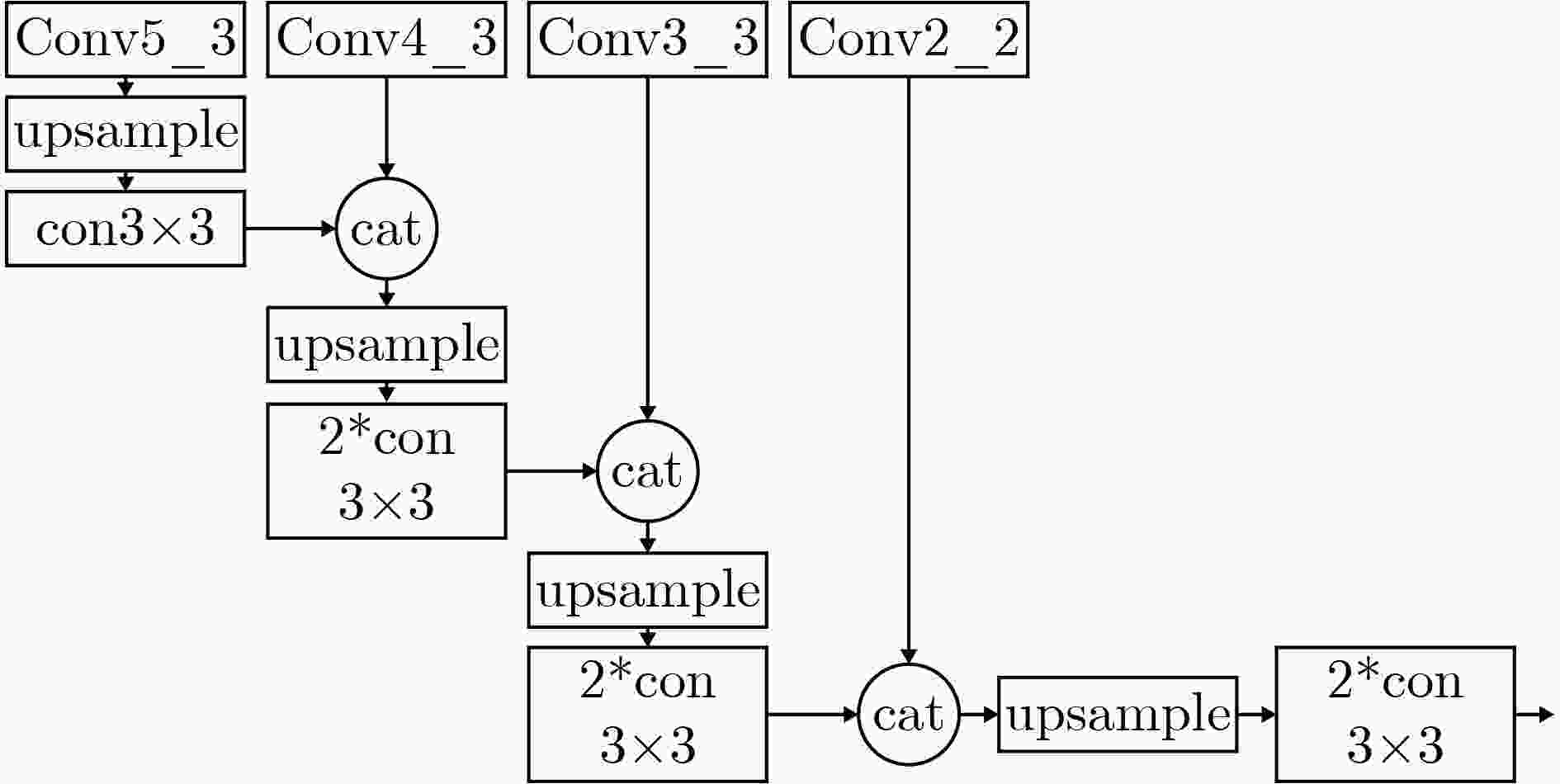

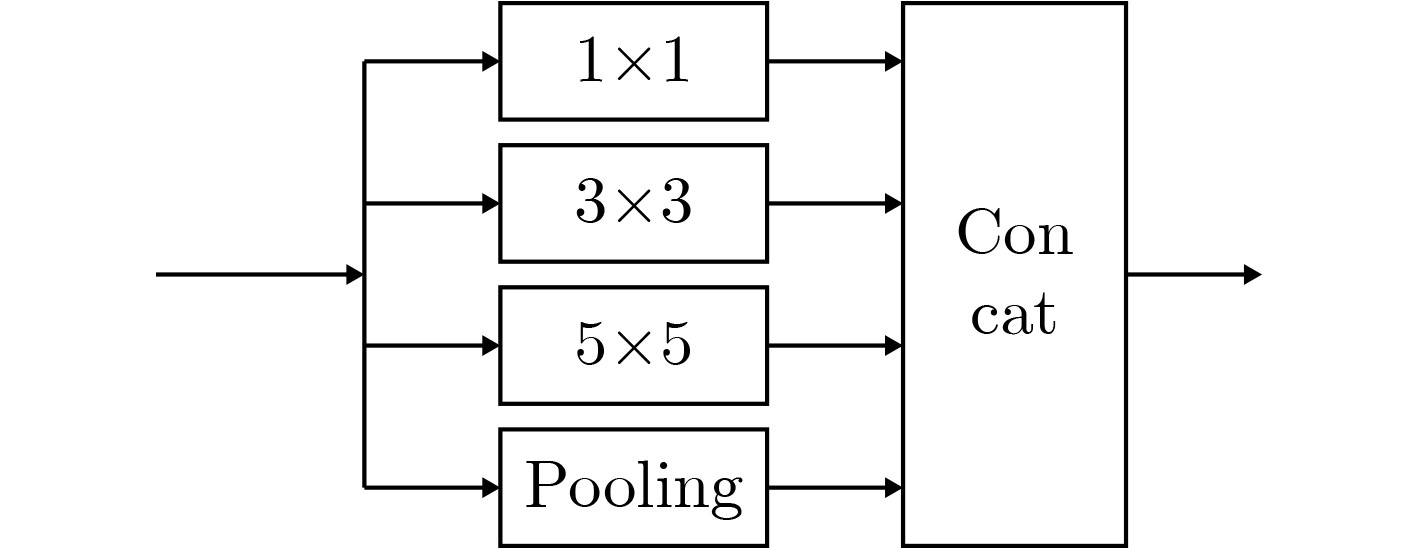

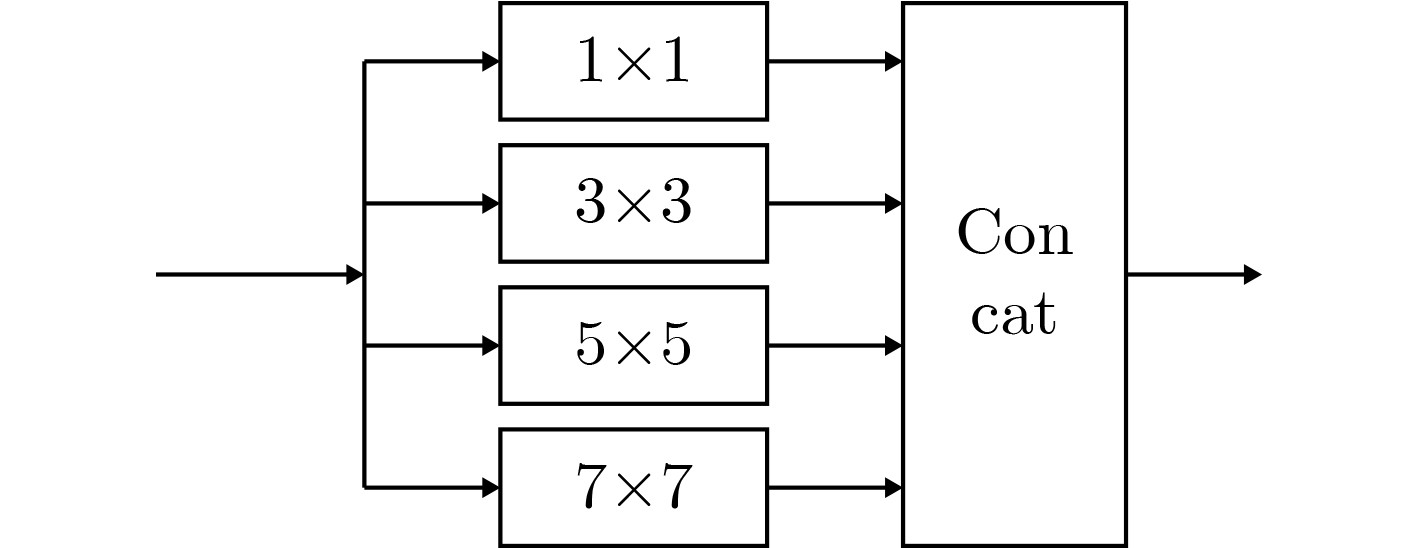

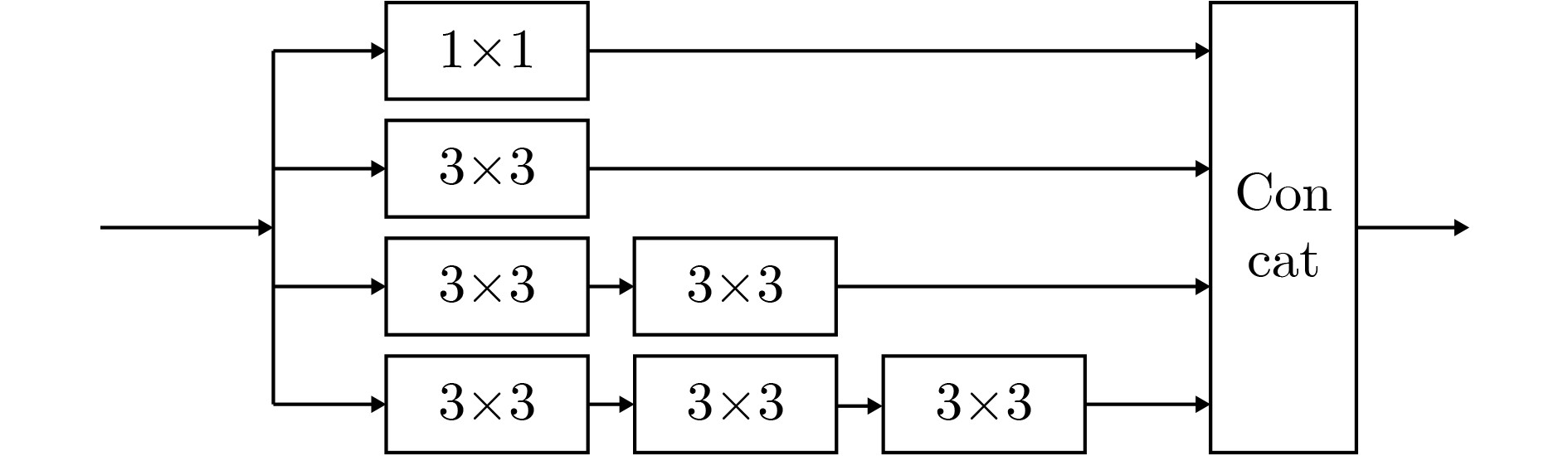

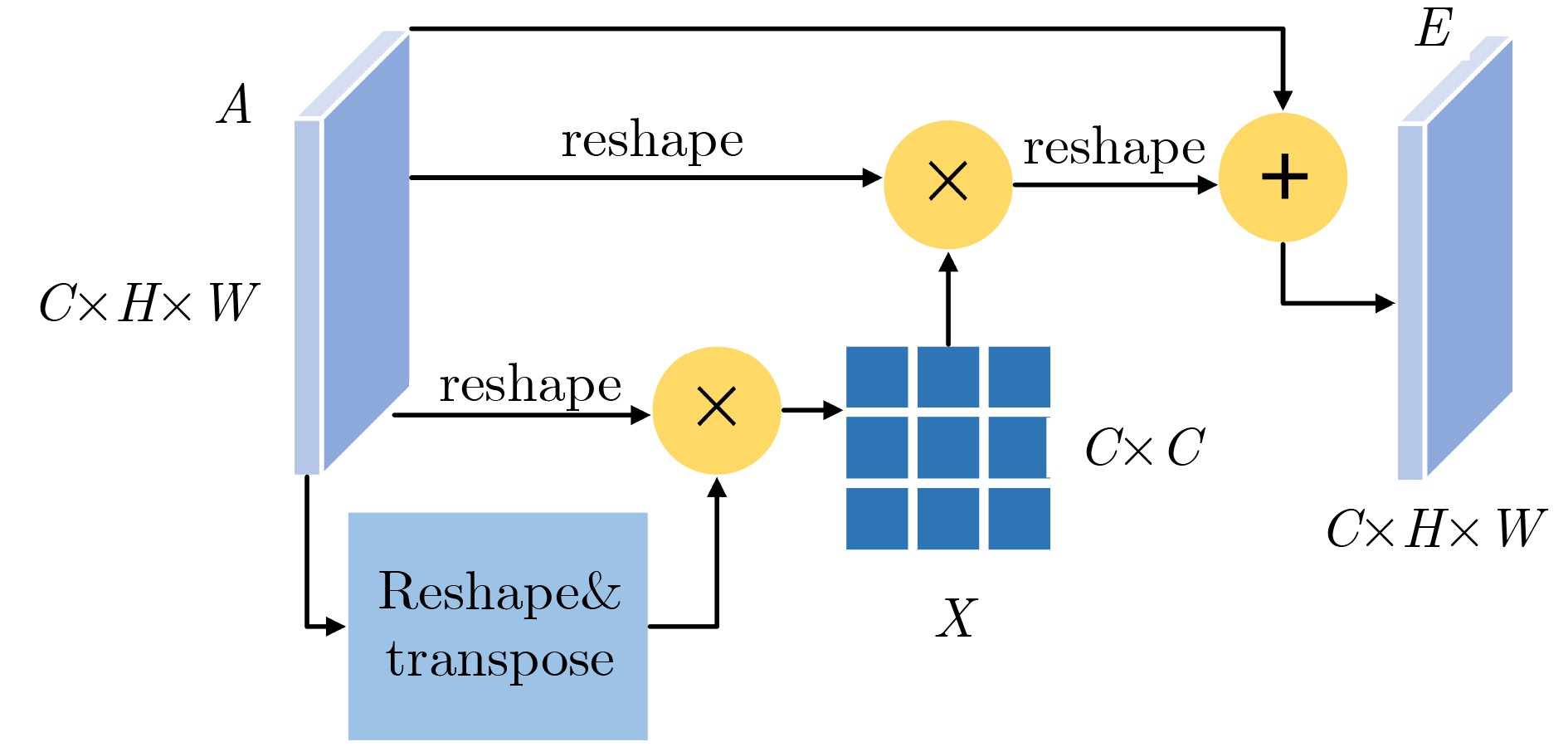

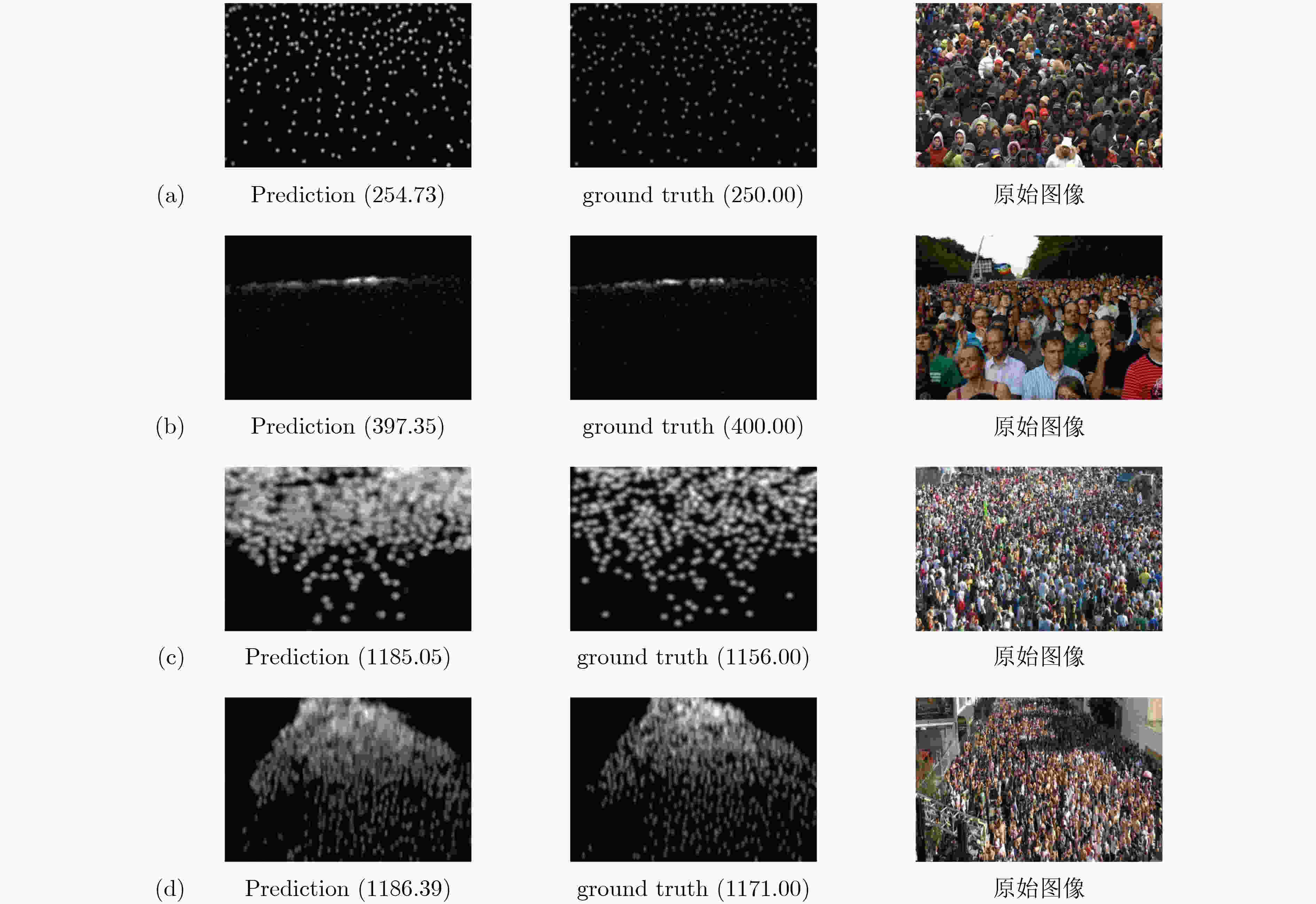

Abstract: Dense crowd counting is a classic problem in computer vision that remains difficult because of non-uniform scale, noise, and occlusion. This paper proposes a dense crowd counting method based on a new multi-scale attention mechanism. The deep network consists of a backbone network, a feature extraction network, and a feature fusion network. The feature extraction network comprises a feature branch and an attention branch and adopts a new multi-scale module built from parallel convolution kernels, which captures crowd features at different scales and thereby adapts to the non-uniform scale of dense crowd distributions. The feature fusion network uses an attention fusion module to enhance the output features of the feature extraction network, fusing attention features with image features and improving counting accuracy. Experiments on the public ShanghaiTech, UCF_CC_50, Mall, and UCSD datasets show that the proposed method outperforms existing methods on both MAE and MSE.
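The two ideas named in the abstract, parallel convolution kernels of different sizes and attention-based fusion, can be illustrated with a toy sketch in plain Python. This is not the paper's network. The kernel sizes (1, 3, 5), the fixed box-filter weights standing in for learned weights, the sigmoid gating, and the summation over branches are all assumptions made for illustration, and every function name is hypothetical.

```python
import math


def conv2d_same(image, kernel):
    """Slide a square odd-sized kernel over a 2-D list, zero-padding the border.

    As in most deep-learning code, the kernel is not flipped (cross-correlation).
    """
    h, w = len(image), len(image[0])
    k = len(kernel)
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in range(k):
                for dx in range(k):
                    yy, xx = y + dy - r, x + dx - r
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += kernel[dy][dx] * image[yy][xx]
            out[y][x] = acc
    return out


def box_kernel(size):
    """Assumed stand-in for learned weights: a normalized box filter."""
    return [[1.0 / (size * size)] * size for _ in range(size)]


def multi_scale_module(image, sizes=(1, 3, 5)):
    """Run parallel branches with different receptive fields; one map per branch."""
    return [conv2d_same(image, box_kernel(s)) for s in sizes]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def attention_fusion(feature_maps, attention_maps):
    """Assumed fusion rule: gate each feature map by sigmoid(attention), then sum."""
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    fused = [[0.0] * w for _ in range(h)]
    for feat, att in zip(feature_maps, attention_maps):
        for y in range(h):
            for x in range(w):
                fused[y][x] += feat[y][x] * sigmoid(att[y][x])
    return fused


# Toy 4x4 input with two "heads" of different intensity.
image = [[0, 0, 0, 0], [0, 4, 0, 0], [0, 0, 0, 0], [0, 0, 0, 8]]
features = multi_scale_module(image)   # feature branch
attention = multi_scale_module(image)  # attention branch (same toy module)
fused = attention_fusion(features, attention)
```

Each branch sees the same input through a different receptive field, so a small kernel keeps sharp responses for small heads while a larger kernel spreads the response over the area a larger head would cover. The gate in `attention_fusion` lies strictly between 0 and 1, so it can only reweight the features, never amplify them.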


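Table 1 Experimental results on the ShanghaiTech dataset

| Method | Part A MAE | Part A MSE | Part B MAE | Part B MSE |
| --- | --- | --- | --- | --- |
| MCNN[4] | 110.2 | 173.2 | 26.4 | 41.3 |
| EDMNet[14] | 76.5 | 100.2 | 15.4 | 26.3 |
| MSFNet[15] | 63.4 | 97.2 | 9.6 | 14.3 |
| Switching-CNN[9] | 90.4 | 135.0 | 21.6 | 33.4 |
| CSRNet[8] | 68.2 | 115.0 | 10.6 | 16.0 |
| SCAR[21] | 66.3 | 114.1 | 9.5 | 15.2 |
| MRA-CNN[22] | 74.2 | 112.5 | 11.9 | 21.3 |
| ACSPNet[23] | 85.2 | 137.1 | 15.4 | 23.1 |
| ACM-CNN[16] | 72.2 | 103.5 | 17.5 | 22.7 |
| SFANet[24] | 59.8 | 99.3 | 26.0 | 30.5 |
| FPNet[33] | 108.6 | 126.3 | 26.0 | 30.5 |
| Proposed method | 57.1 | 91.9 | 6.87 | 9.8 |

Table 2 Experimental results on UCF_CC_50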

Table 3 Experimental results on Mall

Table 4 Experimental results on UCSD

Table 5 Ablation experiment results

| Method | MAE | MSE |
| --- | --- | --- |
| Backbone + D + M | 58.6 | 96.6 |
| Backbone + D + M + C | 57.8 | 92.7 |
| Backbone + ND + NM + C | 57.1 | 91.9 |
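The MAE and MSE columns in the tables are per-image count errors averaged over a test set. The text here does not define them, so the sketch below assumes the convention common in the crowd-counting literature, where "MSE" denotes the square root of the mean squared count error. The function names and sample counts are hypothetical.

```python
import math


def mae(predicted, actual):
    """Mean absolute error between predicted and ground-truth counts."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def mse(predicted, actual):
    """Assumed convention: root of the mean squared count error."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((p - a) ** 2 for p, a in zip(predicted, actual))
    return math.sqrt(squared / len(actual))


# Hypothetical per-image counts for three test images.
predicted_counts = [98.0, 205.0, 51.0]
true_counts = [100.0, 200.0, 50.0]

mae_value = mae(predicted_counts, true_counts)
mse_value = mse(predicted_counts, true_counts)
```

Under this convention MAE reflects average accuracy, while MSE penalizes large per-image errors more heavily and so is often read as a robustness indicator.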

[1] ARTETA C, LEMPITSKY V, and ZISSERMAN A. Counting in the wild[C]. Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 483–498.

[2] ARTETA C, LEMPITSKY V, NOBLE J A, et al. Interactive object counting[C]. Proceedings of the 13th European Conference on Computer Vision, Zurich, Switzerland, 2014: 504–518.

[3] SHANG Chong, AI Haizhou, and BAI Bo. End-to-end crowd counting via joint learning local and global count[C]. Proceedings of 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, USA, 2016: 1215–1219.

[4] ZHANG Yingying, ZHOU Desen, CHEN Siqin, et al. Single-image crowd counting via multi-column convolutional neural network[C]. Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 589–597.

[5] OÑORO-RUBIO D and LÓPEZ-SASTRE R J. Towards perspective-free object counting with deep learning[C]. Proceedings of the 14th European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 2016: 615–629.

[6] MARSDEN M, MCGUINNESS K, LITTLE S, et al. ResnetCrowd: A residual deep learning architecture for crowd counting, violent behaviour detection and crowd density level classification[C]. Proceedings of the 14th IEEE International Conference on Advanced Video and Signal Based Surveillance, Lecce, Italy, 2017: 123–126.

[7] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]. Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770–778.

[8] LI Yuhong, ZHANG Xiaofan, and CHEN Deming. CSRNet: Dilated convolutional neural networks for understanding the highly congested scenes[C]. Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 1091–1100.

[9] SAM D B, SURYA S, and BABU R V. Switching convolutional neural network for crowd counting[C]. Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 4031–4039.

[10] WANG Qi, GAO Junyu, LIN Wei, et al. Learning from synthetic data for crowd counting in the wild[C]. Proceedings of 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 8190–8199.

[11] LI Zhengqi, DEKEL T, COLE F, et al. Learning the depths of moving people by watching frozen people[C]. Proceedings of 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 4516–4525.

[12] WANG Shunzhou, LU Yao, ZHOU Tianfei, et al. SCLNet: Spatial context learning network for congested crowd counting[J]. Neurocomputing, 2020, 404: 227–239. doi: 10.1016/j.neucom.2020.04.139

[13] CHEN Xinya, BIN Yanrui, GAO Changxin, et al. Relevant region prediction for crowd counting[J]. Neurocomputing, 2020, 407: 399–408. doi: 10.1016/j.neucom.2020.04.117

[14] MENG Yuebo, JI Tuo, LIU Guanghui, et al. Encoding-decoding multi-scale convolutional neural network for crowd counting[J]. Journal of Xi'an Jiaotong University, 2020, 54(5): 149–157. (in Chinese)

[15] ZUO Jing and BA Yulin. Population-depth counting algorithm based on multiscale fusion[J]. Laser & Optoelectronics Progress, 2020, 57(24): 307–315. (in Chinese)

[16] ZOU Zhikang, CHENG Yu, QU Xiaoye, et al. Attend to count: Crowd counting with adaptive capacity multi-scale CNNs[J]. Neurocomputing, 2019, 367: 75–83. doi: 10.1016/j.neucom.2019.08.009

[17] SZEGEDY C, LIU Wei, JIA Yangqing, et al. Going deeper with convolutions[C]. Proceedings of 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, USA, 2015: 1–9.

[18] IDREES H, SALEEMI I, SEIBERT C, et al. Multi-source multi-scale counting in extremely dense crowd images[C]. Proceedings of 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, USA, 2013: 2547–2554.

[19] CHEN Ke, LOY C C, GONG Shaogang, et al. Feature mining for localised crowd counting[C]. Proceedings of the British Machine Vision Conference, Surrey, UK, 2012: 3–5.

[20] CHAN A B, LIANG Z S J, and VASCONCELOS N. Privacy preserving crowd monitoring: Counting people without people models or tracking[C]. Proceedings of 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, USA, 2008: 1–7.

[21] GAO Junyu, WANG Qi, and YUAN Yuan. SCAR: Spatial-/channel-wise attention regression networks for crowd counting[J]. Neurocomputing, 2019, 363: 1–8. doi: 10.1016/j.neucom.2019.08.018

[22] ZHANG Youmei, ZHOU Chunluan, CHANG Faliang, et al. Multi-resolution attention convolutional neural network for crowd counting[J]. Neurocomputing, 2018, 329: 144–152.

[23] MA Junjie, DAI Yaping, and TAN Y P. Atrous convolutions spatial pyramid network for crowd counting and density estimation[J]. Neurocomputing, 2019, 350: 91–101. doi: 10.1016/j.neucom.2019.03.065

[24] ZHU Liang, ZHAO Zhijian, LU Chao, et al. Dual path multi-scale fusion networks with attention for crowd counting[J]. arXiv: 1902.01115, 2019.

[25] RANJAN V, LE H, and HOAI M. Iterative crowd counting[C]. Proceedings of the 15th European Conference on Computer Vision, Munich, Germany, 2018: 278–293.

[26] YANG Biao, ZHAN Weiqin, WANG Nan, et al. Counting crowds using a scale-distribution-aware network and adaptive human-shaped kernel[J]. Neurocomputing, 2020, 390: 207–216. doi: 10.1016/j.neucom.2019.02.071

[27] DAI Jifeng, LI Yi, HE Kaiming, et al. R-FCN: Object detection via region-based fully convolutional networks[C]. Proceedings of the 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 2016: 379–387.

[28] REN Shaoqing, HE Kaiming, GIRSHICK R, et al. Faster R-CNN: Towards real-time object detection with region proposal networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(6): 1137–1149. doi: 10.1109/TPAMI.2016.2577031

[29] XIONG Feng, SHI Xingjian, and YEUNG D Y. Spatiotemporal modeling for crowd counting in videos[C]. Proceedings of 2017 IEEE International Conference on Computer Vision, Venice, Italy, 2017: 5161–5169.

[30] XU Mingliang, GE Zhaoyang, JIANG Xiaoheng, et al. Depth information guided crowd counting for complex crowd scenes[J]. Pattern Recognition Letters, 2019, 125: 563–569. doi: 10.1016/j.patrec.2019.02.026

[31] SHEN Zan, XU Yi, NI Bingbing, et al. Crowd counting via adversarial cross-scale consistency pursuit[C]. Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 5245–5254.

[32] CAO Xinkun, WANG Zhipeng, ZHAO Yanyun, et al. Scale aggregation network for accurate and efficient crowd counting[C]. Proceedings of the 15th European Conference on Computer Vision, Munich, Germany, 2018: 757–773.

[33] DENG Yuanzhi and HU Gang. Crowd density evaluation method based on feature pyramid[J]. Measurement & Control Technology, 2020, 39(6): 108–114. (in Chinese)
