Person Re-Identification Based on Diversified Local Attention Network


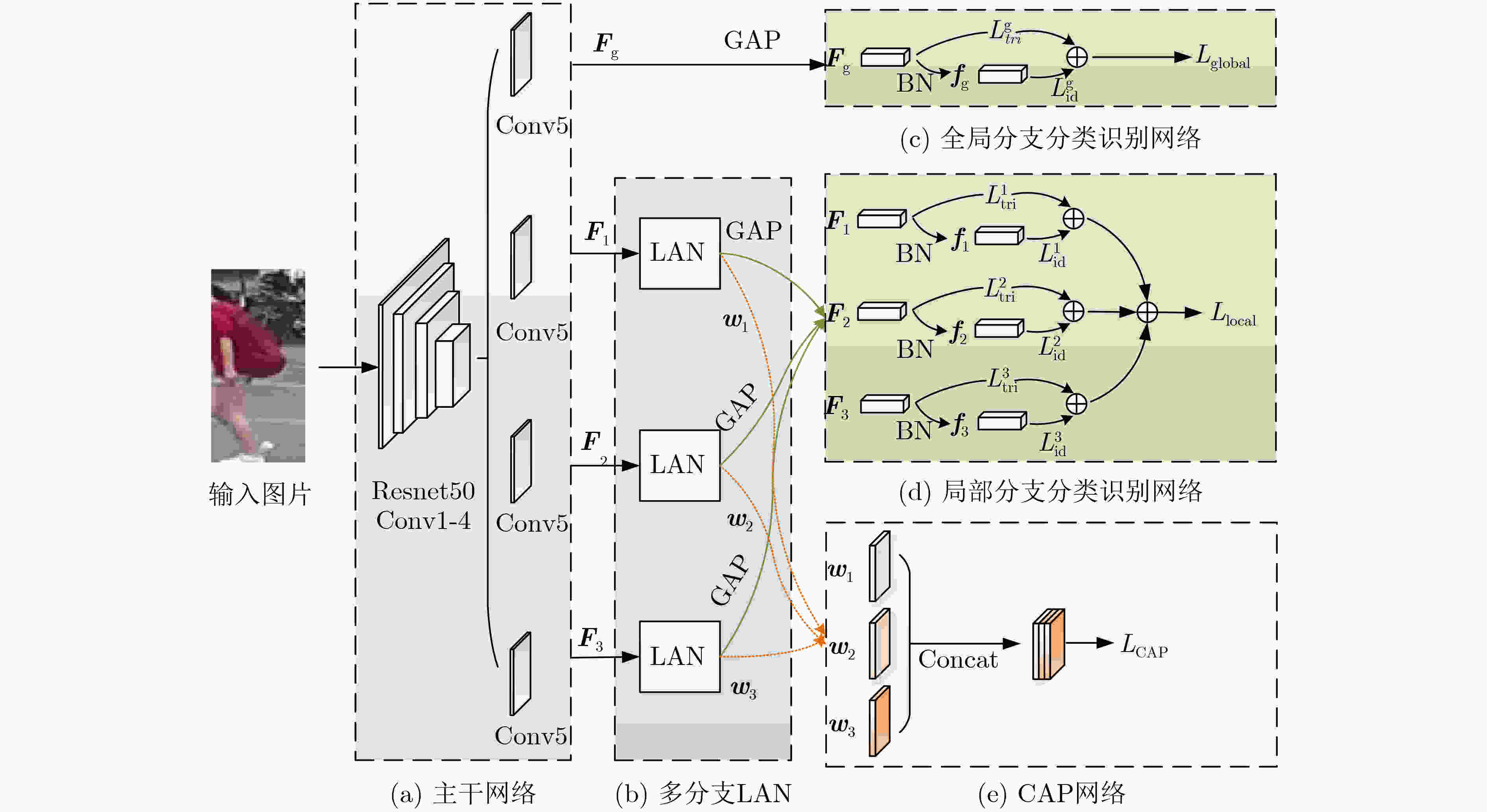

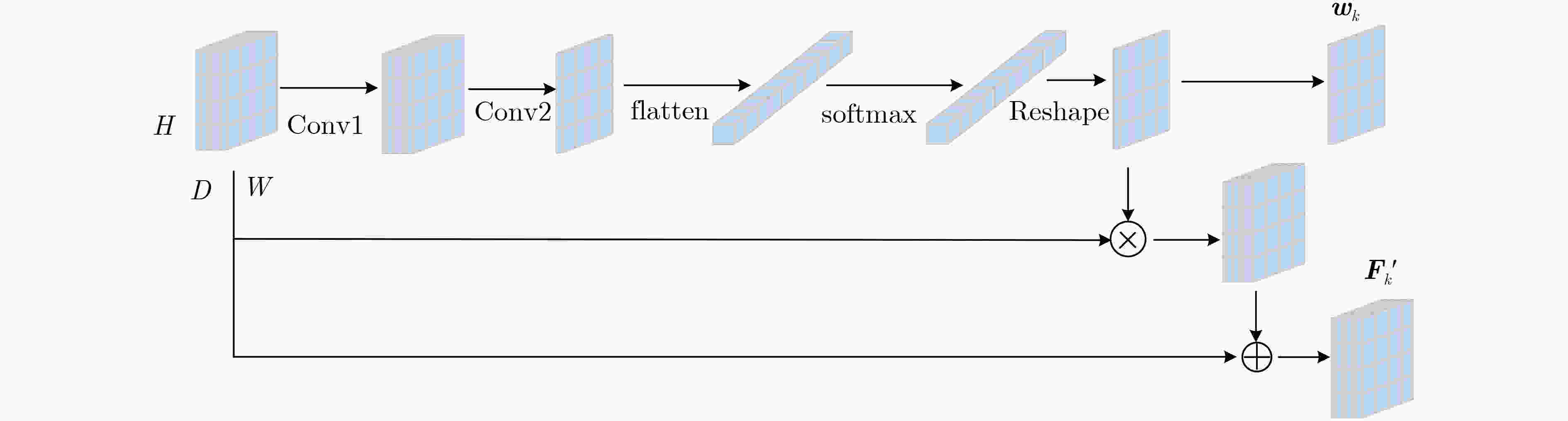

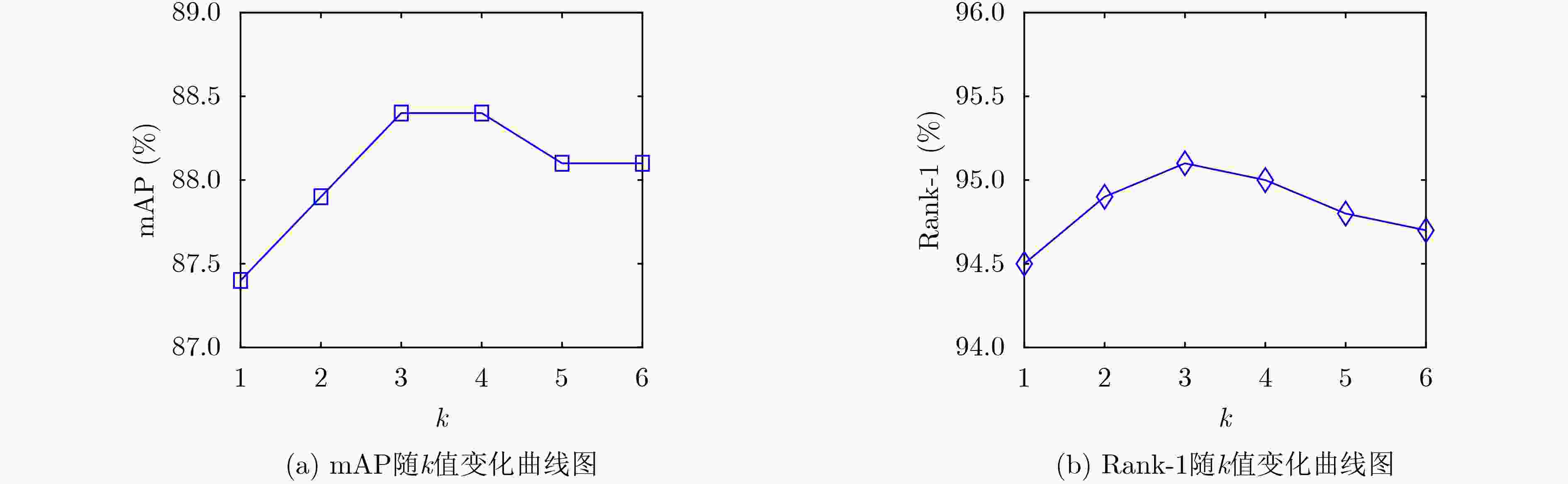

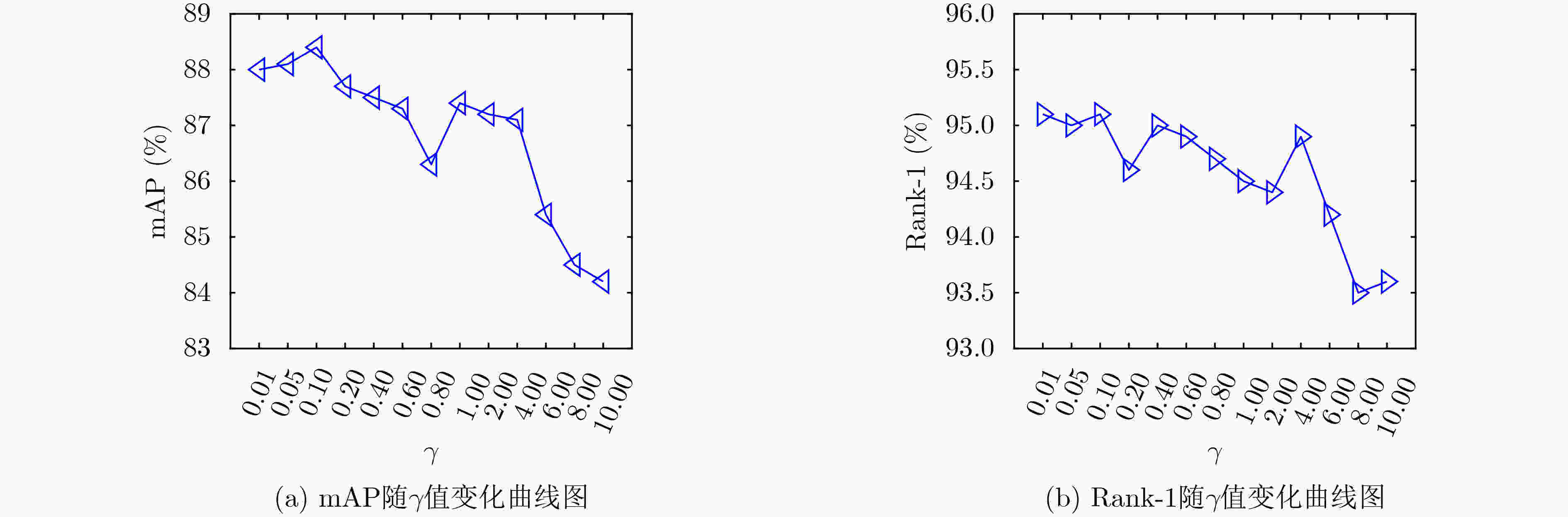

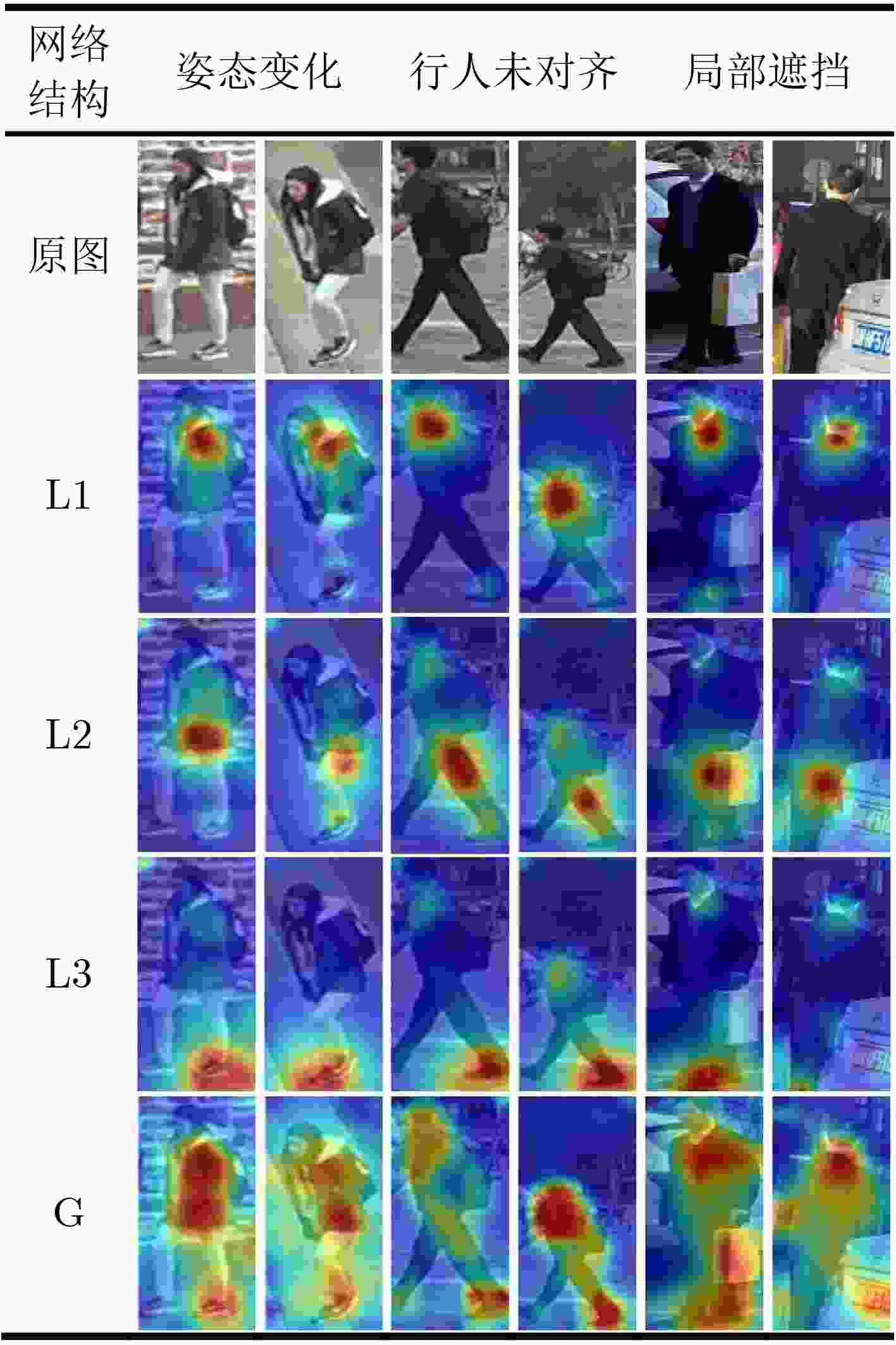

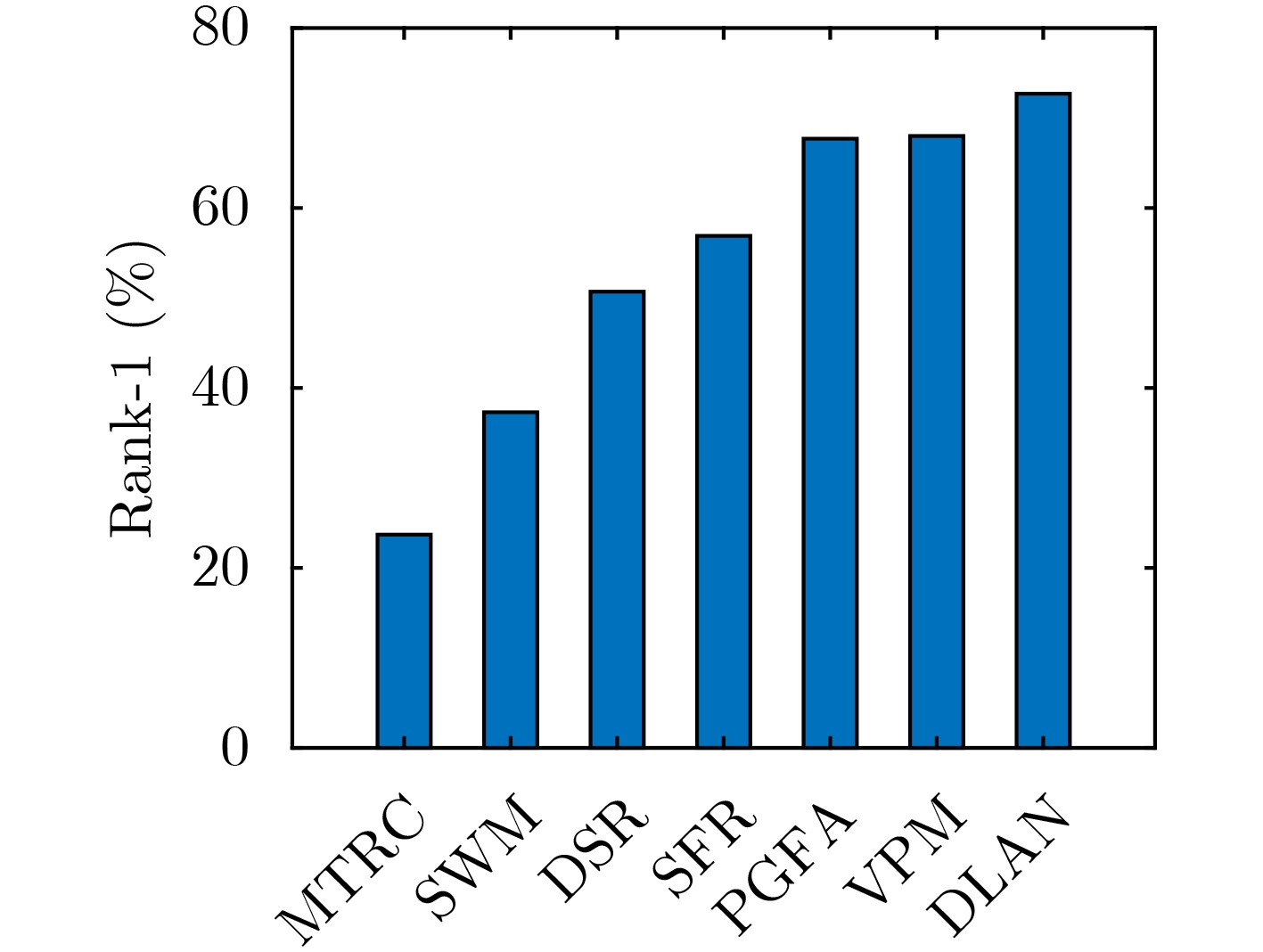

Abstract: To address occlusion in pedestrian images and the misalignment caused by pose and viewpoint changes in real-world scenes, this paper proposes a person Re-IDentification (Re-ID) method based on a Diversified Local Attention Network (DLAN). First, a global network and a multi-branch local attention network are designed after the backbone network: the former learns the global spatial structure of the human body, while the latter adaptively captures salient local features of different body parts. Then, a Consistent Activation Penalty (CAP) is constructed to constrain the outputs of the local branches so that they learn complementary features of different body regions, yielding a diversified feature representation of the pedestrian. Finally, the global and local features are fed into the classification network, where joint learning forms a more comprehensive pedestrian description. On the Market1501, DukeMTMC-reID and CUHK03 datasets, the DLAN model reaches mAP values of 88.4%, 79.5% and 74.3% and Rank-1 values of 95.1%, 88.7% and 76.3%, respectively, outperforming most existing methods. The experimental results verify the robustness and discriminative ability of the proposed method.
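To make the role of the CAP term more concrete, the sketch below penalizes spatial overlap between the attention maps of the local branches, so that branches attending to the same region are discouraged. The overlap measure used here (mean pairwise inner product of spatially normalized maps), the function names, and the `cap_weight` parameter are assumptions chosen for illustration; the abstract does not state the exact form of CAP. Only the combined objective $L_{\rm{id}} + L_{\rm{tri}} + L_{\rm{CAP}}$ comes from Table 1.

```python
import math
from itertools import combinations


def softmax(scores):
    """Normalize raw attention scores over spatial locations so they sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def overlap_penalty(attention_maps):
    """Hypothetical stand-in for a CAP-style term (not the paper's formula).

    Each map is a flat list of non-negative weights over the same spatial grid.
    The penalty is the mean inner product over all pairs of branches: it is 0
    when the branches attend to disjoint locations and grows as they overlap.
    """
    pairs = list(combinations(attention_maps, 2))
    if not pairs:
        return 0.0
    overlaps = [sum(a * b for a, b in zip(p, q)) for p, q in pairs]
    return sum(overlaps) / len(pairs)


def total_loss(l_id, l_tri, l_cap, cap_weight=1.0):
    """Combined objective L_id + L_tri + L_CAP; cap_weight is an assumed knob."""
    return l_id + l_tri + cap_weight * l_cap


if __name__ == "__main__":
    # Three branches that all produce the same uniform map: maximal redundancy.
    redundant = [softmax([0.0, 0.0, 0.0, 0.0]) for _ in range(3)]
    # Three branches that each focus on a different location: no overlap.
    diverse = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]
    print("redundant penalty:", overlap_penalty(redundant))
    print("diverse penalty:", overlap_penalty(diverse))
```

In this toy setting the redundant branches receive a strictly larger penalty than the diverse ones, which is the behavior a diversity-encouraging term is meant to have.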


Table 1 Network variants of the DLAN model

| Variant | Global branch | Local branch | Loss function |
| --- | --- | --- | --- |
| Baseline | GAP, BN | – | $L_{\rm{id}} + L_{\rm{tri}}$ |
| 3L | – | GAP, BN | $L_{\rm{id}} + L_{\rm{tri}}$ |
| 3L+LAN | – | LAN, GAP, BN | $L_{\rm{id}} + L_{\rm{tri}}$ |
| 3L+LAN+CAP | – | LAN, GAP, BN, CAP | $L_{\rm{id}} + L_{\rm{tri}} + L_{\rm{CAP}}$ |
| G+3L+LAN | GAP, BN | LAN, GAP, BN | $L_{\rm{id}} + L_{\rm{tri}}$ |
| G+3L+LAN+CAP (DLAN) | GAP, BN | LAN, GAP, BN, CAP | $L_{\rm{id}} + L_{\rm{tri}} + L_{\rm{CAP}}$ |

Table 2 Ablation results (%)

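| Variant | mAP | Rank-1 | Rank-5 | Rank-10 | Params |
| --- | --- | --- | --- | --- | --- |
| Baseline | 84.3 | 93.5 | 97.5 | 98.9 | 25.1M |
| 3L | 85.9 | 94.0 | 98.3 | 99.0 | 38.2M |
| 3L+LAN | 86.3 | 94.3 | 98.4 | 99.0 | 43.5M |
| 3L+LAN+CAP | 86.9 | 94.7 | 98.4 | 99.0 | 43.5M |
| G+3L+LAN | 87.8 | 94.7 | 98.5 | 99.0 | 49.6M |
| G+3L+LAN+CAP (DLAN) | 88.4 | 95.1 | 98.6 | 99.1 | 49.6M |

Table 3 Re-ID results of DLAN and the compared algorithms under different occlusion levels (%)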

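| Method | Market1501 s = 0 Rank-1 | Market1501 s = 0 mAP | Market1501 s = 0.3 Rank-1 | Market1501 s = 0.3 mAP | Market1501 s = 0.6 Rank-1 | Market1501 s = 0.6 mAP | DukeMTMC-reID s = 0 Rank-1 | DukeMTMC-reID s = 0 mAP | DukeMTMC-reID s = 0.3 Rank-1 | DukeMTMC-reID s = 0.3 mAP | DukeMTMC-reID s = 0.6 Rank-1 | DukeMTMC-reID s = 0.6 mAP |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| XQDA | 43.0 | 21.7 | 28.3 | 28.3 | 28.3 | 12.0 | 31.2 | 17.2 | 20.5 | 10.6 | 17.4 | 9.4 |
| NPD | 55.4 | 30.0 | 39.6 | 19.1 | 32.5 | 16.1 | 46.7 | 27.3 | 33.7 | 17.7 | 29.7 | 15.7 |
| IDE | 81.9 | 61.0 | 62.4 | 48.2 | 45.6 | 36.4 | 66.3 | 45.2 | 57.9 | 41.6 | 39.0 | 28.4 |
| TriNet | 83.2 | 64.9 | 68.6 | 54.7 | 47.9 | 38.9 | 71.4 | 51.6 | 56.0 | 40.8 | 43.1 | 28.4 |
| P2S | 69.9 | 50.1 | 36.2 | 27.0 | 35.8 | 25.9 | 58.7 | 40.5 | 45.2 | 31.5 | 33.5 | 22.9 |
| PAN | 81.0 | 63.4 | 52.0 | 36.5 | 43.2 | 30.0 | 71.6 | 51.5 | 44.7 | 29.0 | 39.9 | 25.9 |
| SVDNet | 81.4 | 61.2 | 62.3 | 46.9 | 52.0 | 40.3 | 75.9 | 56.3 | 59.1 | 43.5 | 50.6 | 37.9 |
| RandEra | 85.8 | 68.4 | 73.8 | 58.7 | 51.4 | 38.9 | 73.3 | 57 | 62.9 | 47.4 | 47.9 | 35.1 |
| RNLSTMA | 90.6 | 76.9 | 77.0 | 64.0 | 53.1 | 45.1 | 77.4 | 62.5 | 70.2 | 58.6 | 52.3 | 41.5 |
| mGD+RNLSTMA | 91.3 | 77.9 | 85.1 | 71.2 | 65.8 | 53.2 | 80.8 | 63.9 | 74.1 | 58.8 | 63.0 | 47.7 |
| DLAN | 95.1 | 88.4 | 89.2 | 79.3 | 71.5 | 59.0 | 88.7 | 79.5 | 83.6 | 72.4 | 68.9 | 56.6 |

Table 4 Performance comparison between DLAN and existing Re-ID methods (%)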

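| Method | Market1501 mAP | Market1501 Rank-1 | DukeMTMC-reID mAP | DukeMTMC-reID Rank-1 | CUHK03-NP-Labeled mAP | CUHK03-NP-Labeled Rank-1 | CUHK03-NP-Detected mAP | CUHK03-NP-Detected Rank-1 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| SVDNet[29] | 62.1 | 82.3 | 56.8 | 76.7 | – | – | 37.3 | 41.5 |
| SGGNN[30] | 82.8 | 92.3 | 68.2 | 81.1 | – | – | – | – |
| PCB[8] | 81.6 | 93.8 | 69.2 | 83.3 | – | – | 57.5 | 63.7 |
| BDB[32] | 84.3 | 94.2 | 72.1 | 86.8 | 71.7 | 73.6 | 69.3 | 72.8 |
| CAM[14] | 84.5 | 94.7 | 73.7 | 87.7 | – | – | – | – |
| MHN[31] | 85.0 | 95.1 | 77.2 | 89.1 | 72.4 | 77.2 | 65.4 | 71.7 |
| CCAN[22] | 87.0 | 94.6 | 76.8 | 87.2 | 72.9 | 75.2 | 70.7 | 73.0 |
| DLAN | 88.4 | 95.1 | 79.5 | 88.7 | 74.3 | 76.3 | 71.8 | 73.4 |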
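The tables above report mAP and Rank-k scores. As a reminder of how these retrieval metrics are commonly computed, here is a minimal sketch that takes, for each query, the gallery ranking expressed as a list of booleans (`True` where the gallery image at that rank shares the query identity). The function names and input format are assumptions made for illustration, and the sketch omits the filtering of same-camera gallery images that standard Re-ID evaluation protocols usually apply, so it is not a substitute for the official evaluation code of any dataset.

```python
def average_precision(ranked_matches):
    """Average precision for one query.

    ranked_matches[i] is True if the gallery item at rank i + 1 has the same
    identity as the query. Returns 0.0 if the query has no match at all.
    """
    hits = 0
    precision_sum = 0.0
    for rank, is_match in enumerate(ranked_matches, start=1):
        if is_match:
            hits += 1
            precision_sum += hits / rank  # precision at this recall point
    return precision_sum / hits if hits else 0.0


def evaluate(all_ranked_matches, k=1):
    """Return (Rank-k accuracy, mAP) over a list of per-query rankings."""
    n_queries = len(all_ranked_matches)
    if n_queries == 0:
        raise ValueError("at least one query is required")
    # Rank-k: fraction of queries with a correct match among the top k results.
    rank_k = sum(any(m[:k]) for m in all_ranked_matches) / n_queries
    mean_ap = sum(average_precision(m) for m in all_ranked_matches) / n_queries
    return rank_k, mean_ap


if __name__ == "__main__":
    # Two toy queries, each ranked against a three-image gallery.
    rankings = [
        [True, False, True],   # correct match at ranks 1 and 3
        [False, True, False],  # correct match at rank 2 only
    ]
    rank1, mean_ap = evaluate(rankings, k=1)
    print(f"Rank-1 = {rank1:.3f}, mAP = {mean_ap:.3f}")
```

Rank-1 only checks the top result, whereas mAP rewards placing every correct match early, which is why a method can tie on Rank-1 and still differ on mAP.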

[1] YE Mang, SHEN Jianbing, LIN Gaojie, et al. Deep learning for person re-identification: A survey and outlook[J]. arXiv preprint arXiv: 2001.04193, 2020.

[2] LUO Hao, JIANG Wei, FAN Xing, et al. A survey on deep learning based person re-identification[J]. Acta Automatica Sinica, 2019, 45(11): 2032–2049. doi: 10.16383/j.aas.c180154

[3] LUO Hao, JIANG Wei, GU Youzhi, et al. A strong baseline and batch normalization neck for deep person re-identification[J]. IEEE Transactions on Multimedia, 2020, 22(10): 2597–2609. doi: 10.1109/TMM.2019.2958756

[4] ZHOU Zhiheng, LIU Kaiyi, HUANG Junchu, et al. Improved metric learning algorithm for person re-identification based on equidistance[J]. Journal of Electronics & Information Technology, 2019, 41(2): 477–483. doi: 10.11999/JEIT180336

[5] ZHAO Haiyu, TIAN Maoqing, SUN Shuyang, et al. Spindle net: Person re-identification with human body region guided feature decomposition and fusion[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, USA, 2017: 907–915. doi: 10.1109/CVPR.2017.103

[6] ZHENG Liang, HUANG Yujia, LU Huchuan, et al. Pose-invariant embedding for deep person re-identification[J]. IEEE Transactions on Image Processing, 2019, 28(9): 4500–4509. doi: 10.1109/TIP.2019.2910414

[7] LI Jianing, ZHANG Shiliang, TIAN Qi, et al. Pose-guided representation learning for person re-identification[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(2): 622–635. doi: 10.1109/TPAMI.2019.2929036

[8] SUN Yifan, ZHENG Liang, YANG Yi, et al. Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline)[C]. The 15th European Conference on Computer Vision, Munich, Germany, 2018: 501–518. doi: 10.1007/978-3-030-01225-0_30

[9] LUO Hao, JIANG Wei, ZHANG Xuan, et al. AlignedReID++: Dynamically matching local information for person re-identification[J]. Pattern Recognition, 2019, 94: 53–61. doi: 10.1016/j.patcog.2019.05.028

[10] WANG Guanshuo, YUAN Yufeng, CHEN Xiong, et al. Learning discriminative features with multiple granularities for person re-identification[C]. The 26th ACM International Conference on Multimedia, Seoul, South Korea, 2018: 274–282. doi: 10.1145/3240508.3240552

[11] SONG Chunfeng, HUANG Yan, OUYANG Wanli, et al. Mask-guided contrastive attention model for person re-identification[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 1179–1188. doi: 10.1109/CVPR.2018.00129

[12] ZHANG Zhizheng, LAN Cuiling, ZENG Wenjun, et al. Relation-aware global attention for person re-identification[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, USA, 2020: 3183–3192. doi: 10.1109/CVPR42600.2020.00325

[13] WANG Fenhua, ZHAO Bo, HUANG Chao, et al. Person re-identification based on multi-scale network attention fusion[J]. Journal of Electronics & Information Technology, 2020, 42(12): 3045–3052. doi: 10.11999/JEIT190998

[14] YANG Wenjie, HUANG Houjing, ZHANG Zhang, et al. Towards rich feature discovery with class activation maps augmentation for person re-identification[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, USA, 2019: 1389–1398. doi: 10.1109/CVPR.2019.00148

[15] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, USA, 2016: 770–778. doi: 10.1109/CVPR.2016.90

[16] MÜLLER R, KORNBLITH S, and HINTON G. When does label smoothing help?[C]. The 33rd Conference on Neural Information Processing Systems (NeurIPS 2019), Vancouver, Canada, 2019: 4694–4703.

[17] LI Shuang, BAK S, CARR P, et al. Diversity regularized spatiotemporal attention for video-based person re-identification[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 369–378. doi: 10.1109/CVPR.2018.00046

[18] ZHENG Liang, SHEN Liyue, TIAN Lu, et al. Scalable person re-identification: A benchmark[C]. 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 2015: 1116–1124. doi: 10.1109/ICCV.2015.133

[19] RISTANI E, SOLERA F, ZOU R, et al. Performance measures and a data set for multi-target, multi-camera tracking[C]. European Conference on Computer Vision, Amsterdam, Netherlands, 2016: 17–35. doi: 10.1007/978-3-319-48881-3_2

[20] LI Wei, ZHAO Rui, XIAO Tong, et al. Deepreid: Deep filter pairing neural network for person re-identification[C]. 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, USA, 2014: 152–159. doi: 10.1109/CVPR.2014.27

[21] ZHENG Weishi, LI Xiang, XIANG Tao, et al. Partial person re-identification[C]. 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 2015: 4678–4686. doi: 10.1109/ICCV.2015.531

[22] ZHOU Jieming, ROY S K, FANG Pengfei, et al. Cross-correlated attention networks for person re-identification[J]. Image and Vision Computing, 2020, 100: 103931. doi: 10.1016/j.imavis.2020.103931

[23] YANG Wanxiang, YAN Yan, CHEN Si, et al. Multi-scale generative adversarial network for person re-identification under occlusion[J]. Journal of Software, 2020, 31(7): 1943–1958. doi: 10.13328/j.cnki.jos.005932

[24] LIAO Shengcai, JAIN A K, and LI S Z. Partial face recognition: Alignment-free approach[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2013, 35(5): 1193–1205. doi: 10.1109/TPAMI.2012.191

[25] HE Lingxiao, LIANG Jian, LI Haiqing, et al. Deep spatial feature reconstruction for partial person re-identification: Alignment-free approach[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 7073–7082. doi: 10.1109/CVPR.2018.00739

[26] HE Lingxiao, SUN Zhenan, ZHU Yuhao, et al. Recognizing partial biometric patterns[J]. arXiv preprint arXiv: 1810.07399, 2018.

[27] MIAO Jiaxu, WU Yu, LIU Ping, et al. Pose-guided feature alignment for occluded person re-identification[C]. 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, South Korea, 2019: 542–551. doi: 10.1109/ICCV.2019.00063

[28] SUN Yifan, XU Qin, LI Yali, et al. Perceive where to focus: Learning visibility-aware part-level features for partial person re-identification[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, USA, 2019: 393–402. doi: 10.1109/CVPR.2019.00048

[29] SUN Yifan, ZHENG Liang, DENG Weijian, et al. Svdnet for pedestrian retrieval[C]. 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 2017: 3820–3828. doi: 10.1109/ICCV.2017.410

[30] SHEN Yantao, LI Hongsheng, YI Shuai, et al. Person re-identification with deep similarity-guided graph neural network[C]. The 15th European Conference on Computer Vision, Munich, Germany, 2018: 508–526. doi: 10.1007/978-3-030-01267-0_30

[31] CHEN Binghui, DENG Weihong, and HU Jiani. Mixed high-order attention network for person re-identification[C]. 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, South Korea, 2019: 371–381. doi: 10.1109/ICCV.2019.00046

[32] DAI Zuozhuo, CHEN Mingqiang, GU Xiaodong, et al. Batch DropBlock network for person re-identification and beyond[C]. 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, South Korea, 2019: 3690–3700. doi: 10.1109/ICCV.2019.00379
