Adversarial Training Defense Based on Second-order Adversarial Examples


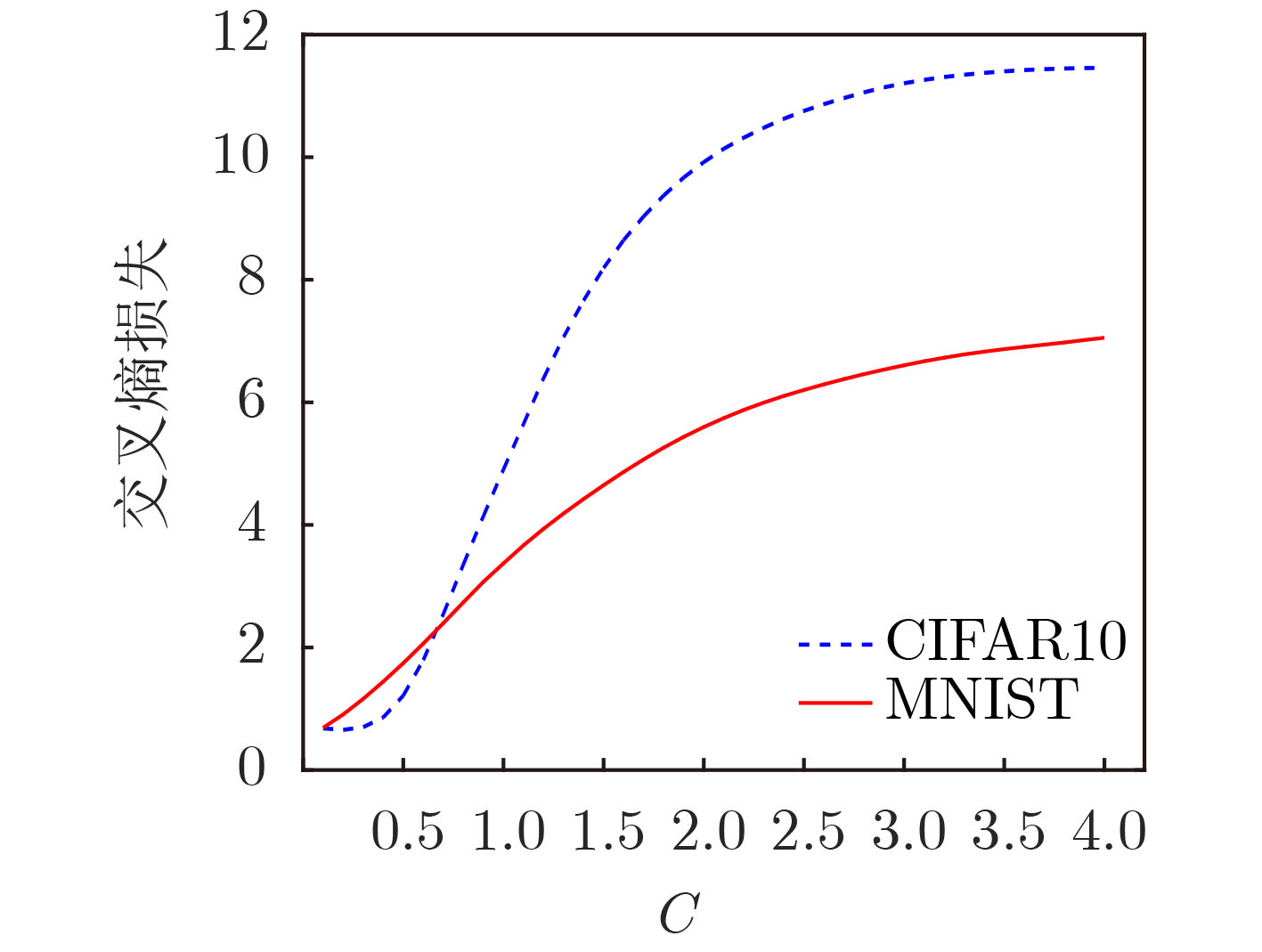

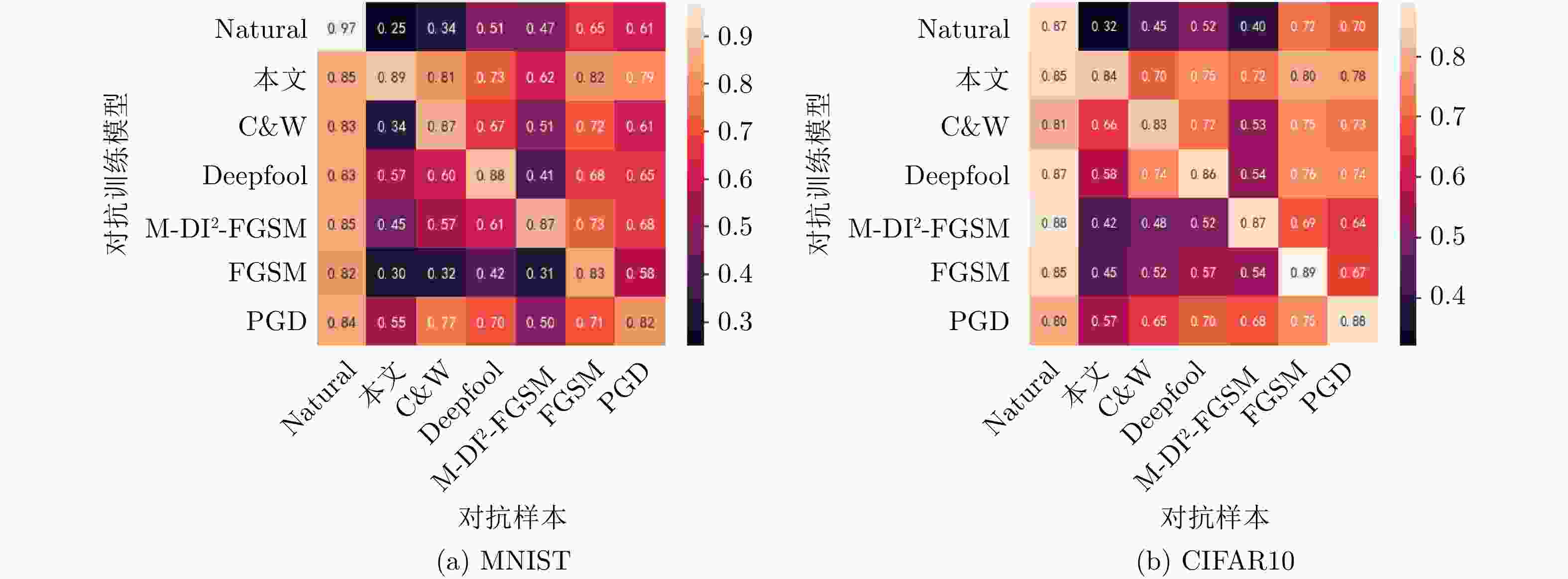

Abstract: Although Deep Neural Networks (DNNs) achieve high accuracy in image recognition, they are vulnerable to adversarial examples. Adversarial training is currently one of the effective methods for defending against adversarial examples. Generating stronger adversarial examples solves the inner maximization problem of adversarial training more accurately, and is the key to improving the effectiveness of adversarial training. To address the inner maximization problem, this paper proposes adversarial training based on second-order adversarial examples, which generates stronger adversarial examples by approximating the loss with a quadratic polynomial within the input neighborhood. A theoretical analysis shows that second-order adversarial examples are stronger than first-order adversarial examples. Experiments on the MNIST and CIFAR10 datasets show that second-order adversarial examples achieve a high attack success rate and high imperceptibility. Compared with PGD adversarial training, adversarial training based on second-order adversarial examples is robust against all of the typical adversarial examples evaluated.
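The quadratic-approximation idea in the abstract, detailed as the algorithm in Table 1, can be illustrated with a minimal sketch. This is not the authors' implementation. It assumes a toy binary logistic-regression model with loss $L(\boldsymbol{x}) = \log(1 + e^{-y\,\boldsymbol{w}^T\boldsymbol{x}})$, chosen only because its input gradient and Hessian have closed forms, and it assumes a zero-initialized $\boldsymbol{\delta}$ that is clipped to an $\ell_\infty$ ball of radius `eps`; Table 1 specifies neither. Because the Hessian is symmetric, the inner loop's ascent direction is $\nabla_{\boldsymbol{\delta}} T(\boldsymbol{\delta}) = \nabla L(\boldsymbol{X}) + \nabla^2 L(\boldsymbol{X})\,\boldsymbol{\delta}$. All function and parameter names are hypothetical.

```python
import math


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def sign(t):
    return (t > 0) - (t < 0)


def loss(w, x, y):
    # Logistic loss log(1 + exp(-y * w^T x)) for a label y in {-1, +1}.
    m = y * dot(w, x)
    return math.log1p(math.exp(-m)) if m >= 0 else -m + math.log1p(math.exp(m))


def input_grad_and_hessian(w, x, y):
    # Steps 4-5: closed-form gradient and Hessian of the loss w.r.t. the input x.
    s = sigmoid(-y * dot(w, x))
    grad = [-y * s * wi for wi in w]
    c = s * (1.0 - s)  # uses y * y == 1
    hess = [[c * wi * wj for wj in w] for wi in w]
    return grad, hess


def second_order_perturbation(w, x, y, eps, alpha, n_steps):
    # Step 6: the quadratic model T(delta) is fixed by grad and hess.
    grad, hess = input_grad_and_hessian(w, x, y)
    delta = [0.0] * len(x)  # assumption: zero initialization
    for _ in range(n_steps):
        # Steps 7-8: sign ascent on T(delta), whose gradient is grad + H @ delta.
        g_t = [gi + dot(row, delta) for gi, row in zip(grad, hess)]
        # Assumption: keep delta inside the l_inf ball of radius eps.
        delta = [max(-eps, min(eps, d + alpha * sign(g))) for d, g in zip(delta, g_t)]
    return delta


def adversarial_train(data, dim, epochs, eps, alpha, n_steps, lr):
    w = [0.0] * dim  # Step 1 (zero initialization is an assumption)
    for _ in range(epochs):
        for x, y in data:
            delta = second_order_perturbation(w, x, y, eps, alpha, n_steps)
            x_adv = [xi + di for xi, di in zip(x, delta)]
            # Step 10: gradient step on the parameters using the adversarial input,
            # where dL/dw = -y * sigmoid(-y * w^T x_adv) * x_adv.
            s = sigmoid(-y * dot(w, x_adv))
            w = [wi + lr * y * s * xa for wi, xa in zip(w, x_adv)]
    return w


data = [([2.0, 1.0], 1), ([1.5, 2.0], 1), ([-2.0, -1.0], -1), ([-1.0, -2.5], -1)]
w = adversarial_train(data, dim=2, epochs=20, eps=0.3, alpha=0.1, n_steps=5, lr=0.5)
x, y = data[0]
delta = second_order_perturbation(w, x, y, eps=0.3, alpha=0.1, n_steps=5)
print("clean loss:", loss(w, x, y))
print("adversarial loss:", loss(w, [a + b for a, b in zip(x, delta)], y))
```

The gradient and Hessian are computed once per sample (steps 4-5), so each inner iteration only needs a matrix-vector product with the fixed quadratic model. Removing the `dot(row, delta)` term reduces the loop to repeated sign steps along the fixed first-order gradient. For a linear model like this one, both variants move in the same direction, so any benefit of the curvature term would have to come from nonlinear models, which this toy example does not capture.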


Table 1 Adversarial training algorithm based on second-order adversarial examples

Input: $\boldsymbol{X}$, the dataset; $T$, the number of training epochs; $M$, the size of the training set; $n$, the number of sign-gradient iterations used to update the perturbation $\boldsymbol{\delta}$; $\alpha$, the perturbation step size; $\tau$, the learning rate.

Output: $\boldsymbol{\theta}$, the model parameters.

1. Initialize the model parameters $\boldsymbol{\theta}$
2. for $\text{epoch} = 1,2,\cdots,T$ do
3. for $m = 1,2,\cdots,M$ do
4. $\nabla L(\boldsymbol{X}) \leftarrow \left[\dfrac{\partial L(\boldsymbol{X})}{\partial \boldsymbol{X}_i}\right]_{n \times 1}$
5. $\nabla^2 L(\boldsymbol{X}) \leftarrow \left[\dfrac{\partial^2 L(\boldsymbol{X})}{\partial \boldsymbol{X}_i\,\partial \boldsymbol{X}_j}\right]_{n \times n}$
6. $T(\boldsymbol{\delta}) = L(\boldsymbol{X}) + \nabla L(\boldsymbol{X})^{T}\boldsymbol{\delta} + \dfrac{1}{2}\boldsymbol{\delta}^{T}\nabla^2 L(\boldsymbol{X})\,\boldsymbol{\delta}$
7. for $k = 1,2,\cdots,n$ do
8. $\boldsymbol{\delta} \leftarrow \boldsymbol{\delta} + \alpha \cdot \text{sign}\left(\nabla_{\boldsymbol{\delta}} T(\boldsymbol{\delta})\right)$
9. end for
10. $\boldsymbol{\theta} \leftarrow \boldsymbol{\theta} - \tau \cdot \nabla_{\boldsymbol{\theta}} L(\boldsymbol{X} + \boldsymbol{\delta})$
11. end for
12. end for

Table 2 Comparison of different adversarial examples on MNIST and CIFAR10

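| Method | MNIST $\ell_2$ | MNIST $\ell_\infty$ | MNIST PSNR | MNIST ASR (%) | CIFAR10 $\ell_2$ | CIFAR10 $\ell_\infty$ | CIFAR10 PSNR | CIFAR10 ASR (%) |
|---|---|---|---|---|---|---|---|---|
| Ours (second-order) | 1.97 | 0.24 | 76.0 | 100 | 1.84 | 0.24 | 81.4 | 100 |
| C&W | 2.56 | 0.27 | 71.4 | 100 | 2.42 | 0.30 | 79.3 | 100 |
| DeepFool | 3.25 | 0.30 | 73.8 | 88.1 | 2.92 | 0.30 | 74.2 | 81.4 |
| M-DI2-FGSM | 2.75 | 0.29 | 75.6 | 95.1 | 3.12 | 0.25 | 77.1 | 91.7 |
| FGSM | 3.26 | 0.30 | 74.2 | 54.1 | 2.34 | 0.30 | 75.0 | 51.3 |
| PGD | 2.25 | 0.26 | 72.3 | 100 | 2.18 | 0.58 | 78.7 | 100 |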
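Table 2 does not state how its metrics are normalized, so the following is only a sketch of the common textbook definitions, used here as an assumption: the $\ell_2$ and $\ell_\infty$ norms of the perturbation, PSNR $= 10\log_{10}(\text{MAX}^2/\text{MSE})$ with pixel values in $[0, \text{MAX}]$, and ASR as the percentage of adversarial examples whose predicted label differs from the true label. Values produced this way need not match the table's scale. The function names are hypothetical.

```python
import math


def perturbation_metrics(clean, adv, max_val=1.0):
    # clean, adv: flattened pixel values of one image, in [0, max_val].
    diff = [a - c for a, c in zip(adv, clean)]
    l2 = math.sqrt(sum(d * d for d in diff))
    linf = max(abs(d) for d in diff)
    mse = sum(d * d for d in diff) / len(diff)
    # Higher PSNR means the adversarial image is closer to the clean one.
    psnr = float("inf") if mse == 0 else 10.0 * math.log10(max_val ** 2 / mse)
    return l2, linf, psnr


def attack_success_rate(true_labels, adv_predictions):
    # Percentage of adversarial examples that the model misclassifies.
    fooled = sum(1 for t, p in zip(true_labels, adv_predictions) if t != p)
    return 100.0 * fooled / len(true_labels)


clean = [0.0, 0.5, 1.0, 0.25]
adv = [0.1, 0.5, 0.9, 0.25]
print(perturbation_metrics(clean, adv))
print(attack_success_rate([3, 7, 1, 0], [5, 7, 2, 0]))
```

In practice ASR is often computed only over samples that the model classifies correctly before the attack; whether Table 2 does so is not stated.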

