Shuffling Step Recognition Using 3D Convolution for Parkinsonian Patients


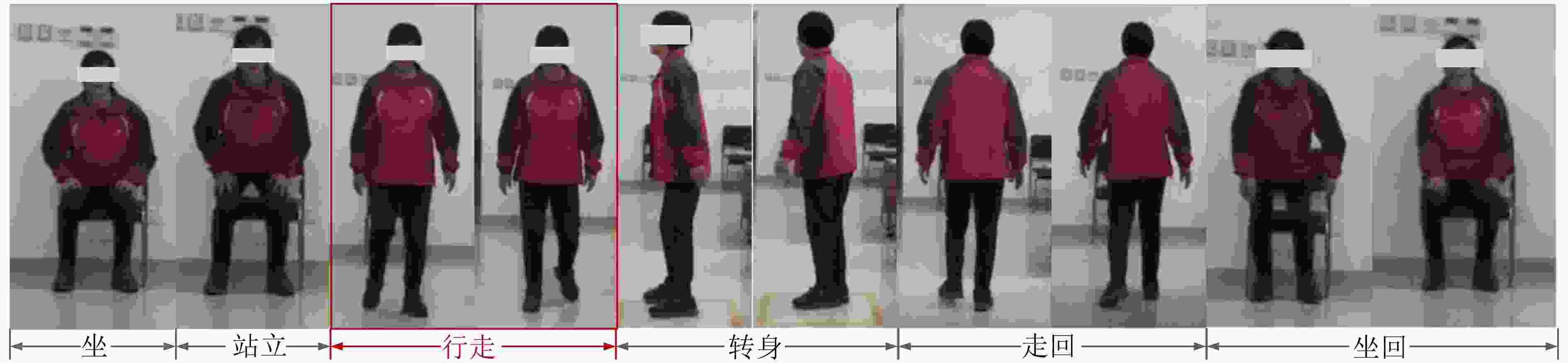

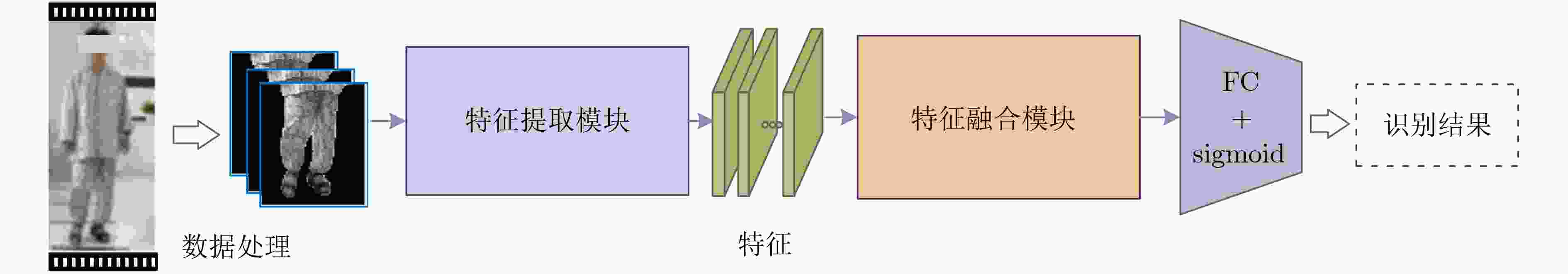

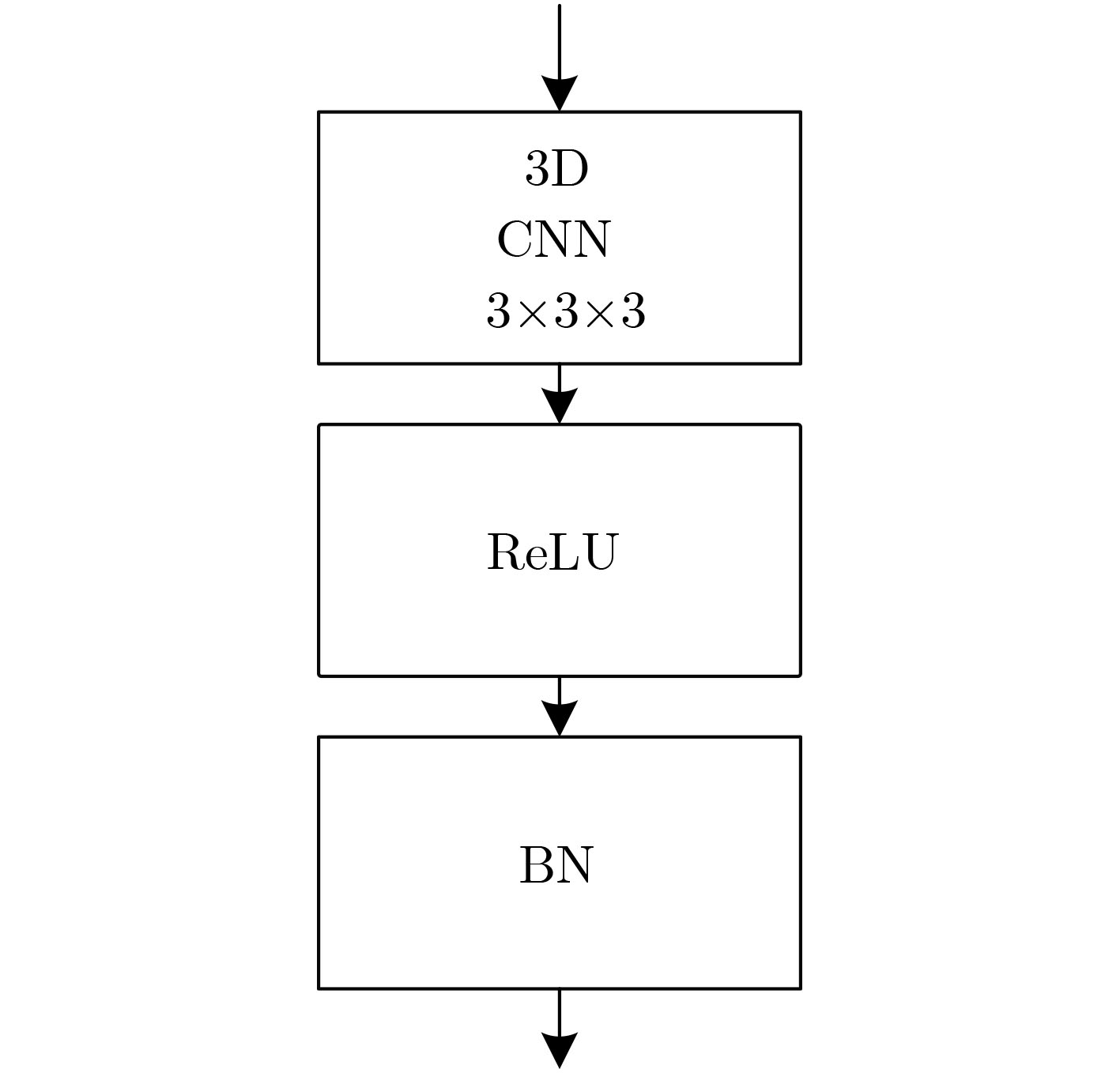

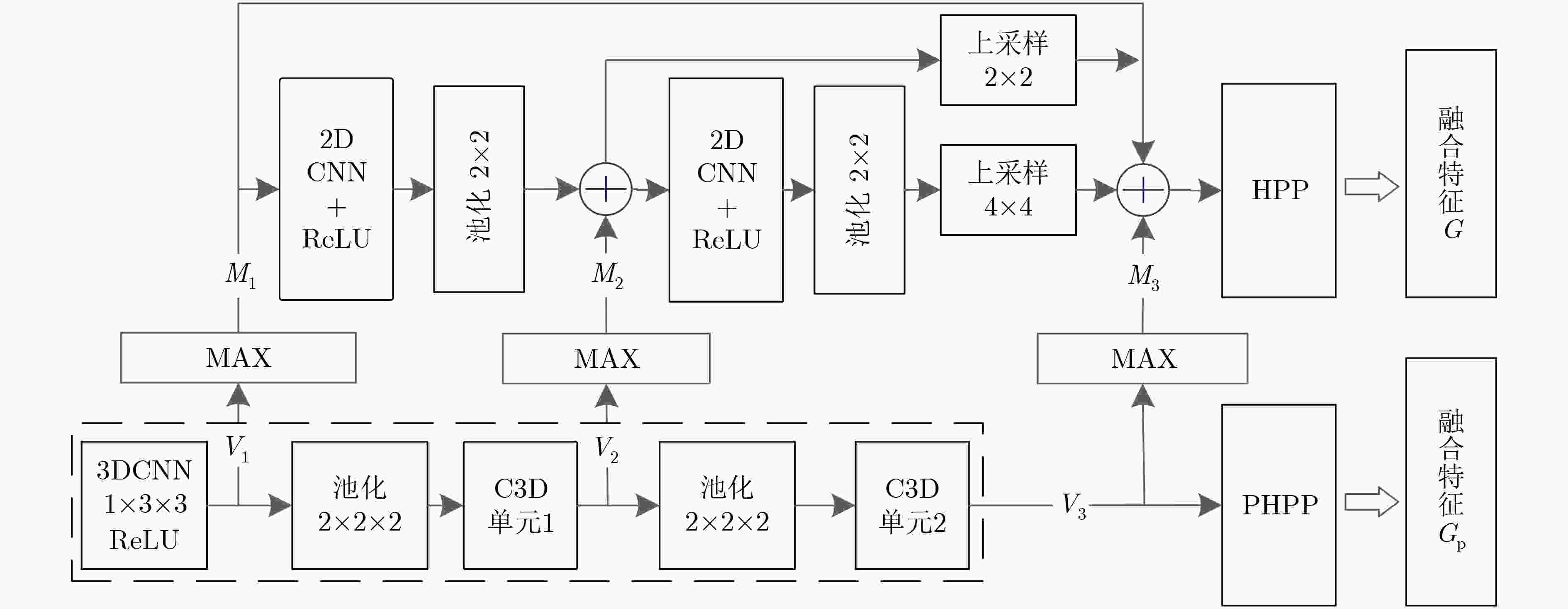

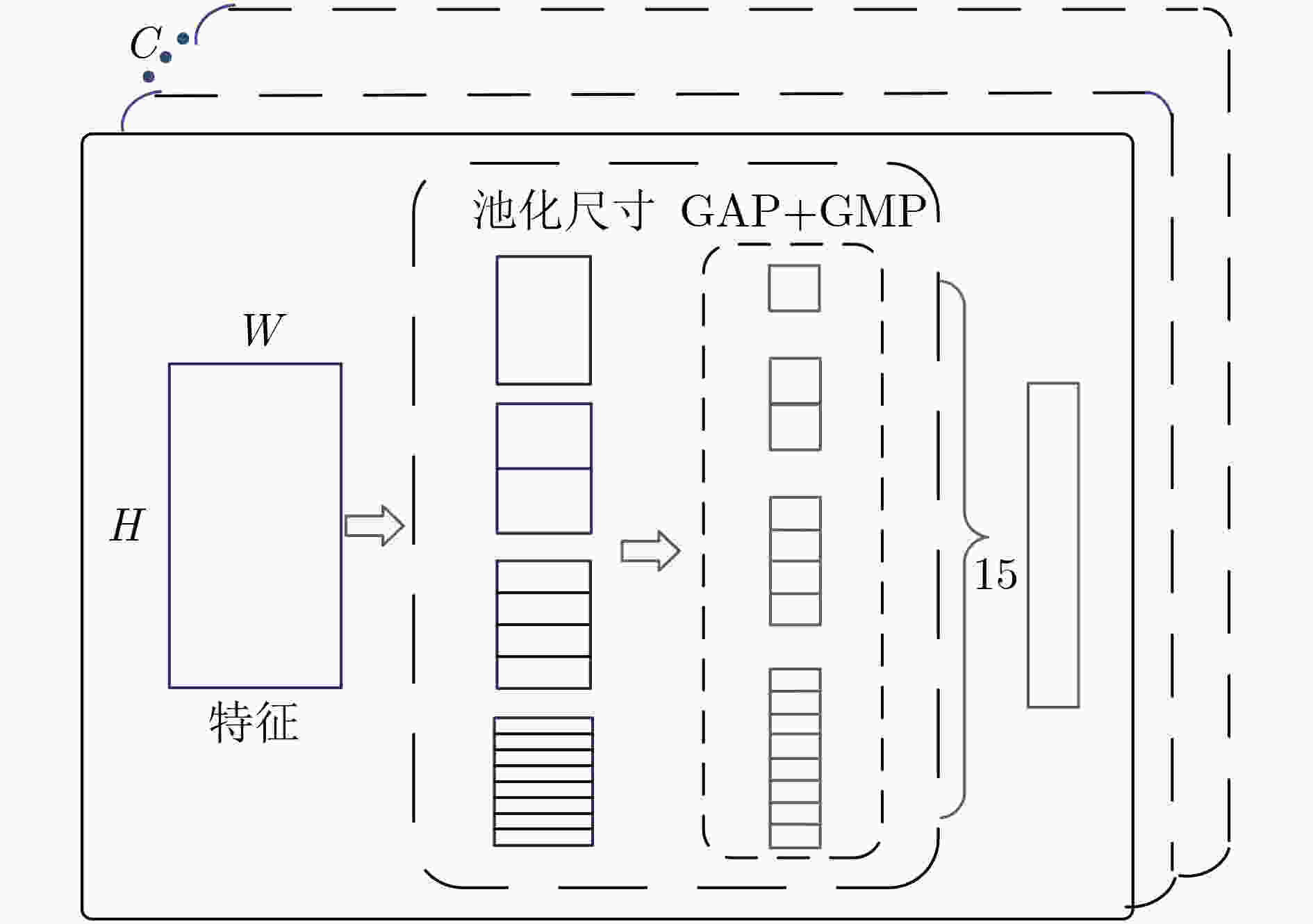

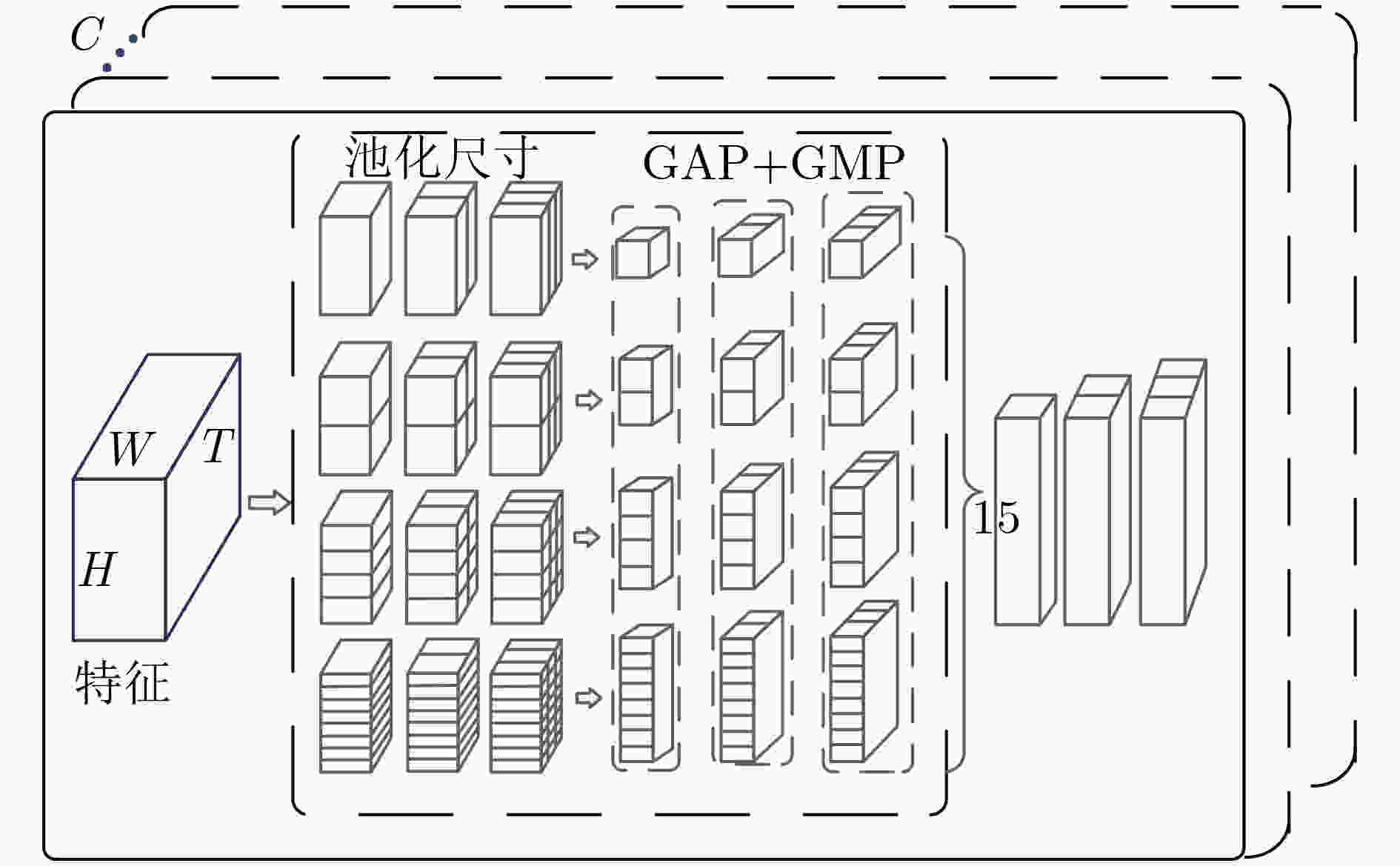

Abstract: Freezing of Gait (FoG) is a common abnormal gait in Parkinson's Disease (PD). The shuffling step is one manifestation of FoG. It is an important factor that physicians use to judge a patient's treatment status, and it strongly affects the daily life of PD patients. This paper proposes a computer-vision method for recognizing the shuffling step automatically. Using a network built on 3D convolution, the method determines from Timed Up-and-Go (TUG) test videos of PD patients whether the patient shows the shuffling step symptom. A feature extraction module first extracts spatiotemporal features from the preprocessed video sequence; these features are then fused across different spatial and temporal scales and fed into a classification network to obtain the recognition result. The experimental dataset consists of 364 normal gait samples and 362 shuffling step samples. Experiments on this dataset show that the method reaches an average accuracy of 91.3%. Because it recognizes the shuffling step automatically and accurately from TUG test videos that are routinely used in clinical practice, the method also supports remote monitoring of the treatment status of PD patients.
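To make the term "3D convolution" in the abstract concrete, the following is a minimal pure-Python sketch of a single-channel 3D convolution over a tiny clip, followed by global average pooling. It is an illustration only, not the network described in the paper: the function names `conv3d_valid` and `global_average`, the toy clip, and the temporal-difference kernel are all assumptions made for this example.

```python
def conv3d_valid(clip, kernel):
    """Naive single-channel 3D convolution (cross-correlation form,
    no padding, stride 1). Hypothetical helper for illustration.

    clip:   nested lists indexed as [time][row][col]
    kernel: nested lists indexed as [kt][kh][kw]
    """
    T, H, W = len(clip), len(clip[0]), len(clip[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for t in range(T - kt + 1):
        plane = []
        for y in range(H - kh + 1):
            row = []
            for x in range(W - kw + 1):
                s = 0.0
                # Sum over the kernel's temporal and spatial extent at once,
                # which is what lets one filter respond to motion.
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            s += clip[t + dt][y + dy][x + dx] * kernel[dt][dy][dx]
                row.append(s)
            plane.append(row)
        out.append(plane)
    return out


def global_average(volume):
    """Collapse a [time][row][col] feature volume to a single scalar."""
    values = [v for plane in volume for row in plane for v in row]
    return sum(values) / len(values)


# Toy clip (assumed data): three 2x2 frames with uniform brightness 0, 1, 3.
clip = [
    [[0, 0], [0, 0]],
    [[1, 1], [1, 1]],
    [[3, 3], [3, 3]],
]
# A 2x1x1 kernel that takes the difference between consecutive frames.
kernel = [[[-1.0]], [[1.0]]]

features = conv3d_valid(clip, kernel)
pooled = global_average(features)
```

Here the kernel spans two frames, so each output value measures how much a pixel changed over time; a practical network would learn many such kernels with spatial extent as well and stack them in layers.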


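Table 1 Statistics of PD patient information

| | Mean | Range |
|---|---|---|
| Age | 56.79±9.48 | [37, 73] |
| Weight (kg) | 63.8±10.37 | [49, 90] |
| Height (cm) | 164.8±6.12 | [156, 178] |

Table 2 Comparison of results for different networks (%)

Table 3 Experiments on network structures with different components

| Structure | Accuracy (%) | Precision (%) | Recall (%) | Parameters (M) | FLOPs (M) | Time (ms) |
|---|---|---|---|---|---|---|
| Without UP and PHPP structures | 88.1 | 89.0 | 88.2 | 1.5 | 2.9 | 3.7 |
| Without PHPP structure | 89.7 | 88.2 | 93.6 | 1.6 | 3.2 | 5.1 |
| Full structure | 91.3 | 89.7 | 92.0 | 1.9 | 3.8 | 7.5 |

Table 4 Comparison of experimental results for multiple image input formats (%)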

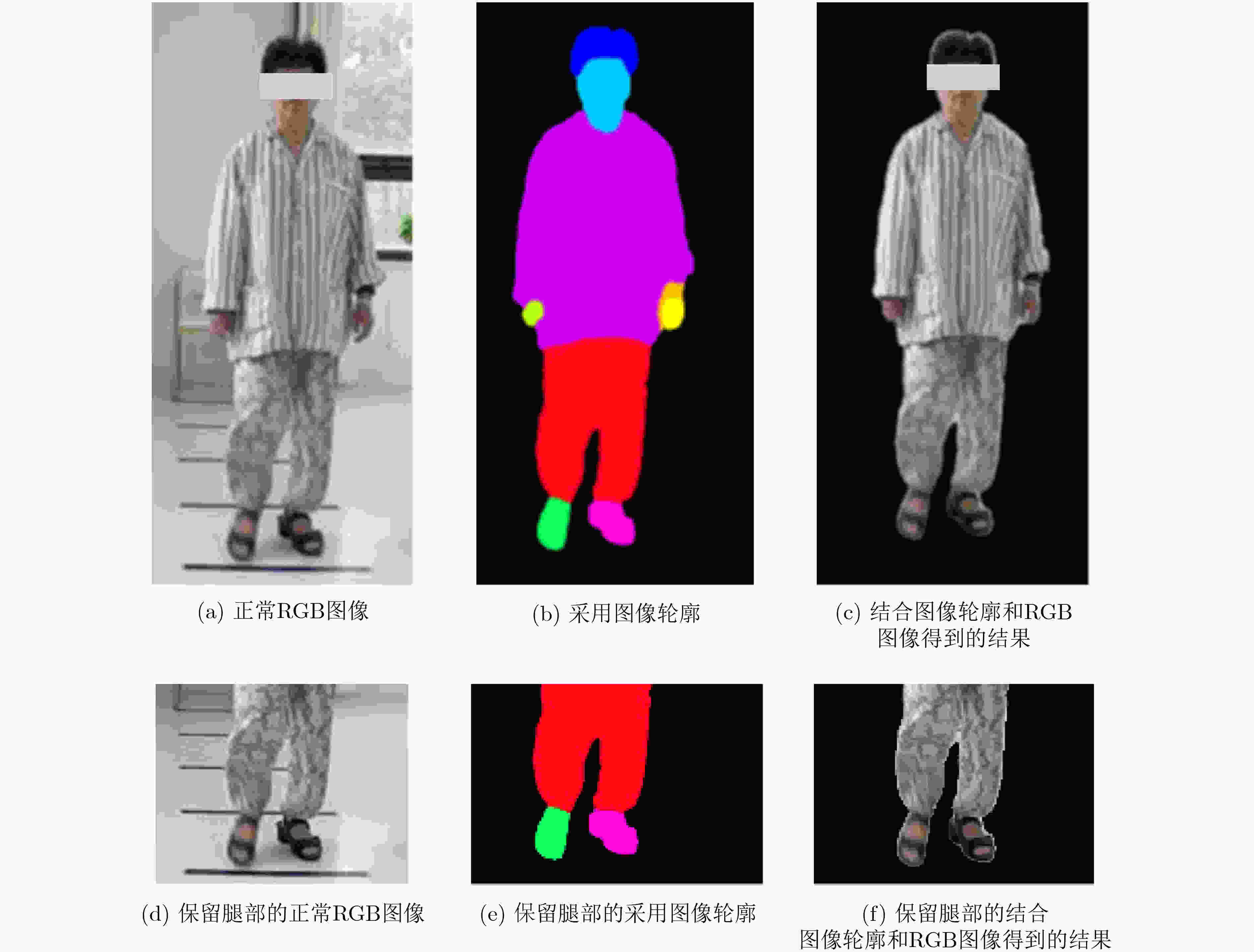

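| Input data format | Accuracy | Precision | Recall |
|---|---|---|---|
| a | 84.8 | 84.6 | 90.2 |
| b | 81.1 | 83.9 | 80.4 |
| c | 82.9 | 83.7 | 87.4 |
| d | 84.9 | 85.0 | 90.1 |
| e | 83.9 | 83.5 | 87.3 |
| f | 90.8 | 92.1 | 90.8 |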
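The accuracy, precision, and recall columns in the tables follow, presumably, the standard binary-classification definitions. The sketch below shows those definitions under the assumption that the shuffling step is treated as the positive class; the function name `classification_metrics` and the confusion counts are hypothetical and are not taken from the paper's experiments.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return (accuracy, precision, recall) from confusion counts.

    tp: shuffling-step samples predicted as shuffling step
    fp: normal-gait samples predicted as shuffling step
    fn: shuffling-step samples predicted as normal gait
    tn: normal-gait samples predicted as normal gait
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    # Guard against empty denominators when a class is never predicted/present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall


# Hypothetical counts, chosen only to illustrate the arithmetic.
accuracy, precision, recall = classification_metrics(tp=90, fp=10, fn=10, tn=90)
```

Precision penalizes false alarms on normal gait, while recall penalizes missed shuffling episodes, which is why the two can move in opposite directions across rows of the same table.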

