A Multi-scale Detection Method for Dropper States in High-speed Railway Contact Network Based on RefineDet Network and Hough Transform


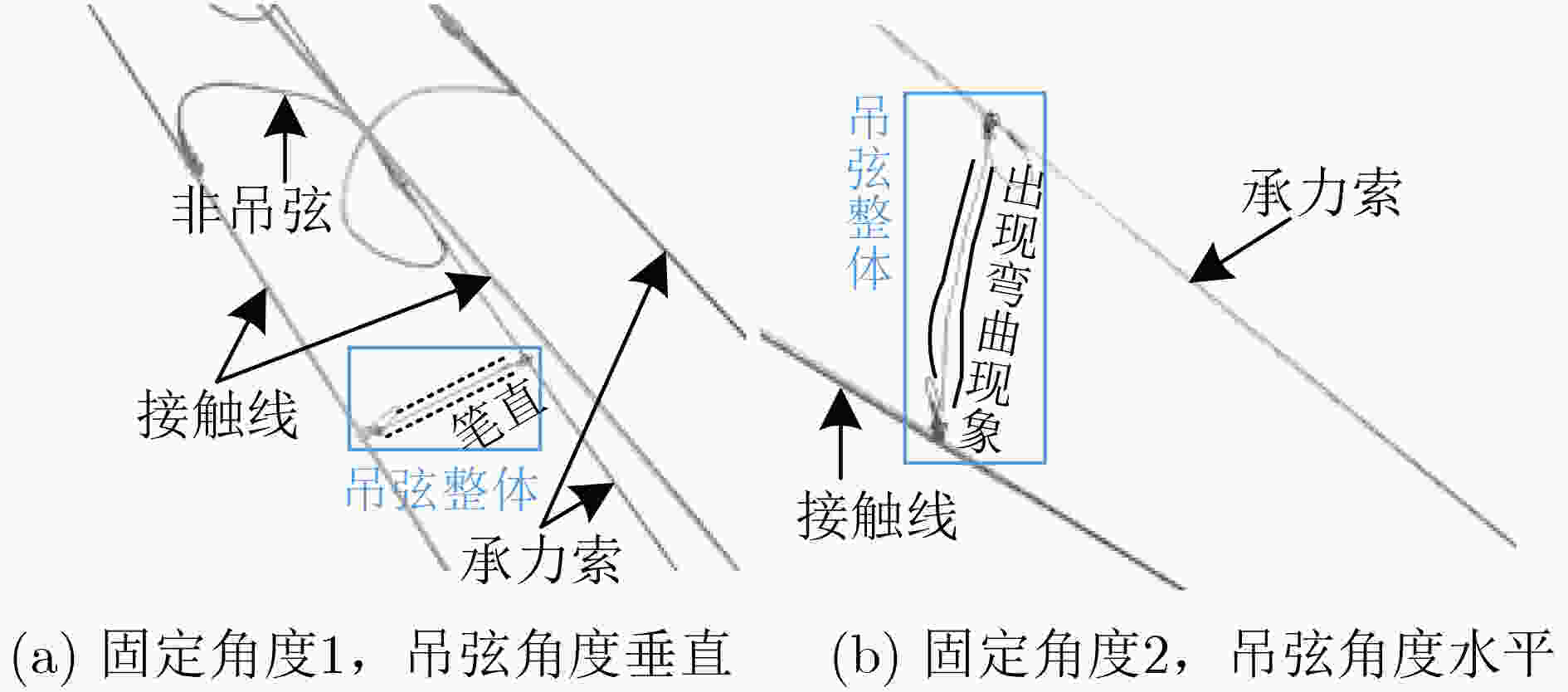

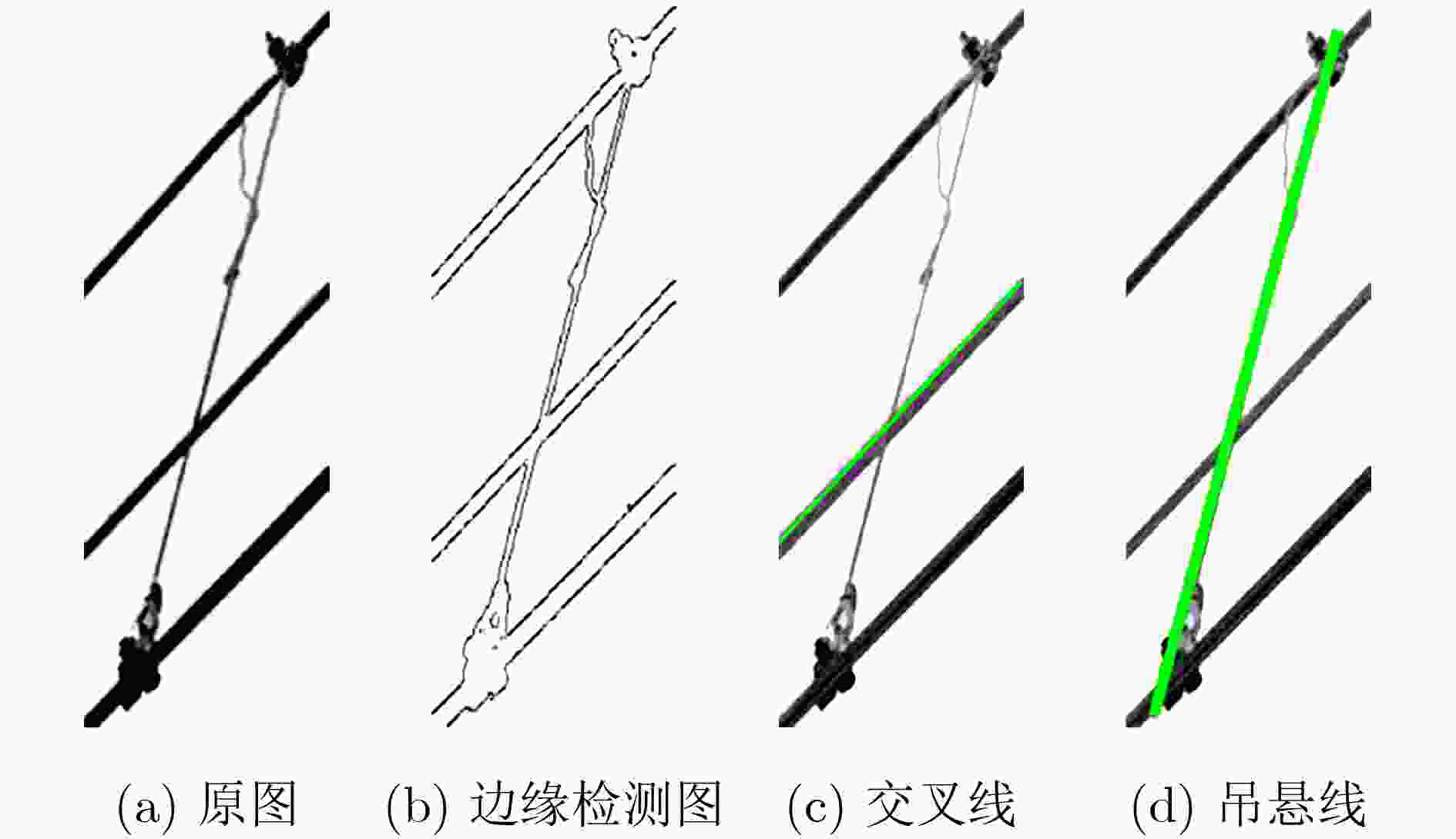

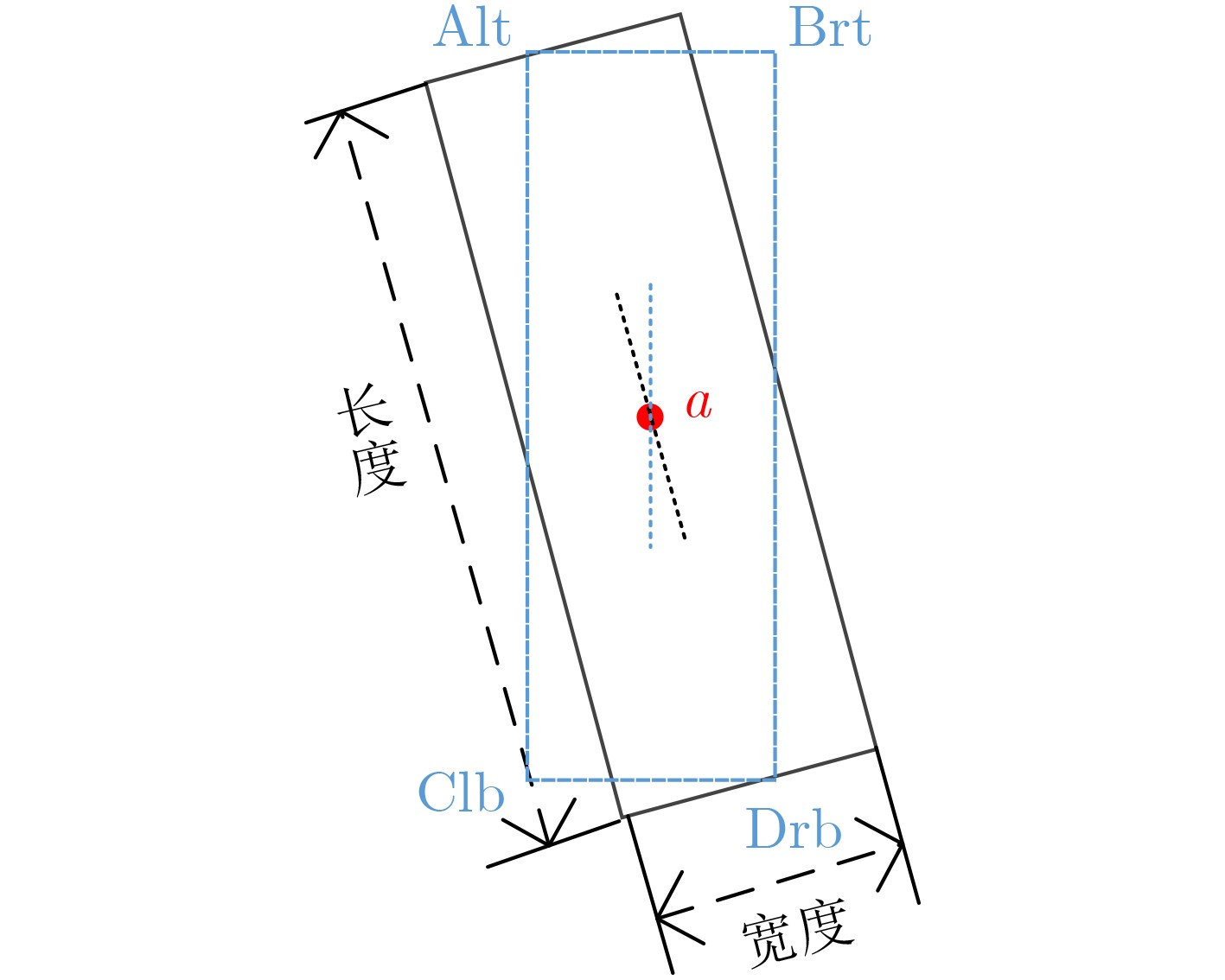

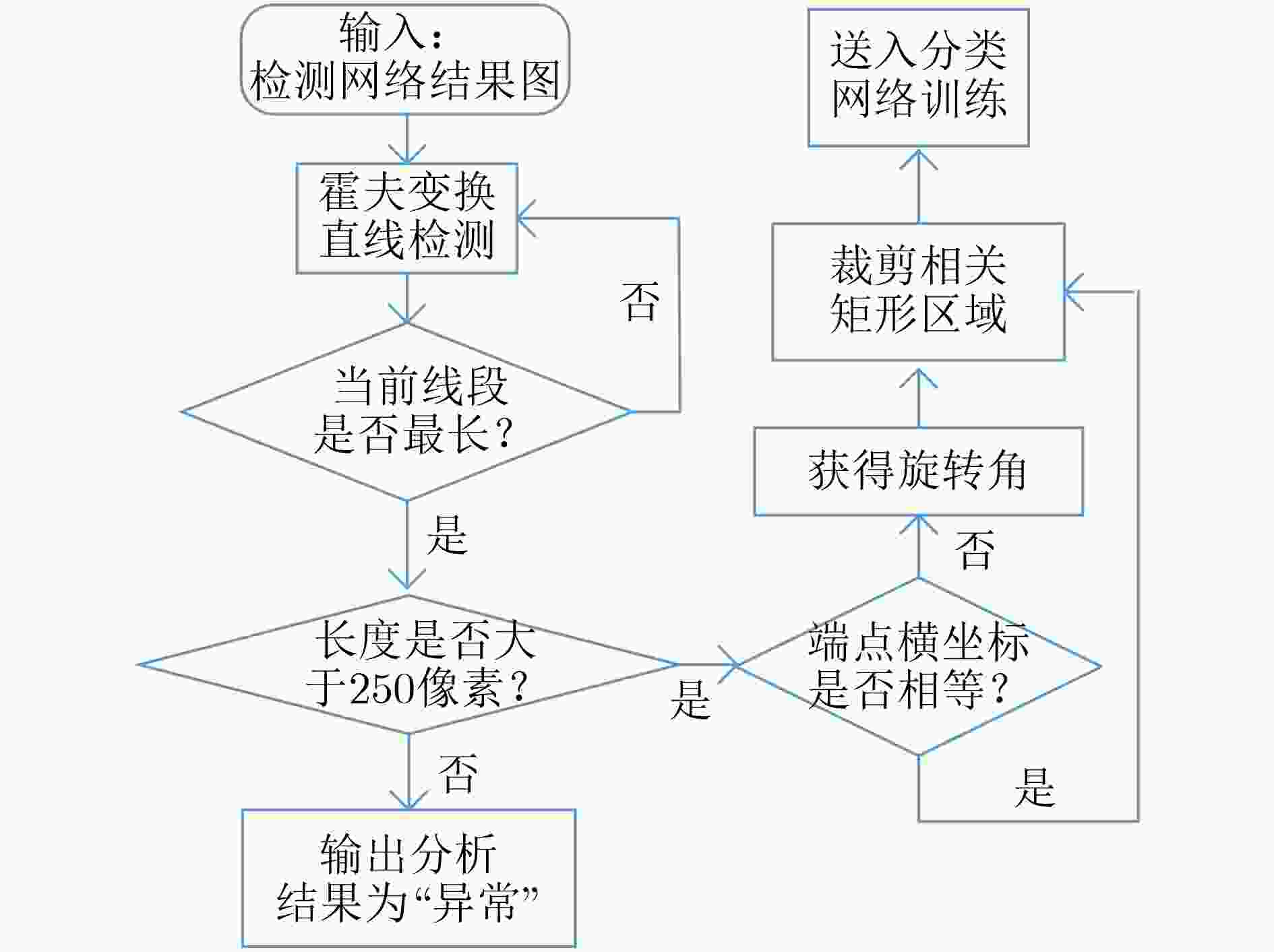

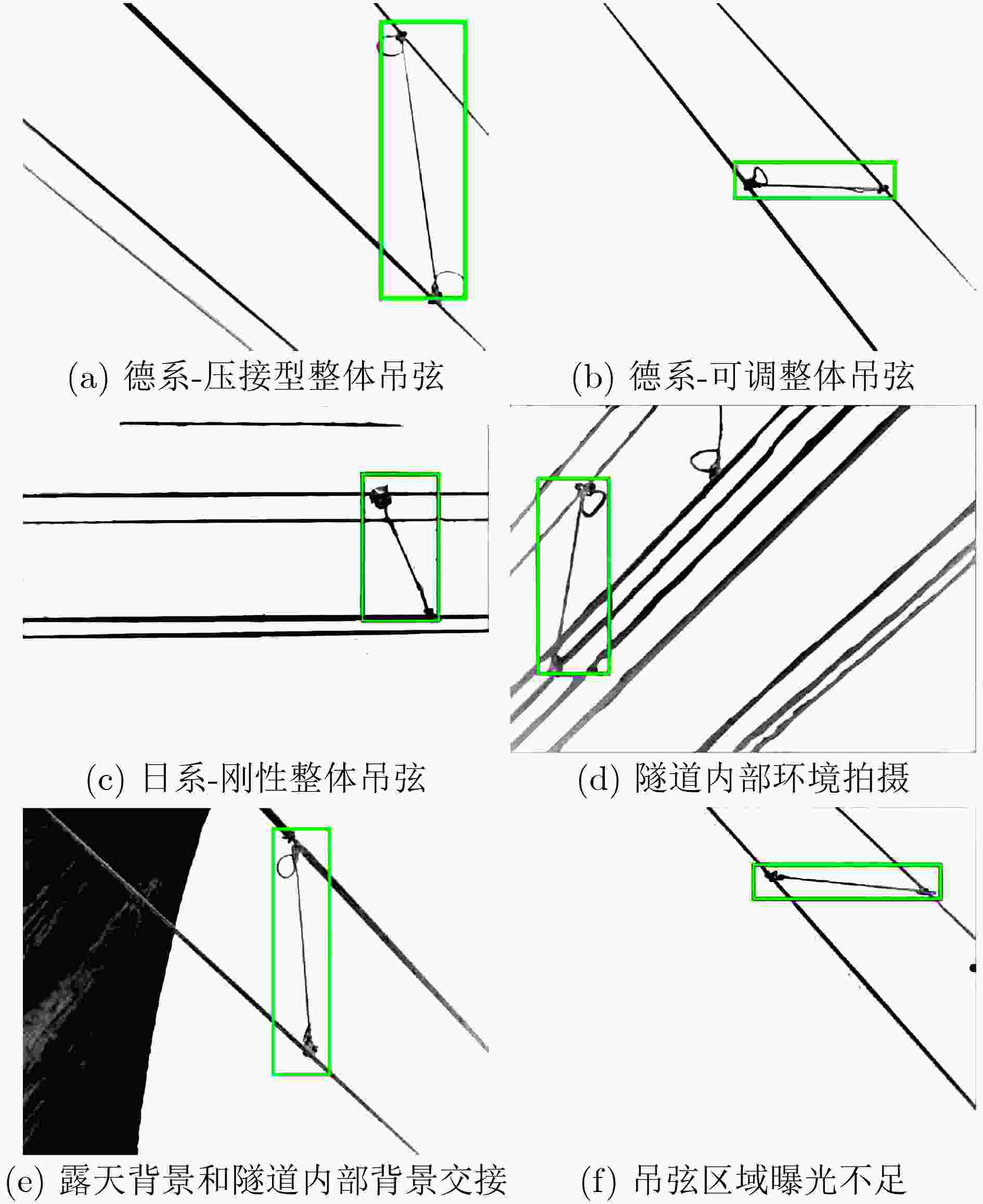

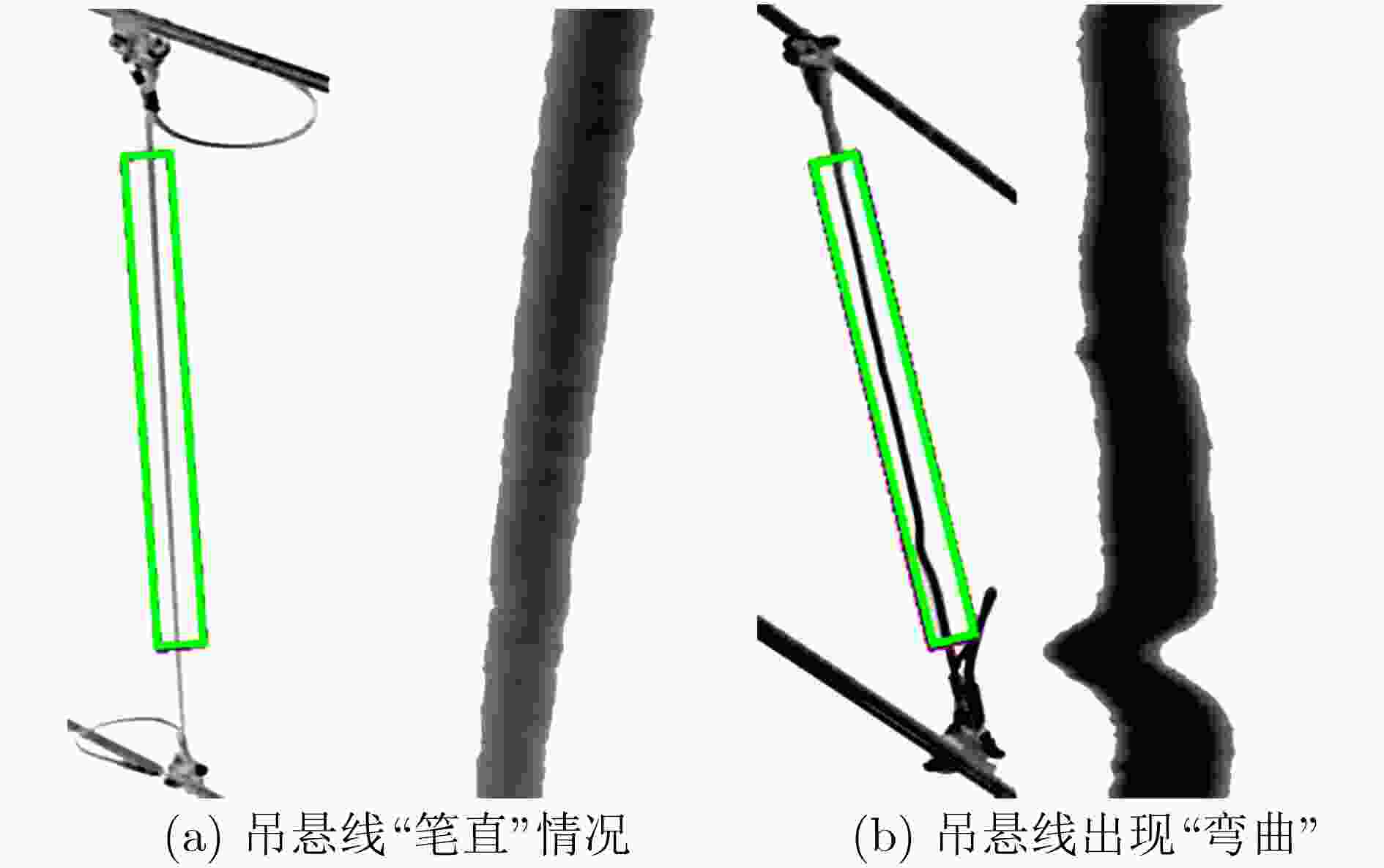

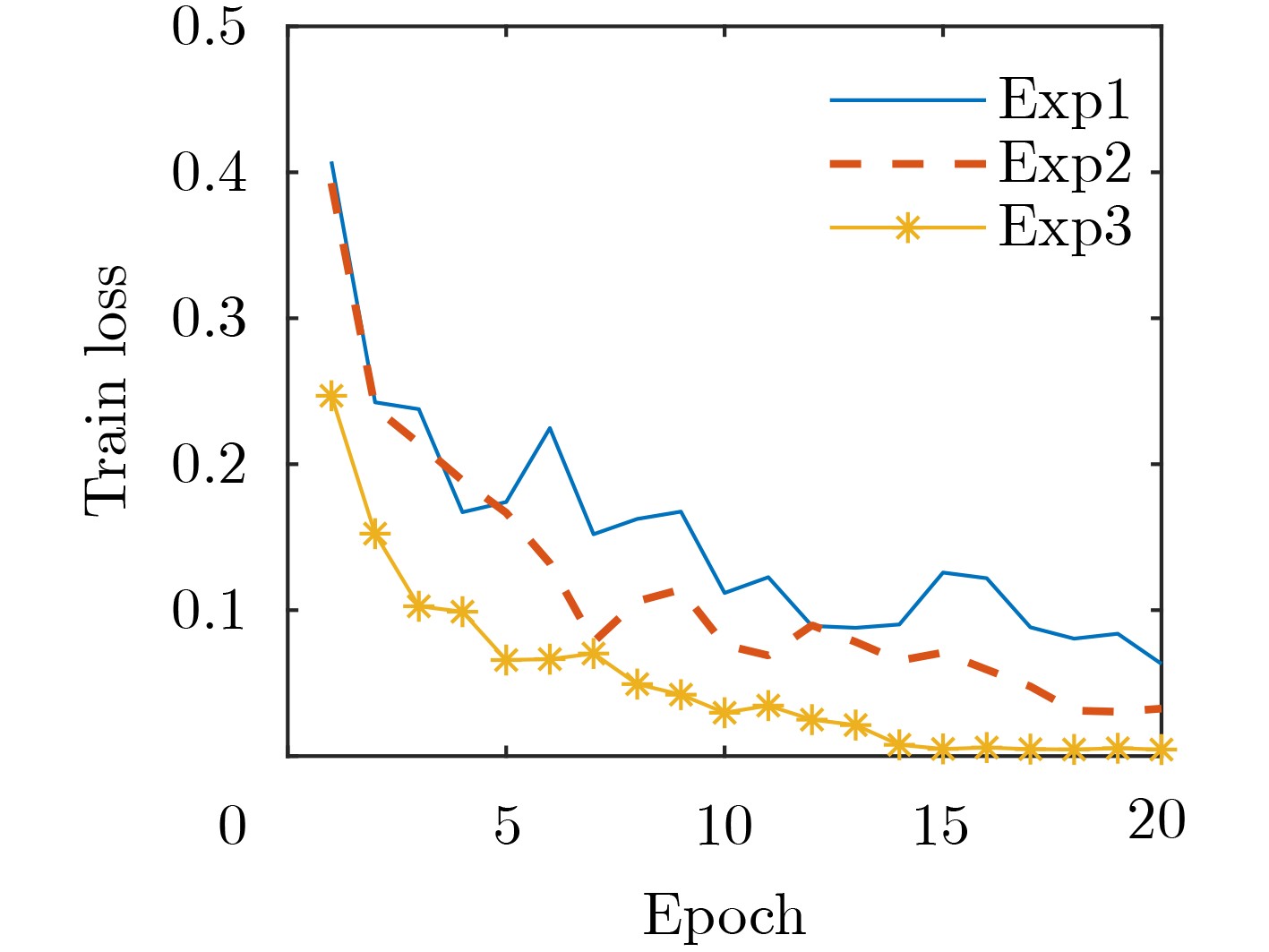

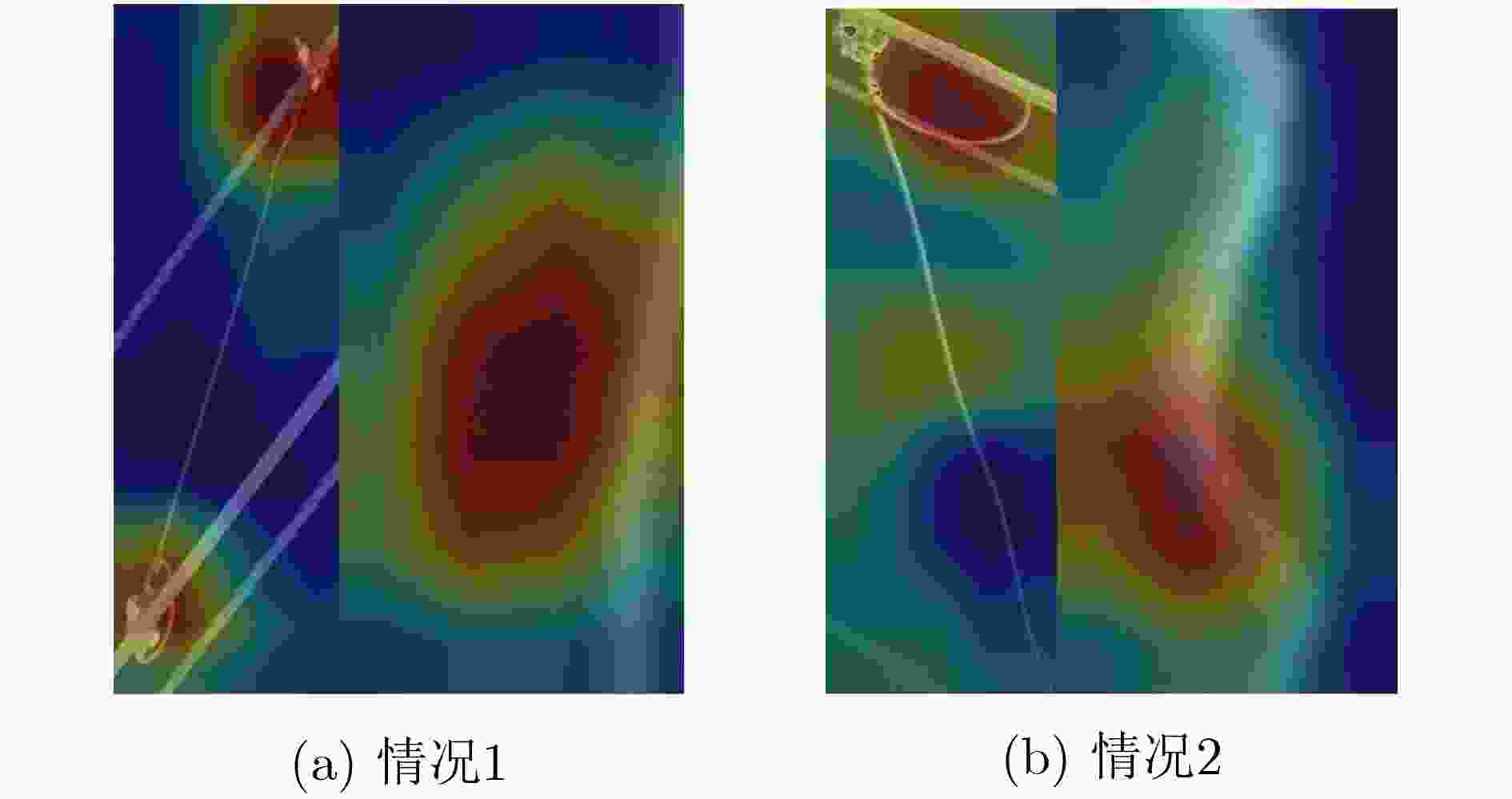

Abstract: To address state detection of catenary droppers on high-speed railways, this paper proposes a multi-scale dropper localization and recognition method based on the RefineDet network and the Hough transform. The coarse-adjustment and fine-adjustment modules of the RefineDet network first locate the overall dropper structure. The Hough transform then finds the straight line on which the dropper wire in the middle of the dropper lies, and a rotation factor is used to extract the dropper-wire region along that line. This dropper-wire region, instead of the whole dropper region returned by the detection network, is fed into the classification network for training, and the resulting multi-scale dropper state detection model recognizes the dropper state. Experiments show that the accuracy of the dropper localization model is above 95.3%. The Hough transform removes the interference of invalid regions on dropper state recognition and speeds up training of the classification network, and the accuracy of the dropper state recognition model is above 97.5%.


Key words:

- Target detection

- Deep learning

- Contact network 4C

- Defect analysis

- Hough transform
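As a rough illustration of the Hough-transform step named in the abstract and listed as pseudocode in Table 1, the voting loop can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the function names `hough_vote` and `strongest_segment` are hypothetical, the offset `L` of Table 1 is assumed to equal the image diagonal rounded up, bins are chosen by rounding, and the end points M and N are taken to be the two extreme edge pixels that voted for the strongest bin. The edge map is assumed to come from a Canny detector, with edge pixels equal to 255.

```python
import numpy as np


def hough_vote(edge, theta_step_deg=1.0, gamma_step=1.0):
    """Accumulate Hough votes for an edge map whose edge pixels equal 255."""
    h, w = edge.shape
    gamma_max = np.hypot(h, w)                # image diagonal, as in Table 1
    offset = int(np.ceil(gamma_max))          # assumed meaning of L in Table 1
    thetas = np.deg2rad(np.arange(0.0, 180.0, theta_step_deg))
    n_bins = int(np.ceil(2 * offset / gamma_step)) + 1
    count = np.zeros((n_bins, len(thetas)), dtype=np.int64)
    store = {}                                # (n, m) -> list of voting pixels
    rows, cols = np.nonzero(edge == 255)
    for i, j in zip(rows, cols):
        # gamma = i*cos(theta) + j*sin(theta) for every angle bin at once
        gammas = i * np.cos(thetas) + j * np.sin(thetas)
        bins = np.round((gammas + offset) / gamma_step).astype(int)
        for m, n in enumerate(bins):
            count[n, m] += 1
            store.setdefault((int(n), m), []).append((int(i), int(j)))
    return count, store, thetas, offset


def strongest_segment(count, store):
    """Return the two extreme pixels (row, col) of the most-voted line."""
    n, m = np.unravel_index(np.argmax(count), count.shape)
    pts = sorted(store[(int(n), int(m))])     # sort by row, then by column
    return pts[0], pts[-1]
```

On a synthetic edge map that contains a single straight stroke, `strongest_segment(*hough_vote(edge)[:2])` returns the two ends of that stroke in (row, column) order. A production pipeline would more likely call an existing Hough routine from an image-processing library; the explicit loop is kept here only because it mirrors the steps of Table 1.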


Table 1 Pseudocode of the Hough-transform-based dropper wire detection algorithm

Input: images from the RefineDet network ($h_{\rm image} \times w_{\rm image}$)
Output: coordinate pairs of the line-segment end points $M(x_1, y_1)$, $N(x_2, y_2)$
1: Detect edges in the image with the Canny operator
2: Set $\theta_{\rm step} = 1°$, $\gamma_{\rm step} = 1$ pixel; $\theta_{\max} = 180°$, $\gamma_{\max} = \sqrt{h_{\rm image}^2 + w_{\rm image}^2}$
3: for $i \in h_{\rm image}$, $j \in w_{\rm image}$ do:
4:   if edge[i][j] == 255 then:
5:     for $m \in 180°/\theta_{\rm step}$ do:
6:       $\gamma = i \cdot \cos(\theta_{\rm step} \cdot m/180 \cdot \pi) + j \cdot \sin(\theta_{\rm step} \cdot m/180 \cdot \pi)$
7:       $n = (\gamma + L)/\gamma_{\rm step}$
8:       count[n, m] += 1
9:       append (i, j) to store[n, m]
10:     end for
11:   end if
12: end for

Table 2 ResNet18 network structure

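| Layer name | Output | Structure |
| --- | --- | --- |
| conv1 | P/2×Q/2 | Convolution kernel = 7×7, stride = 2 |
| conv_2x | P/4×Q/4 | 3×3 max pooling, stride = 2; 2 × residual mapping module 1 |
| conv_3x | P/8×Q/8 | 2 × residual mapping module 2 |
| conv_4x | P/16×Q/16 | 2 × residual mapping module 3 |
| conv_5x | P/32×Q/32 | 2 × residual mapping module 4 |
| | 1×1 | Fully connected layer |

Table 3 Datasets for dropper detection and localization and for state analysis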

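| Category | Detection and localization dataset: German-type | Detection and localization dataset: Japanese-type | State analysis dataset: normally stressed | State analysis dataset: abnormally stressed |
| --- | --- | --- | --- | --- |
| Training set (images) | 2000 | 1000 | 592 | 317 |
| Test set (images) | 200 | 100 | 149 | 80 |

Table 4 Training parameters of the two networks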

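| Parameter | Detection and localization network | State analysis network |
| --- | --- | --- |
| Learning rate | 0.0005 | 0.0001 |
| Offset | 0.0001 | 0.0001 |
| Batch size | 16 | 16 |
| Iterations | 120000 | / |
| Epochs | / | 20 |

Table 5 Localization results of the RefineDet dropper detection model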

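| Dropper type | Droppers | Correct | False detections | Missed detections | Accuracy (%) |
| --- | --- | --- | --- | --- | --- |
| German-type | 340 | 324 | 27 | 16 | 95.3 |
| Japanese-type | 100 | 99 | 0 | 1 | 99.0 |

Table 6 Localization results of different network models on the dropper dataset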

Table 7 Comparison of test-set results for the three experiments

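| Experiment | Detection and localization result | Grayscale adjustment | Hough transform | Accuracy, normally stressed (%) | Accuracy, abnormally stressed (%) |
| --- | --- | --- | --- | --- | --- |
| 1 | + | - | - | 99.33 | 88.75 |
| 2 | + | + | - | 98.66 | 91.25 |
| 3 | + | + | + | 99.33 | 97.50 |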
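The percentages in Tables 5 and 7 can be checked with a few lines of arithmetic. The sketch below assumes that accuracy means correct samples divided by total samples. The Table 5 counts are taken directly from the table. For Table 7, the test-set sizes (149 normally stressed, 80 abnormally stressed) come from Table 3, while the per-experiment correct counts are back-calculated assumptions that happen to reproduce the reported figures; they are not stated in the source.

```python
def accuracy_pct(correct: int, total: int) -> float:
    """Accuracy as a percentage: correct / total * 100."""
    return 100.0 * correct / total


# Table 5: (correctly located droppers, total droppers).
localization = {"German-type": (324, 340), "Japanese-type": (99, 100)}
loc_acc = {name: round(accuracy_pct(c, t), 1)
           for name, (c, t) in localization.items()}

# Table 7: test-set sizes from Table 3. The correct counts below are
# ASSUMED (back-calculated), not values reported in the source.
N_NORMAL, N_ABNORMAL = 149, 80
assumed_correct = {1: (148, 71), 2: (147, 73), 3: (148, 78)}
state_acc = {
    exp: (round(accuracy_pct(c_norm, N_NORMAL), 2),
          round(accuracy_pct(c_abn, N_ABNORMAL), 2))
    for exp, (c_norm, c_abn) in assumed_correct.items()
}

print(loc_acc)
print(state_acc)
```

Under these assumptions, adding the Hough transform in experiment 3 corresponds to five more abnormally stressed test samples being classified correctly than in experiment 2, out of 80.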

