Self-adaptive Entropy Weighted Decision Fusion Method for Ship Image Classification Based on Multi-scale Convolutional Neural Network


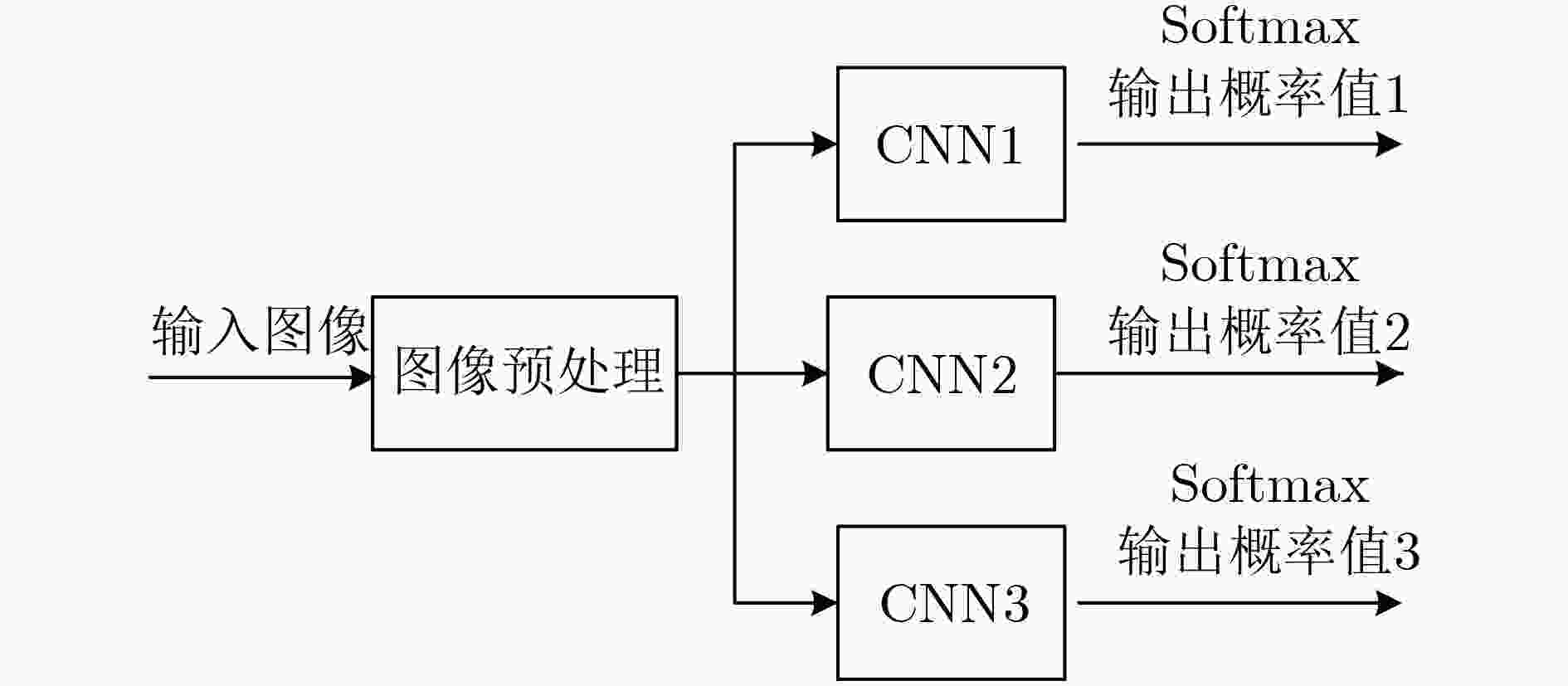

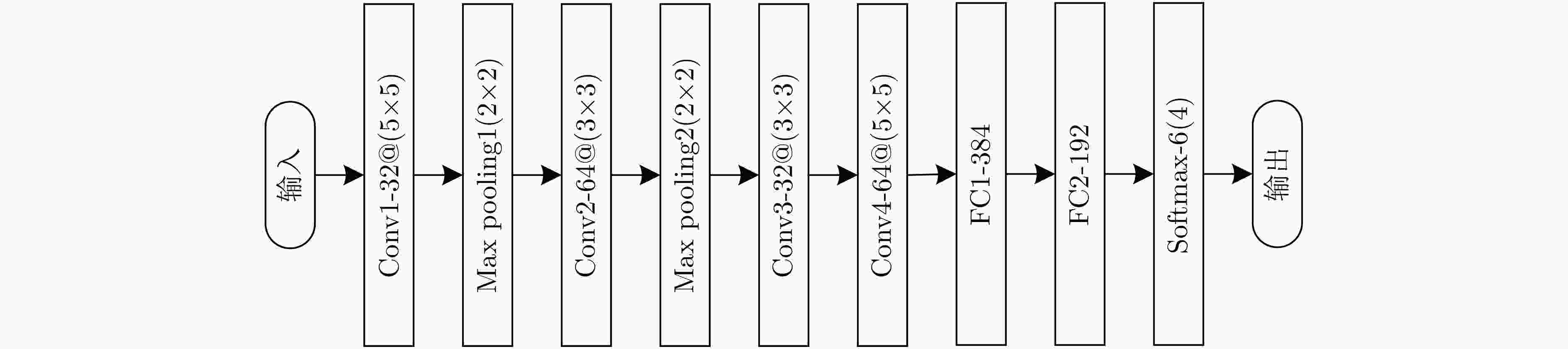

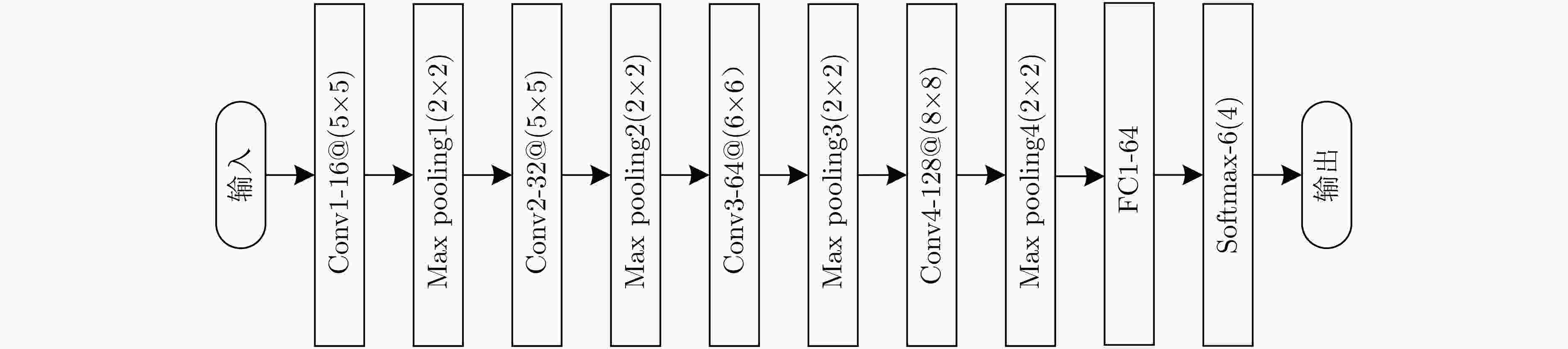

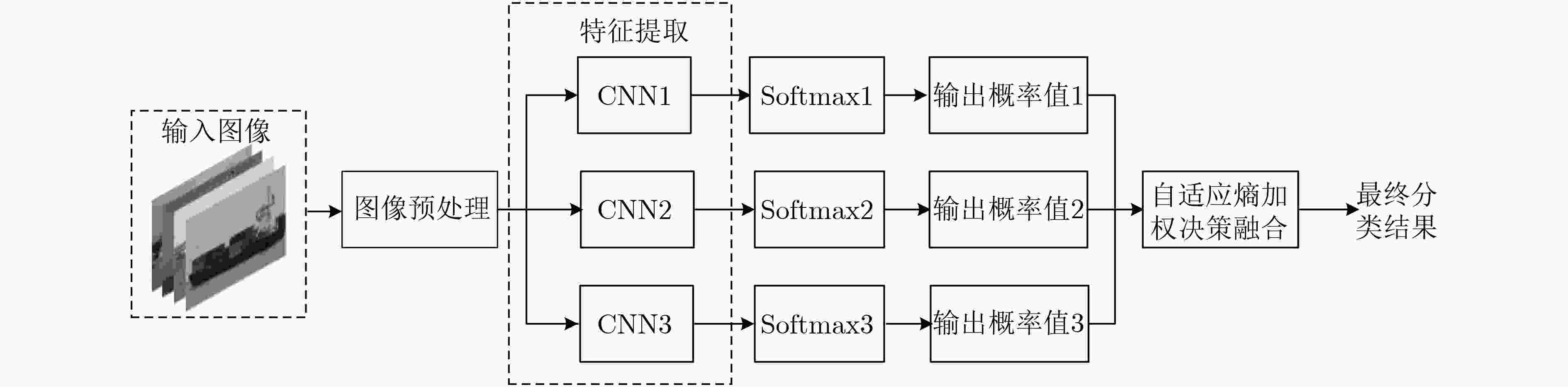

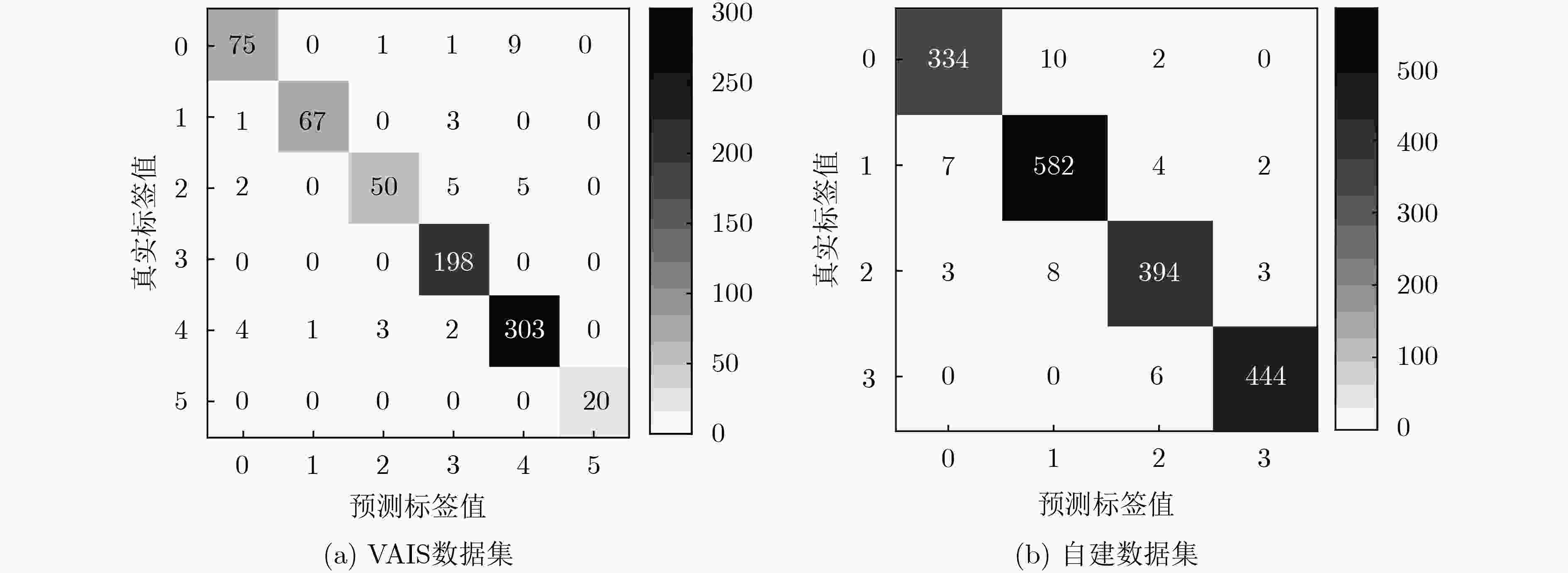

Abstract: To address the limitations of single-scale Convolutional Neural Networks (CNNs) in ship image classification, a self-adaptive entropy weighted decision fusion method based on a multi-scale CNN is proposed. First, the multi-scale CNN extracts multi-scale features from ship images at different sizes, and the optimal model of each sub-network is obtained through training. Next, the test-set ship images are fed into these optimal models to obtain the probabilities output by the Softmax function of each sub-network. The information entropy of these probabilities is then computed and used to assign a self-adaptive fusion weight to each input ship image. Finally, self-adaptive entropy weighted decision fusion is applied to the Softmax probabilities of the different sub-networks to produce the final classification. Experiments are performed on the VAIS (Visible And Infrared Spectrums) dataset and on a self-built dataset, where the proposed method achieves classification accuracies of 95.07% and 97.50%, respectively. The experimental results show that the proposed method outperforms both single-scale CNN classification methods and other recent methods.
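The abstract describes the fusion step only at a high level. Below is a minimal sketch of entropy-weighted decision fusion for a single test image, assuming three sub-networks (called CNN1 to CNN3 here to match the tables) whose Softmax vectors are already available. The weighting rule, w_k proportional to 1/(H_k + ε) with H_k = -Σ_c p_k(c) ln p_k(c), is an assumption chosen for illustration; this section does not state the paper's exact formula, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def shannon_entropy(probs: Sequence[float]) -> float:
    """Entropy H = -sum_c p(c) * ln p(c) of one sub-network's Softmax output."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def entropy_weights(outputs: Sequence[Sequence[float]], eps: float = 1e-12) -> List[float]:
    """Per-image fusion weights: lower entropy (more confident) -> larger weight.

    ASSUMPTION: w_k = (1 / (H_k + eps)) / sum_j (1 / (H_j + eps)).
    The paper's exact weighting formula is not given in this section.
    """
    inverse = [1.0 / (shannon_entropy(p) + eps) for p in outputs]
    total = sum(inverse)
    return [v / total for v in inverse]


def fuse(outputs: Sequence[Sequence[float]]) -> List[float]:
    """Entropy-weighted sum of the sub-networks' Softmax vectors for one image."""
    weights = entropy_weights(outputs)
    num_classes = len(outputs[0])
    return [sum(w * p[c] for w, p in zip(weights, outputs)) for c in range(num_classes)]


def predict(outputs: Sequence[Sequence[float]]) -> int:
    """Index of the class with the highest fused probability."""
    fused = fuse(outputs)
    return max(range(len(fused)), key=fused.__getitem__)


if __name__ == "__main__":
    # Hypothetical Softmax outputs of three sub-networks for one 4-class image.
    cnn1 = [0.70, 0.10, 0.10, 0.10]  # confident -> lowest entropy
    cnn2 = [0.30, 0.40, 0.20, 0.10]
    cnn3 = [0.25, 0.25, 0.25, 0.25]  # uniform -> maximal entropy ln(4)
    outputs = [cnn1, cnn2, cnn3]
    print("weights:", [round(w, 3) for w in entropy_weights(outputs)])
    print("predicted class:", predict(outputs))  # prints "predicted class: 0"
```

Because the weights are recomputed from each image's own Softmax outputs, a sub-network that is confident on a given image (low entropy) dominates that image's fused decision, while a near-uniform output contributes little. This is what makes the weights adaptive per input rather than fixed per sub-network.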


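Table 1 Number of training and test samples in the VAIS dataset

| No. | Class | Training | Test |
|---|---|---|---|
| 1 | medium-other | 99 | 86 |
| 2 | merchant | 103 | 71 |
| 3 | medium-passenger | 78 | 62 |
| 4 | sailing | 214 | 198 |
| 5 | small | 342 | 313 |
| 6 | tug | 37 | 20 |
| Total | | 873 | 750 |

Table 2 Number of training and test samples in the self-built dataset

| No. | Class | Training | Test |
|---|---|---|---|
| 1 | Bulk carrier | 1385 | 346 |
| 2 | Container ship | 2381 | 595 |
| 3 | Passenger ship | 1632 | 408 |
| 4 | Sailing ship | 1803 | 450 |
| Total | | 7201 | 1799 |

Table 3 Classification accuracy and average feature extraction time per image of different methods on the VAIS dataset

| Method | Classification accuracy (%) | Feature extraction time (ms) |
|---|---|---|
| CNN1 | 92.13 | 0.104 |
| CNN2 | 90.93 | 0.045 |
| CNN3 | 90.67 | 0.092 |
| Proposed method | 95.07 | 0.391 |

Table 4 Classification accuracy and average feature extraction time per image of different methods on the self-built dataset

| Method | Classification accuracy (%) | Feature extraction time (ms) |
|---|---|---|
| CNN1 | 96.50 | 0.047 |
| CNN2 | 94.61 | 0.048 |
| CNN3 | 96.16 | 0.071 |
| Proposed method | 97.50 | 0.396 |

Table 5 Per-class classification accuracy (%) of different methods on the VAIS dataset

| Method | medium-other | merchant | medium-passenger | sailing | small | tug |
|---|---|---|---|---|---|---|
| CNN1 | 80.23 | 87.32 | 88.71 | 94.95 | 94.89 | 100.00 |
| CNN2 | 83.72 | 87.32 | 70.96 | 93.94 | 95.85 | 90.00 |
| CNN3 | 81.40 | 94.37 | 75.80 | 100.00 | 89.78 | 85.00 |
| Proposed method | 87.21 | 94.37 | 80.65 | 100.00 | 96.81 | 100.00 |

Table 6 Per-class classification accuracy (%) of different methods on the self-built dataset

| Method | Bulk carrier | Container ship | Passenger ship | Sailing ship |
|---|---|---|---|---|
| CNN1 | 95.95 | 95.97 | 96.56 | 97.56 |
| CNN2 | 89.60 | 96.30 | 96.81 | 94.22 |
| CNN3 | 94.22 | 97.48 | 93.14 | 98.67 |
| Proposed method | 96.53 | 97.82 | 96.57 | 98.67 |

Table 7 Classification accuracy and number of misclassified samples of the proposed method and other methods on the VAIS dataset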
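The per-class results in Table 5 and the test-set sizes in Table 1 can be cross-checked against the overall accuracies in Table 3, since overall accuracy is the total number of correctly classified test images divided by 750. The sketch below assumes that each class's correct count can be recovered by rounding test count × per-class accuracy / 100 to the nearest integer; the function name is hypothetical.

```python
# Test-set sizes per class on VAIS (Table 1), in table order.
TEST_COUNTS = {
    "medium-other": 86, "merchant": 71, "medium-passenger": 62,
    "sailing": 198, "small": 313, "tug": 20,
}

# Per-class accuracies (%) on VAIS (Table 5), same class order as TEST_COUNTS.
PER_CLASS_ACC = {
    "CNN1": [80.23, 87.32, 88.71, 94.95, 94.89, 100.00],
    "CNN2": [83.72, 87.32, 70.96, 93.94, 95.85, 90.00],
    "CNN3": [81.40, 94.37, 75.80, 100.00, 89.78, 85.00],
    "Proposed": [87.21, 94.37, 80.65, 100.00, 96.81, 100.00],
}


def overall_accuracy(counts, accs):
    """Return (overall accuracy in %, number of correctly classified images).

    ASSUMPTION: the correct count per class is recovered by rounding
    count * accuracy / 100 to the nearest integer.
    """
    sizes = list(counts.values())
    correct = sum(round(n * a / 100) for n, a in zip(sizes, accs))
    return 100 * correct / sum(sizes), correct


if __name__ == "__main__":
    for method, accs in PER_CLASS_ACC.items():
        acc, correct = overall_accuracy(TEST_COUNTS, accs)
        print(f"{method}: {correct}/750 correct -> {acc:.2f}%")
    # CNN1: 691/750 correct -> 92.13%
    # CNN2: 682/750 correct -> 90.93%
    # CNN3: 680/750 correct -> 90.67%
    # Proposed: 713/750 correct -> 95.07%
```

The recovered totals reproduce the Table 3 accuracies for all four methods, which indicates that Tables 1, 3 and 5 are mutually consistent.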

