The Combination and Pooling Based on High-level Feature Map for High-resolution Remote Sensing Image Retrieval


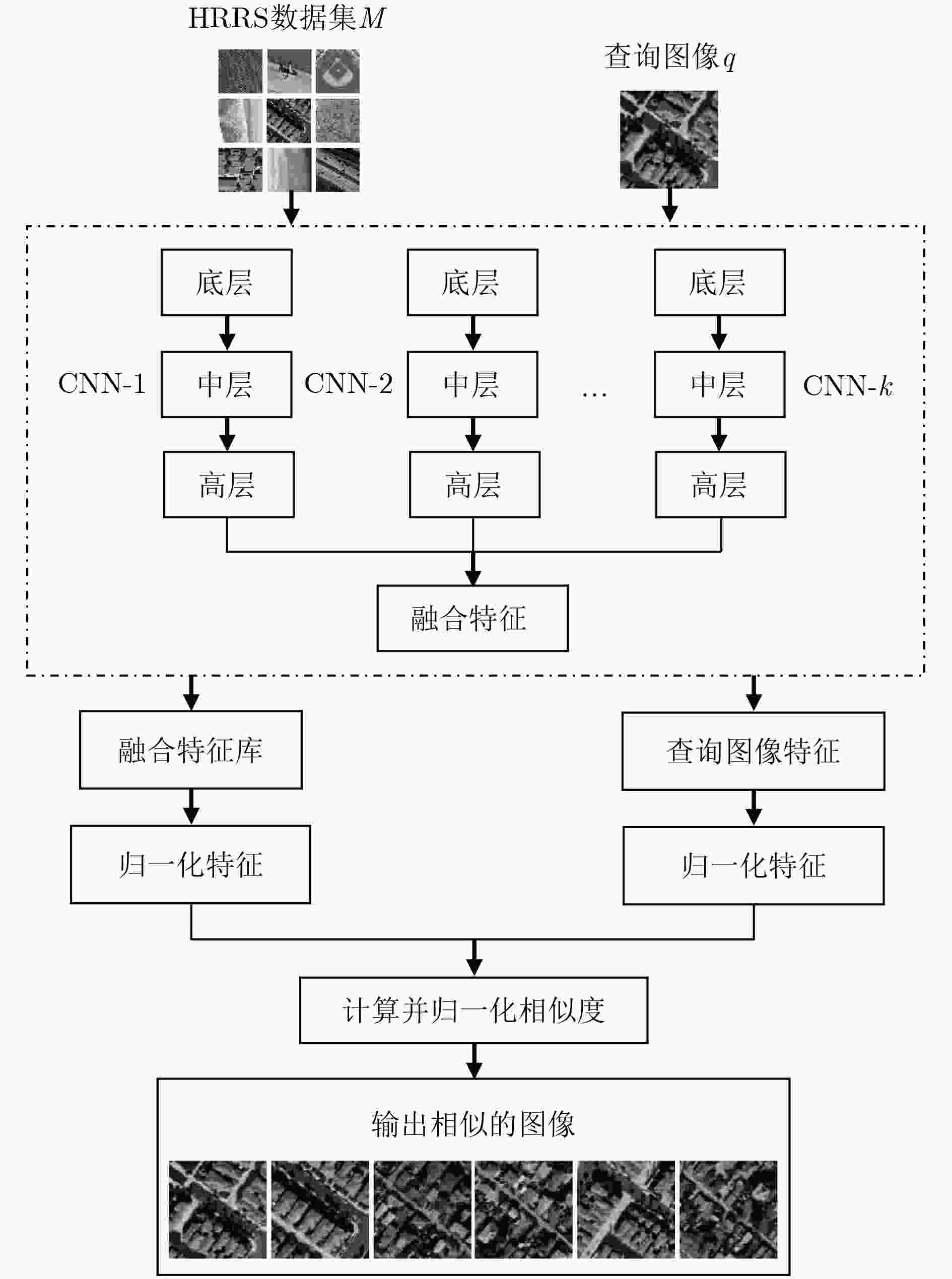

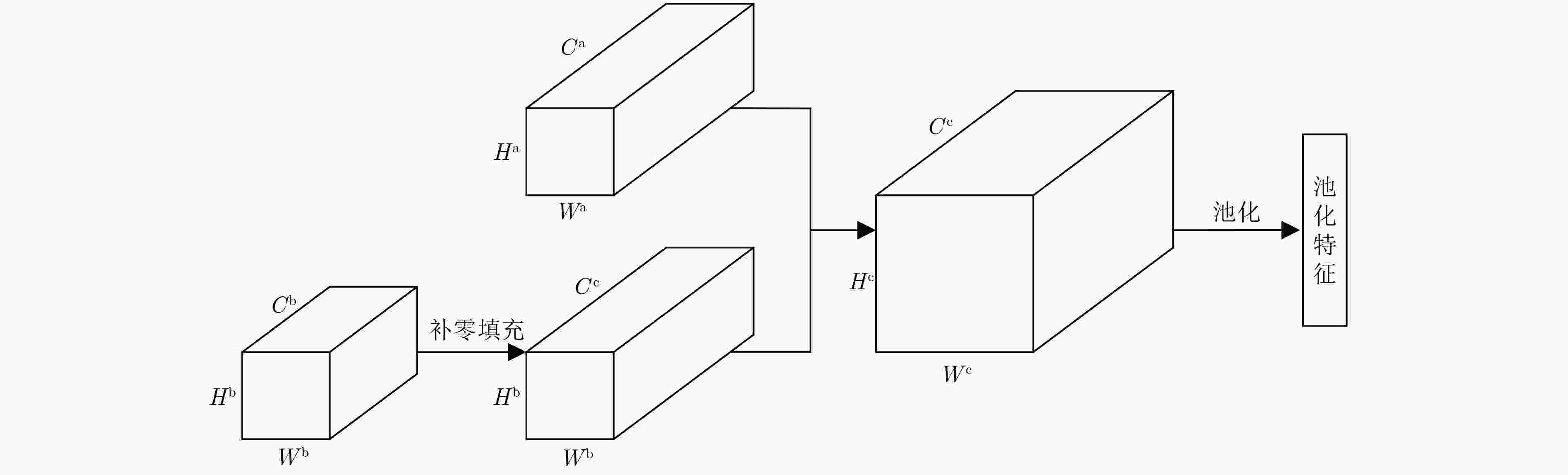

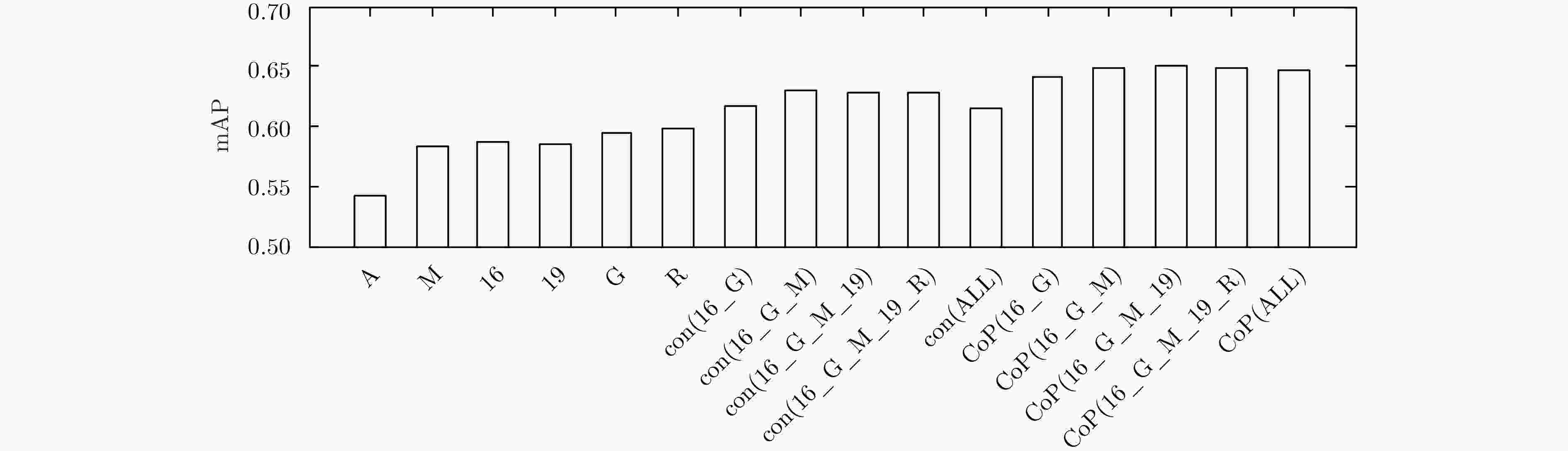

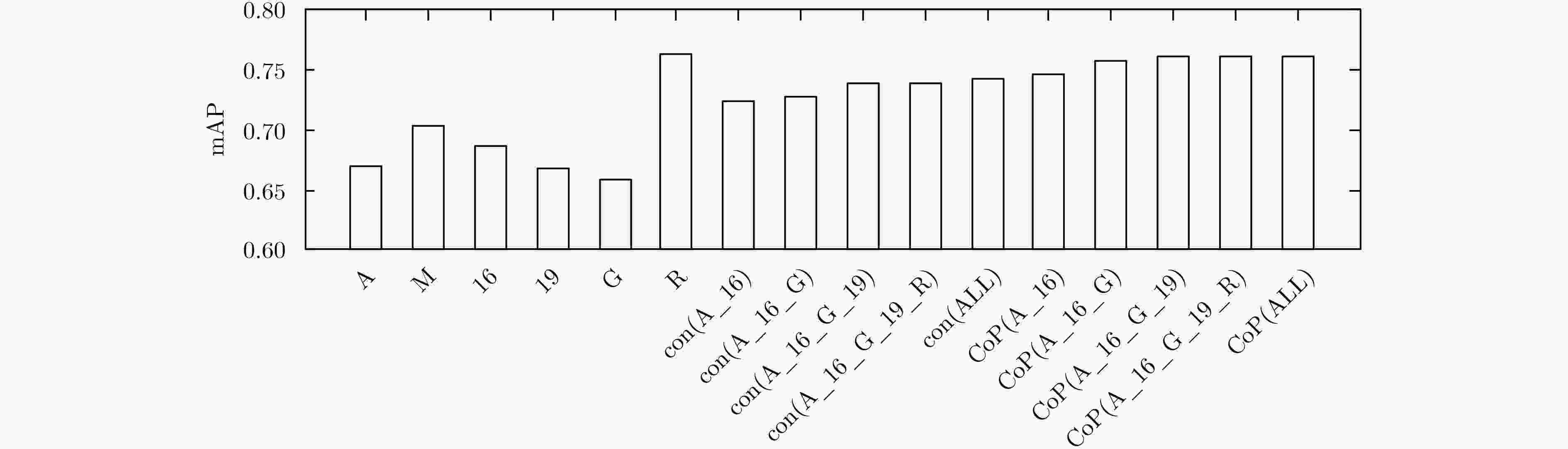

Abstract: High-resolution remote sensing images have complex visual content, and extracting features that represent this content accurately is the key to improving retrieval performance. Convolutional Neural Networks (CNNs) have strong transfer learning ability, and their high-level features can be transferred effectively to high-resolution remote sensing images. To make full use of these high-level features, a combination and pooling method based on high-level feature maps is proposed to fuse high-level features from different CNNs. First, the high-level features are treated as special convolutional features, so that the feature maps of the high-level outputs are preserved under different input sizes. Second, the feature maps output by the different high-level layers are combined into a larger feature map to integrate the features learned by different CNNs. Third, the combined feature map is compressed by max pooling to extract its salient features. Finally, Principal Component Analysis (PCA) is used to reduce the redundancy of the salient features. Experimental results show that, compared with existing retrieval methods, the features extracted by this method have advantages in both retrieval efficiency and precision.


Key words:

- Remote sensing image retrieval
- Transfer learning
- High-level feature map
- Combination
- Pooling
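The pipeline summarized in the abstract (combine high-level feature maps, max-pool the combined map, then apply PCA) can be illustrated with a minimal NumPy sketch. Everything here is an assumption made for illustration rather than the authors' implementation: the function names are hypothetical, the feature maps are random stand-ins for real CNN outputs, all maps are assumed to share one channel count (this page does not say how maps with different channel counts are aligned), and the combination is modeled by stacking spatial positions, which is sufficient only because global max pooling ignores spatial layout.

```python
import numpy as np


def combine_feature_maps(maps):
    """Merge several (H, W, C) feature maps into one (N, C) array of positions."""
    channel_counts = {m.shape[-1] for m in maps}
    if len(channel_counts) != 1:
        # Assumption: aligning different channel counts is out of scope here.
        raise ValueError("all feature maps must share one channel count")
    channels = channel_counts.pop()
    # Global max pooling ignores spatial layout, so positions are simply stacked.
    return np.concatenate([m.reshape(-1, channels) for m in maps], axis=0)


def max_pool(combined):
    """Keep the strongest response per channel over all stacked positions."""
    return combined.max(axis=0)


def pca_reduce(features, n_components):
    """Project row-wise feature vectors onto their top principal components."""
    centered = features - features.mean(axis=0, keepdims=True)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


def cop_descriptor(maps):
    """Hypothetical helper: combine the maps, then max-pool to one vector."""
    return max_pool(combine_feature_maps(maps))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Toy stand-ins: 5 images, each with two 2x2x8 maps from two "networks".
    descriptors = np.stack([
        cop_descriptor([rng.random((2, 2, 8)), rng.random((2, 2, 8))])
        for _ in range(5)
    ])
    reduced = pca_reduce(descriptors, n_components=3)
    print(descriptors.shape, reduced.shape)
```

In a real setting the PCA projection would be fitted on the database descriptors and then reused for each query, so that queries and database images live in the same reduced space.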


表 1 不同输入图像尺寸下高层CNN特征的输出值

输入图像尺寸 fc G-pool5 R-pool5 默认尺寸 1×1×4096 1×1×1024 1×1×2048 256×256×3(UC-Merced) 2×2×4096 2×2×1024 2×2×2048 600×600×3(WHU-RS) 13×13×4096 12×12×1024 13×13×2048 表 2 UC-Merced中特征的相关系数

特征 A M 16 19 G M –0.0037 16 0.0006 0.0028 19 –0.0023 0.0053 0.4817 G 0.0012 –0.0063 –0.0086 –0.0100 R –0.0100 0.0008 –0.0060 –0.0021 0.1175 表 3 WHU-RS中特征的相关系数

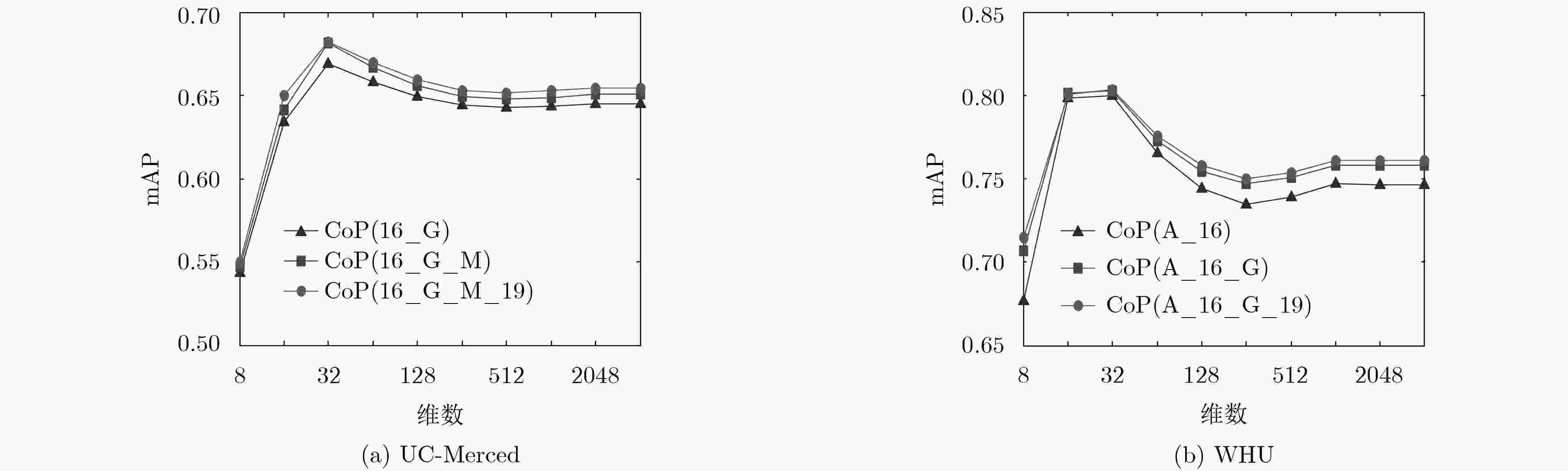

特征 A M 16 19 G M –0.0080 16 –0.0009 0.0027 19 0.0001 0.0051 0.4762 G –0.0024 –0.0038 –0.0110 –0.0093 R –0.0045 0.0084 –0.0069 –0.0022 0.1138 表 4 不同输入尺寸CoP特征检索结果比较

数据集 特征 默认尺寸 原始尺寸 ANMRR mAP ANMRR mAP UC-Merced CoP(16_G) 0.2898 0.6411 0.2880 0.6446 CoP(16_G_M) 0.2834 0.6485 0.2832 0.6504 CoP(16_G_M_19) 0.2834 0.6496 0.2805 0.6544 WHU-RS CoP(A_16) 0.2007 0.7466 0.2330 0.7116 CoP(A_16_G) 0.1891 0.7582 0.2319 0.7125 CoP(A_16_G_19) 0.1875 0.7610 0.2318 0.7124 表 5 UC-Merced中微调CoP特征检索结果比较

数据集 特征 默认尺寸 原始尺寸 ANMRR mAP ANMRR mAP UC-Merced CoP(16_G)-FT 0.2738 0.6602 0.2777 0.6566 CoP(16_G_M)-FT 0.2642 0.6716 0.2678 0.6683 CoP(16_G_M_19)-FT 0.2604 0.6767 0.2561 0.6822 WHU-RS CoP(A_16)-FT 0.1723 0.7809 0.1975 0.7501 CoP(A_16_G)-FT 0.1582 0.7971 0.1924 0.7559 CoP(A_16_G_19)-FT 0.1519 0.8048 0.1879 0.7615 表 6 UC-Merced中CoP特征与其他特征检索结果比较

特征 ANMRR 维数 浅层特征 VLAD[2] 0.4604 16384 3层图[4] 0.4317 – CNN特征 VGGM-fc[14] 0.3780 4096 VGGM-conv5-IFK[15] 0.4580 102400 VGG16-fc[15] 0.3940 4096 VGG16-conv5-IFK[15] 0.4070 102400 LDCNN[15] 0.4390 30 GoogLeNet-MultiPatch-FT[16] 0.3140 1024 GoogLeNet-MultiPatch-FT-PCA[16] 0.2850 32 CoP(16_G) 0.2880 4096 CoP(16_G_M_19) 0.2805 4096 CoP(16_G_M_19)-FT 0.2561 4096 CoP(16_G_M_19)-PCA 0.2577 32 -
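The retrieval tables above report ANMRR (lower is better) and mAP (higher is better). This page does not define either metric, so the following is only a sketch under stated assumptions: it follows the commonly cited MPEG-7 form of NMRR, and it computes average precision under the assumption that the ranked list contains every relevant image. The function names and the `max_ground_truth` parameter (the largest ground-truth set size over all queries) are hypothetical names chosen for this example.

```python
import numpy as np


def average_precision(relevant_flags):
    """AP of one ranked list; flags[i] is True if the item at rank i+1 is relevant.

    Assumes the list contains all relevant items for the query.
    """
    flags = np.asarray(relevant_flags, dtype=bool)
    if not flags.any():
        return 0.0
    hits = np.cumsum(flags)                  # relevant items seen so far
    ranks = np.arange(1, len(flags) + 1)     # 1-based rank positions
    return float((hits[flags] / ranks[flags]).mean())


def nmrr(relevant_flags, max_ground_truth):
    """Normalized modified retrieval rank of one query (0 is best, 1 is worst).

    Assumes the commonly cited MPEG-7 definition with K = min(4*NG, 2*GTM).
    """
    flags = np.asarray(relevant_flags, dtype=bool)
    ng = int(flags.sum())                    # ground-truth size NG(q)
    if ng == 0:
        raise ValueError("query has no relevant items in the ranked list")
    k = min(4 * ng, 2 * max_ground_truth)
    ranks = np.flatnonzero(flags) + 1
    # Relevant items ranked beyond K receive the fixed penalty rank 1.25 * K.
    penalized = np.where(ranks <= k, ranks, 1.25 * k)
    mrr = penalized.mean() - 0.5 * (1 + ng)
    return float(mrr / (1.25 * k - 0.5 * (1 + ng)))


def anmrr(ranked_flag_lists):
    """Average NMRR over all queries."""
    gtm = max(int(np.sum(flags)) for flags in ranked_flag_lists)
    return float(np.mean([nmrr(flags, gtm) for flags in ranked_flag_lists]))


def mean_average_precision(ranked_flag_lists):
    """Mean of the per-query average precision values."""
    return float(np.mean([average_precision(f) for f in ranked_flag_lists]))
```

Under these assumptions, a query whose relevant images all occupy the top ranks gets an NMRR of 0 and an AP of 1, which is why the two metrics move in opposite directions in the tables.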

[1] DEMIR B and BRUZZONE L. A novel active learning method in relevance feedback for content-based remote sensing image retrieval[J]. IEEE Transactions on Geoscience and Remote Sensing, 2015, 53(5): 2323–2334. doi: 10.1109/TGRS.2014.2358804

[2] ÖZKAN S, ATEŞ T, TOLA E, et al. Performance analysis of state-of-the-art representation methods for geographical image retrieval and categorization[J]. IEEE Geoscience and Remote Sensing Letters, 2014, 11(11): 1996–2000. doi: 10.1109/LGRS.2014.2316143

[3] LU Lizhen, LIU Renyi, and LIU Nan. Remote sensing image retrieval using color and texture fused features[J]. Journal of Image and Graphics, 2004, 9(3): 328–333. doi: 10.3969/j.issn.1006-8961.2004.03.013

[4] WANG Yuebin, ZHANG Liqiang, TONG Xiaohua, et al. A three-layered graph-based learning approach for remote sensing image retrieval[J]. IEEE Transactions on Geoscience and Remote Sensing, 2016, 54(10): 6020–6034. doi: 10.1109/TGRS.2016.2579648

[5] GUO Zhi, SONG Ping, ZHANG Yi, et al. Aircraft detection method based on deep convolutional neural network for remote sensing images[J]. Journal of Electronics & Information Technology, 2018, 40(11): 2684–2690. doi: 10.11999/JEIT180117

[6] YE Famao, SU Yanfei, XIAO Hui, et al. Remote sensing image registration using convolutional neural network features[J]. IEEE Geoscience and Remote Sensing Letters, 2018, 15(2): 232–236. doi: 10.1109/LGRS.2017.2781741

[7] KRIZHEVSKY A, SUTSKEVER I, and HINTON G E. ImageNet classification with deep convolutional neural networks[C]. The 25th International Conference on Neural Information Processing Systems, Nevada, USA, 2012: 1097–1105.

[8] CHATFIELD K, SIMONYAN K, VEDALDI A, et al. Return of the devil in the details: Delving deep into convolutional networks[C]. The 25th British Machine Vision Conference, Nottingham, UK, 2014.

[9] SIMONYAN K and ZISSERMAN A. Very deep convolutional networks for large-scale image recognition[C]. The 3rd International Conference on Learning Representations, San Diego, USA, 2015.

[10] SZEGEDY C, LIU Wei, JIA Yangqing, et al. Going deeper with convolutions[C]. 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, USA, 2015: 1–9.

[11] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770–778.

[12] CASTELLUCCIO M, POGGI G, SANSONE C, et al. Land use classification in remote sensing images by convolutional neural networks[J]. Acta Ecologica Sinica, 2015, 28(2): 627–635.

[13] ALIAS B, KARTHIKA R, and PARAMESWARAN L. Content based image retrieval of remote sensing images using deep learning with different distance measures[J]. Journal of Advanced Research in Dynamical and Control Systems, 2018, 10(3): 664–674.

[14] NAPOLETANO P. Visual descriptors for content-based retrieval of remote-sensing images[J]. International Journal of Remote Sensing, 2018, 39(5): 1343–1376. doi: 10.1080/01431161.2017.1399472

[15] ZHOU Weixun, NEWSAM S, LI Congmin, et al. Learning low dimensional convolutional neural networks for high-resolution remote sensing image retrieval[J]. Remote Sensing, 2017, 9(5): 489. doi: 10.3390/rs9050489

[16] HU Fan, TONG Xinyi, XIA Guisong, et al. Delving into deep representations for remote sensing image retrieval[C]. The IEEE 13th International Conference on Signal Processing, Chengdu, China, 2016: 198–203.

[17] SHELHAMER E, LONG J, and DARRELL T. Fully convolutional networks for semantic segmentation[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(4): 640–651. doi: 10.1109/TPAMI.2016.2572683

[18] VEDALDI A and LENC K. MatConvNet: Convolutional neural networks for MATLAB[C]. The 23rd ACM International Conference on Multimedia, Brisbane, Australia, 2015: 689–692.

[19] HU Fan, XIA Guisong, HU Jingwen, et al. Transferring deep convolutional neural networks for the scene classification of high-resolution remote sensing imagery[J]. Remote Sensing, 2015, 7(11): 14680–14707. doi: 10.3390/rs71114680

[20] ZOU Qin, NI Lihao, ZHANG Tong, et al. Deep learning based feature selection for remote sensing scene classification[J]. IEEE Geoscience and Remote Sensing Letters, 2015, 12(11): 2321–2325. doi: 10.1109/LGRS.2015.2475299
