Application of Residual Network to Infant Crying Recognition


摘要:

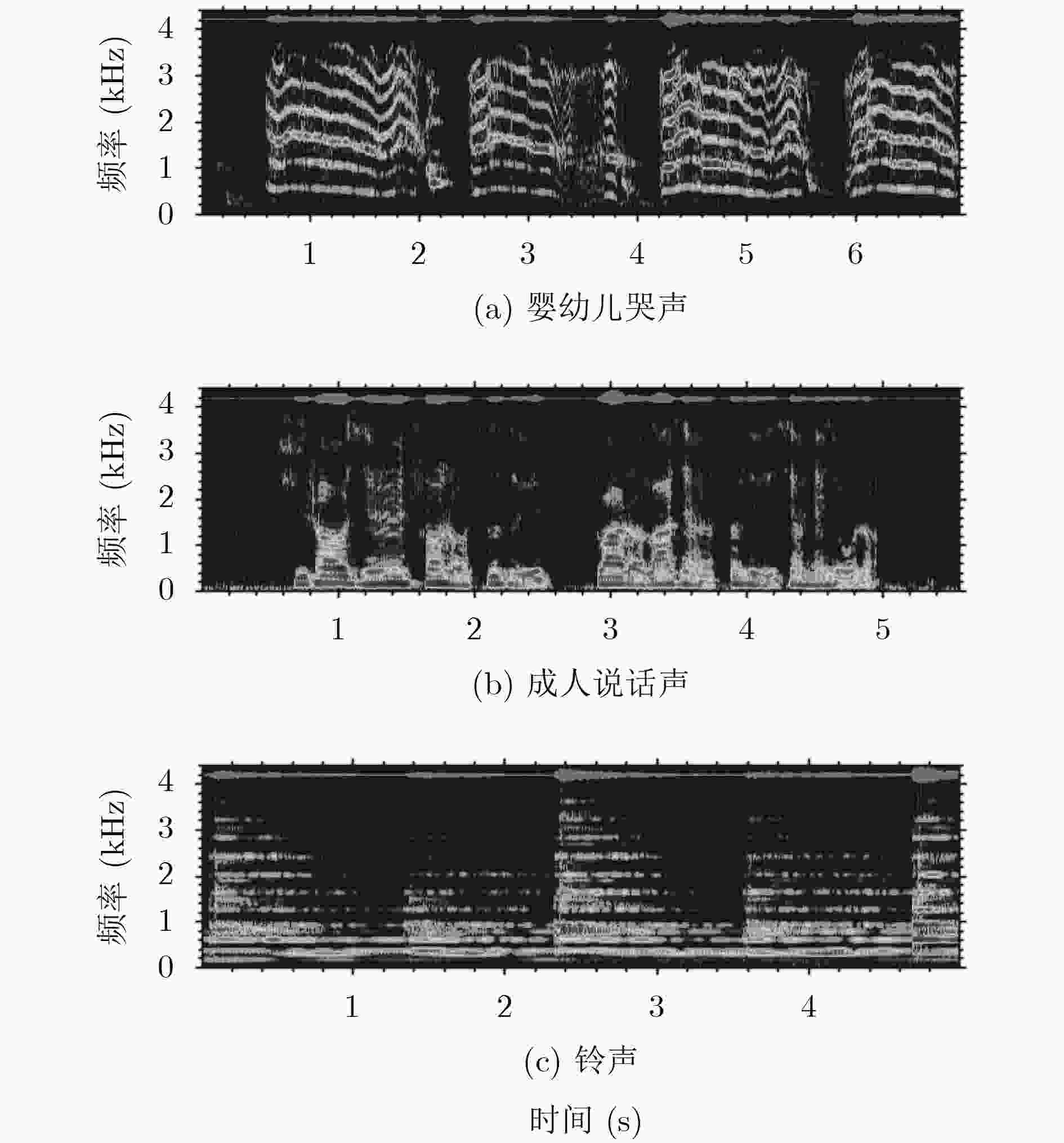

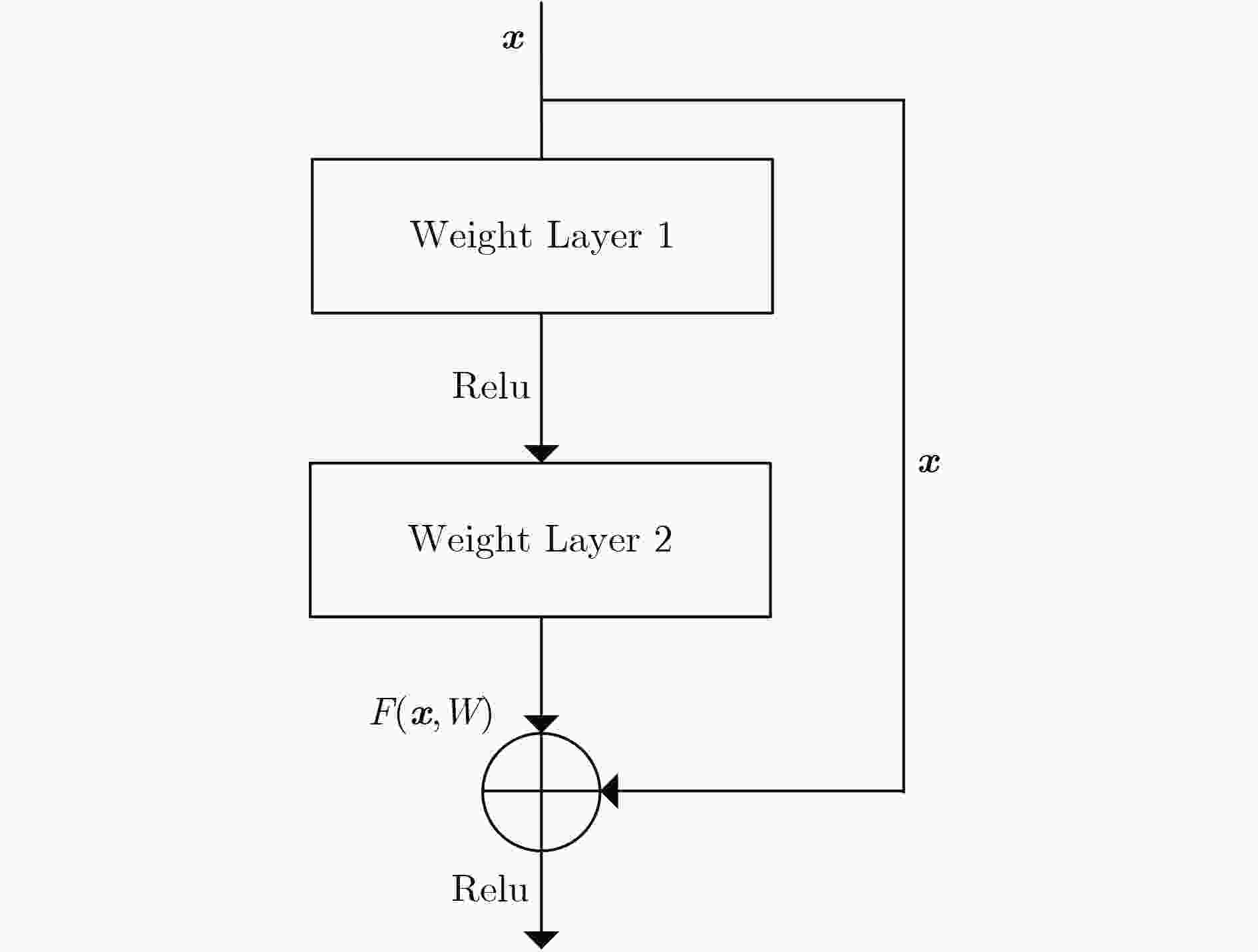

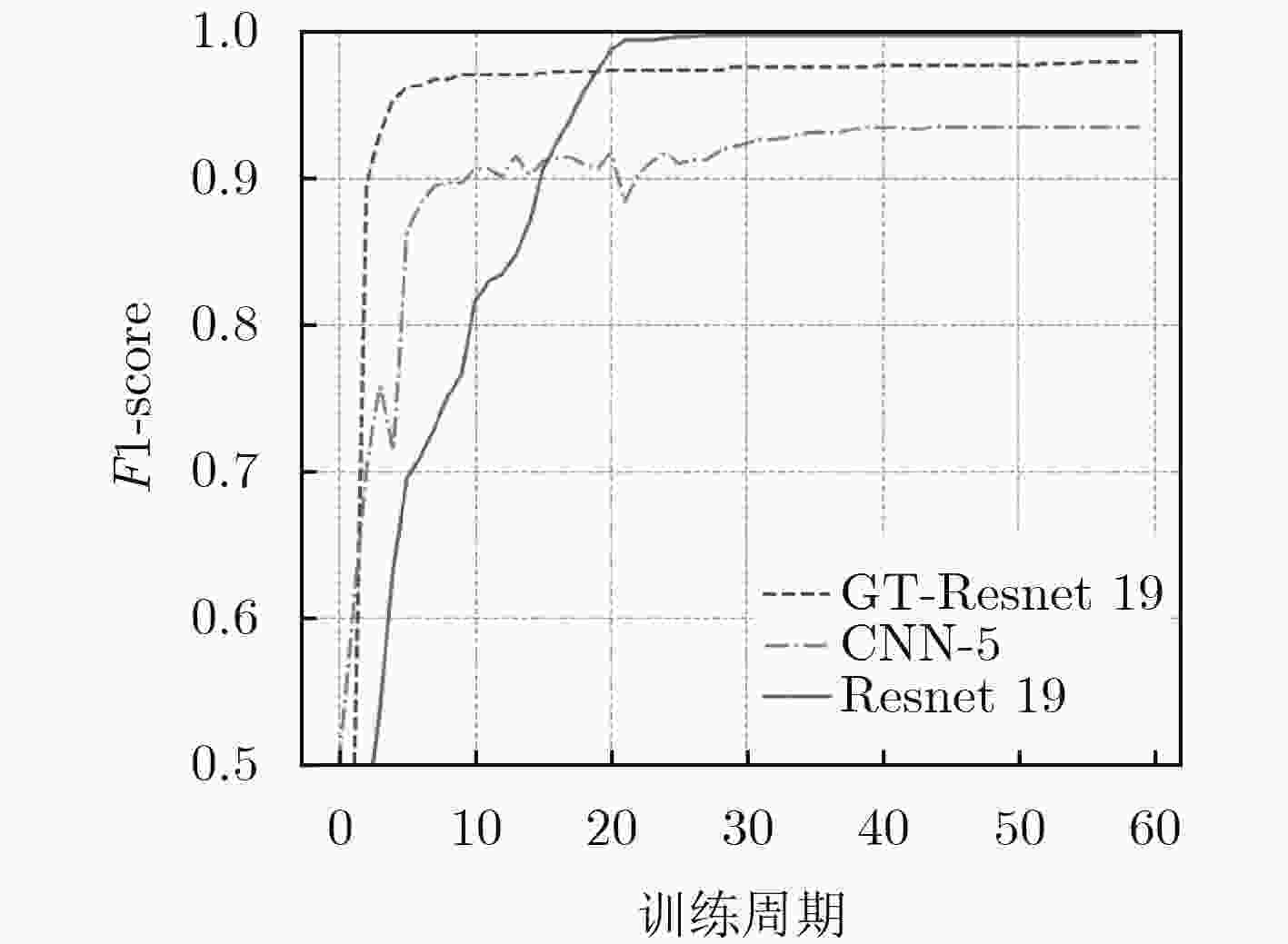

该文使用语谱图结合残差网络的深度学习模型进行婴幼儿哭声的识别,使用婴幼儿哭声与非哭声样本比例均衡的语料库,经过五折交叉验证,与支持向量机(SVM),卷积神经网络(CNN),基于Gammatone滤波器的听觉谱残差网络(GT-Resnet)3种模型相比,基于语谱图的残差网络取得了最优结果,F1-score达到0.9965,满足实时性要求,证明了语谱图在婴幼儿哭声识别任务中能直观地反映声学特征,基于语谱图的残差网络是解决婴幼儿哭声识别任务的优秀方法。

Abstract:The deep learning model based on the residual network and the spectrogram is used to recognize infant crying. The corpus has balanced proportion of infant crying and non-crying samples. Finally, through the 5-fold cross validation, compared with three models of Support Vector Machine (SVM), Convolutional Neural Network (CNN) and the cochleagram residual network based on Gammatone filters (GT-Resnet), the spectrogram based residual network gets the best F1-score of 0.9965 and satisfies requirements of real time. It is proved that the spectrogram can react acoustics features intuitively and comprehensively in the recognition of infant crying. The residual network based on spectrogram is a good solution to infant crying recognition problem.
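The evaluation protocol named in the abstract (5-fold cross-validation scored by F1) can be sketched in plain Python. This is a minimal illustration, not the authors' code: the function names, the stratified round-robin split, the fixed seed, and the choice to average F1 over folds are all assumptions, and `train_and_predict` is a hypothetical callback standing in for any of the compared models.

```python
import random


def stratified_k_fold(labels, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs with a similar class ratio per fold.

    Assumption: the source does not say whether its folds were stratified.
    """
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        # Deal the shuffled indices of this class round-robin into the folds.
        for pos, i in enumerate(idx):
            folds[pos % k].append(i)
    for f in range(k):
        test_idx = sorted(folds[f])
        train_idx = sorted(i for g in range(k) if g != f for i in folds[g])
        yield train_idx, test_idx


def f1_score(y_true, y_pred, positive=1):
    """F1 = 2PR / (P + R) for the positive (crying) class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def cross_validated_f1(features, labels, train_and_predict, k=5):
    """Average F1 over k folds (averaging over folds is an assumption).

    train_and_predict(train_x, train_y, test_x) is a hypothetical callback
    that fits a model and returns predicted labels for test_x.
    """
    scores = []
    for train_idx, test_idx in stratified_k_fold(labels, k):
        preds = train_and_predict(
            [features[i] for i in train_idx],
            [labels[i] for i in train_idx],
            [features[i] for i in test_idx],
        )
        scores.append(f1_score([labels[i] for i in test_idx], preds))
    return sum(scores) / len(scores)
```

Any classifier can be plugged in through the callback, so the same loop could in principle score an SVM, a CNN, or a residual network on identical folds.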


Key words:

- Infant crying recognition

- Deep learning

- Residual network

- Spectrogram


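Table 1. Average dataset size under 5-fold cross-validation (number of samples)

| | Infant crying | Non-crying | Total |
|---|---|---|---|
| Training set size | 1243 | 1148 | 2391 |
| Test set size | 310 | 286 | 596 |

Table 2. Feature extraction for the SVM experiment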

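| Feature type | Statistics | Dimensions |
|---|---|---|
| MFCC with 1st- and 2nd-order differences | Mean, variance | 72 |
| Short-time energy | Mean, variance | 2 |
| Fundamental frequency (pitch) | Mean, variance, maximum, minimum, range | 5 |

Table 3. Performance comparison of SVM kernel functions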

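| Kernel function | F1-score | Parameters |
|---|---|---|
| Linear | 0.8717 | c=0.68 |
| Polynomial | 0.9316 | c=0.30, g=0.35, r=-0.20, d=3.00 |
| Gaussian | 0.9458 | c=0.98, g=1.71 |
| Sigmoid | 0.8874 | c=5.00, g=0.04, r=1.80 |

Table 4. Performance comparison of CNNs with different numbers of layers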

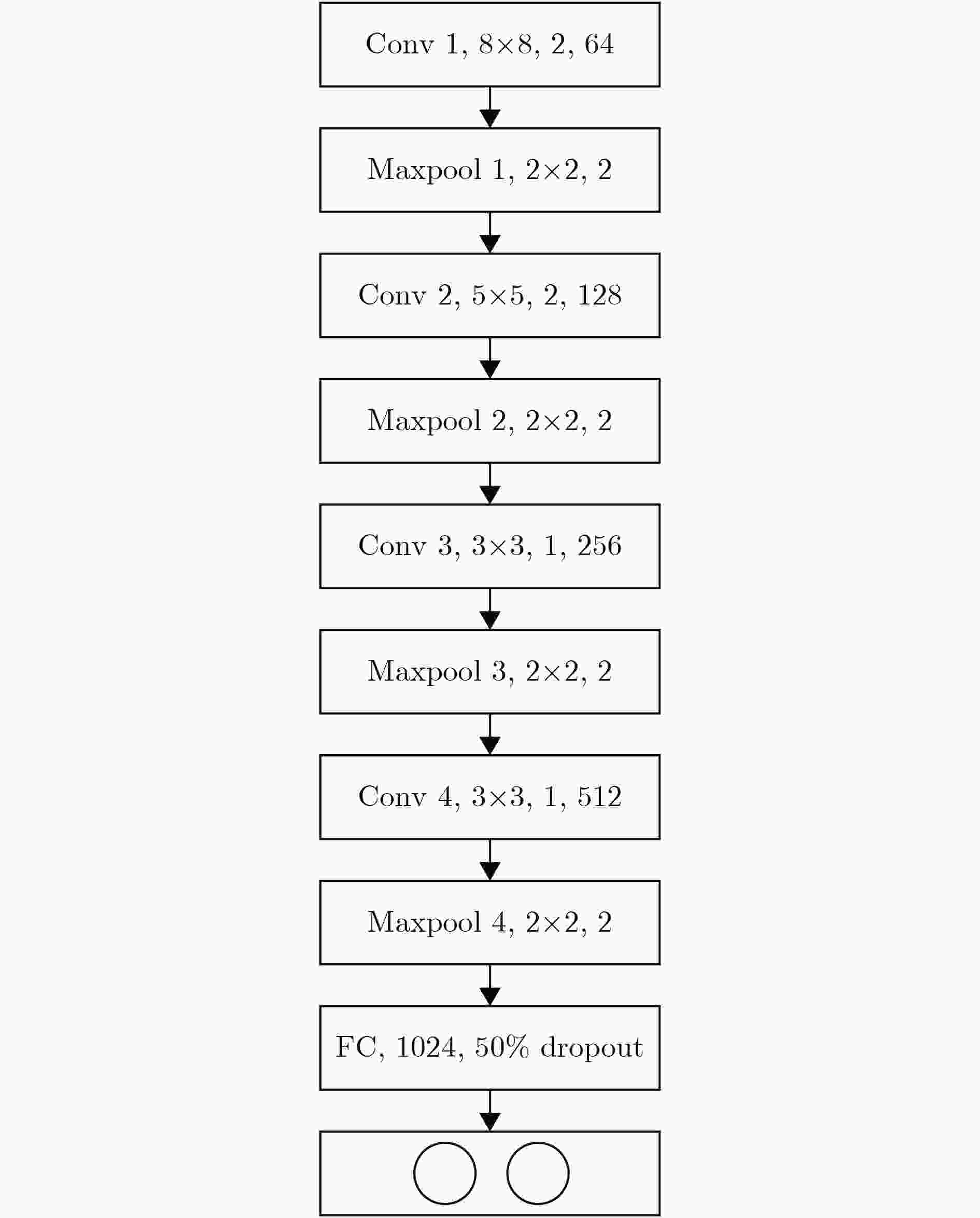

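| CNN model | Input feature | F1-score |
|---|---|---|
| CNN-4-MEL | 40×128 Mel spectrogram | 0.9184 |
| CNN-4-227 | 227×227 spectrogram | 0.9233 |
| CNN-4 | 128×128 spectrogram | 0.9229 |
| CNN-5-227 | 227×227 spectrogram | 0.9482 |
| CNN-5 | 128×128 spectrogram | 0.9489 |
| CNN-6 | 128×128 spectrogram | 0.9365 |
| CNN-7 | 128×128 spectrogram | 0.9398 |

Table 5. Model performance comparison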

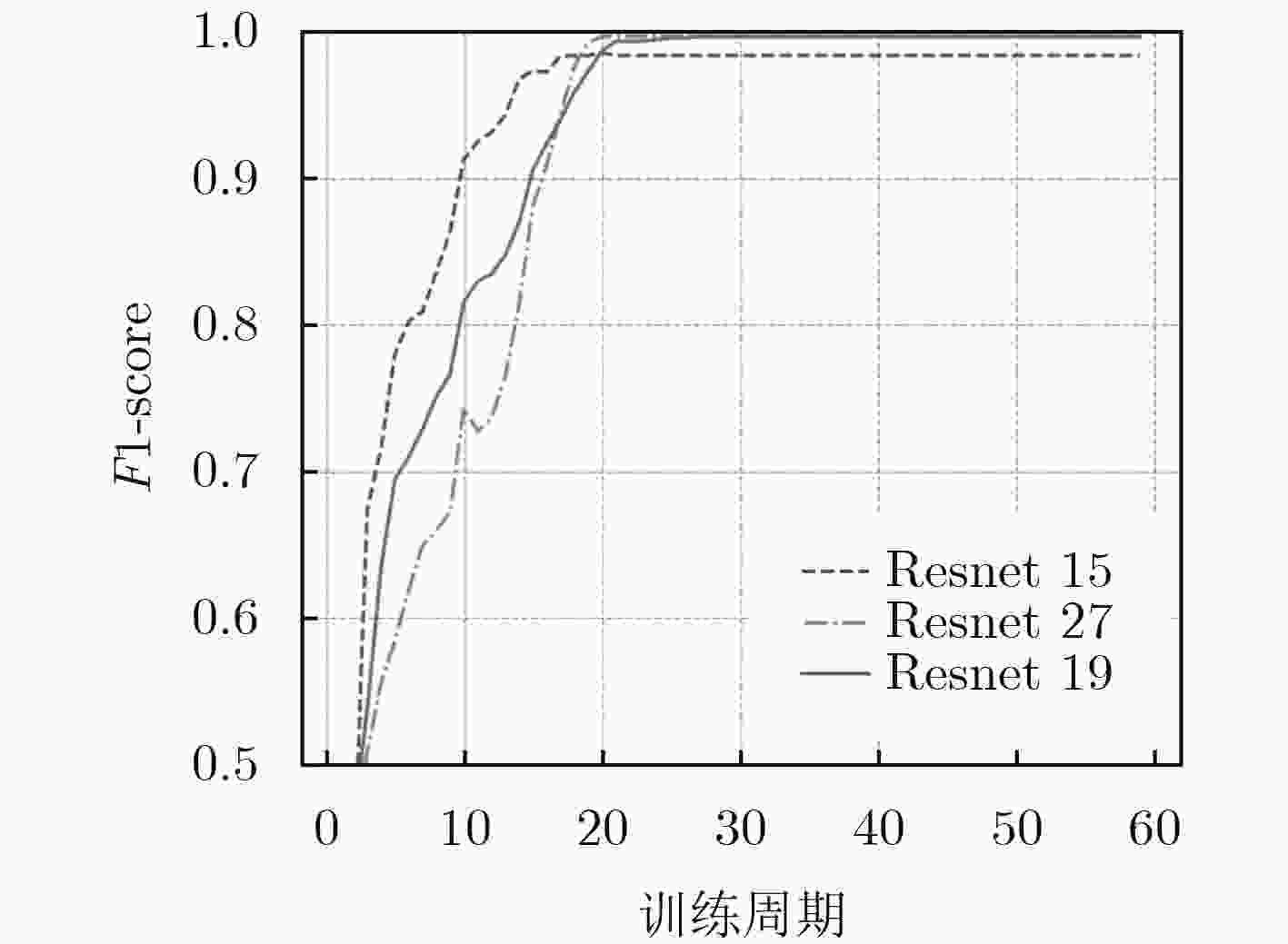

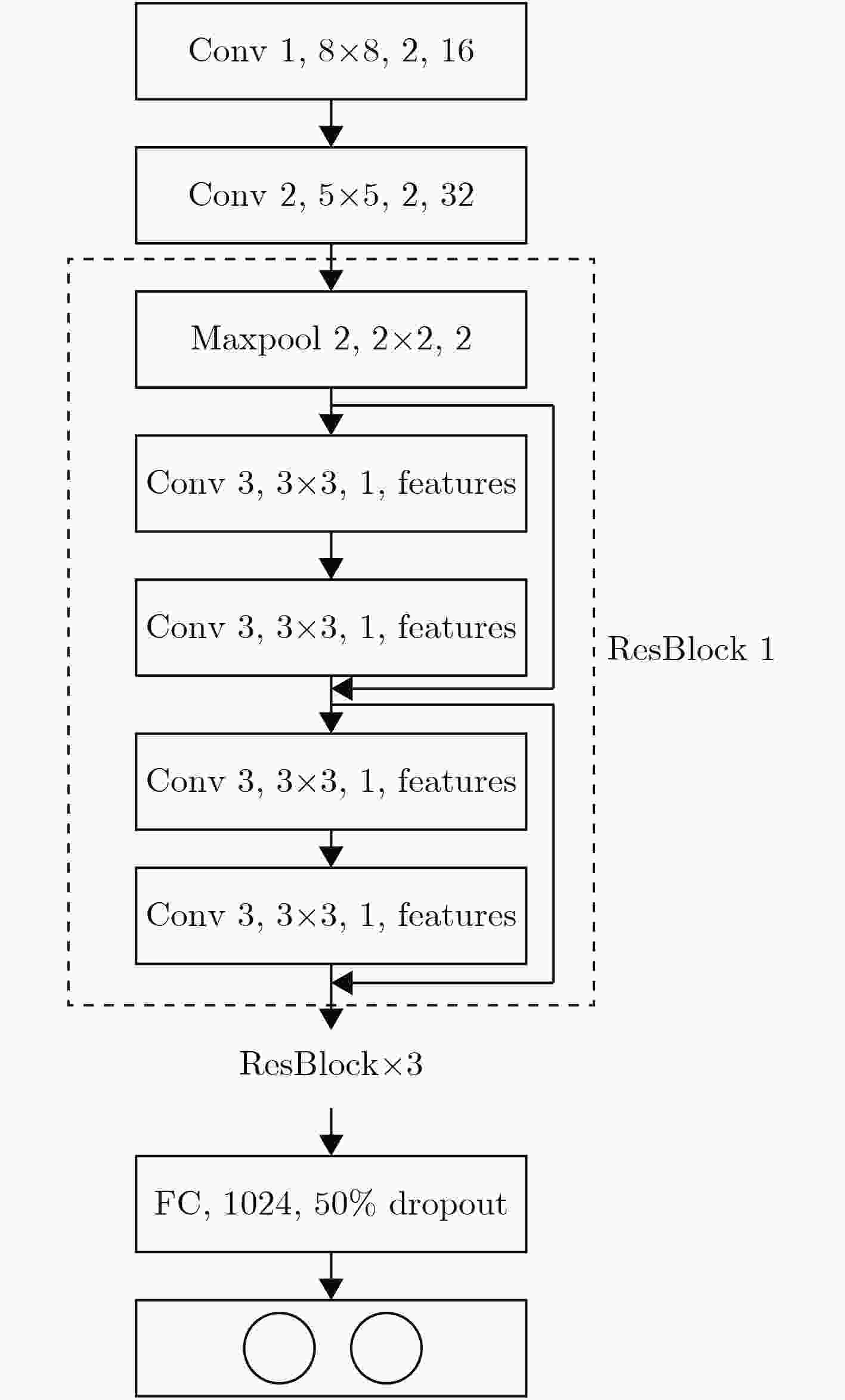

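| Model | Network structure | Input feature | Model size (MB) | Average test time (s) | F1-score |
|---|---|---|---|---|---|
| SVM | Single-layer network | Statistical features | 0.7 | 0.0910 + 0.0001 | 0.9458 |
| CNN-5 | 4conv+1fc | Spectrogram | 10 | 0.1251 + 0.0093 | 0.9489 |
| Resnet15 | 3resblock+1fc | Spectrogram | 48 | 0.1251 + 0.0281 | 0.9836 |
| Resnet19 | 4resblock+1fc | Spectrogram | 87 | 0.1251 + 0.0315 | 0.9965 |
| Resnet27 | 6resblock+1fc | Spectrogram | 171 | 0.1251 + 0.0355 | 0.9965 |
| GT-Resnet15 | 3resblock+1fc | Cochleagram | 48 | 0.1933 + 0.0218 | 0.9803 |
| GT-Resnet19 | 4resblock+1fc | Cochleagram | 87 | 0.1933 + 0.0237 | 0.9782 |
| GT-Resnet27 | 6resblock+1fc | Cochleagram | 171 | 0.1933 + 0.0285 | 0.9719 |

Note: average test time = feature extraction time + model prediction time.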
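The note under Table 5 defines the average test time as feature extraction time plus model prediction time. The short sketch below simply carries out that addition on the values copied from Table 5 and, among the models sharing the highest F1-score, picks the one with the lowest total time. The function names are illustrative assumptions, and the selection rule is one possible reading of the numbers rather than a criterion stated in the source.

```python
# (model, feature extraction time in s, prediction time in s, F1-score),
# copied from Table 5.
TABLE_5 = [
    ("SVM", 0.0910, 0.0001, 0.9458),
    ("CNN-5", 0.1251, 0.0093, 0.9489),
    ("Resnet15", 0.1251, 0.0281, 0.9836),
    ("Resnet19", 0.1251, 0.0315, 0.9965),
    ("Resnet27", 0.1251, 0.0355, 0.9965),
    ("GT-Resnet15", 0.1933, 0.0218, 0.9803),
    ("GT-Resnet19", 0.1933, 0.0237, 0.9782),
    ("GT-Resnet27", 0.1933, 0.0285, 0.9719),
]


def total_test_times(rows):
    """Total test time per model = extraction time + prediction time."""
    return {name: round(extract + predict, 4)
            for name, extract, predict, _ in rows}


def fastest_among_best(rows):
    """Among the models with the highest F1-score, return the fastest one."""
    best_f1 = max(f1 for *_, f1 in rows)
    candidates = [row for row in rows if row[3] == best_f1]
    return min(candidates, key=lambda row: row[1] + row[2])[0]


if __name__ == "__main__":
    for name, seconds in total_test_times(TABLE_5).items():
        print(f"{name}: {seconds:.4f} s")
    print("Fastest model with the best F1-score:", fastest_among_best(TABLE_5))
```

On these figures, feature extraction accounts for most of the test time of every network model, and the cochleagram front end (0.1933 s) costs more than the spectrogram front end (0.1251 s).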

