An Audio-visual Generalized Zero-Shot Learning Method Based on Multimodal Fusion Transformer


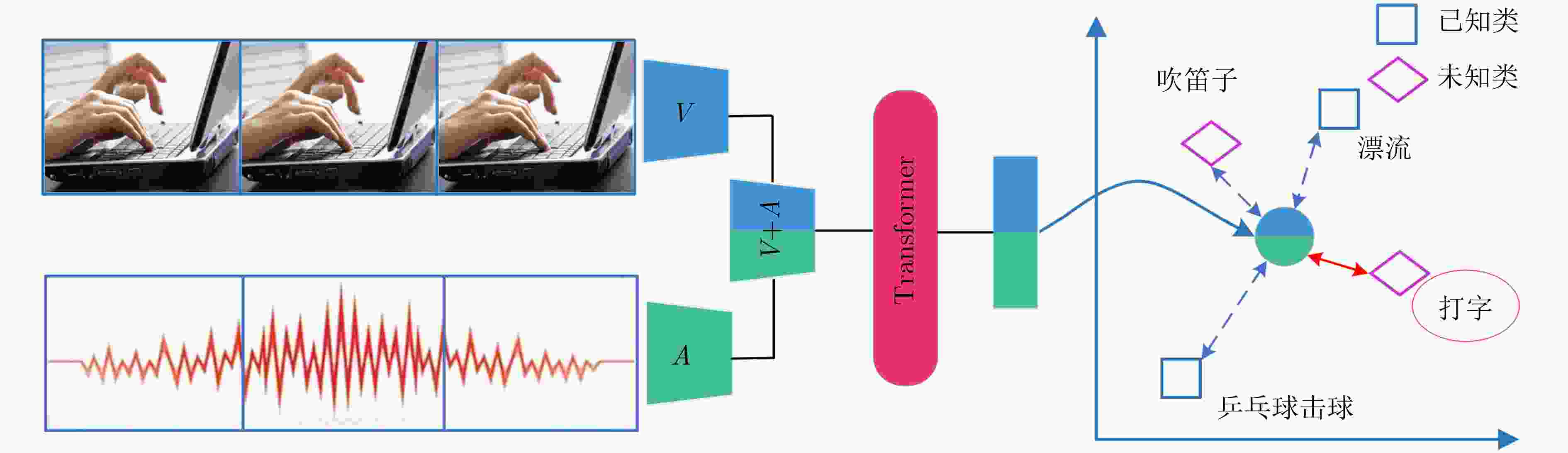

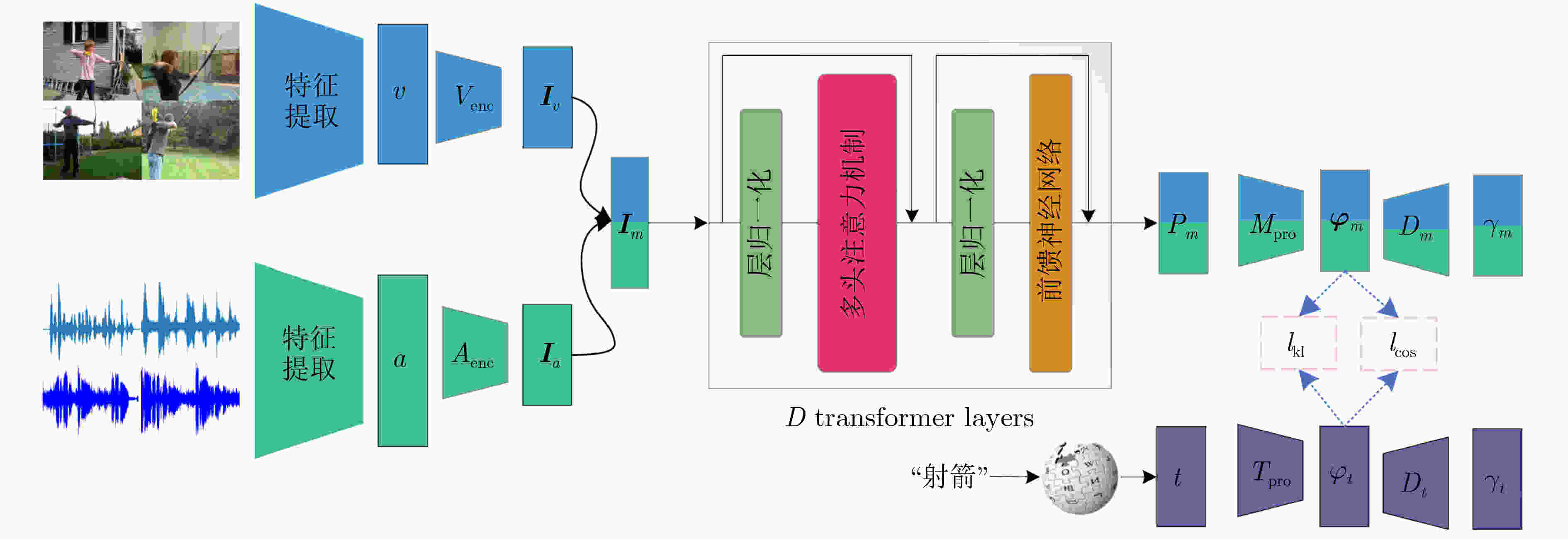

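Abstract (translated from Chinese): Audio-visual zero-shot learning requires understanding the relationship between audio and visual information in order to reason about unseen classes. Although the field has made considerable efforts and significant progress, existing work tends to focus on learning strong representations and therefore neglects both the dependencies between audio and video and the mismatch between the output distribution and the target distribution. This paper proposes a Transformer-based audio-visual generalized zero-shot learning method. Specifically, an attention mechanism is used to learn the internal information of the data and to strengthen the interaction between modalities, so as to capture the semantic consistency between audio and visual data. To measure the difference between probability distributions and the consistency between classes, Kullback-Leibler (KL) divergence and cosine similarity losses are introduced. The proposed method is evaluated on three benchmark datasets: VGGSound-GZSLcls, UCF-GZSLcls, and ActivityNet-GZSLcls. Extensive experimental results show that it achieves state-of-the-art performance on all three datasets.

Abstract: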

Objective: Audio-visual Generalized Zero-Shot Learning (GZSL) integrates the audio and visual signals in videos to classify seen classes and to recognize unseen classes. Most existing approaches prioritize the alignment of audio-visual and textual label embeddings, but overlook the interdependence between audio and video and the mismatch between model outputs and target distributions. This study proposes an audio-visual GZSL method based on a Multimodal Fusion Transformer (MFT) to address these limitations.

Methods: The MFT employs a transformer-based multi-head attention mechanism to enable cross-modal interaction between visual and audio features. To optimize the output probability distribution, the Kullback-Leibler (KL) divergence between the predicted and target distributions is minimized, which aligns predictions more closely with the true distribution. This optimization also reduces overfitting and improves generalization to unseen classes. In addition, a cosine similarity loss is applied to measure the similarity of learned representations within the same class, promoting feature consistency and improving discriminability.

Results and Discussions: The experiments include both GZSL and Zero-Shot Learning (ZSL) tasks. The ZSL task requires classification of unseen classes only, whereas the GZSL task addresses both unseen and seen class classification to mitigate catastrophic forgetting. The proposed method is evaluated on three benchmark datasets: VGGSound-GZSLcls, UCF-GZSLcls, and ActivityNet-GZSLcls (Table 1). MFT is quantitatively compared with five ZSL methods and nine GZSL methods (Table 2). The results show that the proposed method achieves state-of-the-art performance on all three datasets. For example, on ActivityNet-GZSLcls, MFT exceeds the previous best method, ClipClap-GZSL, by a relative 14.6% in harmonic mean (HM, 32.02 vs. 27.93). This confirms the effectiveness of MFT in modeling cross-modal dependencies, aligning predicted and target distributions, and achieving semantic consistency between audio and visual features. Ablation studies (Tables 3 to 5) further support the contribution of each module in the proposed framework.

Conclusions: This study proposes a transformer-based audio-visual GZSL method that uses a multi-head self-attention mechanism to extract intrinsic information from audio and video data and to enhance cross-modal interaction. This design captures the semantic consistency between modalities more accurately and improves the quality of cross-modal feature representations. To align the predicted and target distributions and reinforce intra-class consistency, KL divergence and cosine similarity loss are incorporated during training. KL divergence improves the match between predicted and true distributions, while cosine similarity loss enhances discriminability within each class. Extensive experiments demonstrate the effectiveness of the proposed method.
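The two training objectives named in the abstract can be illustrated with a minimal sketch. The abstract does not give the exact formulation, so everything here is an assumption made for illustration: the KL direction (target relative to prediction), the softmax over class logits, the use of `1 - cos` as the cosine loss, and the hypothetical weights `w_kl` and `w_cos`.

```python
import math


def softmax(logits):
    """Turn raw class scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(target, predicted, eps=1e-12):
    """KL(target || predicted) for two discrete distributions.

    Assumption: the target distribution is the reference; the source
    does not state which direction is used.
    """
    return sum(
        t * math.log((t + eps) / (p + eps))
        for t, p in zip(target, predicted)
        if t > 0
    )


def cosine_loss(u, v, eps=1e-12):
    """1 - cosine similarity; small when u and v point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v + eps)


def combined_loss(logits, target, emb_a, emb_b, w_kl=1.0, w_cos=1.0):
    """Weighted sum of both terms (the weights are hypothetical).

    emb_a and emb_b stand for two learned embeddings assumed to belong
    to the same class.
    """
    predicted = softmax(logits)
    return w_kl * kl_divergence(target, predicted) + w_cos * cosine_loss(emb_a, emb_b)


if __name__ == "__main__":
    target = [1.0, 0.0, 0.0]
    good = combined_loss([5.0, 0.0, 0.0], target, [1.0, 0.2], [0.9, 0.25])
    bad = combined_loss([0.0, 5.0, 0.0], target, [1.0, 0.2], [-1.0, 0.3])
    print(good < bad)
```

In this sketch the loss is lower when the predicted distribution concentrates on the target class and the two same-class embeddings are nearly parallel, which is the behaviour the abstract attributes to the two terms.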

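Table 1. Dataset splits

| Dataset | C | CS | CU | Stage 1 training: TrS | Stage 1 validation: ValS | Stage 1 validation: ValU | Stage 2 training: TrS | Stage 2 test: TeS | Stage 2 test: TeU |
|---|---|---|---|---|---|---|---|---|---|
| UCF-GZSLcls | 51 | 30 | 21 | 30 | 30 | 12 | 42 | 42 | 9 |
| VGGSound-GZSLcls | 276 | 138 | 138 | 138 | 138 | 69 | 207 | 207 | 69 |
| ActivityNet-GZSLcls | 200 | 99 | 101 | 99 | 99 | 51 | 150 | 150 | 50 |

Table 2. Performance comparison of different methods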

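Column groups: VGGSound = VGGSound-GZSLcls, UCF = UCF-GZSLcls, ActivityNet = ActivityNet-GZSLcls.

| Method | Venue | VGGSound S | VGGSound U | VGGSound HM | VGGSound ZSL | UCF S | UCF U | UCF HM | UCF ZSL | ActivityNet S | ActivityNet U | ActivityNet HM | ActivityNet ZSL |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DeViSE[31] | NeurIPS'13 | 36.22 | 1.07 | 2.08 | 5.59 | 55.59 | 14.94 | 23.56 | 16.09 | 3.45 | 8.53 | 4.91 | 8.53 |
| ALE[32] | T-PAMI'15 | 0.28 | 5.48 | 0.53 | 5.48 | 57.59 | 14.89 | 23.66 | 16.32 | 2.63 | 7.87 | 3.94 | 7.90 |
| SJE[33] | CVPR'15 | 48.33 | 1.10 | 2.15 | 4.06 | 63.10 | 16.77 | 26.50 | 18.93 | 4.61 | 7.04 | 5.57 | 7.08 |
| f-VAEGAN-D2[35] | CVPR'19 | 12.77 | 0.95 | 1.77 | 1.91 | 17.29 | 8.47 | 11.37 | 11.11 | 4.36 | 2.14 | 2.87 | 2.40 |
| APN[34] | IJCV'22 | 7.48 | 3.88 | 5.11 | 4.49 | 28.46 | 16.16 | 20.61 | 16.44 | 9.84 | 5.76 | 7.27 | 6.34 |
| †CJME[17] | WACV'20 | 11.96 | 5.41 | 7.45 | 6.84 | 48.18 | 17.68 | 25.87 | 20.46 | 16.06 | 9.13 | 11.64 | 9.92 |
| †AVGZSLNet[18] | WACV'21 | 13.02 | 2.88 | 4.71 | 5.44 | 56.26 | 34.37 | 42.67 | 35.66 | 14.81 | 11.11 | 12.70 | 12.39 |
| †AVCA[19] | CVPR'22 | 32.47 | 6.81 | 11.26 | 8.16 | 34.90 | 38.67 | 36.69 | 38.67 | 24.04 | 19.88 | 21.76 | 20.88 |
| TCAF[20] | ECCV'22 | 12.63 | 6.72 | 8.77 | 7.41 | 67.14 | 40.83 | 50.78 | 44.64 | 30.12 | 7.65 | 12.20 | 7.96 |
| AVFS[21] | IJCNN'23 | 15.62 | 6.00 | 8.67 | 7.31 | 54.57 | 36.94 | 44.06 | 41.55 | 14.41 | 8.91 | 11.01 | 9.15 |
| †Hyper-multiple[22] | ICCV'23 | 21.99 | 8.12 | 11.87 | 8.47 | 43.52 | 39.77 | 41.56 | 40.28 | 20.52 | 21.30 | 20.90 | 22.18 |
| KDA[23] | arXiv'23 | 13.30 | 7.74 | 9.78 | 8.32 | 75.88 | 42.97 | 54.84 | 52.66 | 37.55 | 10.25 | 17.95 | 11.85 |
| STFT[36] | TIP'24 | 11.74 | 8.83 | 10.08 | 8.79 | 61.42 | 43.81 | 51.14 | 49.74 | 25.12 | 9.83 | 14.13 | 9.46 |
| †ClipClap-GZSL[24] | CVPR'24 | 29.68 | 11.12 | 16.18 | 11.53 | 77.14 | 43.91 | 55.97 | 46.96 | 45.98 | 20.06 | 27.93 | 22.76 |
| †MFT (ours) | | 31.63 | 13.37 | 18.80 | 13.97 | 72.52 | 47.12 | 57.12 | 48.56 | 42.58 | 25.66 | 32.02 | 27.88 |

Table 3. Effect of the attention mechanism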

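| Model | VGGSound S | VGGSound U | VGGSound HM | VGGSound ZSL | UCF S | UCF U | UCF HM | UCF ZSL | ActivityNet S | ActivityNet U | ActivityNet HM | ActivityNet ZSL |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| w/o att | 25.87 | 10.47 | 14.91 | 10.69 | 74.36 | 43.89 | 55.20 | 45.28 | 40.85 | 20.97 | 27.71 | 22.51 |
| MFT | 31.63 | 13.37 | 18.80 | 13.97 | 72.52 | 47.12 | 57.12 | 48.56 | 42.58 | 25.66 | 32.02 | 27.88 |

Table 4. Effect of the full loss function $l$ compared with removing the components $l_{\cos}$ and $l_{\mathrm{kl}}$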

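| Model | VGGSound S | VGGSound U | VGGSound HM | VGGSound ZSL | UCF S | UCF U | UCF HM | UCF ZSL | ActivityNet S | ActivityNet U | ActivityNet HM | ActivityNet ZSL |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| $l - l_{\cos}$ | 34.45 | 11.84 | 17.63 | 12.84 | 56.88 | 38.61 | 46.00 | 39.79 | 32.88 | 24.32 | 27.96 | 24.92 |
| $l - l_{\mathrm{kl}}$ | 31.04 | 11.59 | 16.88 | 11.91 | 78.42 | 35.46 | 48.84 | 37.27 | 40.58 | 24.24 | 30.35 | 26.05 |
| $l$ | 31.63 | 13.37 | 18.80 | 13.97 | 72.52 | 47.12 | 57.12 | 48.56 | 42.58 | 25.66 | 32.02 | 27.88 |

Table 5. Effect of using different input modalities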

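| Model | VGGSound S | VGGSound U | VGGSound HM | VGGSound ZSL | UCF S | UCF U | UCF HM | UCF ZSL | ActivityNet S | ActivityNet U | ActivityNet HM | ActivityNet ZSL |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Audio | 16.24 | 9.78 | 12.21 | 9.91 | 55.23 | 34.10 | 42.25 | 39.02 | 10.71 | 7.72 | 8.97 | 8.31 |
| Visual | 15.51 | 6.81 | 9.47 | 7.91 | 54.92 | 43.23 | 48.38 | 46.17 | 33.22 | 23.52 | 27.54 | 24.21 |
| Both | 31.63 | 13.37 | 18.80 | 13.97 | 72.52 | 47.12 | 57.12 | 48.56 | 42.58 | 25.66 | 32.02 | 27.88 |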
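The HM values reported for MFT and ClipClap-GZSL are consistent with the usual GZSL definition of HM as the harmonic mean of the seen (S) and unseen (U) scores, although this page does not state the formula. The sketch below assumes that definition and reproduces the ActivityNet-GZSLcls HM values from Table 2, along with the relative 14.6% gain quoted in the abstract. The function names are illustrative.

```python
def harmonic_mean(seen, unseen):
    """Harmonic mean of seen and unseen scores (assumed HM definition)."""
    if seen + unseen == 0:
        return 0.0
    return 2.0 * seen * unseen / (seen + unseen)


def relative_gain(new, old):
    """Relative improvement of `new` over `old`, in percent."""
    return 100.0 * (new - old) / old


if __name__ == "__main__":
    # S and U on ActivityNet-GZSLcls, taken from Table 2.
    mft_hm = harmonic_mean(42.58, 25.66)
    clipclap_hm = harmonic_mean(45.98, 20.06)
    print(round(mft_hm, 2), round(clipclap_hm, 2))  # 32.02 27.93
    print(round(relative_gain(32.02, 27.93), 1))    # 14.6
```

Because the harmonic mean is dominated by the smaller of the two scores, a method with a lower S but a higher U (as MFT has on ActivityNet-GZSLcls) can still obtain the higher HM.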

[1] AN Hongchao, YANG Jing, ZHANG Xiuhua, et al. A class-incremental learning approach for learning feature-compatible embeddings[J]. Neural Networks, 2024, 180: 106685. doi: 10.1016/j.neunet.2024.106685.

[2] LI Qinglang, YANG Jing, RUAN Xiaoli, et al. SPIRF-CTA: Selection of parameter importance levels for reasonable forgetting in continuous task adaptation[J]. Knowledge-Based Systems, 2024, 305: 112575. doi: 10.1016/j.knosys.2024.112575.

[3] MA Xingjiang, YANG Jing, LIN Jiacheng, et al. LVAR-CZSL: Learning visual attributes representation for compositional zero-shot learning[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2024, 34(12): 13311–13323. doi: 10.1109/tcsvt.2024.3444782.

[4] DING Shujie, RUAN Xiaoli, YANG Jing, et al. LRDTN: Spectral-spatial convolutional fusion long-range dependence transformer network for hyperspectral image classification[J]. IEEE Transactions on Geoscience and Remote Sensing, 2025, 63: 5500821. doi: 10.1109/tgrs.2024.3510625.

[5] AFOURAS T, OWENS A, CHUNG J S, et al. Self-supervised learning of audio-visual objects from video[C]. 16th European Conference on Computer Vision, Glasgow, UK, 2020: 208–224. doi: 10.1007/978-3-030-58523-5_13.

[6] ASANO Y M, PATRICK M, RUPPRECHT C, et al. Labelling unlabelled videos from scratch with multi-modal self-supervision[C]. The 34th International Conference on Neural Information Processing Systems, Vancouver, Canada, 2020: 4660–4671.

[7] MITTAL H, MORGADO P, JAIN U, et al. Learning state-aware visual representations from audible interactions[C]. The 36th International Conference on Neural Information Processing Systems, New Orleans, USA, 2022: 23765–23779.

[8] MORGADO P, MISRA I, and VASCONCELOS N. Robust audio-visual instance discrimination[C]. The 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 12929–12940. doi: 10.1109/cvpr46437.2021.01274.

[9] XU Hu, GHOSH G, HUANG Poyao, et al. VideoCLIP: Contrastive pre-training for zero-shot video-text understanding[C]. The 2021 Conference on Empirical Methods in Natural Language Processing, Online, 2021: 6787–6800. doi: 10.18653/v1/2021.emnlp-main.544.

[10] LIN K Q, WANG A J, SOLDAN M, et al. Egocentric video-language pretraining[C]. The 36th International Conference on Neural Information Processing Systems, New Orleans, USA, 2022: 7575–7586.

[11] LIN K Q, ZHANG Pengchuan, CHEN J, et al. UniVTG: Towards unified video-language temporal grounding[C]. The 2023 IEEE/CVF International Conference on Computer Vision, Paris, France, 2023: 2782–2792. doi: 10.1109/iccv51070.2023.00262.

[12] MIECH A, ALAYRAC J B, SMAIRA L, et al. End-to-end learning of visual representations from uncurated instructional videos[C]. The 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 9876–9886. doi: 10.1109/cvpr42600.2020.00990.

[13] AFOURAS T, ASANO Y M, FAGAN F, et al. Self-supervised object detection from audio-visual correspondence[C]. The 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 10565–10576. doi: 10.1109/cvpr52688.2022.01032.

[14] CHEN Honglie, XIE Weidi, AFOURAS T, et al. Localizing visual sounds the hard way[C]. The 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 16862–16871. doi: 10.1109/cvpr46437.2021.01659.

[15] QIAN Rui, HU Di, DINKEL H, et al. Multiple sound sources localization from coarse to fine[C]. 16th European Conference on Computer Vision, Glasgow, UK, 2020: 292–308. doi: 10.1007/978-3-030-58565-5_18.

[16] XU Haoming, ZENG Runhao, WU Qingyao, et al. Cross-modal relation-aware networks for audio-visual event localization[C]. The 28th ACM International Conference on Multimedia, Seattle, USA, 2020: 3893–3901. doi: 10.1145/3394171.3413581.

[17] PARIDA K K, MATIYALI N, GUHA T, et al. Coordinated joint multimodal embeddings for generalized audio-visual zero-shot classification and retrieval of videos[C]. The 2020 IEEE Winter Conference on Applications of Computer Vision, Snowmass, USA, 2020: 3240–3249. doi: 10.1109/wacv45572.2020.9093438.

[18] MAZUMDER P, SINGH P, PARIDA K K, et al. AVGZSLNet: Audio-visual generalized zero-shot learning by reconstructing label features from multi-modal embeddings[C]. The 2021 IEEE Winter Conference on Applications of Computer Vision, Waikoloa, USA, 2021: 3089–3098. doi: 10.1109/wacv48630.2021.00313.

[19] MERCEA O B, RIESCH L, KOEPKE A S, et al. Audiovisual generalised zero-shot learning with cross-modal attention and language[C]. The 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 10543–10553. doi: 10.1109/cvpr52688.2022.01030.

[20] MERCEA O B, HUMMEL T, KOEPKE A S, et al. Temporal and cross-modal attention for audio-visual zero-shot learning[C]. 17th European Conference on Computer Vision, Tel Aviv, Israel, 2022: 488–505. doi: 10.1007/978-3-031-20044-1_28.

[21] ZHENG Qichen, HONG Jie, and FARAZI M. A generative approach to audio-visual generalized zero-shot learning: Combining contrastive and discriminative techniques[C]. 2023 International Joint Conference on Neural Networks, Gold Coast, Australia, 2023: 1–8. doi: 10.1109/ijcnn54540.2023.10191705.

[22] HONG Jie, HAYDER Z, HAN Junlin, et al. Hyperbolic audio-visual zero-shot learning[C]. The 2023 IEEE/CVF International Conference on Computer Vision, Paris, France, 2023: 7839–7849. doi: 10.1109/iccv51070.2023.00724.

[23] CHEN Haoxing, LI Yaohui, HONG Yan, et al. Boosting audio-visual zero-shot learning with large language models[J]. arXiv: 2311.12268, 2023. doi: 10.48550/arXiv.2311.12268.

[24] KURZENDÖRFER D, MERCEA O B, KOEPKE A S, et al. Audio-visual generalized zero-shot learning using pre-trained large multi-modal models[C]. The 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, USA, 2024: 2627–2638. doi: 10.1109/cvprw63382.2024.00269.

[25] RADFORD A, KIM J W, HALLACY C, et al. Learning transferable visual models from natural language supervision[C]. The 38th International Conference on Machine Learning, 2021: 8748–8763.

[26] MEI Xinhao, MENG Chutong, LIU Haohe, et al. WavCaps: A ChatGPT-assisted weakly-labelled audio captioning dataset for audio-language multimodal research[J]. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2024, 32: 3339–3354. doi: 10.1109/TASLP.2024.3419446.

[27] CHEN Honglie, XIE Weidi, VEDALDI A, et al. Vggsound: A large-scale audio-visual dataset[C]. ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing, Barcelona, Spain, 2020: 721–725. doi: 10.1109/icassp40776.2020.9053174.

[28] SOOMRO K, ZAMIR A R, and SHAH M. A dataset of 101 human action classes from videos in the wild[J]. Center for Research in Computer Vision, 2012, 2(11): 1–7.

[29] HEILBRON F C, ESCORCIA V, GHANEM B, et al. ActivityNet: A large-scale video benchmark for human activity understanding[C]. The 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, USA, 2015: 961–970. doi: 10.1109/cvpr.2015.7298698.

[30] CHAO Weilun, CHANGPINYO S, GONG Boqing, et al. An empirical study and analysis of generalized zero-shot learning for object recognition in the wild[C]. 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 52–68. doi: 10.1007/978-3-319-46475-6_4.

[31] FROME A, CORRADO G S, SHLENS J, et al. DeViSE: A deep visual-semantic embedding model[C]. The 27th International Conference on Neural Information Processing Systems, Lake Tahoe, Nevada, 2013: 2121–2129.

[32] AKATA Z, PERRONNIN F, HARCHAOUI Z, et al. Label-embedding for image classification[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2016, 38(7): 1425–1438. doi: 10.1109/TPAMI.2015.2487986.

[33] AKATA Z, REED S, WALTER D, et al. Evaluation of output embeddings for fine-grained image classification[C]. The 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, USA, 2015: 2927–2936. doi: 10.1109/cvpr.2015.7298911.

[34] XU Wenjia, XIAN Yongqin, WANG Jiuniu, et al. Attribute prototype network for any-shot learning[J]. International Journal of Computer Vision, 2022, 130(7): 1735–1753. doi: 10.1007/s11263-022-01613-9.

[35] XIAN Yongqin, SHARMA S, SCHIELE B, et al. F-VAEGAN-D2: A feature generating framework for any-shot learning[C]. The 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 10267–10276. doi: 10.1109/cvpr.2019.01052.

[36] LI Wenrui, WANG Penghong, XIONG Ruiqin, et al. Spiking tucker fusion transformer for audio-visual zero-shot learning[J]. IEEE Transactions on Image Processing, 2024, 33: 4840–4852. doi: 10.1109/tip.2024.3430080.

[37] TRAN D, BOURDEV L, FERGUS R, et al. Learning spatiotemporal features with 3D convolutional networks[C]. The 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 2015: 4489–4497. doi: 10.1109/iccv.2015.510.

[38] HERSHEY S, CHAUDHURI S, ELLIS D P W, et al. CNN architectures for large-scale audio classification[C]. 2017 IEEE International Conference on Acoustics, Speech and Signal Processing, New Orleans, USA, 2017: 131–135. doi: 10.1109/icassp.2017.7952132.
